Deep-learning-based method for automatically generating animal video tags, terminal and medium

A video tag generation technology in the field of video tagging. It addresses low model recognition accuracy, redundant windows, and the extra burden placed on subsequent feature extraction and recognition, thereby improving both recognition efficiency and recognition accuracy.

Examples

Embodiment 1

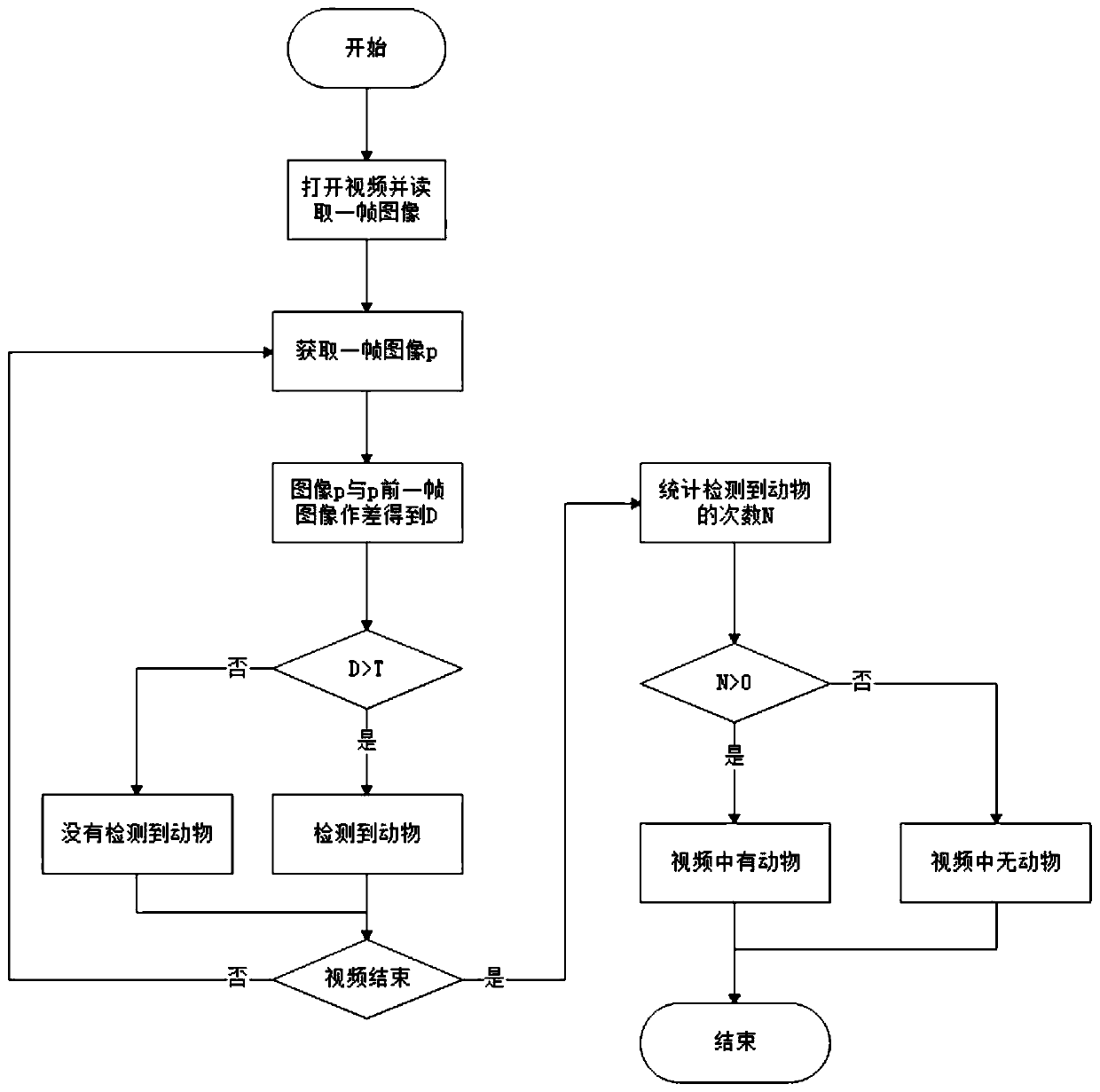

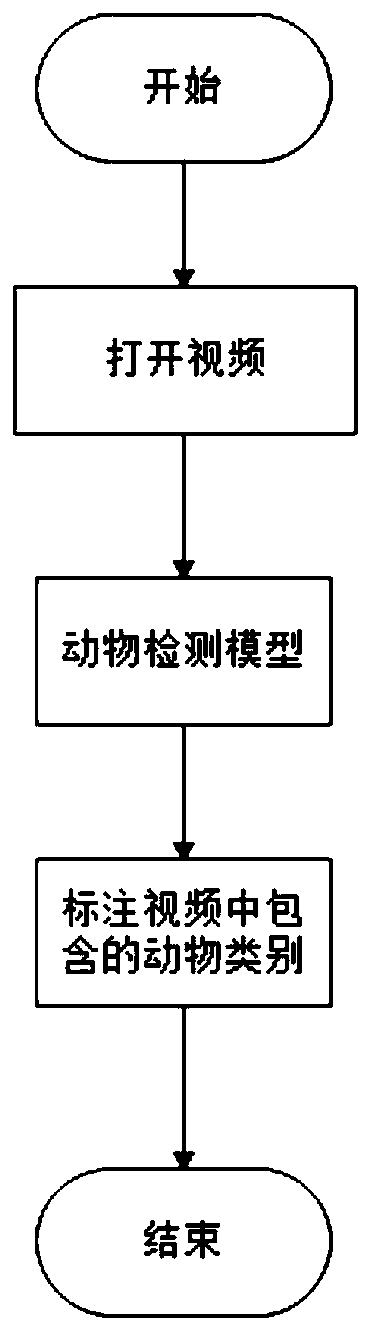

[0049] A deep-learning-based method for automatically generating animal video tags, shown in Figure 6, includes the following steps:

[0050] Extract several key-frame images from the video to be detected, and input them into the feature extraction model;

[0051] Input the feature information output by the feature extraction model into the trained target detection algorithm model;

[0052] Record the position and category of each target object that the target detection algorithm model outputs for the video to be detected, and define the category of the target object as the animal tag of that video.
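The three steps above can be sketched in Python as follows. This is a minimal, framework-free sketch under stated assumptions, not the patented implementation: the source does not say how key frames are chosen, so `select_key_frames` assumes simple frame differencing against a hypothetical `threshold`, and the trained feature extraction and target detection models are passed in as placeholder callables (`extract_features`, `detect`). All names are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Frame = List[List[int]]  # assumption: grayscale frame as rows of pixel values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    frame_index: int  # index of the key frame the object was found in
    box: Box          # position reported by the detector
    category: str     # class name reported by the detector


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel difference between two equally sized frames."""
    total = sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))
    return total / sum(len(row) for row in a)


def select_key_frames(frames: Sequence[Frame], threshold: float) -> List[int]:
    """Keep frame 0, then each frame that differs enough from the last kept one.

    Frame differencing is an ASSUMED key-frame strategy; the source does not
    specify one.
    """
    if not frames:
        return []
    keys = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[keys[-1]], frames[i]) >= threshold:
            keys.append(i)
    return keys


def generate_tags(
    frames: Sequence[Frame],
    extract_features: Callable[[Frame], object],       # placeholder for the CNN
    detect: Callable[[object], List[Tuple[Box, str]]],  # placeholder detector
    threshold: float = 10.0,                            # hypothetical value
) -> Tuple[List[Detection], List[str]]:
    detections: List[Detection] = []
    for idx in select_key_frames(frames, threshold):
        features = extract_features(frames[idx])  # key frame -> feature info
        for box, category in detect(features):    # features -> boxes + classes
            detections.append(Detection(idx, box, category))  # record results
    # Tags are the distinct detected categories, in first-seen order.
    tags = list(dict.fromkeys(d.category for d in detections))
    return detections, tags


# Usage with stand-in models on toy 2x2 frames.
dark, bright = [[0, 0], [0, 0]], [[200, 200], [200, 200]]
frames = [dark, dark, bright, bright]


def fake_extract(frame: Frame) -> int:
    return frame[0][0]


def fake_detect(feature: int) -> List[Tuple[Box, str]]:
    return [((0, 0, 1, 1), "cat" if feature > 100 else "dog")]


detections, tags = generate_tags(frames, fake_extract, fake_detect)
print(tags)  # ['dog', 'cat']
```

Passing the two models in as callables keeps the tagging logic independent of any particular framework; in a real system they could wrap the ImageNet-trained CNN and the trained TensorFlow detector.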

[0053] Specifically, the method for automatically generating animal video tags provided in this embodiment uses a feature extraction model and a target detection model. The feature extraction model is a convolutional neural network trained on the ImageNet classification dataset. The feature extraction model is used ...

Embodiment 2

[0055] Embodiment 2 builds on Embodiment 1 and further specifies how the target detection model is trained.

[0056] Referring to Figure 7, the target detection model is trained as follows:

[0057] Obtain a training set consisting of multiple training pictures, and label the position and category of the objects in each picture;

[0058] Implement the target detection algorithm in the TensorFlow framework;

[0059] Use the training set to train the target detection algorithm;

[0060] Save the trained target detection algorithm as the target detection algorithm model.

[0061] Specifically, the training pictures can be chosen according to the user's specific business and usage scenarios. For example, select an appropriate number of pictures from the animal images that have appeared in the user's business, mark the position and category of the animals in each picture, and use all these ...
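One possible in-memory representation of the annotated training set described above is sketched below. The record layout (pixel boxes as `(x_min, y_min, x_max, y_max)`), the label map numbered from 1, and the train/validation split are assumptions for illustration; the source only states that the position and category of each animal are marked. The file and class names in the usage lines are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # assumption: (x_min, y_min, x_max, y_max), pixels


@dataclass
class BoxLabel:
    category: str  # animal class, e.g. "cat"
    box: Box       # marked position of the animal


@dataclass
class TrainingPicture:  # hypothetical record format, not from the source
    path: str
    width: int
    height: int
    labels: List[BoxLabel] = field(default_factory=list)

    def validate(self) -> None:
        """Reject boxes that are empty or extend outside the image."""
        for lab in self.labels:
            x0, y0, x1, y1 = lab.box
            if not (0 <= x0 < x1 <= self.width and 0 <= y0 < y1 <= self.height):
                raise ValueError(f"bad box {lab.box} in {self.path}")

    def normalized_boxes(self) -> List[Tuple[float, float, float, float]]:
        """Boxes scaled to [0, 1] relative to the image size."""
        return [
            (x0 / self.width, y0 / self.height, x1 / self.width, y1 / self.height)
            for x0, y0, x1, y1 in (lab.box for lab in self.labels)
        ]


def build_label_map(pictures: List[TrainingPicture]) -> Dict[str, int]:
    """Map each category to an integer id, starting at 1 (0 left for background)."""
    names = sorted({lab.category for p in pictures for lab in p.labels})
    return {name: i + 1 for i, name in enumerate(names)}


def split(
    pictures: List[TrainingPicture], val_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[TrainingPicture], List[TrainingPicture]]:
    """Shuffle reproducibly and hold out a validation subset."""
    shuffled = list(pictures)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Usage with hypothetical files and classes.
pics = [
    TrainingPicture("img_001.jpg", 640, 480, [BoxLabel("dog", (10, 20, 330, 260))]),
    TrainingPicture("img_002.jpg", 640, 480, [BoxLabel("cat", (0, 0, 320, 240)),
                                              BoxLabel("dog", (320, 240, 640, 480))]),
]
for p in pics:
    p.validate()
label_map = build_label_map(pics)  # {'cat': 1, 'dog': 2}
train, val = split(pics, val_fraction=0.5)
```

Normalizing boxes makes annotations independent of image resolution; whether the chosen TensorFlow detector expects this layout, or a different coordinate order, should be checked against its own input specification.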

Embodiment 3

[0080] Embodiment 3 provides a terminal on the basis of the foregoing embodiments.

[0081] The terminal includes a processor, an input device, an output device and a memory, which are connected to one another. The memory stores a computer program comprising program instructions, and the processor is configured to invoke the program instructions to execute the method described above.

[0082] It should be understood that, in the embodiments of the present invention, the processor may be a central processing unit (CPU), or another general-purpose processor, a digital signal processor (DSP), an application-specific integrated circuit (ASIC), a field-programmable gate array (FPGA) or other programmable logic device, a discrete gate or transistor ...
