Multi-scene indoor action recognition method based on channel state information and BiLSTM

This technology applies channel state information to action recognition in the field of human activity recognition. It addresses two problems: existing models cannot be used across multiple scenarios, and their recognition accuracy is low. Its stated effects are lower cost and time, and improved accuracy and simplicity.


Example Embodiment

[0036] The technical solution of the present invention will be further described in detail below in conjunction with the accompanying drawings of the specification.

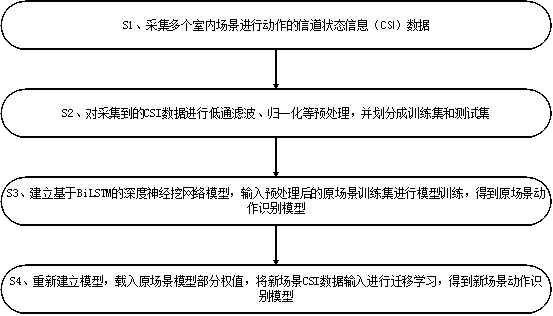

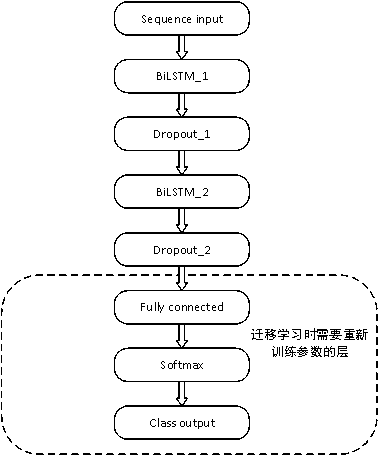

[0037] A multi-scene indoor action recognition method based on channel state information and BiLSTM, which specifically includes the following steps:

[0038] S1: Receive Wi-Fi signals while actions are performed in multiple indoor scenes, and extract channel state information (CSI) data from the Wi-Fi signals.
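The following sketch shows how extracted CSI data can be turned into feature vectors. It is a minimal illustration under stated assumptions, not the patented implementation. It assumes each CSI snapshot has already been parsed from the collection software's output into a nested list `csi[tx][rx][k]` of complex channel responses, indexed by transmit antenna, receive antenna, and subcarrier. The helper names `csi_amplitude` and `csi_phase` are hypothetical, and parsing the vendor file format is not shown.

```python
import cmath
from typing import List

# One CSI snapshot: frame[tx][rx][k] is the complex channel response for
# transmit antenna tx, receive antenna rx, and subcarrier k.
# This nested-list layout is an assumption made for illustration only.
CsiFrame = List[List[List[complex]]]


def csi_amplitude(frame: CsiFrame) -> List[float]:
    """Flatten one CSI frame into a vector of amplitudes |H| (tx, then rx, then subcarrier order)."""
    return [abs(h) for tx in frame for rx in tx for h in rx]


def csi_phase(frame: CsiFrame) -> List[float]:
    """Flatten one CSI frame into a vector of raw (unsanitized) phases in radians."""
    return [cmath.phase(h) for tx in frame for rx in tx for h in rx]


if __name__ == "__main__":
    # Toy frame: 3 transmit antennas; 1 receive antenna and 2 subcarriers are
    # hypothetical values chosen only to keep the example small.
    frame = [[[3 + 4j, 1j]] for _ in range(3)]
    print(csi_amplitude(frame))  # [5.0, 1.0, 5.0, 1.0, 5.0, 1.0]
```

Amplitude is the usual starting feature because raw CSI phase is distorted by timing and frequency offsets between transmitter and receiver. The phase helper is included only to show that it is available.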

[0039] Place the Wi-Fi transmitter and receiver in the room where action recognition is required, connect the receiver to the PC used for data collection, and install the corresponding collection software. Turn on the transmitter, the receiver, and the collection PC, then use the collection software to receive Wi-Fi signals and extract the CSI data. During collection, tag the CSI data intended for the training set with the corresponding action labels. For example, using 3 transmitting antennas and 3 ...
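The labeling step can be sketched as follows. This sketch assumes each recording contains a single action and has already been converted into per-frame feature vectors, such as CSI amplitudes. The source does not say how CSI streams are segmented, so two elements here are illustrative assumptions rather than the method described: the sliding-window scheme, and the hypothetical names `label_recording`, `encode_labels`, `window_len`, and `stride`.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class LabeledSample:
    """One training sample: a fixed-length window of CSI feature vectors plus its action label."""
    window: List[List[float]]  # shape: (window_len, n_features)
    label: str


def label_recording(
    frames: Sequence[List[float]],
    label: str,
    window_len: int,
    stride: int,
) -> List[LabeledSample]:
    """Cut one single-action recording into windows, each tagged with `label`.

    window_len and stride are hypothetical parameters; trailing frames that
    do not fill a complete window are dropped.
    """
    if window_len <= 0 or stride <= 0:
        raise ValueError("window_len and stride must be positive")
    samples = []
    for start in range(0, len(frames) - window_len + 1, stride):
        window = [list(f) for f in frames[start:start + window_len]]
        samples.append(LabeledSample(window, label))
    return samples


def encode_labels(samples: Sequence[LabeledSample]) -> Dict[str, int]:
    """Map each distinct action label to an integer class index (sorted for reproducibility)."""
    return {name: i for i, name in enumerate(sorted({s.label for s in samples}))}


if __name__ == "__main__":
    # Ten toy frames of 2 features each, recorded while the subject "walks".
    walk = [[float(t), float(t) + 0.5] for t in range(10)]
    samples = label_recording(walk, "walk", window_len=4, stride=2)
    print(len(samples))  # 4
```

Fixed-length windows give every sample the same number of time steps, which is convenient for a sequence model such as a BiLSTM. Other segmentation schemes, for example one sample per recorded action, would work equally well with this labeling idea.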
