Robot control method, system and robot based on visual excitement point

A robot control method and robot technology, applied in the field of robotics, that achieves lifelike bionic behavior and improves robot intelligence.


Examples

Embodiment 1

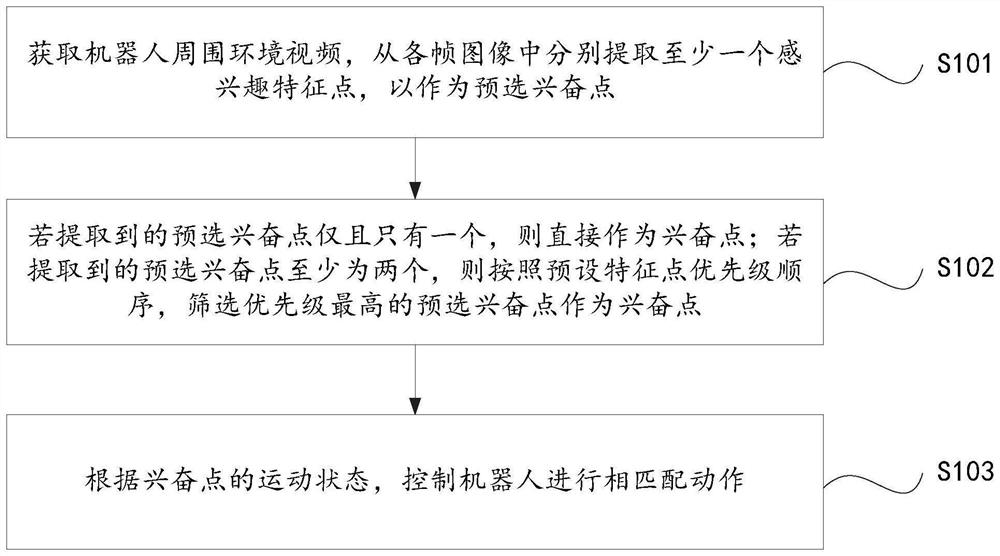

[0032] Figure 1 shows the main principle of the robot control method based on visual excitement points of the present invention, which comprises the following steps:

[0033] S101: Acquire a video of the robot's surrounding environment, and extract at least one feature point of interest from each frame as a preselected excitement point;

[0034] S102: If exactly one preselected excitement point is extracted, use it directly as the excitement point; if two or more are extracted, select the one with the highest priority, according to a preset priority order of feature points, as the excitement point;

[0035] S103: Control the robot to perform actions that match the motion state of the excitement point.
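The screening rule of step S102 is simple enough to state directly in code. The sketch below is illustrative only: the `Candidate` type, the function name, and especially the `PRIORITY` table (faces first, then moving objects, then color regions) are hypothetical, since the source says only that a preset priority order exists without specifying it.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical preset priority order (lower value = higher priority).
# The patent text does not state the actual order.
PRIORITY = {"user_face": 0, "moving_object": 1, "color_region": 2}


@dataclass(frozen=True)
class Candidate:
    """A preselected excitement point extracted from one frame (S101)."""
    kind: str   # feature type, one of the PRIORITY keys
    x: float    # image-plane coordinates of the feature point
    y: float


def select_excitement_point(candidates: Sequence[Candidate]) -> Optional[Candidate]:
    """S102: choose the excitement point from a frame's preselected points."""
    if not candidates:
        return None                  # nothing of interest in this frame
    if len(candidates) == 1:
        return candidates[0]         # exactly one: use it directly
    # Two or more: keep the candidate whose feature type ranks highest.
    # min() returns the first of equally ranked candidates.
    return min(candidates, key=lambda c: PRIORITY[c.kind])
```

For a frame containing both a color region and a recorded user's face, this sketch returns the face candidate; with a single candidate it skips the priority lookup entirely, mirroring the two branches of S102.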

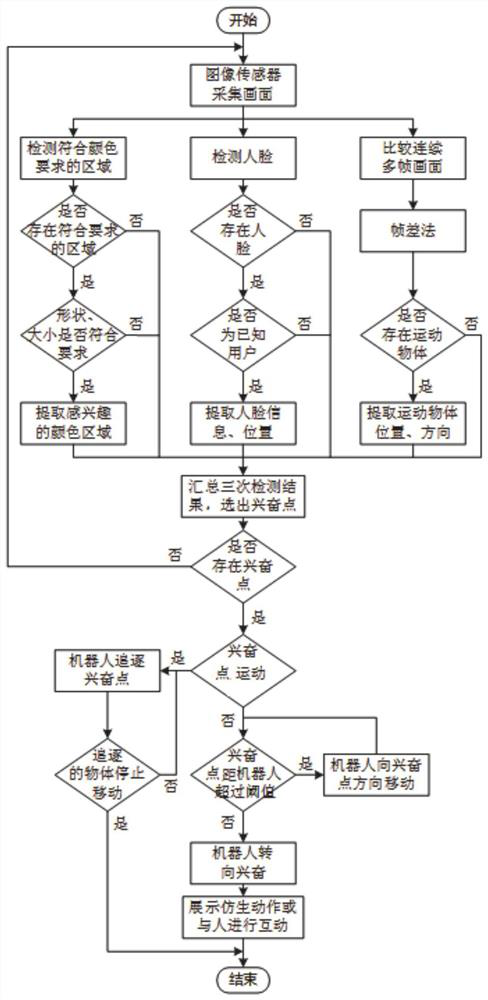

[0036] Specifically, the feature points of interest include, but are not limited to, feature points of color regions of interest, feature points of recorded user faces, or feature points of moving objects.
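As one concrete way to obtain a feature point of a color region of interest, a frame can be scanned for pixels close to a target color and the centroid of those pixels taken as the feature point. This is a minimal sketch under assumptions not stated in the source: frames are plain nested lists of RGB tuples, "close" means every channel lies within a tolerance `tol`, and tiny regions below `min_pixels` are discarded as noise. A real robot would more likely threshold in HSV space with an image library.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255
Frame = List[List[Pixel]]      # frame[row][col]


def color_region_point(
    frame: Frame,
    target: Pixel,
    tol: int = 30,
    min_pixels: int = 4,
) -> Optional[Tuple[float, float]]:
    """Return the centroid (x, y) of pixels close to `target`, or None.

    A pixel matches when each channel is within `tol` of the target
    color. Regions smaller than `min_pixels` are treated as noise.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, px in enumerate(row):
            if all(abs(c - t) <= tol for c, t in zip(px, target)):
                xs.append(x)
                ys.append(y)
    if len(xs) < min_pixels:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

The returned centroid can then serve as the image coordinates of a "color_region" preselected excitement point.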

[00...

Embodiment 2

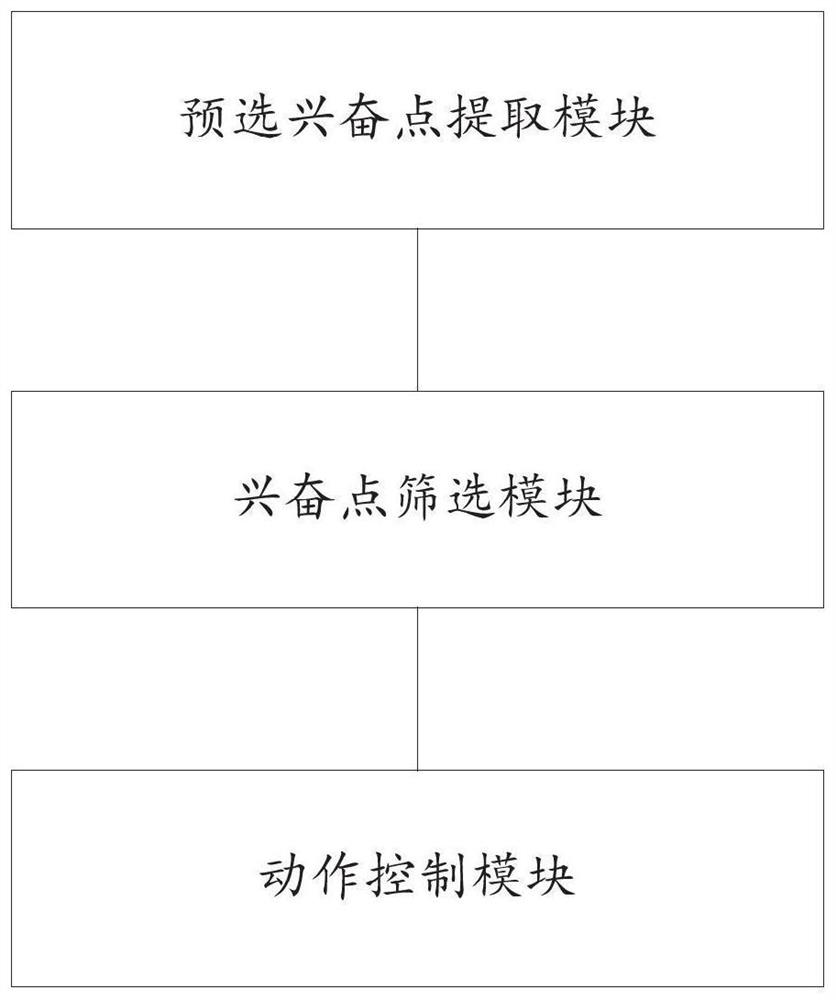

[0055] As shown in Figure 3, the robot control system based on visual excitement points of this embodiment includes:

[0056] (1) A preselected excitement point extraction module, used to acquire a video of the robot's surrounding environment and to extract at least one feature point of interest from each frame as a preselected excitement point;

[0057] In a specific implementation, the feature points of interest include, but are not limited to, feature points of a color region of interest, feature points of a recorded user's face, or feature points of a moving object.
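For feature points of a moving object, a common technique (not one the source names) is frame differencing: compare two consecutive grayscale frames and take the centroid of pixels whose brightness changed by more than a threshold. The sketch below assumes frames as nested lists of integers; `threshold` and `min_pixels` are hypothetical tuning parameters.

```python
from typing import List, Optional, Tuple

Gray = List[List[int]]  # grayscale frame, values 0..255, frame[row][col]


def moving_object_point(
    prev: Gray,
    curr: Gray,
    threshold: int = 25,
    min_pixels: int = 3,
) -> Optional[Tuple[float, float]]:
    """Frame differencing: centroid (x, y) of pixels whose brightness
    changed by more than `threshold` between two consecutive frames."""
    xs, ys = [], []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            if abs(a - b) > threshold:
                xs.append(x)
                ys.append(y)
    if len(xs) < min_pixels:
        return None  # too few changed pixels: treat as no motion
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

Feature points of a recorded user's face would instead require a face detector and a stored face database, which is beyond a short self-contained sketch.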

[0058] (2) An excitement point screening module, used to take the preselected excitement point directly as the excitement point if exactly one is extracted, and, if two or more are extracted, to select the one with the highest priority, according to the preset priority order of feature points, as the excitement point;

[0059] (3) Action control...

Embodiment 3

[0064] This embodiment provides a robot that includes the robot control system based on visual excitement points described in Embodiment 2.

[0065] It should be noted that the other structures of the robot are existing structures and are not described again here.

[0066] In this embodiment, at least one feature point of interest is extracted from each frame of the video of the robot's surrounding environment as a preselected excitement point. The excitement point is then screened according to the number of preselected excitement points extracted: when there is only one, it is used directly as the excitement point; when there are two or more, the one with the highest priority according to the preset priority order of feature points is selected as the excitement point. Finally, the robot is controlled to perform actions matching the motion state of the excitement point, real...
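The source does not define "motion state" or the set of matching actions, so the following is only a hypothetical illustration of step S103. It assumes the motion state is the excitement point's image-plane velocity between two frames, and maps it to invented action names: gaze at a stationary point, turn toward a moving point that is off center, and follow a moving point that is roughly centered. The thresholds `still_speed` and `dead_zone` are likewise assumptions.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixels; x grows to the right


def motion_state(prev: Point, curr: Point, dt: float) -> Tuple[float, float]:
    """Velocity (vx, vy) of the excitement point in pixels per second."""
    return (curr[0] - prev[0]) / dt, (curr[1] - prev[1]) / dt


def choose_action(
    curr: Point,
    velocity: Tuple[float, float],
    frame_width: int,
    still_speed: float = 5.0,
    dead_zone: float = 0.1,
) -> str:
    """Map the excitement point's motion state to a hypothetical action."""
    speed = math.hypot(*velocity)
    if speed < still_speed:
        return "gaze"        # (nearly) stationary: just look at it
    # Horizontal offset from the image center, normalized to [-0.5, 0.5].
    offset = curr[0] / frame_width - 0.5
    if offset < -dead_zone:
        return "turn_left"
    if offset > dead_zone:
        return "turn_right"
    return "follow"          # moving and roughly centered: track it
```

For example, a point that moves from (100, 100) to (140, 100) in 0.5 s in a 640-pixel-wide frame has velocity (80.0, 0.0) and lies left of center, so this sketch returns "turn_left".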
