Single-camera multi-view calibration method based on robot

A calibration method and robot technology, applied in the field of robot vision, that can solve problems such as high cost and unsuitability for large-scale promotion.



Examples

Embodiment 1

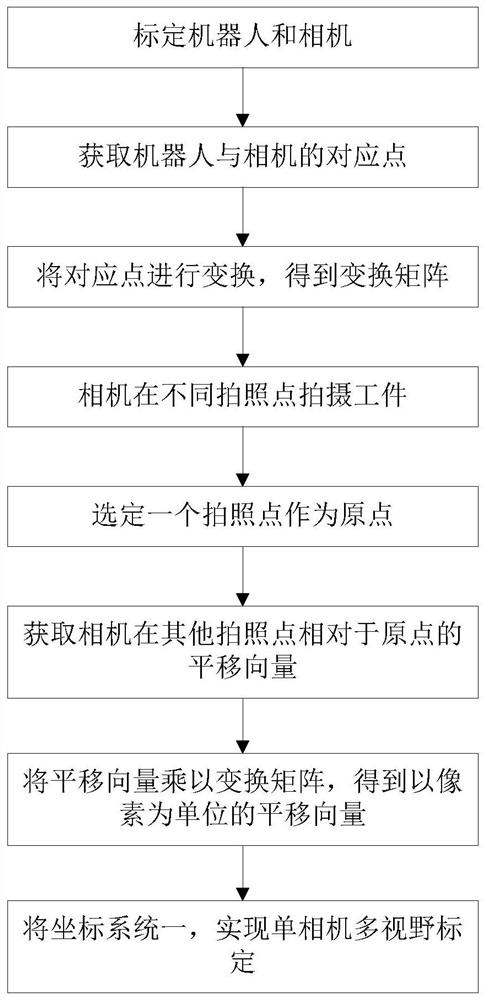

[0034] As shown in Figure 1, a robot-based single-camera multi-view calibration method includes the following steps:

[0035] S1: Calibrate the robot and camera;

[0036] S2: Obtain the corresponding points between the robot and the camera;

[0037] S3: Compute the transformation matrix T from the corresponding points;

[0038] S4: The camera shoots the workpiece at different photo points;

[0039] S5: select a photographing point as the origin;

[0040] S6: Using the robot, obtain the translation vector T_i of the camera at each of the other photographing points relative to the origin;

[0041] S7: Multiply the translation vector by the transformation matrix T to obtain the translation vector in pixels;

[0042] S8: Use coordinate system 1 to achieve single-camera multi-view calibration.
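Steps S5 to S7 can be illustrated with a minimal sketch. It rests on assumptions the excerpt does not state: T is taken to be a 3x3 homogeneous affine matrix that maps a point on the working plane, given in robot coordinates (mm), to its pixel position when the camera is at the origin photographing point, and the camera only translates parallel to the working plane (step S1). Because T_i is a displacement rather than a point, only the 2x2 linear part of T is applied. Under these assumptions a point imaged at pixel p in view i appears at p plus the pixel offset of view i in the origin view, which is one possible reading of how step S8 unifies the views. The function names and sample values are hypothetical.

```python
import numpy as np


def pixel_offsets(T, camera_positions, origin_index=0):
    """Steps S5-S7: pixel translation of every photographing point relative to the origin.

    T: 3x3 homogeneous robot-to-pixel affine matrix from step S3 (assumed model).
    camera_positions: (N, 2) robot XY coordinates (mm) of the camera at each point.
    origin_index: photographing point chosen as the origin in step S5.
    """
    positions = np.asarray(camera_positions, dtype=float)
    translations = positions - positions[origin_index]  # S6: T_i for each point
    linear = np.asarray(T, dtype=float)[:2, :2]          # displacements ignore the offset column
    return translations @ linear.T                       # S7: T_i converted to pixels


def to_origin_frame(pixel_xy, offset):
    """Hypothetical S8 helper: express a pixel seen in view i in the origin view's pixels."""
    return np.asarray(pixel_xy, dtype=float) + np.asarray(offset, dtype=float)


# Hypothetical example: 5 px/mm, axes aligned, three photographing points.
T = np.array([[5.0, 0.0, 100.0], [0.0, 5.0, 200.0], [0.0, 0.0, 1.0]])
offsets = pixel_offsets(T, [(50, 50), (80, 50), (50, 90)])
print(offsets)                                 # rows: (0, 0), (150, 0), (0, 200)
print(to_origin_frame((10, 20), offsets[1]))   # point (160, 20) in the origin view
```

Applying only the linear part keeps the constant pixel offset of T from being added to every displacement, which would otherwise shift all views by the same spurious amount.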

[0043] More specifically, in step S1, the camera plane and the robot base plane are kept parallel to the working plane.

[0044] More specifically, in step S2, at least three corre...
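The excerpt does not state which transformation model step S3 uses. A minimal sketch, assuming T is a 2D affine map from robot-plane coordinates (mm) to pixel coordinates, which the parallel-plane condition of step S1 makes plausible: an affine map has six unknowns and each point pair supplies two equations, so three non-collinear pairs determine it, consistent with the "at least three" points mentioned for step S2, and additional pairs are fitted by least squares. The function name `estimate_affine` and the sample points are hypothetical.

```python
import numpy as np


def estimate_affine(robot_pts, pixel_pts):
    """Fit a 3x3 homogeneous affine matrix T with T @ [x, y, 1] ≈ [u, v, 1].

    robot_pts: (N, 2) points on the working plane in robot coordinates (mm).
    pixel_pts: (N, 2) matching image coordinates (pixels), N >= 3, not collinear.
    """
    robot = np.asarray(robot_pts, dtype=float)
    pixel = np.asarray(pixel_pts, dtype=float)
    if robot.ndim != 2 or robot.shape[1] != 2 or robot.shape != pixel.shape:
        raise ValueError("expected two (N, 2) arrays of the same shape")
    if robot.shape[0] < 3:
        raise ValueError("need at least three corresponding points")
    # Each row [x, y, 1] yields two equations (one for u, one for v).
    design = np.hstack([robot, np.ones((robot.shape[0], 1))])
    if np.linalg.matrix_rank(design) < 3:
        raise ValueError("points are collinear; the affine map is undetermined")
    coeffs, *_ = np.linalg.lstsq(design, pixel, rcond=None)  # shape (3, 2)
    T = np.eye(3)
    T[:2, :] = coeffs.T  # rows [a11, a12, b1] and [a21, a22, b2]
    return T


# Hypothetical correspondences: 5 px/mm scale, robot origin imaged at pixel (100, 200).
T = estimate_affine([(0, 0), (10, 0), (0, 10)], [(100, 200), (150, 200), (100, 250)])
print(np.round(T, 6))  # approximately [[5, 0, 100], [0, 5, 200], [0, 0, 1]]
```

An affine model absorbs scale, rotation, and a small skew between the robot and image axes; if lens distortion or a tilted camera were present, a richer model would be needed, which the excerpt does not discuss.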

Embodiment 2

[0053] The robot-based single-camera multi-view calibration method of this embodiment is basically the same as that described in Embodiment 1, with the following differences:

[0054] More specifically, in step S4, the camera is mounted at a fixed position and the robot's manipulator moves the workpiece, so that the camera photographs the workpiece at different photographing points.

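[0055] More specifically, in step S6, the obtained translation vector must be multiplied by a coefficient of -1, because moving the workpiece by a given vector relative to a fixed camera is equivalent to moving the camera by the opposite vector.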
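A minimal sketch of the Embodiment 2 variant of steps S6 and S7. It assumes, beyond what the excerpt states, that T is a 3x3 homogeneous affine matrix mapping robot-plane coordinates in mm to pixels and that the workpiece is only translated parallel to the working plane; only the 2x2 linear part of T is applied because the translation vectors are displacements. The function name and sample values are hypothetical.

```python
import numpy as np


def pixel_offsets_fixed_camera(T, workpiece_positions, origin_index=0):
    """Embodiment 2 variant of steps S6-S7: the camera is fixed, the robot moves the workpiece.

    T: 3x3 homogeneous robot-to-pixel affine matrix (assumed model, as in step S3).
    workpiece_positions: (N, 2) robot XY coordinates (mm) of the workpiece at each point.
    """
    positions = np.asarray(workpiece_positions, dtype=float)
    # S6 with the coefficient -1: moving the workpiece by d equals moving the camera by -d.
    translations = -1.0 * (positions - positions[origin_index])
    linear = np.asarray(T, dtype=float)[:2, :2]  # displacements ignore the offset column
    return translations @ linear.T               # S7: translation vectors in pixels


# Hypothetical example: 5 px/mm, workpiece moved to two further positions.
T = np.array([[5.0, 0.0, 100.0], [0.0, 5.0, 200.0], [0.0, 0.0, 1.0]])
print(pixel_offsets_fixed_camera(T, [(0, 0), (30, 0), (0, -20)]))
# rows: (0, 0), (-150, 0), (0, 100)
```

Apart from the sign flip, the computation is the same as for a moving camera, which matches the statement that the two embodiments differ only in steps S4 and S6.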

