Hash cross-modal information retrieval method based on dictionary pair learning

A cross-modal information retrieval technology, applicable to digital data information retrieval, character and pattern recognition, electrical digital data processing, and related fields. It addresses reduced retrieval accuracy and the difficulty of obtaining consistent hash codes across modalities, and achieves high average retrieval accuracy, fast retrieval speed, and relief from computing resource constraints.

Embodiment Construction

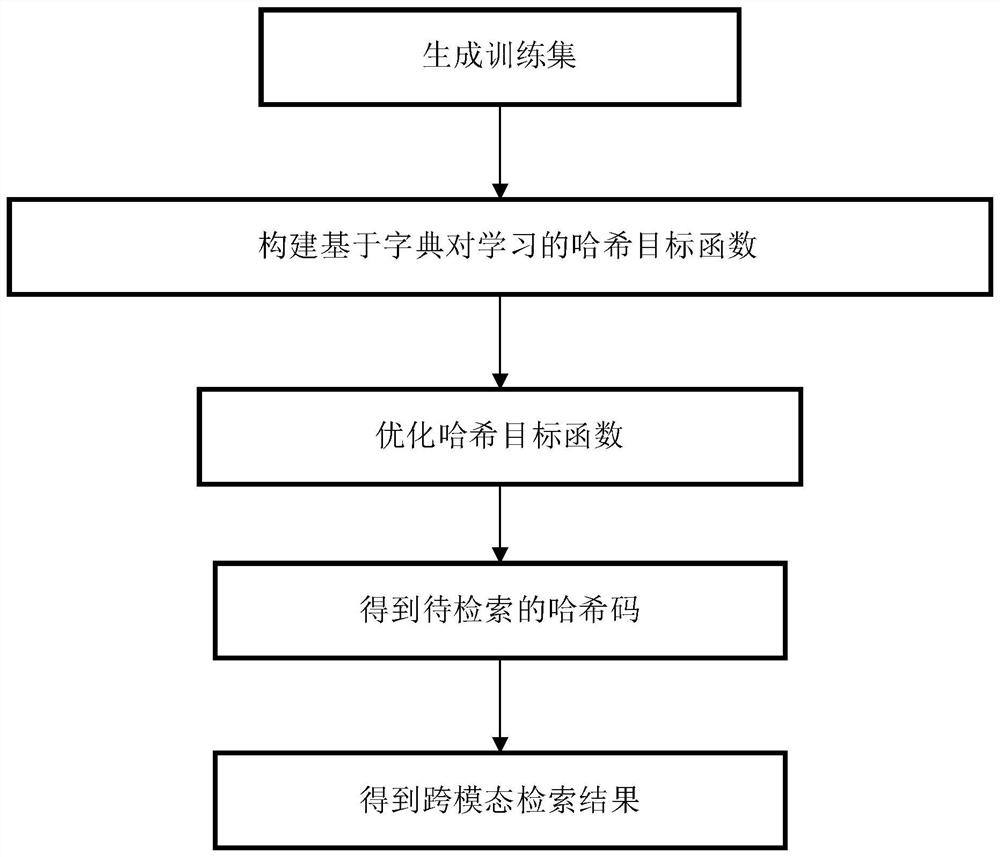

[0026] The present invention is further described below with reference to Figure 1.

[0027] Step 1, generate a training set.

[0028] Randomly select data of two modalities with different physical forms, together with the category label matrix of the items corresponding to each modality, and normalize the feature matrices of the two modalities to form a training set. The label matrices of the two modalities are identical, and the same amount of data is selected for each modality.

[0029] The embodiments of the present invention are described using the text and image modalities as examples.
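Step 1 can be sketched roughly as follows. This is a minimal illustration and not the patent's own implementation: the function name `build_training_set`, the one-row-per-item layout, and the choice of per-item L2 normalization are all assumptions, since the text does not state which normalization is used.

```python
import numpy as np

def build_training_set(image_feats, text_feats, labels, n_train, seed=0):
    """Sample paired items from two modalities and normalize their features.

    Assumed layout (hypothetical): one row per item, so
    image_feats is (n, d_img), text_feats is (n, d_txt), labels is (n, c).
    """
    n = len(labels)
    if not (len(image_feats) == n and len(text_feats) == n):
        raise ValueError("both modalities and the labels must cover the same items")

    # Random selection: the same indices are used for both modalities, so the
    # two modalities have equal data volume and share one label matrix.
    rng = np.random.default_rng(seed)
    idx = rng.choice(n, size=n_train, replace=False)

    def l2_normalize(x):
        # Assumption: scale each item's feature vector to unit L2 norm.
        norms = np.linalg.norm(x, axis=1, keepdims=True)
        return x / np.maximum(norms, 1e-12)

    return l2_normalize(image_feats[idx]), l2_normalize(text_feats[idx]), labels[idx]
```

Sampling a single index set and applying it to both feature matrices is what keeps each image paired with its text and its label row.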

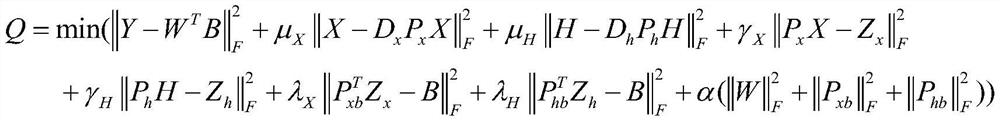

[0030] Step 2, construct the hash objective function Q based on dictionary pair learning.

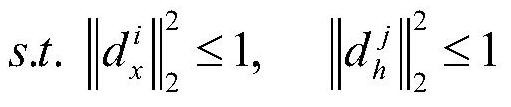

[0031]

[0032]

[0033] Here, min(·) denotes the minimization operation, $\|\cdot\|_F$ denotes the Frobenius norm, Y denotes the label matrix, W denotes the linear classifier to be obtained by optimizing the objective function Q through the optimal direction method, $(\cdot)^T$ Repre...
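The full objective function Q is not reproduced in this text, so the following only illustrates the notation defined so far: how a squared Frobenius-norm term involving the label matrix Y and a linear classifier W could be evaluated. The code matrix `B`, the matrix shapes, and the specific form `||Y - W B||_F^2` are assumptions made for illustration, not the patent's stated formula.

```python
import numpy as np

def classification_residual(Y, W, B):
    """Return the squared Frobenius norm ||Y - W B||_F^2.

    Assumed shapes (hypothetical): Y is (c, n) labels, W is (c, r) classifier,
    B is (r, n) codes for n training items.
    """
    residual = Y - W @ B
    # The squared Frobenius norm is the sum of squared entries of the residual.
    return float(np.linalg.norm(residual, ord="fro") ** 2)
```

A term of this kind is zero exactly when the classifier reproduces the label matrix from the codes, which is why minimizing it pushes the learned codes to be discriminative.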
