Resistive processing unit architecture with separate weight update and inference circuitry

A weight update and processing unit technology, applied in neural architecture, physical implementation, biological neural network models, etc.



Embodiment Construction

[0019] Embodiments of the present invention will now be discussed in more detail with respect to RPU cell architectures and methods in which separate matrices are used to independently perform weight update accumulation and inference (weight read) operations. It should be noted that the same or similar reference numerals are used throughout the drawings to denote the same or similar features, elements or structures, and therefore a detailed explanation of the same or similar features, elements or structures will not be repeated for each of the drawings.
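To make the separation described in [0019] concrete, here is a minimal Python sketch under stated assumptions: one matrix (`accum`) only receives weight update accumulation, and a second matrix (`weights`) is the only one read during inference. The class name `SplitRPUArray`, the outer-product update, and especially the threshold-based `transfer()` step are hypothetical illustrations; this excerpt does not describe how accumulated updates reach the inference weights.

```python
from __future__ import annotations


class SplitRPUArray:
    """Hypothetical m x n RPU array with separate update and inference matrices.

    Not the patented circuit: an idealized software model for illustration only.
    """

    def __init__(self, rows: int, cols: int, threshold: float = 1.0, step: float = 0.1):
        self.rows, self.cols = rows, cols
        self.threshold = threshold  # assumed transfer threshold (hypothetical)
        self.step = step            # assumed quantized weight increment (hypothetical)
        # Matrix used only for weight update accumulation.
        self.accum = [[0.0] * cols for _ in range(rows)]
        # Matrix used only for inference (weight read).
        self.weights = [[0.0] * cols for _ in range(rows)]

    def read(self, x: list[float]) -> list[float]:
        """Inference: y_j = sum_i x_i * W_ij, using only the inference matrix."""
        return [sum(x[i] * self.weights[i][j] for i in range(self.rows))
                for j in range(self.cols)]

    def update(self, x: list[float], delta: list[float], lr: float = 1.0) -> None:
        """Accumulate the outer product lr * x_i * delta_j; inference weights untouched."""
        for i in range(self.rows):
            for j in range(self.cols):
                self.accum[i][j] += lr * x[i] * delta[j]

    def transfer(self) -> None:
        """Assumed rule: move one quantized step per threshold crossing."""
        for i in range(self.rows):
            for j in range(self.cols):
                while self.accum[i][j] >= self.threshold:
                    self.weights[i][j] += self.step
                    self.accum[i][j] -= self.threshold
                while self.accum[i][j] <= -self.threshold:
                    self.weights[i][j] -= self.step
                    self.accum[i][j] += self.threshold


if __name__ == "__main__":
    arr = SplitRPUArray(rows=2, cols=2)
    arr.update(x=[1.0, 0.0], delta=[1.5, -0.5])
    print(arr.read([1.0, 1.0]))  # [0.0, 0.0]: inference matrix not yet changed
    arr.transfer()
    print(arr.read([1.0, 1.0]))  # [0.1, 0.0]
```

Because `update()` never writes `weights`, inference results stay fixed until `transfer()` runs, so in this model update accumulation and weight reads proceed independently of each other.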

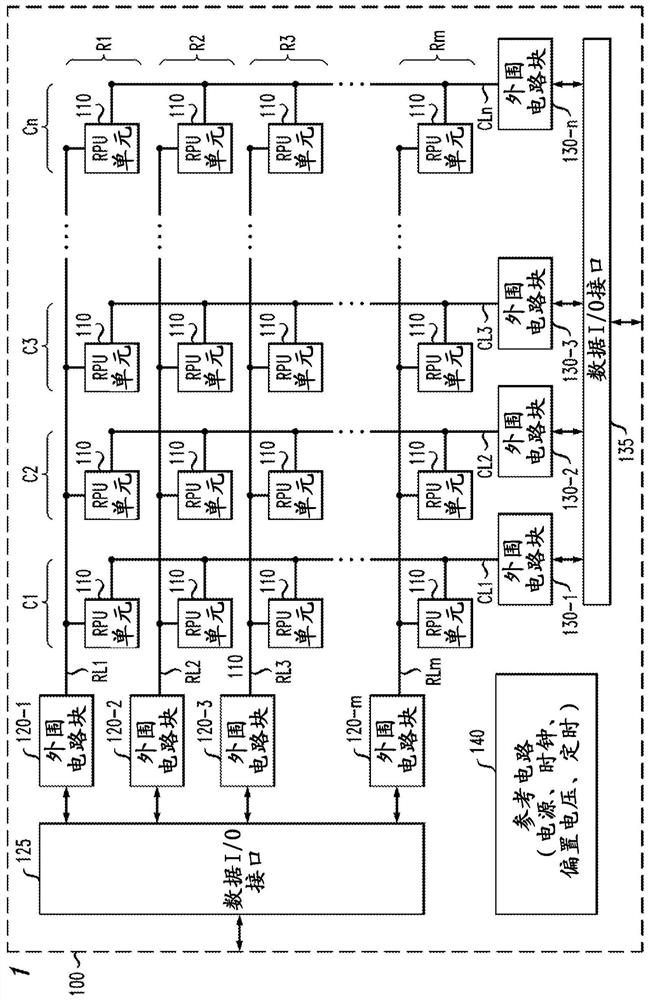

[0020] FIG. 1 schematically illustrates an RPU system 100 that can be implemented with an RPU cell architecture according to an embodiment of the present invention. The RPU system 100 includes a two-dimensional (2D) crossbar array of RPU cells 110 arranged in a plurality of rows R1, R2, R3, . . . , Rm and a plurality of columns C1, C2, C3, . . . , Cn. The RPU cells 110 in each row R1, R2, R3, ..., Rm a...
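The paragraph above is cut off before it describes how the rows and columns are driven. Under the common RPU crossbar picture (an assumption, not stated in this excerpt), the cell 110 at row Ri and column Cj holds a conductance G_ij. Driving voltages V_i on the rows yields column currents I_j = Σ_i G_ij V_i, and driving the columns yields row currents, i.e. a multiply by the transpose. The function names `forward` and `backward` are hypothetical, and the ideal, noise-free model is a simplification.

```python
from __future__ import annotations


def forward(G: list[list[float]], v_rows: list[float]) -> list[float]:
    """Column currents I_j = sum_i G[i][j] * V_i.

    Each cell contributes G_ij * V_i (Ohm's law), and each column wire sums
    the contributions of its cells (Kirchhoff's current law). G is indexed
    [row][column], so G has m rows (R1..Rm) and n columns (C1..Cn).
    Units in this idealized model: siemens * volts = amperes.
    """
    m, n = len(G), len(G[0])
    return [sum(G[i][j] * v_rows[i] for i in range(m)) for j in range(n)]


def backward(G: list[list[float]], v_cols: list[float]) -> list[float]:
    """Row currents I_i = sum_j G[i][j] * V_j, a multiply by the transpose of G."""
    m, n = len(G), len(G[0])
    return [sum(G[i][j] * v_cols[j] for j in range(n)) for i in range(m)]


if __name__ == "__main__":
    # 2 rows (R1, R2) x 3 columns (C1, C2, C3) of illustrative conductances.
    G = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0]]
    print(forward(G, [1.0, 1.0]))        # [5.0, 7.0, 9.0]
    print(backward(G, [1.0, 0.0, 1.0]))  # [4.0, 10.0]
```

The same physical array serves both directions because reading along columns versus rows only changes which wires are driven and which are sensed.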

