Gradient boosting tree modeling method and device and terminal

A gradient boosting tree modeling technique, applied in the field of machine learning, that addresses problems such as huge costs and achieves the effects of breaking down data islands, promoting data circulation, and protecting privacy effectively.


Examples

Embodiment 1

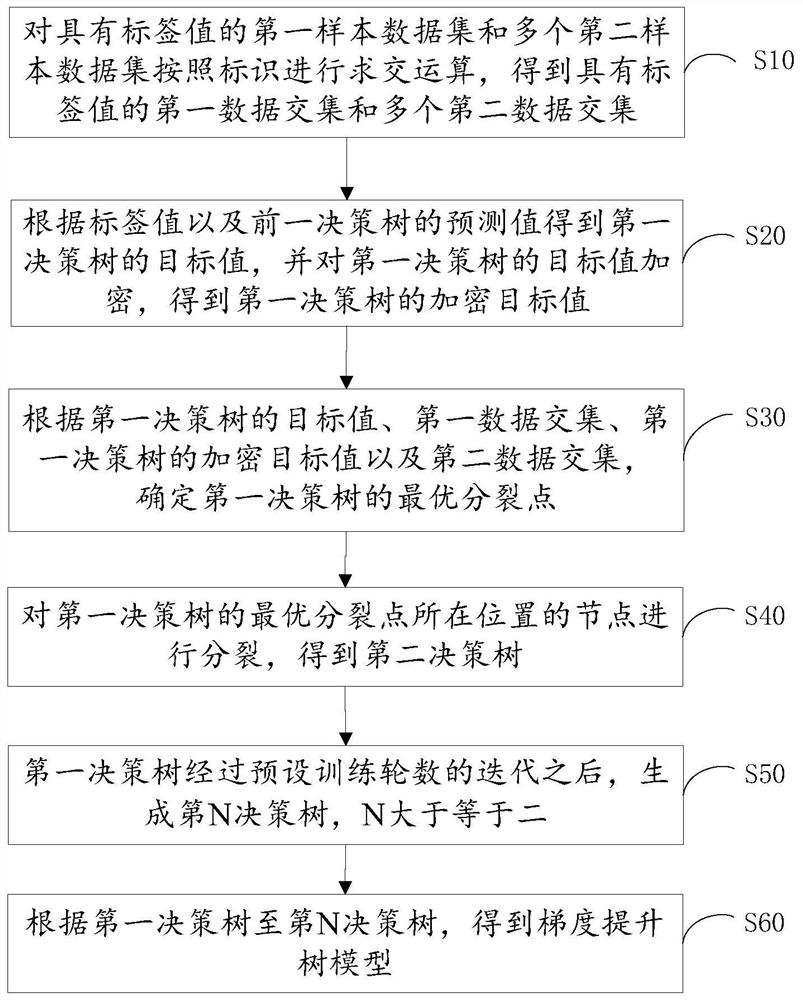

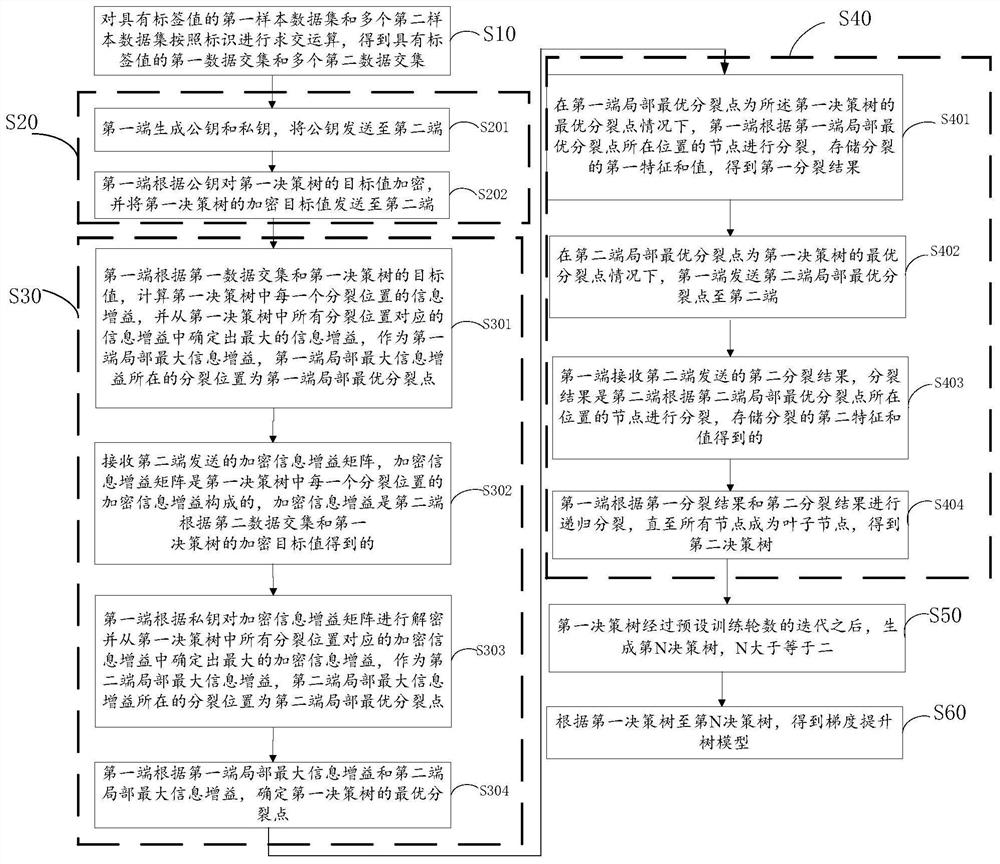

[0069] In a specific embodiment, a gradient boosting tree modeling method is provided, as shown in Figure 1, including:

[0070] Step S10: Perform an intersection operation, according to the identifiers, on the first sample data set with label values and multiple second sample data sets, to obtain a first data intersection with label values and multiple second data intersections.

[0071] In an example using two-party data, a first sample data set and a second sample data set may be acquired. The first sample data set includes a plurality of first identifiers forming a column, a first label name forming a row, and a plurality of first feature names. For example, the first identifiers may be people such as Zhang San, Li Si, Wang Wu, and Zhao Liu, and the first label name may be whether the person buys insurance. The first feature names may include weight, income, height, and the like. The first sample data set also includes a first label ...
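The identifier-based intersection of Step S10 can be illustrated with a minimal plaintext sketch. It assumes each party's data is a dictionary keyed by identifier; the function name `intersect_by_identifier`, the `deposit` feature, and all numeric values are hypothetical and do not come from the patent. The sketch compares identifiers in the clear and is not a privacy-preserving protocol.

```python
# Hypothetical two-party data keyed by identifier. The names follow the
# example in the text; every numeric value is invented for illustration.
first_data = {  # party that holds the label "buys_insurance"
    "Zhang San": {"buys_insurance": 1, "weight": 70, "income": 9000},
    "Li Si": {"buys_insurance": 0, "weight": 82, "income": 6500},
    "Wang Wu": {"buys_insurance": 1, "weight": 65, "income": 12000},
}
second_data = {  # party that holds only features ("deposit" is assumed)
    "Li Si": {"deposit": 30000},
    "Wang Wu": {"deposit": 120000},
    "Zhao Liu": {"deposit": 5000},
}


def intersect_by_identifier(first, second):
    """Keep only the rows whose identifier appears in both data sets."""
    shared = sorted(first.keys() & second.keys())
    first_common = {key: first[key] for key in shared}
    second_common = {key: second[key] for key in shared}
    return first_common, second_common


first_common, second_common = intersect_by_identifier(first_data, second_data)
print(list(first_common))
```

Both outputs share the same identifiers in the same order, so rows can later be aligned across parties by position or by key.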

Embodiment 2

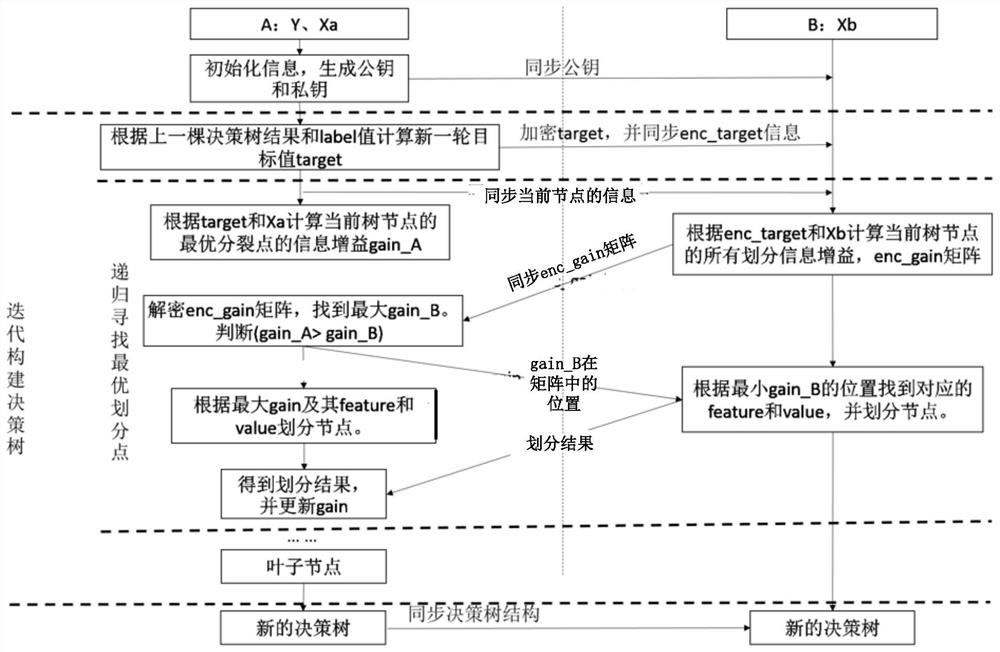

[0102] In one embodiment, multiple machines are provided, and a model training program runs on each machine. The sample data corresponding to each machine is input into that machine's local model training program, and all programs are started at the same time. While the programs run, they exchange some encrypted information to jointly generate a model. The whole process is called multi-party joint gradient boosting tree modeling.

[0103] Machine A and machine B are taken as examples for a specific description. A and B hold the first sample data set Qa and the second sample data set Qb, respectively. Qa includes a plurality of first identifiers forming a column, a label name and a plurality of first feature names forming a row, and a plurality of first label values and first feature values corresponding to the first identifiers; Qb includes a plurality of second identifiers forming a column, a...

Embodiment 3

[0125] In another specific embodiment, a gradient boosting tree modeling device is provided, as shown in Figure 8, including:

[0126] A data set intersection module 10, configured to perform an intersection operation, according to the identifiers, on the first sample data set with label values and a plurality of second sample data sets, to obtain a first data intersection with label values and a plurality of second data intersections;

[0127] A target value encryption module 20, configured to obtain the target value of the first decision tree according to the label value and the predicted value of the previous decision tree, and to encrypt the target value of the first decision tree to obtain the encrypted target value of the first decision tree;

[0128] An optimal split point determining module 30, configured to determine the second data intersection according to the target value of the first decision tree, the first data intersection, the encryption target va...
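The role of the target value encryption module can be illustrated with a minimal sketch under two assumptions that the excerpt does not confirm. First, the target value is taken to be the squared-error residual (label minus the previous prediction). Second, the encryption is taken to be a toy Paillier scheme, chosen only because additively homomorphic encryption is common in federated tree boosting. All function names and the `SCALE` constant are hypothetical, and the tiny primes make this unsuitable for real use.

```python
import math
import secrets

SCALE = 1000  # assumed fixed-point scale for encoding floats as integers


def residual_targets(labels, previous_predictions):
    """Targets for the next tree, assuming squared-error loss."""
    return [y - p for y, p in zip(labels, previous_predictions)]


def keygen(p=10007, q=10009):
    """Toy Paillier key pair from two small primes (NOT secure)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n, value):
    m = round(value * SCALE) % n  # negative values wrap modulo n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    n2 = n * n
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    m = ((pow(ciphertext, lam, n * n) - 1) // n) * mu % n
    if m > n // 2:  # undo the modular wrap for negative values
        m -= n
    return m / SCALE


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)


labels = [1, 0, 1]
previous_predictions = [0.5, 0.5, 0.5]
targets = residual_targets(labels, previous_predictions)

n, private_key = keygen()
encrypted_targets = [encrypt(n, t) for t in targets]

# A party without the private key can still sum the encrypted targets.
encrypted_sum = encrypted_targets[0]
for c in encrypted_targets[1:]:
    encrypted_sum = add_encrypted(n, encrypted_sum, c)

print(targets, decrypt(n, private_key, encrypted_sum))
```

In this sketch only the label-holding party can decrypt, while the other party can aggregate encrypted targets over candidate sample subsets. Whether the patent's split search works this way is not stated in the excerpt.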
