Multi-modal intention reverse active fusion human-computer interaction method

A human-computer interaction method based on multimodal technology, applied in the field of human-computer interaction. It addresses the low accuracy with which the real intentions of elderly users are recognized, and achieves more accurate intention recognition.


Detailed Description of Embodiments

[0053] It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

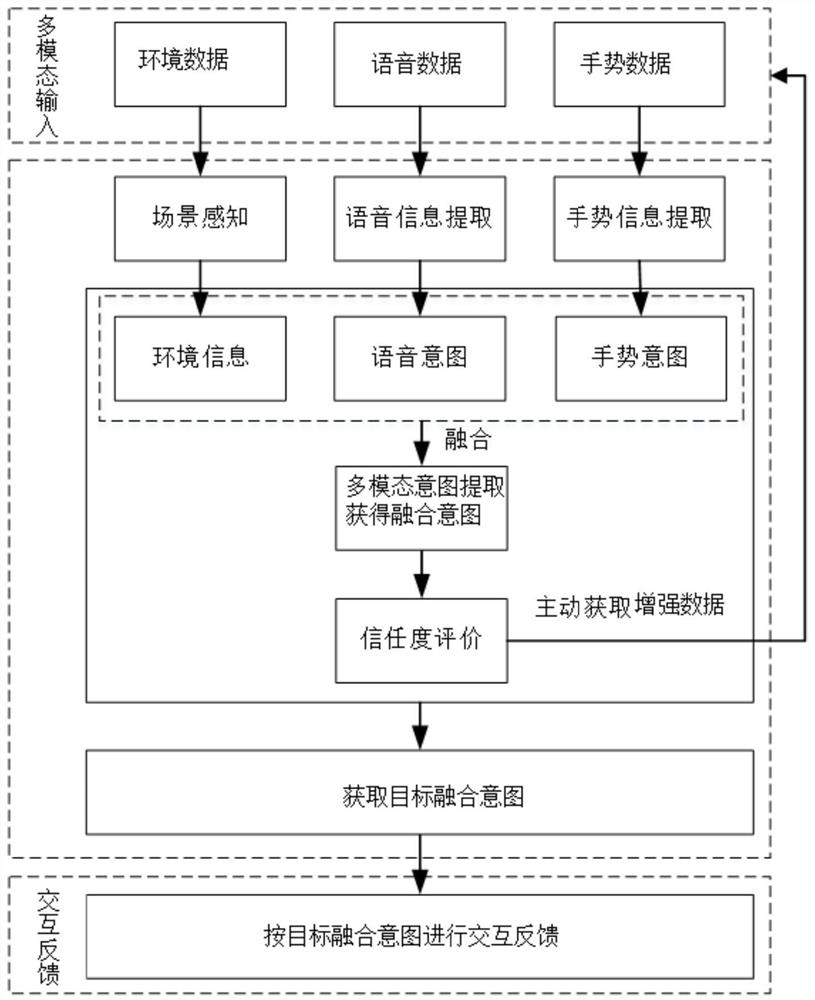

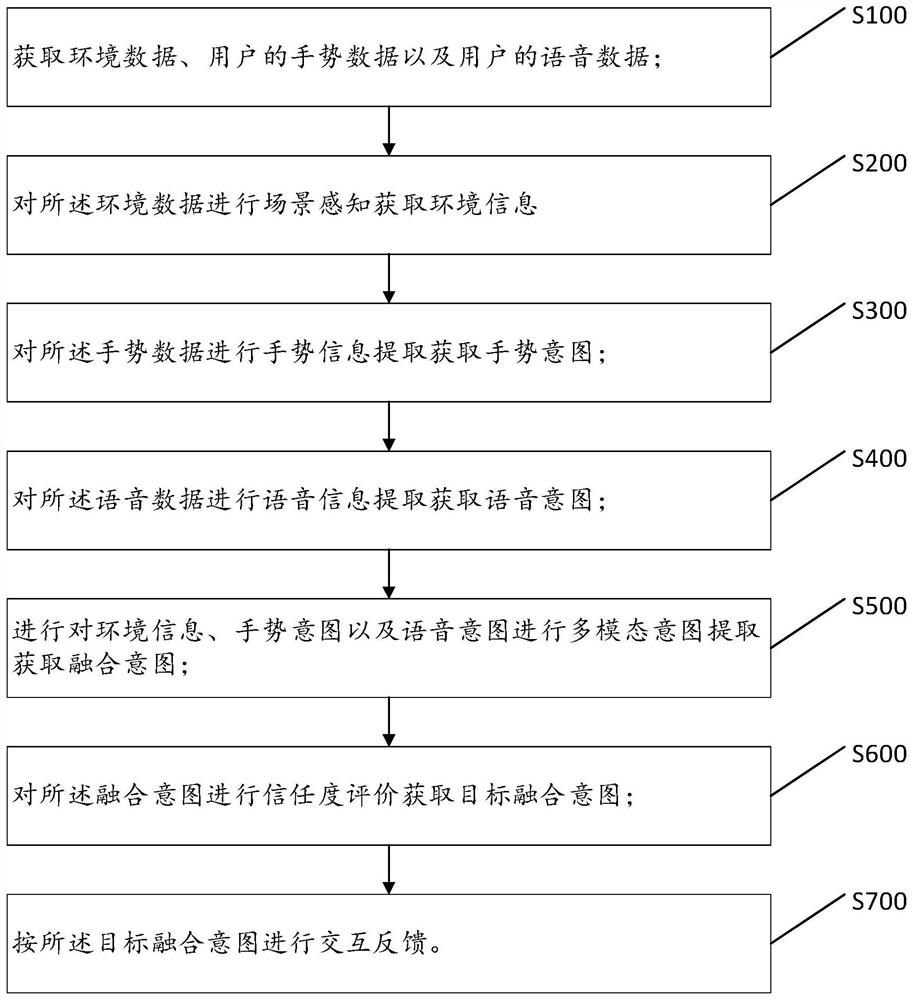

[0054] Referring to Figures 1 and 2, the present invention provides a human-computer interaction method based on reverse active fusion of multimodal intentions, comprising:

[0055] S100: acquire environmental data, user gesture data, and user voice data. Specifically, environmental data in video format and user gesture data are acquired through an RGB-D depth camera, and user voice data in audio format is acquired through a microphone. When collecting environmental data, the RGB-D depth camera rotates 360° horizontally and records the moment at which the environmental data is collected.
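The 360° horizontal sweep in S100 can be illustrated with a short sketch that plans the camera's pan stops and stamps each captured frame with its capture moment. This is not the patented implementation: the horizontal field of view (`hfov_deg`), the overlap between neighbouring views (`overlap_deg`), the `EnvironmentFrame` record, and the `grab` camera hook are all hypothetical names and parameters introduced for illustration.

```python
import math
import time
from dataclasses import dataclass


@dataclass
class EnvironmentFrame:
    """Hypothetical record for one environment frame captured during the sweep."""
    pan_deg: float      # horizontal pan angle of the RGB-D camera, in degrees
    timestamp: float    # moment of capture, seconds since the epoch
    rgb: bytes = b""    # placeholder for the colour image
    depth: bytes = b""  # placeholder for the depth map


def sweep_angles(hfov_deg: float, overlap_deg: float = 10.0) -> list[float]:
    """Return pan angles (degrees) whose views jointly cover 360 degrees.

    Assumes each stop sees hfov_deg horizontally and that adjacent views
    should overlap by at least overlap_deg (both values are assumptions).
    """
    if not 0 <= overlap_deg < hfov_deg:
        raise ValueError("overlap must be non-negative and smaller than the FOV")
    step = hfov_deg - overlap_deg          # largest allowed spacing between stops
    n_stops = math.ceil(360.0 / step)      # enough stops to close the circle
    # Spread the stops evenly so the last view wraps back onto the first.
    return [i * 360.0 / n_stops for i in range(n_stops)]


def capture_sweep(hfov_deg: float, grab=None) -> list[EnvironmentFrame]:
    """Visit every pan stop and record the moment each frame is taken.

    grab is a hypothetical callable grab(angle) -> (rgb, depth) standing in
    for a real camera/pan-tilt driver; without it, empty frames are stored.
    """
    frames = []
    for angle in sweep_angles(hfov_deg):
        rgb, depth = grab(angle) if grab else (b"", b"")
        frames.append(EnvironmentFrame(pan_deg=angle, timestamp=time.time(),
                                       rgb=rgb, depth=depth))
    return frames
```

For an illustrative 87° horizontal field of view with 10° overlap, `sweep_angles(87, 10)` yields five stops spaced 72° apart. Spacing the stops evenly, rather than stepping by a fixed increment and truncating at 360°, keeps the overlap uniform all the way around, including between the last and first views.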

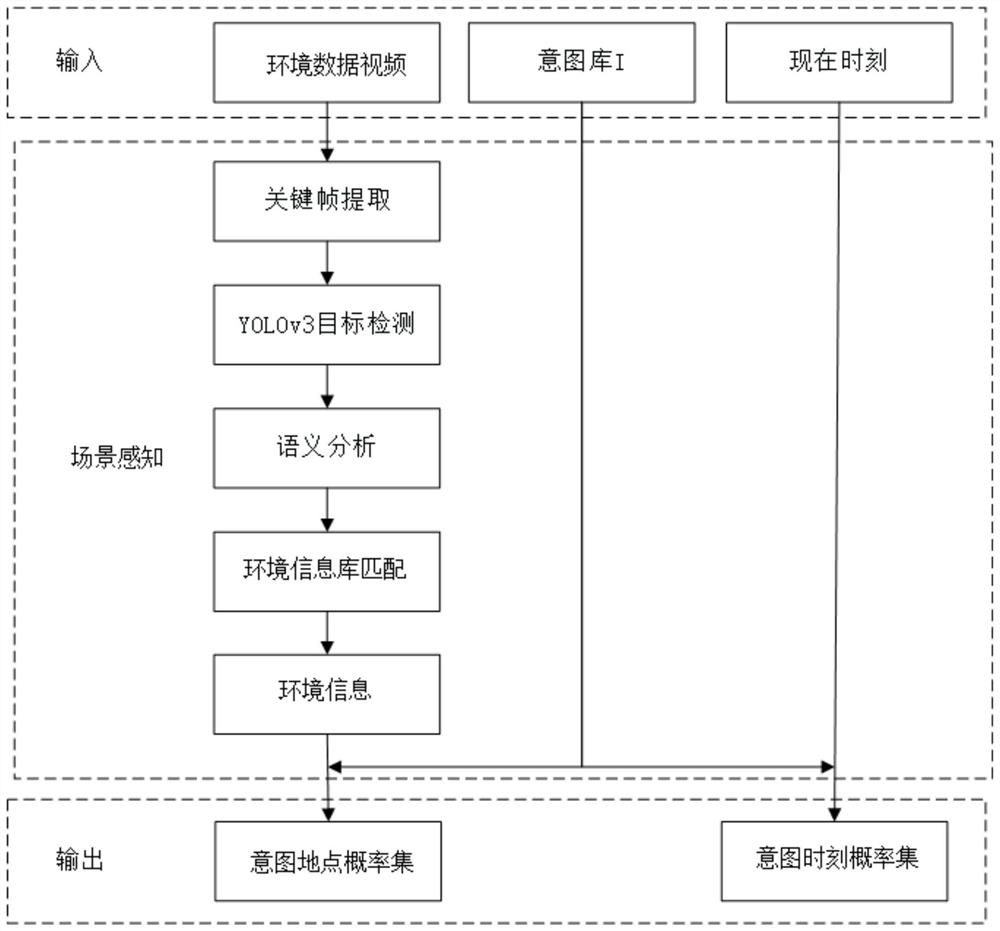

[0056] S200: perform scene perception on the environmental data to obtain environmental information. Specifically, as shown in Figures 3 and 4, performing scene perception on the environment...
