Visual odometer construction method in robot visual navigation

A visual odometry and robot vision technology for constructing a visual odometer in robot visual navigation. It addresses feature point mismatches and poor robustness and accuracy, problems that degrade both positioning accuracy and mapping accuracy.

Detailed Description of the Embodiments

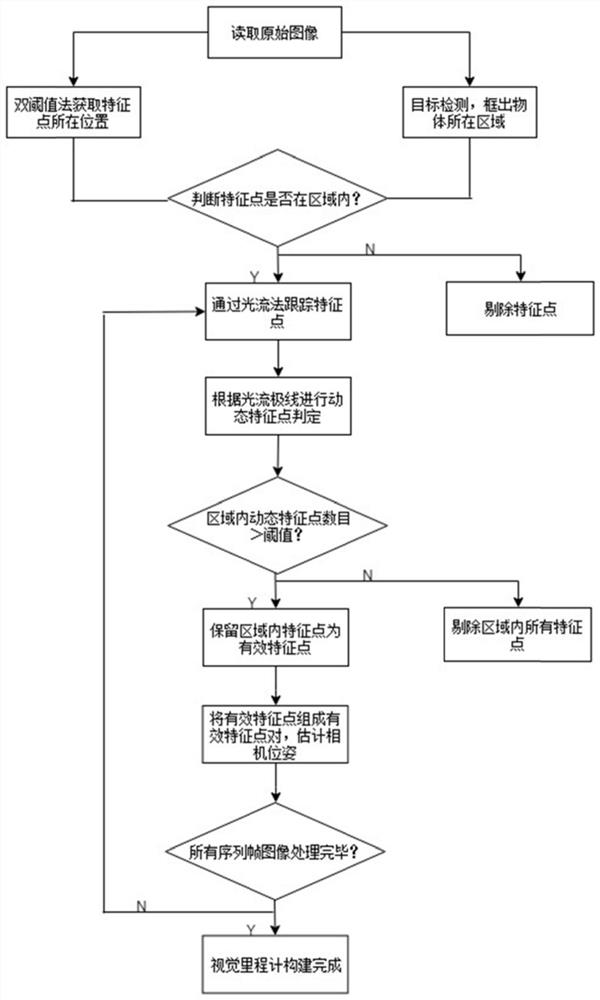

[0068] As shown in Figure 1, the present invention discloses a method for constructing a visual odometer in robot visual navigation, comprising the following steps:

[0069] Step 1: read the original image, extract feature points, and obtain their positions using the double-threshold method;

[0070] Step 2: perform object detection on the original image with the YOLOv3 algorithm to obtain the positions of the object bounding boxes. Figure 3 shows the detection result, in which bounding boxes are drawn around tricycles, pedestrians, and cars.

[0071] Step 3: using the feature point positions and the object bounding box positions, determine whether each feature point lies inside an object bounding box, discard the feature points outside the bounding boxes, and keep those inside;

[0072] Step 4: perform optical flow tracking on the feature points in the bounding box of...
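The source does not define the "double threshold method" in Step 1. One common scheme of that shape, used in ORB-SLAM2's ORB extractor (default `iniThFAST` 20, `minThFAST` 7), runs the FAST corner test per grid cell with a strict threshold and retries with a lenient one in cells where nothing is found. The sketch below assumes that interpretation; `double_threshold_fast`, the 16-pixel cell size, and the list-of-rows grayscale image format are illustrative assumptions, not details from the patent.

```python
from typing import List, Tuple

Image = List[List[float]]
Point = Tuple[int, int]

# 16-pixel Bresenham circle of radius 3 used by the FAST segment test,
# as (dx, dy) offsets listed in order around the circle.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: Image, x: int, y: int, t: float, n: int = 9) -> bool:
    """FAST-n test: n contiguous circle pixels all brighter than p+t or all darker than p-t."""
    p = img[y][x]
    labels = []
    for dx, dy in CIRCLE:
        v = img[y + dy][x + dx]
        labels.append(1 if v > p + t else (-1 if v < p - t else 0))
    run, prev = 0, 0
    for lab in labels + labels:  # doubled so arcs that wrap around are counted
        run = run + 1 if (lab != 0 and lab == prev) else (1 if lab != 0 else 0)
        prev = lab
        if run >= n:
            return True
    return False


def detect_in_cell(img: Image, x0: int, y0: int, x1: int, y1: int, t: float) -> List[Point]:
    """All FAST corners in [x0, x1) x [y0, y1), skipping the 3-pixel image border."""
    h, w = len(img), len(img[0])
    return [(x, y)
            for y in range(max(y0, 3), min(y1, h - 3))
            for x in range(max(x0, 3), min(x1, w - 3))
            if is_fast_corner(img, x, y, t)]


def double_threshold_fast(img: Image, cell: int = 16,
                          t_high: float = 20, t_low: float = 7) -> List[Point]:
    """Hypothetical double-threshold detector: strict threshold first, lenient fallback per cell."""
    h, w = len(img), len(img[0])
    points: List[Point] = []
    for y0 in range(0, h, cell):
        for x0 in range(0, w, cell):
            pts = detect_in_cell(img, x0, y0, x0 + cell, y0 + cell, t_high)
            if not pts:  # low-texture cell: retry with the permissive threshold
                pts = detect_in_cell(img, x0, y0, x0 + cell, y0 + cell, t_low)
            points.extend(pts)
    return points
```

On a low-contrast patch a single threshold of 20 returns nothing, while the per-cell fallback still recovers corners; keeping some features in weakly textured regions is the usual motivation for a two-threshold scheme.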
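Step 2 only needs the bounding box positions produced by YOLOv3. Running the network itself does not fit in a short example, but YOLOv3's raw predictions are normally reduced to final boxes by a confidence threshold followed by per-class non-maximum suppression (NMS). The sketch assumes detections are already decoded to corner coordinates (x1, y1, x2, y2) with a score and a class label; the `Detection` type, the 0.5 score threshold, and the 0.45 IoU threshold are illustrative assumptions.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    label: str


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets: List[Detection], score_thr: float = 0.5,
                iou_thr: float = 0.45) -> List[Detection]:
    """Confidence filtering followed by greedy per-class NMS."""
    candidates = sorted((d for d in dets if d.score >= score_thr),
                        key=lambda d: d.score, reverse=True)
    kept: List[Detection] = []
    for d in candidates:
        # Suppress d only if a higher-scoring box of the same class overlaps it strongly.
        if all(k.label != d.label or iou(k, d) < iou_thr for k in kept):
            kept.append(d)
    return kept
```

NMS is applied per class so that, for example, a pedestrian standing in front of a car is not suppressed by the car's box.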
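The filtering in Step 3 reduces to a point-in-rectangle test against every detected box. A minimal sketch, assuming boxes are given as (x1, y1, x2, y2) pixel corners and that points on a box edge count as inside (the source does not say how boundaries are handled); `keep_points_in_boxes` is a hypothetical name.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners


def in_box(p: Point, box: Box) -> bool:
    """True if point p lies inside box, edges included."""
    x, y = p
    x1, y1, x2, y2 = box
    return x1 <= x <= x2 and y1 <= y <= y2


def keep_points_in_boxes(points: Iterable[Point], boxes: List[Box]) -> List[Point]:
    """Keep feature points lying inside at least one detected bounding box."""
    return [p for p in points if any(in_box(p, b) for b in boxes)]
```

The cost is O(N·M) for N points and M boxes, which is negligible for the handful of objects a detector typically reports per frame.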
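The text of Step 4 is truncated, so the exact tracker is unknown. Sparse feature tracking is commonly done with the Lucas-Kanade method (OpenCV's `calcOpticalFlowPyrLK` is a pyramidal, iterative version). The sketch below is a single-level, single-iteration Lucas-Kanade step for one point under a small-motion assumption; `lucas_kanade_point`, the 7x7 window, and the list-of-rows image format are illustrative assumptions.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]


def lucas_kanade_point(img1: Image, img2: Image, x: int, y: int,
                       half: int = 3) -> Optional[Tuple[float, float]]:
    """One Lucas-Kanade step: estimate where pixel (x, y) of img1 moved to in img2.

    Solves G d = -b over a (2*half+1)^2 window, where G sums the outer products
    of img1's gradients and b sums gradient * temporal difference.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for j in range(y - half, y + half + 1):
        for i in range(x - half, x + half + 1):
            ix = (img1[j][i + 1] - img1[j][i - 1]) / 2.0  # central differences
            iy = (img1[j + 1][i] - img1[j - 1][i]) / 2.0
            it = img2[j][i] - img1[j][i]  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None  # flat patch or pure edge (aperture problem): not trackable
    # Closed-form inverse of the 2x2 system G d = -b.
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return x + u, y + v
```

In practice this step is iterated and run over an image pyramid so that displacements larger than a pixel or two can be recovered.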
