Method and system for driving character gesture by voice

A technology for driving character gestures by voice, applied in the field of computer vision. It addresses problems such as the inability to generate continuous gestures and the inability to properly generate two gestures at the same time, and it has wide applicability.


Embodiment Construction

[0018] The technical solutions of the present invention are further described below with reference to the accompanying drawings and specific embodiments.

[0019] The accompanying drawings are for illustrative purposes only. They are schematic diagrams rather than physical drawings and should not be construed as limiting this patent. To better illustrate the embodiments of the present invention, some parts of the drawings may be omitted, enlarged, or reduced, and they do not represent the size of the actual product. Those skilled in the art will understand that certain well-known structures and their descriptions may be omitted from the drawings.

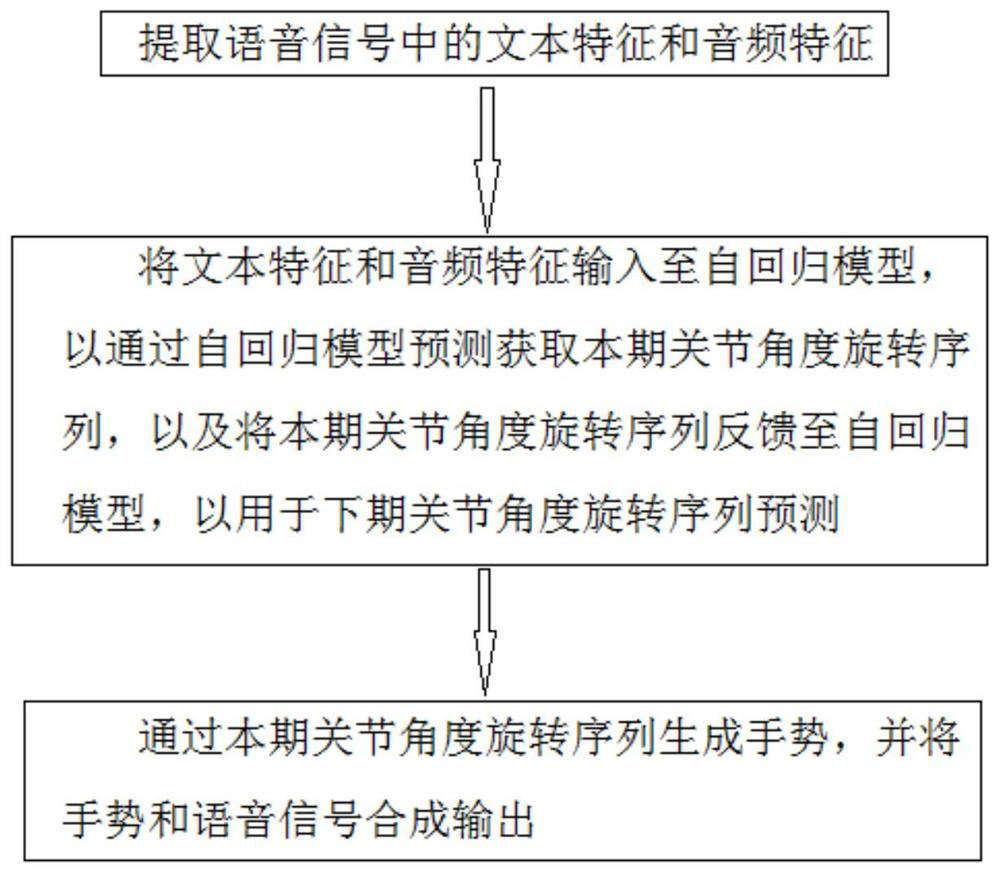

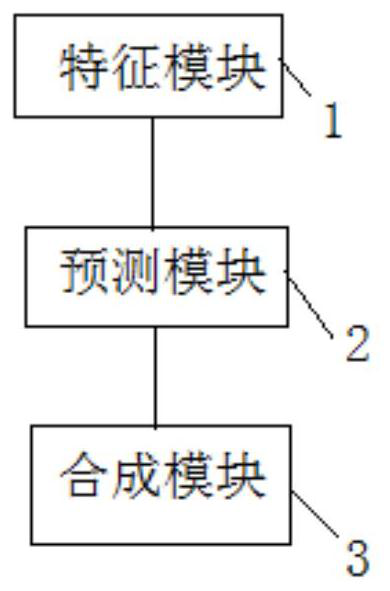

[0020] A voice-driven character gesture method provided by an embodiment of the present invention, as shown in Figure 1, includes the following steps:

[0021] Extract text features and audio features from the speech signal;

[0022] Input the text features and audio f...
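The feature-extraction step in [0021] can be illustrated with a minimal sketch. The text above does not say which text or audio features are used, so everything here is an assumption made for illustration: the names `SpeechFeatures`, `frame_rms`, and `extract_features` are hypothetical, per-frame RMS energy stands in for the audio features, lowercase whitespace tokens stand in for the text features, and the transcript is assumed to come from a speech recognizer that is not shown.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SpeechFeatures:
    """Hypothetical container pairing text and audio features for one utterance."""
    text_tokens: List[str]
    frame_energies: List[float]


def frame_rms(samples: Sequence[float], frame_len: int, hop_len: int) -> List[float]:
    """Return the RMS energy of each full frame (a stand-in audio feature)."""
    energies = []
    # Slide a window of frame_len samples forward by hop_len; skip partial frames.
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def extract_features(
    samples: Sequence[float],
    transcript: str,
    frame_len: int = 400,   # assumed: 25 ms at 16 kHz
    hop_len: int = 160,     # assumed: 10 ms at 16 kHz
) -> SpeechFeatures:
    """Build text and audio features from raw samples and an assumed transcript."""
    tokens = transcript.lower().split()  # stand-in text feature: word tokens
    return SpeechFeatures(tokens, frame_rms(samples, frame_len, hop_len))


if __name__ == "__main__":
    feats = extract_features([1.0] * 800, "Hello world")
    print(feats.text_tokens, len(feats.frame_energies))
```

Both feature sequences would then be passed to the model described in the next step. A real system would likely use richer representations, such as spectral audio features and learned word embeddings, in place of these stand-ins.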
