Data reuse method for convolution calculation based on a heterogeneous many-core processor

This technology concerns many-core processors and convolution and is applied in the field of deep learning. It reduces memory access requirements, delivers high performance, and improves the data reuse rate.


Embodiment

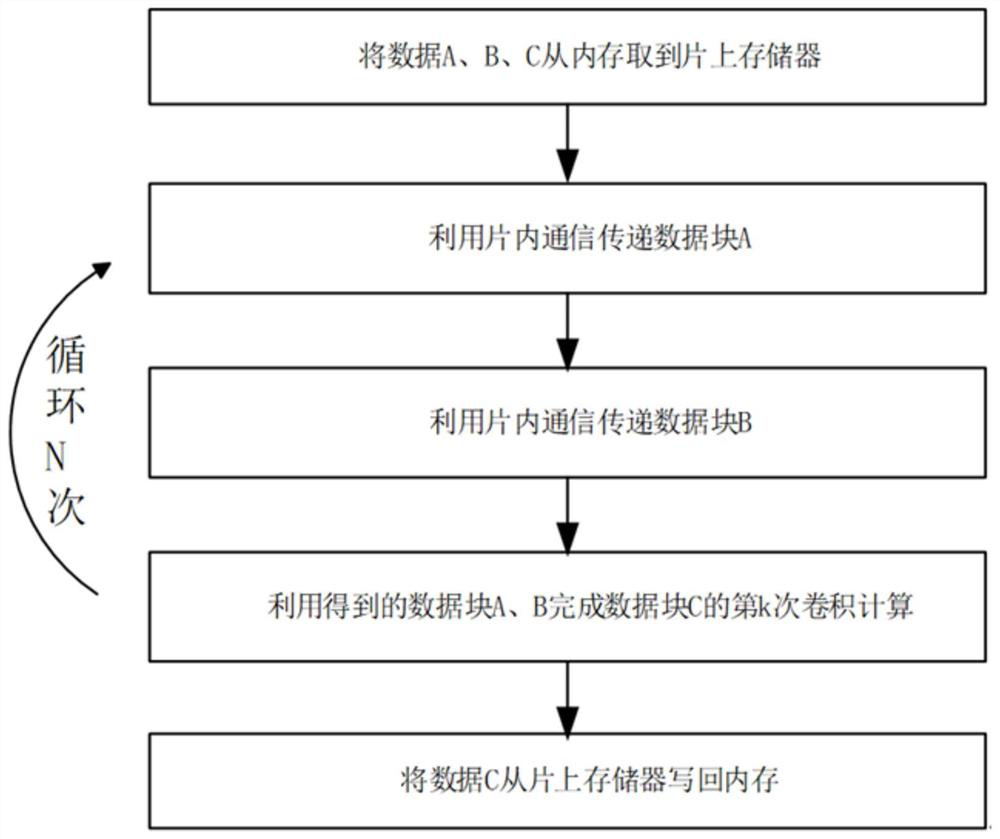

[0015] Embodiment: a data reuse method for convolution calculation on a heterogeneous many-core processor. On a large-scale heterogeneous system, the CPU computes the convolution of data block C from data block A and data block B through the following steps:

[0016] S1. Based on the number of cores NUM of the heterogeneous many-core processor, map its cores two-dimensionally into an N*N core array, where N is the largest integer not exceeding the square root of NUM, and number the N*N cores. Divide data block A, data block B, and data block C each into N*N equal two-dimensional blocks. The (i, j)th core reads the (j, i)th blocks of data block A, data block B, and data block C from memory into its own on-chip memory. Computing the convolution of data block C(i, j) requires data block A(i, k) and data block B(k, j), where k=...
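The core mapping and block partitioning in S1 can be sketched in Python. This is a minimal, sequential sketch under stated assumptions, not the patented implementation: it treats the convolution as a block matrix product (suggested by the A(i, k), B(k, j) indexing, for example after an im2col-style lowering, which the source does not state), numbers the N*N cores in row-major order, and sums k over all N block indices because the source text is truncated at "k=...". All function names are hypothetical, and the sketch does not model on-chip memory or the (j, i) read pattern.

```python
import math


def grid_size(num_cores):
    """N = largest integer not exceeding sqrt(NUM)."""
    if num_cores < 1:
        raise ValueError("need at least one core")
    return math.isqrt(num_cores)


def core_coords(core_id, n):
    """Hypothetical row-major numbering: core_id -> (i, j) on the N*N grid."""
    return divmod(core_id, n)


def get_block(m, i, j, n):
    """Return block (i, j) of matrix m (list of rows) split into N*N equal parts."""
    br, bc = len(m) // n, len(m[0]) // n
    return [row[j * bc:(j + 1) * bc] for row in m[i * br:(i + 1) * br]]


def matmul(x, y):
    """Plain dense product of two list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add_into(acc, x):
    """Accumulate x into acc element by element."""
    for r, row in enumerate(x):
        for c, v in enumerate(row):
            acc[r][c] += v


def compute_c_block(A, B, i, j, n):
    """C(i, j) accumulates A(i, k) * B(k, j); ASSUMPTION: k runs over 0..N-1."""
    br, bc = len(A) // n, len(B[0]) // n
    acc = [[0] * bc for _ in range(br)]
    for k in range(n):
        add_into(acc, matmul(get_block(A, i, k, n), get_block(B, k, j, n)))
    return acc


def blocked_multiply(A, B, num_cores):
    """Compute C = A * B block by block, one C block per (simulated) core."""
    n = grid_size(num_cores)
    rows, inner, cols = len(A), len(B), len(B[0])
    if len(A[0]) != inner or rows % n or inner % n or cols % n:
        raise ValueError("dimensions must match and be divisible by N")
    C = [[0] * cols for _ in range(rows)]
    br, bc = rows // n, cols // n
    for core_id in range(n * n):  # on real hardware these run in parallel
        i, j = core_coords(core_id, n)
        block = compute_c_block(A, B, i, j, n)
        for r in range(br):
            C[i * br + r][j * bc:(j + 1) * bc] = block[r]
    return C
```

For example, with NUM = 10 cores, `grid_size(10)` gives N = 3, so one core is left unused and 3x3 inputs are split into 1x1 blocks. Each C(i, j) depends only on row i of A's blocks and column j of B's blocks, which is what makes sharing those blocks among cores in the same grid row or column worthwhile.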

