48 results about how to achieve "Full use of computing power" in patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

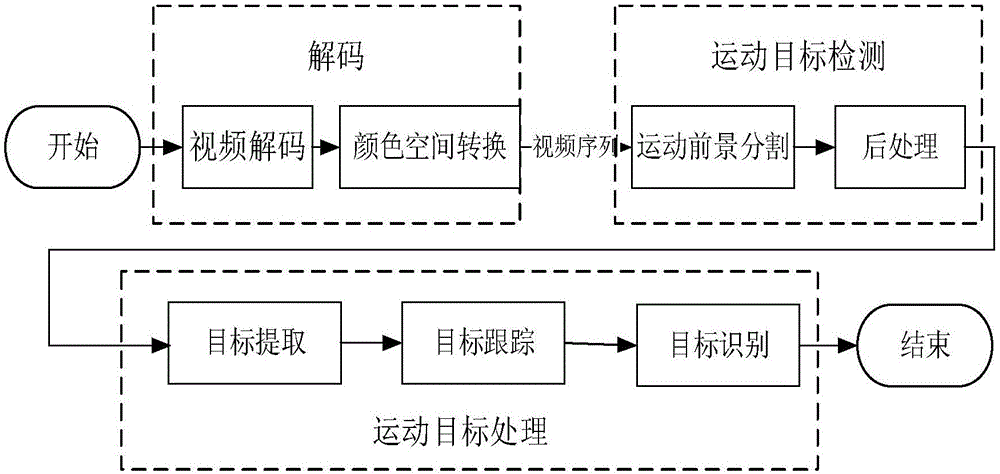

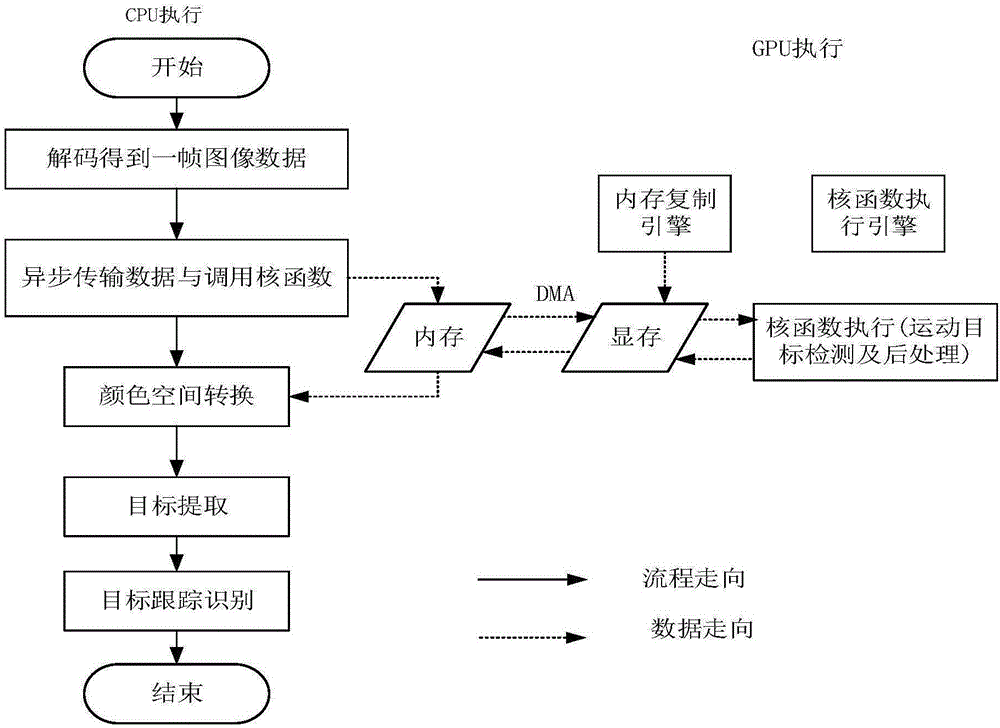

Video analysis and acceleration method based on a thread-level pipeline

Active | CN106358003A | Full use of computing power | Reduce communication overhead | Television system details | Color television details | Adjacent level | Computational resource

The invention discloses a video analysis and acceleration method. The method comprises the following steps: dividing the video frame processing task into four levels of subtasks in sequential order and allocating the subtasks to the GPU and the CPU for processing; implementing each level of subtask in its own thread, which passes its output to the thread of the next-level subtask after processing, so that all threads execute concurrently; having a thread pause and wait when no new task exists or when the thread of the next-level subtask has not finished processing; using a first-in first-out (FIFO) buffer queue to transfer data between the threads of two adjacent subtask levels; and, for two subtasks with no dependency relationship, achieving asynchronous, concurrent cooperation between CPU and GPU subtasks through asynchronous CUDA function calls. The method makes effective use of the various computing resources in a heterogeneous system, establishes a reasonable task scheduling mechanism, and reduces communication overhead between processors, so that the computing power of each resource is brought into full play and system efficiency is improved.

Owner:HUAZHONG UNIV OF SCI & TECH
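
The thread-level pipeline described in this abstract can be sketched in Python as follows. This is a minimal illustration, assuming each level is an ordinary function and using the standard `queue.Queue` as the FIFO buffer between adjacent levels; the CPU/GPU split and the asynchronous CUDA calls are not modeled, and because of Python's GIL the sketch shows the structure of the pipeline rather than its speed-up. The stage functions in the usage lines are hypothetical stand-ins for decoding, analysis and similar steps.

```python
import queue
import threading

_END = object()  # unique end-of-stream marker (an assumption of this sketch)


def stage(work, inbox, outbox):
    """One pipeline level running in its own thread."""
    while True:
        item = inbox.get()        # pauses here until the previous level delivers data
        if item is _END:
            outbox.put(_END)      # tell the next level that the stream is finished
            return
        outbox.put(work(item))    # pauses here if the next level's FIFO is full


def run_pipeline(frames, levels, capacity=4):
    """Push frames through len(levels) concurrent stages linked by FIFO queues."""
    # Bounded FIFOs between adjacent levels; the final result queue is unbounded so the
    # feeding loop below cannot deadlock against results that nobody has drained yet.
    fifos = [queue.Queue(maxsize=capacity) for _ in levels] + [queue.Queue()]
    threads = [threading.Thread(target=stage, args=(work, fifos[i], fifos[i + 1]))
               for i, work in enumerate(levels)]
    for t in threads:
        t.start()
    for frame in frames:
        fifos[0].put(frame)
    fifos[0].put(_END)
    for t in threads:
        t.join()
    results = []
    while True:
        item = fifos[-1].get()
        if item is _END:
            break
        results.append(item)
    return results


if __name__ == "__main__":
    levels = [lambda f: f + 1, lambda f: f * 2, lambda f: f - 3, str]  # hypothetical stages
    print(run_pipeline(range(5), levels))  # ['-1', '1', '3', '5', '7']
```

Because each level has exactly one thread and every queue is FIFO, output order matches input order without any extra bookkeeping.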

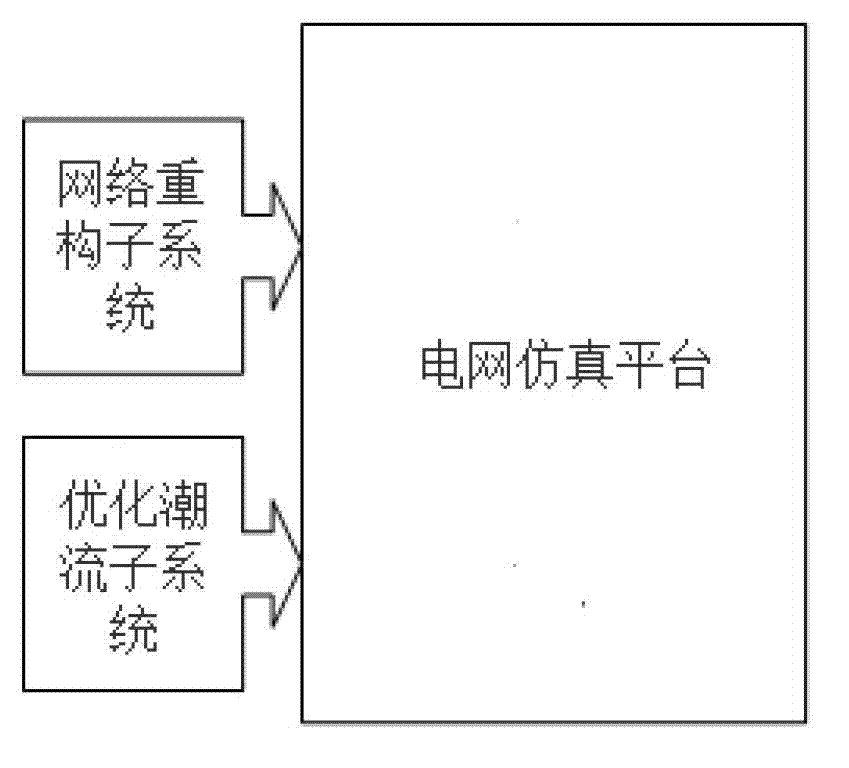

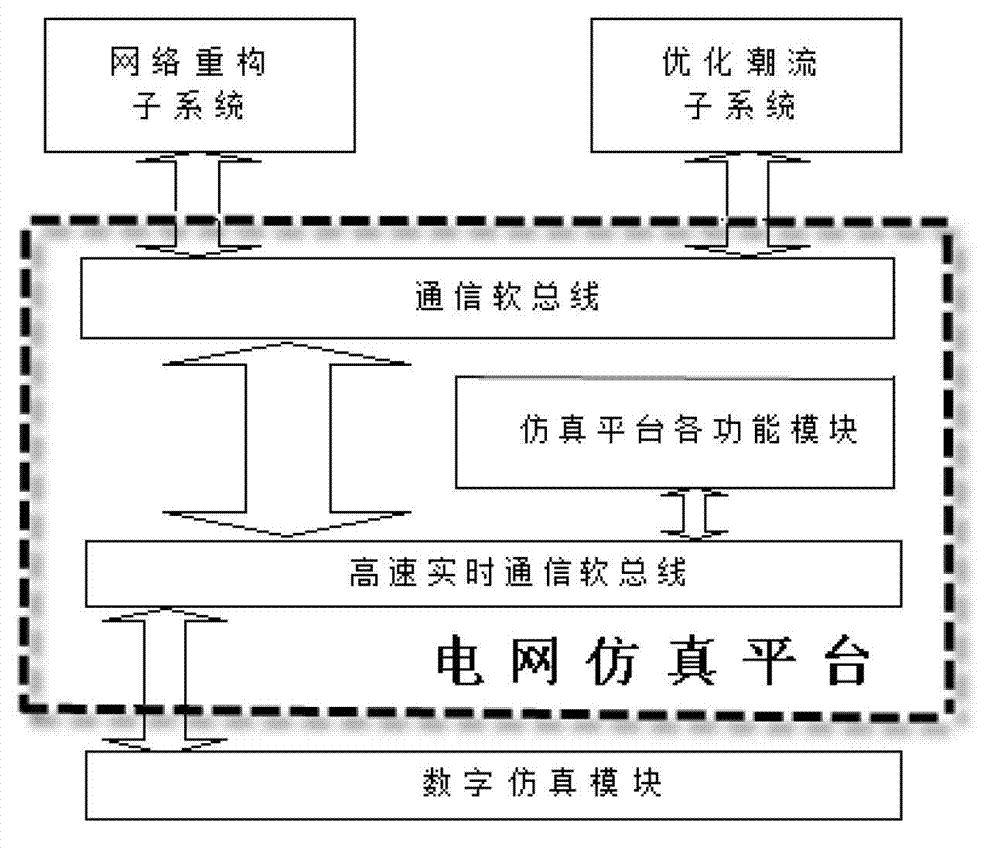

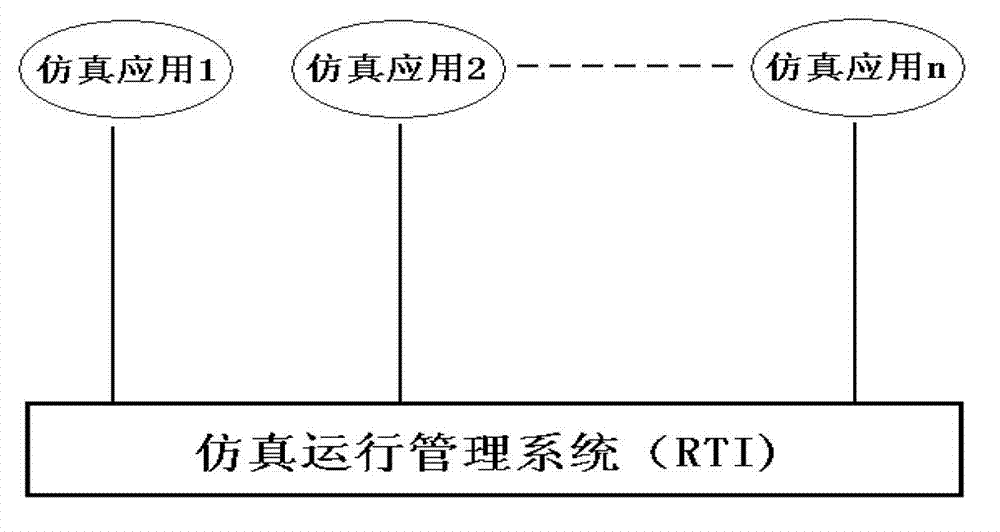

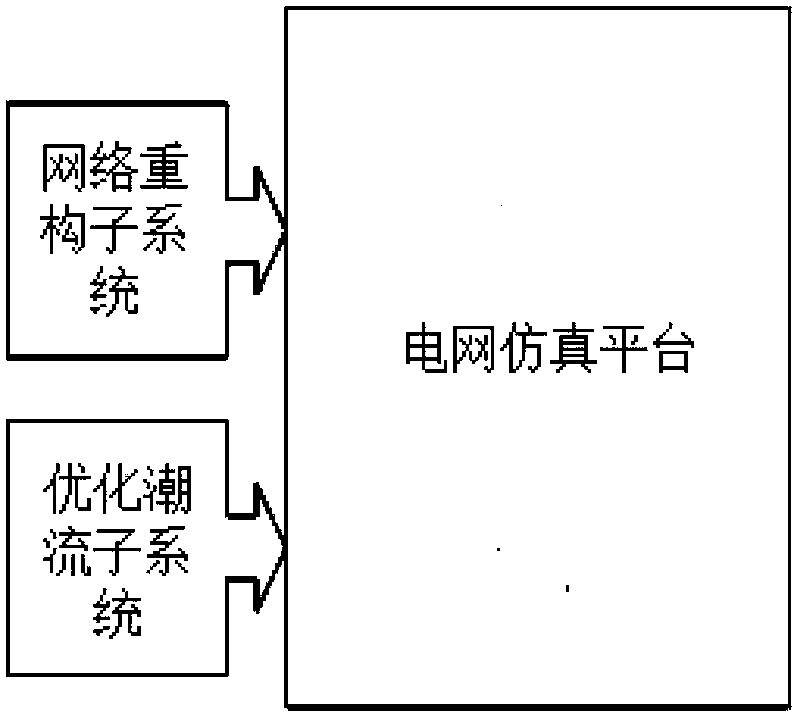

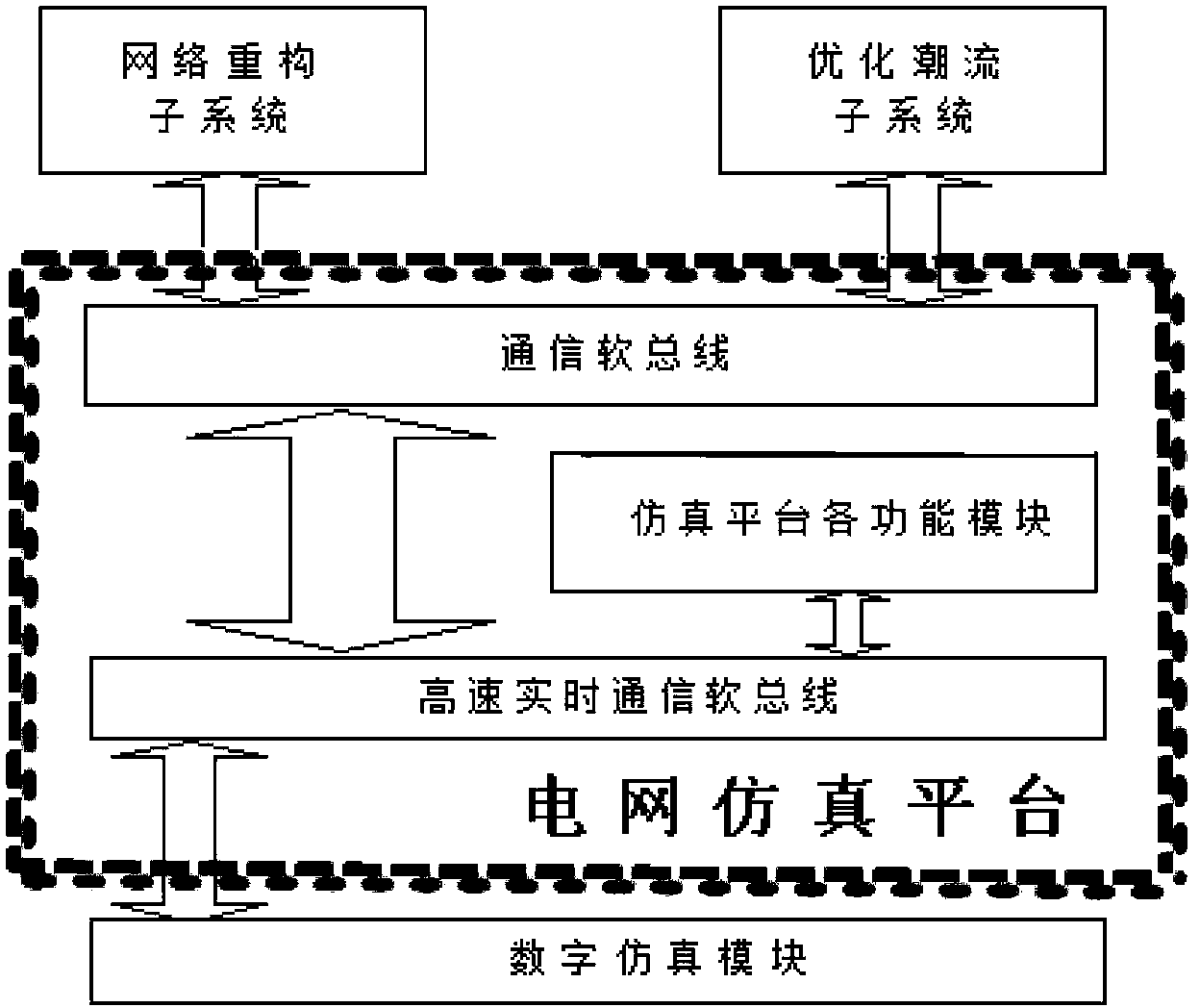

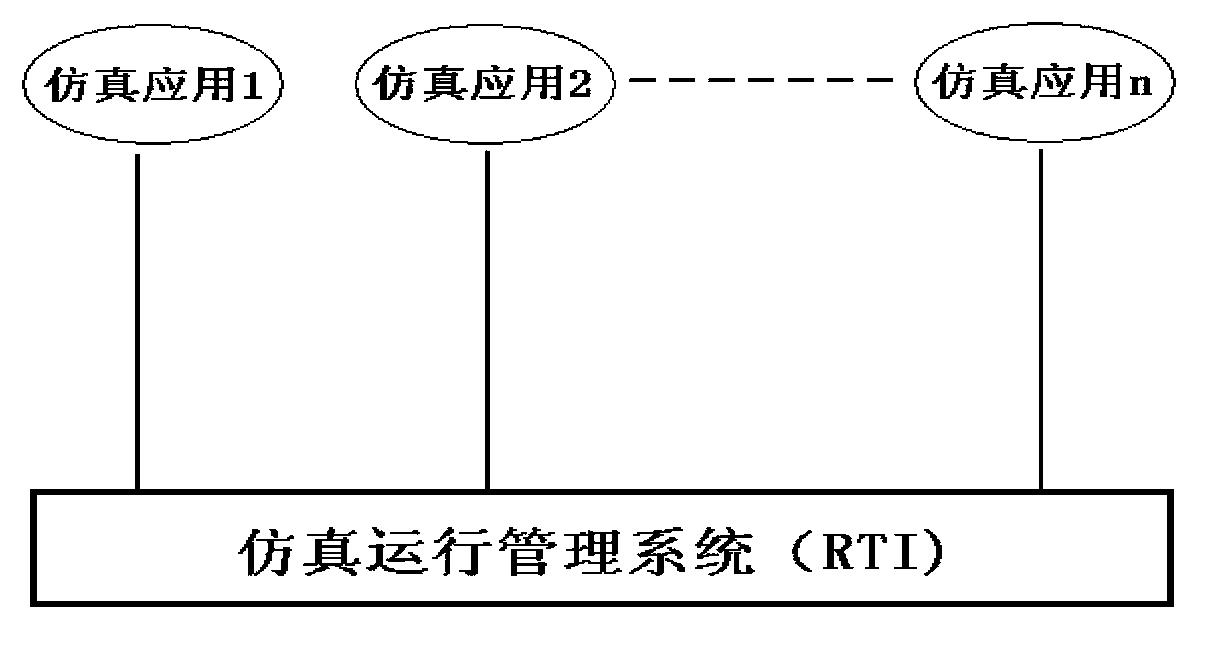

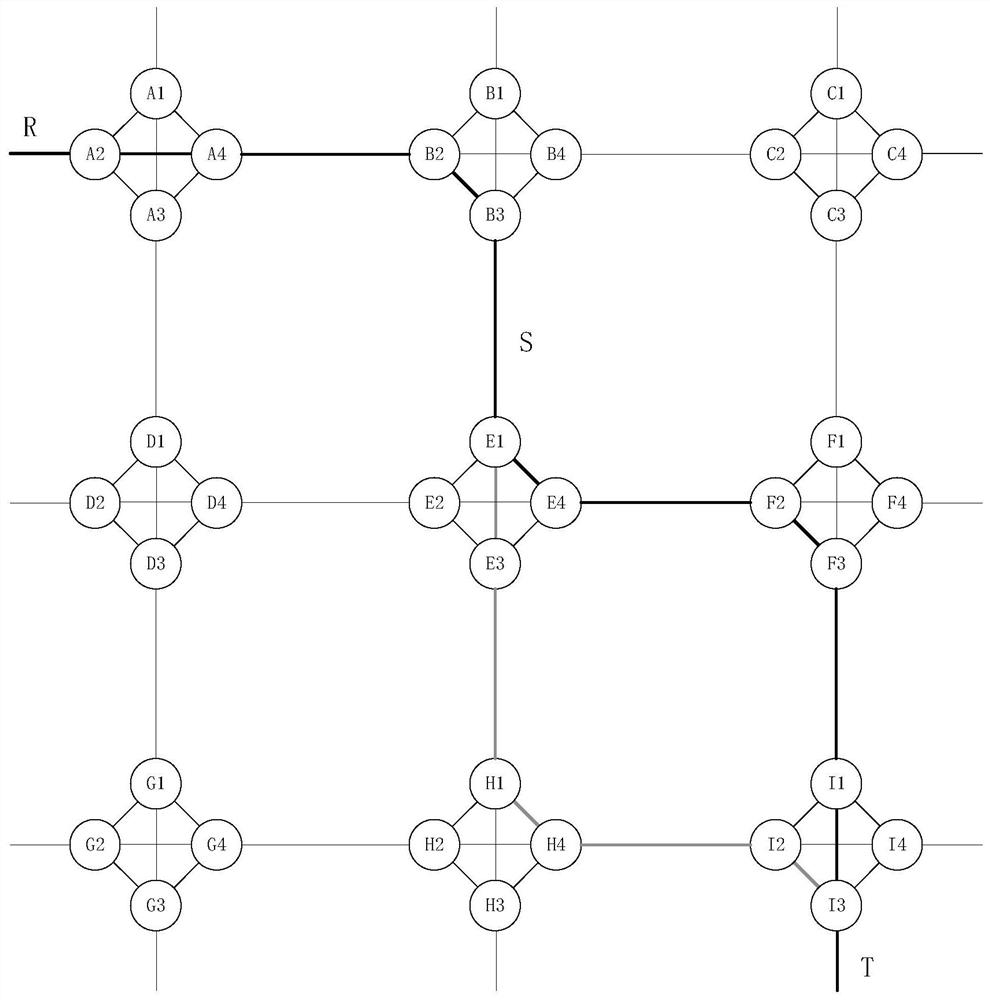

Power network planning construction method based on network reconstruction and optimized load-flow simulating calculation

Active | CN103199521A | Reduce maintenance | Easy access | Flexible AC transmission | AC network circuit arrangements | Electric power system | Power grid

The invention relates to a power network planning construction method based on network reconstruction and optimized load-flow simulation calculation. The method comprises the following steps: the load flow of the power system is calculated by a digital simulation module in a power network simulation platform; an operator sets the constraints of the future power network; interface service calls for power network planning are added; a network reconstruction subsystem obtains the power network data, the rules it follows in calculation and its calculation constraints are determined, and it obtains an optimal solution; the network reconstruction result is manually corrected and written into the power network simulation platform; an optimized load-flow subsystem obtains the power network data, its constraints are determined before calculation, and the optimized load-flow calculation is carried out; and the result is exported to the power network simulation platform, manually corrected, and the corrected result is stored in the platform. The method greatly improves the working efficiency of power network planning and of determining the power network operation mode, creating a convenient and efficient power network planning workflow.

Owner:STATE GRID TIANJIN ELECTRIC POWER +2

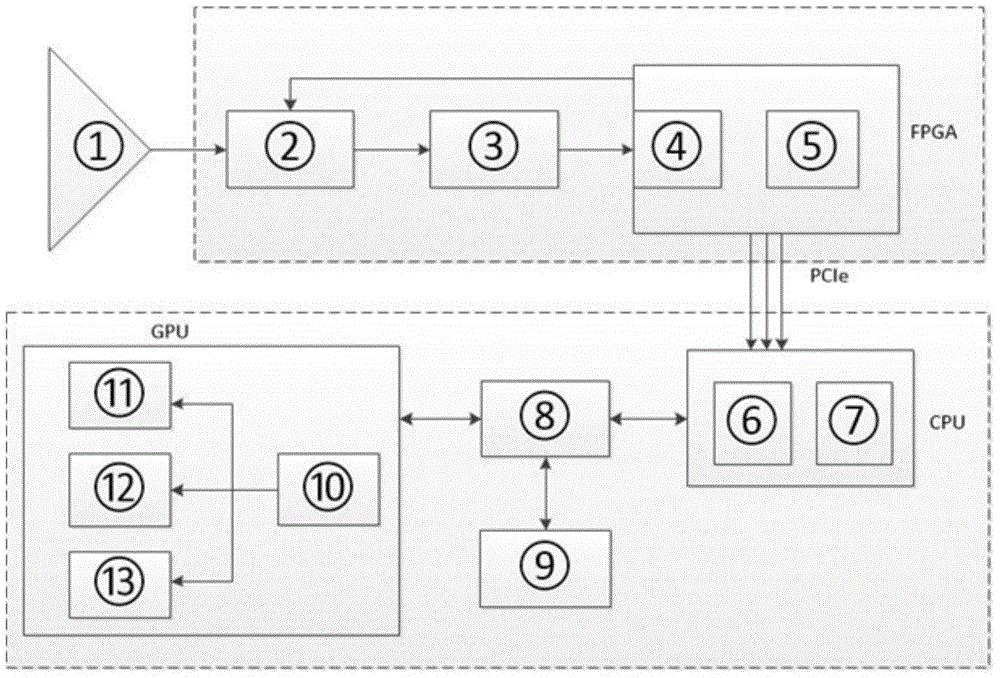

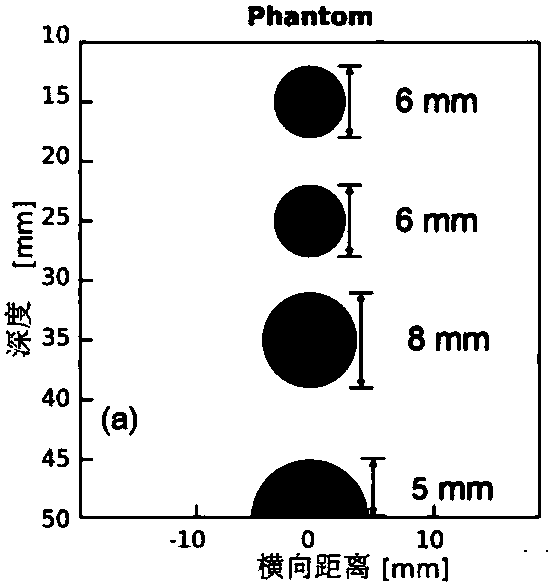

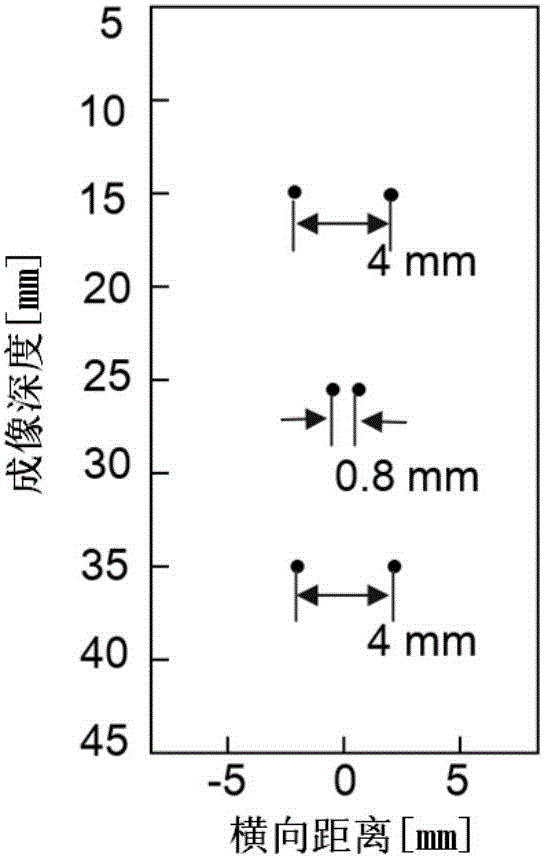

Ultra-high-speed ultrasonic imaging device and method based on a central processing unit (CPU) + graphics processing unit (GPU) heterogeneous architecture

Inactive | CN104688273A | Make full use of back-end processing capabilities | Improve performance | Blood flow measurement devices | Infrasonic diagnostics | Sonification | Data acquisition

The invention discloses an ultra-high-speed ultrasonic imaging device and method based on a CPU + GPU heterogeneous architecture. An FPGA controls the probe to transmit multi-angle plane-wave ultrasonic signals into the biological tissue to be examined, and performs analog-to-digital conversion and pre-amplification on the received data. The large volume of preprocessed data is transferred to the CPU through a high-speed PCIe channel. Beamforming is performed on the CPU, which also handles functions such as human-computer interaction. The GPU reads the data from CPU memory and uses its parallel computing capability together with a variable-frame-rate plane-wave compounding technique to combine and demodulate the signals, achieving ultra-high-speed imaging. The device and method adopt a highly integrated transmit/receive scheme and a unified data acquisition framework to simplify the front end; moving the beamforming module to the back end simplifies the front end further, makes full use of the back-end processing capability, and improves system performance.

Owner:HARBIN INST OF TECH

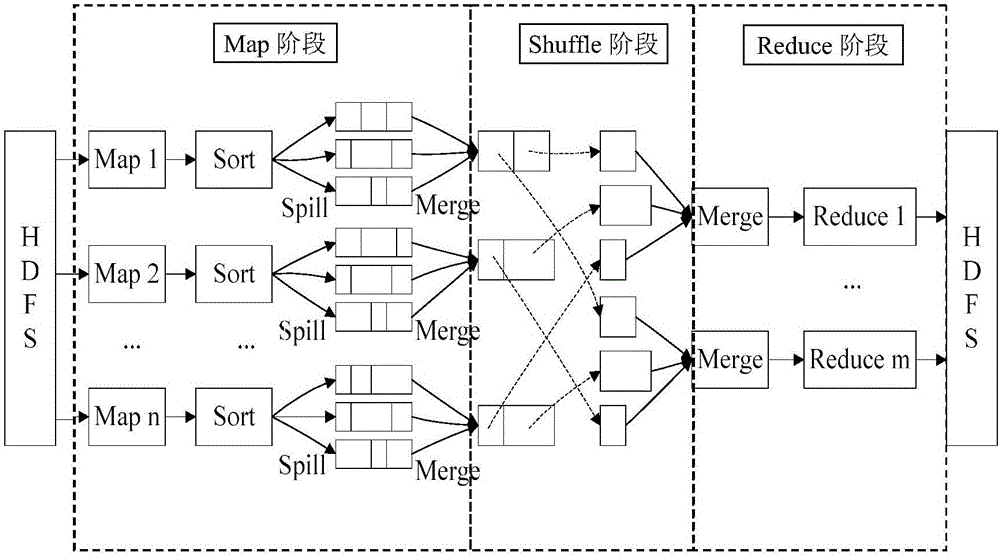

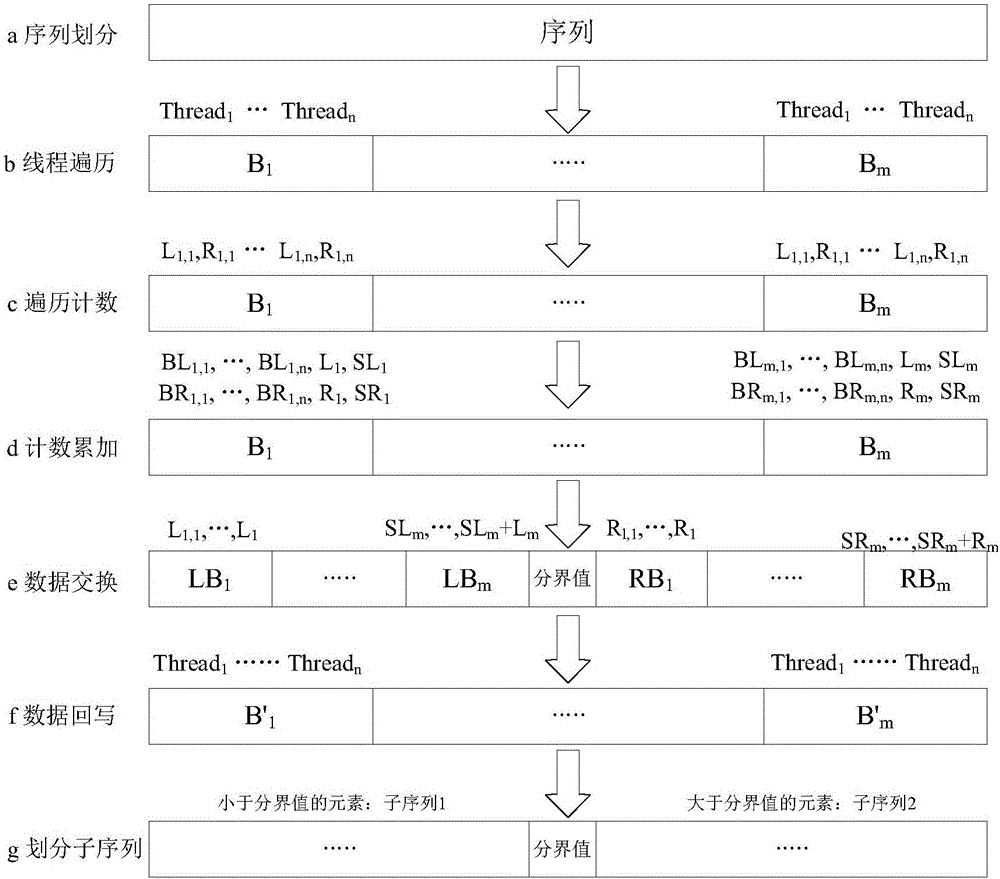

Data processing method based on hardware sorting MapReduce

Inactive | CN107102839A | Processing speed | Improve MapReduce performance | Digital data processing details | Special data processing applications | Heapsort | Merge sort

The invention discloses a data processing method based on hardware-sorting MapReduce. In this method, CPU-based quicksort is replaced by GPU-based quicksort, CPU-based merge sort is replaced by GPU-based merge sort, and CPU-based heapsort is also replaced by GPU-based merge sort. Replacing the CPU-based sorting algorithms with GPU-based ones makes full use of the GPU's computing power, speeds up intermediate data processing and improves MapReduce performance; the method is particularly suitable for big data applications.

Owner:Qingdao Lanyun Information Technology Co., Ltd. (青岛蓝云信息技术有限公司)

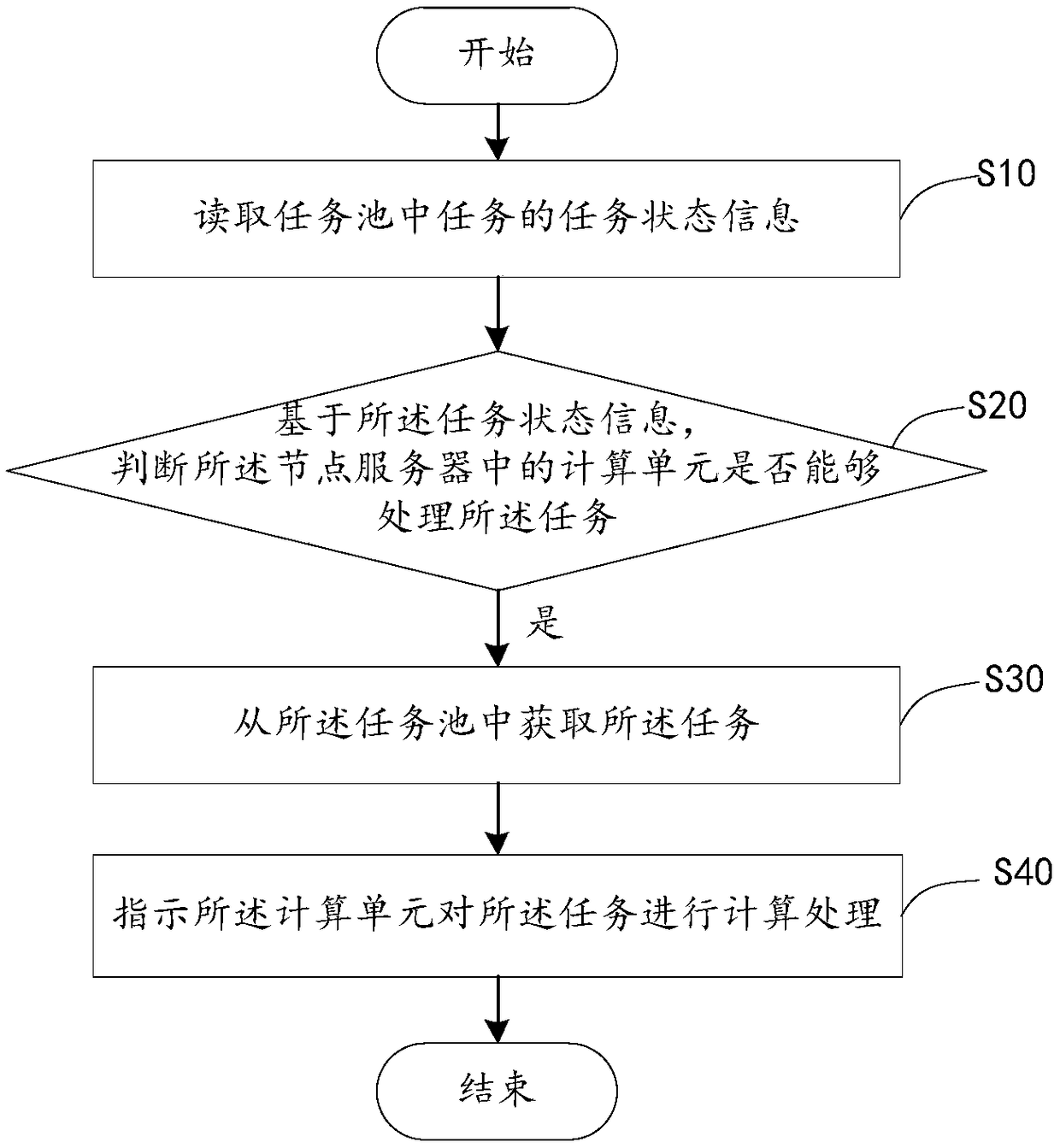

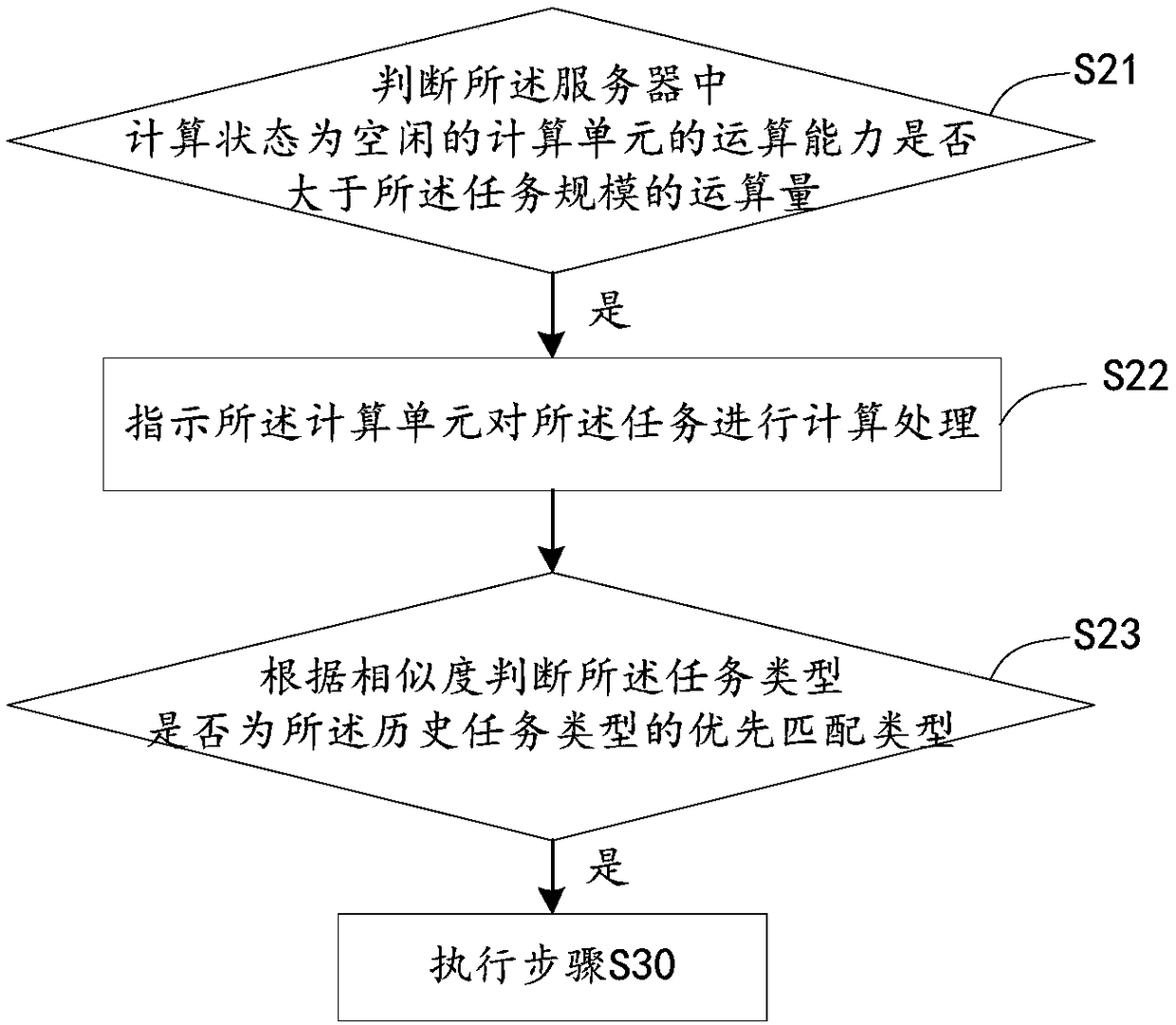

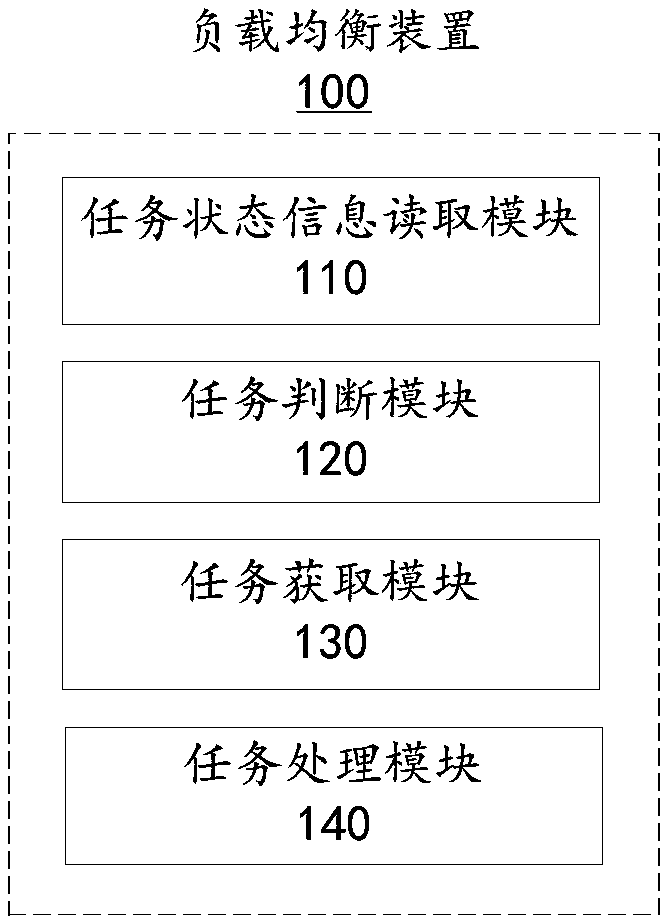

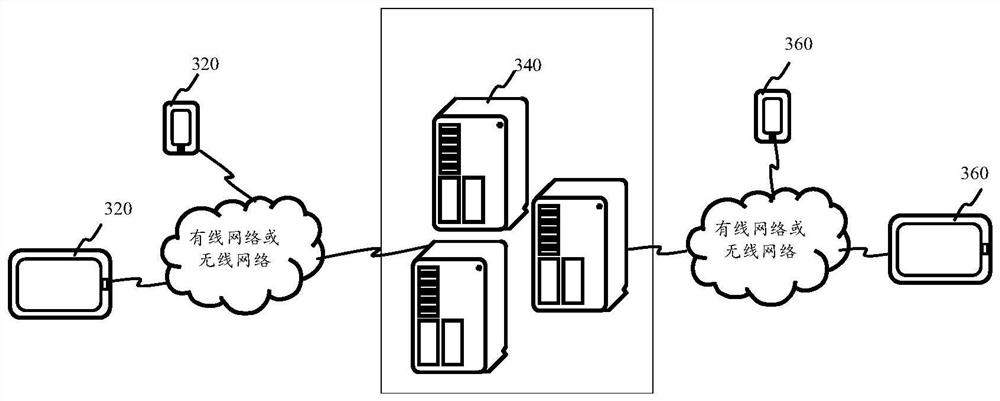

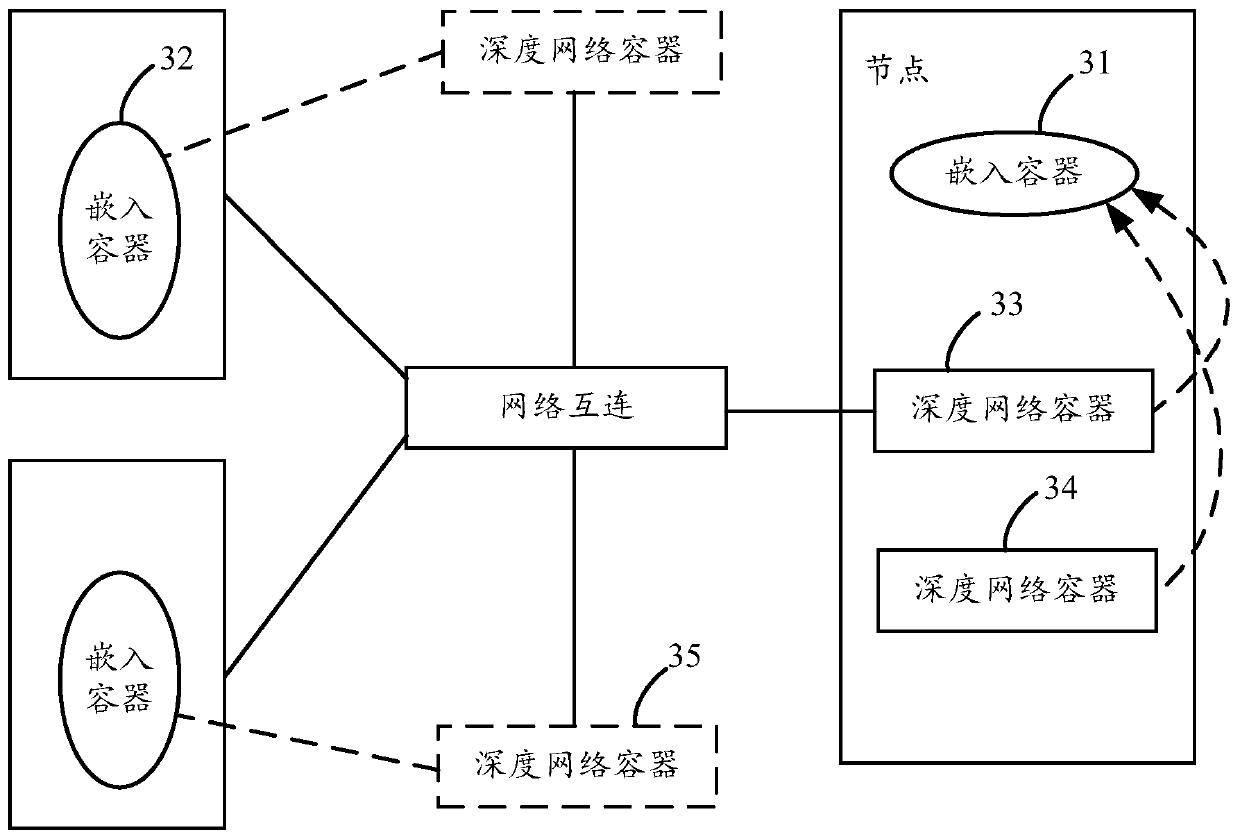

Load balancing method, device and system for computing cluster

Inactive | CN108924214A | Limit processing power | Full use of computing power | Transmission | Distributed computing | Information processing

The invention provides a load balancing method, device and system for a computing cluster, and relates to the technical field of computing cluster load balancing. The method comprises: first reading the task state information of a task in a task pool; based on the task state information, judging whether a computing unit in a node server can process the task; if so, obtaining the task from the task pool; and instructing the computing unit to process the task. Working together, the load balancing method, device and system allocate information processing requests automatically according to the real-time resource conditions of each server without introducing additional devices, thereby avoiding the waste of system computing resources caused by bottlenecks at key nodes, unreasonable task allocation and similar problems.

Owner:CHINA CONSTRUCTION BANK
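
The pull-based allocation in this abstract (read the task state, check whether a computing unit can handle it, then take it from the pool) can be sketched as below. This is a minimal illustration under stated assumptions: the only resource considered is a hypothetical `memory_needed` figure, units take turns pulling one task at a time, and "instructing the computing unit" is reduced to reserving its resources.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Task:
    task_id: str
    memory_needed: int                 # hypothetical field of the task state information
    status: str = "pending"
    owner: Optional[str] = None


@dataclass
class ComputeUnit:
    name: str
    free_memory: int                   # real-time resource condition of the node server

    def can_process(self, task: Task) -> bool:
        return self.free_memory >= task.memory_needed


def pull_schedule(units: List[ComputeUnit], pool: List[Task]) -> None:
    """Let units take turns pulling pending tasks they can handle until none can take more."""
    progress = True
    while progress:
        progress = False
        for unit in units:
            # Read task state information and check it against this unit's free resources.
            task = next((t for t in pool
                         if t.status == "pending" and unit.can_process(t)), None)
            if task is None:
                continue
            task.status, task.owner = "assigned", unit.name   # obtain the task from the pool
            unit.free_memory -= task.memory_needed            # reserve resources for computing
            progress = True
```

With units A (8 units free) and B (4 units free) and tasks needing 4, 4, 4 and 6, A ends up with the first and third tasks, B with the second, and the fourth stays pending until resources are released, so no unit is handed work it cannot run.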

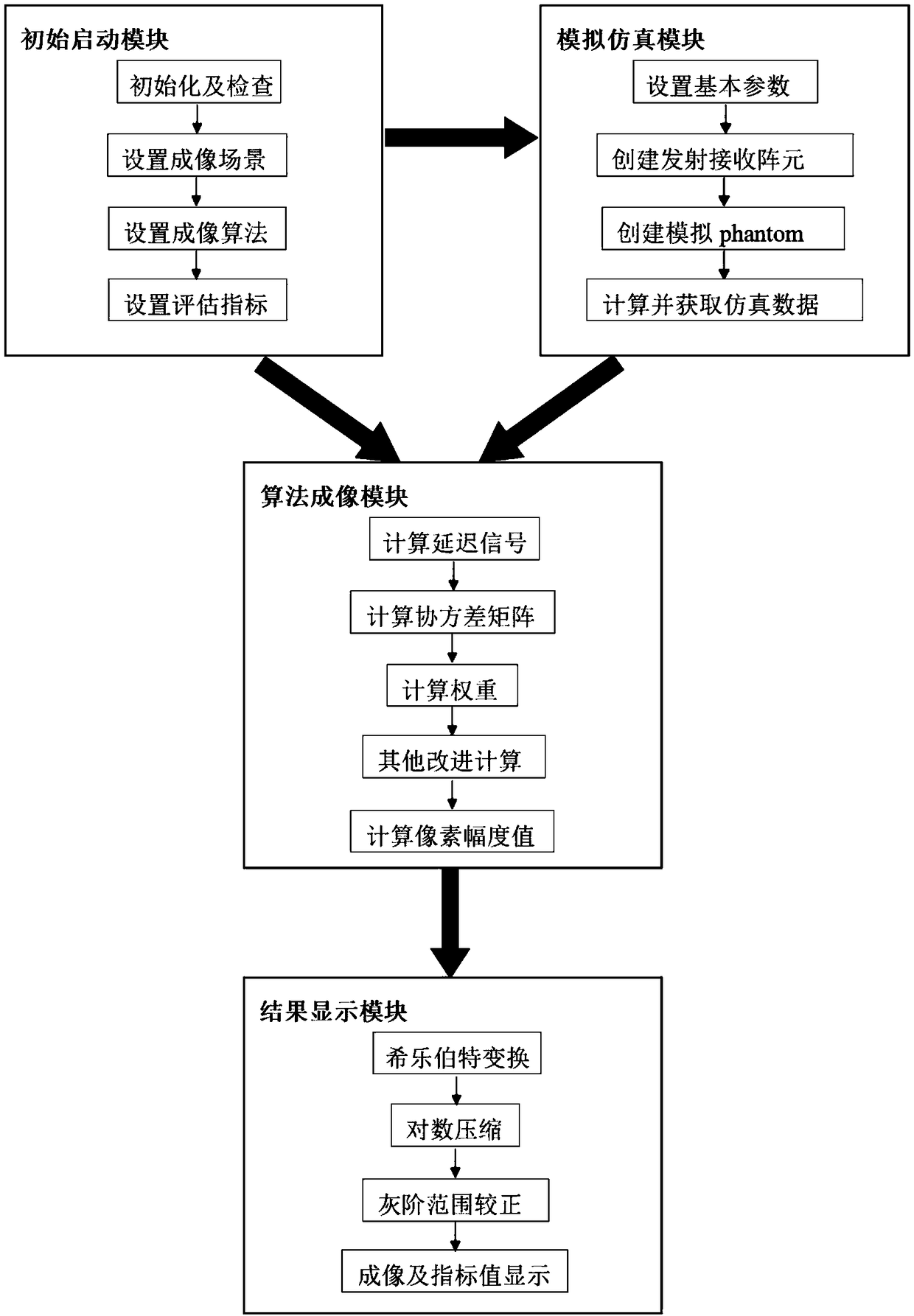

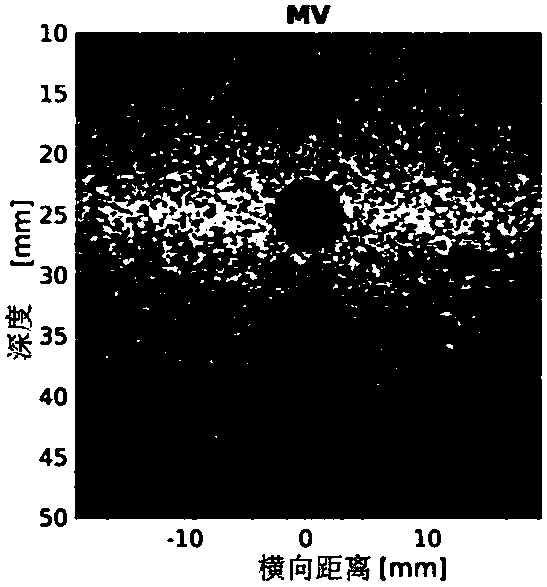

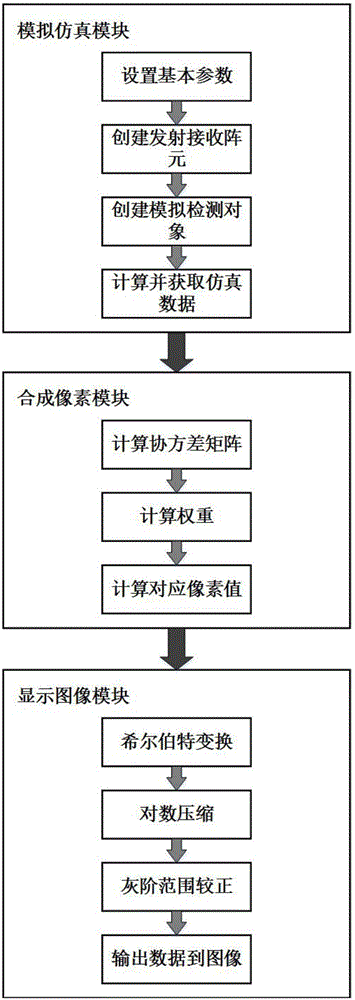

GPU-based fast medical ultrasound imaging system for multiple MV high-definition algorithms

Inactive | CN108354626A | Fast medical ultrasound imaging | Quality improvement | Ultrasonic/sonic/infrasonic diagnostics | Infrasonic diagnostics | Imaging algorithm | Data acquisition

The invention discloses a GPU-based fast medical ultrasound imaging system for multiple MV high-definition algorithms. The system comprises an initialization module, an analog simulation module, an algorithm imaging module and a result display module. The initialization module completes the system initialization settings and calls the algorithm imaging module for imaging; the analog simulation module is an auxiliary module that produces simulated data for use by the other modules; the algorithm imaging module is the core module of the whole system, receiving parameter settings from the initialization module and data from the analog simulation module or a data acquisition device for the imaging calculation; and the result display module performs the final processing and display of the other modules' results, finally achieving fast imaging with the multiple MV algorithms. The system can complete the calculation of high-definition medical ultrasound imaging algorithms within a very short time, and because the MV high-definition algorithms are implemented with GPU programming, they can be conveniently deployed on any computer with a GPU. The system effectively meets usage demands and is highly practical.

Owner:SOUTH CHINA UNIV OF TECH

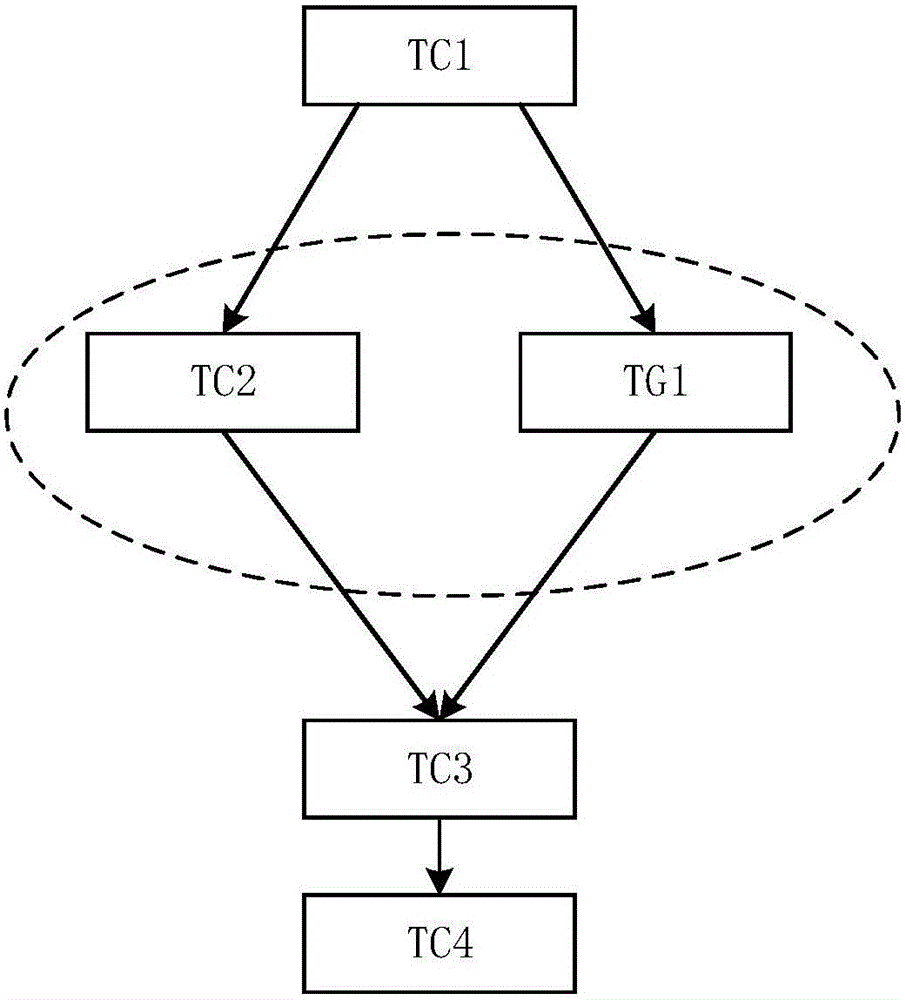

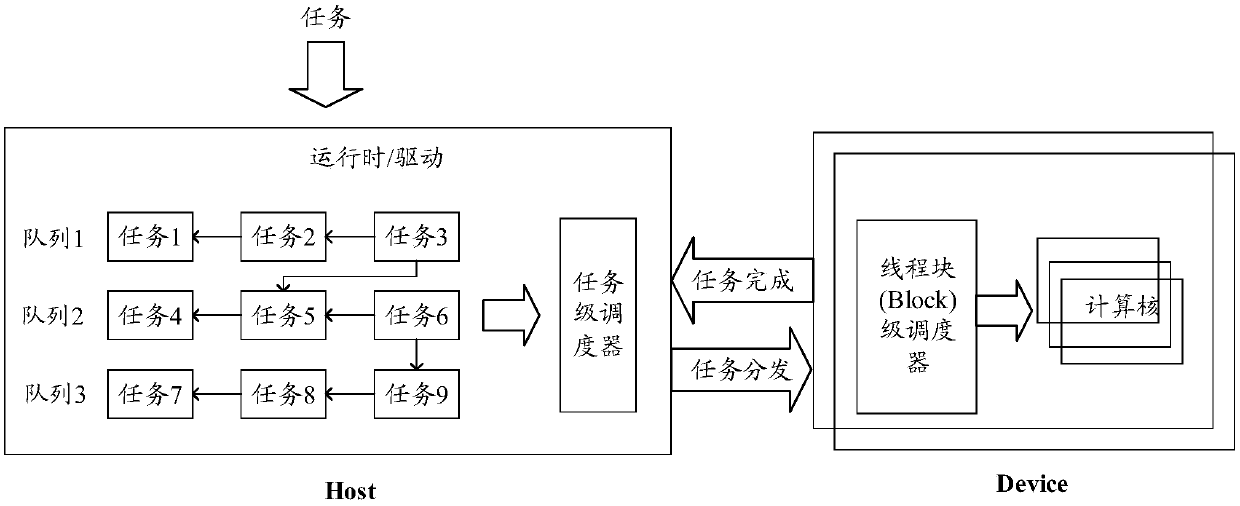

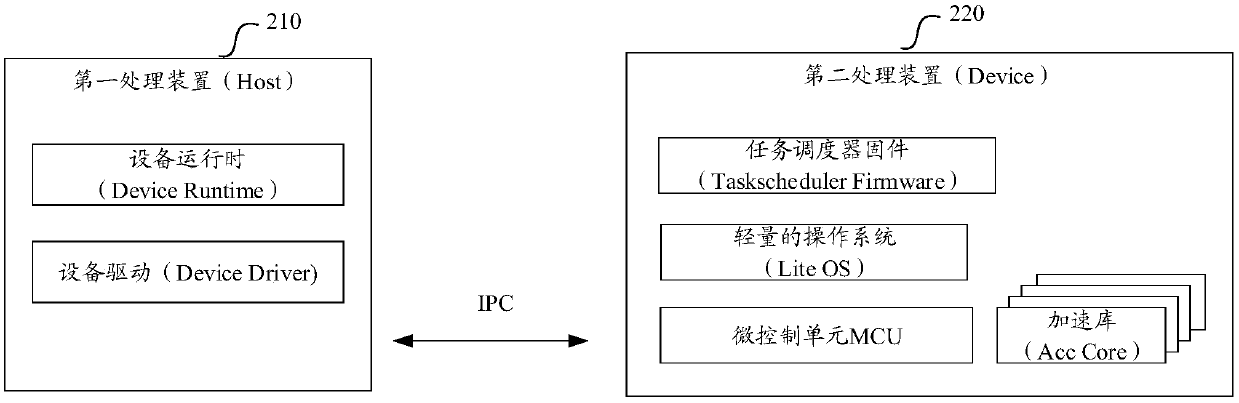

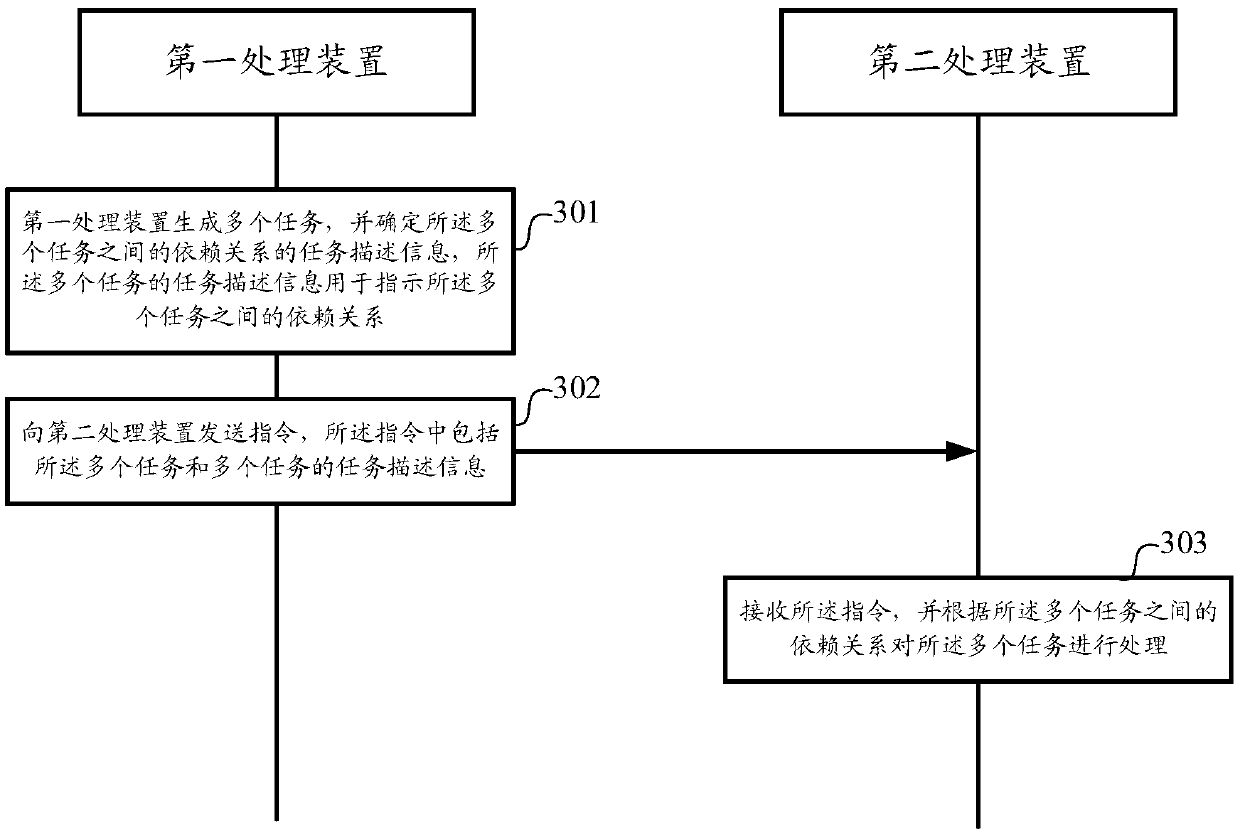

Task processing method and processing device and computer system

Active | CN110489213A | Reduce processing load | Full use of computing power | Program initiation/switching | Computerized system | Parallel computing

The invention relates to the field of computer technology and discloses a task processing method, a task processing device and a computer system. The task processing method comprises the following steps: a first processing device generates a plurality of tasks and determines task description information for them, the task description information indicating the dependency relationships among the tasks, where for any two tasks with a dependency relationship, processing of one task must wait for the processing result of the other; the first processing device sends an instruction containing the tasks and their task description information to a second processing device; and the second processing device receives the instruction and processes the tasks according to their dependency relationships. The scheme in the embodiments of the invention can thus effectively reduce waiting latency, give full play to the computing power of the acceleration chip, and improve task processing efficiency.

Owner:HUAWEI TECH CO LTD
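
Processing tasks "according to the dependency relationship" means running each task only after the tasks whose results it needs. A minimal sketch, assuming the task description information can be represented as a mapping from each task to its prerequisite tasks, uses Kahn's topological sort to derive a valid processing order; the task names are hypothetical, and a real acceleration chip would dispatch independent ready tasks in parallel rather than one by one.

```python
from collections import deque


def execution_order(tasks, depends_on):
    """Return an order in which every task runs after the tasks whose results it needs.

    tasks: list of task names
    depends_on: {task: [tasks it must wait for]} (stand-in for task description information)
    """
    indegree = {t: 0 for t in tasks}
    dependents = {t: [] for t in tasks}
    for task, prereqs in depends_on.items():
        for p in prereqs:
            indegree[task] += 1          # one more result this task must wait for
            dependents[p].append(task)
    ready = deque(t for t in tasks if indegree[t] == 0)
    order = []
    while ready:
        t = ready.popleft()
        order.append(t)
        for d in dependents[t]:          # t's result is now available to its dependents
            indegree[d] -= 1
            if indegree[d] == 0:
                ready.append(d)
    if len(order) != len(tasks):
        raise ValueError("cyclic dependency: no valid processing order exists")
    return order
```

For example, `execution_order(["load", "conv", "pool", "fc"], {"conv": ["load"], "pool": ["conv"], "fc": ["pool", "load"]})` returns `["load", "conv", "pool", "fc"]`.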

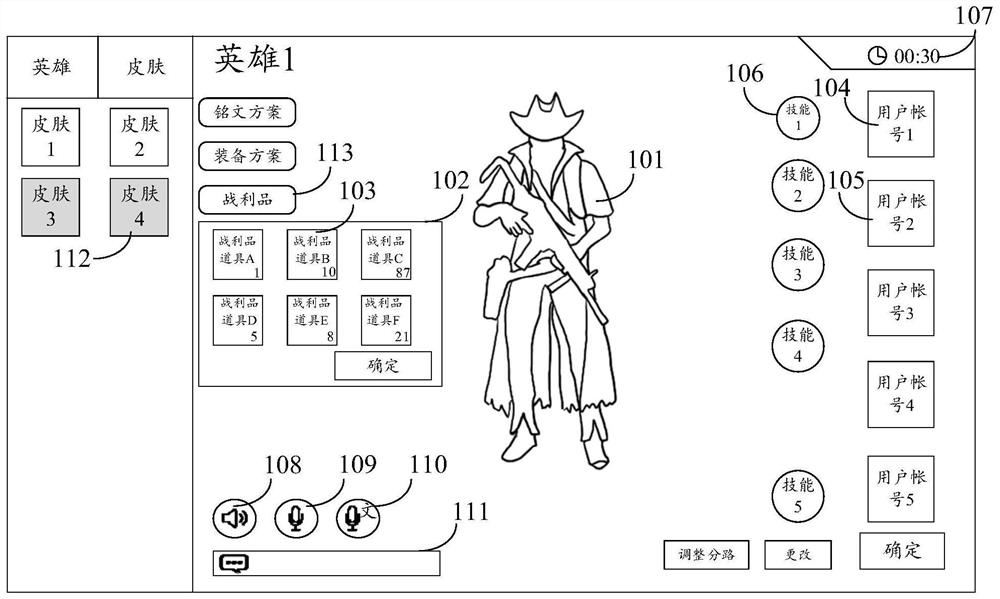

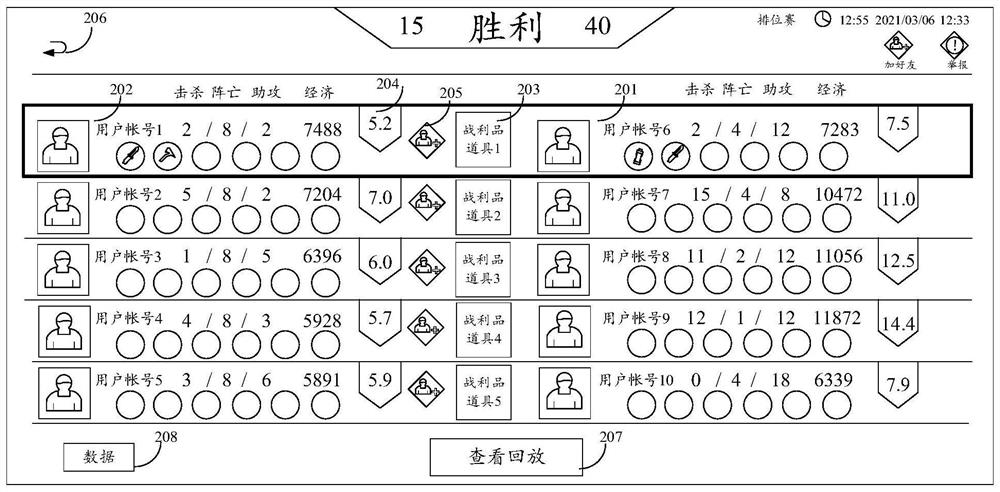

Method and device for acquiring virtual item, equipment and medium

Active | CN112891942A | Speed up circulation | Full use of computing power | Video games | Manufacturing computing systems | Engineering | Virtual world

The invention discloses a method, device, equipment and medium for acquiring a virtual item, applied in the field of computer virtual worlds. The method comprises: displaying a battle preparation interface containing candidate battle items, the interface being the user interface in which a first user account prepares before a battle starts, where the first user account belongs to a first camp in the battle; in response to a first selection operation on a first battle item among the candidate battle items, taking the first battle item as the item used in the battle; in response to a fight operation, executing the battle; and, in response to the winning condition of the battle being met, obtaining a second target battle item, which is provided by a second user account belonging to a second camp in the battle, the first and second camps being enemies. The method lets the user acquire virtual items and increases the channels through which virtual items can be acquired.

Owner:TENCENT TECH (SHENZHEN) CO LTD

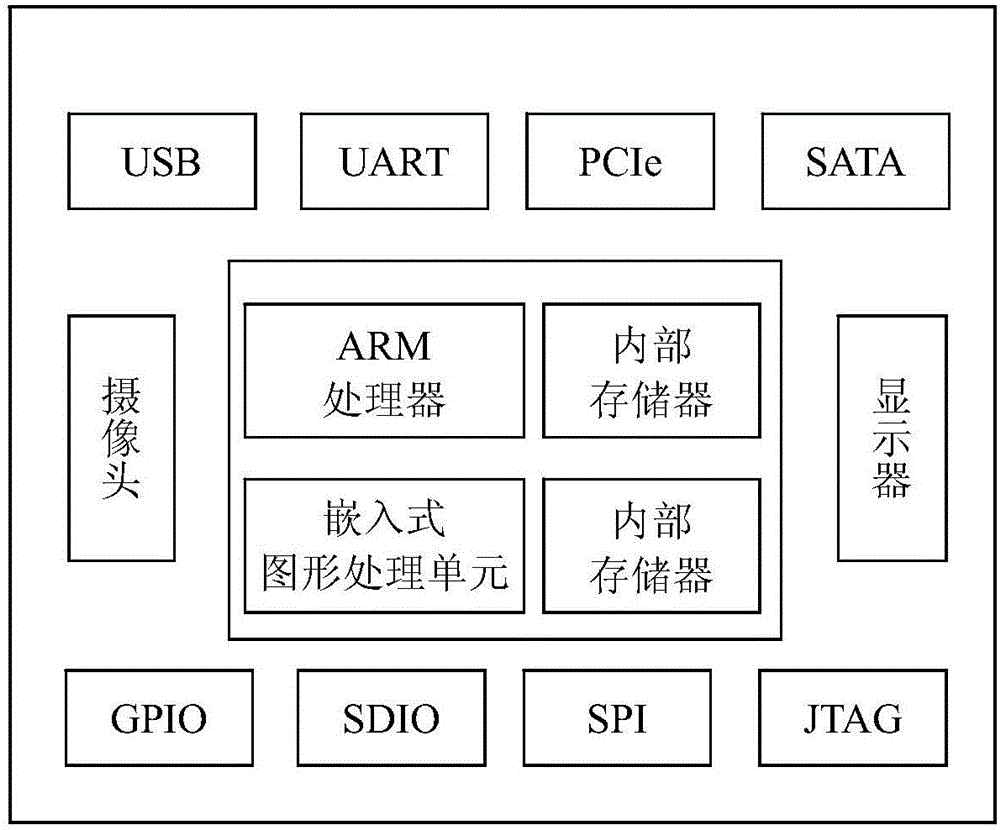

Embedded real-time high-definition medical ultrasound imaging system based on an integrated graphics processing unit

Inactive | CN106510756A | Full use of computing power | Quality improvement | Ultrasonic/sonic/infrasonic diagnostics | Infrasonic diagnostics | Ultrasonography | Imaging quality

The invention discloses an embedded real-time high-definition medical ultrasound imaging system based on an integrated graphics processing unit. The system uses embedded equipment with an integrated graphics processing unit to perform medical ultrasound imaging, and adopts an improved high-definition imaging algorithm whose calculation process suits the computing environment of the graphics processing unit, thereby improving the image quality and increasing the imaging frame rate of medical ultrasound imaging. Compared with traditional portable medical ultrasound imaging systems, the embedded system with an integrated graphics processing unit has high parallel computing capacity, so the complicated calculations of the high-definition medical ultrasound imaging algorithm can be completed within a short time and real-time high-definition medical ultrasound images can be presented. High-definition ultrasound imaging based on minimum variance beamforming is thereby achieved, and high-definition medical ultrasound images are output in real time on the embedded system. The embedded system with an integrated graphics processing unit is low in price, highly practical and cost-effective.

Owner:SOUTH CHINA UNIV OF TECH
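
For reference, the minimum variance (MV) beamformer named in this abstract and the previous one is usually written in the standard Capon form below. For each image point, $\mathbf{x}$ is the vector of delay-aligned channel samples, $\mathbf{R}$ their estimated spatial covariance matrix and $\mathbf{a}$ the steering vector, which is a vector of ones after delay compensation. The abstracts do not say how the patents' improved algorithms depart from this textbook form; practical ultrasound implementations typically estimate $\mathbf{R}$ with subarray averaging and diagonal loading, and the per-pixel covariance estimate and inversion are a large part of why the method benefits from GPU parallelism.

$$
\min_{\mathbf{w}}\ \mathbf{w}^{H}\mathbf{R}\,\mathbf{w}
\quad\text{s.t.}\quad \mathbf{w}^{H}\mathbf{a}=1
\;\;\Longrightarrow\;\;
\mathbf{w}_{\mathrm{MV}}=\frac{\mathbf{R}^{-1}\mathbf{a}}{\mathbf{a}^{H}\mathbf{R}^{-1}\mathbf{a}},
\qquad
y=\mathbf{w}_{\mathrm{MV}}^{H}\mathbf{x}
$$

Unlike delay-and-sum, which uses fixed weights, these weights adapt to the data at every pixel, which is what gives MV imaging its higher resolution.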

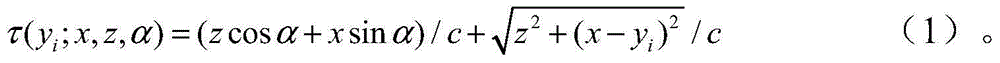

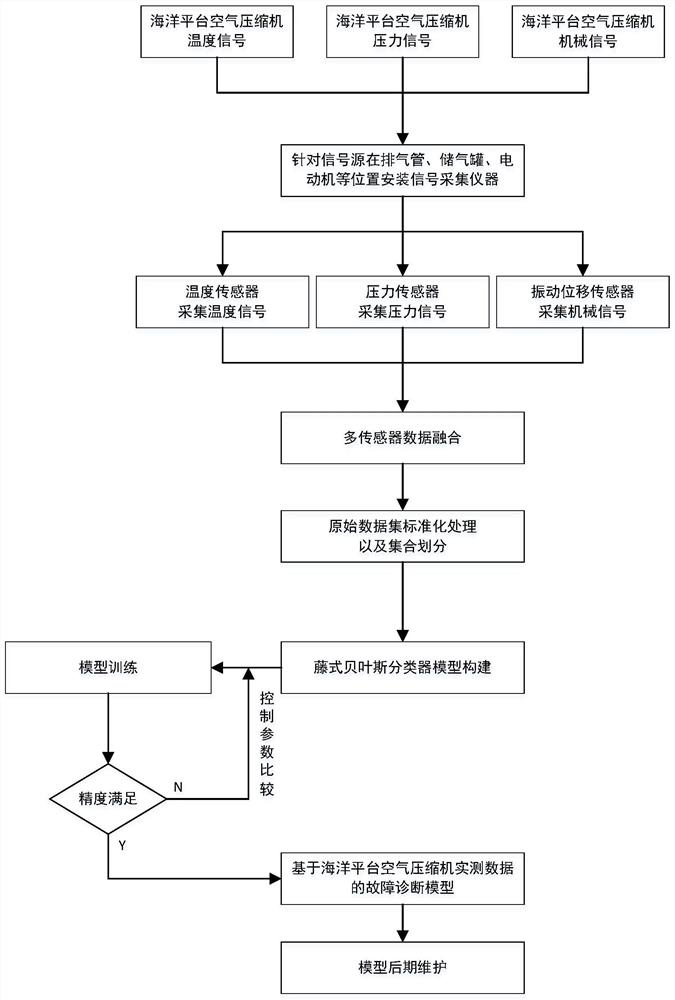

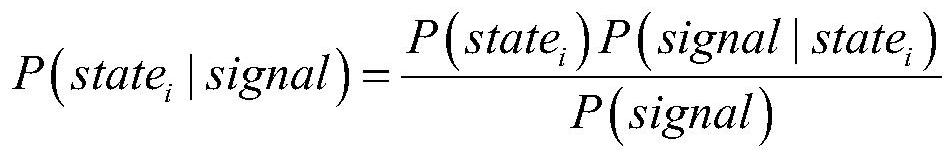

Marine platform air compressor fault diagnosis method

Active | CN111637045A | Improve denoising effect | Omit processing | Pump testing | Positive-displacement liquid engines | Control parameters | Kalman filter

The invention provides a fault diagnosis method for marine platform air compressors, belonging to the technical field of fault diagnosis for marine engineering equipment. In this method, marine platform air compressor monitoring signals are first divided into three types: temperature signals, pressure signals and mechanical signals, and corresponding data sensors are installed at the signal collection points of the air compressor to collect its working signals. In the data processing stage, Kalman filtering, a linear filtering method, is used to realize multi-sensor data fusion, and the fused data are divided into a training set, a validation set and a test set. After a Rattan Bayesian classifier is logically constructed, its likelihood probability control parameters are optimized with the training set data, yielding the complete marine platform air compressor fault diagnosis method; fault diagnosis can then be performed by importing actually measured data.

Owner:HARBIN ENG UNIV
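
The multi-sensor fusion step can be sketched with a scalar Kalman filter, assuming several sensors measure the same slowly varying quantity (for example one temperature) and that their noise variances are known. The random-walk process model and all parameter values are assumptions of this sketch, and the classifier stage is not modeled.

```python
def kalman_fuse(readings, noise_vars, process_var=1e-3, x0=0.0, p0=1.0):
    """Fuse several sensors that measure the same scalar quantity.

    readings: one list per time step, holding one reading per sensor
    noise_vars: measurement noise variance of each sensor (assumed known)
    Returns the fused estimate after each time step.
    """
    x, p = x0, p0
    fused = []
    for step in readings:
        p += process_var                    # predict: random-walk model of the true value
        for z, r in zip(step, noise_vars):  # update with each sensor reading in turn
            k = p / (p + r)                 # Kalman gain: how much to trust this reading
            x += k * (z - x)
            p *= 1 - k
        fused.append(x)
    return fused
```

With a very uncertain prior (`p0=1e6`), a single step with readings 10 and 20 from sensors of variance 1 and 9 gives about 11, the inverse-variance weighted mean, so the more reliable sensor dominates the fused value.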

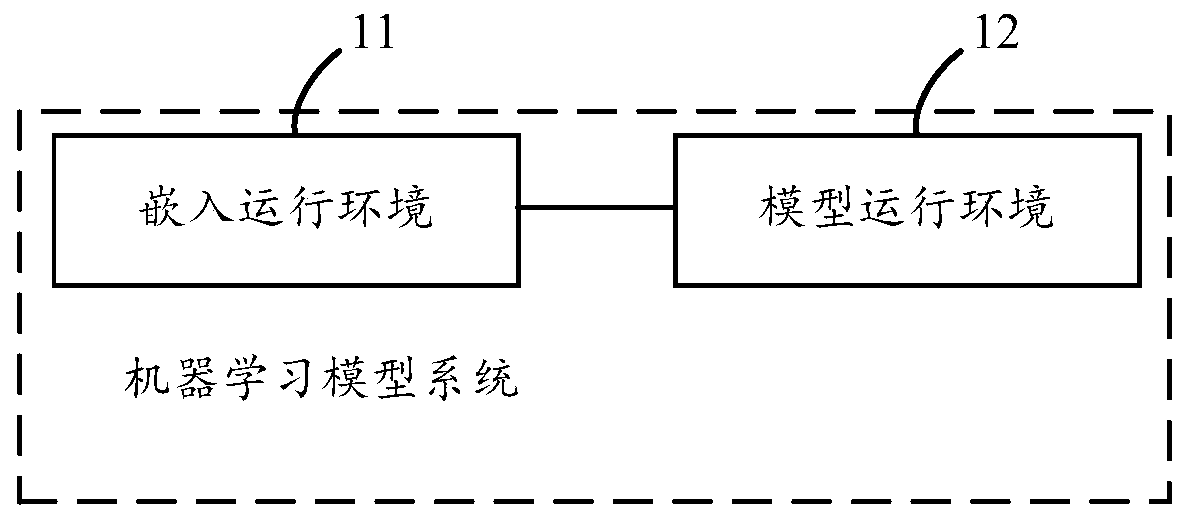

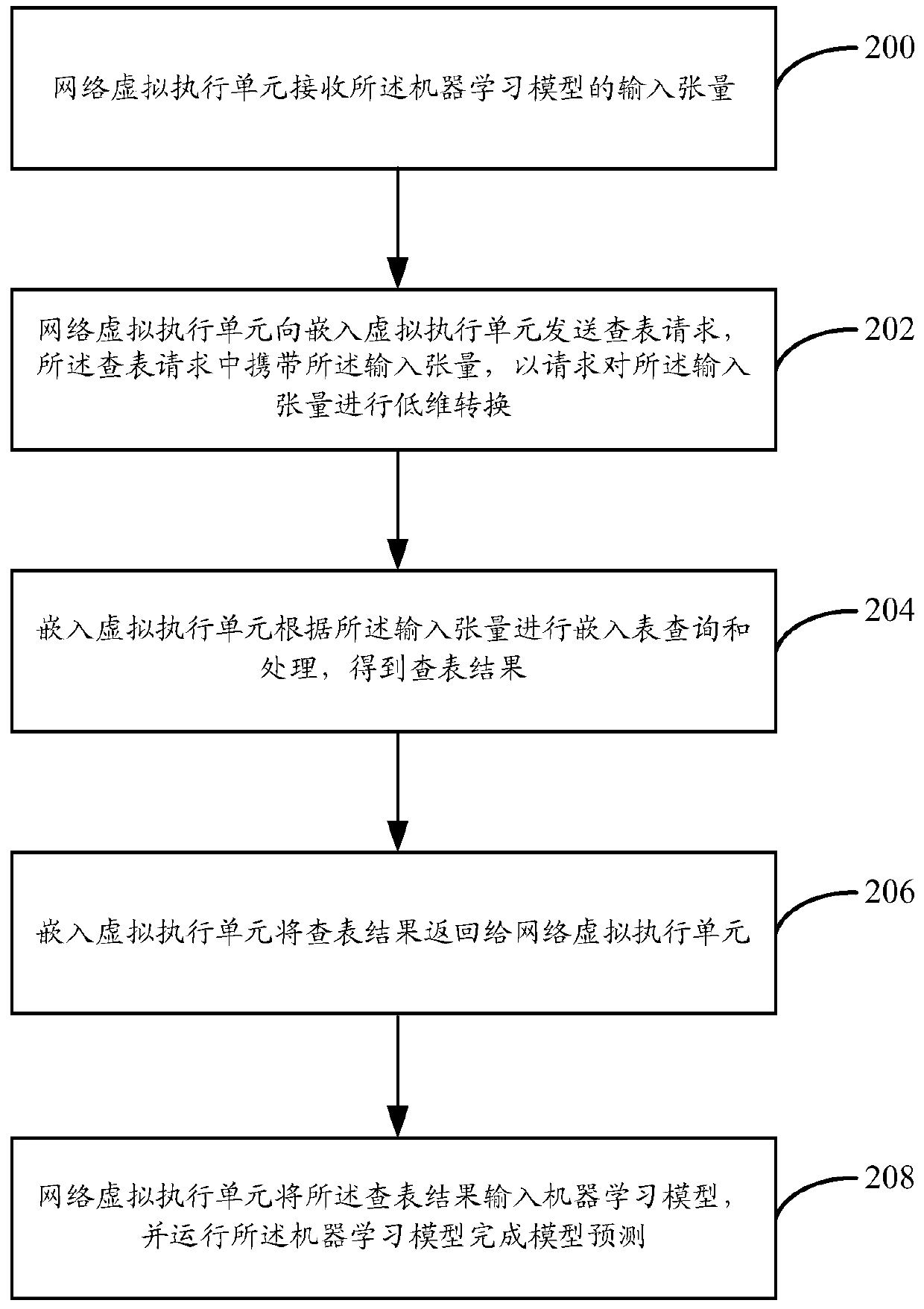

Model-based prediction method and device

Active | CN110033091A | Low running cost | Reduce maintenance costs | Machine learning | Neural architectures | Embedded system | Learning models

An embodiment of the invention provides a model-based prediction method and device. The method comprises the steps of: a model runtime environment receiving an input tensor of a machine learning model; the model runtime environment sending a table look-up request containing the input tensor to an embedded runtime environment, to request low-dimensional conversion of the input tensor; the model runtime environment receiving the table look-up result returned by the embedded runtime environment, the result being obtained by the embedded runtime environment performing an embedded query and processing according to the input tensor; and the model runtime environment inputting the table look-up result into the machine learning model and running the model to complete the model-based prediction.

Owner:ADVANCED NEW TECH CO LTD
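
The split between a model runtime and a table look-up service can be sketched as below, assuming the input tensor is a list of integer ids and that the "low-dimensional conversion" is an embedding-table look-up. Both environments are ordinary in-process objects here, and the mean pooling and linear model are hypothetical stand-ins for the real machine learning model.

```python
class EmbeddingEnvironment:
    """Holds the embedding table and answers table look-up requests."""

    def __init__(self, table):
        self.table = table                       # row i = low-dimensional vector for id i

    def lookup(self, input_ids):
        return [list(self.table[i]) for i in input_ids]


class ModelEnvironment:
    """Runs the model and delegates the low-dimensional conversion to the other side."""

    def __init__(self, embedding_env, weights, bias):
        self.embedding_env = embedding_env
        self.weights = weights
        self.bias = bias

    def predict(self, input_ids):
        vectors = self.embedding_env.lookup(input_ids)               # look-up request/result
        pooled = [sum(col) / len(vectors) for col in zip(*vectors)]  # mean-pool the vectors
        return sum(w * v for w, v in zip(self.weights, pooled)) + self.bias  # toy model
```

Keeping the large table behind a narrow `lookup` interface is what lets the two sides be deployed and maintained separately; the model side only ever sees small dense vectors.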

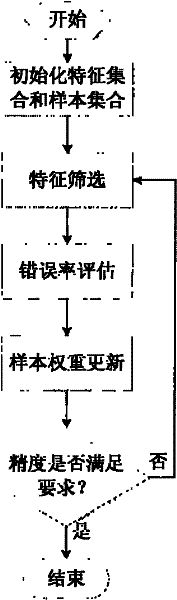

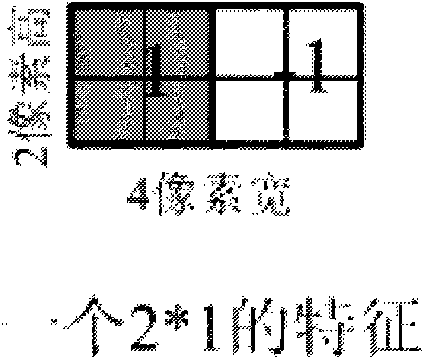

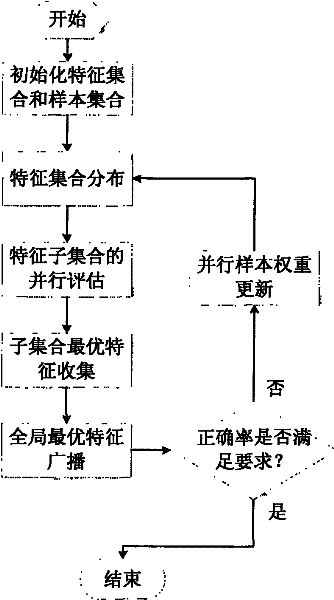

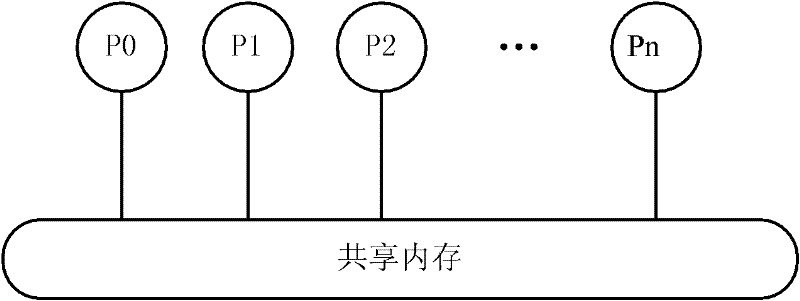

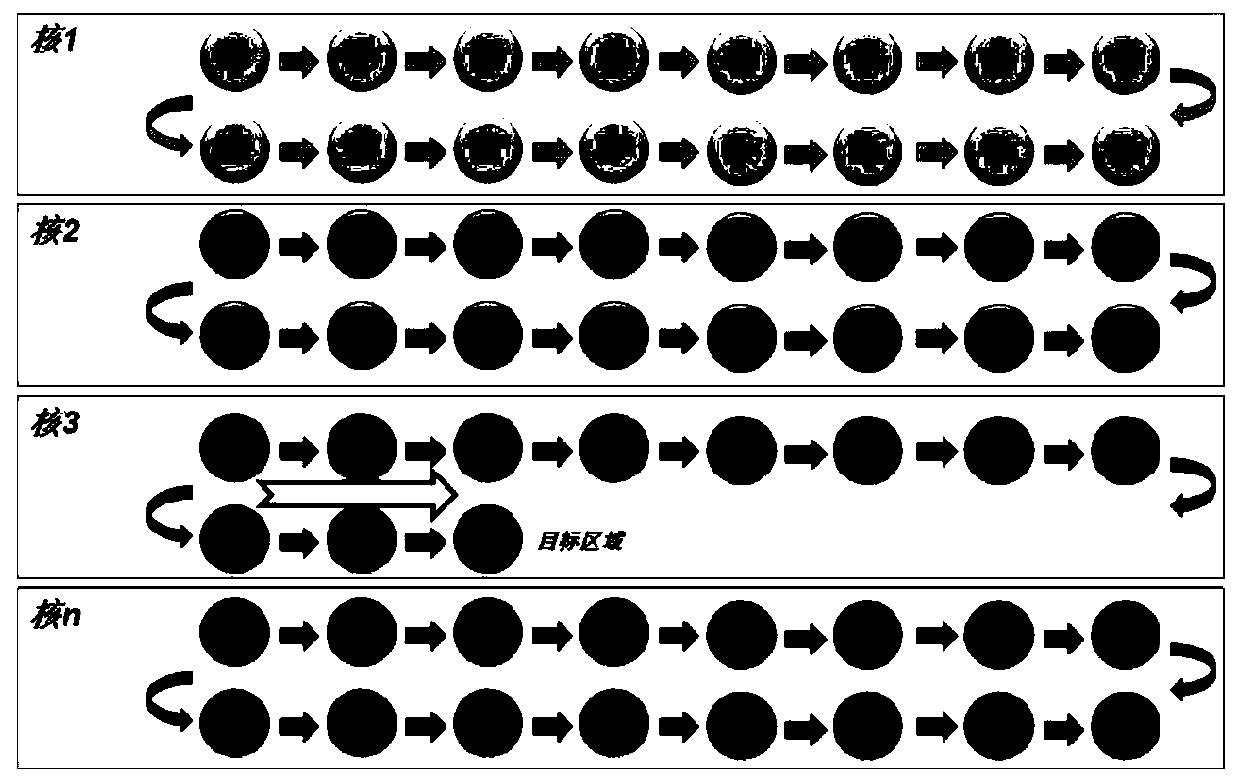

Parallel AdaBoost feature extraction method for a multi-core cluster system

Inactive | CN102226909A | Full use of computing power | Speed up AdaBoost feature extraction process | Multiprogramming arrangements | Character and pattern recognition | Pattern recognition | Feature set

The invention discloses a parallel AdaBoost feature extraction method for a multi-core cluster system. Each AdaBoost iteration is restructured as follows: first, the feature set that must be scanned during AdaBoost training is divided and distributed evenly across the nodes of the multi-core cluster system; then each computing node further divides its assigned feature subset, its computing cores each scan one part, and the node collects the results from its cores to obtain the optimal feature within its subset; finally, the cluster system compares the optimal features obtained by the nodes to determine the global optimal feature. The method makes full use of the parallel processing capability of the multi-core cluster system and thereby greatly accelerates AdaBoost feature extraction.

Owner:HUNAN CHUANGYUAN INTELLIGENT TECH
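
Within one AdaBoost round, the split, scan and reduce structure described above can be sketched as follows, assuming decision stumps as the weak learners and using a `ThreadPoolExecutor` as a stand-in for the cluster nodes and cores (a real deployment would use separate processes or machines). Each worker scans its share of the features and returns its local best stump; the final `min` is the step in which the cluster picks the global optimal feature.

```python
from concurrent.futures import ThreadPoolExecutor


def best_stump(X, y, w, features):
    """Scan a subset of features; return (weighted error, feature, threshold, polarity)."""
    best = (float("inf"), None, None, None)
    for f in features:
        for thr in sorted({row[f] for row in X}):
            for polarity in (1, -1):
                # Stump predicts +polarity when the feature value is >= thr, else -polarity.
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (polarity if row[f] >= thr else -polarity) != yi)
                if err < best[0]:
                    best = (err, f, thr, polarity)
    return best


def parallel_best_stump(X, y, w, n_workers=4):
    n_features = len(X[0])
    # Distribute the feature set evenly (round-robin) across workers.
    parts = [list(range(k, n_features, n_workers)) for k in range(n_workers)]
    parts = [p for p in parts if p]
    with ThreadPoolExecutor(max_workers=len(parts)) as pool:
        local_optima = list(pool.map(lambda p: best_stump(X, y, w, p), parts))
    return min(local_optima, key=lambda r: r[0])   # reduce local optima to the global one
```

Only the small `(error, feature, threshold, polarity)` tuples travel back to the coordinator, which is why this decomposition scales well when the feature set is large.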

Power network planning construction method based on network reconstruction and optimized load-flow simulating calculation

Active | CN103199521B | Reduce maintenance | Easy access | Flexible AC transmission | AC network circuit arrangements | Electric power system | Power grid

Owner:STATE GRID TIANJIN ELECTRIC POWER +2

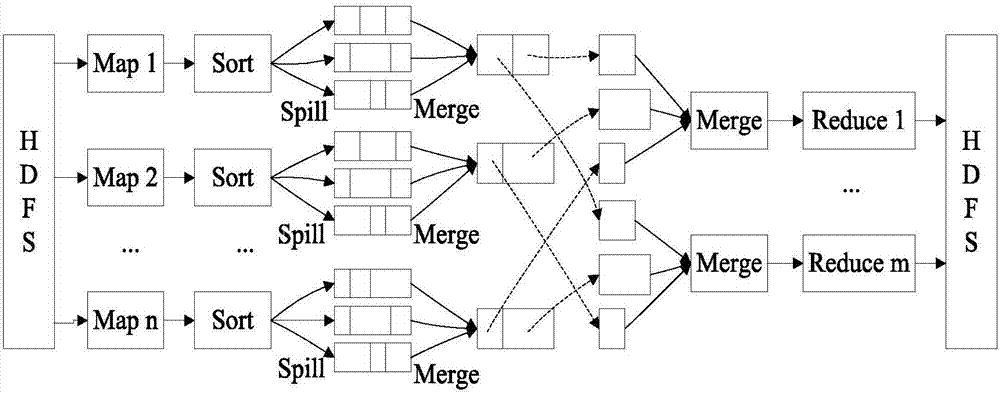

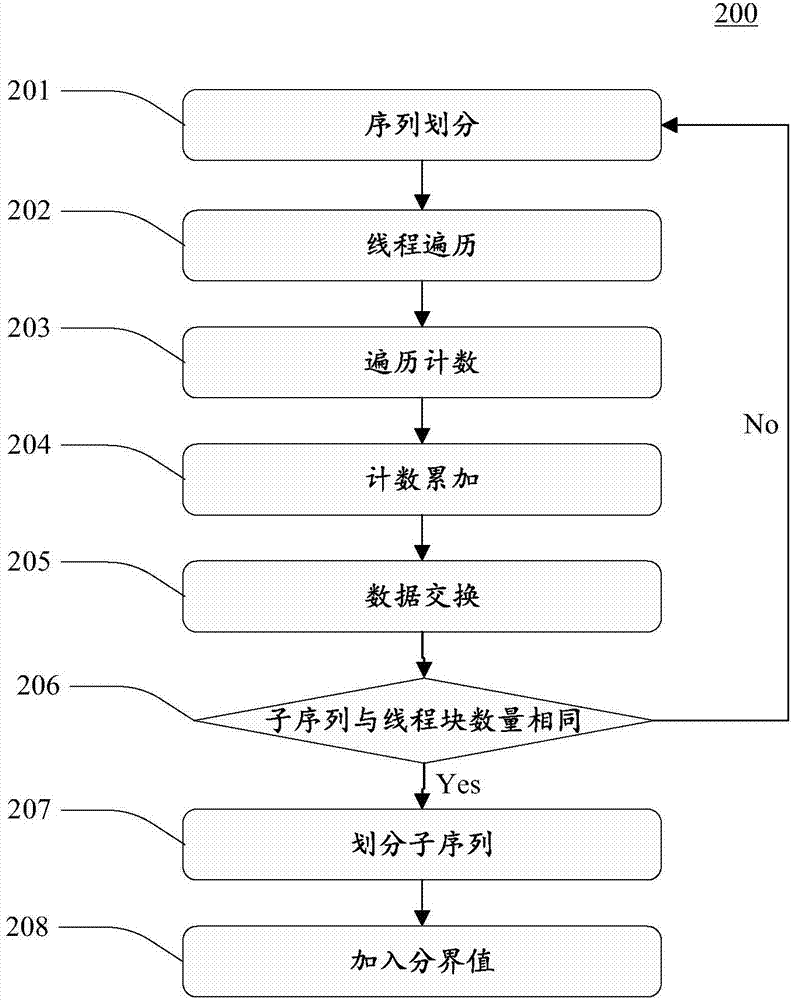

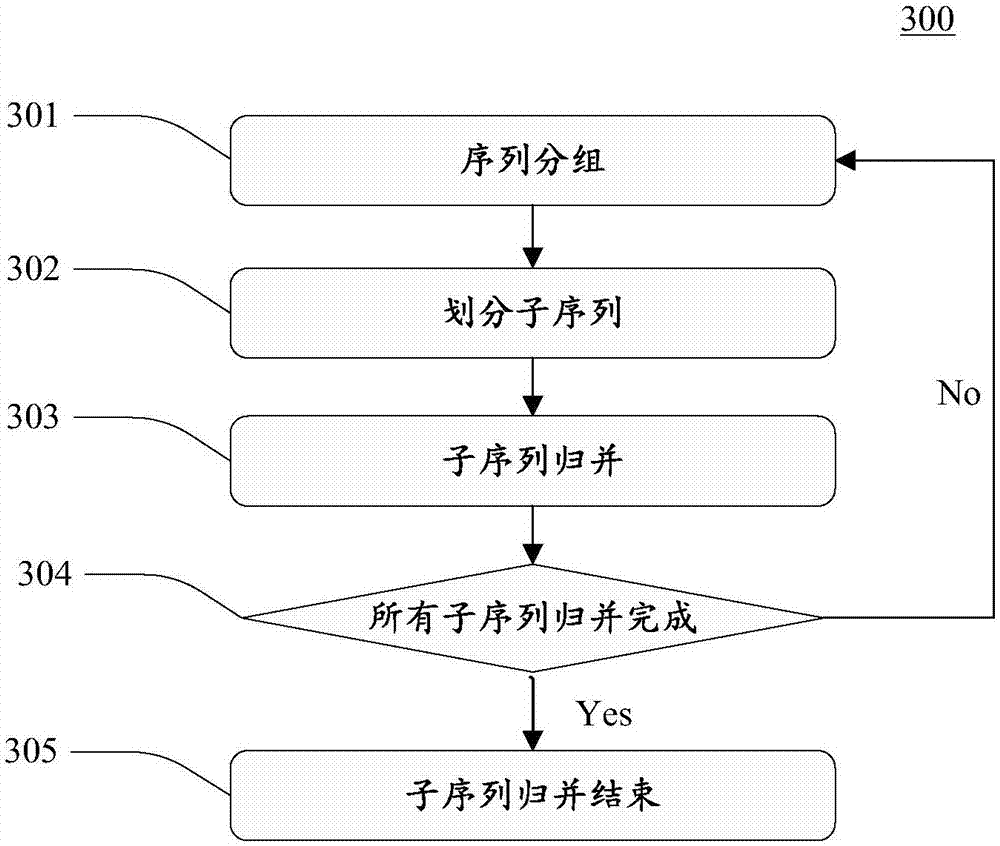

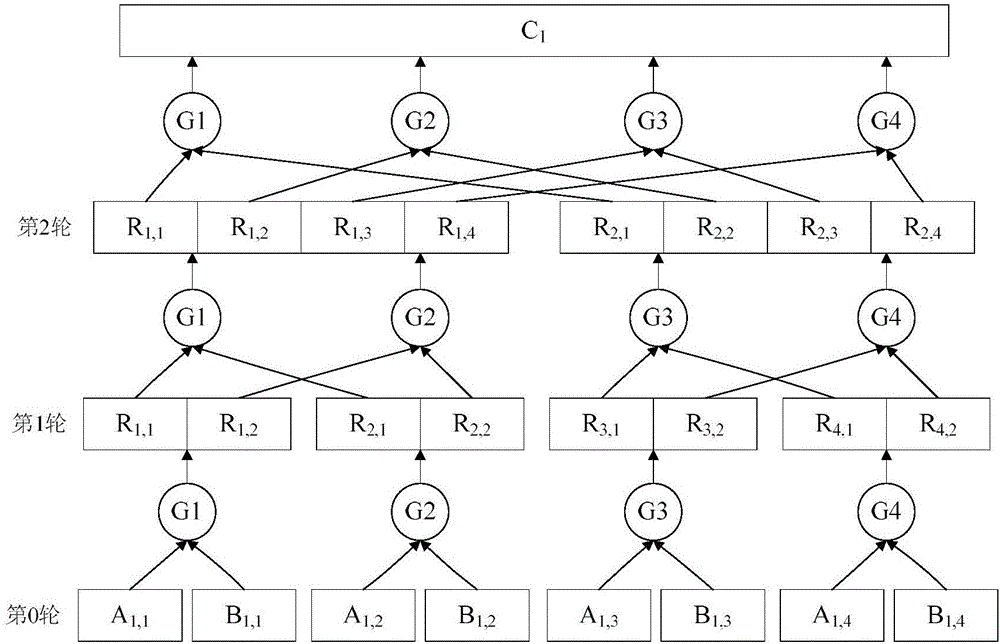

GPU (graphics processing unit) sorting-based MapReduce optimizing method

Inactive | CN106802787A | Reduce the difficulty of implementation | Reduce difficulty | Resource allocation | Concurrent instruction execution | Merge sort | Quicksort

The invention discloses a GPU sorting-based MapReduce optimization method. MapReduce consists of a Map stage, a Shuffle stage and a Reduce stage, where the Map stage comprises a Spill process and a Merge process and the Reduce stage comprises a Merge process. GPU-based quicksort is performed during the Spill process of the Map stage, and GPU-based merge sort is performed during the Merge processes of both the Map stage and the Reduce stage. By replacing the traditional CPU-based quicksort, merge sort and heapsort algorithms with GPU-based quicksort and merge sort, the method speeds up intermediate data processing and thus improves MapReduce performance.

Owner:TIANZE INFORMATION IND
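
Where the two sorts sit in the MapReduce data flow can be illustrated on the CPU as follows. This sketch uses Python's built-in `sorted` (Timsort) in place of the GPU quicksort in the Spill step and `heapq.merge` in place of the GPU merge sort in the Merge steps, so it shows the data flow rather than GPU execution; the word-count style records and the spill size are hypothetical.

```python
import heapq


def spill(buffer, spill_size):
    """Map-side Spill: cut the in-memory buffer into chunks and sort each chunk by key."""
    return [sorted(buffer[i:i + spill_size], key=lambda kv: kv[0])
            for i in range(0, len(buffer), spill_size)]


def merge_runs(runs):
    """Merge step: k-way merge of already-sorted runs into one sorted stream."""
    return list(heapq.merge(*runs, key=lambda kv: kv[0]))


def reduce_sorted(pairs):
    """Reduce: because equal keys are adjacent after merging, one pass sums them."""
    out = []
    for key, value in pairs:
        if out and out[-1][0] == key:
            out[-1] = (key, out[-1][1] + value)
        else:
            out.append((key, value))
    return out
```

Because every spilled run is already sorted, the Merge steps only compare the heads of the runs instead of re-sorting everything, which is why merge sort rather than a full sort is used there.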

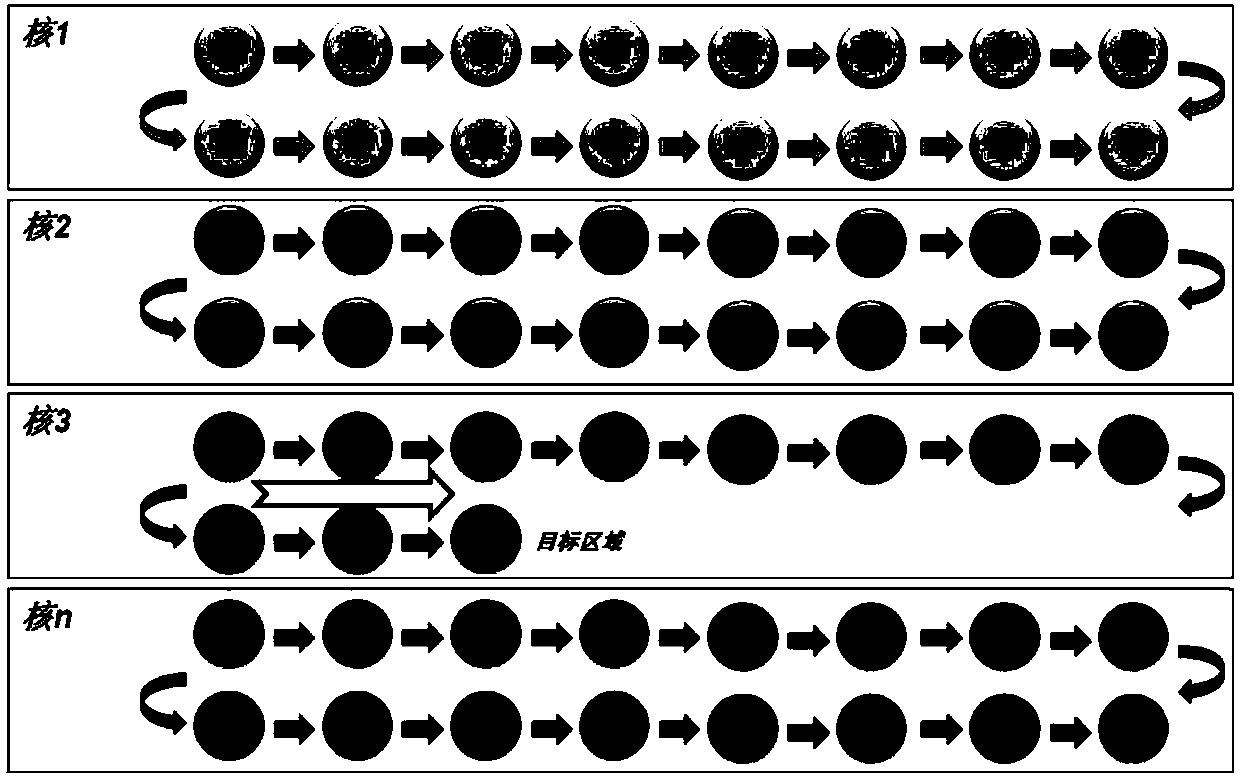

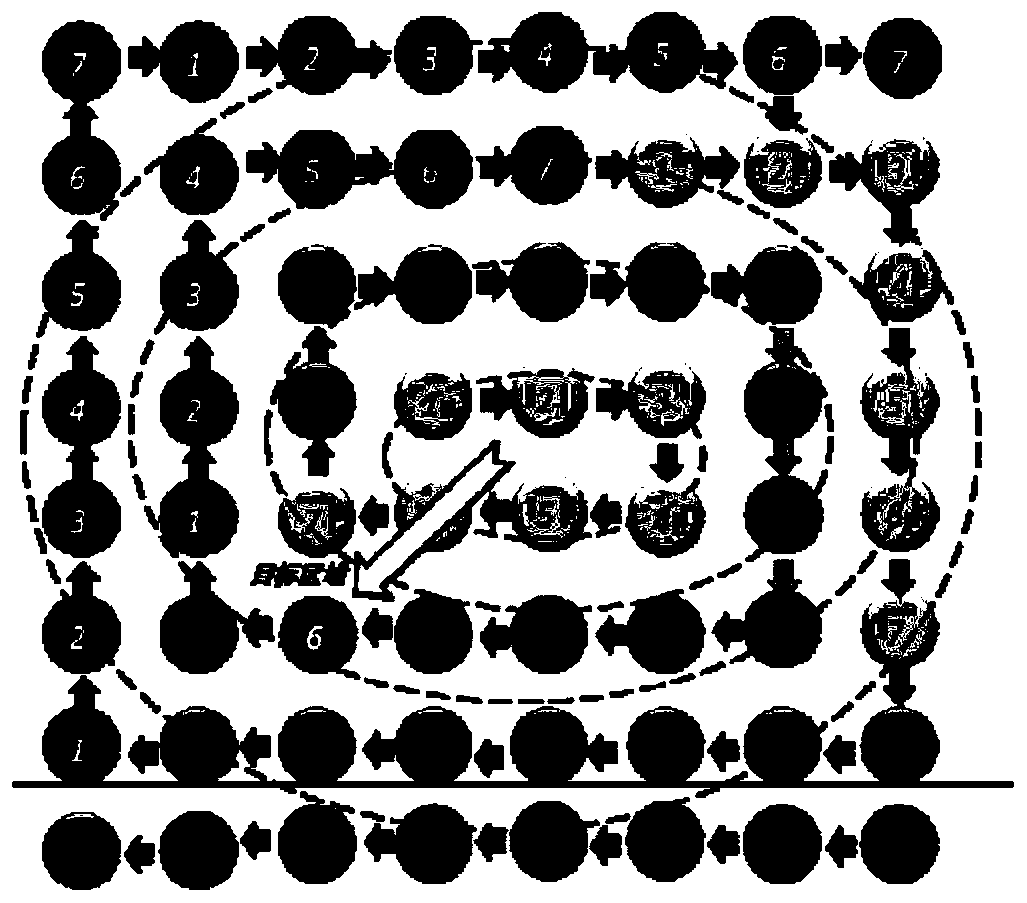

Multi-modal massive-data-flow scheduling method under multi-core DSP

Active | CN107608784A | Improve versatility | Improve portability | Resource allocation | Interprogram communication | Data stream | Parallel computing

The invention discloses a multi-modal massive-data-flow scheduling method for a multi-core DSP. The multi-core DSP includes a main control core and an acceleration core, and requests are passed between them through a request packet queue. Three data block selection methods, namely continuous selection, random selection and spiral selection, are determined on the basis of data dimensions and data priority order. Two multi-core data block allocation methods, namely cyclic scheduling and load-balancing scheduling, are determined according to load balancing. Data blocks that have been selected and then grouped by a data block grouping method according to the allocation granularity are loaded onto multiple computing cores for processing. The method adopts multi-level data block scheduling, satisfies the requirements of system load, data correlation, processing granularity, data dimension and ordering when scheduling data blocks, and has good generality and portability; it extends the modes and forms of data block scheduling across multiple levels and has a wider scope of application. The user only needs to configure the data block scheduling mode and the allocation granularity, and the system completes data scheduling automatically, which improves the efficiency of parallel development.

Owner:XIAN MICROELECTRONICS TECH INST
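
The grouping step and the two allocation policies named in this abstract can be sketched as follows, assuming each allocation unit has an estimated processing cost. The selection orders (continuous, random, spiral) and the request packet queue between the main control core and the acceleration core are not modeled, and the greedy least-loaded rule shown is one common way to implement load-balancing scheduling, not necessarily the patent's.

```python
import heapq


def group_blocks(blocks, granularity):
    """Group consecutive data blocks into allocation units of `granularity` blocks."""
    return [blocks[i:i + granularity] for i in range(0, len(blocks), granularity)]


def cyclic_schedule(units, n_cores):
    """Cyclic (round-robin) allocation: unit i goes to core i mod n_cores."""
    assignment = [[] for _ in range(n_cores)]
    for i, unit in enumerate(units):
        assignment[i % n_cores].append(unit)
    return assignment


def load_balanced_schedule(units, costs, n_cores):
    """Greedy load balancing: each unit goes to the core with the least accumulated cost."""
    heap = [(0, core) for core in range(n_cores)]   # (current load, core index)
    assignment = [[] for _ in range(n_cores)]
    for unit, cost in zip(units, costs):
        load, core = heapq.heappop(heap)              # least-loaded core so far
        assignment[core].append(unit)
        heapq.heappush(heap, (load + cost, core))
    return assignment
```

Cyclic scheduling needs no cost information and suits uniform blocks; when one block is much more expensive (costs 5, 1, 1, 1 on two cores), the load-balancing policy sends the three cheap blocks to the second core instead of alternating.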

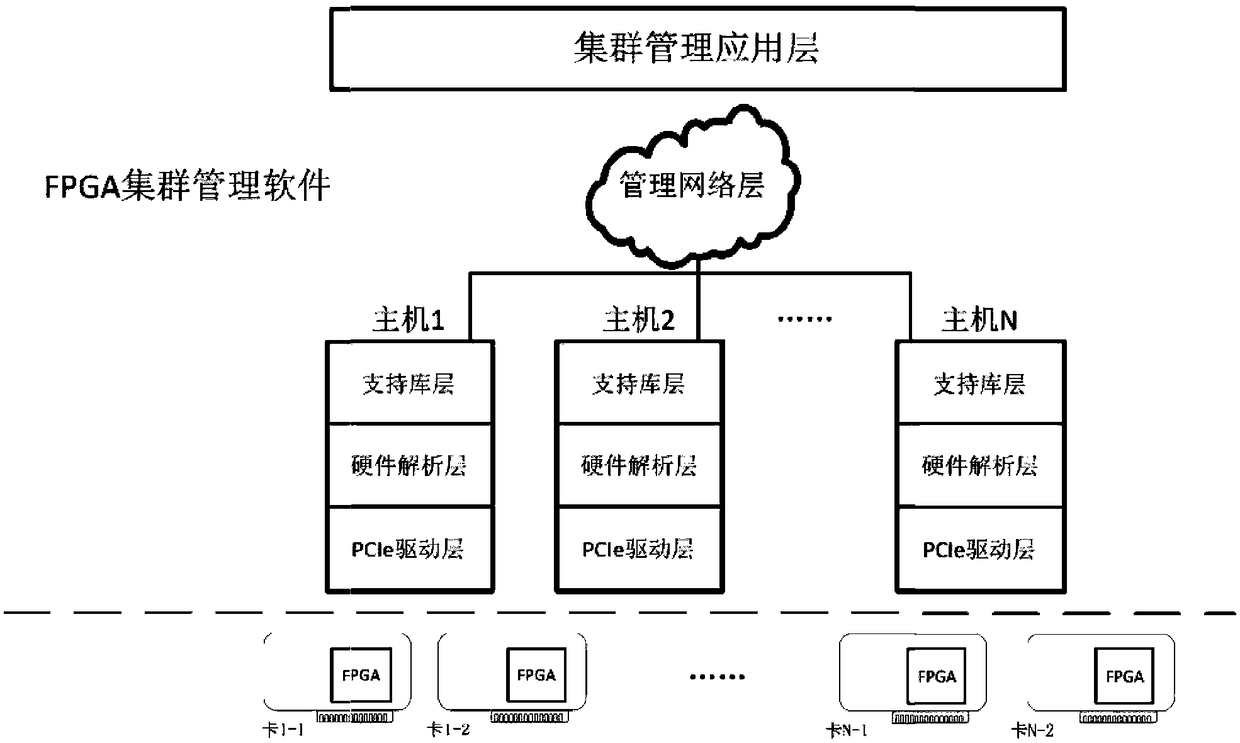

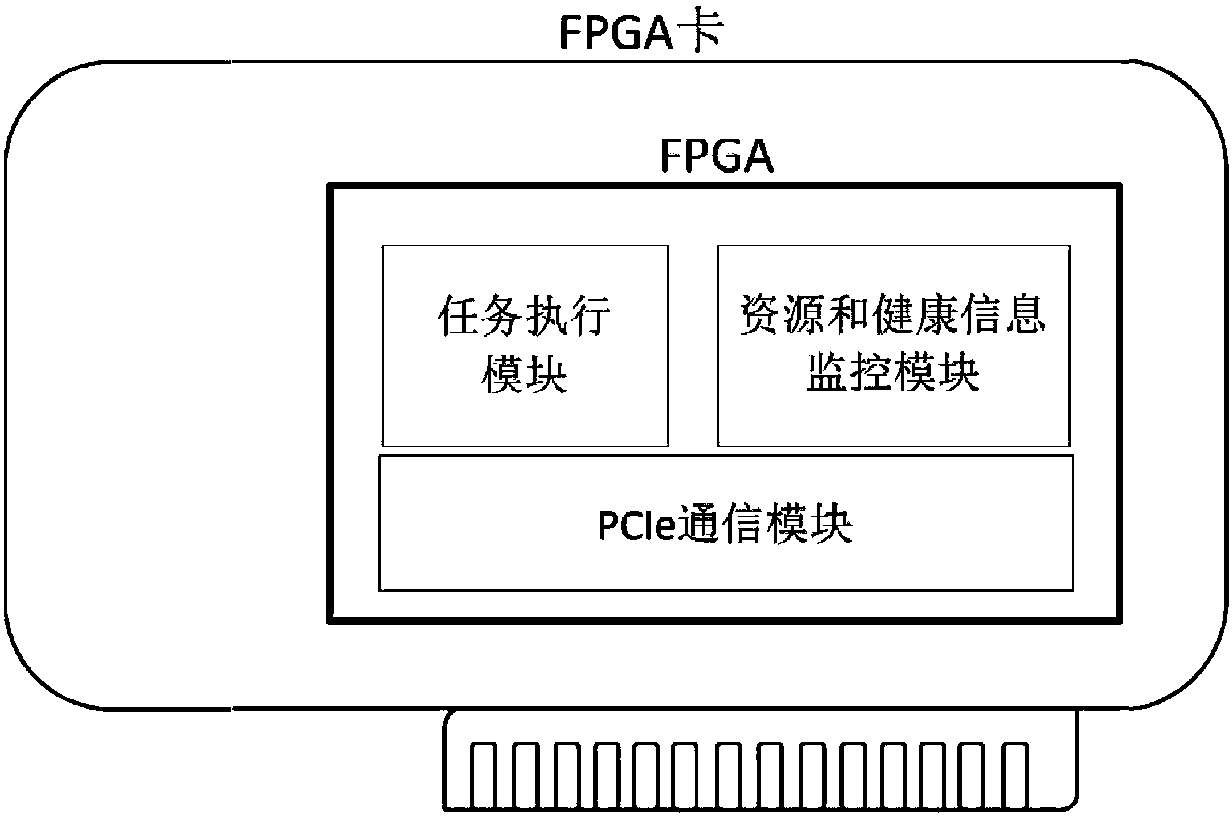

Management system of FPGA cluster and application thereof

Inactive | CN108228518A | Efficient management | Efficient scheduling | Resource allocation | Cluster systems | Cooperative work

The invention relates to a management system for an FPGA cluster and its application. Through cooperation between FPGA cluster management software and the FPGA cards, the management system achieves unified management of FPGA card functions and task allocation across the cards. This enables efficient management and scheduling of the FPGA cluster, improves the expansion capability of the FPGA cluster system, makes full use of the computing capability of the FPGA cards, and improves the flexibility and performance of the FPGA cluster system.

Owner:SHANDONG CHAOYUE DATA CONTROL ELECTRONICS CO LTD

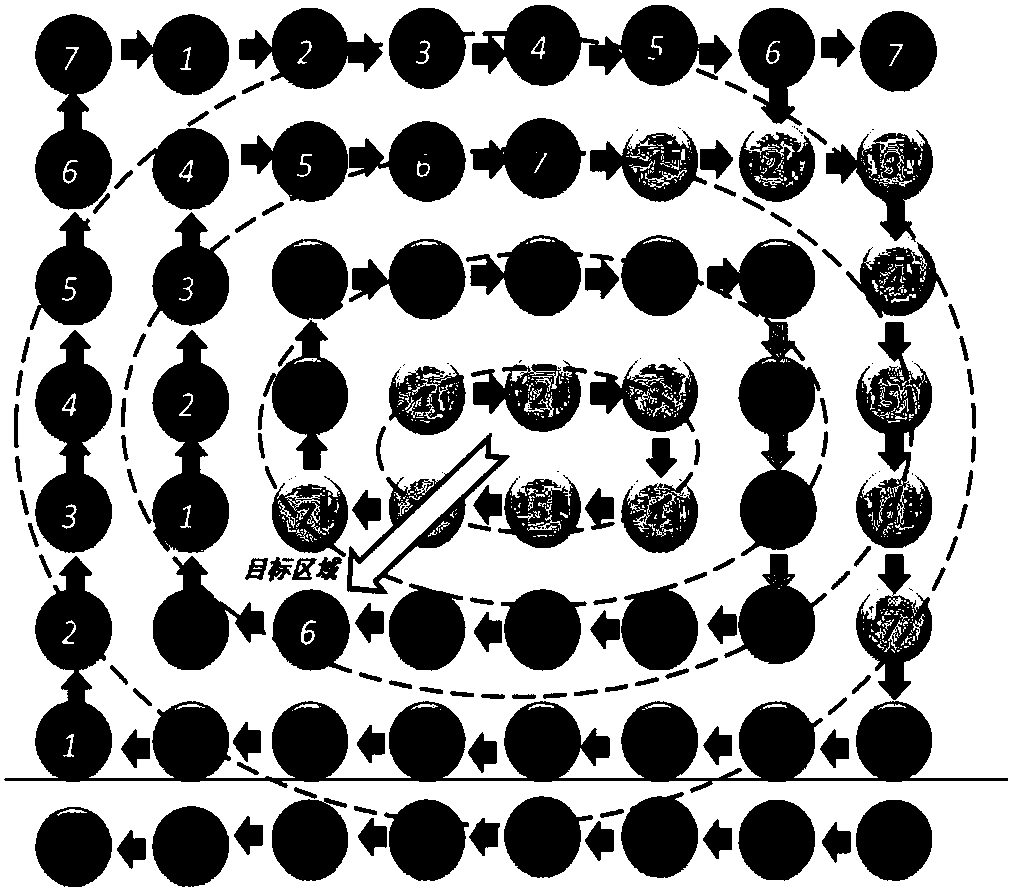

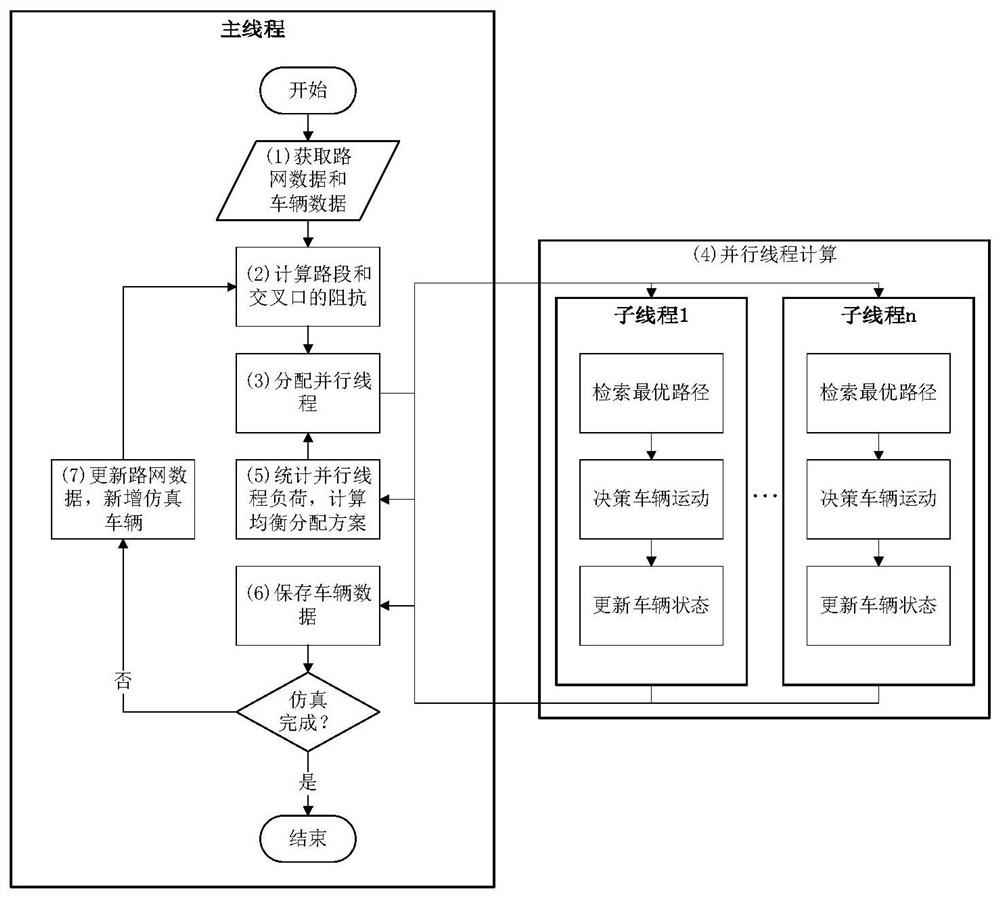

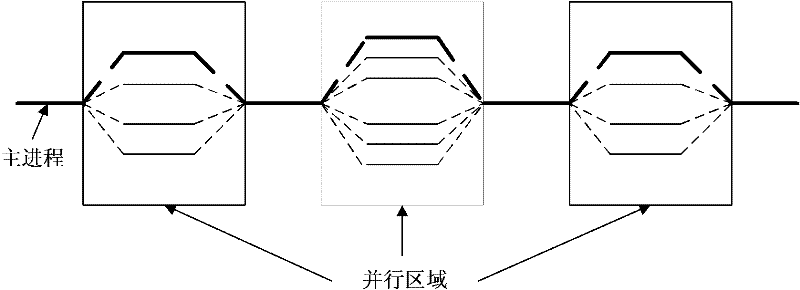

Multi-agent traffic simulation parallel computing method and device

Active | CN113515892A | Full use of computing power | Improve simulation efficiency | Detection of traffic movement | Forecasting | Concurrent computation | Vehicle behavior

The invention discloses a multi-agent traffic simulation parallel computing method that uses multi-threaded parallel computing to perform path optimization, state updating, and simulation data storage and display for agent vehicles. It calculates road impedance from real-time road conditions, computes and updates each vehicle's optimal path in combination with the vehicle's attributes, and adjusts the thread allocation according to the actual computation time so that the thread load is balanced. The invention further discloses a multi-agent traffic simulation parallel computing device that fully considers the individual differences between simulated vehicles and supports both ordinary simulated vehicles and intelligent vehicles capable of information exchange. Using multi-threaded parallel computing, it can fully exploit the CPU computing power of the equipment, improve simulation efficiency and realize dynamic path planning, so that the simulation results better match actual conditions. The method can be used to study vehicle behavior, predict traffic flow states and test management and control measures, helping to relieve traffic congestion, reduce traffic safety hazards and reduce resource waste.

Owner:SOUTHEAST UNIV
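
A sketch of the per-vehicle routing step, assuming the widely used BPR (Bureau of Public Roads) function as the road impedance model and Dijkstra's algorithm for the optimal path; the abstract names neither. A thread pool routes many vehicles concurrently to mirror the multi-thread structure, although in CPython the GIL limits the real speed-up of pure-Python code. The graph layout and parameters are hypothetical.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def bpr_time(free_flow_time, volume, capacity, alpha=0.15, beta=4):
    """Road impedance from the current flow (BPR function, an assumed model)."""
    return free_flow_time * (1 + alpha * (volume / capacity) ** beta)


def shortest_path(graph, source, target):
    """Dijkstra over impedances; graph[u] = [(v, free_flow_time, volume, capacity), ...]."""
    dist, prev = {source: 0.0}, {}
    heap, visited = [(0.0, source)], set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue
        visited.add(u)
        if u == target:
            break
        for v, t0, vol, cap in graph.get(u, []):
            nd = d + bpr_time(t0, vol, cap)    # congestion-dependent edge cost
            if nd < dist.get(v, float("inf")):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return None, float("inf")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]


def route_all(graph, trips, n_threads=4):
    """Route many (source, target) trips concurrently."""
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        return list(pool.map(lambda trip: shortest_path(graph, *trip), trips))
```

Recomputing `bpr_time` from current volumes each time a route is planned is what makes the planning dynamic: when an edge's volume doubles past capacity, its impedance grows steeply and vehicles are steered onto the alternative.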

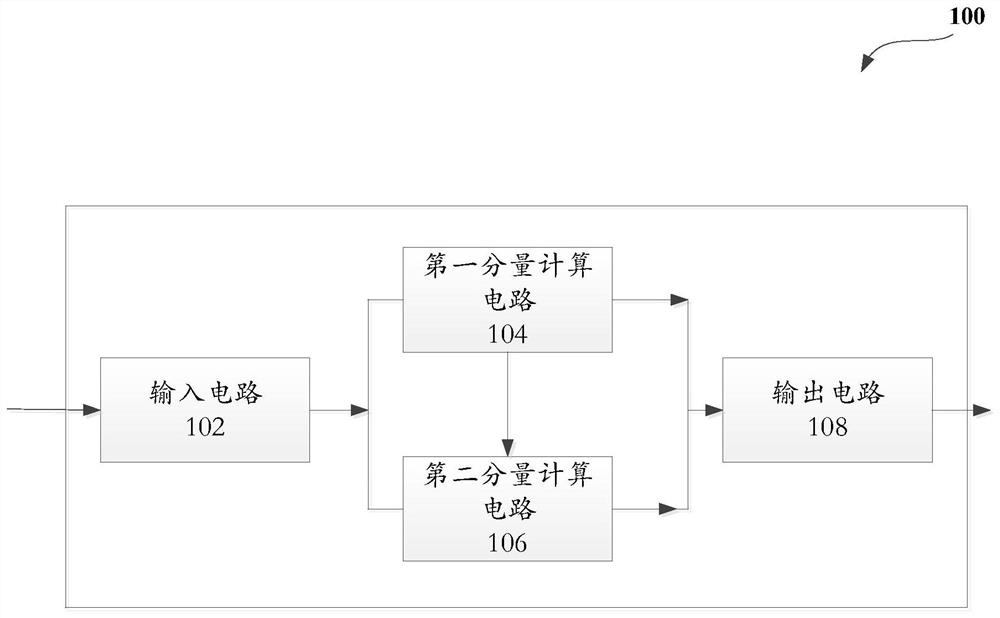

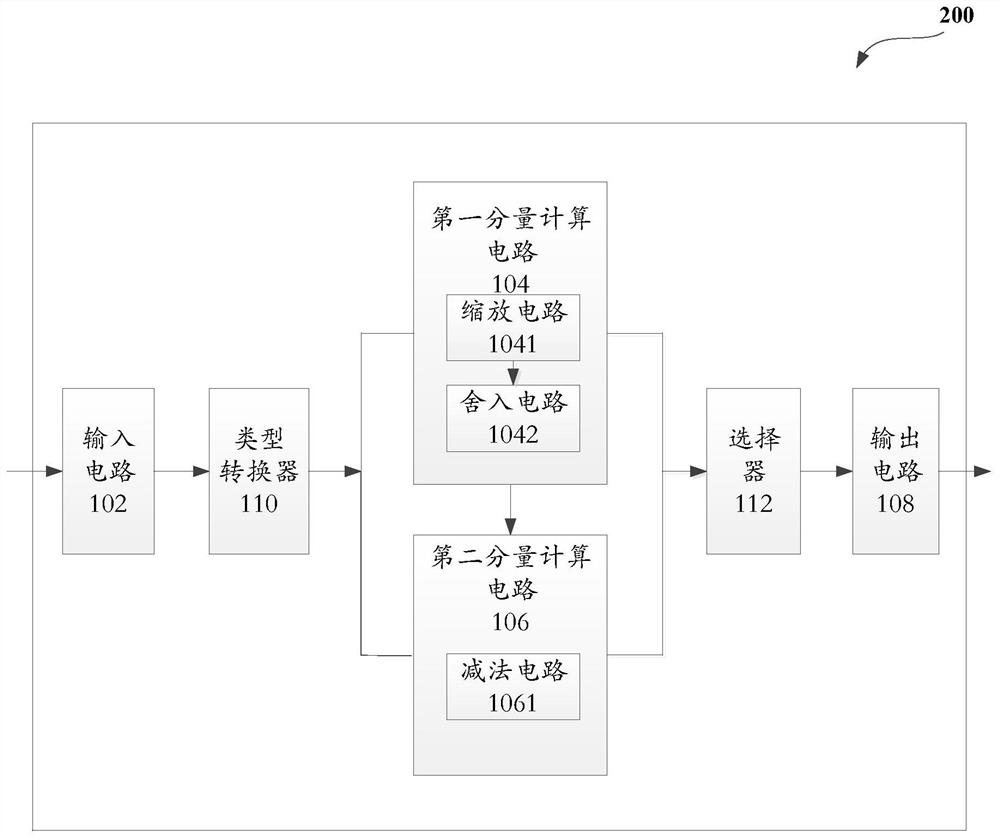

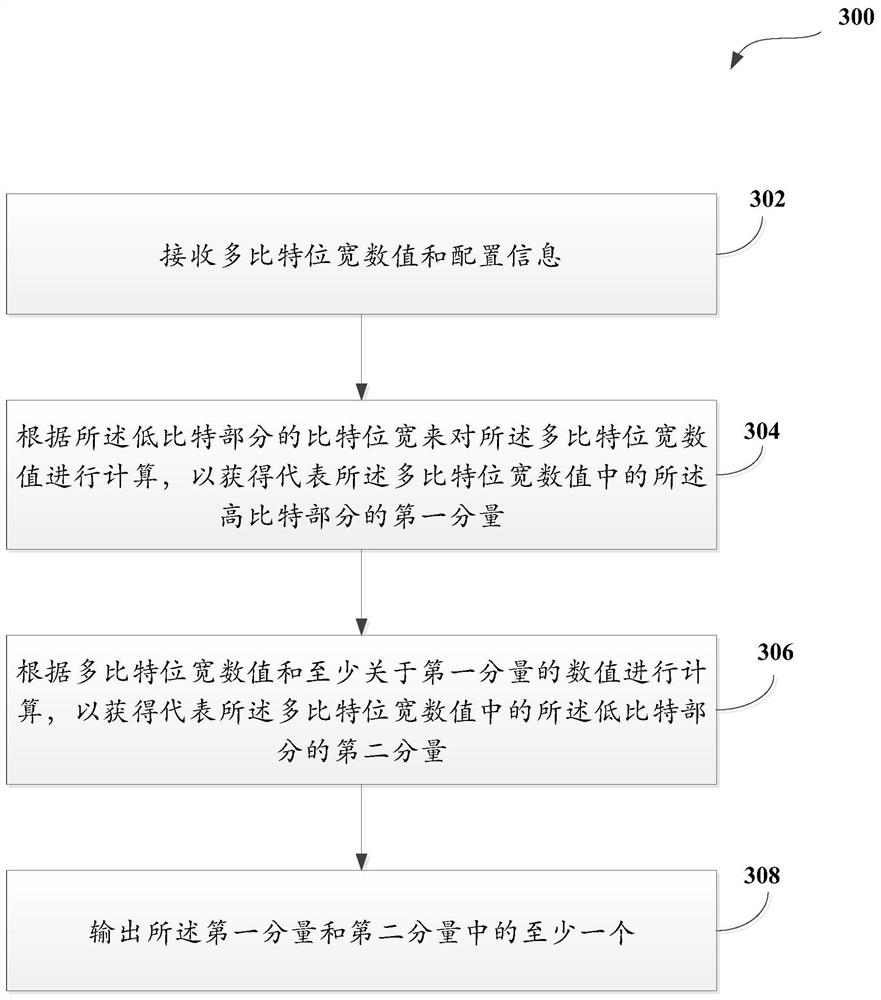

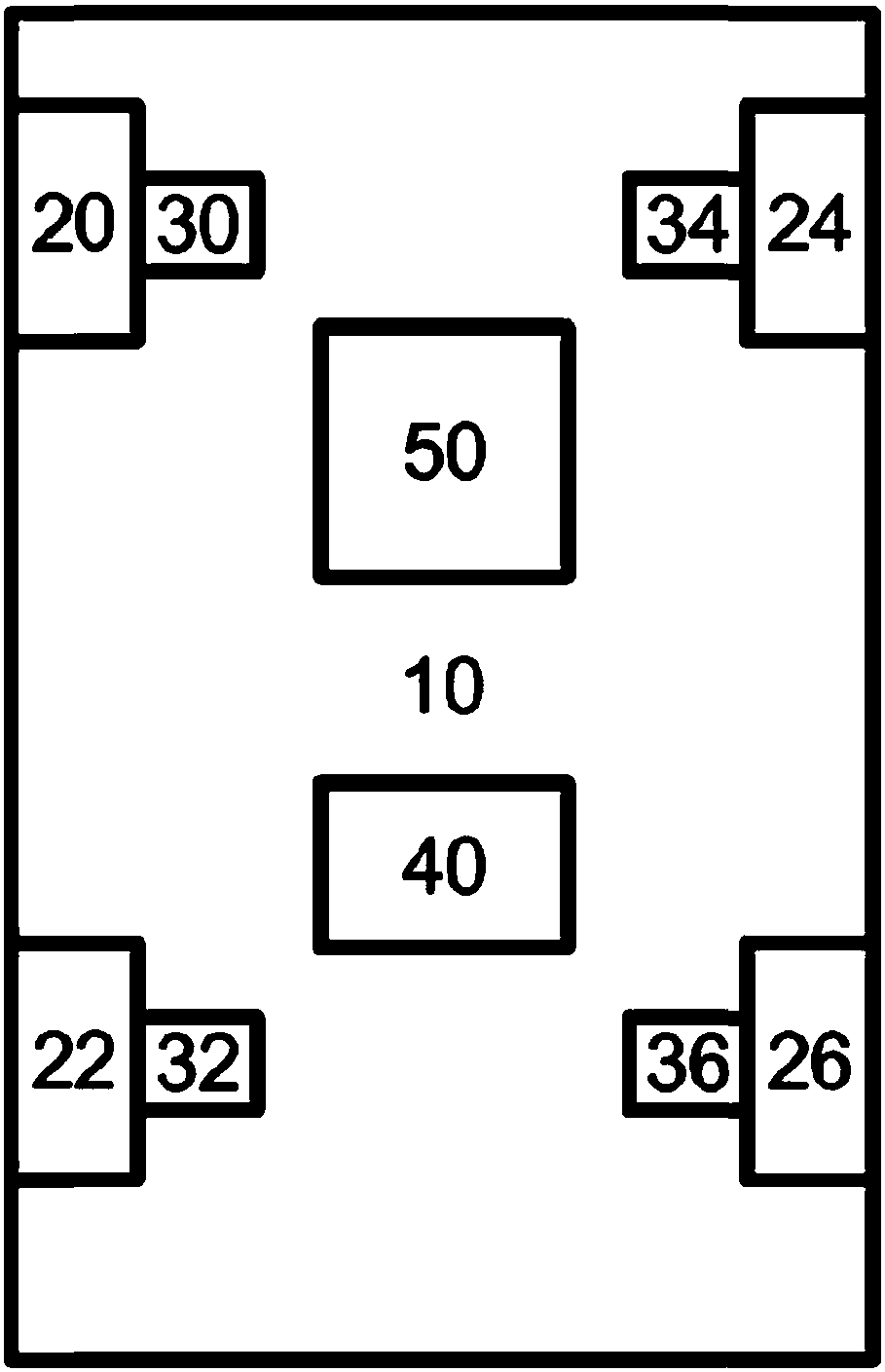

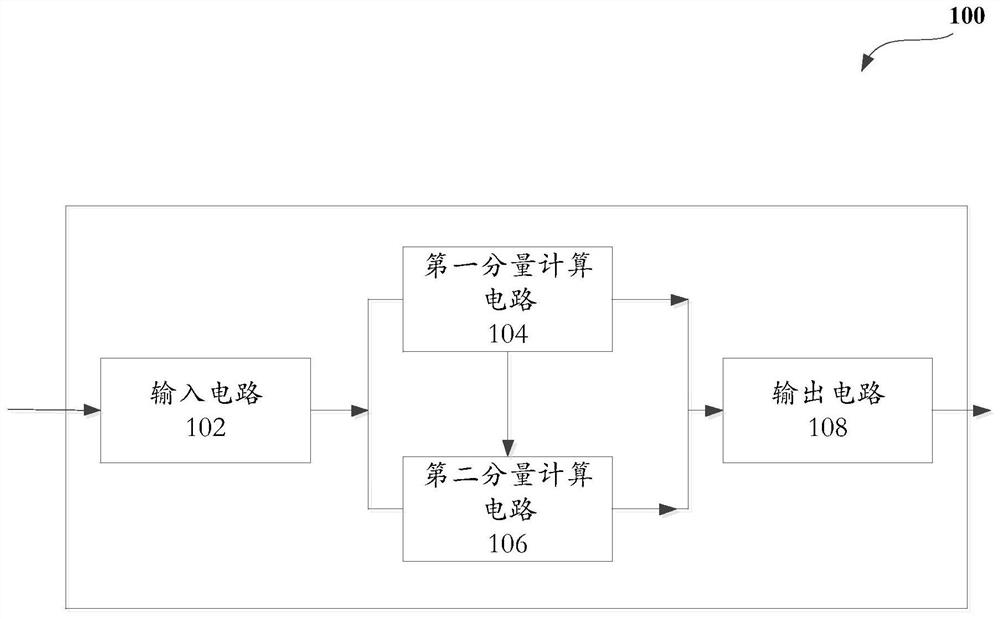

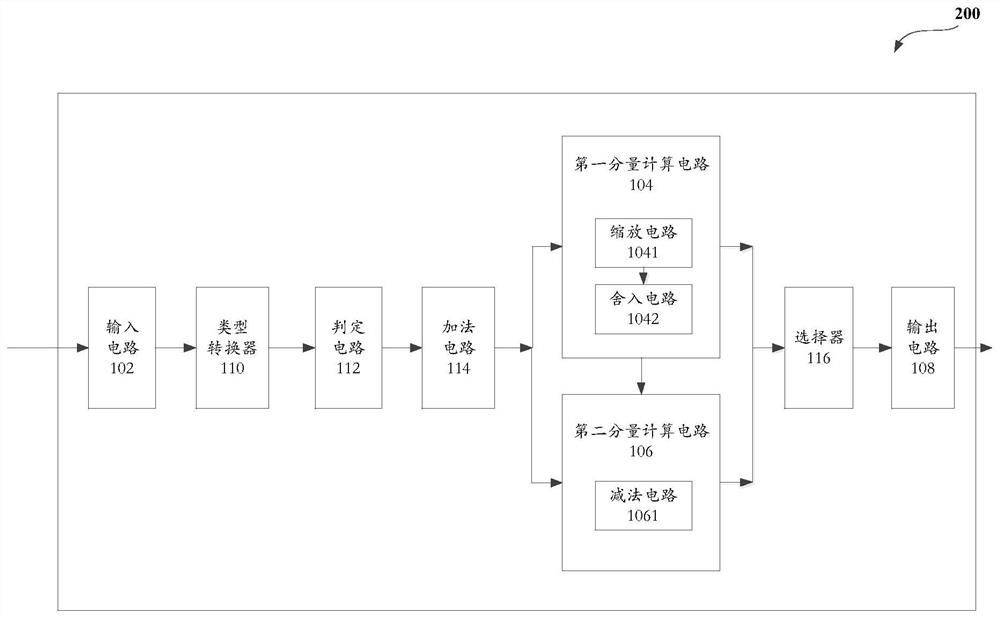

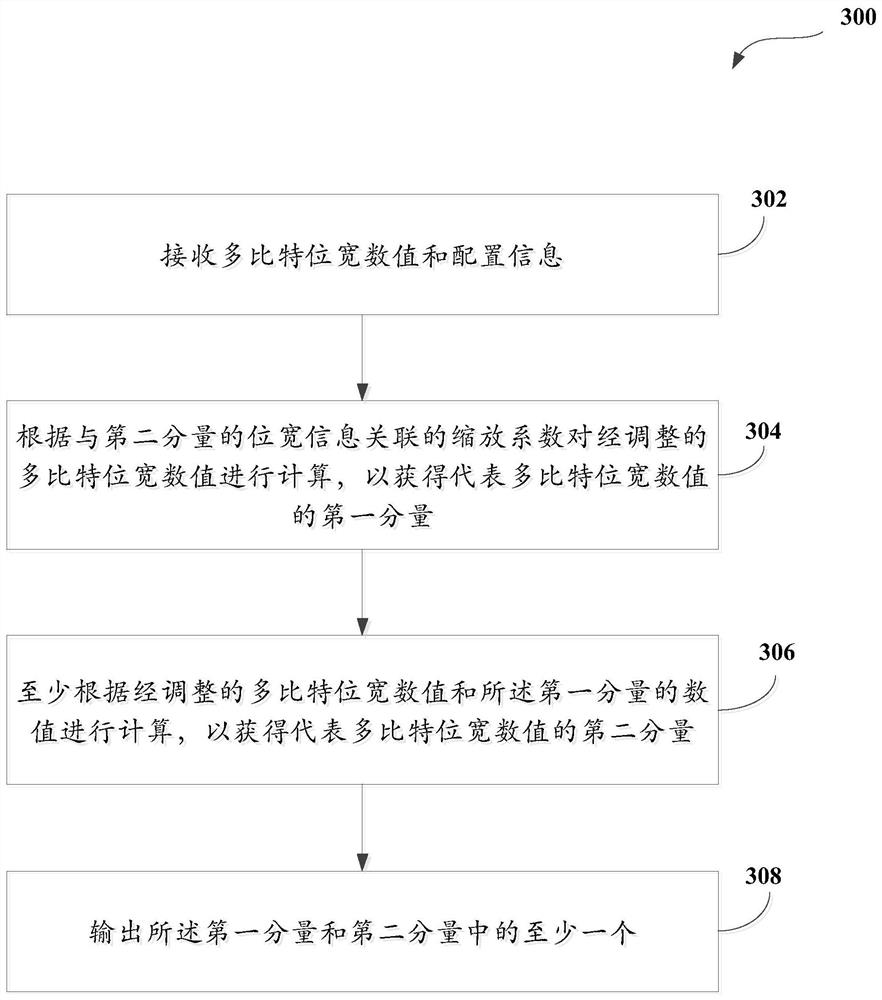

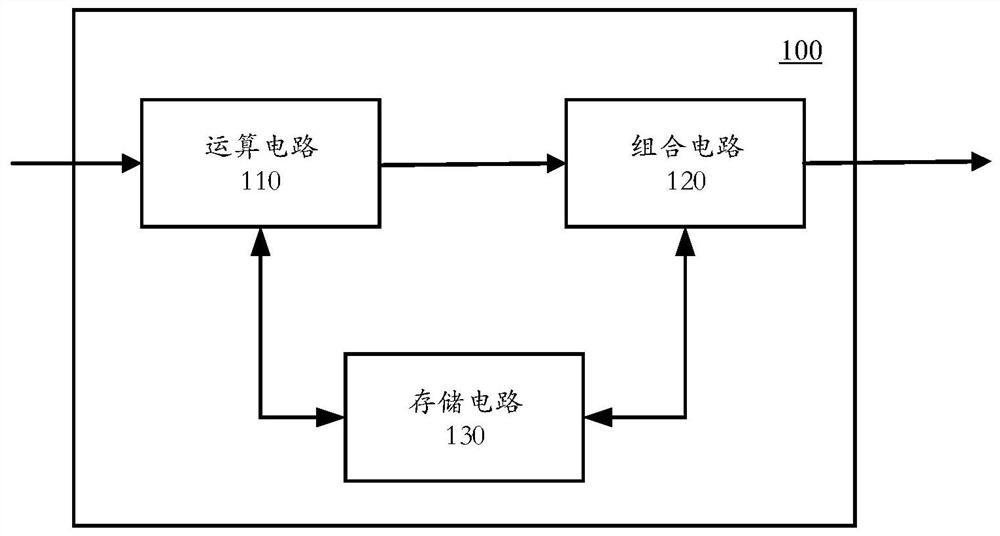

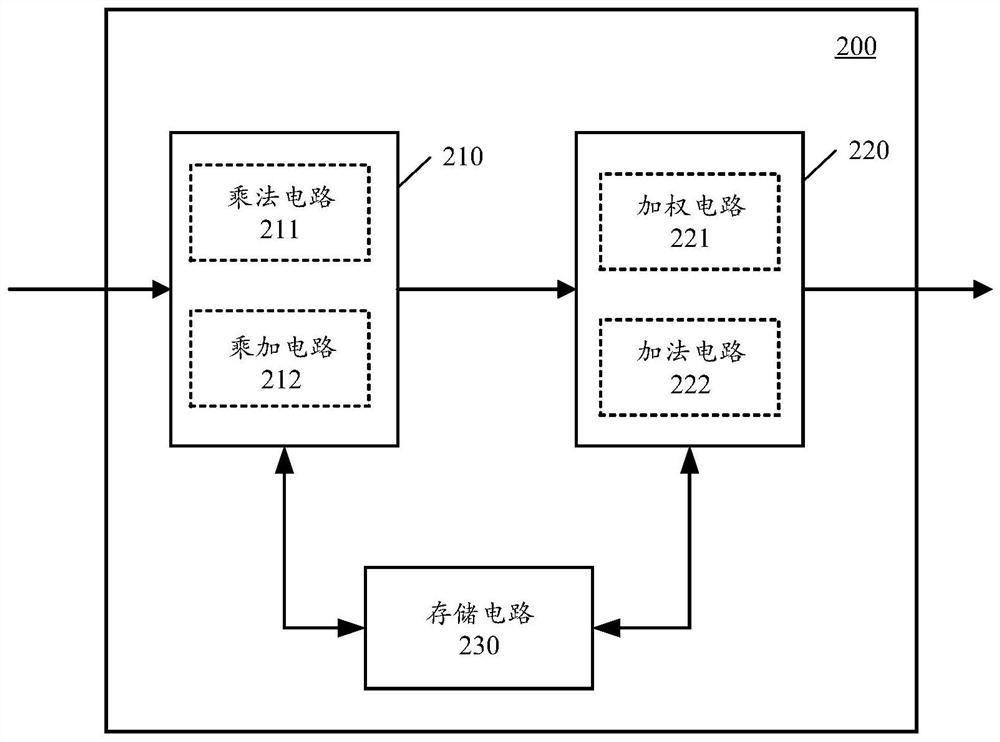

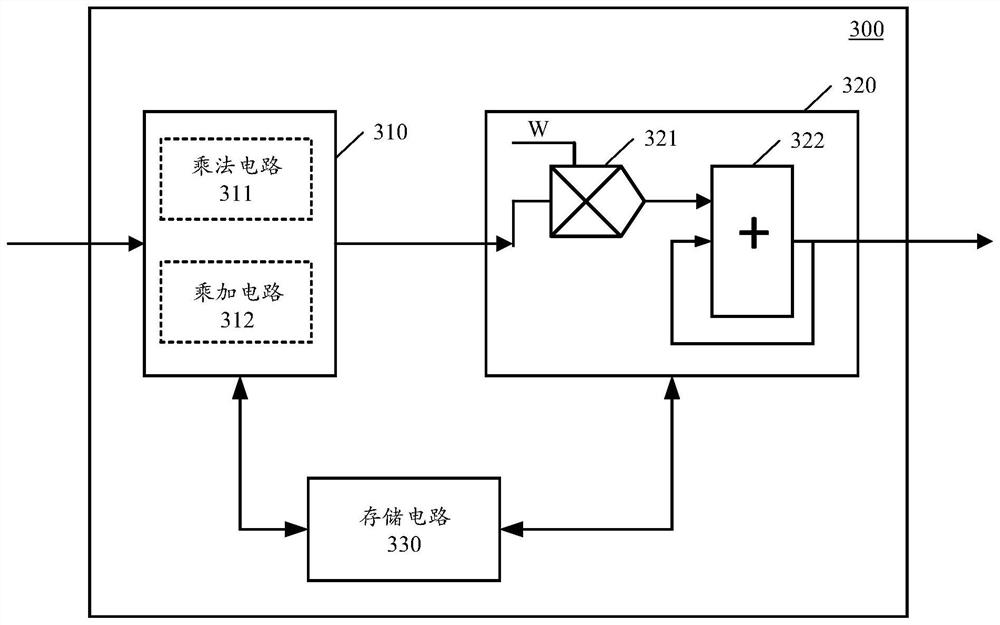

Computing device and method, board card and computer readable storage medium

Pending | CN113408716A | Simple calculation | Full use of computing power | Physical realisation | Architecture with single central processing unit | Computer architecture | Engineering

The disclosure discloses a computing device and method for processing multi-bit-width values, an integrated circuit board, and a computer-readable storage medium. The computing device can be included in a combined processing device, which can also include a universal interconnect interface and other processing devices; the computing device interacts with the other processing devices to jointly complete computing operations specified by a user. The combined processing device can further comprise a storage device, which is connected to the computing device and the other processing devices and stores their data. According to the disclosed scheme, multi-bit-width values can be split, so that the processing capacity of the processor is not affected by the bit width.

Owner:ANHUI CAMBRICON INFORMATION TECH CO LTD
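
The abstract does not say how the values are split. One common way to let a narrow datapath handle wide operands is schoolbook decomposition: split each operand into fixed-width chunks, multiply chunk pairs with the narrow unit, and add the shifted partial products. The sketch below does this for unsigned integers, with `narrow_mul` as a hypothetical stand-in for an 8-bit hardware multiplier.

```python
def split(value, chunk_bits, n_chunks):
    """Split an unsigned integer into n_chunks little-endian chunks of chunk_bits each."""
    mask = (1 << chunk_bits) - 1
    return [(value >> (chunk_bits * i)) & mask for i in range(n_chunks)]


def narrow_mul(a, b, bits=8):
    """Hypothetical narrow multiplier that only accepts bits-wide unsigned operands."""
    assert 0 <= a < (1 << bits) and 0 <= b < (1 << bits)
    return a * b


def wide_mul(x, y, chunk_bits=8, n_chunks=2):
    """Multiply two wide unsigned values using only narrow multiplications."""
    limit = 1 << (chunk_bits * n_chunks)
    if not (0 <= x < limit and 0 <= y < limit):
        raise ValueError("operand does not fit in n_chunks chunks")
    total = 0
    for i, xi in enumerate(split(x, chunk_bits, n_chunks)):
        for j, yj in enumerate(split(y, chunk_bits, n_chunks)):
            # Partial product of chunk i and chunk j carries weight 2^(chunk_bits * (i + j)).
            total += narrow_mul(xi, yj, chunk_bits) << (chunk_bits * (i + j))
    return total
```

Signed values would additionally need sign handling (for example two's-complement correction terms), which this sketch omits.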

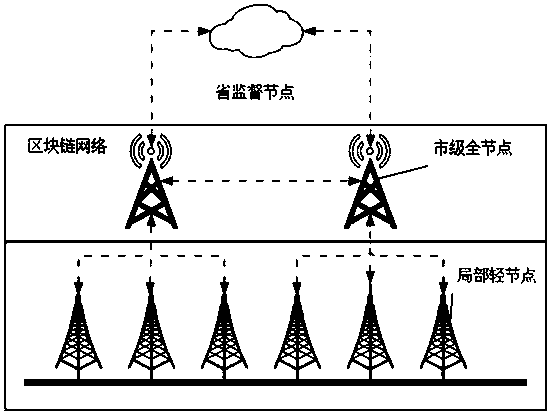

Distributed storage and transmission structure based on blockchain

Inactive | CN108989475A | Improve real-time performance | Improve reliability | Transmission | Network data | Operating system

The invention discloses a blockchain-based distributed storage and transmission structure comprising a local light node, a whole-city node and a provincial supervision node, connected through a dedicated wired power network. Relying on the distributed computing of blockchain technology, data can be processed at the light node and analyzed at the whole node, so the computing power of the whole network is fully used, analysis results for the whole network's data are obtained in a short time, and the real-time performance of the whole distribution network is greatly improved.

Owner:STATE GRID HENAN ELECTRIC POWER +2

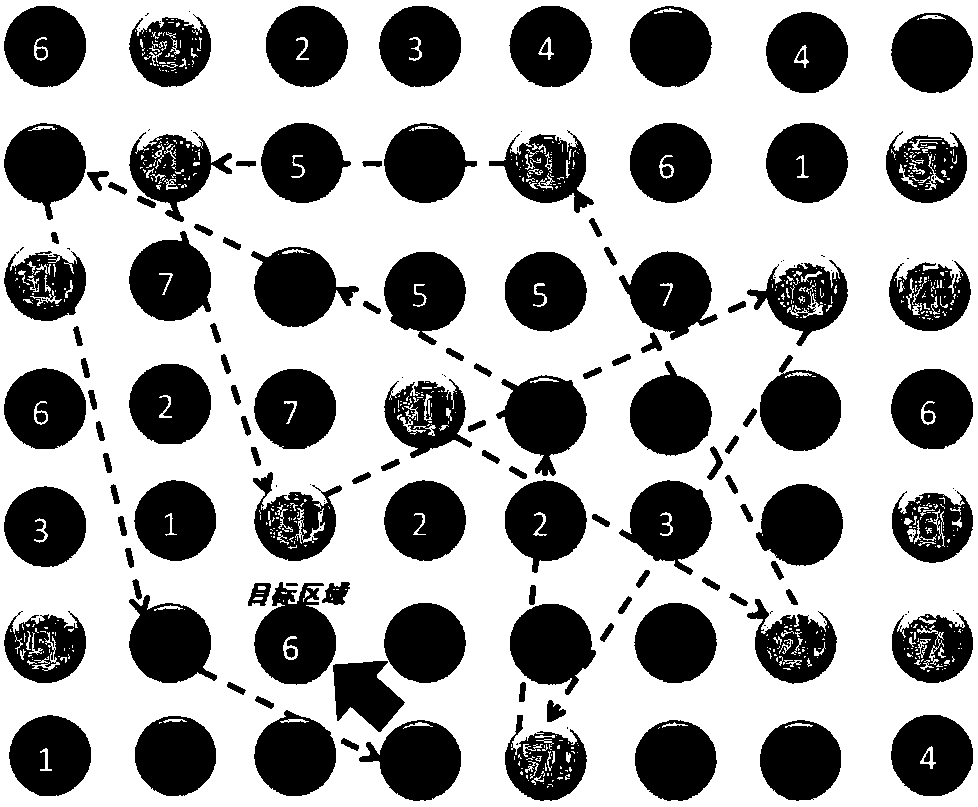

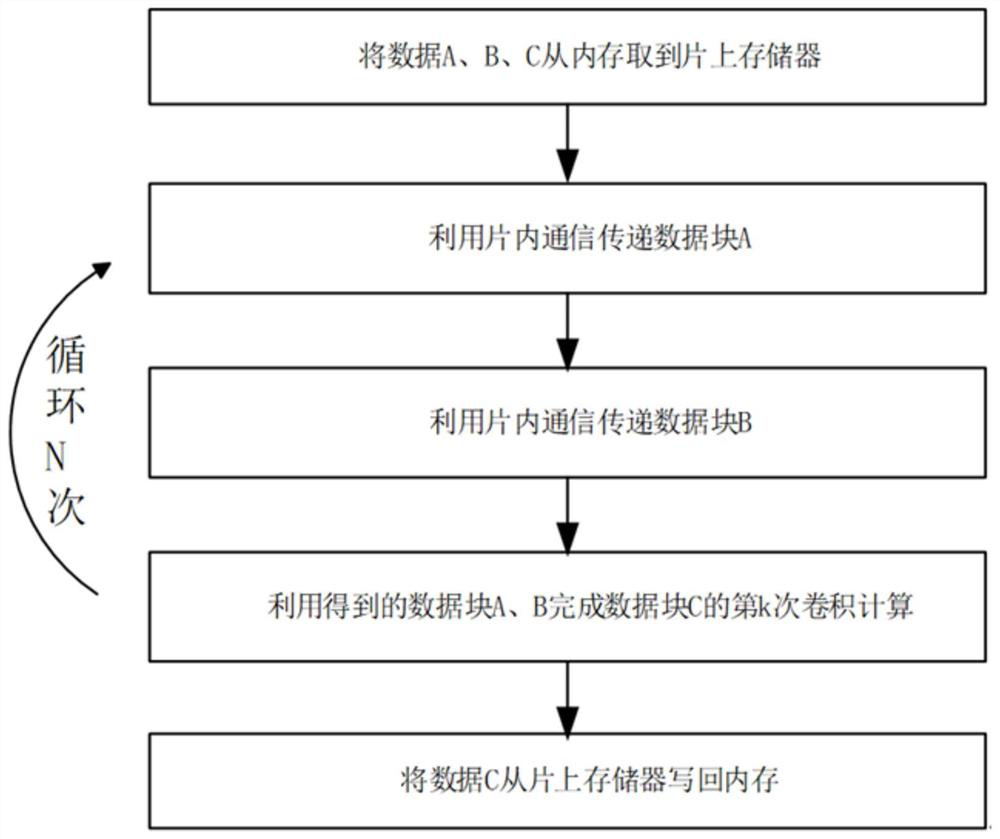

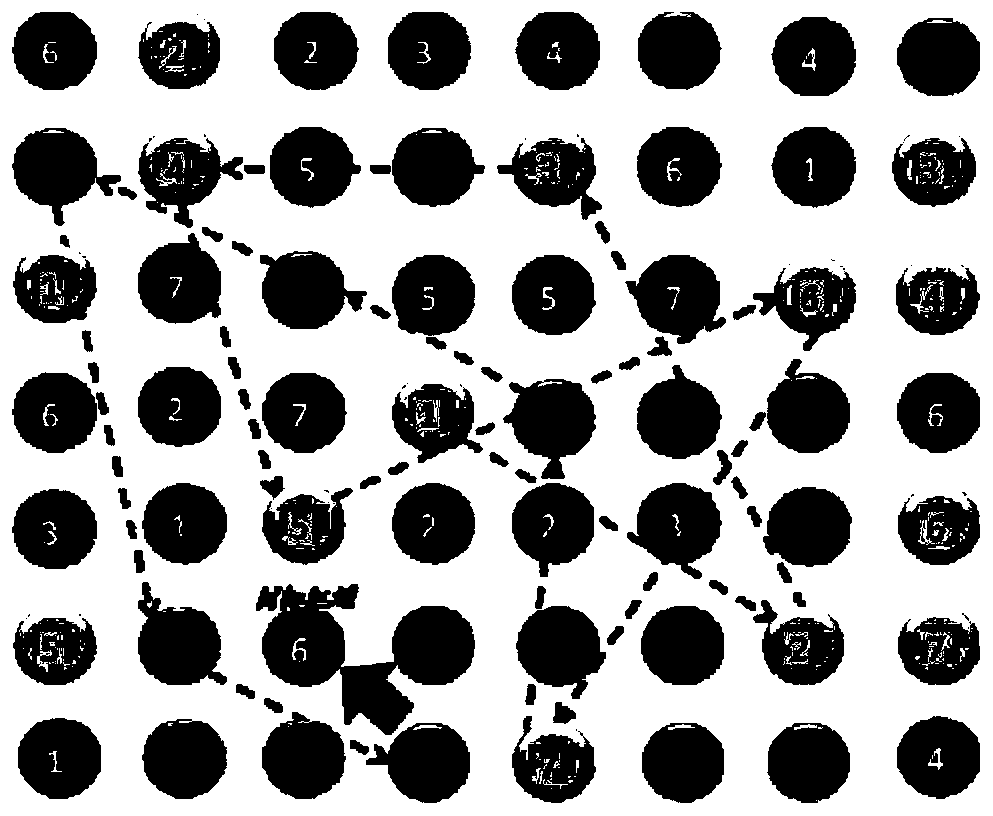

Convolution calculation data reuse method based on heterogeneous many-core processor

Active | CN112559197A | Improve data reuse | Reduce memory access requirements | Interprogram communication | Physical realisation | Engineering | Convolution

The invention discloses a convolution calculation data reuse method based on a heterogeneous many-core processor, in which a CPU computes data block C by convolving data block A with data block B. The method comprises the following steps: S1, mapping the cores of the heterogeneous many-core processor onto a two-dimensional N × N grid according to their number, dividing data blocks A, B and C into N × N blocks each, and having the (i, j)-th core read the (j, i)-th block of data from memory into its own on-chip memory, where computing block C(i, j) requires blocks A(i, k) and B(k, j) for k = 1, 2, ..., N; and S2, running a loop over k from 1 to N, completing the k-th convolution calculation step for block C with the obtained blocks of A and B. The method significantly reduces the memory access demands of convolution on the heterogeneous many-core processor and makes full use of its many-core computing capability, achieving high-performance convolution on such processors.

Owner:JIANGNAN INST OF COMPUTING TECH
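
The block decomposition in steps S1 and S2 follows the pattern of a blocked matrix product, C(i, j) = sum over k of A(i, k) B(k, j). The sketch below simulates it sequentially, assuming the convolution has already been lowered to a matrix multiplication (for example via im2col, which the abstract does not mention) and that the matrix size is divisible by N; the initial (j, i) block placement and the on-chip memory traffic that produce the data reuse are not modeled.

```python
def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def to_blocks(m, n):
    """Cut a square matrix into an n x n grid of square blocks (size must divide evenly)."""
    s = len(m) // n
    return [[[row[q * s:(q + 1) * s] for row in m[p * s:(p + 1) * s]] for q in range(n)]
            for p in range(n)]


def grid_matmul(a, b, n):
    """Simulate an n x n core grid where core (i, j) accumulates C(i, j) += A(i, k) B(k, j)."""
    A, B, s = to_blocks(a, n), to_blocks(b, n), len(a) // n
    C = [[[[0] * s for _ in range(s)] for _ in range(n)] for _ in range(n)]
    for k in range(n):                   # cycle k of step S2
        for i in range(n):
            for j in range(n):           # on real hardware all (i, j) cores run in parallel
                partial = matmul(A[i][k], B[k][j])
                for r in range(s):
                    for c in range(s):
                        C[i][j][r][c] += partial[r][c]
    # Stitch the C(i, j) blocks back into one matrix.
    return [sum((C[p][q][r] for q in range(n)), []) for p in range(n) for r in range(s)]
```

Each core only ever touches one block of A and one block of B per cycle, so with blocks held in on-chip memory the main-memory traffic per core drops roughly by a factor of N compared with every core streaming whole rows and columns.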

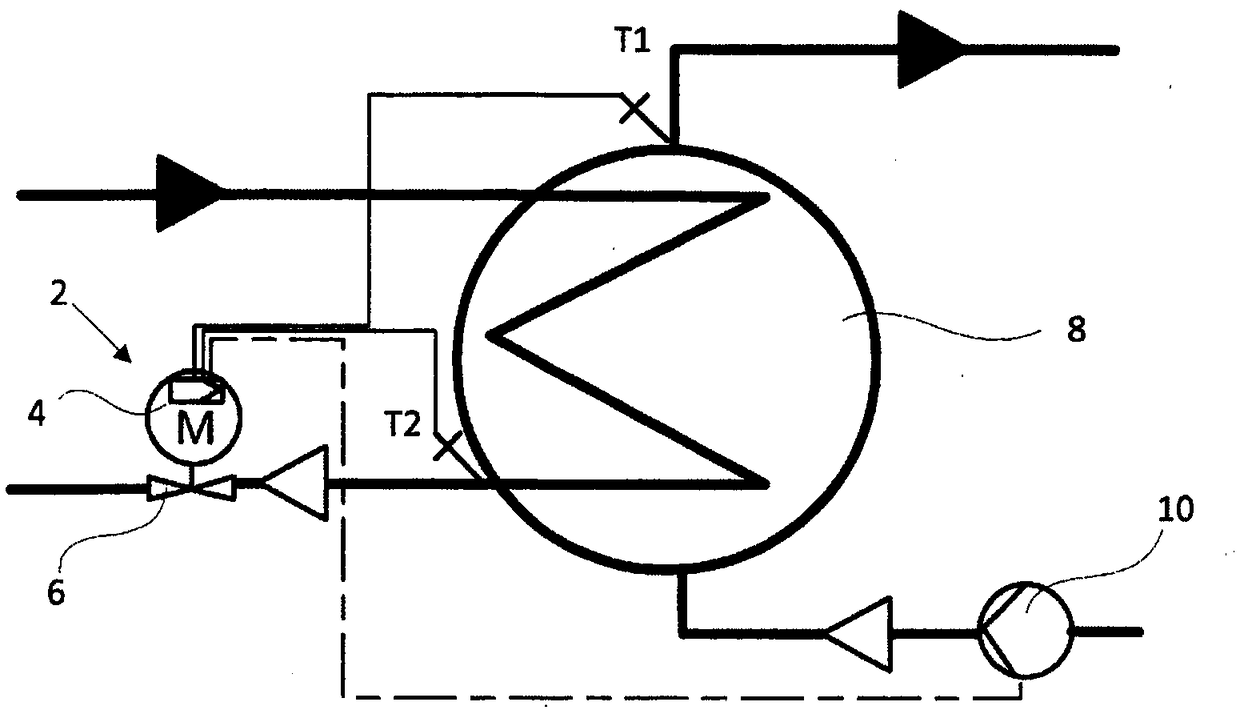

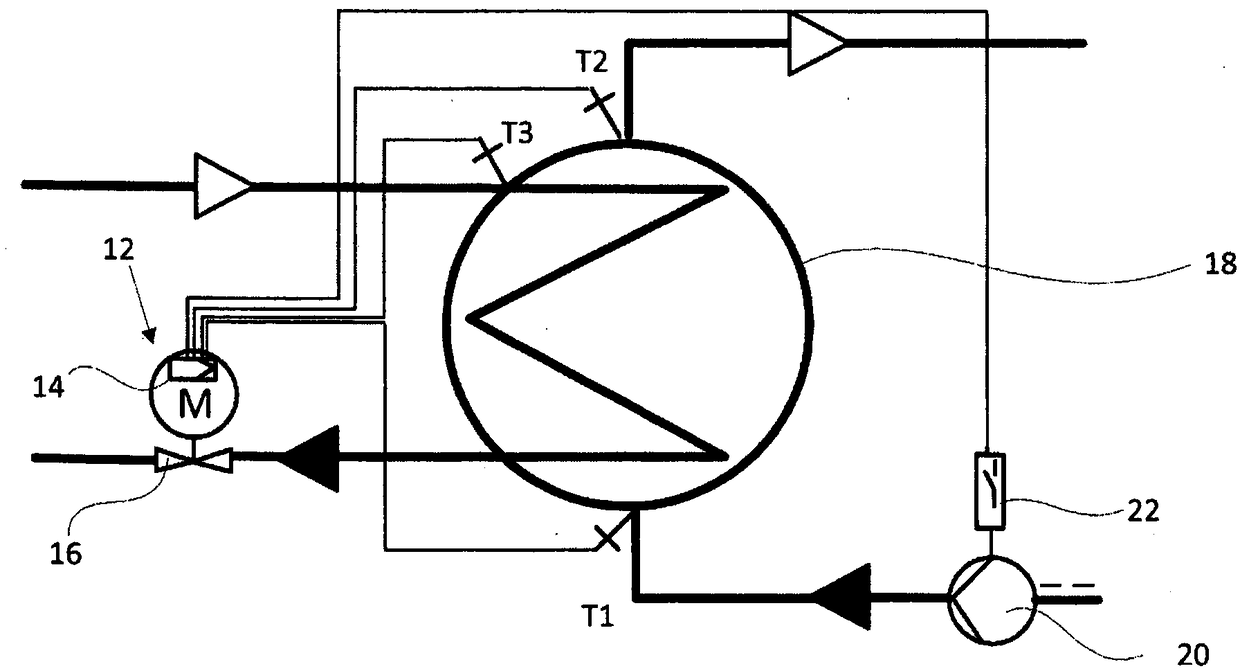

Heat exchanger diagnosing method

Active | CN109297733A | No other costs | Full use of computing power | District heating system | Cleaning heat-transfer devices | Engineering | Micro heat exchanger

The invention relates to a heat exchanger diagnosis method. The primary mass flow of the medium flowing through a heat exchanger (8, 18) is regulated by a regulating valve (6, 16) controlled by a process controller (4, 14), and the valve position values that the process controller (4, 14) provides for the regulating valve (6, 16) are obtained and stored. The method is characterized in that the valve position values for the regulating valve (6, 16) are recorded over a recording period such that, in at least one sequence of measurement results, the valve position values belonging to a periodically recurring observation period are stored, the observation period corresponding to the same period within each year. The degree of fouling of the heat exchanger (8, 18) is diagnosed by analyzing the valve position values of the at least one sequence of measurement results over the recording period, fouling being indicated when a stored measurement value deviates from the reference value assigned to that sequence by more than a predetermined amount.

Owner:SAMSON AG
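
A minimal sketch of the comparison step, assuming the stored sequence holds valve openings (in percent) recorded during the same observation period each year and that the reference value is a known baseline, for example from the first season after cleaning; the function name, data layout and threshold are hypothetical. Physically, fouling usually shows up as the controller opening the valve further to deliver the same heat, but the sketch follows the abstract and tests the magnitude of the deviation.

```python
from statistics import mean


def diagnose_fouling(positions_by_year, reference, max_deviation):
    """Flag observation periods whose valve positions drift too far from the reference.

    positions_by_year: {year: [valve opening in %, recorded in the same period of that year]}
    reference: baseline valve opening for this measurement sequence (assumed known)
    max_deviation: predetermined permissible deviation from the reference
    Returns (year, deviation) pairs for which the heat exchanger is judged fouled.
    """
    flagged = []
    for year in sorted(positions_by_year):
        deviation = mean(positions_by_year[year]) - reference
        if abs(deviation) > max_deviation:
            flagged.append((year, deviation))
    return flagged
```

Comparing the same period of each year keeps seasonal load differences out of the comparison, so a drift in valve position points at the heat exchanger itself rather than at the weather.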

Method for selecting locating algorithms in a vehicle

Active | CN107923981A | Precise positioning | Not easy to make mistakes | Navigational calculation instruments | Satellite radio beaconing | Vehicle dynamics | Algorithm

The invention relates to a method for selecting locating algorithms in a vehicle, wherein the locating algorithms, in particular for satellite navigation or vehicle dynamics sensors, are selected on the basis of driving states.

Owner:CONTINENTAL AUTOMOTIVE TECH GMBH

Computing device and method, board card and computer readable storage medium

Pending | CN113408717A | Simple calculation | Full use of computing power | Digital data processing details | Physical realisation | Computer architecture | Engineering

The disclosure discloses a computing device and method for processing multi-bit-width values, an integrated circuit board and a computer-readable storage medium. The computing device may be included in a combined processing device, which may also include a universal interconnect interface and other processing devices; the computing device interacts with the other processing devices to jointly complete the computing operation specified by a user. The combined processing device can further comprise a storage device, which is connected to the computing device and the other processing devices and stores their data. According to the disclosed scheme, a multi-bit-width value can be split, so that the processing capacity of the processor is not affected by the bit width.

Owner:ANHUI CAMBRICON INFORMATION TECH CO LTD
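To illustrate the splitting idea, the sketch below multiplies two 32-bit unsigned integers using only 8×8-bit partial products that are shifted and accumulated. The chunk width, the function names, and the choice of multiplication as the operation are assumptions; the abstract does not say how the split values are recombined.

```python
CHUNK_BITS = 8                      # hypothetical native width of the processing unit
CHUNK_MASK = (1 << CHUNK_BITS) - 1

def split(value, width):
    """Split an unsigned `width`-bit integer into CHUNK_BITS-wide pieces,
    least significant piece first."""
    if value < 0 or value >> width:
        raise ValueError("value does not fit in the given bit width")
    return [(value >> shift) & CHUNK_MASK for shift in range(0, width, CHUNK_BITS)]

def wide_multiply(a, b, width=32):
    """Multiply two unsigned `width`-bit integers using only
    CHUNK_BITS x CHUNK_BITS partial products, shifted and accumulated."""
    result = 0
    for i, x in enumerate(split(a, width)):
        for j, y in enumerate(split(b, width)):
            partial = x * y  # at most 2 * CHUNK_BITS bits wide
            result += partial << (CHUNK_BITS * (i + j))
    return result

print(hex(wide_multiply(0xDEADBEEF, 0x12345678)))
```

Python integers are arbitrary-precision, so the accumulator never overflows here; a hardware version would need an accumulator of 2 × `width` bits.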

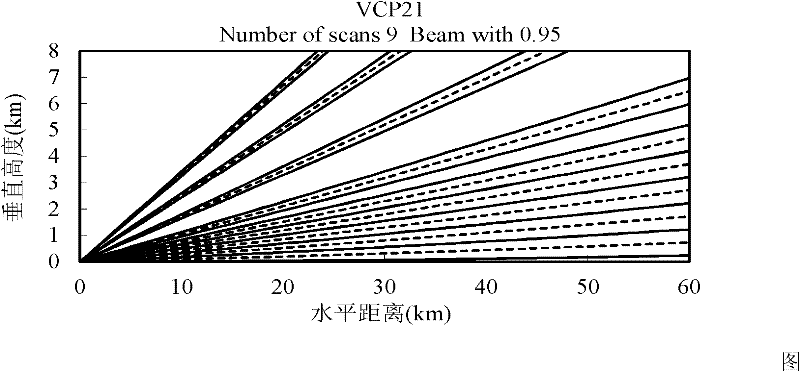

Multi-core parallel calculation method for weather radar data

Active · CN102117227B · Improve portability · Reduce computing time · Program synchronisation · Multiple digital computer combinations · Radar observations · Weather radar

The invention provides a multi-core parallel calculation method for weather radar data, comprising the following steps: first, storing the detected weather radar observation data in a spherical coordinate system; second, gridding the stored spherical-coordinate data, i.e. interpolating the radar data, which has non-uniform spatial resolution in the spherical coordinate system, onto independent grid data with uniform spatial resolution in a common Cartesian coordinate system; third, using an OpenMP program to distribute the grid data to the CPU cores, each of which computes its share under the control of its own thread to obtain the corresponding weather data; and finally, combining the results computed by the individual CPU cores to obtain the required weather data. With this multi-core parallel approach, CPU utilization can reach up to 97%, the computing capacity of the computer is fully used, and the real-time performance of weather data calculation and analysis is improved.

Owner:NANJING NRIET IND CORP
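The grid-then-split-then-merge pipeline described above can be sketched as follows. This is a simplified stand-in under stated assumptions: gridding is nearest-cell binning on a flat earth rather than true interpolation, the per-cell computation (a Marshall-Palmer rain-rate conversion, Z = 200·R^1.6) is chosen only for illustration since the abstract does not say what is computed, and all function names are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def to_cartesian(r_km, az_deg, el_deg):
    """Spherical radar gate -> (x east, y north, z up) in km.
    Flat-earth approximation: ignores earth curvature and beam refraction."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    ground = r_km * math.cos(el)
    return ground * math.sin(az), ground * math.cos(az), r_km * math.sin(el)

def grid_gates(gates, cell_km, n):
    """Simplified gridding: bin (range, azimuth, elevation, dBZ) gates into an
    n x n horizontal grid centred on the radar, averaging dBZ per cell.
    Row index grows northward, column index eastward; empty cells are None."""
    sums = [[0.0] * n for _ in range(n)]
    counts = [[0] * n for _ in range(n)]
    half = n // 2
    for r_km, az_deg, el_deg, dbz in gates:
        x, y, _ = to_cartesian(r_km, az_deg, el_deg)
        col, row = math.floor(x / cell_km) + half, math.floor(y / cell_km) + half
        if 0 <= row < n and 0 <= col < n:
            sums[row][col] += dbz
            counts[row][col] += 1
    return [[sums[i][j] / counts[i][j] if counts[i][j] else None
             for j in range(n)] for i in range(n)]

def rain_rate_block(rows):
    """Work unit for one thread: dBZ -> rain rate (mm/h) per cell via Z = 200 * R**1.6."""
    return [[None if dbz is None else (10 ** (dbz / 10) / 200) ** (1 / 1.6)
             for dbz in row] for row in rows]

def parallel_rain_rate(grid, workers=4):
    """Split the grid into contiguous row blocks, process the blocks
    concurrently, then stitch the results back together in order."""
    size = max(1, math.ceil(len(grid) / workers))
    blocks = [grid[i:i + size] for i in range(0, len(grid), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(rain_rate_block, blocks))
    return [row for block in results for row in block]
```

Threads keep the sketch portable, but CPython's global interpreter lock stops them from running this pure-Python arithmetic in parallel; for a real multi-core speedup one would swap in `ProcessPoolExecutor`, or use OpenMP threads in C/C++ as the abstract describes.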

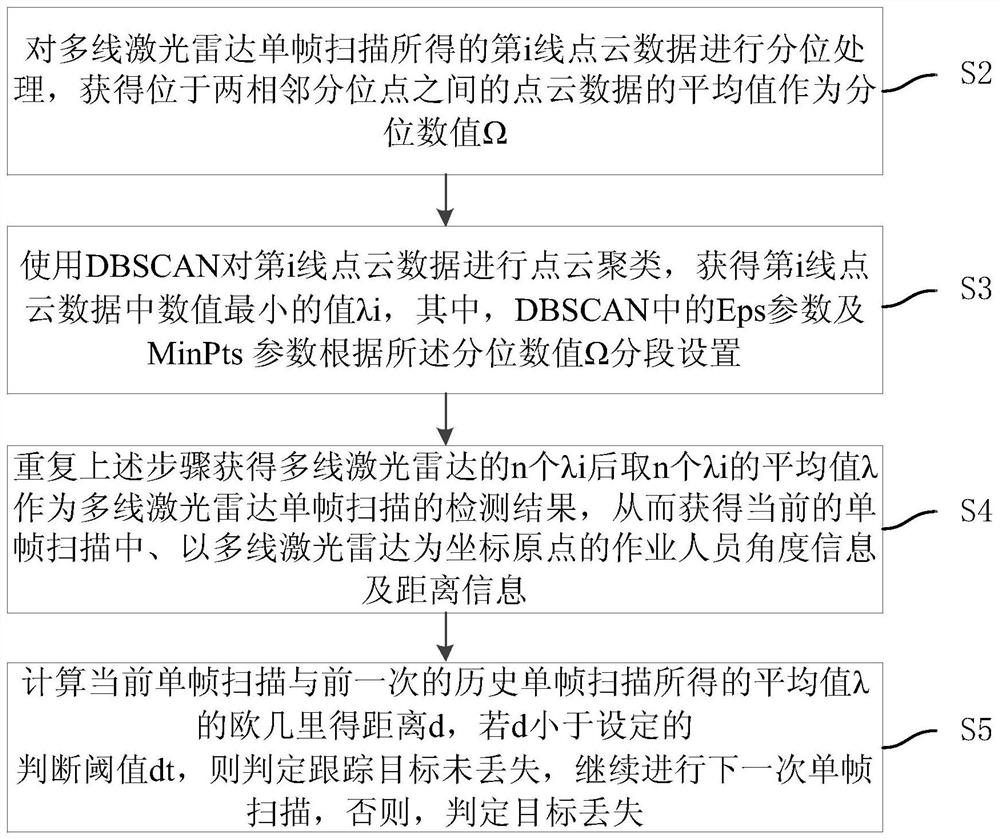

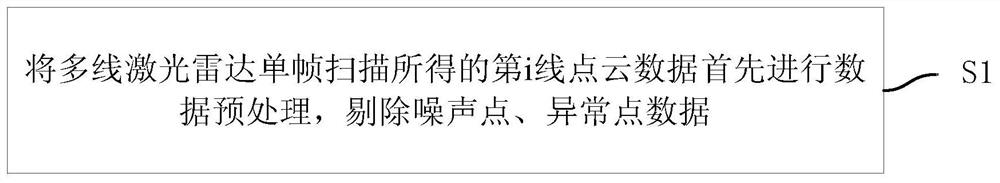

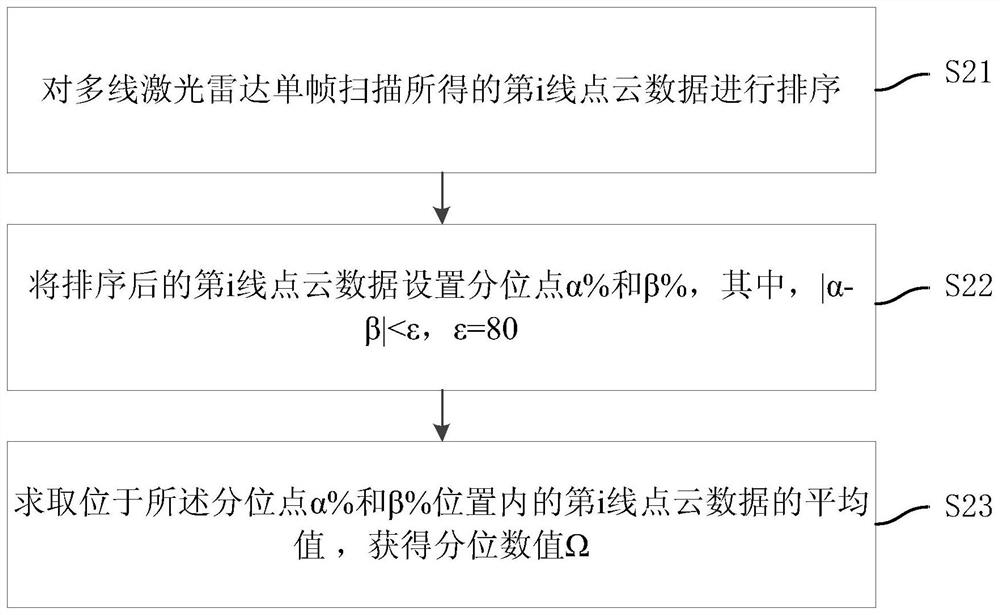

A robot intelligent self-following method, device, medium, and electronic equipment

Active · CN112493928B · High range resolution · Improve angular resolution · Image enhancement · Image analysis · Point cloud · Engineering

The invention discloses an intelligent self-following method for a robot, together with a device, a medium, and electronic equipment. The method includes the following steps: performing quantile processing on the point cloud data of the i-th scan line obtained from a single-frame scan of a multi-line lidar, taking the average of the points that lie between two adjacent quantile points as the quantile value Ω; clustering the point cloud data of the i-th line with DBSCAN to obtain the minimum value λi of that line; repeating these steps to obtain the n values λi of the multi-line lidar, and taking their average λ as the detection result of the single-frame scan; and computing the Euclidean distance d between λ for the current single-frame scan and the average of λ over the previous single-frame scans to judge whether the target has been lost. The invention works both day and night and is little affected by the environment; during following, the operator is tracked with the multi-line lidar, giving high detection accuracy, and combining the result with the historical track gives high tracking accuracy.

Owner:广东盈峰智能环卫科技有限公司 +1
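The abstract is terse, so the sketch below is one possible reading with labeled simplifications: the quantile-averaging step (Ω) is omitted, each scan line is treated as 2-D (x, y) points, λi is taken to be the centroid of the DBSCAN cluster nearest the sensor, and `eps`, `min_pts`, and `max_jump` are hypothetical tuning values. DBSCAN is written out by hand so the example has no dependencies.

```python
import math

def dbscan(points, eps, min_pts):
    """Minimal DBSCAN: returns a cluster label per point, -1 for noise."""
    labels = [None] * len(points)  # None = not yet visited

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise (may later become a border point)
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: claim it, do not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbours(j)
            if len(more) >= min_pts:  # core point: keep expanding
                queue.extend(more)
        cluster += 1
    return labels

def centroid(pts):
    return tuple(sum(axis) / len(pts) for axis in zip(*pts))

def line_estimate(points, eps=0.3, min_pts=3):
    """Per scan line: cluster the (x, y) points and return the centroid of the
    cluster nearest the sensor (stand-in for lambda_i)."""
    labels = dbscan(points, eps, min_pts)
    clusters = [[p for p, lab in zip(points, labels) if lab == c]
                for c in sorted({lab for lab in labels if lab >= 0})]
    if not clusters:
        return None
    return min((centroid(c) for c in clusters), key=lambda xy: math.hypot(*xy))

def frame_estimate(lines):
    """Average the per-line estimates of one frame (stand-in for lambda)."""
    estimates = [e for e in map(line_estimate, lines) if e is not None]
    return centroid(estimates) if estimates else None

def target_lost(current, history, max_jump):
    """Flag loss when nothing is detected, or when the current estimate is
    farther than max_jump from the mean of previous frames' estimates."""
    if current is None:
        return True
    if not history:
        return False
    return math.dist(current, centroid(history)) > max_jump
```

Comparing against the mean of past frames rather than only the last frame smooths out single-frame jitter, which is one plausible reason the abstract combines the detection with the historical track.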

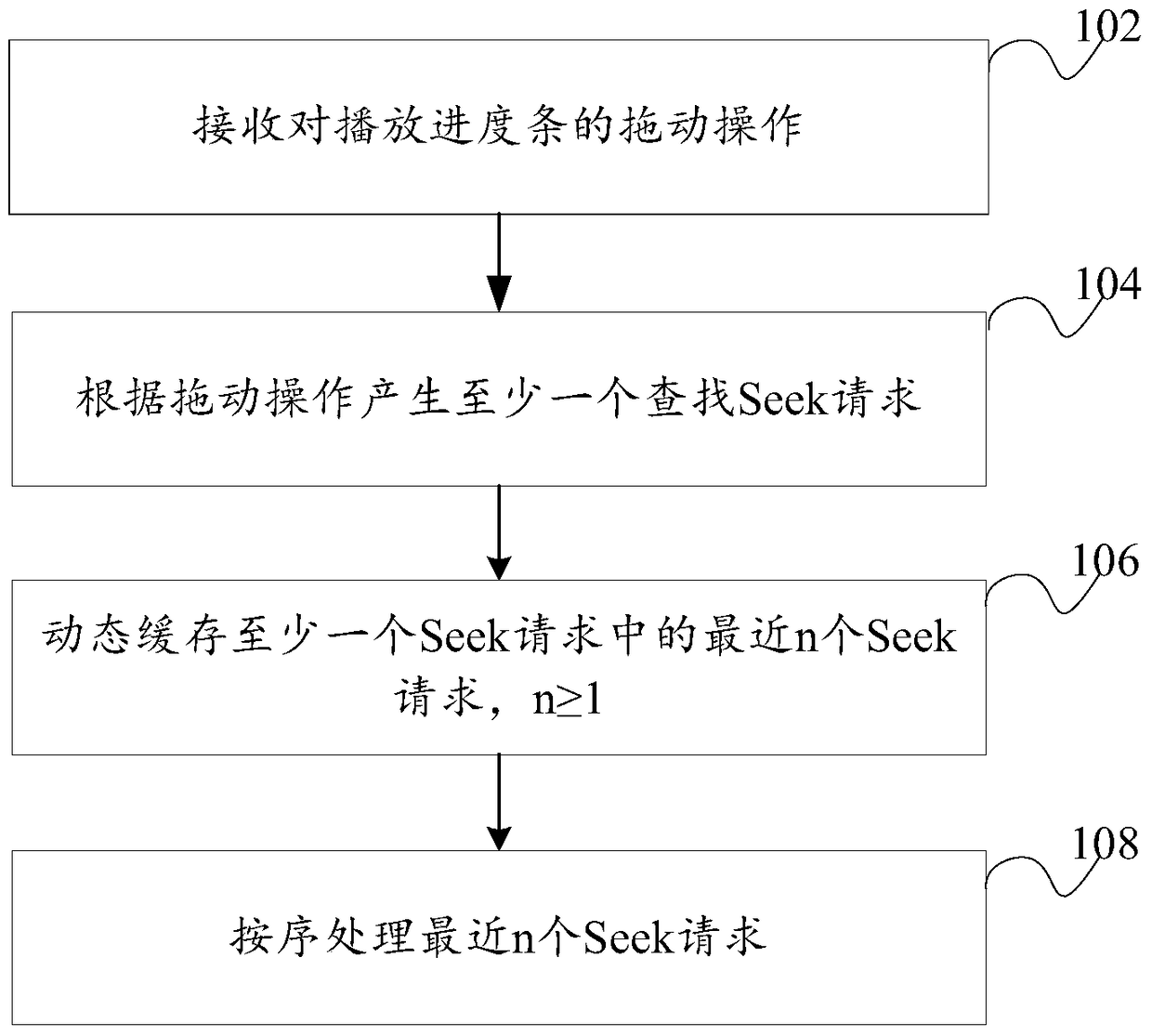

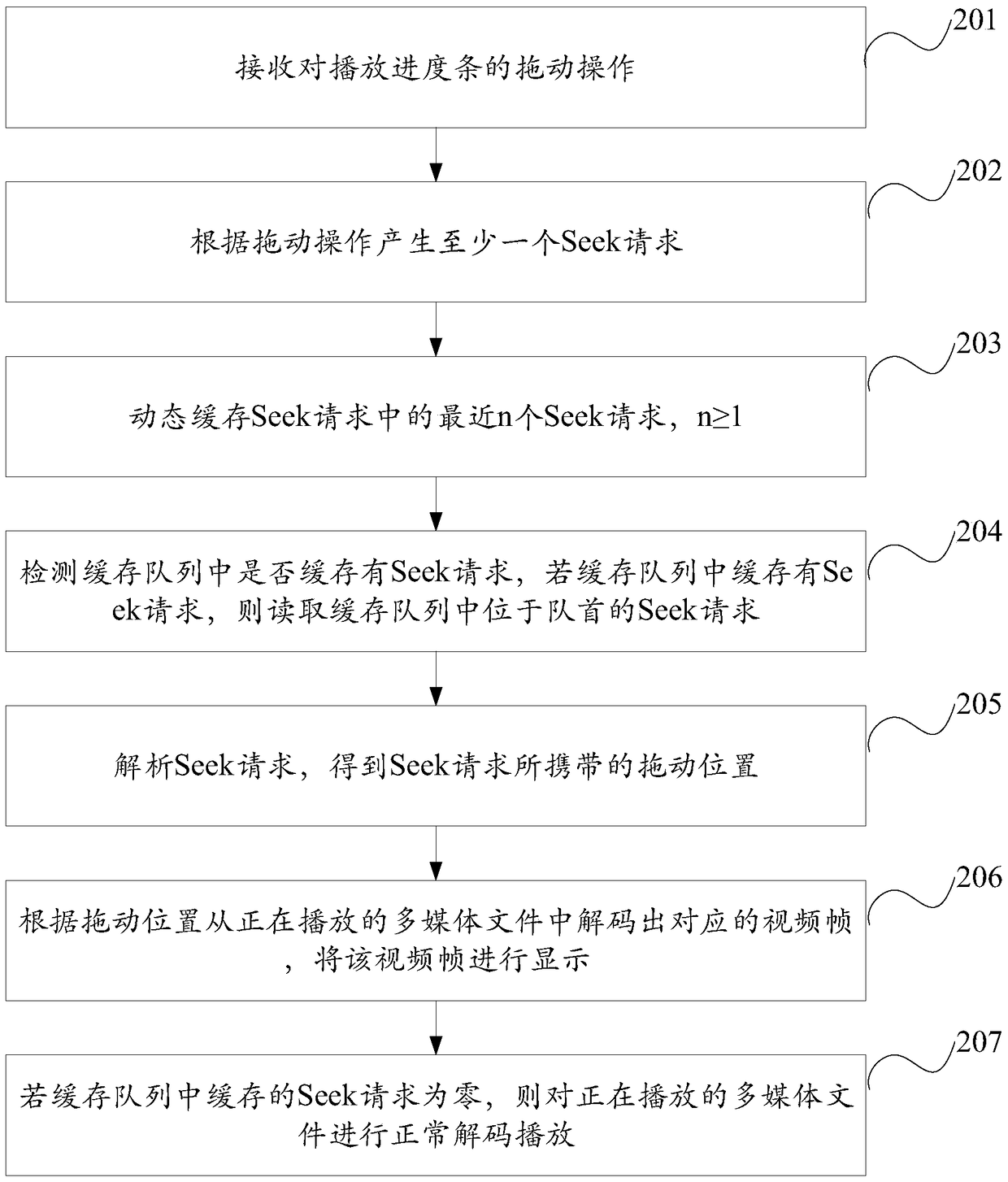

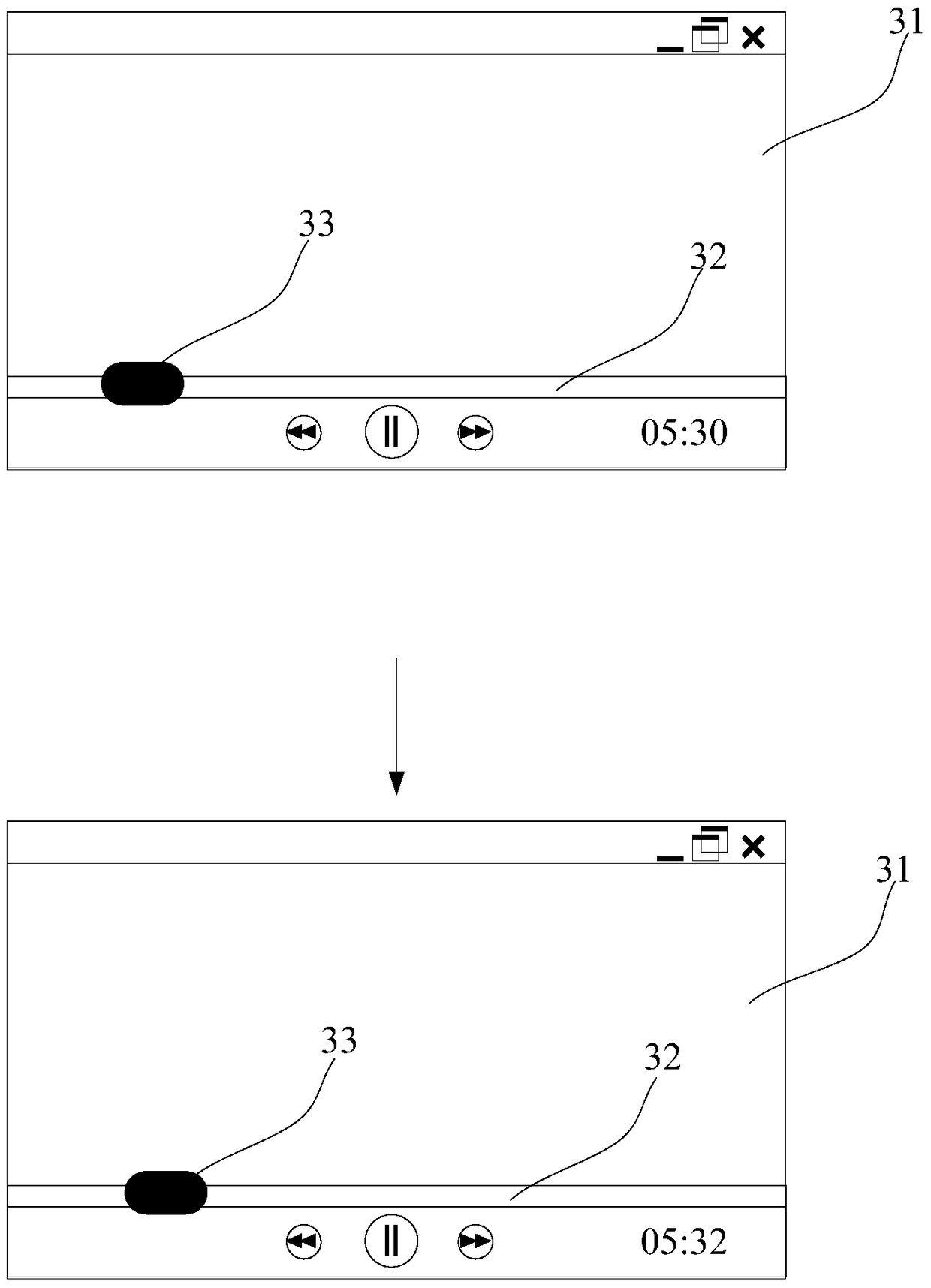

Request processing method, device and electronic device

Active · CN104837063B · Full use of computing power · Selective content distribution · Computer hardware · Progress bar

Owner:TENCENT TECH (BEIJING) CO LTD

A multi-modal scheduling method for massive data streams under multi-core DSP

Active · CN107608784B · Improve versatility · Improve portability · Resource allocation · Interprogram communication · Data stream · Parallel computing

The invention discloses a multi-modal scheduling method for massive data streams on a multi-core DSP. The multi-core DSP includes a main control core and an acceleration core, and requests are passed between them through a request packet queue. Three data block selection methods (continuous selection, random selection, and spiral selection) are defined on the basis of the data dimensions and the data priority order, and two multi-core data block allocation methods (cyclic scheduling and load-balancing scheduling) are defined according to load balancing. Data blocks that have been selected and grouped according to the allocation granularity are loaded into multiple computing cores for processing. The method uses multi-level data block scheduling that satisfies the requirements of system load, data correlation, processing granularity, data dimensions, and ordering, which gives it good generality and portability; it also extends the modes and forms of data block scheduling across multiple levels, giving it a wider scope of application. A user only needs to configure the data block scheduling mode and the allocation granularity, and the system completes data scheduling automatically, which improves the efficiency of parallel development.

Owner:XIAN MICROELECTRONICS TECH INST
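A minimal sketch of the three selection orders and two allocation policies named above, assuming data blocks are indexed on a 2-D (row, column) grid and that a per-block cost estimate is available for load balancing. The function names, the `cost` callback, and the grid layout are hypothetical, and the request packet queue between the main control core and the acceleration core is not modeled.

```python
import heapq
import random

def continuous_order(rows, cols):
    """Continuous selection: row-major sweep over the block grid."""
    return [(r, c) for r in range(rows) for c in range(cols)]

def random_order(rows, cols, seed=0):
    """Random selection: a reproducible shuffle of all block indices."""
    order = continuous_order(rows, cols)
    random.Random(seed).shuffle(order)
    return order

def spiral_order(rows, cols):
    """Spiral selection: clockwise from the top-left block toward the centre."""
    top, bottom, left, right = 0, rows - 1, 0, cols - 1
    order = []
    while top <= bottom and left <= right:
        order += [(top, c) for c in range(left, right + 1)]
        order += [(r, right) for r in range(top + 1, bottom + 1)]
        if top < bottom:
            order += [(bottom, c) for c in range(right - 1, left - 1, -1)]
        if left < right:
            order += [(r, left) for r in range(bottom - 1, top, -1)]
        top, bottom, left, right = top + 1, bottom - 1, left + 1, right - 1
    return order

def group(order, granularity):
    """Pack consecutive selected blocks into groups of `granularity` blocks."""
    return [order[i:i + granularity] for i in range(0, len(order), granularity)]

def cyclic_allocate(groups, n_cores):
    """Cyclic scheduling: hand groups to cores in round-robin order."""
    cores = [[] for _ in range(n_cores)]
    for k, g in enumerate(groups):
        cores[k % n_cores].append(g)
    return cores

def load_balanced_allocate(groups, n_cores, cost):
    """Load-balancing scheduling: give each group to the currently least-loaded core."""
    cores = [[] for _ in range(n_cores)]
    heap = [(0, core) for core in range(n_cores)]  # (accumulated cost, core id)
    for g in groups:
        load, core = heapq.heappop(heap)
        cores[core].append(g)
        heapq.heappush(heap, (load + sum(cost(b) for b in g), core))
    return cores

print(spiral_order(3, 3))
# [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0), (1, 1)]
```

Separating "which order" from "which core" mirrors the abstract's point that a user only configures the scheduling mode and granularity: any selection function can be paired with either allocator.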

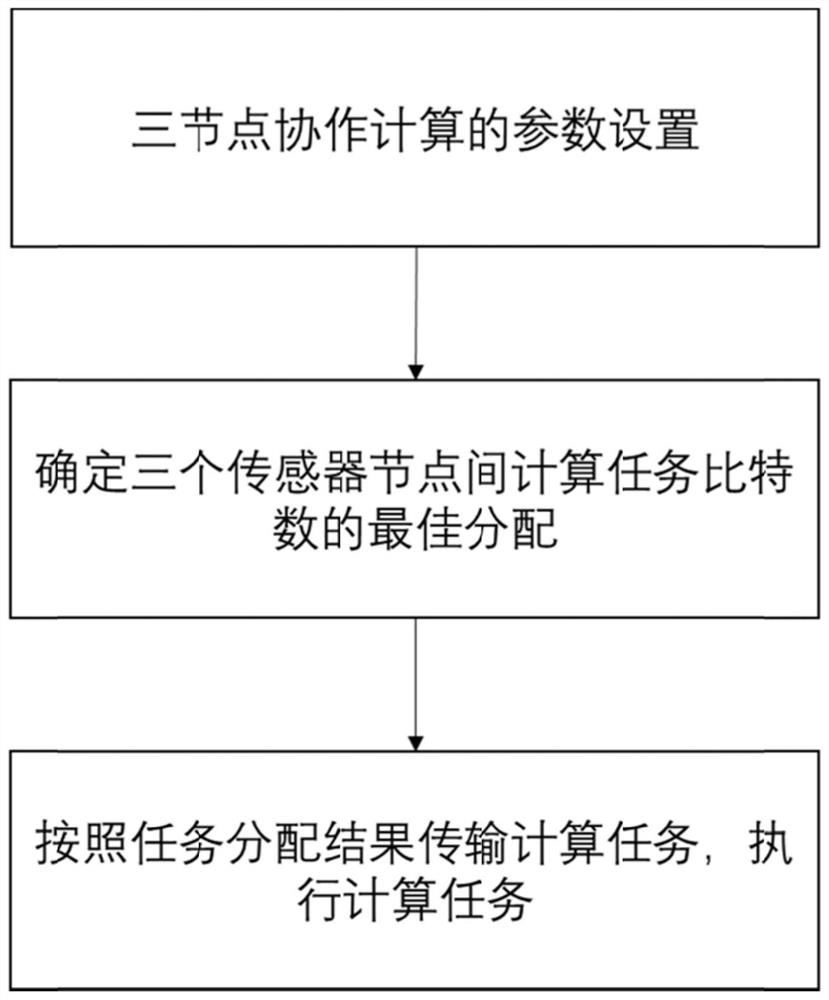

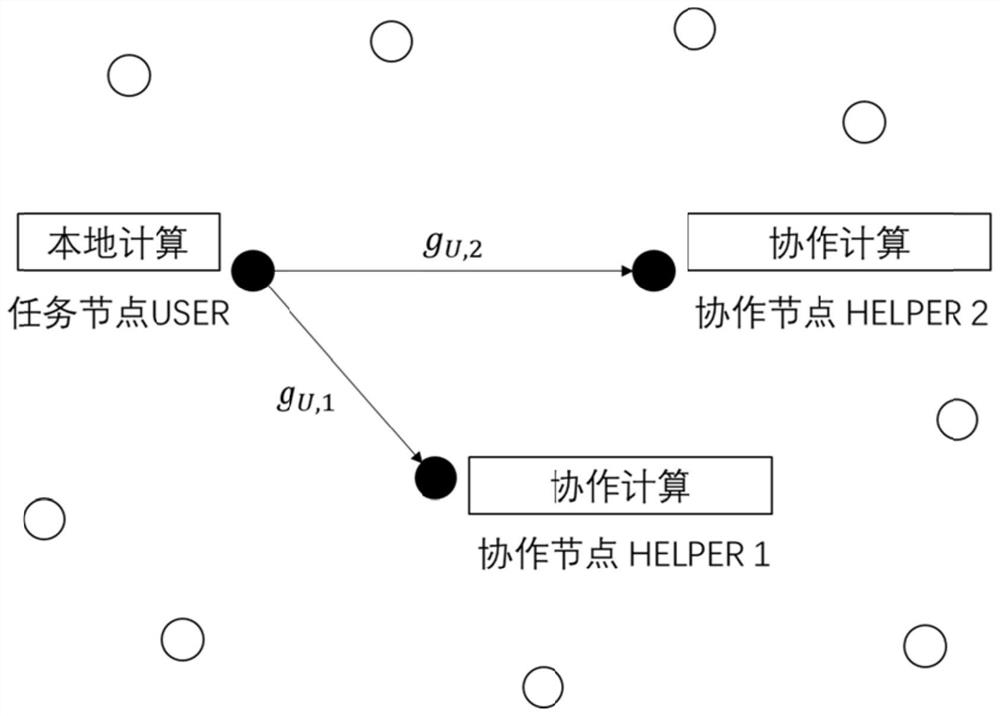

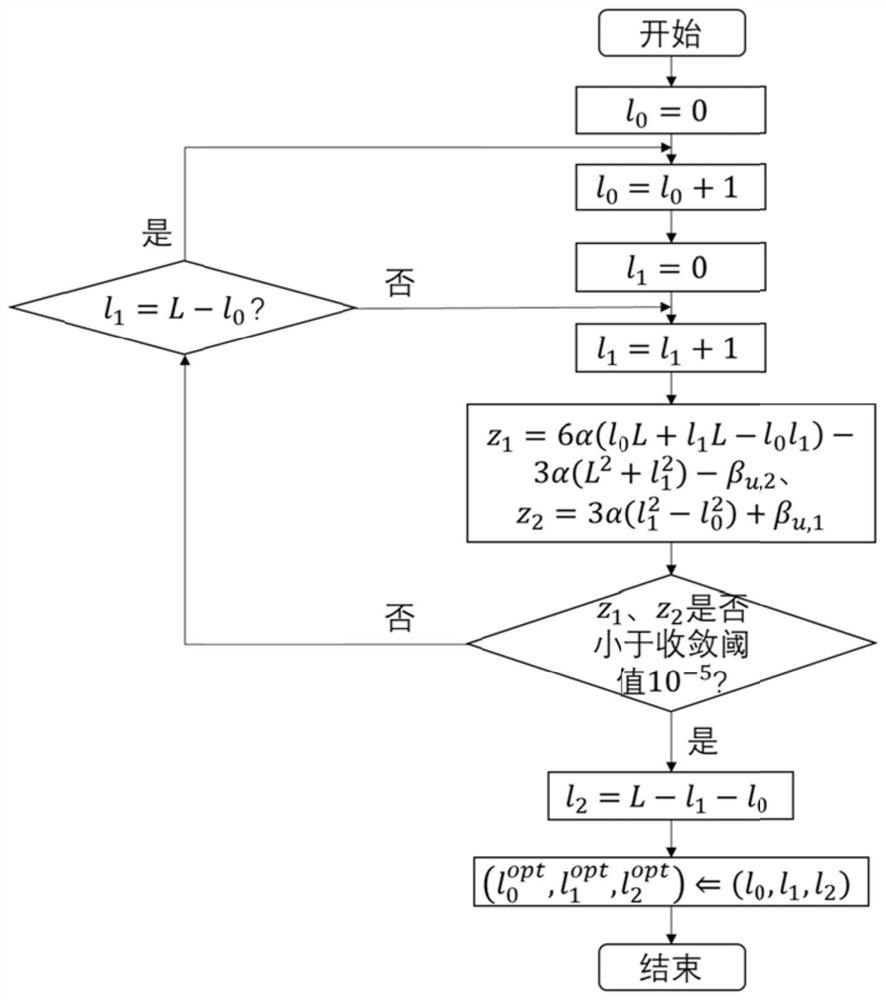

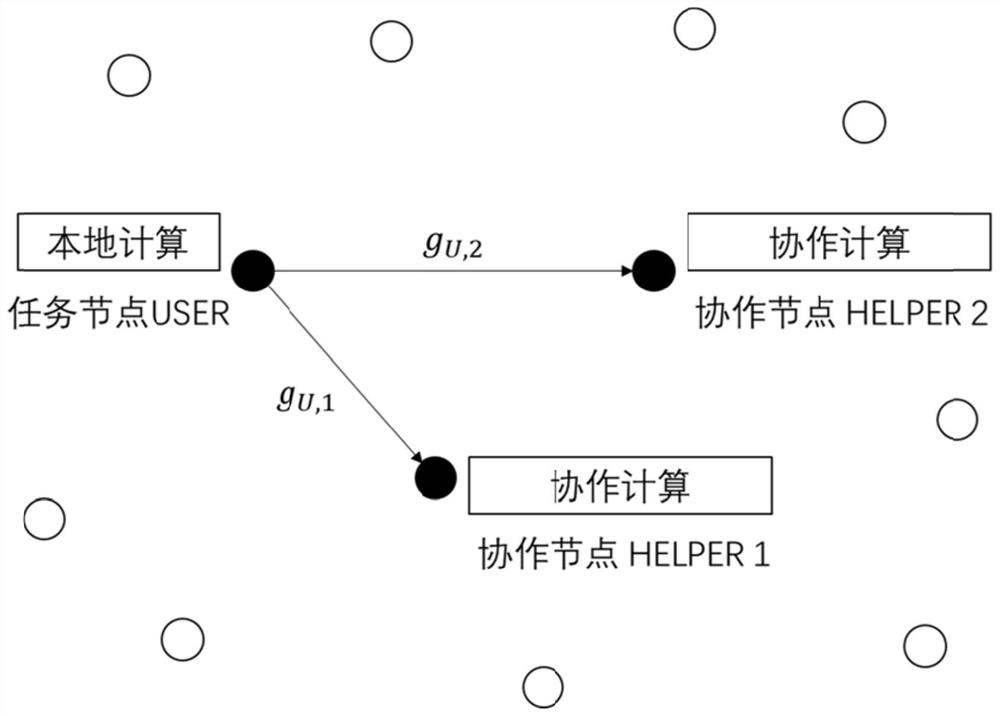

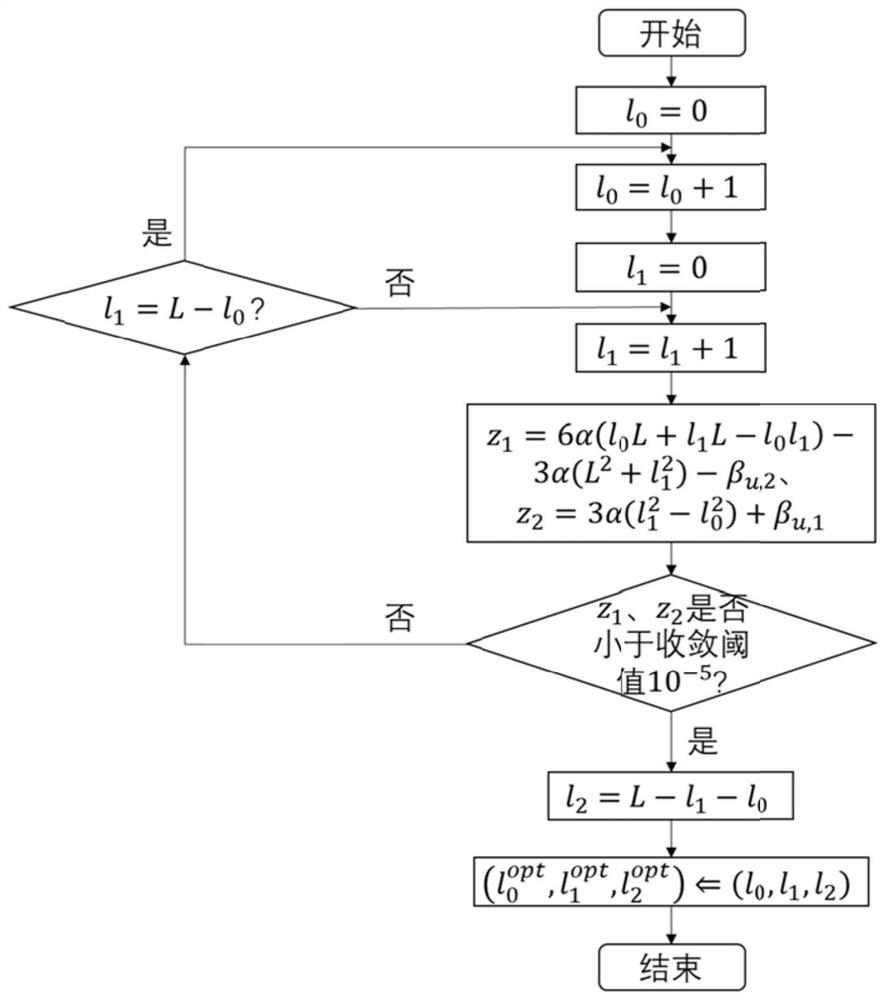

Computational task assignment method based on three-node cooperation in wireless sensor networks

Active · CN112954635B · Full use of computing power · Network traffic/resource management · Particular environment based services · Resource pool · Line sensor

The invention relates to a computing task allocation method based on three-node cooperation in a wireless sensor network, comprising: setting the parameters for three-node cooperative computing; determining the optimal allocation of the computing task's bits among the three sensor nodes; and transferring and executing the computing tasks according to the allocation result. Compared with the prior art, the invention completes computing tasks with lower energy consumption while satisfying the delay constraint, and it takes into account the channel conditions, the computing task size, and the delay constraints in the sensor network. The invention encourages individual sensor nodes to share resources through cooperative computing, building a virtual resource pool that makes full use of the nodes' computing power and mobilizes redundant resources in the network; treating the sensor nodes in the network as a unified whole, it designs and optimizes cooperative computing with respect to the overall computation and communication energy consumption.

Owner:ANHUI UNIVERSITY
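One way to make the "optimal allocation of task bits" step concrete is a brute-force search over bit splits. Everything in the model below is an assumption for illustration: node 0 is the source and computes its share locally, nodes 1 and 2 receive their shares over parallel links and then compute them, compute energy follows the common κ·C·l·f² CMOS model, and all constants (cycles per bit, κ, transmit power) are hypothetical.

```python
from dataclasses import dataclass

CYCLES_PER_BIT = 1000   # hypothetical CPU cycles needed per task bit
KAPPA = 1e-28           # hypothetical effective switched capacitance
TX_POWER_W = 0.1        # hypothetical radio transmit power of the source node

@dataclass
class Node:
    freq_hz: float          # CPU frequency
    rate_bps: float = 0.0   # link rate from the source node (unused for the source)

def time_and_energy(bits, node, is_source):
    """Delay and energy for one node to handle `bits` bits of the task."""
    compute_time = bits * CYCLES_PER_BIT / node.freq_hz
    compute_energy = KAPPA * CYCLES_PER_BIT * bits * node.freq_hz ** 2
    if is_source or bits == 0:
        return compute_time, compute_energy
    tx_time = bits / node.rate_bps  # ship the bits first, then compute them
    return tx_time + compute_time, compute_energy + TX_POWER_W * tx_time

def allocate(total_bits, nodes, deadline_s, step=1000):
    """Search splits (l0, l1, l2), with l0 and l1 in multiples of `step` and l2
    taking the remainder, for the lowest total energy whose slowest node still
    meets the deadline. Returns (split, energy), or None if nothing is feasible."""
    best = None
    for l0 in range(0, total_bits + 1, step):
        for l1 in range(0, total_bits - l0 + 1, step):
            split = (l0, l1, total_bits - l0 - l1)
            costs = [time_and_energy(b, n, k == 0)
                     for k, (b, n) in enumerate(zip(split, nodes))]
            if max(t for t, _ in costs) > deadline_s:
                continue
            energy = sum(e for _, e in costs)
            if best is None or energy < best[1]:
                best = (split, energy)
    return best

nodes = [Node(1e9), Node(5e8, 1e7), Node(5e8, 1e7)]
print(allocate(10_000, nodes, deadline_s=0.008)[0])  # (4000, 3000, 3000)
```

With these numbers the slower helpers are cheaper per bit, so the search pushes as much work to them as the deadline allows. Brute force is only practical because there are three nodes and a coarse step; the abstract does not say which optimization method the patent actually uses.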

Calculation task allocation method based on three-node cooperation in wireless sensor network

Active · CN112954635A · Full use of computing power · Network traffic/resource management · Particular environment based services · Resource pool · Line sensor

The invention relates to a calculation task allocation method based on three-node cooperation in a wireless sensor network. The method comprises the following steps: setting the parameters of three-node cooperative computing; determining the optimal allocation of the computing task's bit count among the three sensor nodes; and transmitting and executing the computing task according to the allocation result. Compared with the prior art, the method completes the computing task with low energy consumption while meeting the delay constraint, and it takes the channel conditions, the computing task size, and the delay constraint in the sensor network into account. Individual sensor nodes are encouraged to share resources through cooperative computing so as to build a virtual resource pool; the computing power of the sensor nodes is fully exploited, redundant resources in the network are mobilized, and the sensor nodes are treated as a unified whole, with cooperative computing designed and optimized with respect to the overall computation and communication energy consumption.

Owner:ANHUI UNIVERSITY

Computing device, integrated circuit chip, integrated circuit board, computing equipment and computing method

Pending · CN113934678A · Simple calculation · Full use of computing power · Digital data processing details · General purpose stored program computer · Engineering · Interface (computing)

The disclosure describes a computing device, an integrated circuit chip, an integrated circuit board, computing equipment, and a computing method. The computing device may be included in a combined processing device, which may also include an interface device and other processing devices. The computing device interacts with the other processing devices to jointly complete a computing operation specified by a user. The combined processing device may further include a storage device, which is connected to the computing device and the other processing devices and stores their data. According to the disclosed scheme, operations can be performed on at least two pieces of small-bit-width data that together represent a large-bit-width value, so that the processing capability of the processor is not limited by bit width.

Owner:CAMBRICON TECH CO LTD
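To illustrate how several small-bit-width pieces can stand in for one large-bit-width value, the sketch below adds two 64-bit unsigned integers held as four 16-bit limbs each, propagating the carry from limb to limb. The limb width, the function names, and the choice of addition are assumptions for illustration, not details from the disclosure.

```python
LIMB_BITS = 16                      # hypothetical native width of the processing unit
LIMB_MASK = (1 << LIMB_BITS) - 1

def to_limbs(value, n_limbs):
    """Represent an unsigned integer as n_limbs LIMB_BITS-wide pieces,
    least significant limb first."""
    if value < 0 or value >> (LIMB_BITS * n_limbs):
        raise ValueError("value does not fit in n_limbs limbs")
    return [(value >> (LIMB_BITS * i)) & LIMB_MASK for i in range(n_limbs)]

def from_limbs(limbs):
    """Reassemble the wide value from its limbs."""
    return sum(limb << (LIMB_BITS * i) for i, limb in enumerate(limbs))

def add_limbs(a, b):
    """Ripple-carry addition over equal-length limb lists.
    Each step only ever adds two LIMB_BITS-wide values plus a 1-bit carry.
    Returns the result limbs and the final carry-out."""
    out, carry = [], 0
    for x, y in zip(a, b):
        s = x + y + carry
        out.append(s & LIMB_MASK)
        carry = s >> LIMB_BITS
    return out, carry

limbs, carry = add_limbs(to_limbs(0xFFFFFFFFFFFFFFFF, 4), to_limbs(1, 4))
print(limbs, carry)  # [0, 0, 0, 0] 1
```

The carry-out signals overflow of the full 64-bit result, exactly as a native 64-bit adder's carry flag would.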

© 2025 PatSnap. All rights reserved.