Multi-modal massive-data-flow scheduling method under multi-core DSP

A scheduling method and data flow technology, applied in the fields of multi-program devices, resource allocation, and inter-program communication. It can solve problems such as multi-core load balancing and multi-modal scheduling that do not take the segmentation of massive data flows into account.

Embodiment Construction

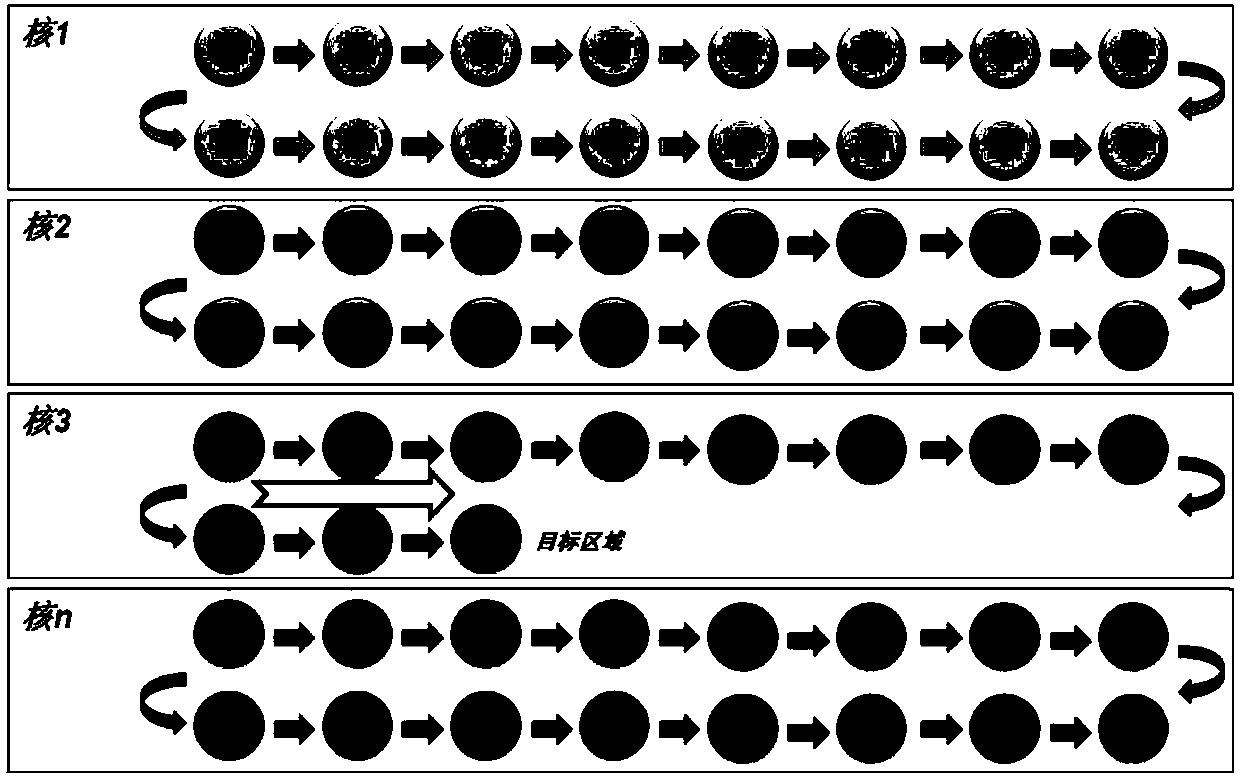

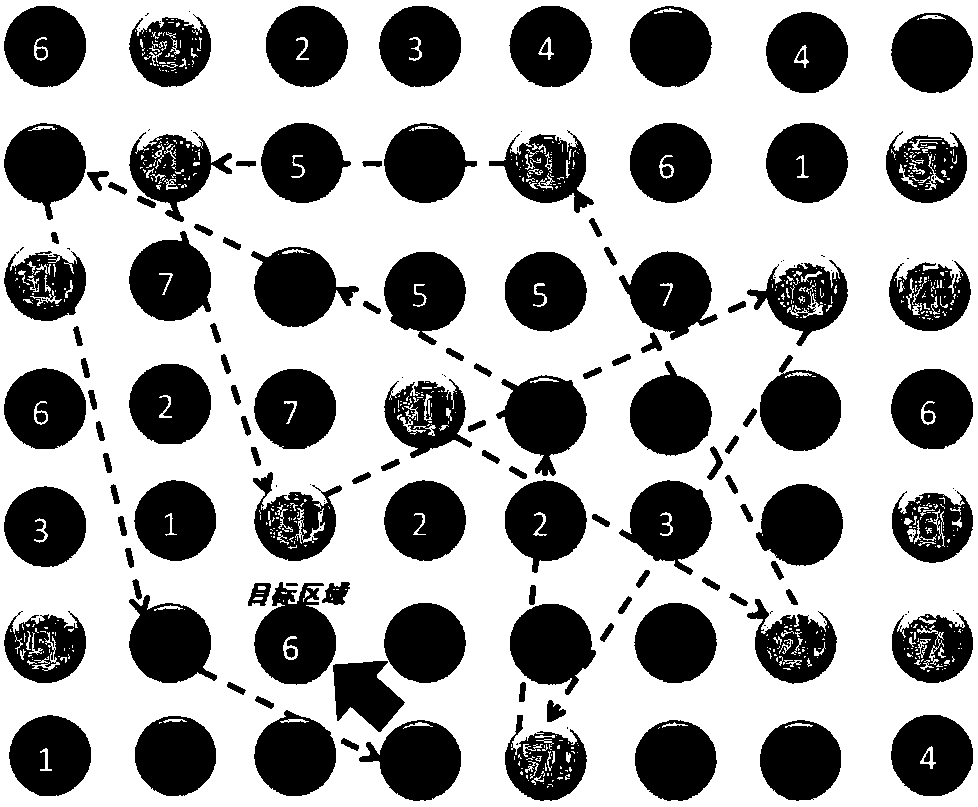

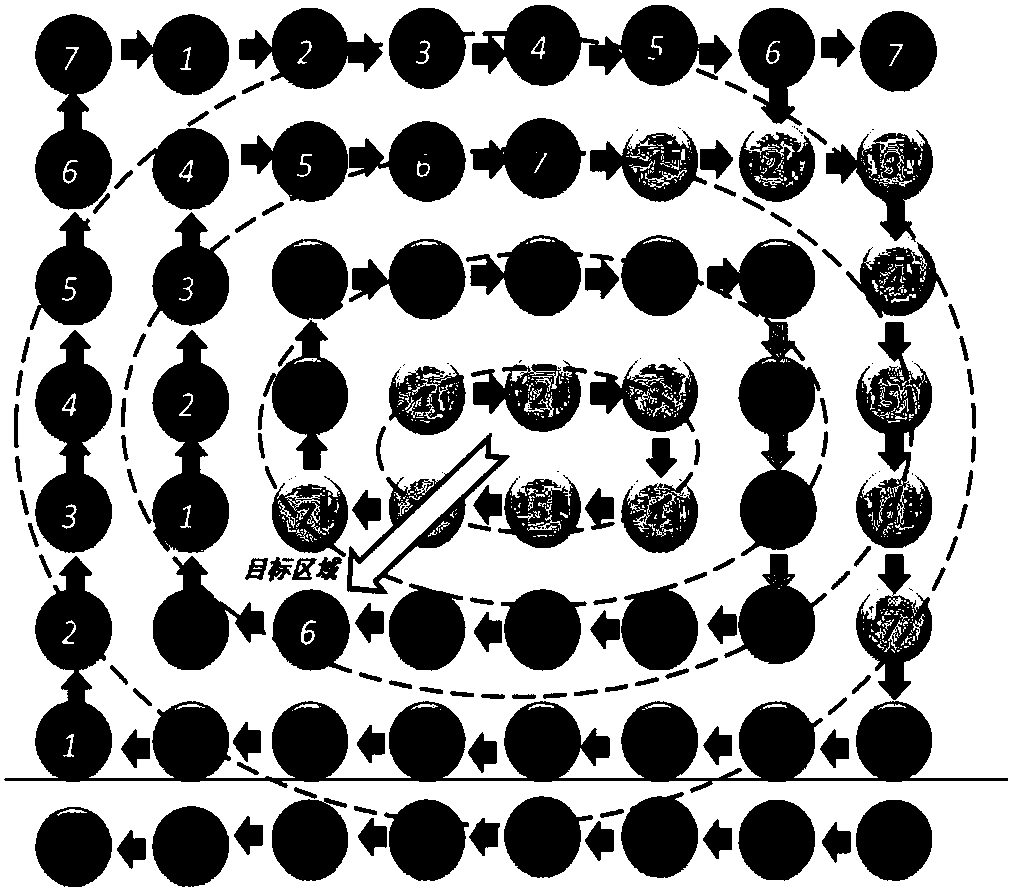

[0140] The present invention provides a multi-modal scheduling method for massive data streams on a multi-core DSP. The data block scheduling method is planned from four perspectives: load balancing, distribution granularity, data dimension, and processing sequence. Three data block selection methods, two data allocation methods, and one data block grouping method are proposed, together with a flexible, easy-to-use way of combining them.
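The excerpt names the categories of methods (selection, allocation, grouping) but not their concrete rules. As a rough illustration of what an allocation method and a grouping method can look like, the Python sketch below cuts a stream into data blocks and contrasts a static round-robin allocation with a load-balancing "least-loaded core first" allocation, plus fixed-size grouping to coarsen the distribution granularity. These three strategies are common stand-ins chosen here for illustration, not the patent's own methods, and all names (`DataBlock`, `split_stream`, `allocate_round_robin`, `allocate_least_loaded`, `group_blocks`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataBlock:
    block_id: int
    start: int   # offset of the block's first sample in the stream
    length: int  # number of samples in the block (used as its cost)


def split_stream(total_len: int, block_len: int) -> List[DataBlock]:
    """Cut a stream of total_len samples into blocks of at most block_len."""
    return [
        DataBlock(i, start, min(block_len, total_len - start))
        for i, start in enumerate(range(0, total_len, block_len))
    ]


def allocate_round_robin(blocks: List[DataBlock], n_cores: int) -> List[List[int]]:
    """Static allocation (illustrative): block i goes to core i mod n_cores."""
    plan: List[List[int]] = [[] for _ in range(n_cores)]
    for b in blocks:
        plan[b.block_id % n_cores].append(b.block_id)
    return plan


def allocate_least_loaded(blocks: List[DataBlock], n_cores: int) -> List[List[int]]:
    """Load-balancing allocation (illustrative): each block goes to the core with
    the smallest accumulated sample count so far; ties go to the lowest core index."""
    plan: List[List[int]] = [[] for _ in range(n_cores)]
    load = [0] * n_cores
    for b in blocks:
        core = min(range(n_cores), key=lambda c: (load[c], c))
        plan[core].append(b.block_id)
        load[core] += b.length
    return plan


def group_blocks(blocks: List[DataBlock], group_size: int) -> List[List[DataBlock]]:
    """Grouping (illustrative): bundle consecutive blocks so that one dispatch
    carries group_size blocks, i.e. a coarser distribution granularity."""
    return [blocks[i:i + group_size] for i in range(0, len(blocks), group_size)]
```

With uneven blocks of 8, 1, 1 and 1 samples on two cores, `allocate_round_robin` yields `[[0, 2], [1, 3]]` (9 vs. 2 samples), while `allocate_least_loaded` yields `[[0], [1, 2, 3]]` (8 vs. 3 samples), which shows why a load-aware allocation matters when block costs differ.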

[0141] Referring to Figure 7, the present invention is part of a parallel framework for massive data streams on a multi-core DSP and is mainly used for data block scheduling of massive data streams. It is divided into main control core parallel middleware and an acceleration core parallel support system. The main control core is responsible for creating the massive-data parallel scheduling environment, tasks, and data blocks, and for completing the scheduling and allocation of tasks and data blocks; the acceleration core is responsible for processi...
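The division of labour between the main control core and the acceleration cores can be pictured as a master/worker loop. The sketch below is an assumption-laden model, not the patent's middleware: Python threads stand in for acceleration cores, `queue.Queue` stands in for whatever inter-core channel (message queues or shared memory) a real DSP implementation would use, allocation is plain round-robin, and the names `master_core`, `accel_core` and the `kernel` callback are hypothetical.

```python
import queue
import threading
from typing import Callable, Dict, List, Optional, Tuple

Block = Tuple[int, List[float]]  # (block id, samples)


def accel_core(inbox: "queue.Queue[Optional[Block]]",
               results: Dict[int, float],
               lock: threading.Lock,
               kernel: Callable[[List[float]], float]) -> None:
    """Acceleration-core loop: process blocks until a None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:
            break
        block_id, samples = item
        value = kernel(samples)
        with lock:  # results dict is shared by all worker threads
            results[block_id] = value


def master_core(stream: List[float], block_len: int, n_cores: int,
                kernel: Callable[[List[float]], float]) -> Dict[int, float]:
    """Main-control-core role: create the environment (queues, workers), cut the
    stream into data blocks, dispatch them round-robin, then collect results."""
    inboxes: List["queue.Queue[Optional[Block]]"] = [queue.Queue() for _ in range(n_cores)]
    results: Dict[int, float] = {}
    lock = threading.Lock()
    workers = [threading.Thread(target=accel_core,
                                args=(inboxes[c], results, lock, kernel))
               for c in range(n_cores)]
    for w in workers:
        w.start()
    for block_id, start in enumerate(range(0, len(stream), block_len)):
        inboxes[block_id % n_cores].put((block_id, stream[start:start + block_len]))
    for inbox in inboxes:
        inbox.put(None)  # one stop sentinel per acceleration core
    for w in workers:
        w.join()
    return results
```

For example, `master_core([1.0] * 10, 3, 2, sum)` returns `{0: 3.0, 1: 3.0, 2: 3.0, 3: 1.0}`. Keying results by block id keeps the output independent of which core finished first; any of the selection or allocation strategies from [0140] could replace the round-robin dispatch line.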
