Intelligent self-following method, device, medium, and electronic equipment for a robot

The invention relates to robot intelligence and robot technology and is applied in cleaning equipment, machine parts, instruments, and similar fields. It addresses the poor robustness of cleaning robots, their susceptibility to day and night operating conditions, and their low tracking accuracy. The method achieves strong adaptability and anti-interference ability, improves tracking accuracy, and meets computing power requirements.


Embodiment Construction

[0048] It should be noted that, in the case of no conflict, the embodiments in the present application and the features in the embodiments can be combined with each other. The present invention will be described in detail below with reference to the accompanying drawings and examples.

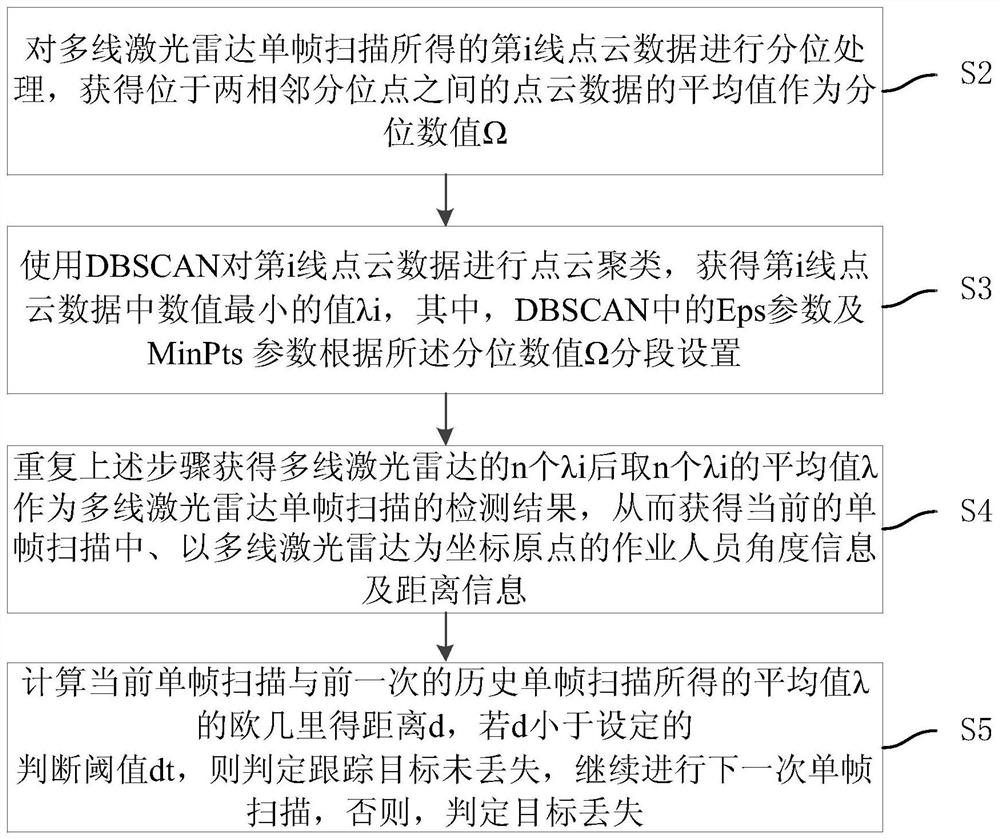

[0049] Referring to Figure 1, the preferred embodiment of the present invention provides an intelligent self-following method for a robot, comprising the following steps:

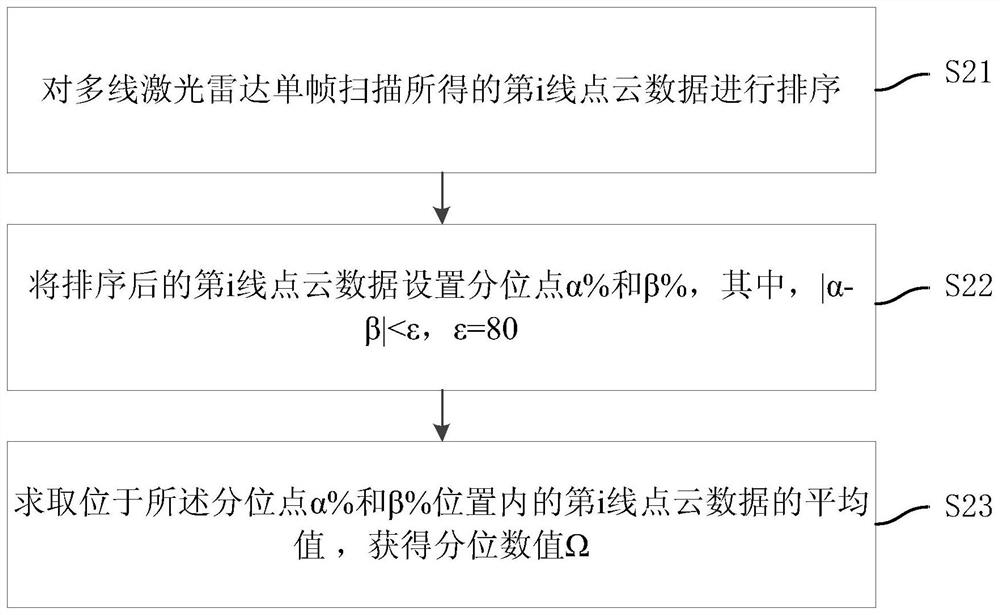

[0050] S2. Perform quantile processing on the i-th line of point cloud data obtained from a single-frame scan of the multi-line lidar, and take the average of the point cloud data lying between two adjacent quantile points as the quantile value Ω;
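Step S2 can be illustrated with a minimal sketch. It assumes that the point cloud data of one lidar line is represented by its range readings in metres and that the two adjacent quantile points are the 25% and 75% quantiles; the source states neither, so the function names `quantile_point` and `quantile_value` and the default quantile levels are hypothetical.

```python
import math


def quantile_point(sorted_vals, q):
    """Linearly interpolated q-quantile of an already sorted, non-empty list."""
    pos = q * (len(sorted_vals) - 1)
    lo = math.floor(pos)
    hi = math.ceil(pos)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac


def quantile_value(ranges, q_low=0.25, q_high=0.75):
    """Mean of the readings lying between two adjacent quantile points.

    ranges: range readings of the i-th lidar line (assumed representation).
    q_low, q_high: quantile levels; the defaults are an assumption.
    """
    # Drop invalid returns (inf / NaN) before computing quantiles.
    vals = sorted(r for r in ranges if math.isfinite(r))
    if not vals:
        raise ValueError("no finite range readings in this scan line")
    lo = quantile_point(vals, q_low)
    hi = quantile_point(vals, q_high)
    band = [v for v in vals if lo <= v <= hi]
    if not band:
        # Very short lines can leave the band empty; fall back to its midpoint.
        return (lo + hi) / 2
    return sum(band) / len(band)


if __name__ == "__main__":
    line_ranges = [1, 2, 3, 4, 5, 6, 7, 8, 9, float("inf")]
    print(quantile_value(line_ranges))
```

Averaging only the readings inside the quantile band keeps isolated near or far returns from dominating Ω, which is one plausible reason for preferring it to a plain mean.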

[0051] S3. Use DBSCAN to cluster the i-th line of point cloud data and obtain λi, the smallest value in the i-th line of point cloud data, wherein the Eps and MinPts parameters of DBSCAN are set piecewise according to the quantile value Ω;
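Step S3 can be sketched as follows, under stated assumptions. The Ω thresholds and the (Eps, MinPts) pairs in `pick_dbscan_params` are illustrative placeholders, not values from the source. Points are assumed to be 2D (x, y) coordinates in the sensor frame, and λi is interpreted here as the smallest range among points that DBSCAN assigns to a cluster, with noise excluded; the source does not spell out either choice. The clustering is a plain O(n²) DBSCAN written out for self-containment, not the authors' implementation.

```python
import math


def pick_dbscan_params(omega):
    """Piecewise (eps, min_pts) chosen from omega; all values are hypothetical."""
    if omega < 2.0:
        return 0.10, 5   # near targets: dense returns, tight neighbourhood
    if omega < 5.0:
        return 0.25, 4
    return 0.50, 3       # far targets: sparse returns, looser neighbourhood


def dbscan(points, eps, min_pts):
    """Plain O(n^2) DBSCAN. Returns one label per point; -1 marks noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Includes point i itself, as in the usual MinPts convention.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nb = neighbors(j)
            if len(nb) >= min_pts:
                queue.extend(nb)  # j is a core point, keep expanding
    return labels


def nearest_clustered_range(points, omega):
    """Smallest range among clustered (non-noise) points, or None if none."""
    eps, min_pts = pick_dbscan_params(omega)
    labels = dbscan(points, eps, min_pts)
    ranges = [math.hypot(x, y) for (x, y), lab in zip(points, labels) if lab != -1]
    return min(ranges) if ranges else None


if __name__ == "__main__":
    pts = [(0.3, 0.0),  # isolated return, expected to be labelled noise
           (1.0, 0.0), (1.02, 0.0), (1.04, 0.0), (1.06, 0.0), (1.08, 0.0)]
    print(nearest_clustered_range(pts, omega=1.0))
```

Excluding noise before taking the minimum means an isolated spurious return close to the sensor does not become λi, which is the main reason to cluster before picking the nearest value.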

[0052] ...

