Network live video feature extraction method in complex scene based on joint attention ResNeSt

A network live-broadcast video feature extraction technology, applicable to image communication, selective content distribution, electrical components, and similar fields. It addresses the difficulty of effectively learning spatio-temporal context information, which degrades accuracy. Its stated effects are saving computing resources, enhancing effective feature extraction, and achieving good discrimination.

Embodiment Construction

[0030] Based on the above description, a specific implementation is given below; the protection scope of this patent is not limited to this implementation. The concrete workflow of the present invention is as follows:

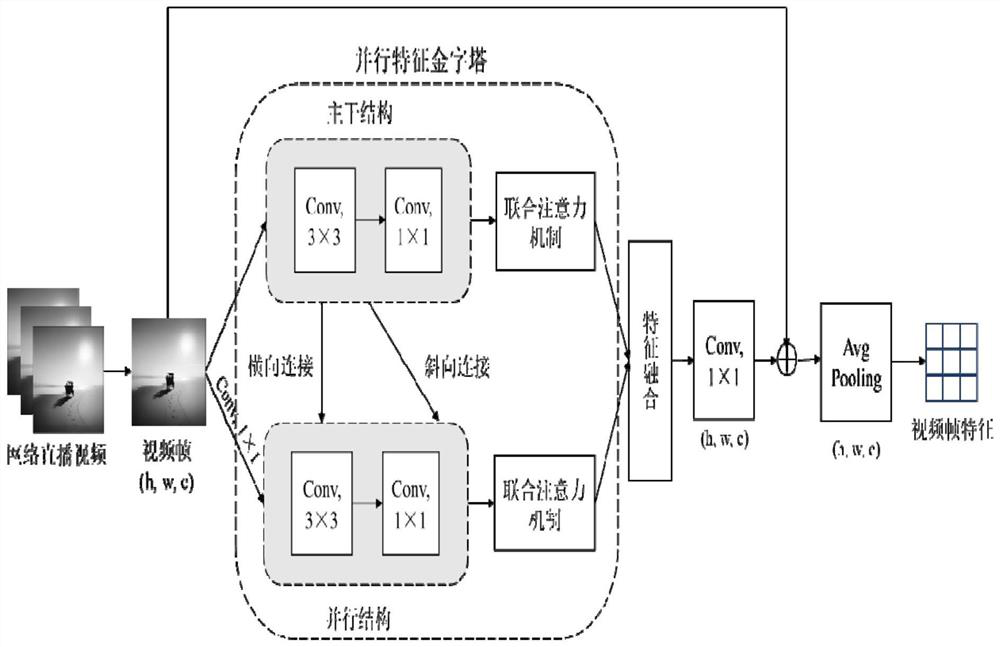

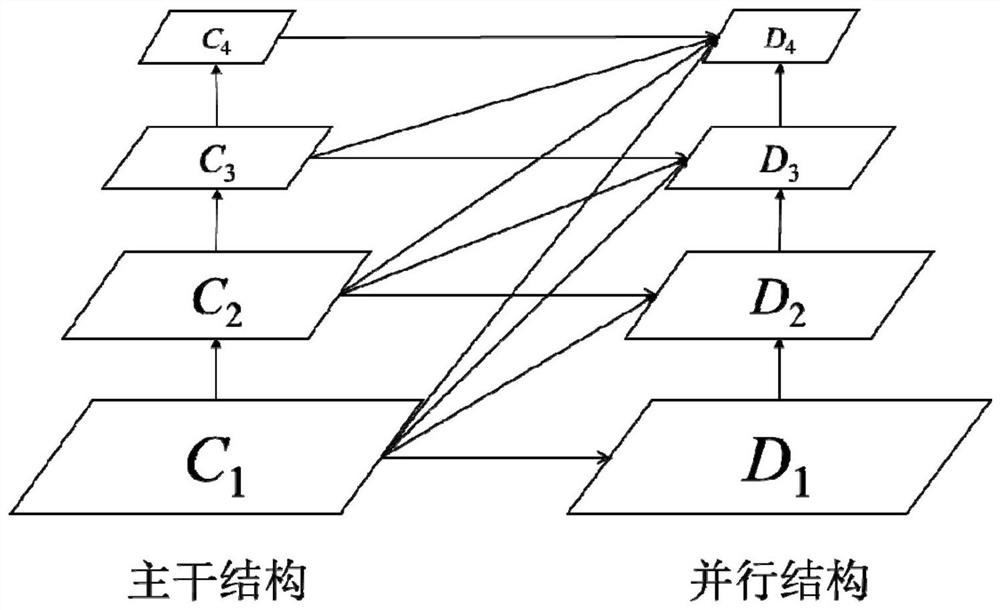

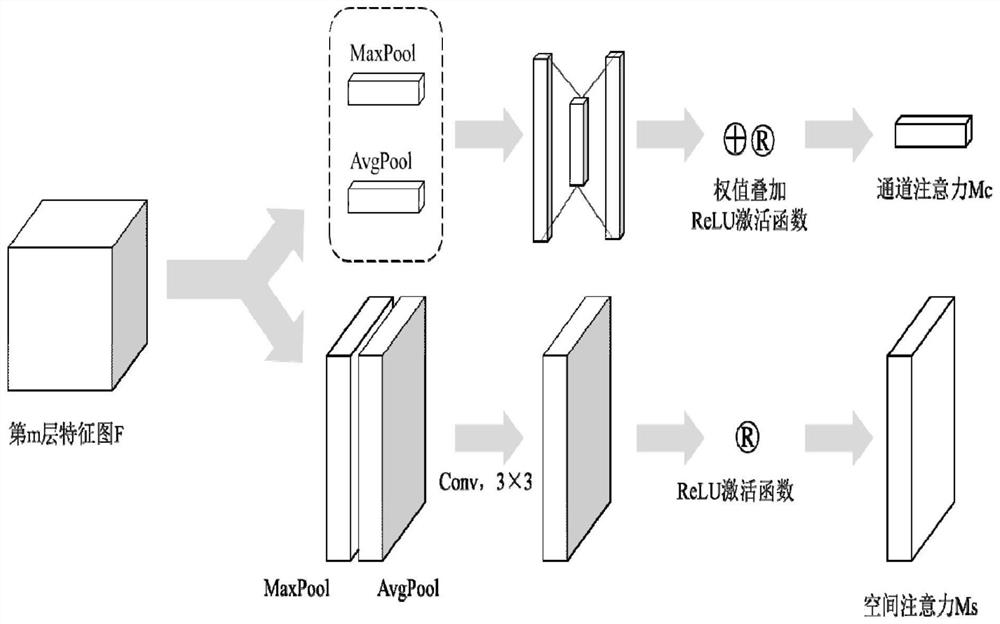

[0031] The video data used in the present invention comes from multiple online video platforms, and key frames are extracted from the downloaded live videos. In the experiments, key frames were sampled at 5 fps, only a segment of 16 consecutive frames was used to represent each video, and Resize preprocessing produced video frames of 224×224 pixels. The video frames are fed into a feature pyramid and down-sampled to obtain feature maps at different scales; the joint attention mechanism then computes the attention weight distribution over the multi-scale features; finally, the ResNeSt module is built by combining convolution and pooling operations, and the ResNeSt50 fe...
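As a worked illustration of the preprocessing numbers in [0031]: at 5 fps, a 16-frame segment covers 16 / 5 = 3.2 s of video. The Python sketch below assumes the video is already decoded into a NumPy array of shape (N, H, W, C) with a known source frame rate, and it treats "key frames" simply as frames sampled uniformly at 5 fps. It keeps 16 consecutive samples and resizes each to 224×224. The centred clip position, the nearest-neighbour interpolation, and the function names (`sample_indices`, `select_clip`, `resize_nearest`, `build_clip`) are hypothetical choices for illustration; the patent text does not specify them.

```python
import numpy as np

# Values stated in [0031]; everything else below is an illustrative assumption.
TARGET_FPS = 5.0   # key-frame sampling rate
CLIP_LEN = 16      # consecutive sampled frames that represent one video
OUT_SIZE = 224     # frame height and width after Resize preprocessing


def sample_indices(num_frames: int, source_fps: float,
                   target_fps: float = TARGET_FPS) -> list[int]:
    """Source-frame indices approximating uniform sampling at target_fps (assumed scheme)."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    step = source_fps / target_fps  # source frames between consecutive samples
    indices = []
    k = 0
    while (idx := int(k * step)) < num_frames:
        indices.append(idx)
        k += 1
    return indices


def select_clip(indices: list[int], clip_len: int = CLIP_LEN) -> list[int]:
    """Keep clip_len consecutive sampled indices from the middle (hypothetical choice)."""
    if len(indices) < clip_len:
        raise ValueError(f"need at least {clip_len} sampled frames, got {len(indices)}")
    start = (len(indices) - clip_len) // 2
    return indices[start:start + clip_len]


def resize_nearest(frame: np.ndarray, size: int = OUT_SIZE) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) frame to (size, size, C)."""
    h, w = frame.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return frame[rows[:, None], cols[None, :]]


def build_clip(video: np.ndarray, source_fps: float) -> np.ndarray:
    """Turn an (N, H, W, C) decoded video into a (CLIP_LEN, OUT_SIZE, OUT_SIZE, C) clip."""
    idx = select_clip(sample_indices(len(video), source_fps))
    return np.stack([resize_nearest(video[i]) for i in idx])


if __name__ == "__main__":
    video = np.zeros((300, 36, 64, 3), dtype=np.uint8)  # dummy 10 s of 30 fps video
    clip = build_clip(video, source_fps=30.0)
    print(clip.shape)  # (16, 224, 224, 3)
```

For a 30 fps source, every sixth frame is kept (indices 0, 6, 12, ...), so a 10 s video yields 50 samples, of which the middle 16 form the clip. A real pipeline would more likely decode and resize with a video or image library and use bilinear interpolation; plain NumPy indexing is used here only to keep the sketch dependency-light.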
