Industrial man-machine interaction system and method based on vision and inertial navigation positioning

A technology in the fields of inertial positioning and human-machine interaction, applied in manipulators, manufacturing tools, program-controlled manipulators, etc. It can solve problems such as the inability to interact with humans and move around with people, complex image processing, and space constraints.


Embodiment Construction

[0055] The present invention will be described in detail below in conjunction with the accompanying drawings.

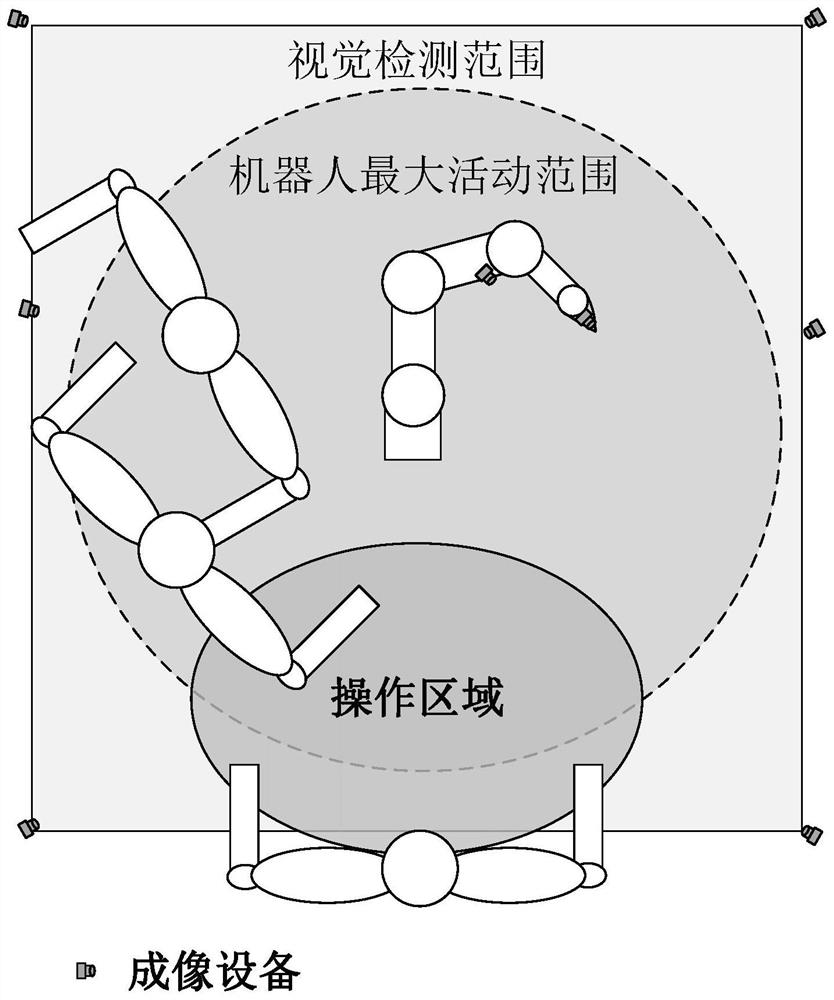

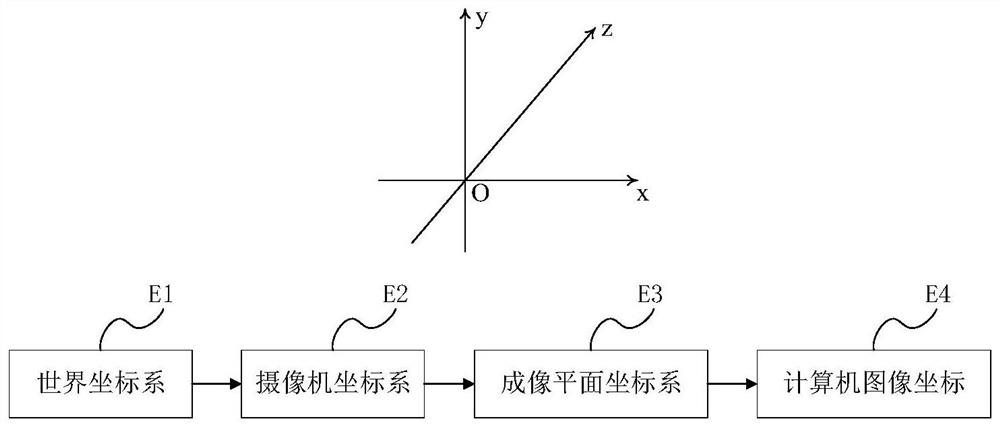

[0056] The industrial human-computer interaction system based on vision and inertial navigation positioning includes the controlled equipment, a scene image acquisition system, and auxiliary positioning equipment worn on the operator's arm, palm, and fingertips.
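The three components in [0056] can be pictured as a small data model. The sketch below is hypothetical: the class names, camera and equipment identifiers, and the `fuse` method are illustrative assumptions, not taken from the patent. Because the system relies on both vision and inertial positioning, `fuse` also shows one generic way the two sources could be combined, a complementary filter that dead-reckons each worn device with an inertial displacement (assumed to be integrated from the IMU upstream) and blends the result with a camera-based position fix. This is a swapped-in textbook technique, not the patented method.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Wear sites named in paragraph [0056].
WEAR_SITES = ("arm", "palm", "fingertip")


@dataclass
class AuxiliaryPositioningDevice:
    """Hypothetical model of one inertial device worn by the operator."""
    site: str
    # Latest fused position in the workcell frame (units assumed to be metres).
    position: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class InteractionSystem:
    """Hypothetical container for the three components listed in [0056]."""
    controlled_equipment: str    # hypothetical identifier of the robotic arm
    camera_ids: Tuple[str, ...]  # hypothetical scene-camera identifiers
    devices: Dict[str, AuxiliaryPositioningDevice] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Create one wearable device per wear site unless one was supplied.
        for site in WEAR_SITES:
            self.devices.setdefault(site, AuxiliaryPositioningDevice(site))

    def fuse(self, site: str, inertial_delta: Vec3, vision_fix: Vec3,
             alpha: float = 0.9) -> Vec3:
        """Complementary-filter update for one wear site (assumed technique).

        The previous estimate is dead-reckoned forward by the displacement
        integrated from the inertial sensor, then blended with the camera-based
        position fix: `alpha` weights the inertial prediction and
        `1 - alpha` weights the vision measurement.
        """
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha must lie in [0, 1]")
        device = self.devices[site]
        predicted = tuple(p + d for p, d in zip(device.position, inertial_delta))
        fused = tuple(alpha * p + (1.0 - alpha) * v
                      for p, v in zip(predicted, vision_fix))
        device.position = fused
        return fused


system = InteractionSystem("robot_arm_1", ("cam_0", "cam_1"))
print(system.fuse("palm", (0.5, 0.0, 0.0), (1.0, 0.0, 0.0), alpha=0.5))
# → (0.75, 0.0, 0.0)
```

A larger `alpha` trusts the smooth, high-rate inertial prediction more, while a smaller one lets the camera fix correct inertial drift faster; the right balance would depend on the actual sensors and is not specified in the source.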

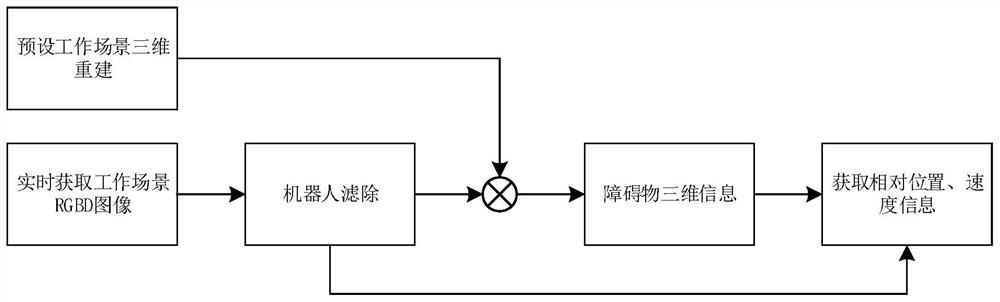

[0057] In this system, human-computer interaction scenes are divided into two categories: the non-interactive workspace and the interactive workspace. When working in the interactive workspace, the operator comes into direct or indirect contact with the robotic arm. The main threat to the operator's safety during human-computer interaction is a collision between the operator and the controlled equipment, so the system's main task during operation is to predict impending collisions. For these two different scenarios, this system divides the problem of ensuring human-machine safet...
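The workspace split and collision prediction described in [0057] can be illustrated with a minimal sketch. Because the source text is truncated before it explains how the two scenarios are handled, everything below is an assumption: the interactive workspace is modelled as a sphere around the robot base, each tracked point (for example a fingertip or a point on the robotic arm) is assumed to move at constant velocity over a short horizon, and the function names and the `margin`/`horizon` parameters are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
# (position, velocity) of one tracked point, e.g. a fingertip or a robot link point.
TrackedPoint = Tuple[Vec3, Vec3]


def classify_workspace(point: Vec3, robot_base: Vec3,
                       interactive_radius: float) -> str:
    """Assumption: the interactive workspace is a sphere around the robot base."""
    if math.dist(point, robot_base) <= interactive_radius:
        return "interactive"
    return "non-interactive"


def time_to_collision(p_op: Vec3, v_op: Vec3, p_rob: Vec3, v_rob: Vec3,
                      margin: float) -> float:
    """Earliest time at which two constant-velocity points come within `margin`.

    Solves |r + w t| = margin for the smallest t >= 0, where r and w are the
    relative position and velocity. Returns 0.0 if the points are already
    within the margin and math.inf if they never get that close.
    """
    r = [a - b for a, b in zip(p_op, p_rob)]
    w = [a - b for a, b in zip(v_op, v_rob)]
    c = sum(x * x for x in r) - margin * margin
    if c <= 0.0:
        return 0.0                      # already inside the safety margin
    a = sum(x * x for x in w)
    b = 2.0 * sum(x * y for x, y in zip(r, w))
    if a == 0.0 or b >= 0.0:
        return math.inf                 # no relative motion, or separating
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return math.inf                 # closest approach stays outside margin
    return (-b - math.sqrt(disc)) / (2.0 * a)


def predict_collision(operator: Sequence[TrackedPoint],
                      robot: Sequence[TrackedPoint],
                      margin: float, horizon: float) -> bool:
    """True if any operator/robot point pair enters the margin within `horizon` s."""
    return any(
        time_to_collision(p_op, v_op, p_rob, v_rob, margin) <= horizon
        for p_op, v_op in operator
        for p_rob, v_rob in robot
    )
```

For example, a stationary fingertip 1 m from a robot point that approaches at 0.5 m/s reaches a 0.25 m margin after (1 - 0.25) / 0.5 = 1.5 s, so `predict_collision` would flag it for a 2 s horizon but not for a 1 s horizon. Solving the quadratic exactly, rather than dividing distance by radial speed, avoids false alarms when a point moves tangentially past the robot.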
