Man-machine interaction method and man-machine interaction device

A technology relating to human-computer interaction and gesture actions, applied in user/computer interaction input/output, computer components, mechanical mode conversion, etc. Effect: privacy.

Examples

Embodiment 1

[0112] This embodiment relates to a method for controlling a vehicle through mid-air gesture actions.

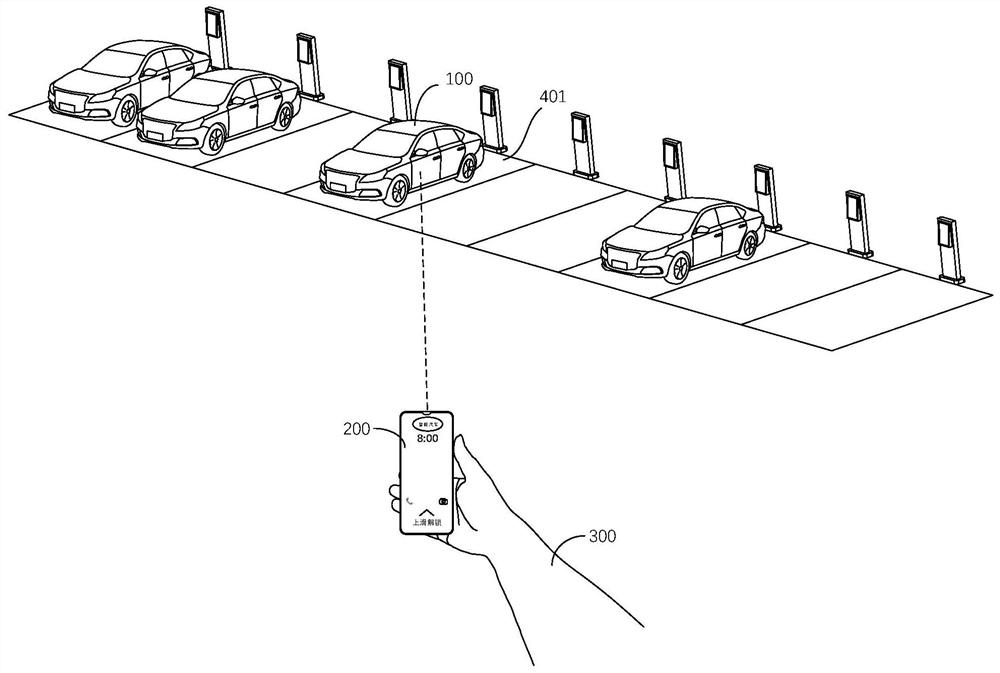

[0113] First, a general description of the interaction scene of this embodiment is given with reference to Figure 1.

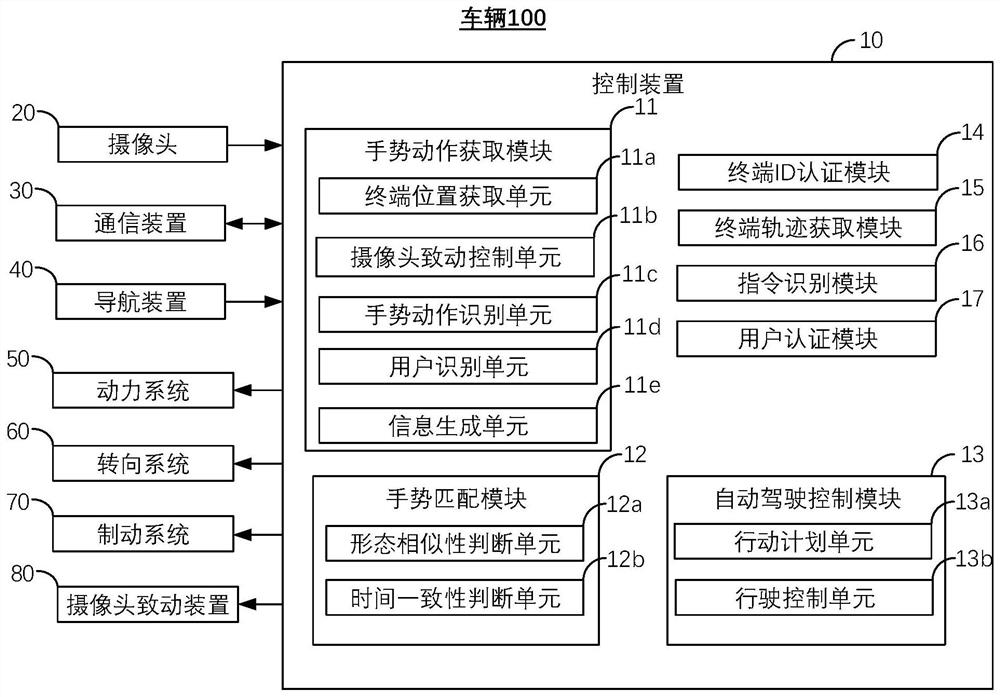

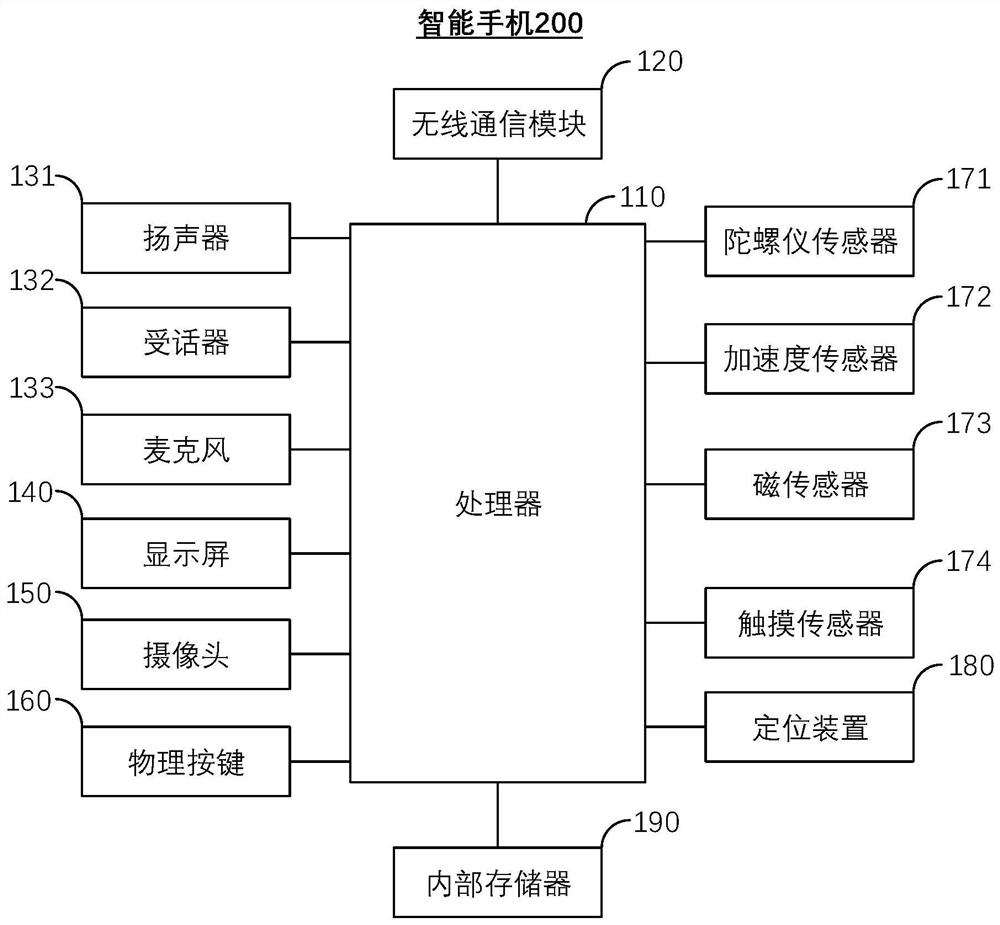

[0114] As shown in Figure 1, in this embodiment the user 300 is an example of the person in the human-computer interaction, the vehicle 100 is an example of the target device, and the smartphone 200 is an example of the mobile terminal. Specifically, the vehicle 100 is parked in parking space 601 of a parking lot, and the user 300 intends to control the vehicle 100 through mid-air gestures. The smartphone 200 and the vehicle 100 initiate a Bluetooth or UWB (ultra-wideband) connection, and after the vehicle 100 successfully authenticates the ID (identification) of the smartphone 200, the two establish a connection. Afterwards, the user 300 performs a predetermined operation on the smartphone 200. The predetermined operation indicat...
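The connection step in [0114] (authenticate the phone's ID first, connect only on success) can be illustrated with a minimal sketch, assuming the vehicle keeps a set of terminal IDs it has been bound to. The class `Vehicle`, the method `request_connection`, the `authorized_ids` set, and the link names are hypothetical. The excerpt does not say how the ID is actually authenticated, so the set-membership check below only stands in for whatever mechanism (for example, a cryptographic challenge-response) a real system would use.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical link identifiers; the text names Bluetooth and UWB as the options.
SUPPORTED_LINKS = {"bluetooth", "uwb"}


@dataclass
class Vehicle:
    # Hypothetical list of terminal IDs this vehicle accepts (assumption).
    authorized_ids: Set[str]
    connected_id: Optional[str] = None

    def request_connection(self, terminal_id: str, link: str) -> bool:
        """Establish a connection only after the terminal's ID is authenticated."""
        if link not in SUPPORTED_LINKS:
            # Unknown transport: refuse without touching the connection state.
            return False
        if terminal_id not in self.authorized_ids:
            # Placeholder authentication failed: no connection is established.
            return False
        # Authentication succeeded: record the connected terminal.
        self.connected_id = terminal_id
        return True


if __name__ == "__main__":
    car = Vehicle(authorized_ids={"phone-200"})
    print(car.request_connection("phone-999", "bluetooth"))  # unknown ID, refused
    print(car.request_connection("phone-200", "uwb"))        # known ID, accepted
```

Keeping authentication as a gate in front of the state change means a failed check leaves `connected_id` untouched, so no gesture commands could be routed to an unauthenticated phone.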

Embodiment 2

[0230] Embodiment 2 of the present application will be described below.

[0231] This embodiment relates to a method for summoning a vehicle through a user's gesture action.

[0232] Specifically, in this embodiment, referring to Figure 9, the user 301 operates the taxi-hailing software on the smartphone 201 to reserve an unmanned taxi (Robotaxi) through the cloud server 400. The smartphone 201 sends its location information to the cloud server 400 through the communication network. After dispatch processing by the cloud server 400, the vehicle 101 is selected as the unmanned taxi, the location information of the smartphone 201 is sent to the vehicle 101 through the communication network, and the vehicle 101 travels toward the user 301 according to that location information. On arriving near the user 301 (for example, within 100 meters or a few tens of meters), the vehicle 101 needs to know the precise location of the user 301 in order to provide more detailed se...
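The hand-off described in [0232], from driving toward the phone's reported position to seeking the user's precise location once nearby, can be sketched as a distance-threshold check. This is a minimal sketch assuming latitude/longitude fixes and a spherical-Earth (haversine) distance; the function names, the phase labels, and the 100 m default (taken from the text's example) are illustrative only, and the excerpt does not specify how the vehicle measures its distance to the user.

```python
import math

EARTH_RADIUS_M = 6_371_000.0   # mean Earth radius in metres
NEAR_USER_THRESHOLD_M = 100.0  # example radius from the text ("for example, 100 meters")


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points (spherical approximation)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def next_phase(vehicle_pos, phone_pos, threshold_m: float = NEAR_USER_THRESHOLD_M) -> str:
    """Hypothetical phase switch: keep navigating until within threshold_m of the phone's fix.

    vehicle_pos and phone_pos are (latitude, longitude) tuples in degrees.
    """
    distance = haversine_m(*vehicle_pos, *phone_pos)
    return "locate_user" if distance <= threshold_m else "navigate"


if __name__ == "__main__":
    phone = (0.0, 0.0)
    print(next_phase((0.01, 0.0), phone))    # about 1.1 km away: keep navigating
    print(next_phase((0.0005, 0.0), phone))  # about 56 m away: start locating the user
```

The threshold only decides when the vehicle switches from coarse, phone-reported positioning to a finer way of finding the user; it does not by itself determine where the user is standing.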

Embodiment 3

[0304] This embodiment relates to a method for a user to interact with a meal delivery robot.

[0305] Restaurants increasingly use food delivery robots to serve food. In this setting, the position of a specific dining table generally has to be preset so that the robot can deliver the food accurately, which prevents customers from choosing their seats freely or changing seats after choosing one.

[0306] In addition, multiple customers at the same table sometimes order separately, or some tables are long rows of tables (common in fast food restaurants). In such cases, the robot cannot accurately determine which customer is the correct delivery target and cannot provide more detailed services (such as facing the customer from the best angle). If customers are asked to wave, for example during rush hour, several people may be waving at once, which confuses the robot.

[0307] To this end, this embodiment provides a method for ...
