Distributed deep learning method based on pipeline ring parameter communication

A parameter communication technology for deep learning, applied in the field of distributed deep learning. It addresses the low training speed and long computing time of cluster training, and achieves shorter communication time, reduced communication volume, and avoidance of communication congestion.

Embodiment 1

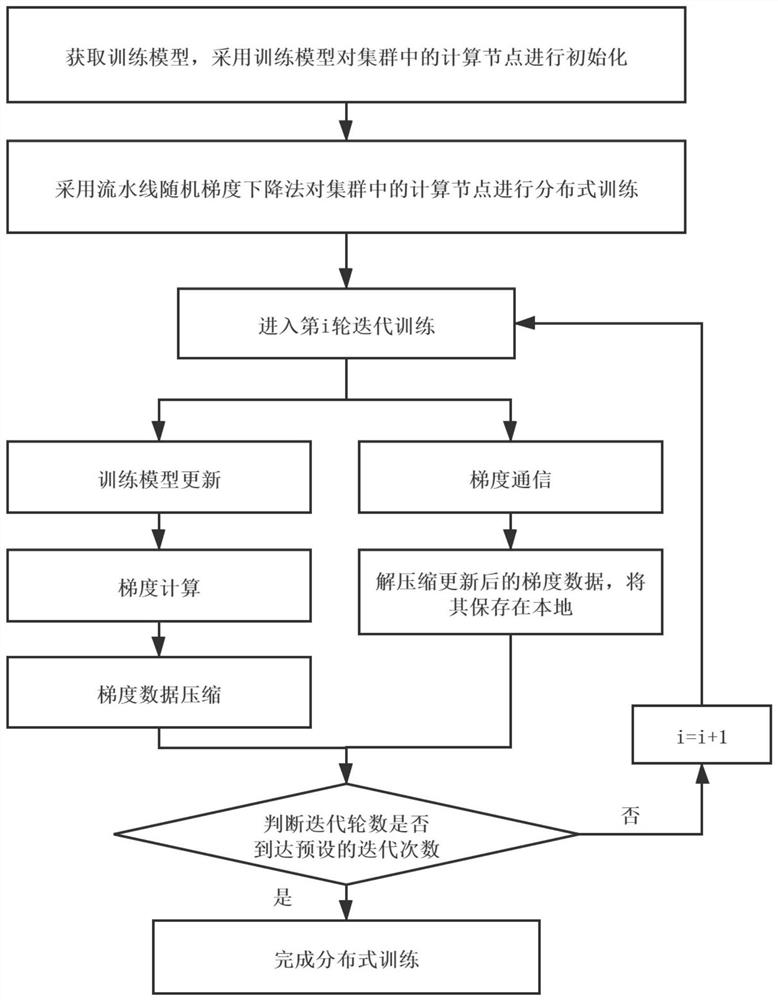

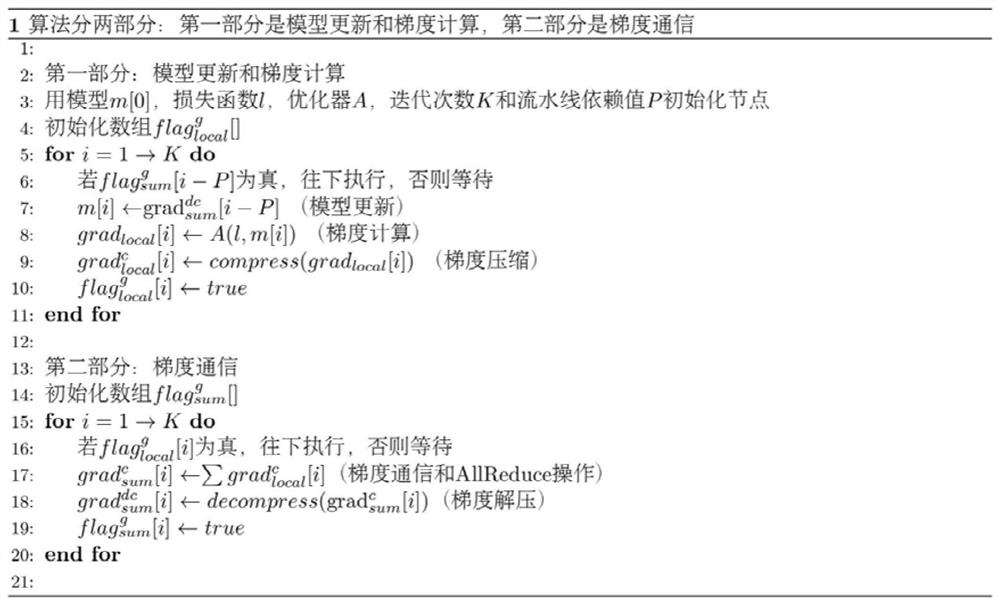

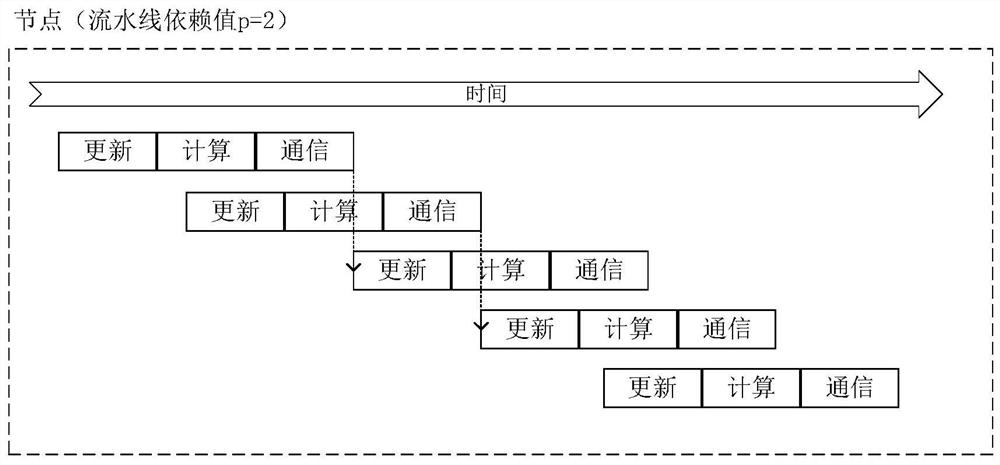

[0036] This embodiment proposes a distributed deep learning method based on pipeline ring parameter communication. Figures 1-2 show the flow chart of the method.

[0037] The method proposed in this embodiment includes the following steps:

[0038] S1: Obtain a training model, and use the training model to initialize computing nodes in the cluster.

[0039] Before training starts, the locally stored training model is used to initialize the computing nodes in the cluster, and the same training-related parameters are defined for every node: loss function l, optimizer A, iteration number K, and pipeline dependency value P. Two tag arrays and a model state storage array m are defined for each compute node in the cluster, where the tag array Flag corresponding to whether the loc...
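The initialization in step S1 can be sketched roughly as follows. This is only an illustrative sketch, not the patented implementation: the names `NodeState`, `init_cluster`, `flag_a`, and `flag_b` are hypothetical, the tag arrays' real names and meanings are not recoverable from the text above, and sizing the arrays by K is an assumption made purely for illustration.

```python
from copy import deepcopy
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class NodeState:
    """Per-node training state (hypothetical layout, not taken from the patent)."""
    weights: List[float]                      # copy of the shared training model
    loss_fn: Callable[[float, float], float]  # loss function l
    optimizer: str                            # optimizer A, represented by name only
    K: int                                    # iteration number
    P: int                                    # pipeline dependency value
    # Two tag arrays; names and semantics are placeholders (assumption).
    flag_a: List[bool] = field(default_factory=list)
    flag_b: List[bool] = field(default_factory=list)
    # Model state storage array m; its length is an assumption.
    m: List[Optional[List[float]]] = field(default_factory=list)


def squared_error(pred: float, target: float) -> float:
    """Stand-in loss function used only for this sketch."""
    return (pred - target) ** 2


def init_cluster(model_weights: List[float], num_nodes: int, K: int, P: int,
                 loss_fn: Callable[[float, float], float] = squared_error,
                 optimizer: str = "sgd") -> List[NodeState]:
    """Give every node the same model copy and the same l, A, K, P."""
    nodes = []
    for _ in range(num_nodes):
        nodes.append(NodeState(
            weights=deepcopy(model_weights),  # independent copy per node
            loss_fn=loss_fn,
            optimizer=optimizer,
            K=K,
            P=P,
            flag_a=[False] * K,               # assumed: one flag per iteration
            flag_b=[False] * K,
            m=[None] * K,                     # assumed: one state slot per iteration
        ))
    return nodes
```

For example, `init_cluster([0.1, 0.2], num_nodes=4, K=10, P=2)` would return four node states that start from identical weights and share the same K and P, while each holding its own copy of the model.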
