96 results about "Pipeline (computing)" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

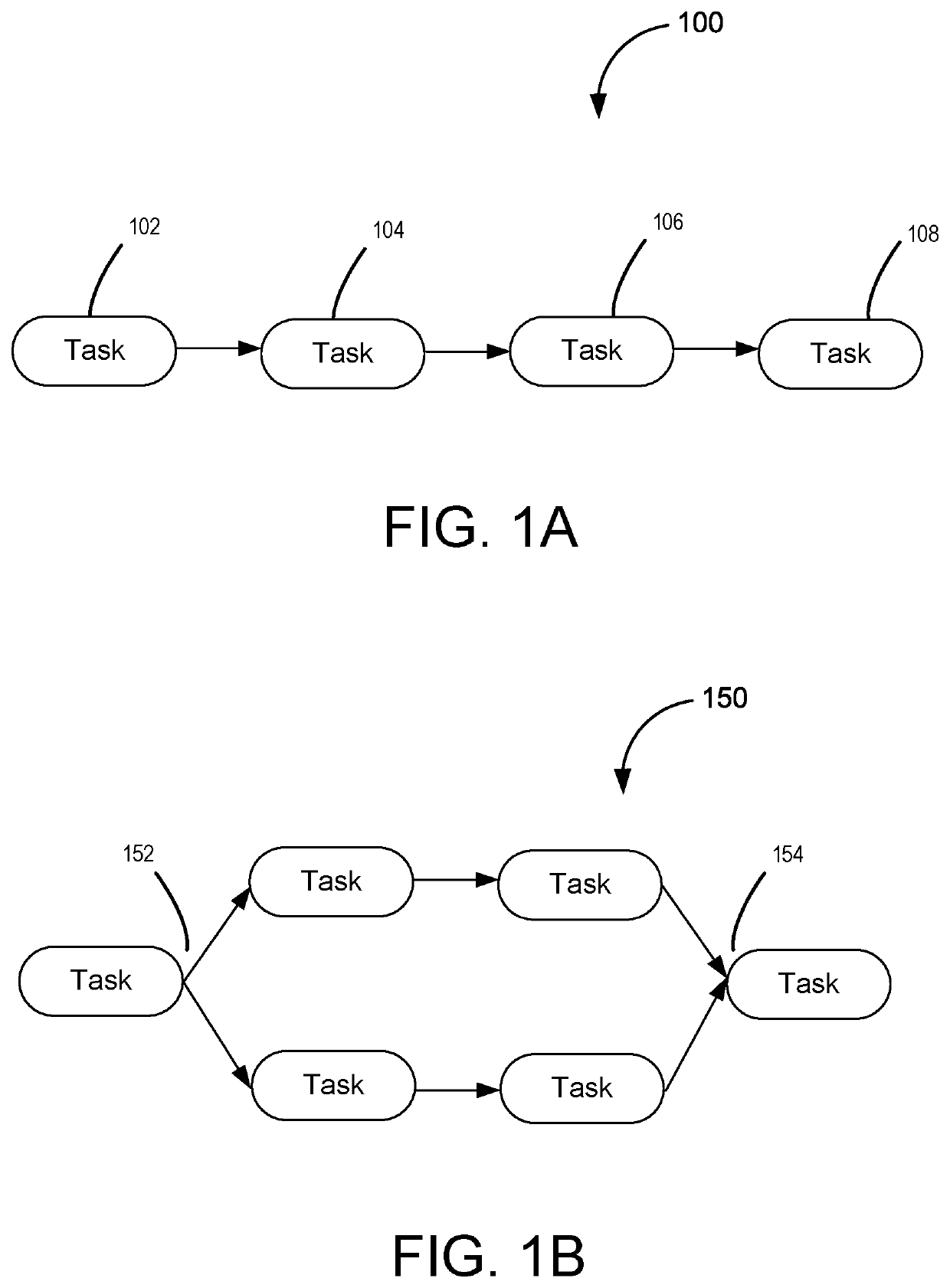

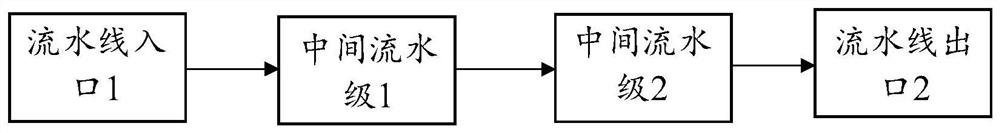

In computing, a pipeline, also known as a data pipeline, is a set of data processing elements connected in series, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in time-sliced fashion. Some amount of buffer storage is often inserted between elements.
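The definition above can be shown with a minimal Python sketch. It assumes threads as the concurrently executing elements and bounded `queue.Queue` objects as the buffer storage between them. The names `stage` and `run_pipeline` are hypothetical.

```python
import queue
import threading

_END = object()  # sentinel marking the end of the stream

def stage(fn, inbox, outbox):
    """One pipeline element: apply fn to each input and forward the result."""
    while True:
        item = inbox.get()
        if item is _END:
            outbox.put(_END)  # propagate end-of-stream downstream
            return
        outbox.put(fn(item))

def run_pipeline(items, fns, buffer_size=4):
    """Connect fns in series; the output of each becomes the input of the next."""
    # Bounded queues act as the buffer storage between consecutive elements.
    queues = [queue.Queue(maxsize=buffer_size) for _ in range(len(fns) + 1)]
    threads = [threading.Thread(target=stage, args=(fn, queues[i], queues[i + 1]))
               for i, fn in enumerate(fns)]

    def feed():
        for x in items:
            queues[0].put(x)  # blocks while the first buffer is full
        queues[0].put(_END)

    threads.append(threading.Thread(target=feed))
    for t in threads:
        t.start()
    results = []
    while (item := queues[-1].get()) is not _END:
        results.append(item)
    for t in threads:
        t.join()
    return results

print(run_pipeline(range(5), [lambda x: x + 1, lambda x: x * 10]))  # [10, 20, 30, 40, 50]
```

Each element works on one item while its neighbours work on others, so all stages can be busy at once. In CPython, threads overlap mainly on I/O-bound work because of the global interpreter lock.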

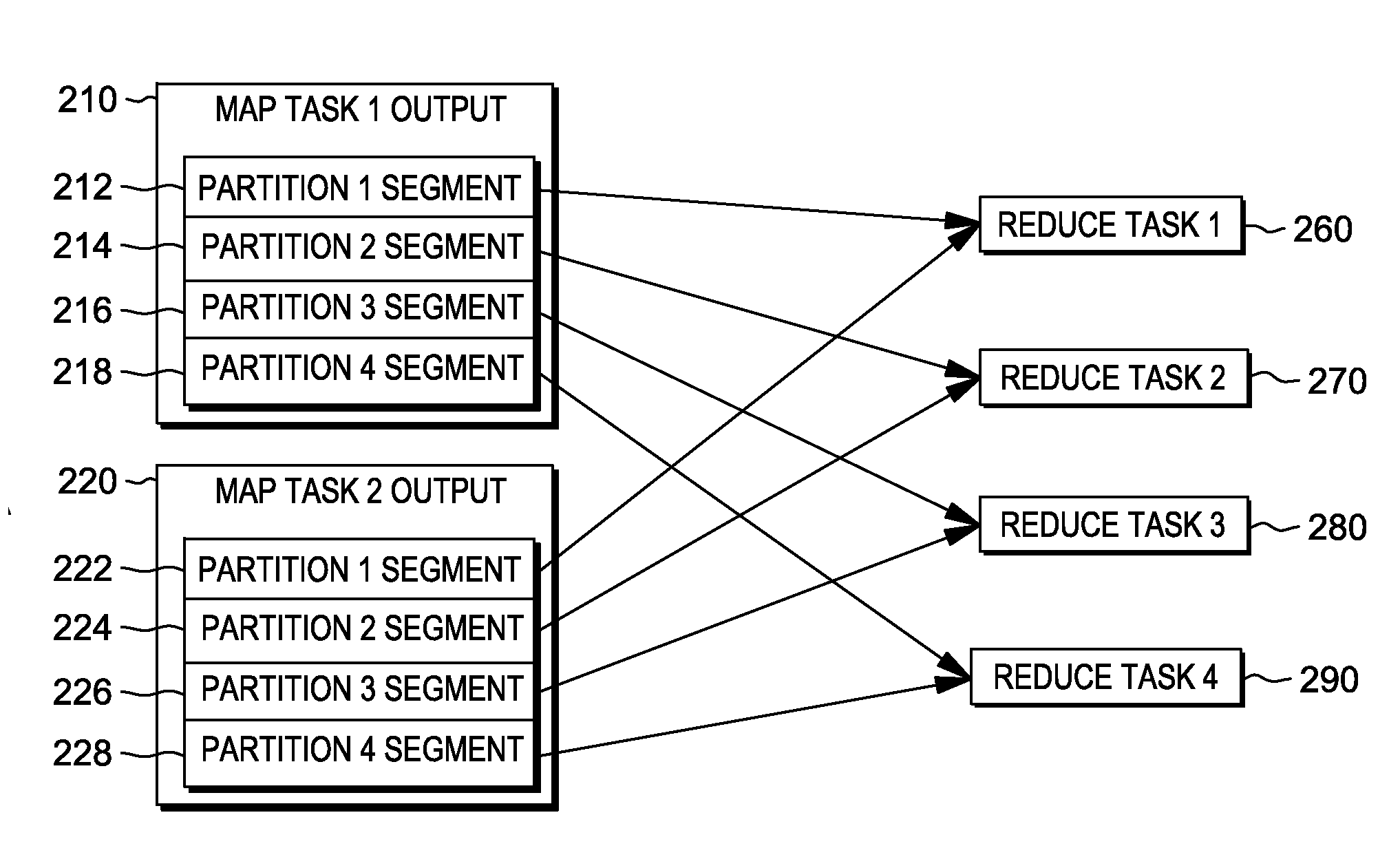

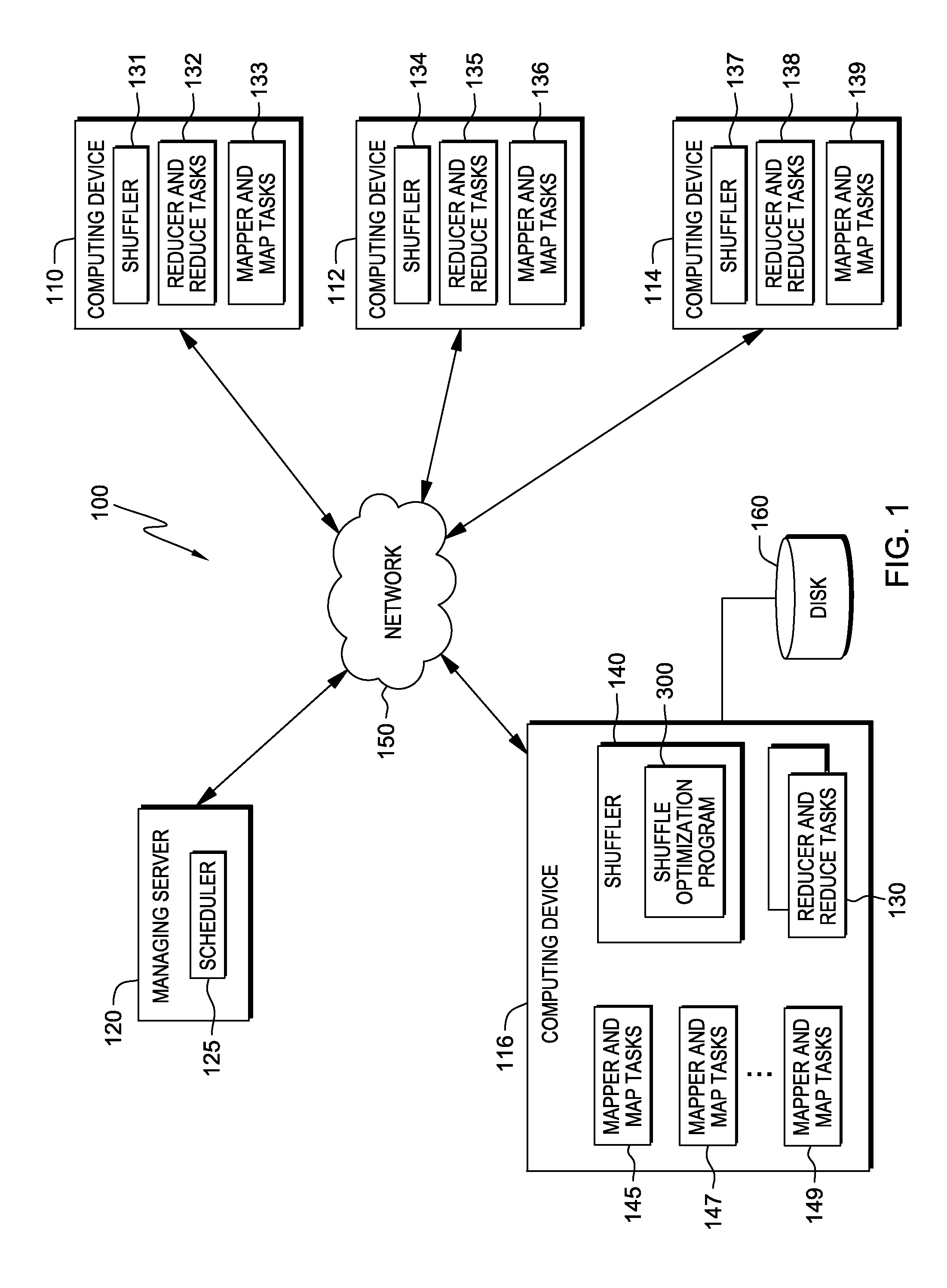

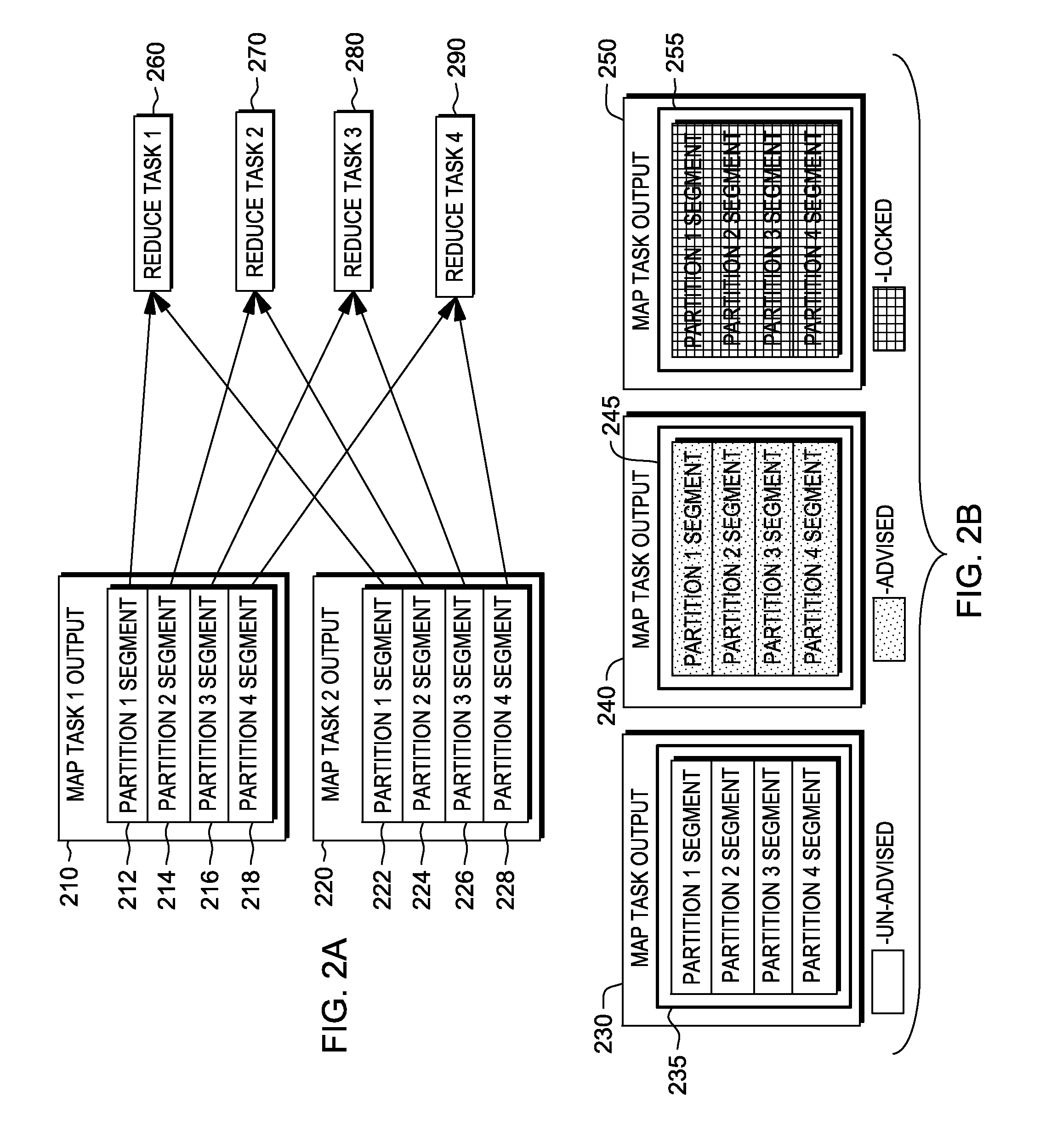

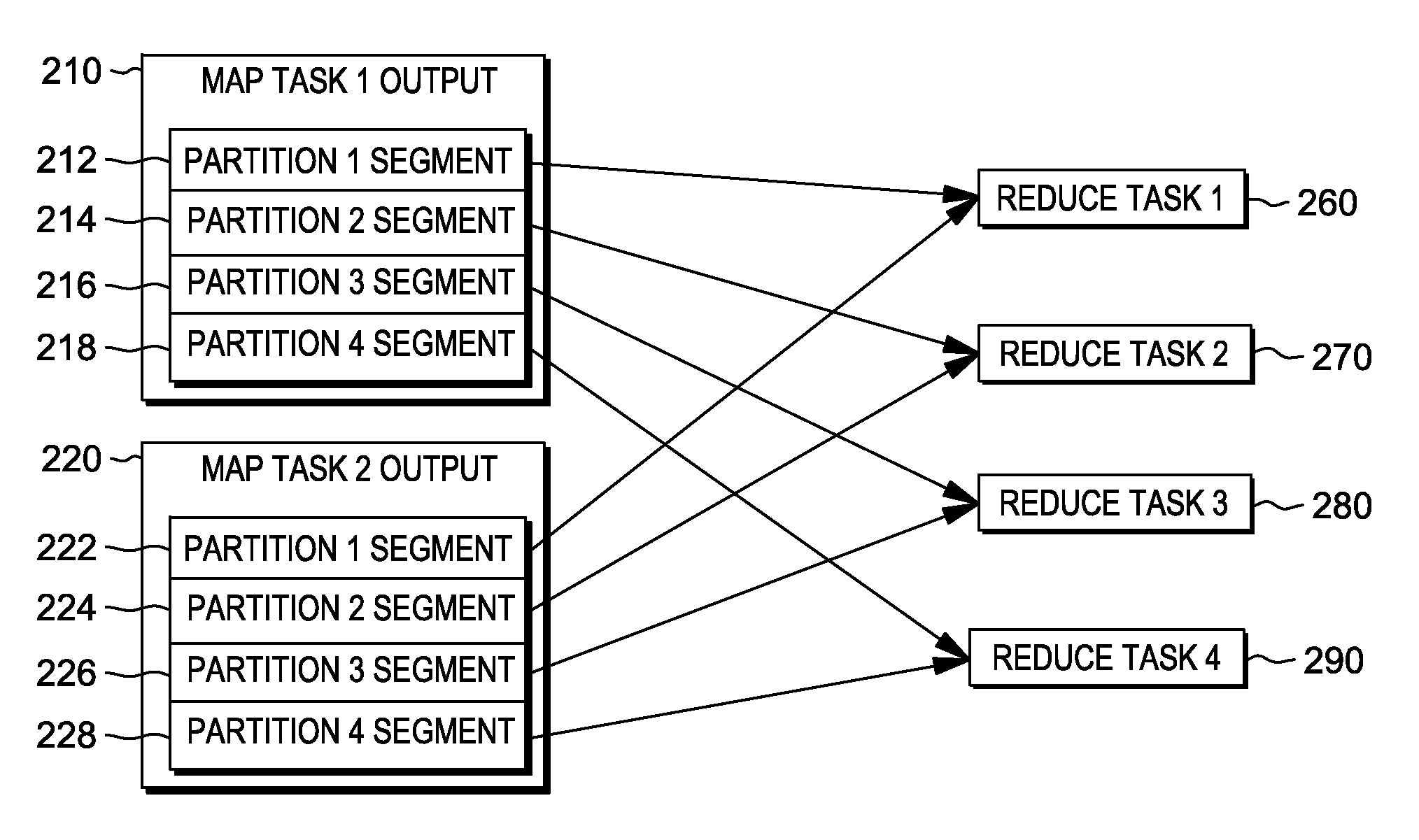

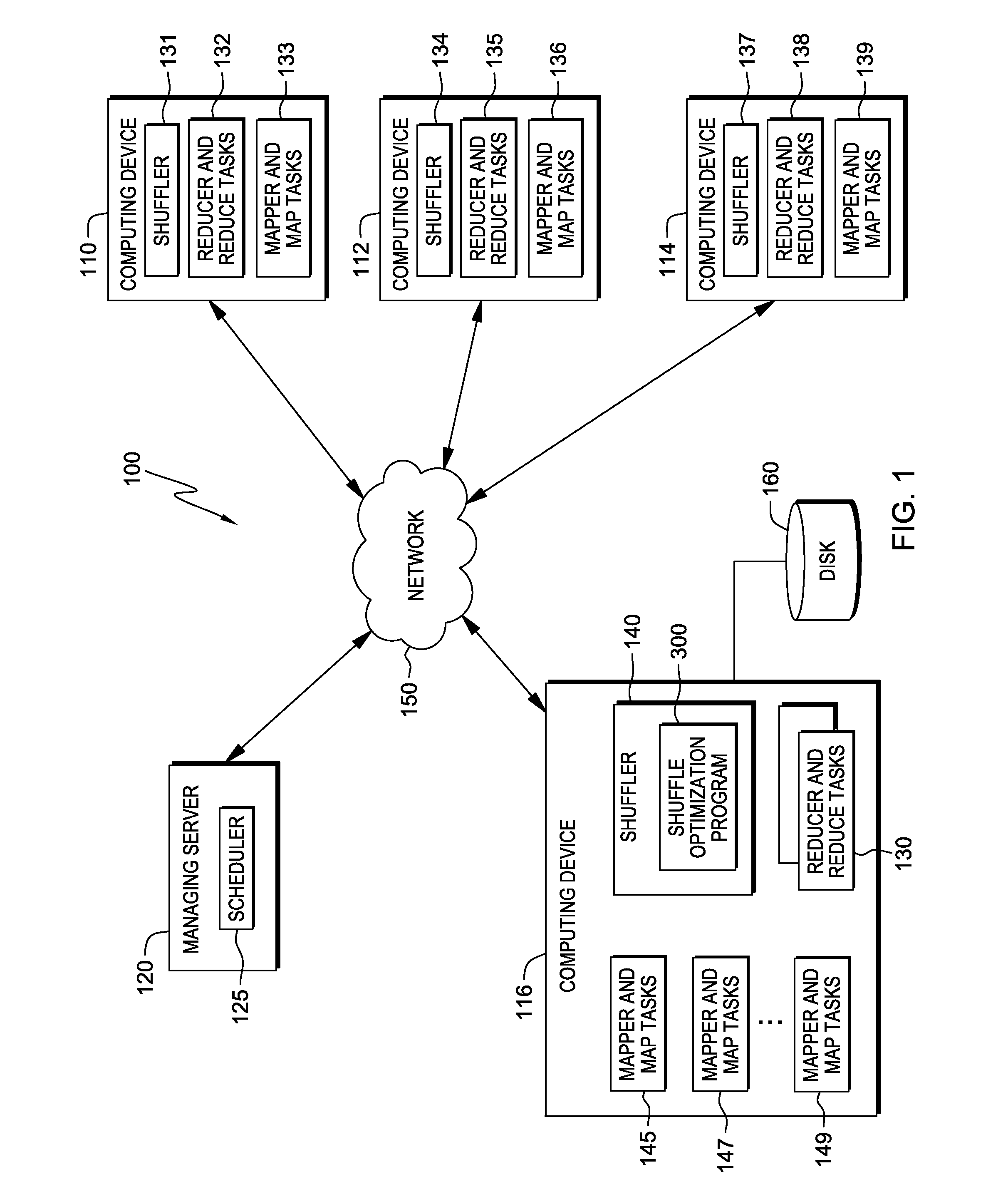

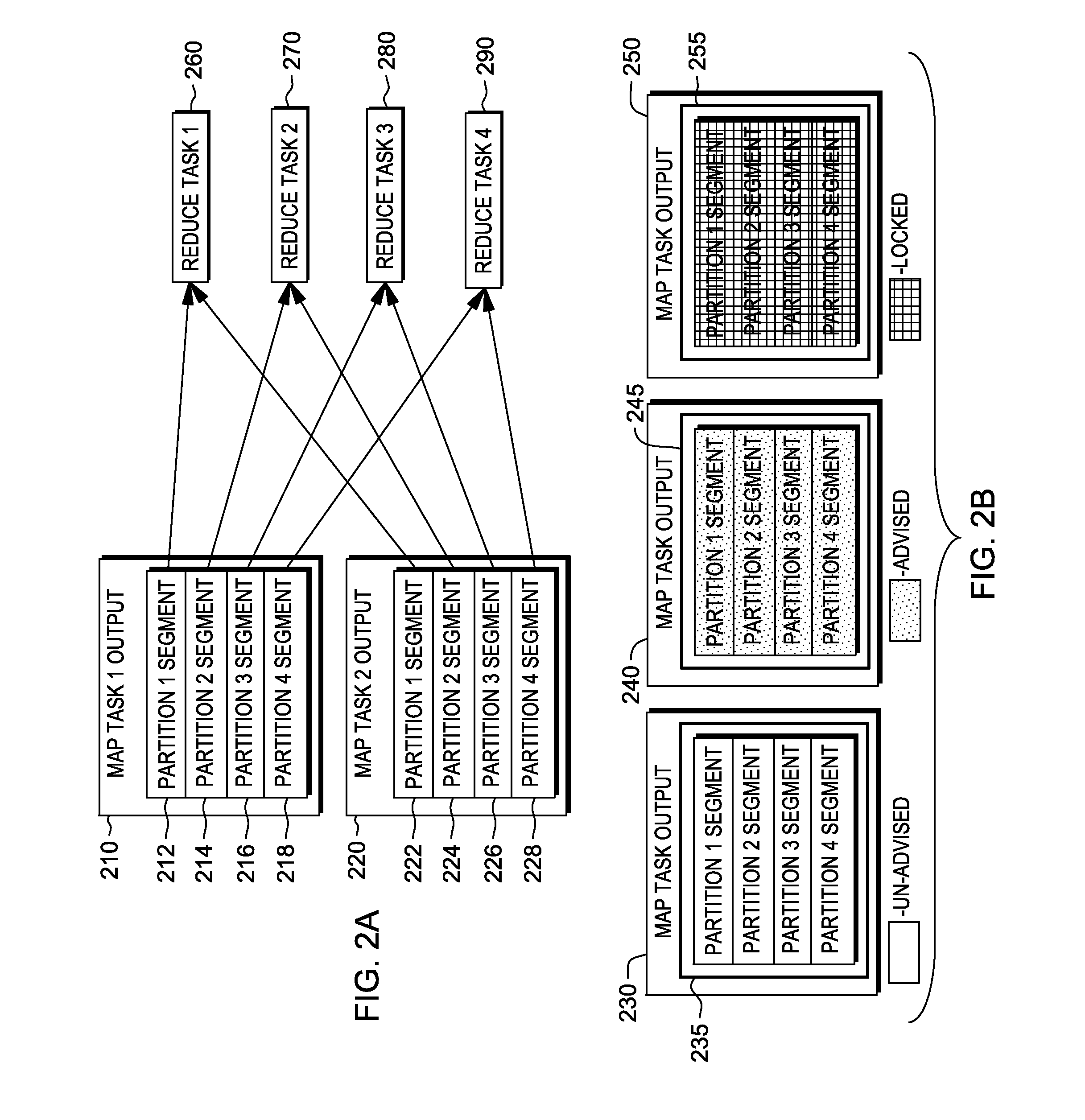

Optimization of map-reduce shuffle performance through shuffler I/O pipeline actions and planning

A shuffler receives information about the partition segments of map task outputs, together with a pipeline policy, for a job running on a computing device. The shuffler sends the device's operating system a request to lock partition segments of the map task outputs in memory, and an advisement to keep or load other partition segments of the map task outputs in memory. The shuffler then creates a pipeline according to the pipeline policy. This pipeline consists of the segments locked in memory and the segments advised to be kept or loaded in memory for the job. The shuffler shuffles them in a preferential order: first the segments locked in memory, then the segments advised to be kept or loaded in memory.

Owner:IBM CORP
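The preferential ordering in this abstract amounts to a stable sort on one key. The minimal sketch below assumes each segment is already tagged with how the operating system was asked to hold it. The `Residency`, `Segment`, and `shuffle_order` names are hypothetical, and the OS lock and advise calls themselves are not modeled.

```python
from dataclasses import dataclass
from enum import IntEnum

class Residency(IntEnum):
    LOCKED = 0   # segment locked in memory: shuffled first
    ADVISED = 1  # OS advised to keep or load it in memory: shuffled next

@dataclass
class Segment:
    segment_id: str
    residency: Residency

def shuffle_order(segments):
    """Locked segments first, then advised ones; sorted() is stable,
    so arrival order is preserved within each group."""
    return sorted(segments, key=lambda s: s.residency)

segs = [Segment("p2", Residency.ADVISED), Segment("p0", Residency.LOCKED),
        Segment("p3", Residency.LOCKED), Segment("p1", Residency.ADVISED)]
print([s.segment_id for s in shuffle_order(segs)])  # ['p0', 'p3', 'p2', 'p1']
```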

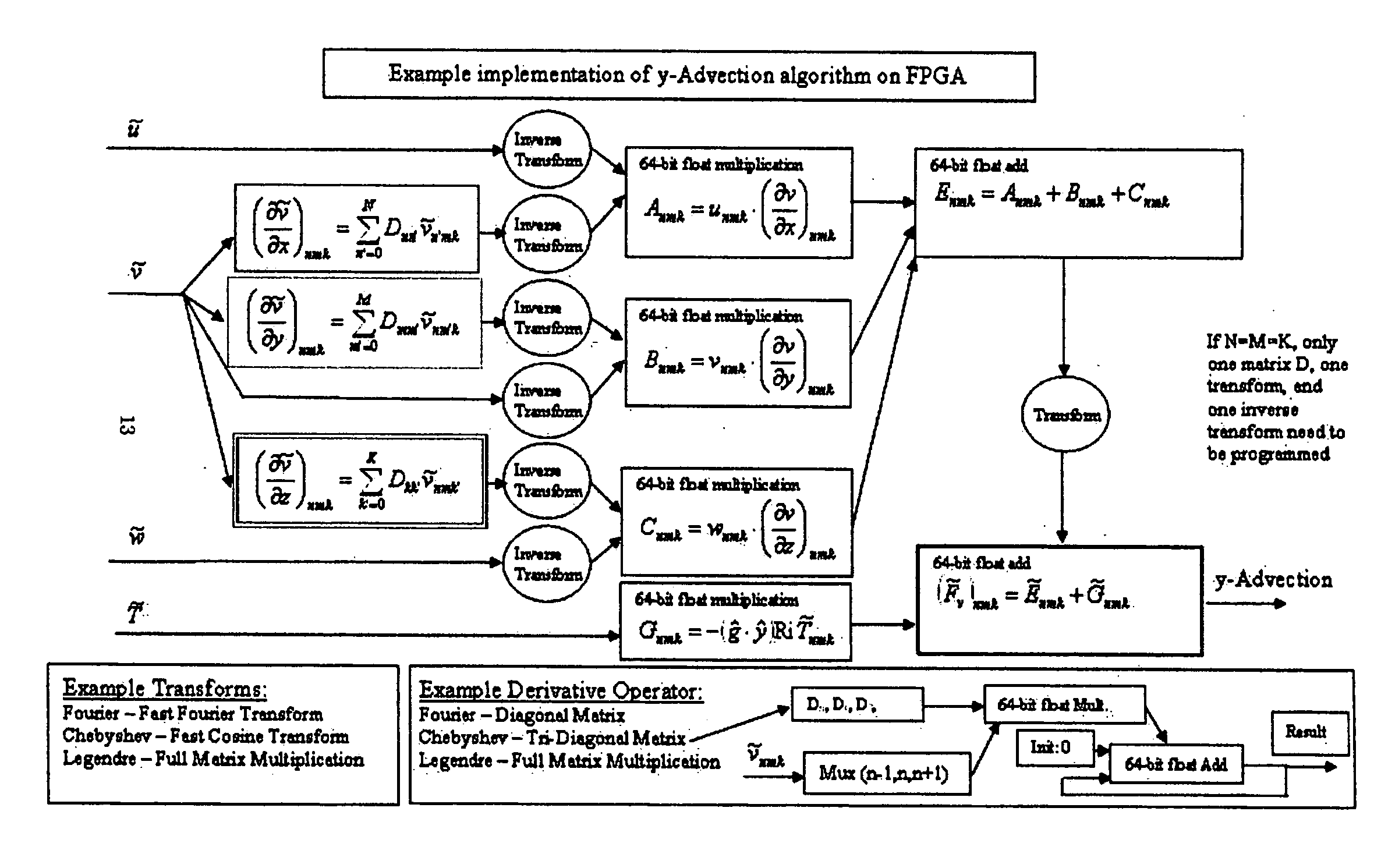

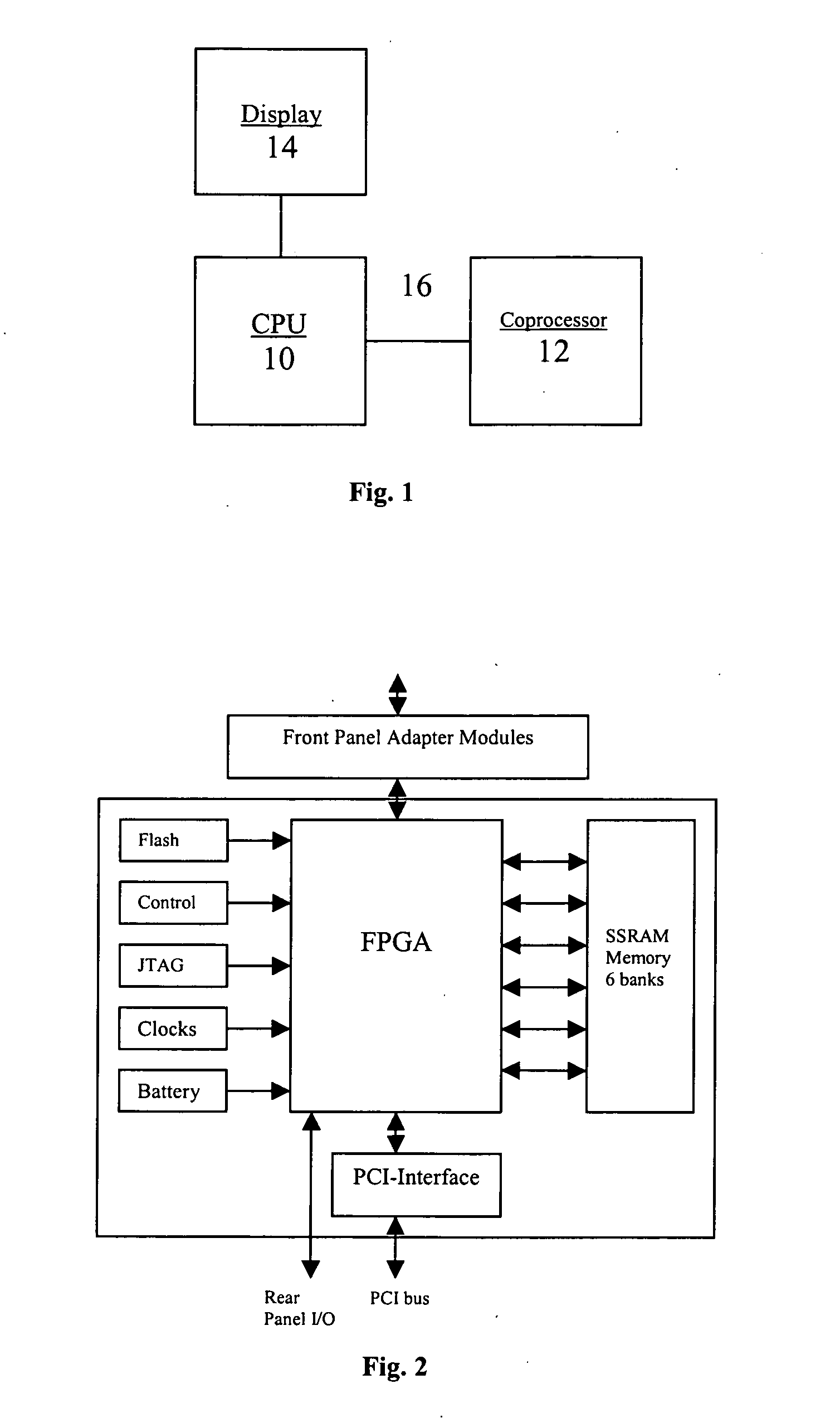

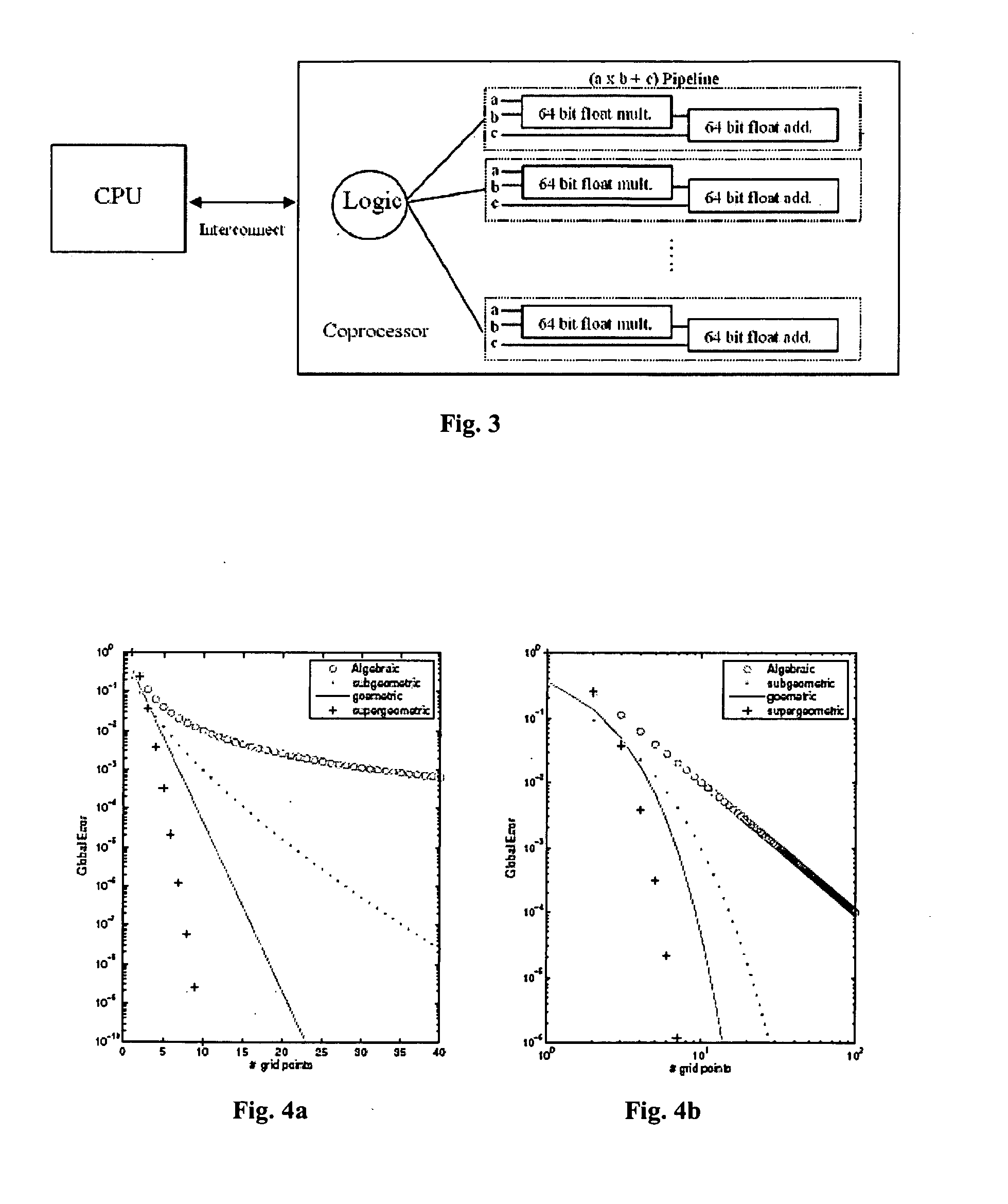

Computational fluid dynamics (CFD) coprocessor-enhanced system and method

Status: Inactive | Publication: US20070219766A1 | Tags: Cost-effective, Wide variety of us, Design optimisation/simulation, Program control, Coprocessor, Display device

The present invention provides a system, method and product for porting computationally complex CFD calculations to a coprocessor in order to decrease overall processing time. The system comprises a CPU in communication with a coprocessor over a high-speed interconnect. An optional display may be provided for displaying the calculated flow field. The system and method include porting variables of the governing equations from the CPU to the coprocessor, receiving calculated source terms from the coprocessor, and solving the governing equations at the CPU using those source terms. In a further aspect, the CPU compresses the governing equations into a combination of higher- and/or lower-order equations with fewer variables before porting them to the coprocessor. The coprocessor receives the variables, iteratively solves for the source terms of the equations using a plurality of parallel pipelines, and transfers the results to the CPU. In a further aspect, the coprocessor decompresses the received variables, solves for the source terms, and then compresses the results for transfer to the CPU. The CPU solves the governing equations using the calculated source terms. In a further aspect, the governing equations are compressed and solved using spectral methods. In another aspect, the coprocessor includes a reconfigurable computing device such as a Field Programmable Gate Array (FPGA). In yet another aspect, the coprocessor may be used for specific applications such as the Navier-Stokes or Euler equations. It may be configured to solve non-linear advection terms more quickly through efficient pipeline utilization.

Owner:VIRGINIA TECH INTPROP INC

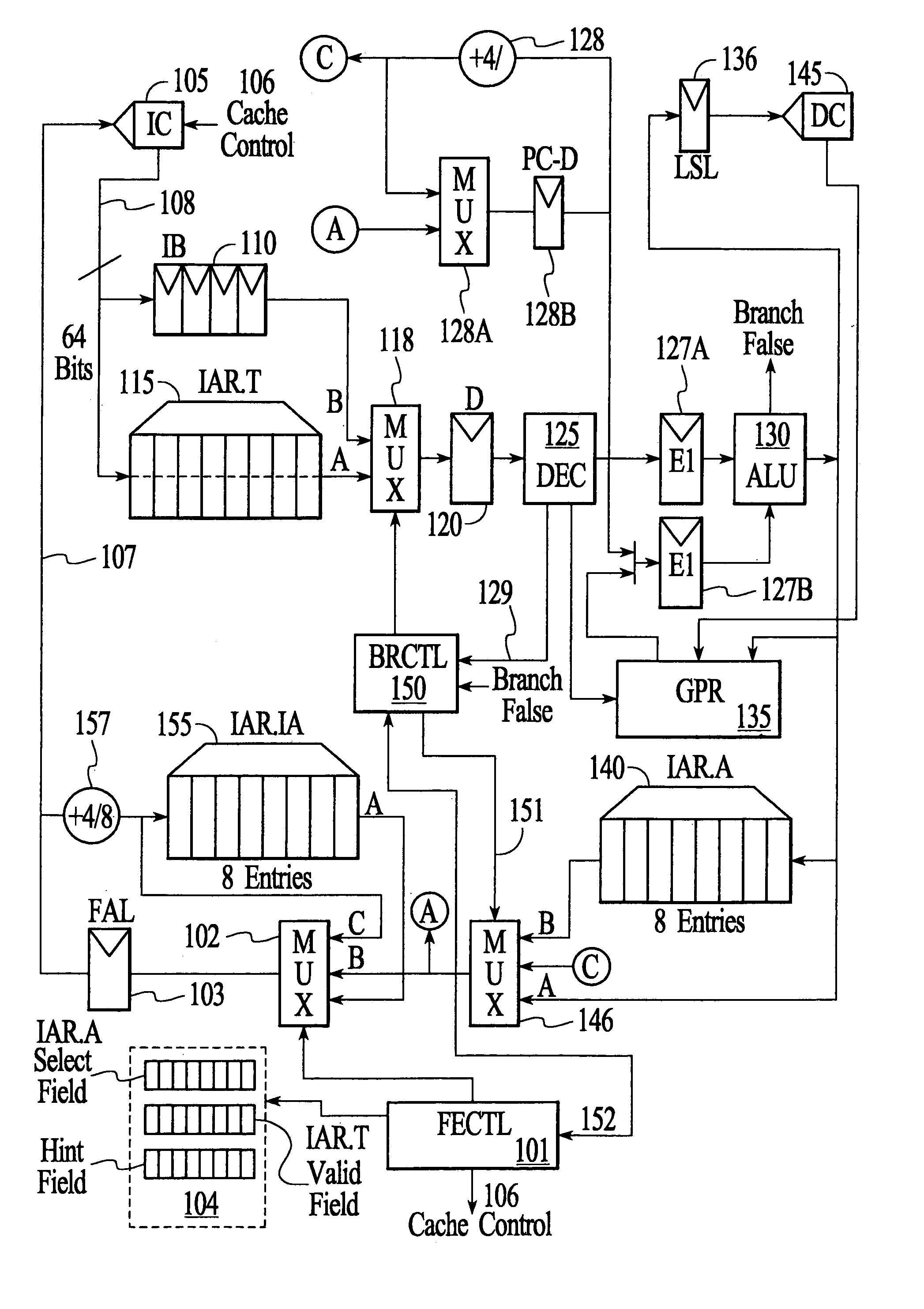

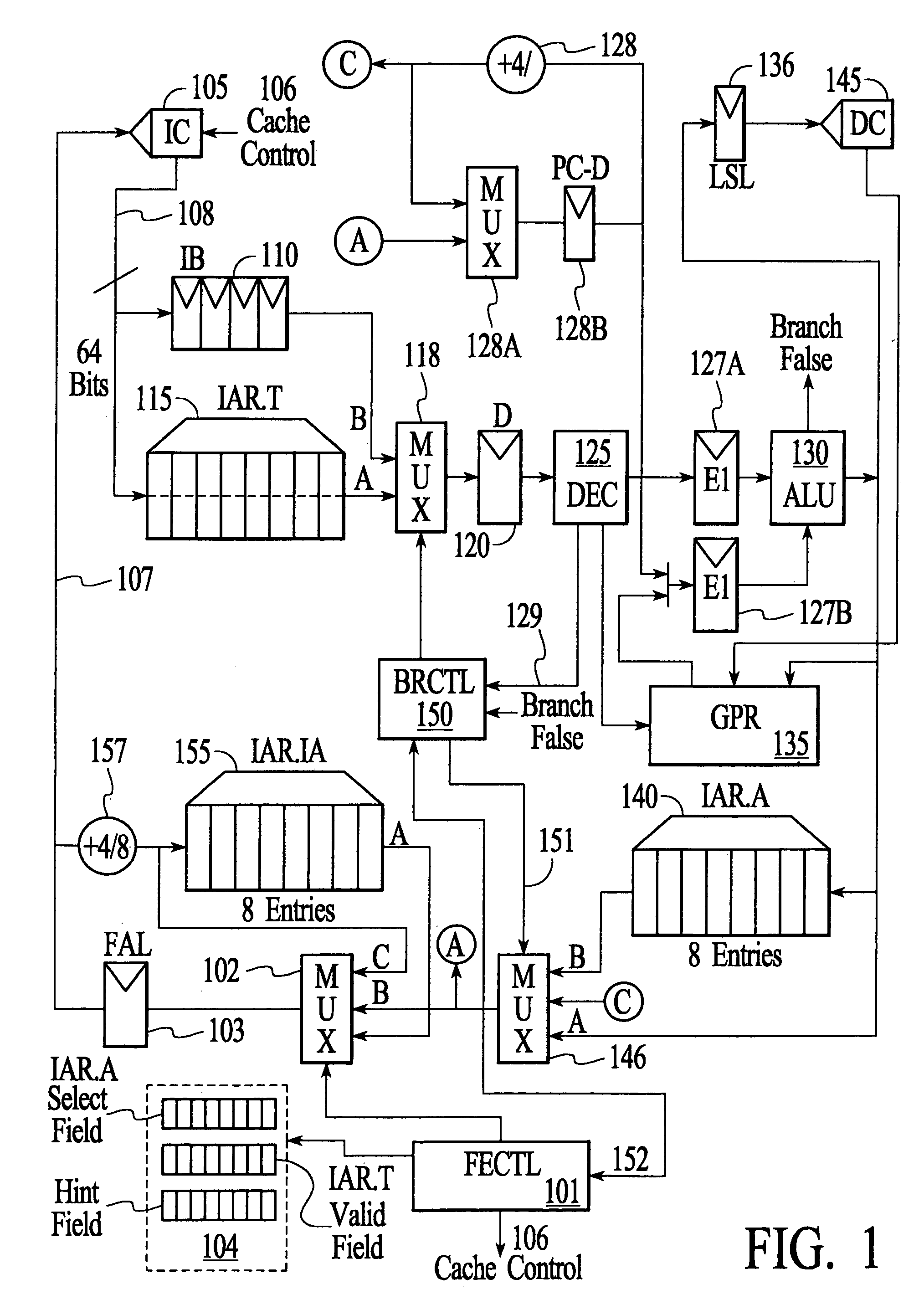

Branch control memory

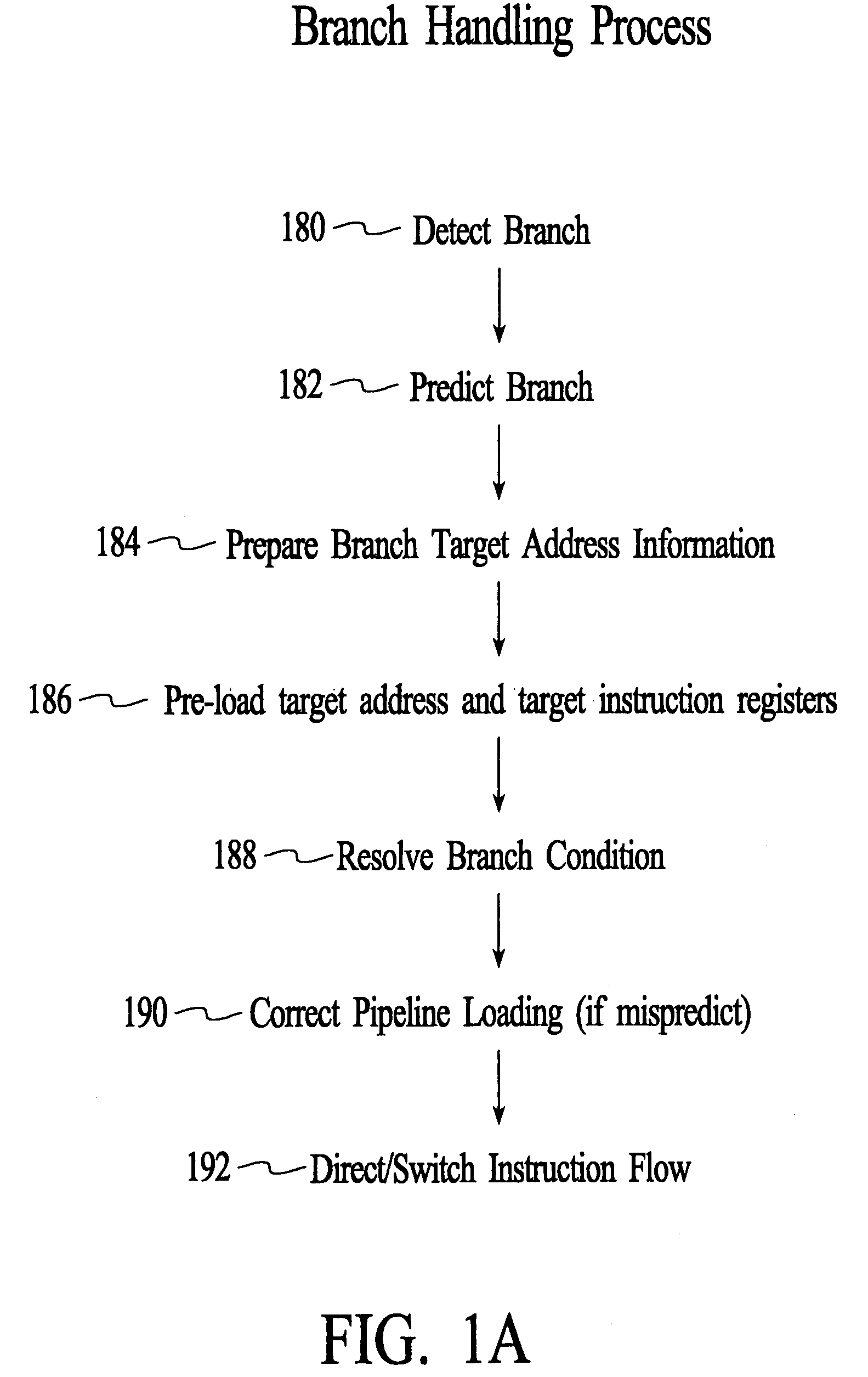

Status: Inactive | Publication: US7159102B2 | Tags: Simplify the commissioning process, Improve latency handling, Digital computer details, Next instruction address formation, Parallel computing, Control memory

A branch control memory stores branch instructions adapted to optimize the performance of programs run on electronic processors. Flexible instruction parameter fields permit a variety of new branch control and branch instruction implementations, each suited to a particular computing environment. These instructions also carry separate prediction bits. The prediction bits are used to optimize the loading of target instruction buffers ahead of program execution, so that the processor's pipeline achieves better performance during actual program execution.

Owner:RENESAS ELECTRONICS CORP

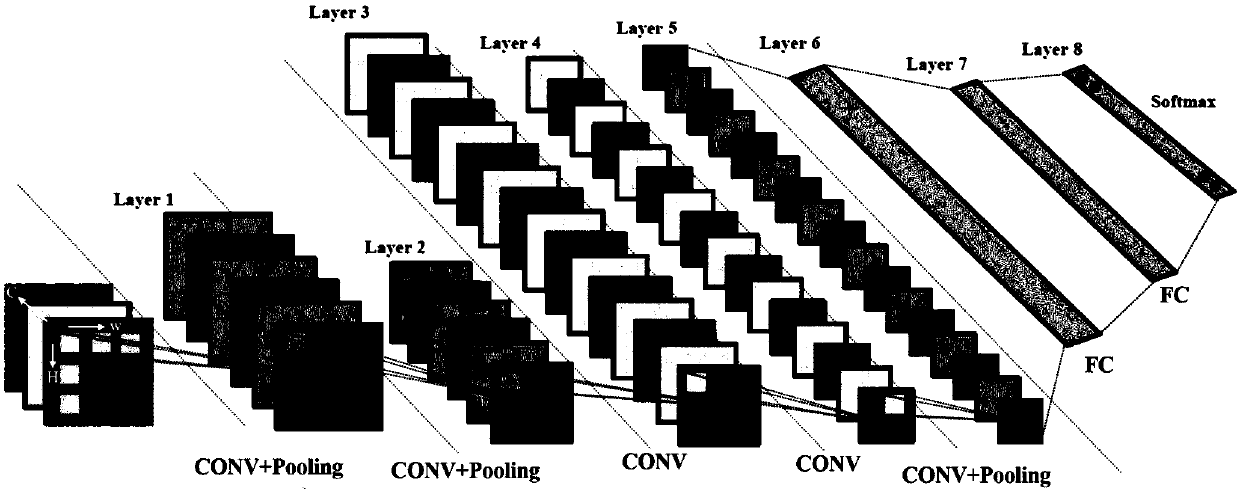

Convolutional neural network hardware accelerator for solidifying full network layer on reconfigurable platform

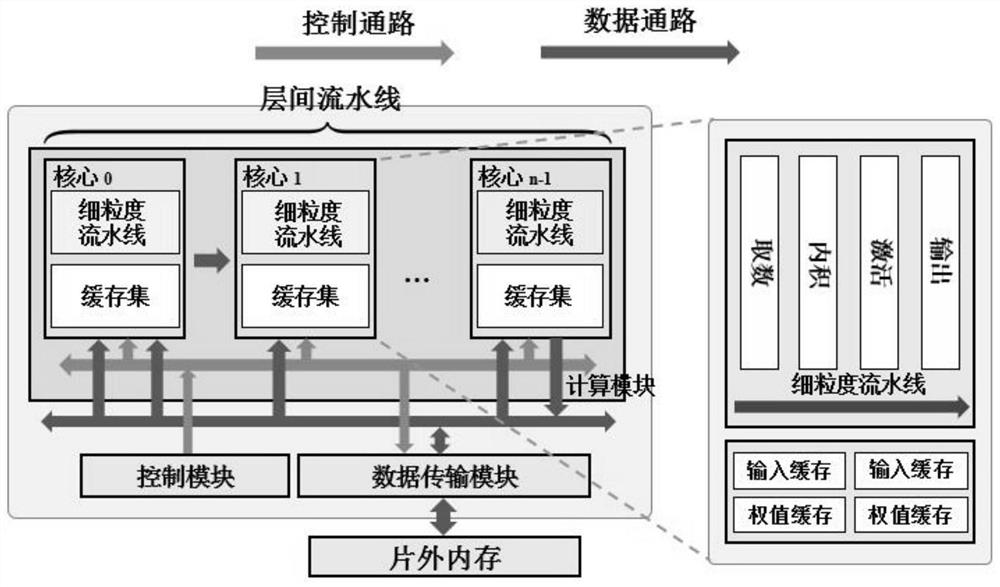

Status: Pending | Publication: CN112116084A | Tags: Solve parallelism, Resolving Conflicts Between Homogeneous Hardware Parallelisms, Neural architectures, Physical realisation, Computer hardware, Hardware structure

The invention discloses a convolutional neural network hardware accelerator that solidifies the full set of network layers on a reconfigurable platform. The accelerator comprises the following modules:

- A control module that coordinates and controls the acceleration process. This includes initializing and synchronizing the other on-chip modules, and starting the exchange of different types of data between each computing core and off-chip memory.
- A data transmission module, comprising a memory controller and multiple DMA controllers, that moves data between each on-chip data cache and off-chip memory.
- A computing module comprising multiple computing cores, in one-to-one correspondence with the network layers of the convolutional neural network.

Each computing core serves as one stage of a pipeline, so that together the cores form a complete coarse-grained pipeline. Each core internally contains a fine-grained computing pipeline. By mapping the layer hierarchy of the computation end to end onto the hardware structure, the invention improves the match between software and hardware characteristics and raises the utilization of computing resources.

Owner:UNIV OF SCI & TECH OF CHINA
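When each computing core is one stage of a coarse-grained pipeline, the slowest layer sets the steady-state rate. The sketch below simulates the stage timing under these assumptions: hypothetical per-layer cycle counts, in-order processing, one image per core at a time, and unlimited buffering between cores. Under those assumptions it reproduces the closed form sum(t) + (N - 1) * max(t).

```python
def pipeline_makespan(stage_cycles, n_items):
    """Cycles to push n_items through a linear pipeline whose stages hold one item each.
    done[s] is the cycle at which stage s finished its most recent item."""
    done = [0] * len(stage_cycles)
    for _ in range(n_items):
        ready = 0  # cycle at which this item leaves the previous stage
        for s, cycles in enumerate(stage_cycles):
            start = max(ready, done[s])  # wait for both the item and a free stage
            done[s] = start + cycles
            ready = done[s]
    return done[-1] if n_items else 0

layer_cycles = [4, 9, 6, 3]  # hypothetical per-image cycles of four layer cores
print(pipeline_makespan(layer_cycles, 100))  # 913
print(100 * sum(layer_cycles))               # 2200 if one engine ran the layers back to back
```

In this example, shaving cycles from the 9-cycle core helps throughput more than speeding up any of the other cores.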

Optimization of map-reduce shuffle performance through shuffler I/O pipeline actions and planning

A shuffler receives information associated with partition segments of map task outputs and a pipeline policy for a job running on a computing device. The shuffler transmits to an operating system of the computing device a request to lock partition segments of the map task outputs and transmits an advisement to keep or load partition segments of map task outputs in the memory of the computing device. The shuffler creates a pipeline based on the pipeline policy, wherein the pipeline includes partition segments locked in the memory and partition segments advised to keep or load in the memory, of the computing device for the job, and the shuffler selects the partition segments locked in the memory, followed by partition segments advised to keep or load in the memory, as a preferential order of partition segments to shuffle.

Owner:IBM CORP

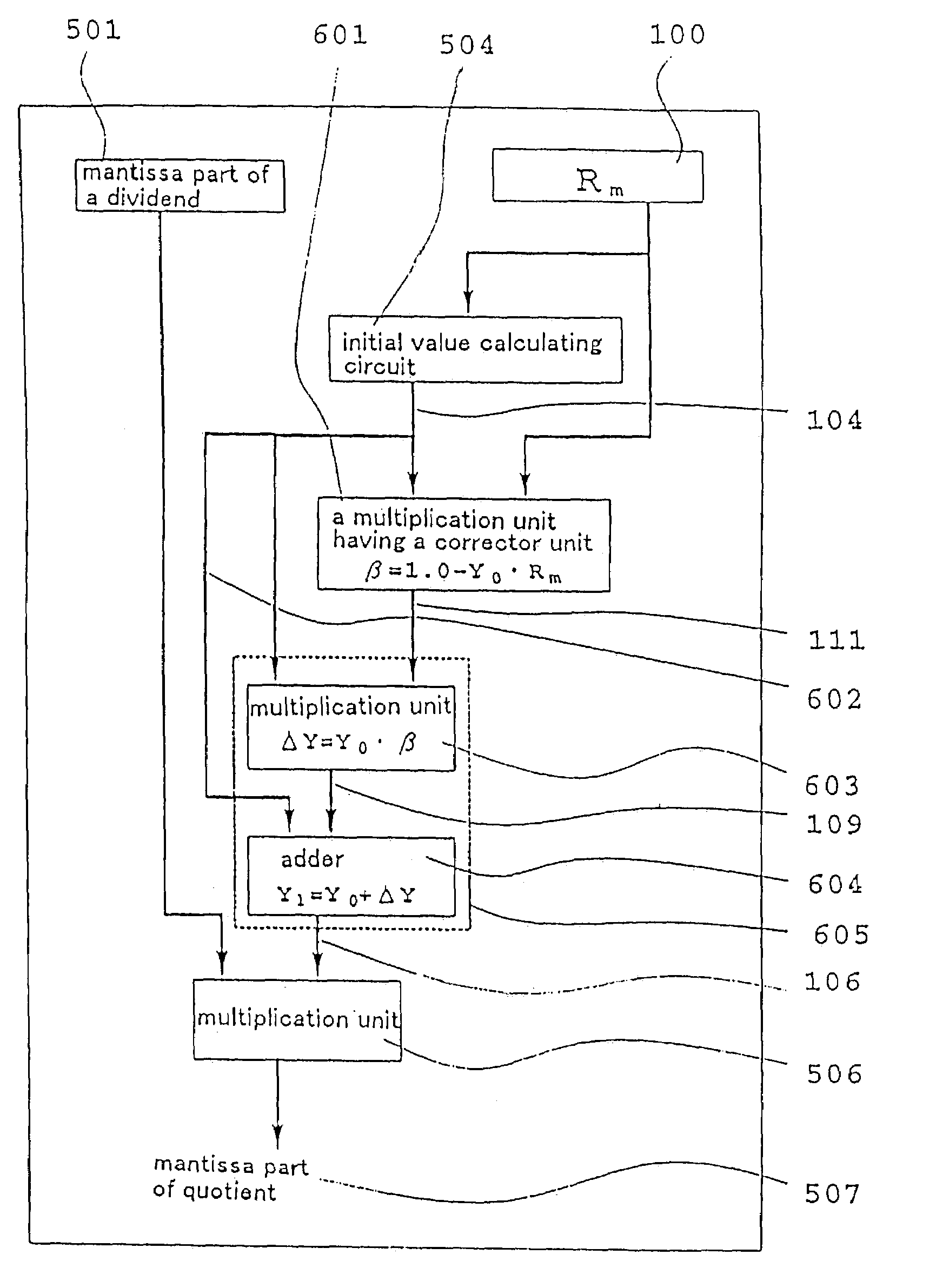

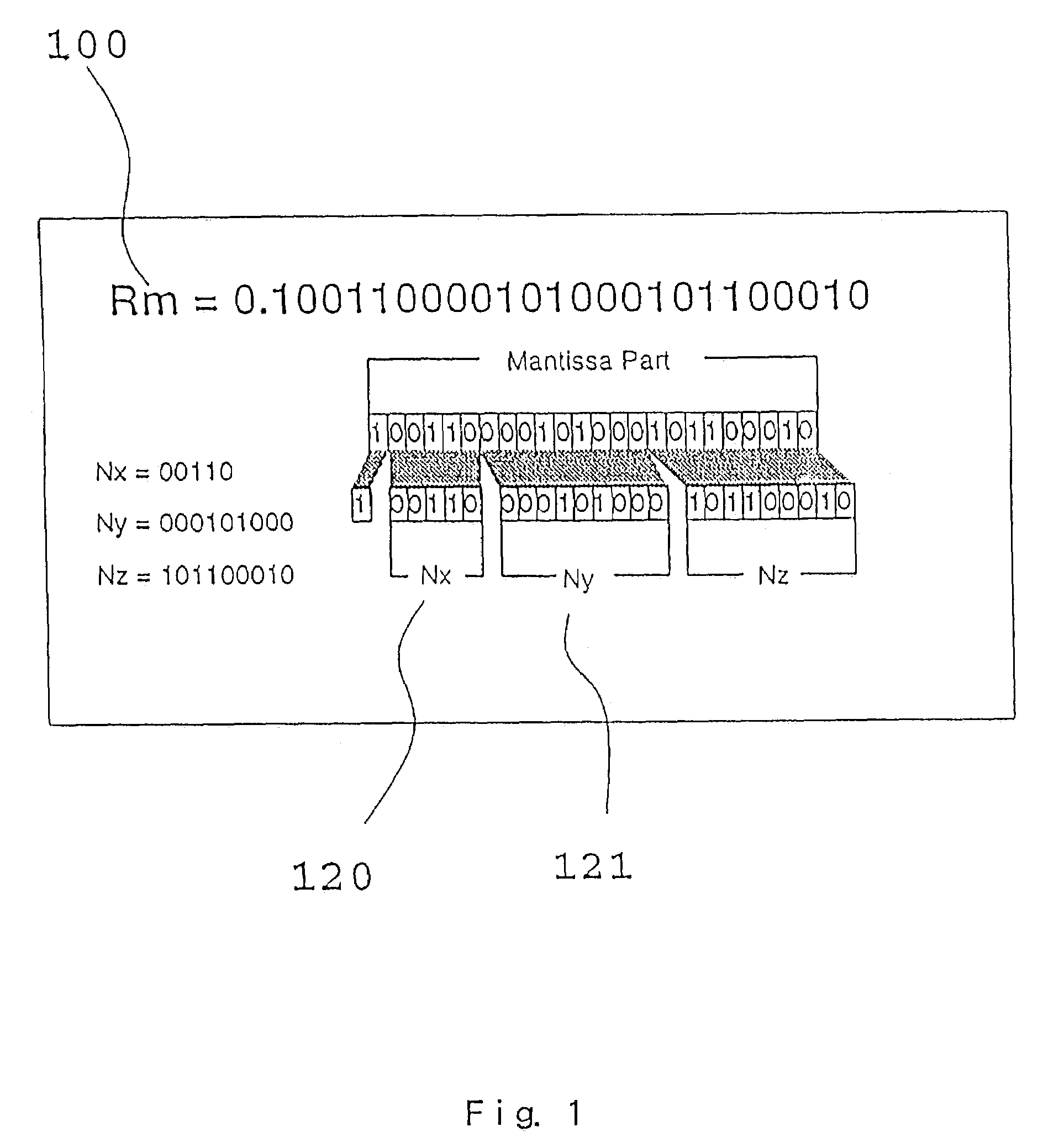

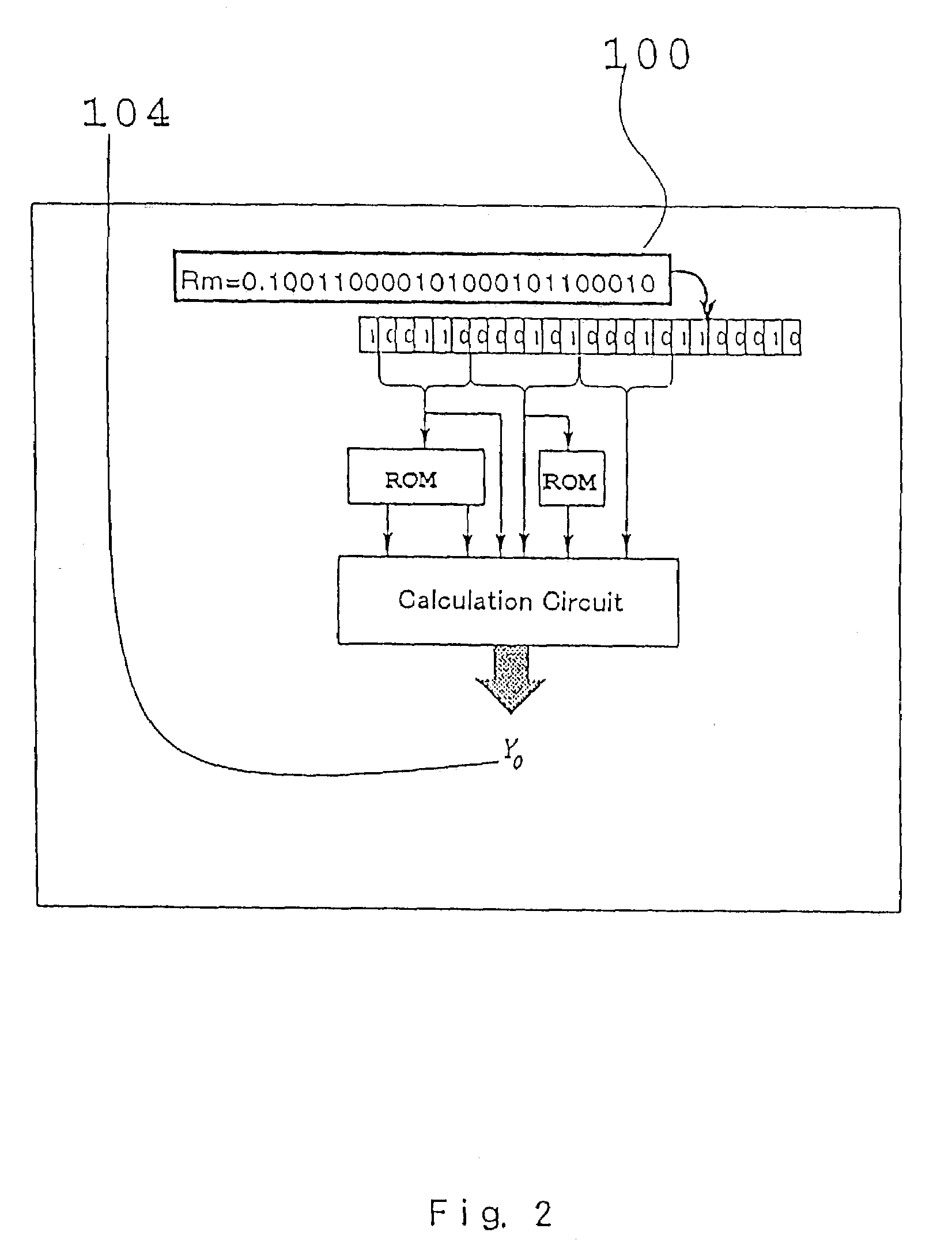

Computing system using Newton-Raphson method

Status: Inactive | Publication: US7191204B1 | Tags: Avoid computing time, Improve throughput, Computation using non-contact making devices, Digital function generators, Binary multiplier, Algorithm

A division circuit and a square-root extraction circuit that use the Newton-Raphson method. The number of digits in the initial value of the Newton-Raphson iteration is reduced and part of a multiplier is omitted, which reduces the circuit scale. A circuit dedicated to the iterative Newton-Raphson computation is provided, enabling the whole circuit to operate as a pipeline. Several techniques together allow higher-speed operation and a smaller circuit:

- truncating the expansion of the iterative operation into a series operation;
- using a lookup table;
- deriving the initial value by approximation;
- using a redundant number representation.

Owner:OGATA WATARU
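Such a circuit pipelines the standard Newton-Raphson recurrences for the reciprocal and the reciprocal square root. The floating-point sketch below shows only that arithmetic, under these assumptions: inputs are already normalized (d in [1, 2), a in [1, 4)), the seed tables have 8 entries, and three iterations are run. It does not model the patent's truncated multiplier or redundant number representation.

```python
import math

TABLE_BITS = 3  # 8-entry seed tables (assumed size)

def make_table(lo, hi, f):
    """Precompute f at the midpoint of each of 2**TABLE_BITS buckets of [lo, hi)."""
    n = 1 << TABLE_BITS
    return [f(lo + (i + 0.5) * (hi - lo) / n) for i in range(n)]

RECIP_TABLE = make_table(1.0, 2.0, lambda m: 1.0 / m)             # seeds for 1/d, d in [1, 2)
RSQRT_TABLE = make_table(1.0, 4.0, lambda m: 1.0 / math.sqrt(m))  # seeds for 1/sqrt(a), a in [1, 4)

def seed(table, x, lo, hi):
    """Low-precision initial value: index the table by the leading bits of x."""
    return table[int((x - lo) / (hi - lo) * len(table))]

def divide(n, d, iterations=3):
    """n / d for normalized d in [1, 2): iterate x <- x * (2 - d * x) toward 1/d."""
    x = seed(RECIP_TABLE, d, 1.0, 2.0)
    for _ in range(iterations):
        x = x * (2.0 - d * x)  # relative error is squared each step
    return n * x

def square_root(a, iterations=3):
    """sqrt(a) for a in [1, 4): iterate y <- y * (3 - a * y * y) / 2 toward 1/sqrt(a)."""
    y = seed(RSQRT_TABLE, a, 1.0, 4.0)
    for _ in range(iterations):
        y = y * (3.0 - a * y * y) / 2.0
    return a * y  # a / sqrt(a) = sqrt(a), so no final division is needed

print(divide(3.0, 1.9), 3.0 / 1.9)
print(square_root(2.0), math.sqrt(2.0))
```

Because each iteration roughly doubles the number of correct bits, a coarse table seed plus a fixed, small number of iterations is enough. A fixed iteration count is also what lets the iterations be unrolled into pipeline stages.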

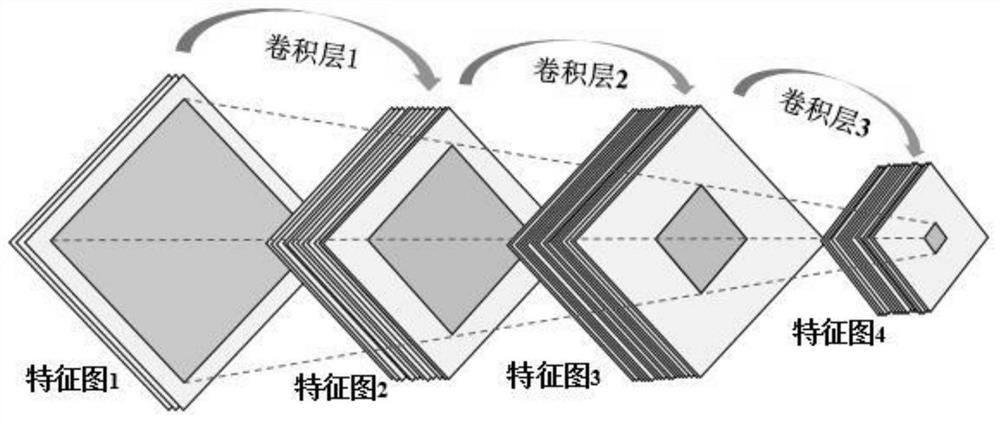

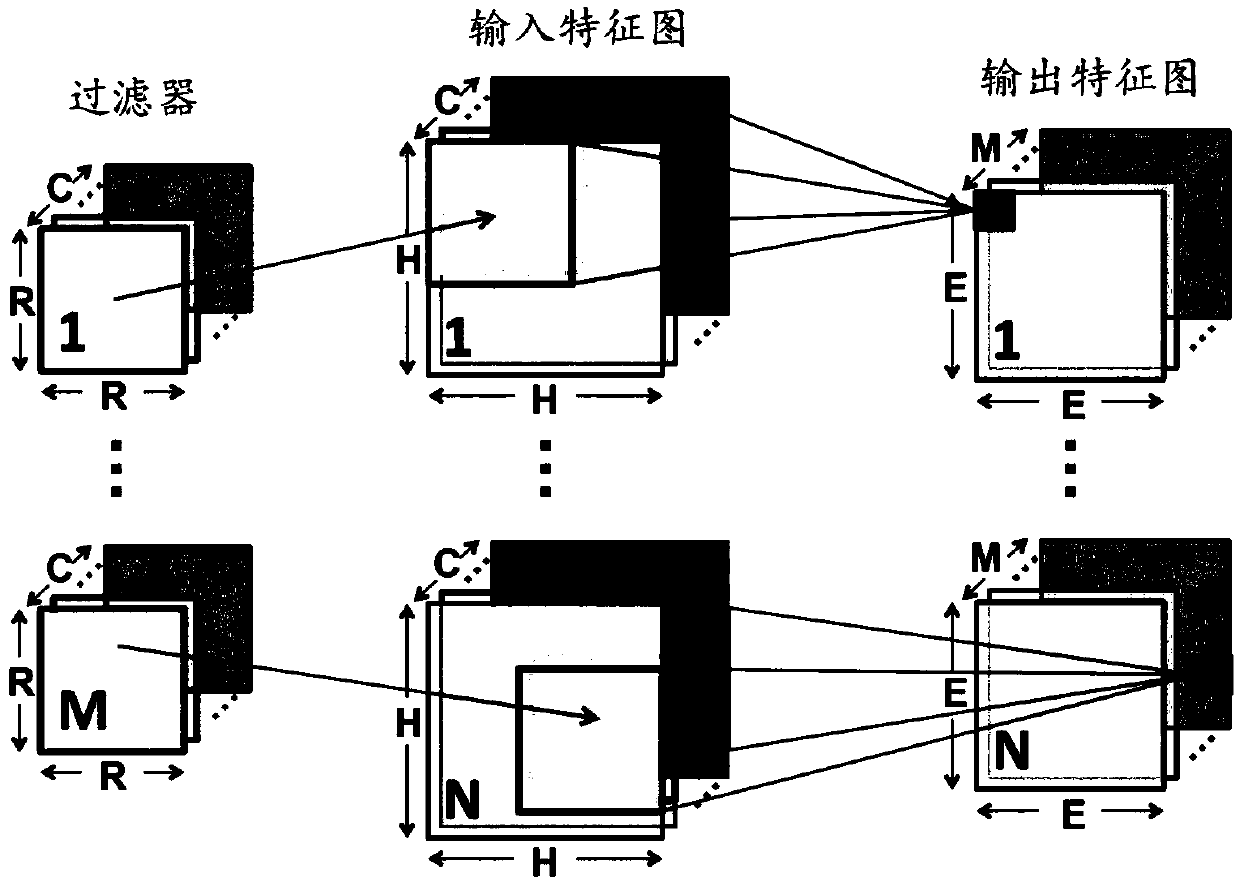

Neural network calculation special circuit and related calculation platform and implementation method thereof

Status: Active | Publication: CN110766127A | Tags: Improve hardware utilization, Save hardware resources, Processor architectures/configuration, Neural architectures, Computer hardware, Map reading

The invention discloses a special circuit for neural networks, together with a related computing platform and implementation method. The special circuit comprises three modules:

- A data reading module with a feature map reading sub-module and a weight reading sub-module. When a depthwise convolution is executed, they read feature map data and weight data, respectively, from an on-chip cache into the data calculation module. When a pooling operation is executed, the feature map reading sub-module also reads feature map data from the on-chip cache into the data calculation module.
- A data calculation module comprising a dwconv module, which executes depthwise convolution, and a pooling module, which executes pooling.
- A data write-back module that writes the results of the data calculation module back to the on-chip cache.

Hardware resource usage is reduced by sharing the read logic and the write-back logic between the two types of operation. The special circuit also adopts a highly concurrent pipeline design, which further improves computing performance.

Owner:XILINX TECH BEIJING LTD
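The resource saving here comes from one read path and one write-back path serving two operations. As a software analogy only (no padding, nested lists as feature maps, all function names hypothetical), both depthwise convolution and max pooling below are built on the same window reader and the same write-back loop.

```python
def windows(fmap, k, stride):
    """Shared read logic: yield (row, col, k x k window) over one channel, no padding."""
    h, w = len(fmap), len(fmap[0])
    for r in range(0, h - k + 1, stride):
        for c in range(0, w - k + 1, stride):
            yield r, c, [row[c:c + k] for row in fmap[r:r + k]]

def run_channelwise(fmaps, k, stride, op):
    """Run op(window, channel) per channel, with one reader and one write-back path."""
    out = []
    for ch, fmap in enumerate(fmaps):
        oh = (len(fmap) - k) // stride + 1
        ow = (len(fmap[0]) - k) // stride + 1
        plane = [[0] * ow for _ in range(oh)]
        for r, c, win in windows(fmap, k, stride):
            plane[r // stride][c // stride] = op(win, ch)  # shared write-back
        out.append(plane)
    return out

def depthwise_conv(fmaps, weights, stride=1):
    """Each channel is convolved with its own k x k kernel (no cross-channel sum)."""
    k = len(weights[0])
    def mac(win, ch):  # multiply-accumulate over one window
        return sum(w * x for w_row, x_row in zip(weights[ch], win)
                   for w, x in zip(w_row, x_row))
    return run_channelwise(fmaps, k, stride, mac)

def max_pool(fmaps, k=2, stride=2):
    """Pooling reuses exactly the same reader; only the arithmetic differs."""
    return run_channelwise(fmaps, k, stride, lambda win, ch: max(max(row) for row in win))

print(depthwise_conv([[[1, 2, 3], [4, 5, 6], [7, 8, 9]]], [[[1, 0], [0, 1]]]))  # [[[6, 8], [12, 14]]]
```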

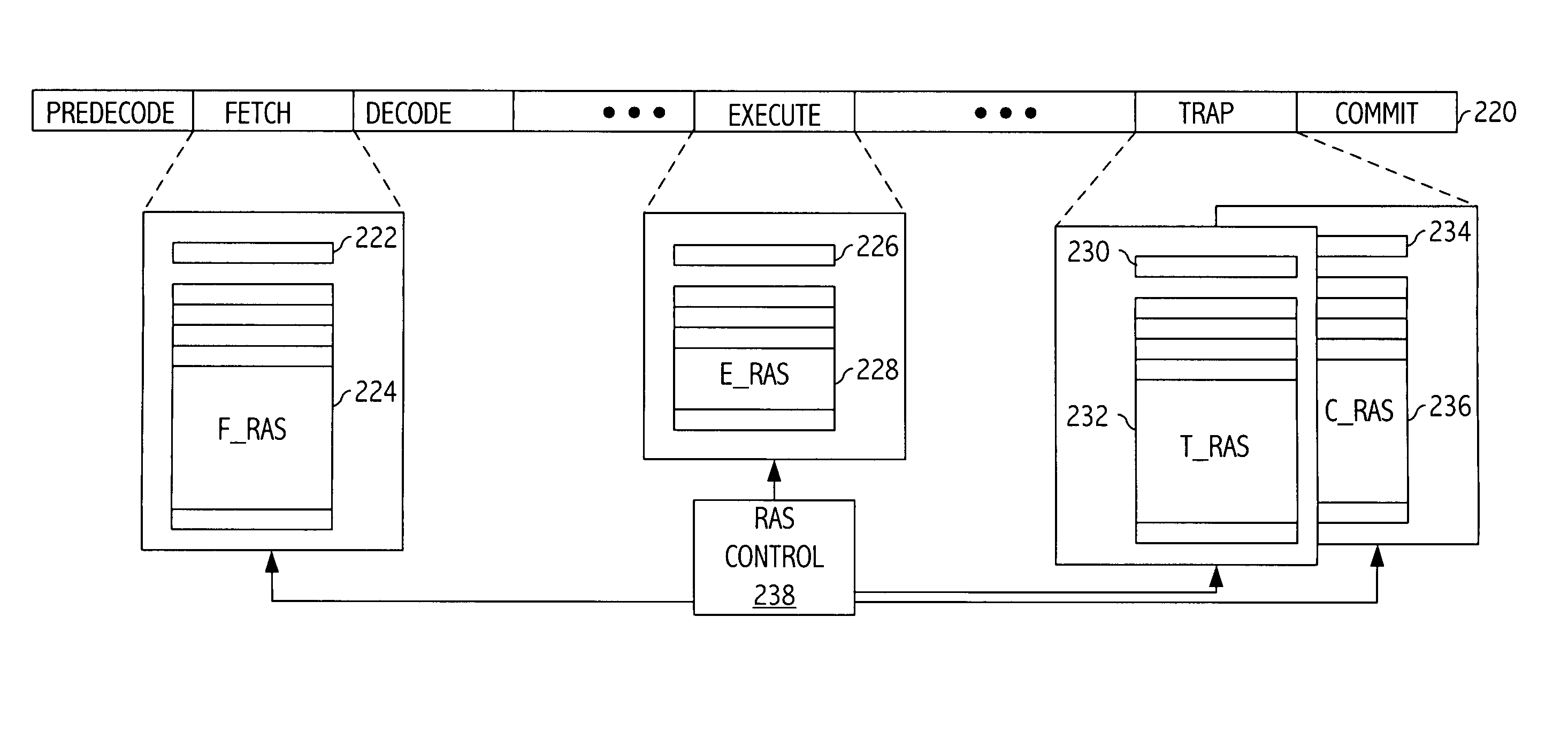

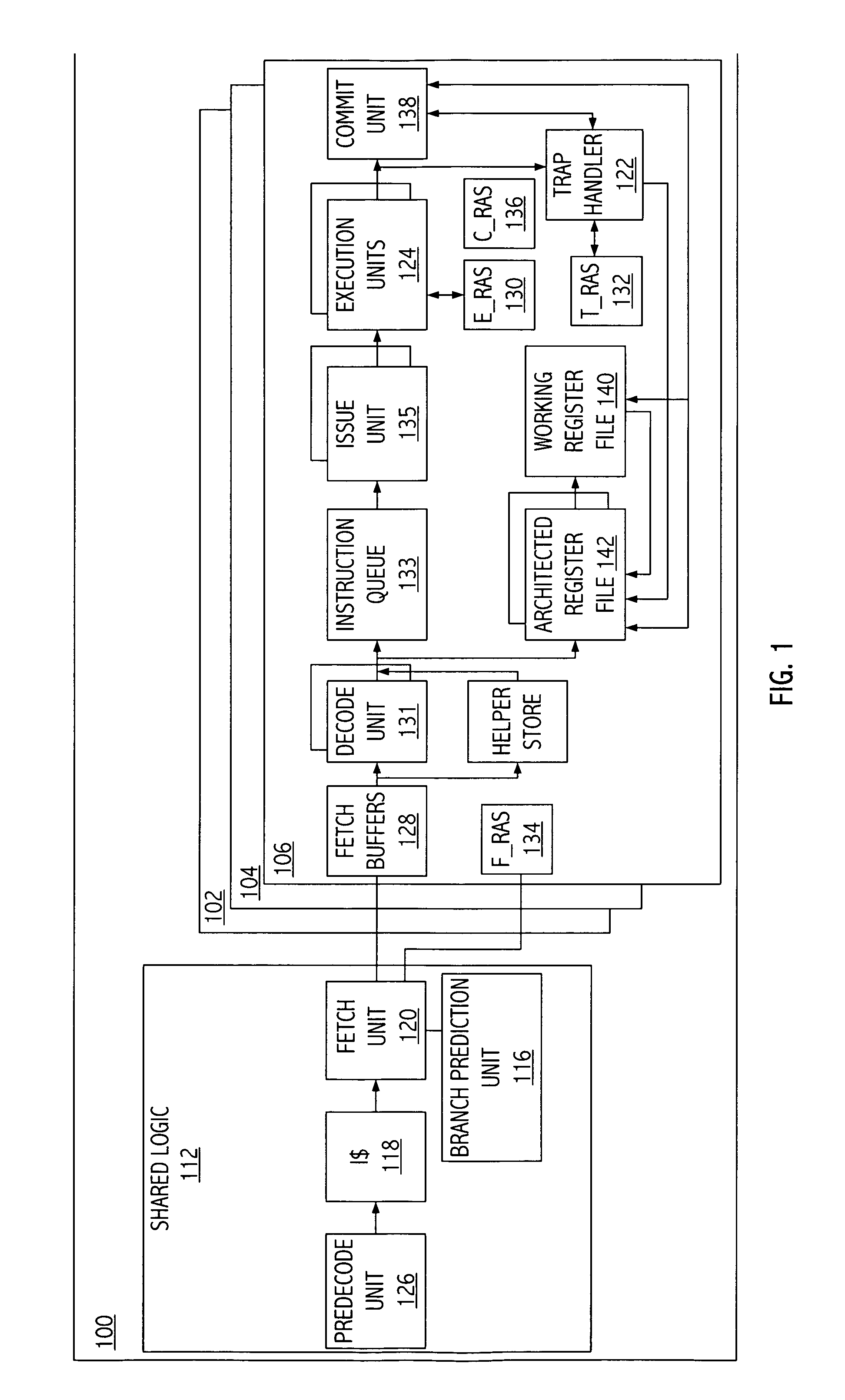

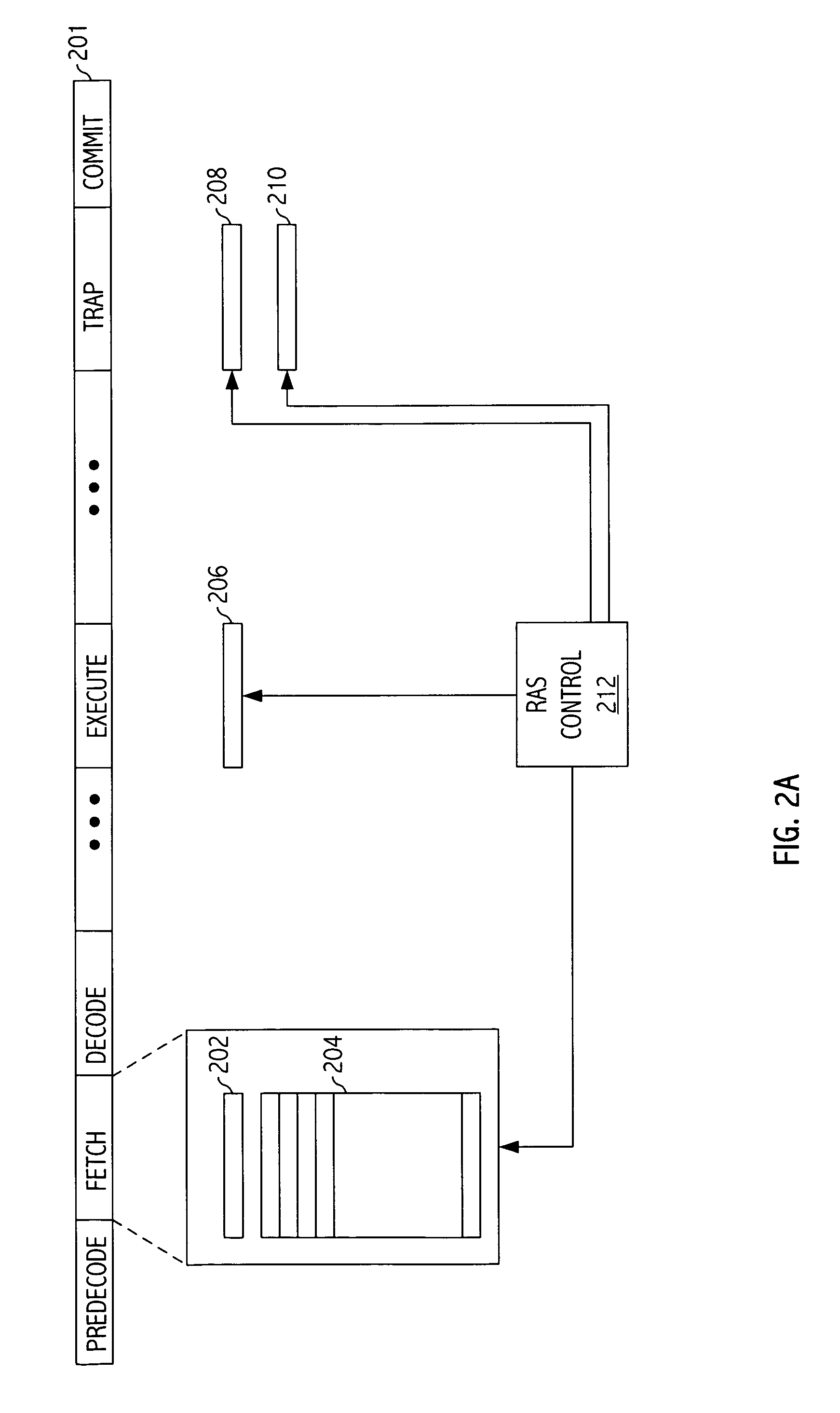

Return address stack recovery in a speculative execution computing apparatus

Status: Active | Publication: US7836290B2 | Tags: Digital computer details, Specific program execution arrangements, Speculative execution, Return address stack

Owner:ORACLE INT CORP

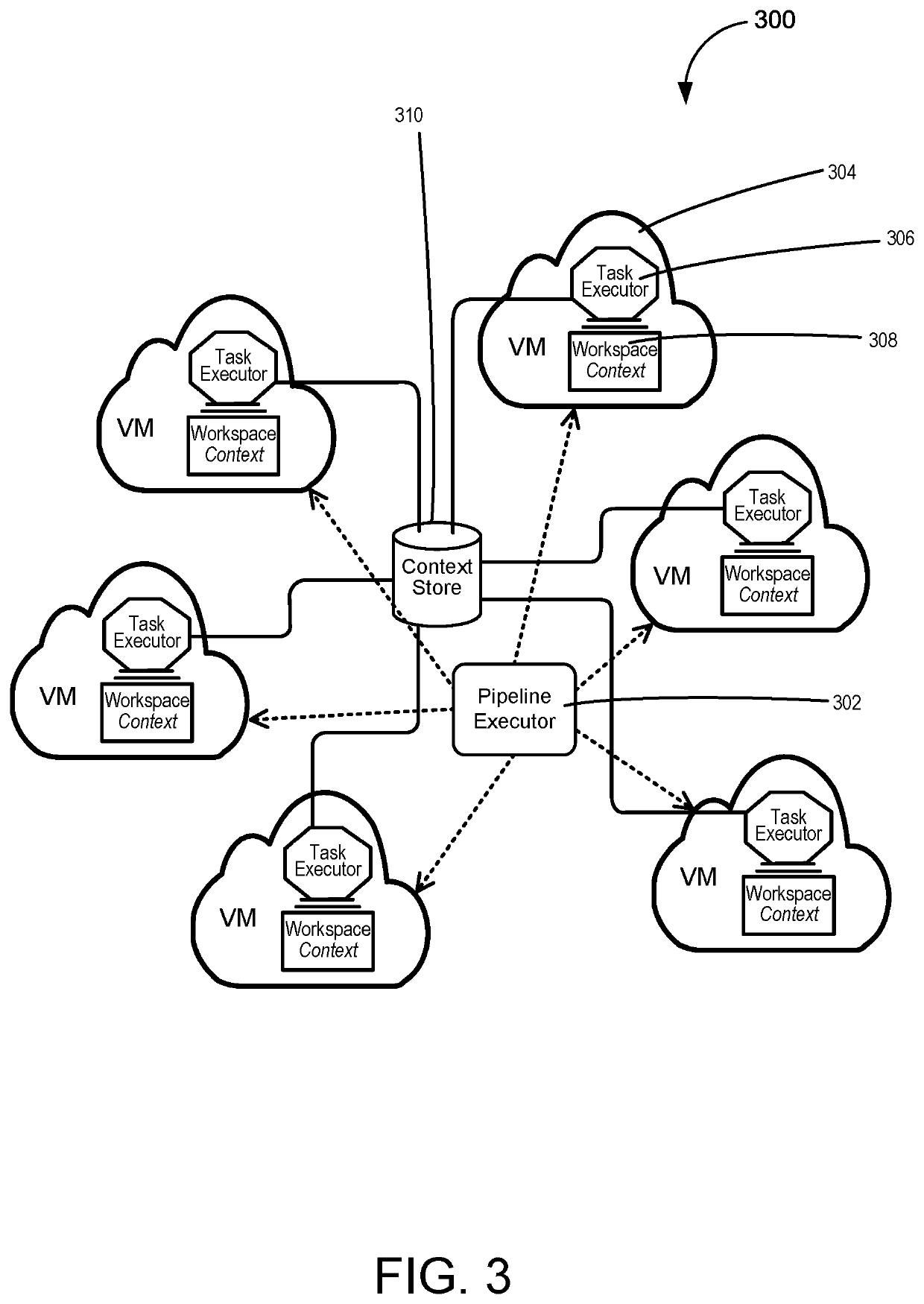

Persistent context for reusable pipeline components

Techniques are provided for managing and isolating build process pipelines. The system can encapsulate all the information needed for each build process step in a build context structure, which may be accessible to that step. Each build process step can receive input from the build context and generate a child build context as output. Accordingly, the build pipeline may be parallelized, duplicated, and/or virtualized securely and automatically, and the build context can carry, organize, and isolate data for each task. The build context from each step can also be stored and inspected later, e.g. for troubleshooting. A computing device can execute a first build step configured to generate a build context that includes a plurality of output objects. The computing device then executes a second build step based on that build context. The context is accessible to the second build step but isolated from other processes.

Owner:ORACLE INT CORP

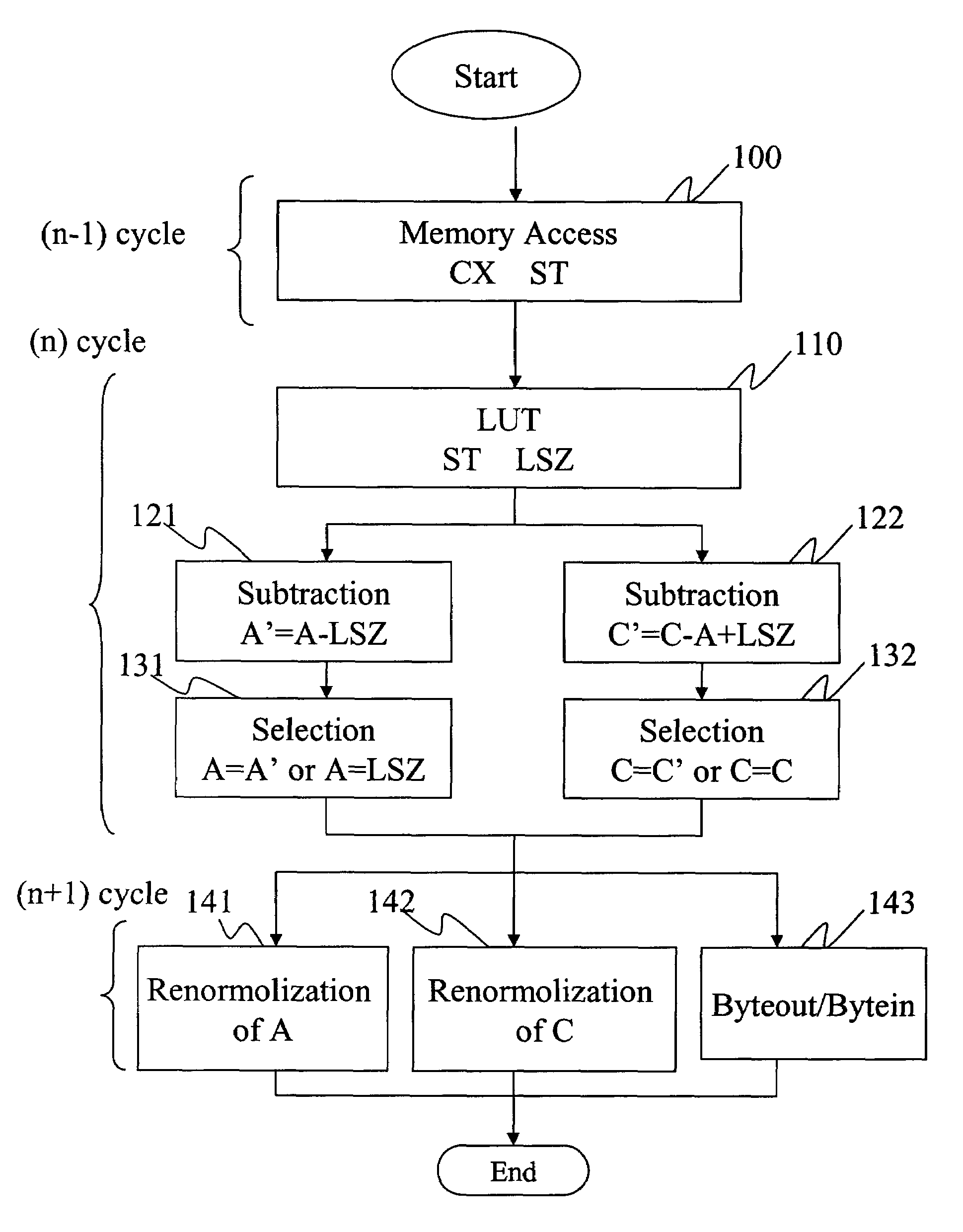

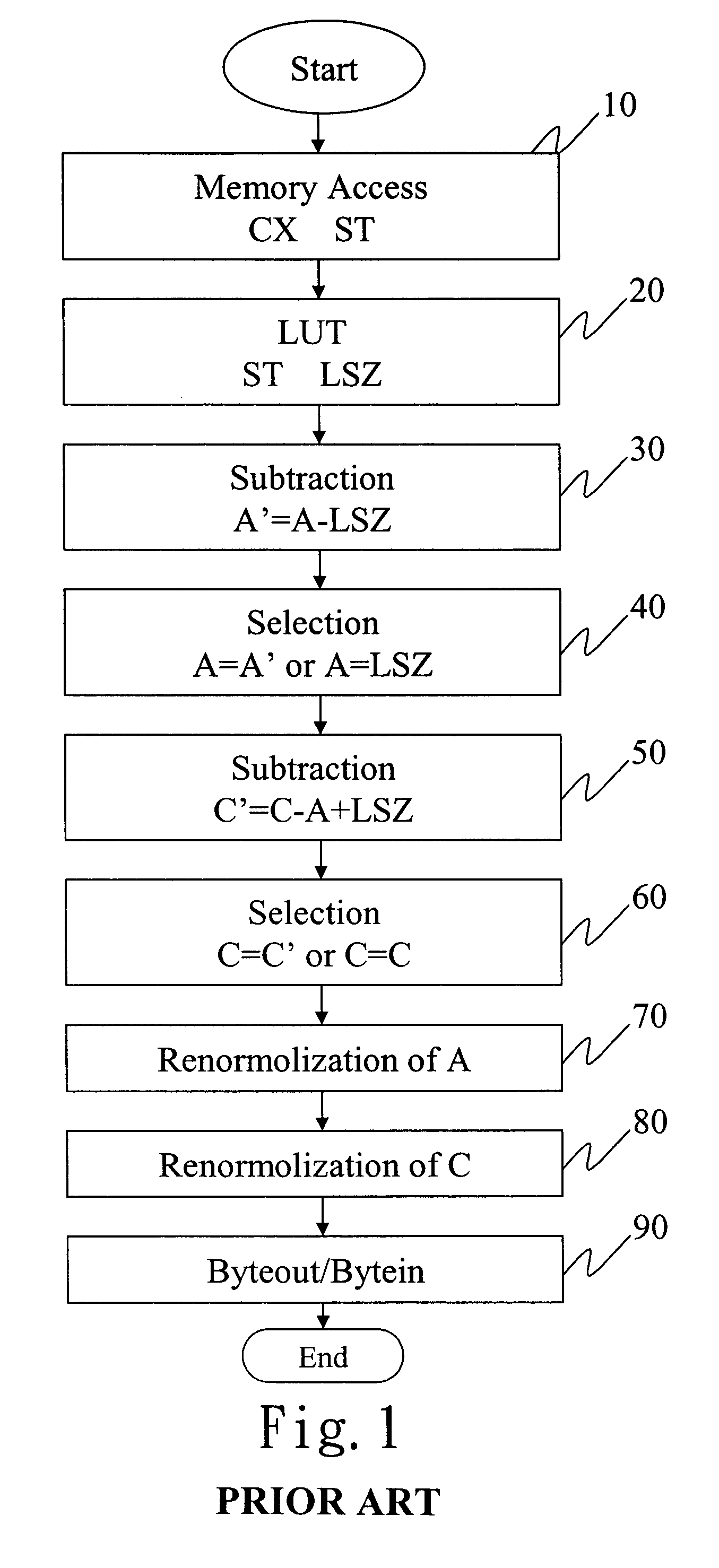

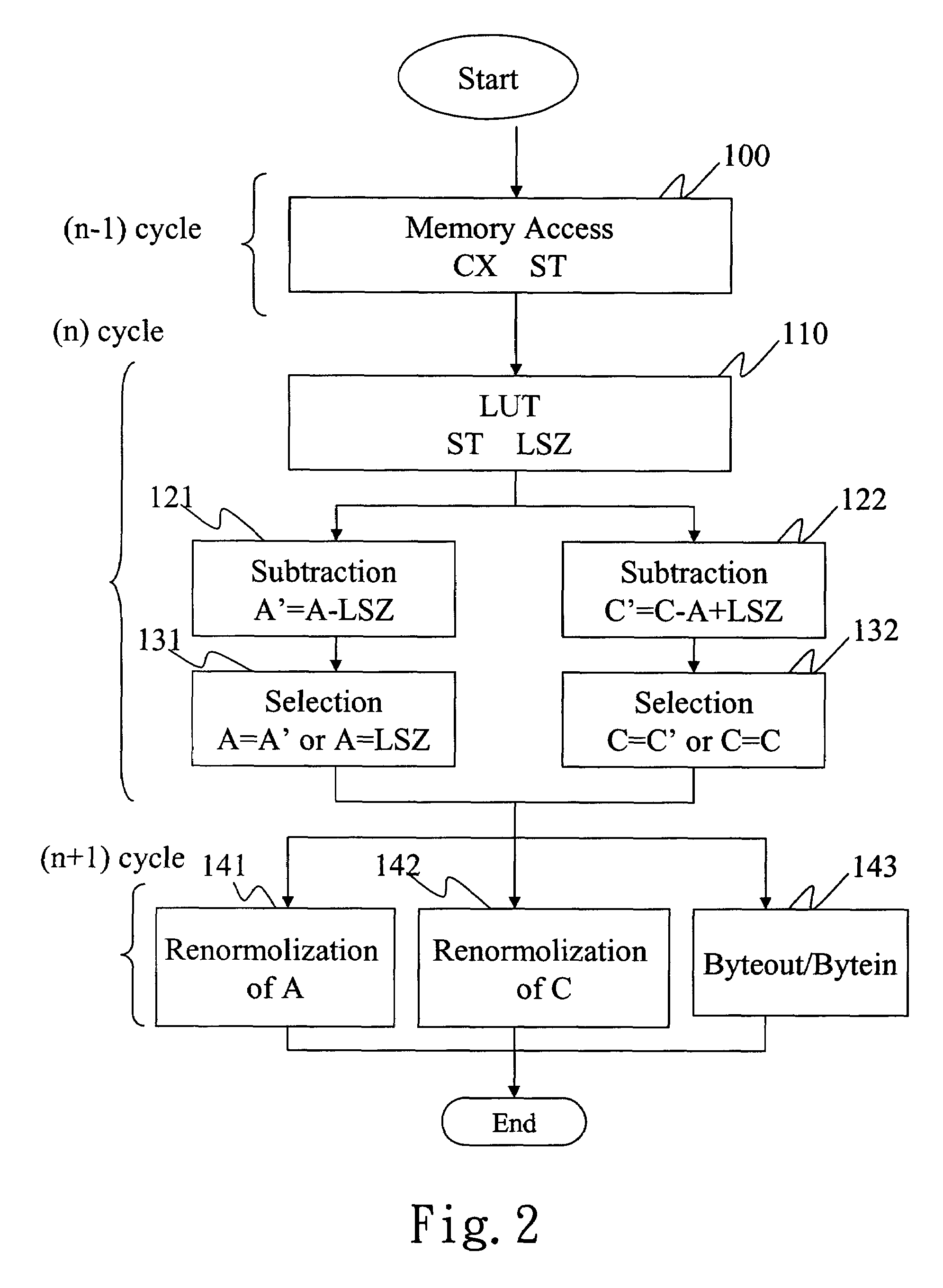

Method of compressing and decompressing images

Status: Inactive | Publication: US7218786B2 | Tags: Improve codec speed, Improve processing speed, Character and pattern recognition, Pictorial communication, Renormalization, Work cycle

A method of compressing and decompressing images is disclosed for compression and decompression chips that implement the JBIG standard. The pipeline for computing a pixel is divided into three stages: memory access; numerical operations; and renormalization with byte-out/byte-in. Each stage takes one work cycle, so three pixels are processed in parallel at any time. By comparison, the work cycle of the prior art without this pipeline improvement is longer. The method effectively shortens the work cycle of each image data process, increasing the speed of image compression and decompression.

Owner:PRIMAX ELECTRONICS LTD
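The claim that three pixels are in flight at once follows from the stage timing. The sketch below assumes, as the abstract states, that each of the three stages takes exactly one work cycle, and it ignores any data dependencies between consecutive pixels. It lists which pixel occupies each stage in every cycle; `schedule` is a hypothetical helper.

```python
STAGES = ["memory access", "numerical operations", "renormalization + byteout/bytein"]

def schedule(n_pixels, n_stages=len(STAGES)):
    """Per work cycle, the pixel index held by each stage (None = idle).
    Pixel p enters stage s in cycle p + s."""
    total_cycles = n_pixels + n_stages - 1
    return [[c - s if 0 <= c - s < n_pixels else None for s in range(n_stages)]
            for c in range(total_cycles)]

for cycle, occupancy in enumerate(schedule(5)):
    print(cycle, occupancy)
```

Five pixels finish in 7 cycles instead of the 15 an unpipelined, three-cycle-per-pixel design would need. From cycle 2 to cycle 4, all three stages hold different pixels.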

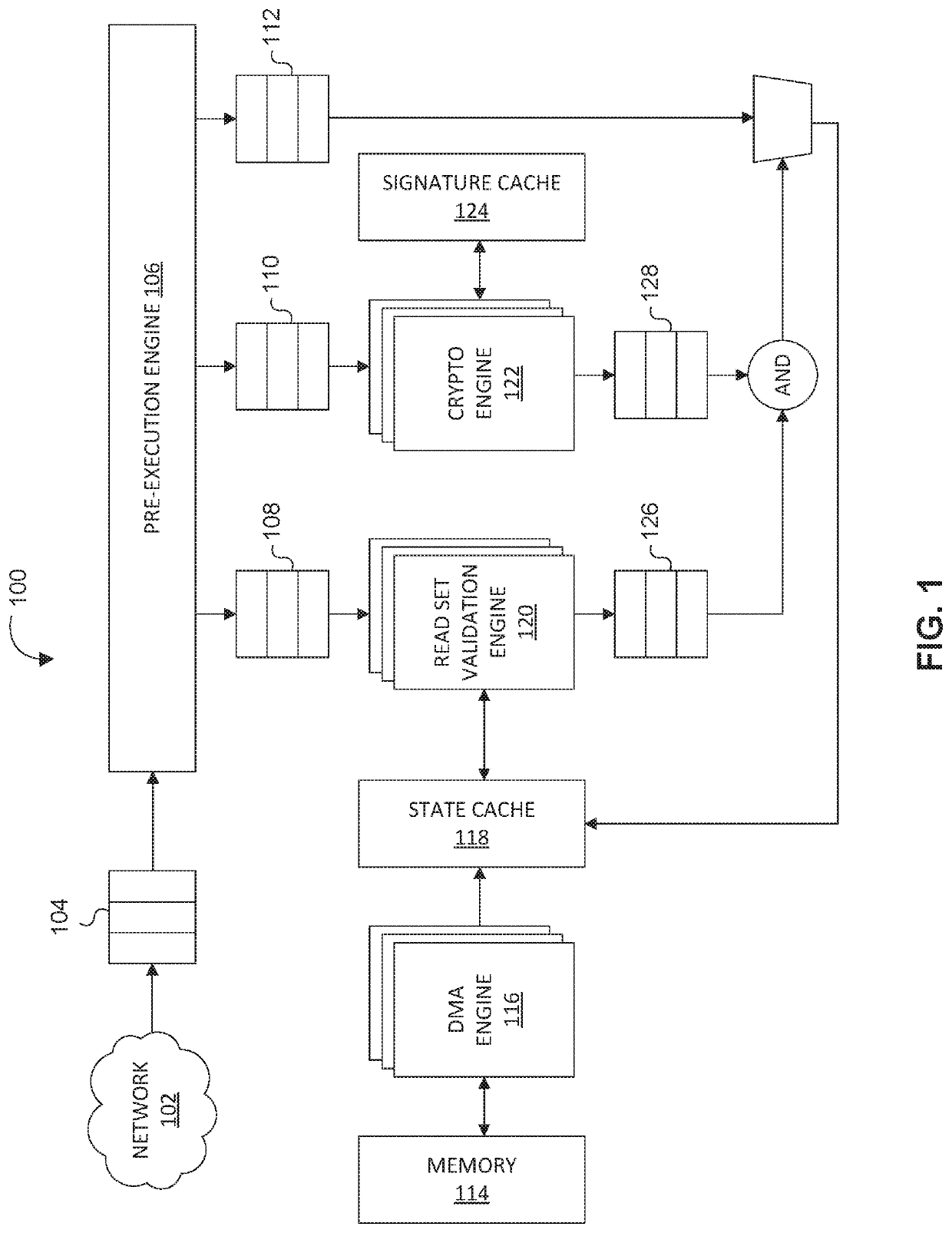

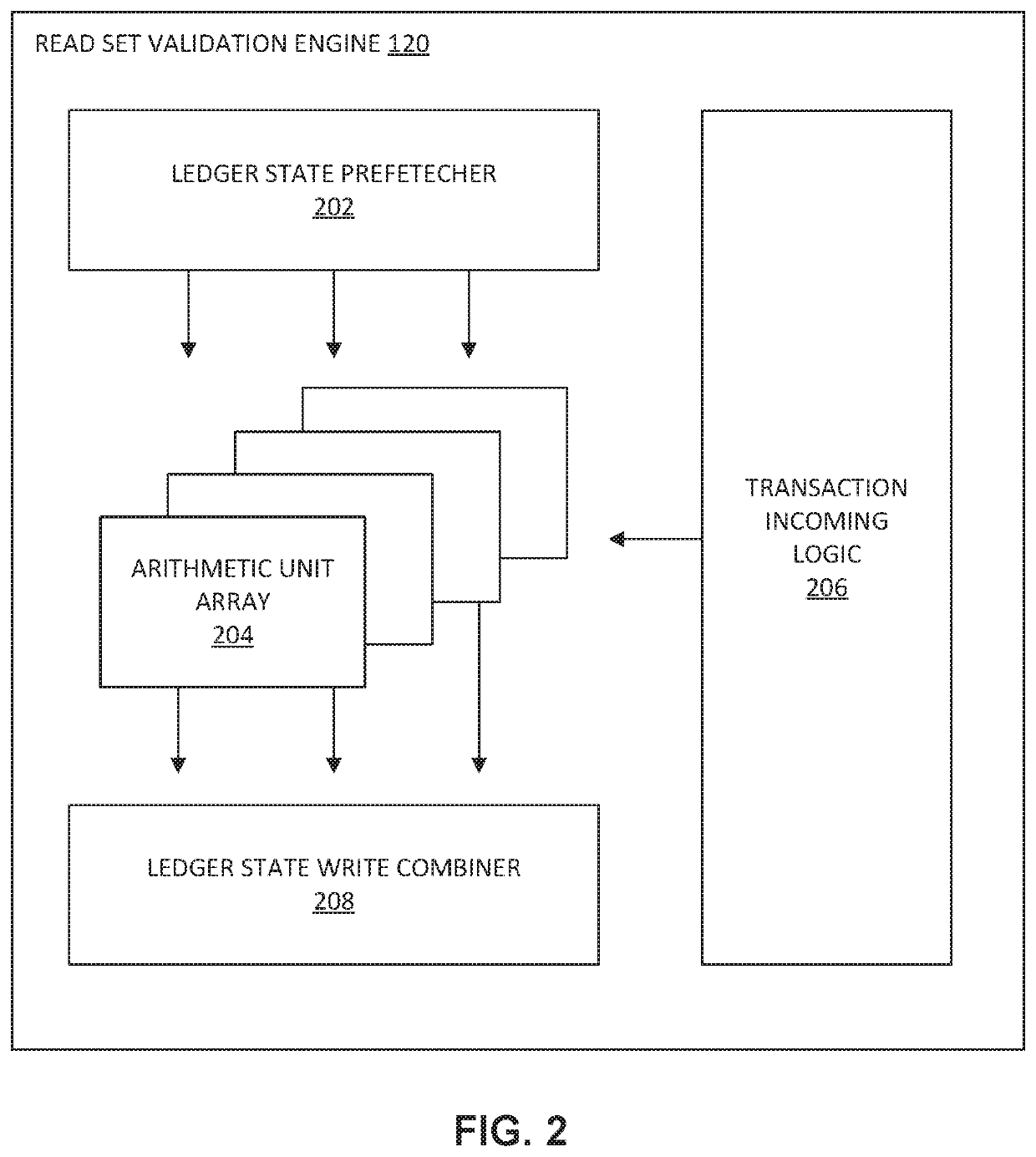

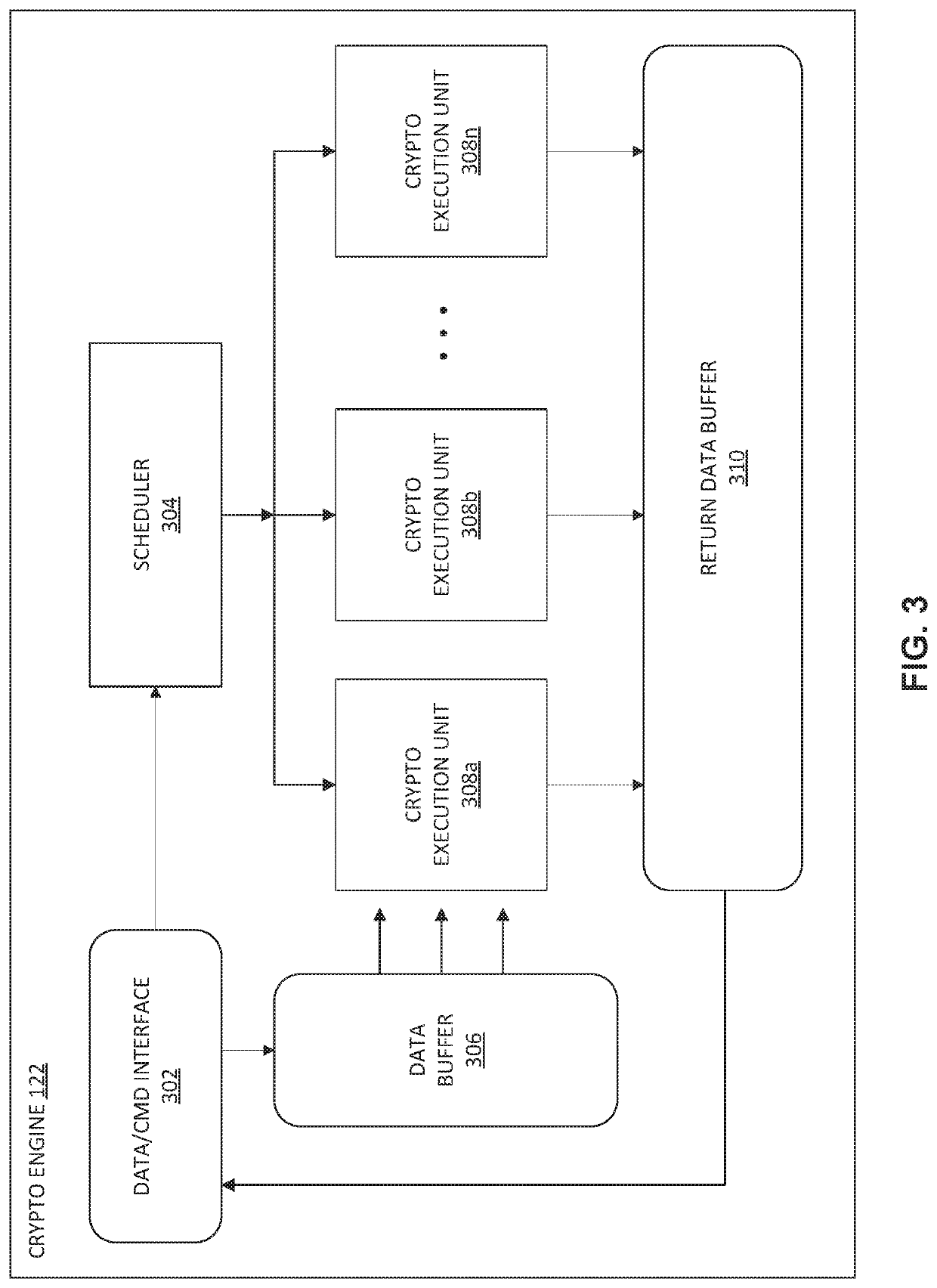

Systems and methods for performing programmable smart contract execution

Status: Inactive | Publication: US20200210402A1 | Tags: Reduce attack, Reduce risk, Database updating, User identity/authority verification, Computer architecture, Hardware architecture

Systems and methods are described herein relating to a fixed-pipeline hardware architecture configured to execute smart contracts in an isolated environment separate from a computing processing unit. Executing a smart contract may comprise performing a set of distributed ledger operations to modify a ledger associated with a decentralized application. The fixed-pipeline hardware architecture may comprise and/or be incorporated within a self-contained hardware device. This device comprises electronic circuitry configured to be communicatively coupled or physically attached to a component of a computer system. The hardware device may be specifically programmed to execute particular smart contracts, or types of smart contracts, that administer different decentralized applications and/or one or more aspects of them. It may also be programmed to perform the distributed ledger operations associated with those contracts.

Owner:ACCELOR LTD

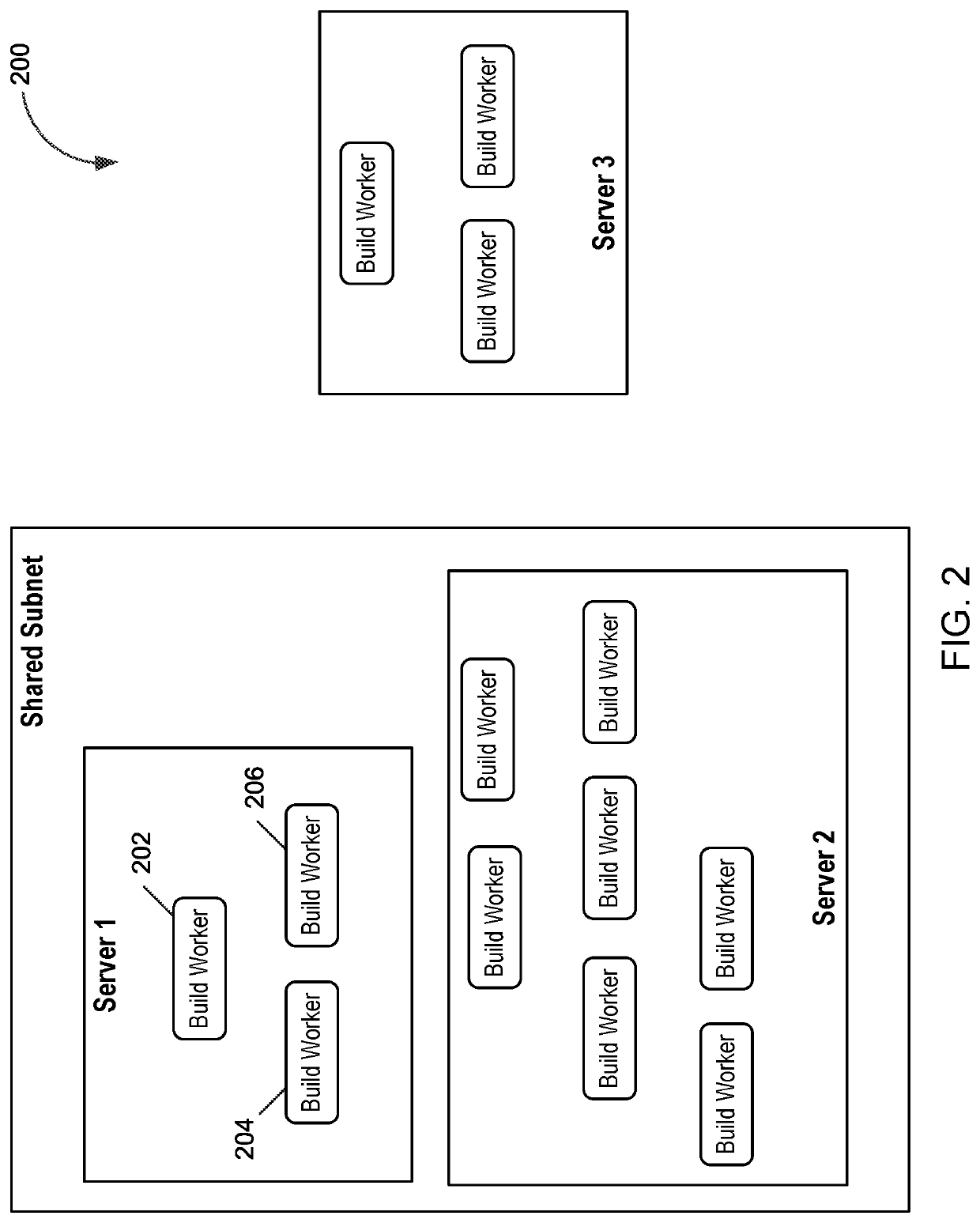

Auto-adjusting worker configuration for grid-based multi-stage, multi-worker computations

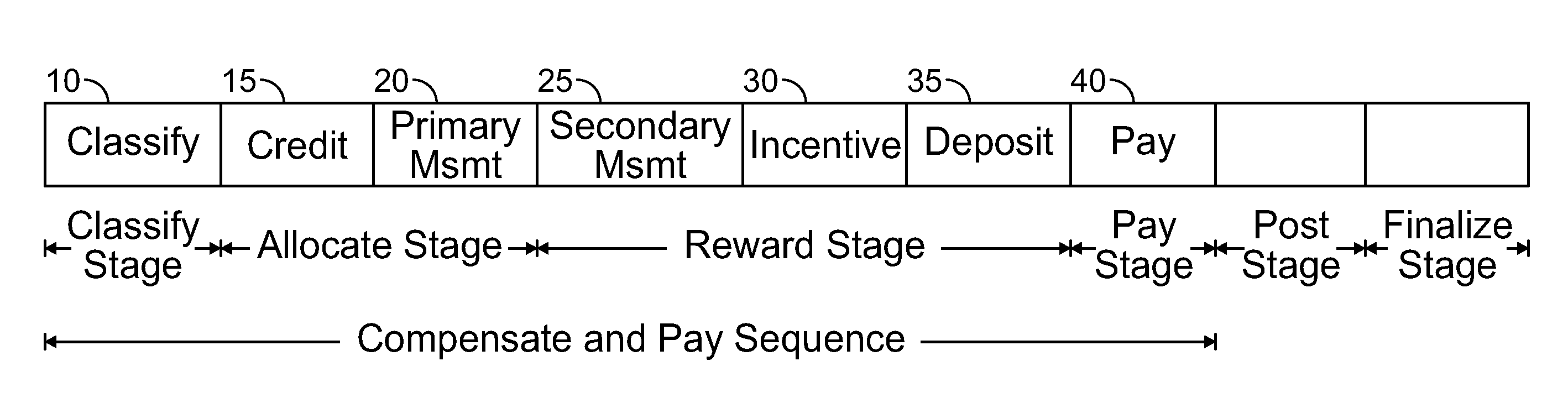

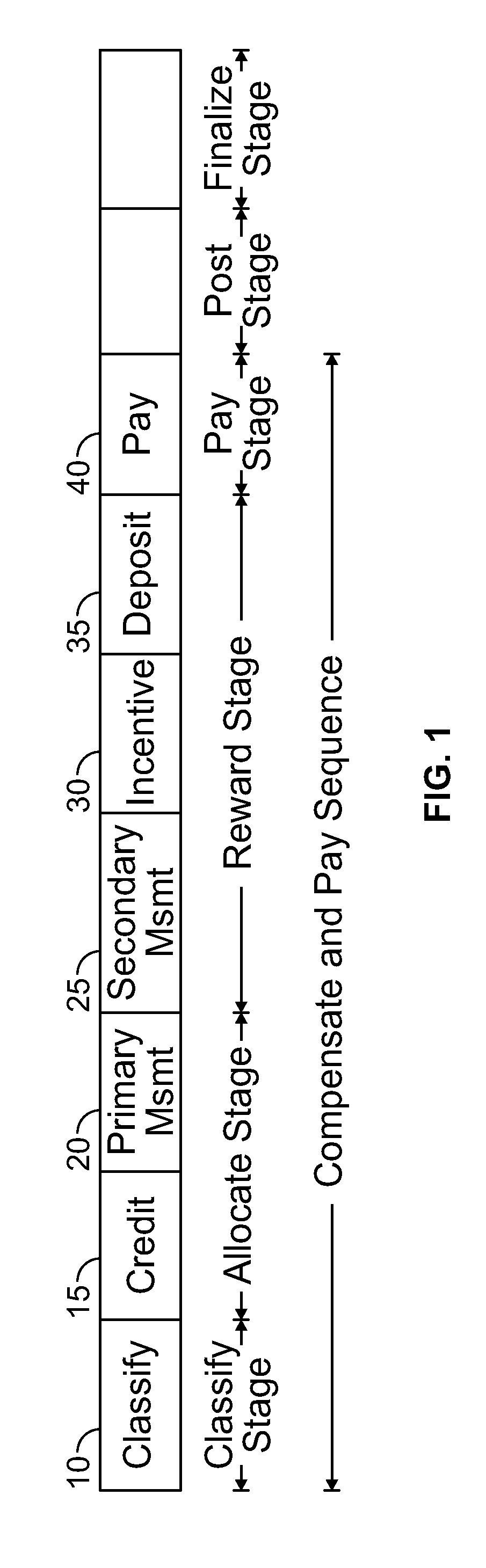

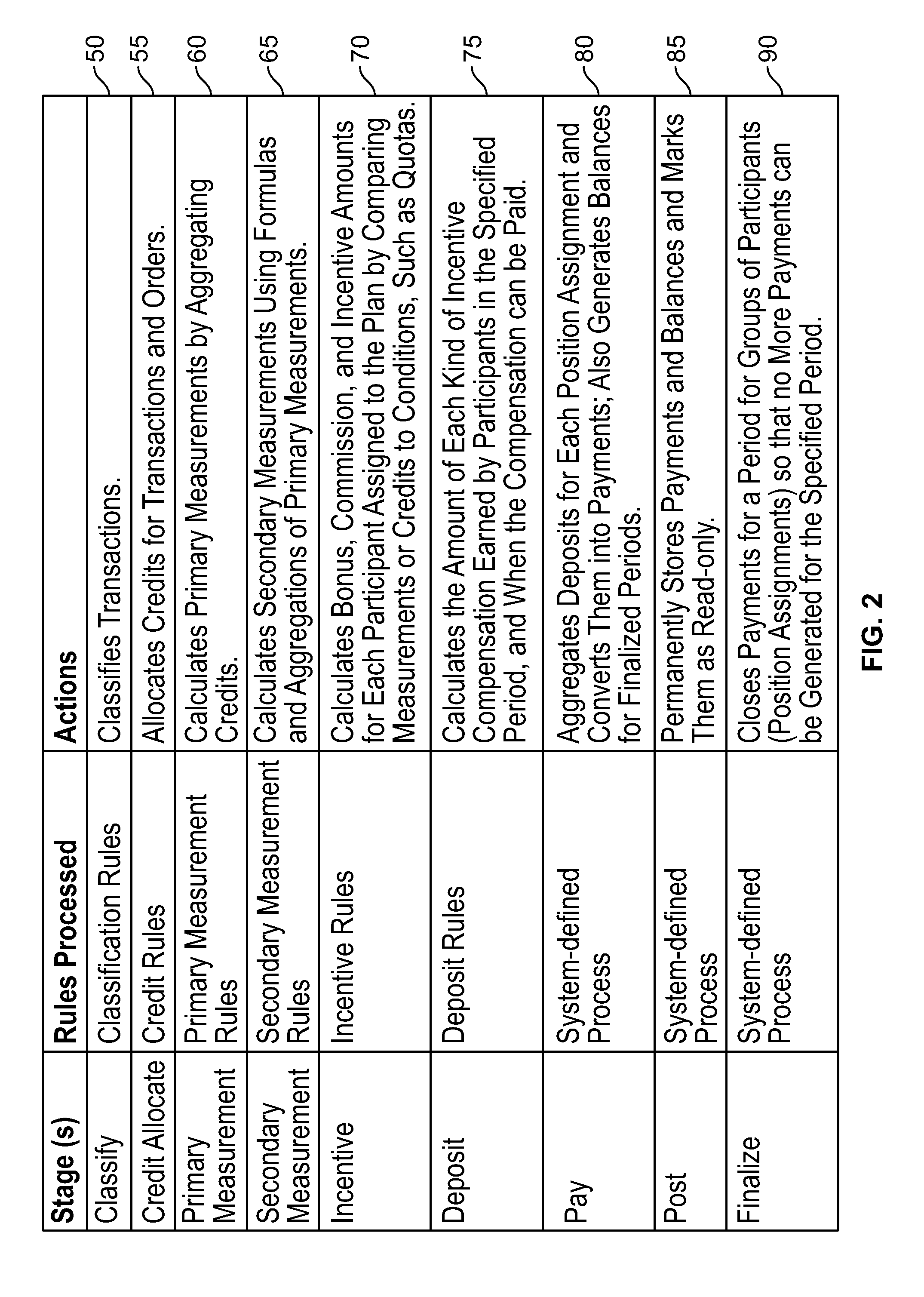

Status: Inactive | Publication: US20130238383A1 | Tags: Operation efficiency can be improved, Digital computer details, Resources, Data pack, Theoretical computer science

A method of improving the operational efficiency of segments of a data pipeline in a cloud-based transactional processing system with multiple cloud-based resources. The data processed by the pipeline comprises compensation and payment type data. The method has three steps:

1. Virtually estimate the processing runtime of computations that compute a value from transactions, using the potentially available resources of the system to process segments of the data pipeline.
2. Determine the actual processing runtime of computations that compute a value from actual transactions, using the resources actually available to process segments of the data pipeline.
3. Reconcile the difference between the estimated runtime from step 1 and the actual runtime from step 2 by changing material parameters, including at least the transaction volume and the available resources at particular segments of the pipeline, to obtain an optimal adjusted processing runtime.

Owner:CALLIDUS SOFTWARE

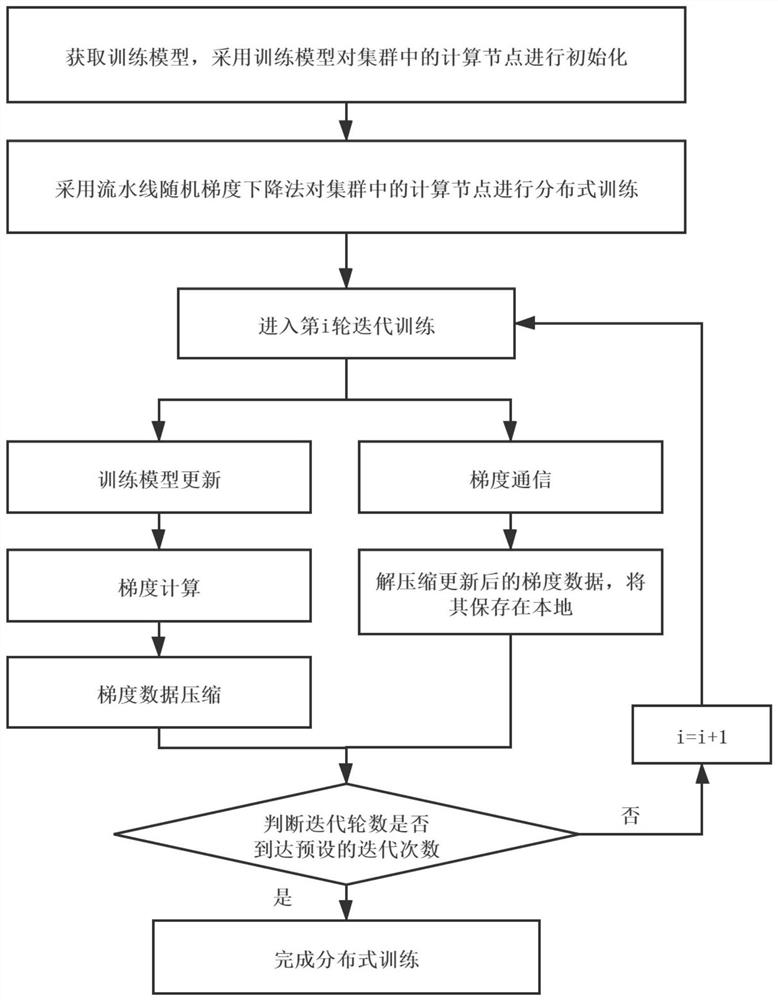

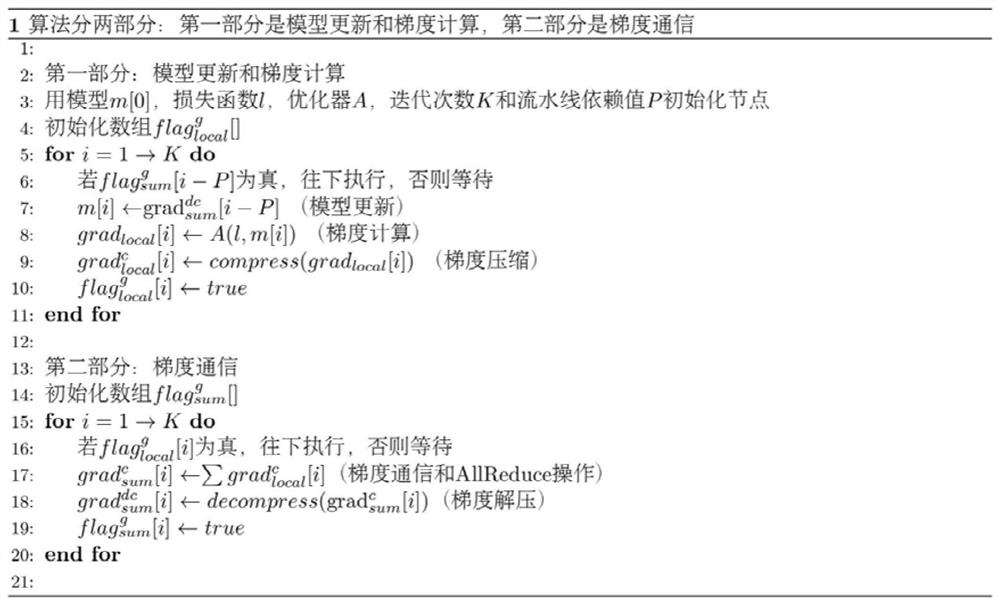

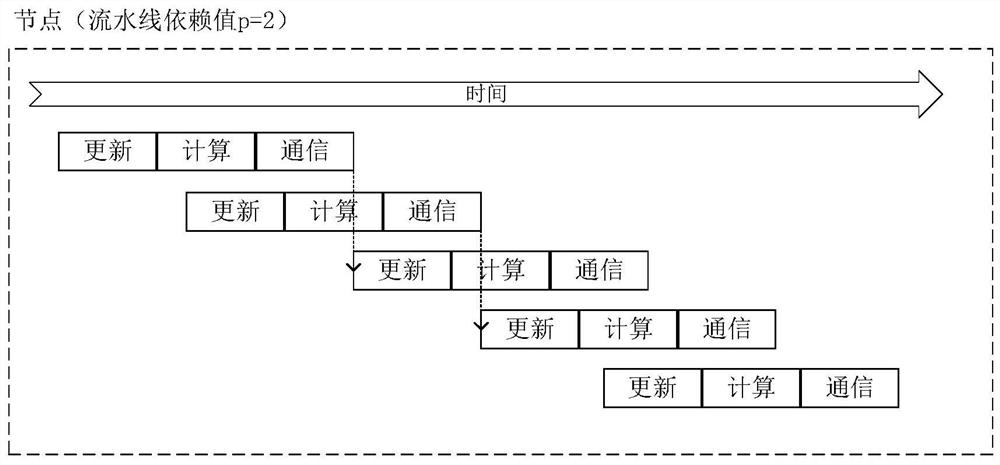

Distributed deep learning method based on pipeline annular parameter communication

Status: Pending | Publication: CN112862088A | Tags: Solve congestion, Reduce time consumption, Interprogram communication, Concurrent instruction execution, Stochastic gradient descent, Algorithm

To overcome slow cluster training and high training-time overhead, the invention provides a distributed deep learning method based on pipelined ring parameter communication. The method comprises the following steps:

1. Obtain a training model and use it to initialize the computing nodes in a cluster.
2. Train the computing nodes in a distributed manner using a pipelined stochastic gradient descent method. Model updates and gradient computation are executed while gradient communication proceeds in parallel.
3. After a node completes the i-th round of gradient computation locally, compress the gradient data, start a communication thread to perform a ring AllReduce operation, and simultaneously start the (i + 1)-th round of iterative training. Repeat until training is complete.

Ring communication through the AllReduce algorithm avoids the communication congestion at server nodes that occurs in a parameter-server framework. Overlapping local computation and communication in a pipeline further reduces time consumption.

Owner:SUN YAT SEN UNIV
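Ring AllReduce, as named in this abstract, is a standard algorithm. The sequential simulation below shows its two phases. The node count, chunking, and function name are illustrative, and the patent's gradient compression and its overlap with round i + 1 are not modeled. Each node's vector is split into n chunks, and in every step each node sends one chunk to its right-hand neighbour.

```python
def ring_allreduce(buffers):
    """Sum equal-length vectors across n simulated nodes arranged in a ring."""
    n = len(buffers)
    data = [list(b) for b in buffers]  # one local copy per node
    size = len(data[0])
    bounds = [(k * size // n, (k + 1) * size // n) for k in range(n)]

    # Phase 1, reduce-scatter: after n-1 steps node i holds the full sum of chunk (i+1) % n.
    for step in range(n - 1):
        sends = [(i, (i - step) % n) for i in range(n)]
        payloads = [data[i][slice(*bounds[c])] for i, c in sends]  # snapshot: sends are simultaneous
        for (i, c), payload in zip(sends, payloads):
            dst, (lo, hi) = (i + 1) % n, bounds[c]
            data[dst][lo:hi] = [a + b for a, b in zip(data[dst][lo:hi], payload)]

    # Phase 2, all-gather: reduced chunks circulate until every node has all of them.
    for step in range(n - 1):
        sends = [(i, (i + 1 - step) % n) for i in range(n)]
        payloads = [data[i][slice(*bounds[c])] for i, c in sends]
        for (i, c), payload in zip(sends, payloads):
            lo, hi = bounds[c]
            data[(i + 1) % n][lo:hi] = payload
    return data

print(ring_allreduce([[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]))
# [[111, 222, 333, 444], [111, 222, 333, 444], [111, 222, 333, 444]]
```

Every node sends and receives exactly one chunk per step, so no single node becomes a hotspot. This is the property the abstract contrasts with server-node congestion in a parameter-server design.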

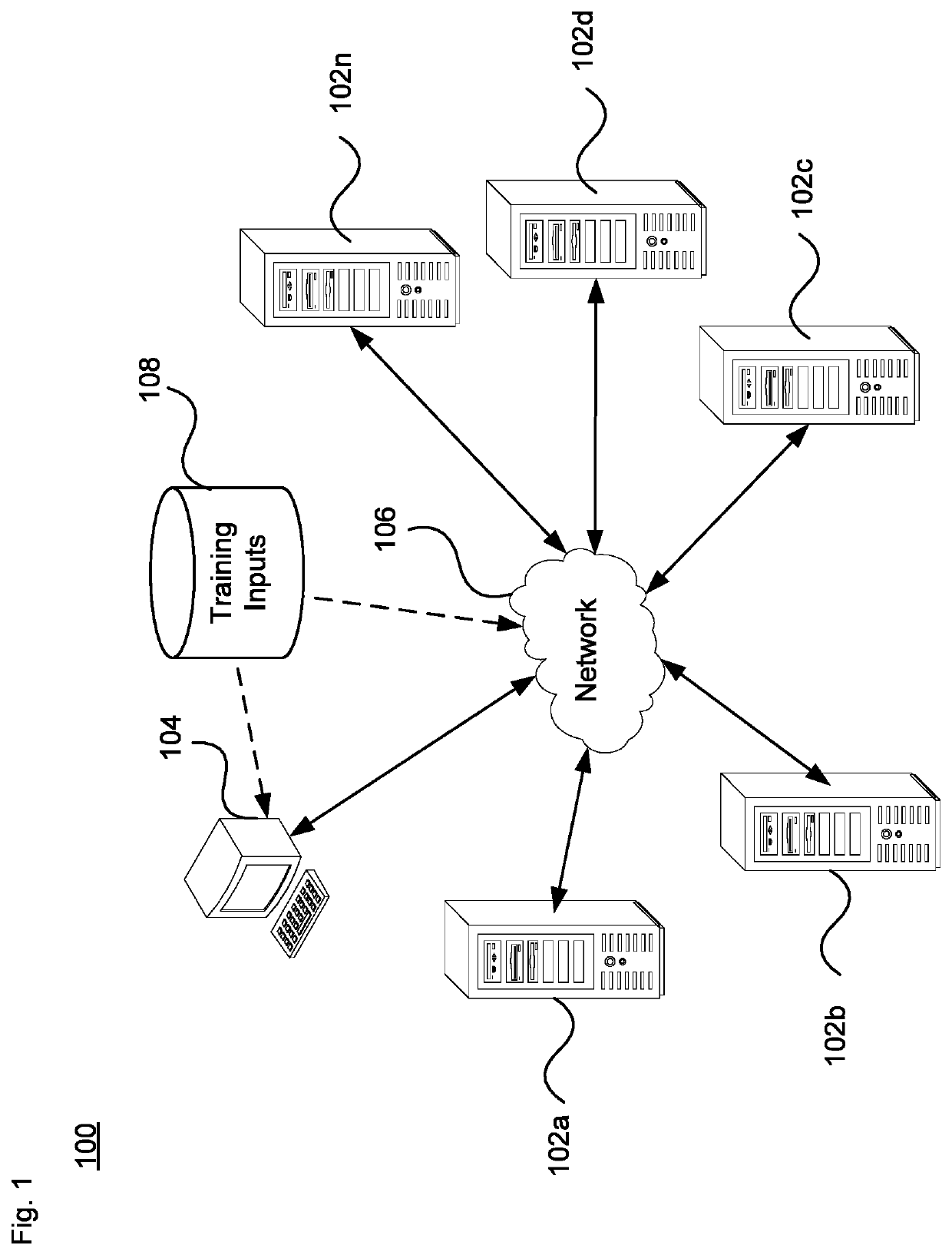

Data set generation for testing of machine learning pipelines

Status: Active | Publication: US20200234177A1 | Tags: Avoid wasting, Accurate data, Character and pattern recognition, Machine learning, Data set, Theoretical computer science

A system may include memory containing: (i) a master data set representable in columns and rows, and (ii) a query expression. The system may include a software application configured to apply a machine learning (ML) pipeline to an input data set. The system may include a computing device configured to: obtain the master data set and the query expression; apply the query expression to the master data set to generate a test data set, where applying the query expression comprises, based on content of the query expression, generating the test data set to have one or more columns or one or more rows fewer than the master data set; apply the ML pipeline to the test data set, where applying the ML pipeline results in either generation of a test ML model from the test data set or indication of an error in the test data set; and delete the test data set from the memory.

Owner:SERVICENOW INC
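The flow in this abstract can be sketched in a few lines: query the master data, run the pipeline on the smaller test set, report either a model or an error, then delete the test set. In this sketch the query is assumed to be a row predicate plus a column list, and a toy mean estimator stands in for the ML pipeline. All names are hypothetical.

```python
def make_test_set(master, columns=None, row_filter=None):
    """Apply a query to the master data set: keep matching rows, then project columns.
    The query form (a row predicate plus a column list) is a hypothetical stand-in."""
    rows = [dict(r) for r in master if row_filter is None or row_filter(r)]
    if columns is not None:
        rows = [{c: r[c] for c in columns} for r in rows]
    return rows

def try_pipeline(master, pipeline, **query):
    """Build a test set, run the ML pipeline on it, and report a model or an error."""
    test_set = make_test_set(master, **query)
    try:
        return ("model", pipeline(test_set))
    except ValueError as err:  # the pipeline rejects the test data
        return ("error", str(err))
    finally:
        test_set.clear()  # delete the test rows; the master data set is untouched

def mean_pipeline(rows):
    """Toy 'pipeline' that fits the mean of column y."""
    if not rows:
        raise ValueError("empty test data set")
    return sum(r["y"] for r in rows) / len(rows)

master = [{"x": 1, "y": 2.0}, {"x": 2, "y": 4.0}, {"x": 3, "y": 9.0}]
print(try_pipeline(master, mean_pipeline, columns=["y"], row_filter=lambda r: r["x"] < 3))
# ('model', 3.0)
```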

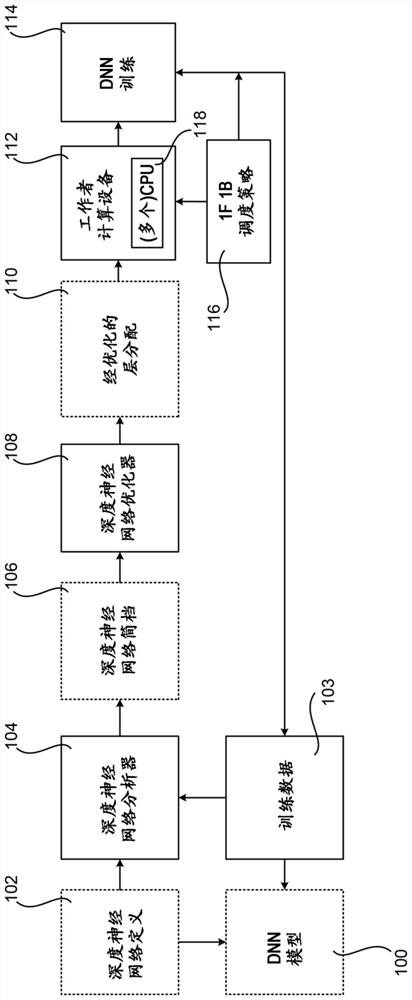

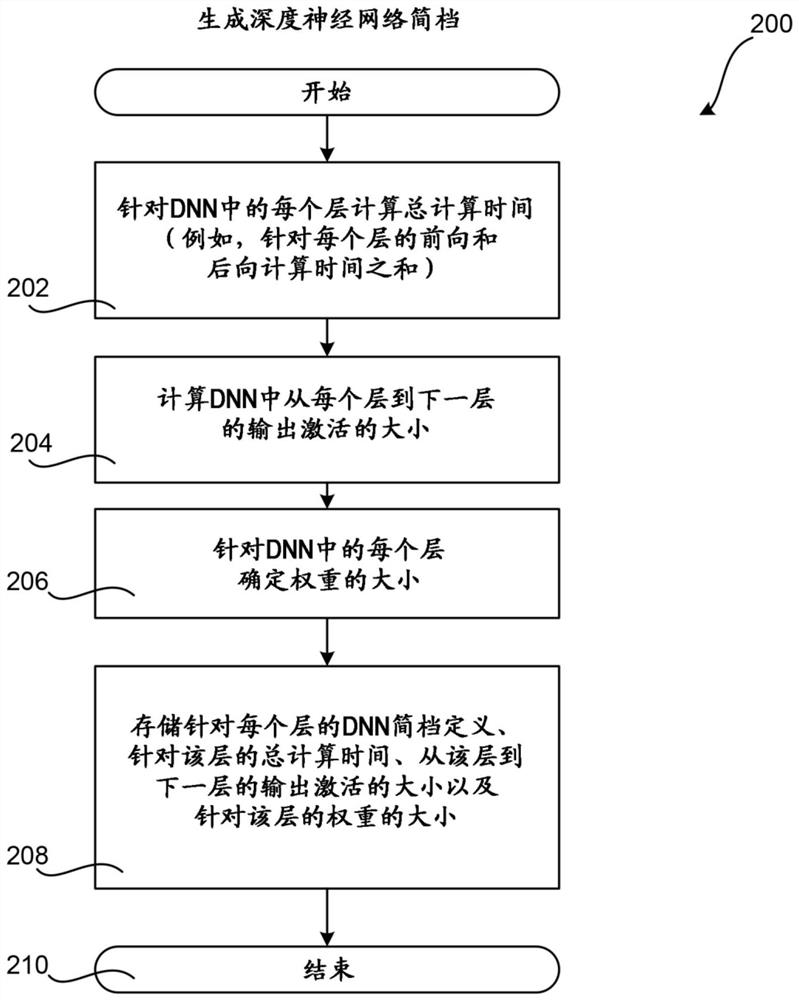

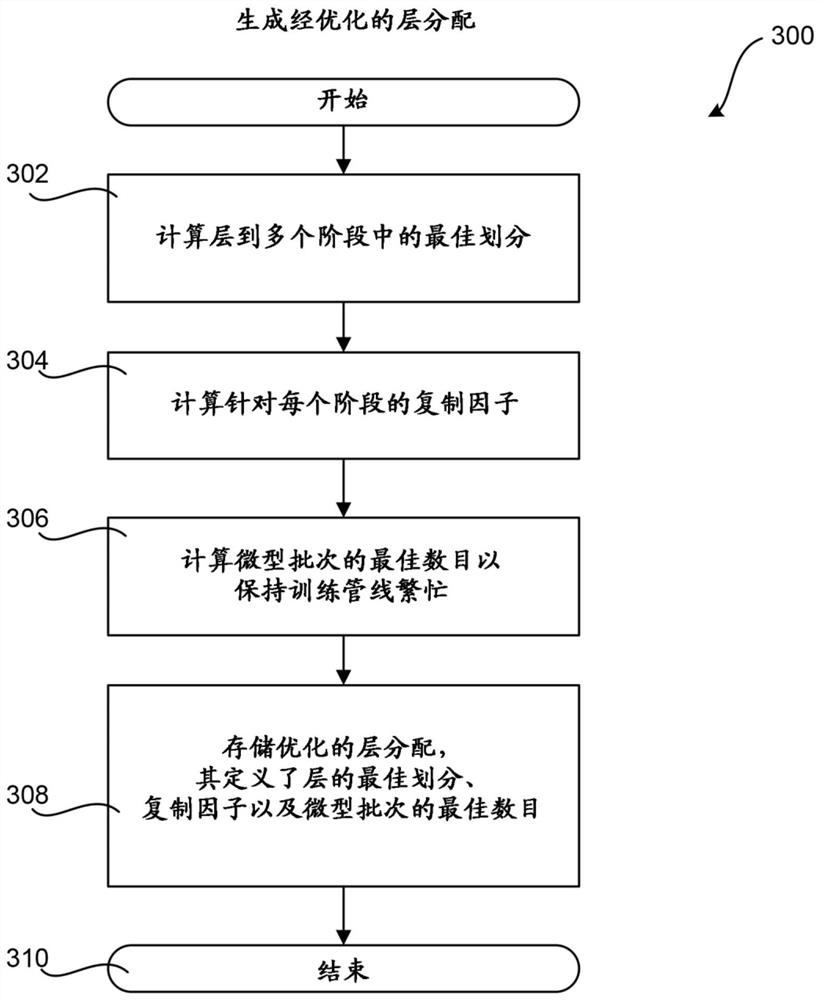

High-performance pipeline parallel deep neural network training

Status: Pending | Publication: CN112154462A | Tags: Guarantee productivity, Reduce overhead, Neural architectures, Physical realisation, Engineering, Scheduling (computing)

Layers of a deep neural network (DNN) are partitioned into stages using a profile of the DNN. Each stage includes one or more layers of the DNN. The partitioning can be optimized in various ways: to minimize training time, to minimize data communication between the worker computing devices used to train the DNN, or to ensure that the worker computing devices each perform approximately the same amount of the training work. The stages are assigned to the worker computing devices. The workers process batches of training data under a scheduling policy that makes them alternate between forward processing and backward processing of batches of the DNN training data. The stages can be configured for model-parallel or data-parallel processing.

Owner:MICROSOFT TECH LICENSING LLC
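One of the objectives listed above, giving each worker roughly equal work, can be approximated with the classic contiguous linear-partition dynamic program. The sketch assumes per-layer costs taken from a profile are additive, and it ignores communication cost. It is not necessarily the partitioner this patent uses, and `partition_layers` is a hypothetical name.

```python
from functools import lru_cache

def partition_layers(costs, n_stages):
    """Split per-layer costs into n_stages contiguous stages minimizing the slowest stage.
    Returns (bottleneck_cost, stages as lists of layer indices)."""
    n = len(costs)
    assert 1 <= n_stages <= n
    prefix = [0]
    for c in costs:
        prefix.append(prefix[-1] + c)

    @lru_cache(maxsize=None)
    def best(i, k):
        """(minimal bottleneck, end of first stage) for layers i..n-1 in exactly k stages."""
        if k == 1:
            return prefix[n] - prefix[i], n
        options = []
        for j in range(i + 1, n - k + 2):  # first stage is layers i..j-1; leave >= k-1 layers
            rest, _ = best(j, k - 1)
            options.append((max(prefix[j] - prefix[i], rest), j))
        return min(options)

    stages, i = [], 0
    for k in range(n_stages, 0, -1):  # walk the recorded cuts to recover the stages
        _, j = best(i, k)
        stages.append(list(range(i, j)))
        i = j
    return best(0, n_stages)[0], stages

print(partition_layers([4, 2, 3, 7, 1, 1], 3))  # (9, [[0], [1, 2], [3, 4, 5]])
```

The layer costs in the example are hypothetical. With three workers, no contiguous split can do better than a 9-unit bottleneck, because the 7-cost layer must share a stage with neighbours.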

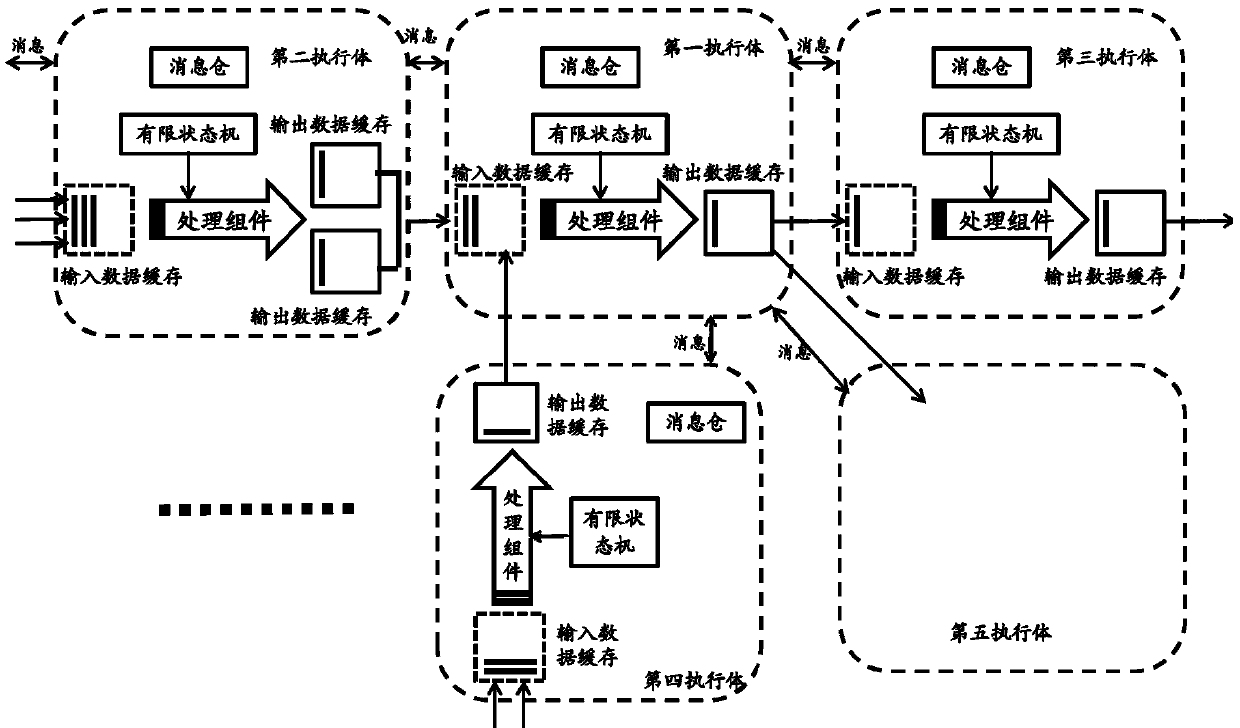

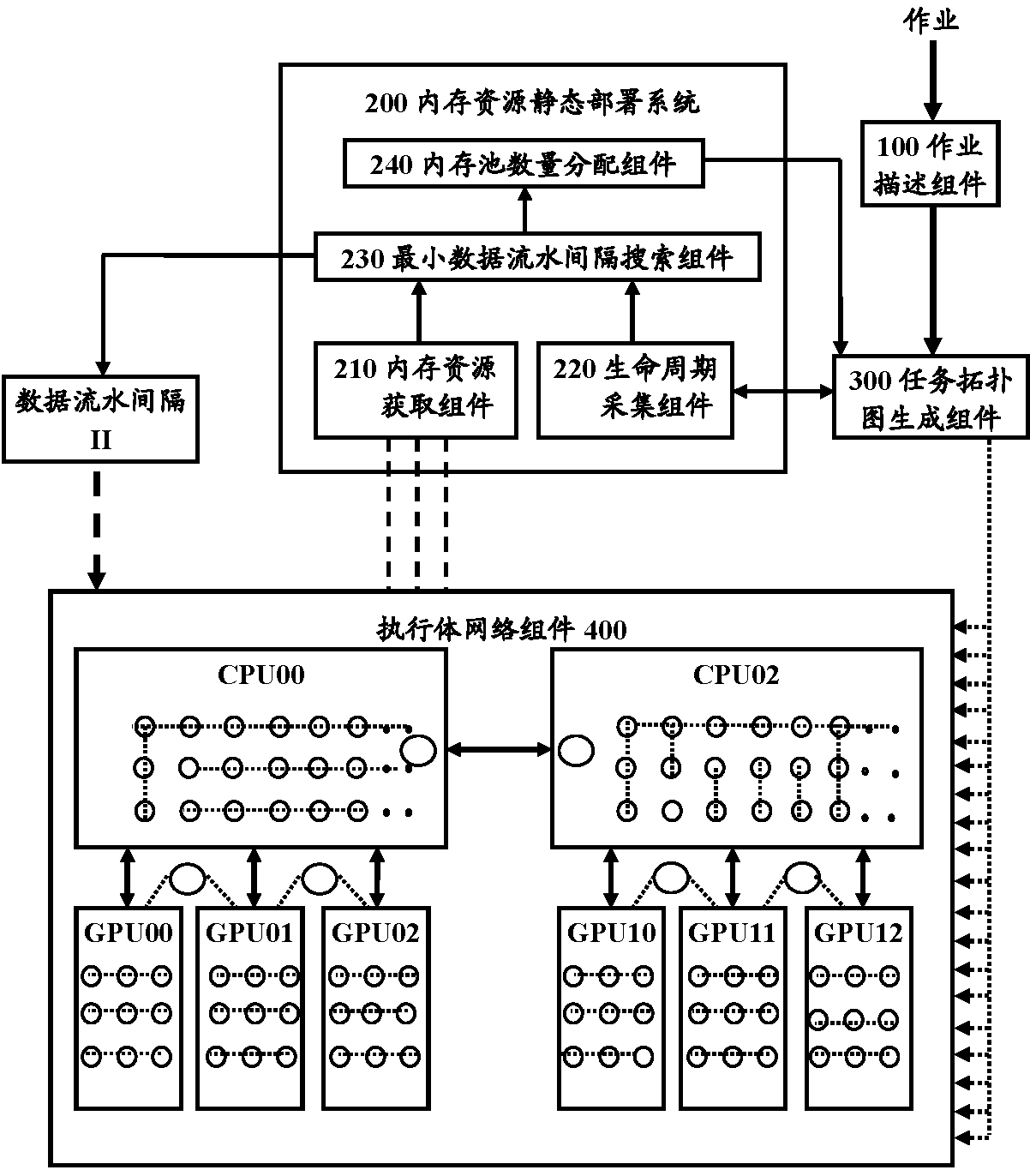

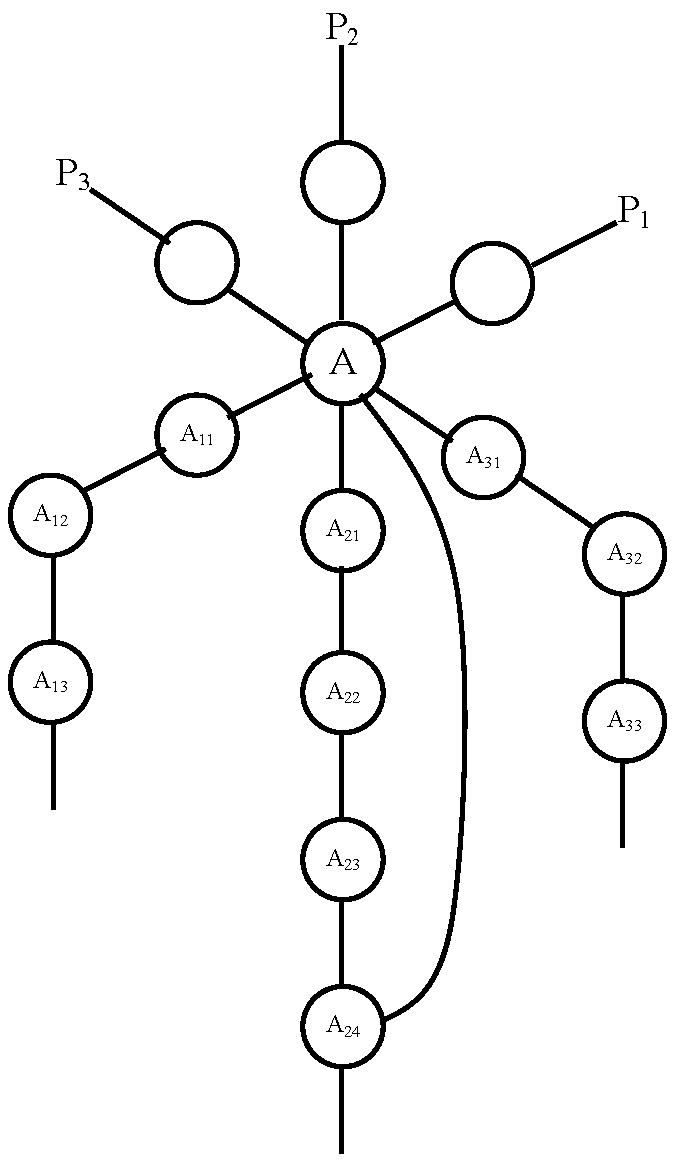

Memory resource static deployment system and method

Status: Active | Publication: CN110955529A | Tags: Ensure safety, Eliminate the risk of memory corruption (OutOfMemory), Resource allocation, Path, Ping, Topological graph

The invention provides a static memory resource deployment system comprising four components:

- A memory resource obtaining component that obtains the amount of memory available on each computing device.
- A life cycle acquisition component that acquires the life cycle of each logical output cache of each task node, measured from when data is written until that data is overwritten. It does so on the basis of all topological paths to which each task node belongs in the task-relationship topology graph to be deployed on the multiple computing devices.
- A minimum data pipeline interval searching component that searches between 0 and the maximum floating-point operation value for the minimum data pipeline interval suitable for the system.
- A memory pool number allocation component that divides the acquired life cycle of each logical output cache by the minimum data pipeline interval and rounds the quotient up to an integer. That integer number of memory pools is allocated to the corresponding logical output cache.

Owner:BEIJING ONEFLOW TECH CO LTD
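The allocation rule above has a simple reading. If a new datum is written to a cache every minimum pipeline interval, and each datum must survive for its life cycle, then up to ceil(life / interval) copies are alive at once. The minimal sketch below assumes both quantities are integers in the same time unit; the cache names are hypothetical.

```python
def allocate_memory_pools(lifecycles, min_interval):
    """Pools per logical output cache = ceil(life cycle / minimum data pipeline interval).
    Integer time units (e.g. cycles) avoid floating-point rounding in the ceiling."""
    if min_interval <= 0:
        raise ValueError("min_interval must be positive")
    return {cache: -(-life // min_interval) for cache, life in lifecycles.items()}

print(allocate_memory_pools({"conv1.out": 10, "relu1.out": 3, "fc.out": 7}, 3))
# {'conv1.out': 4, 'relu1.out': 1, 'fc.out': 3}
```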

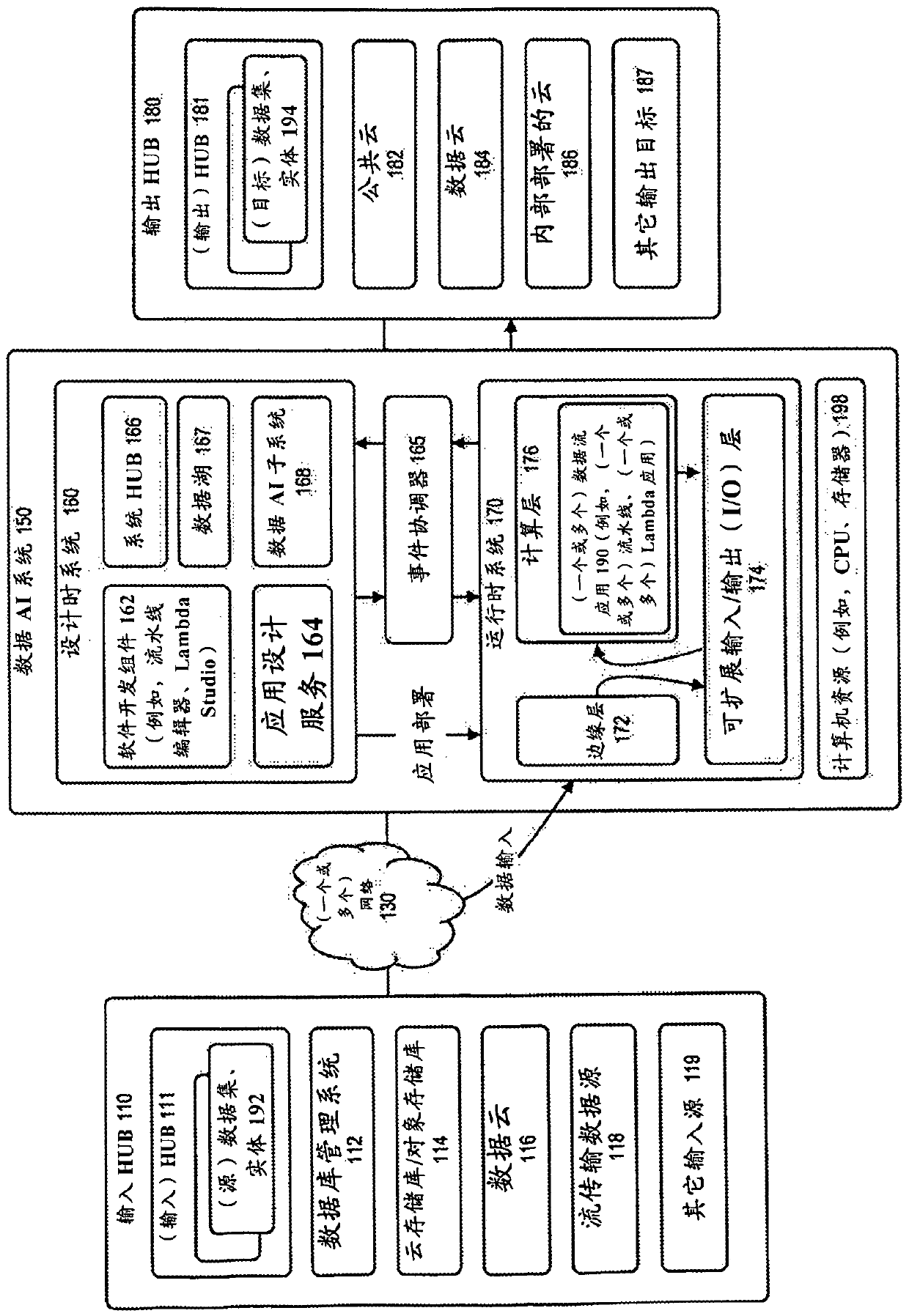

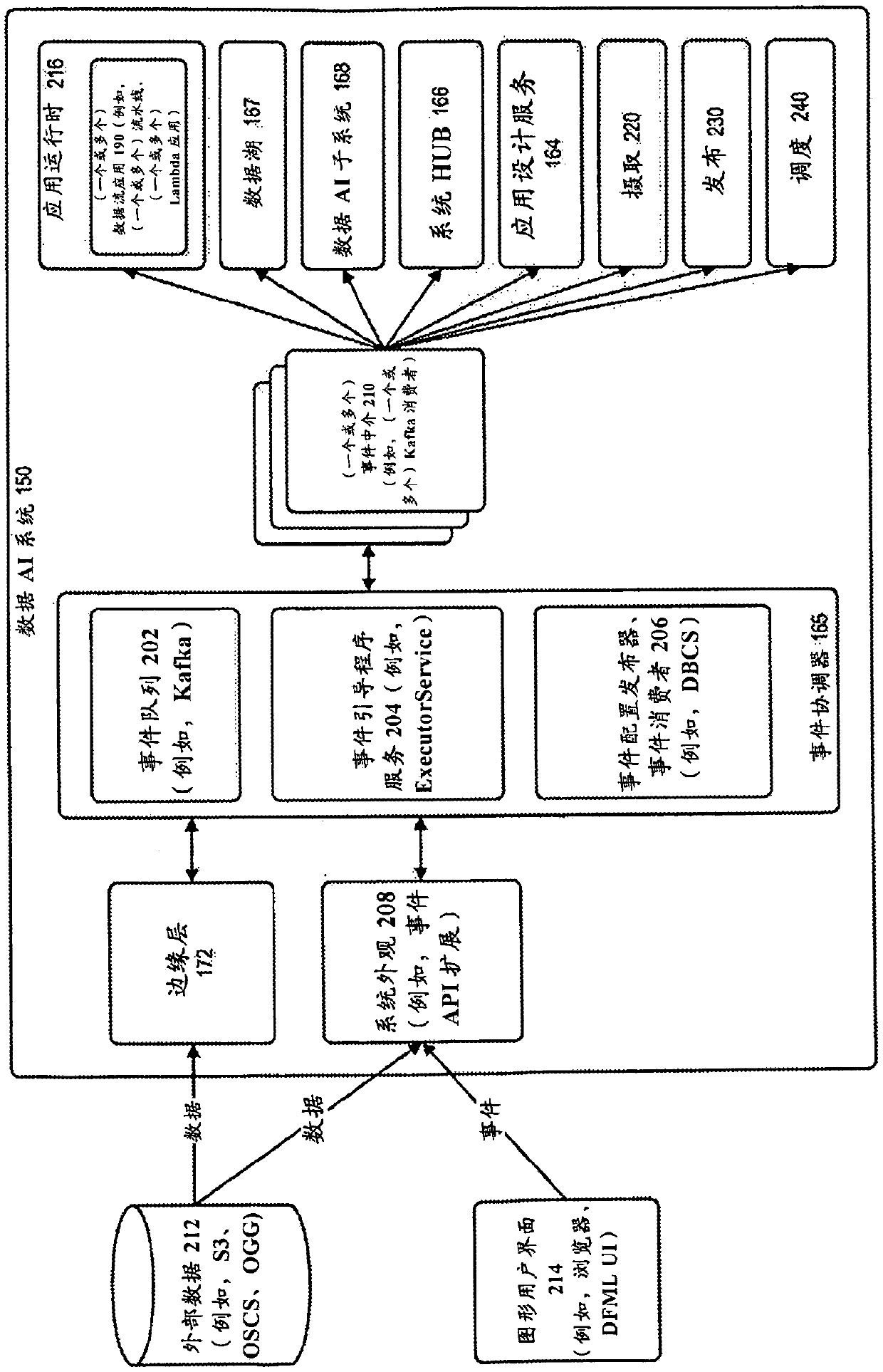

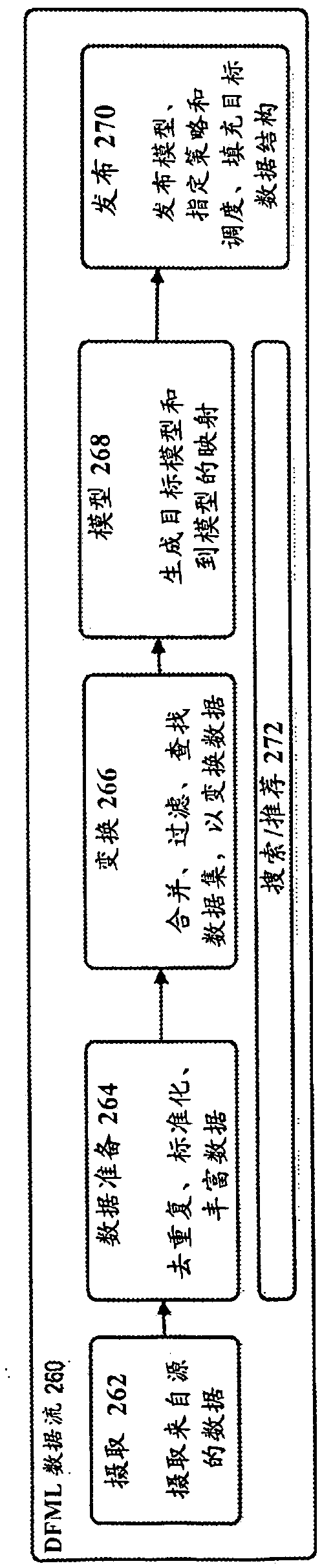

System and method for dynamic lineage tracking, reconstruction, and lifecycle management

In accordance with various embodiments, described herein is a system (Data Artificial Intelligence system, Data AI system) for use with a data integration or other computing environment. The system leverages machine learning (ML, DataFlow Machine Learning, DFML) to manage a flow of data (dataflow, DF) and to build complex dataflow software applications (dataflow applications, pipelines). In accordance with an embodiment, the system can provide data governance functionality for each slice of data pertinent to a particular snapshot in time. This functionality includes:

- provenance (where a particular piece of data came from);
- lineage (how the data was acquired/processed);
- security (who was responsible for the data);
- classification (what the data is about);
- impact (how impactful the data is to a business);
- retention (how long the data should live);
- validity (whether the data should be excluded from or included in analysis/processing).

This information can then be used in making lifecycle decisions and dataflow recommendations.

Owner:ORACLE INT CORP

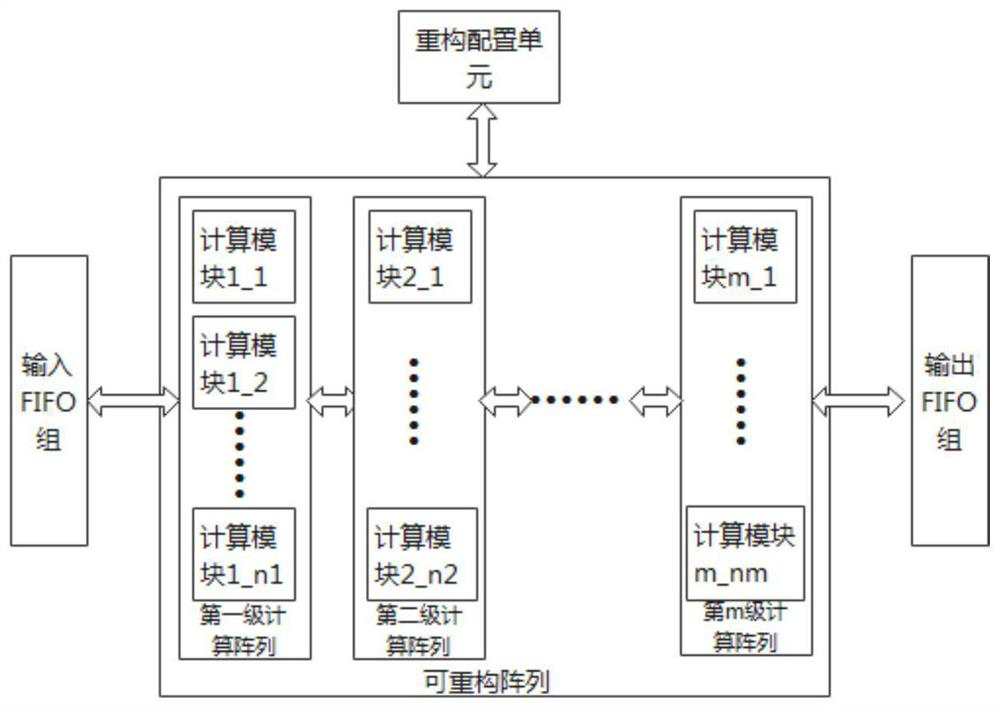

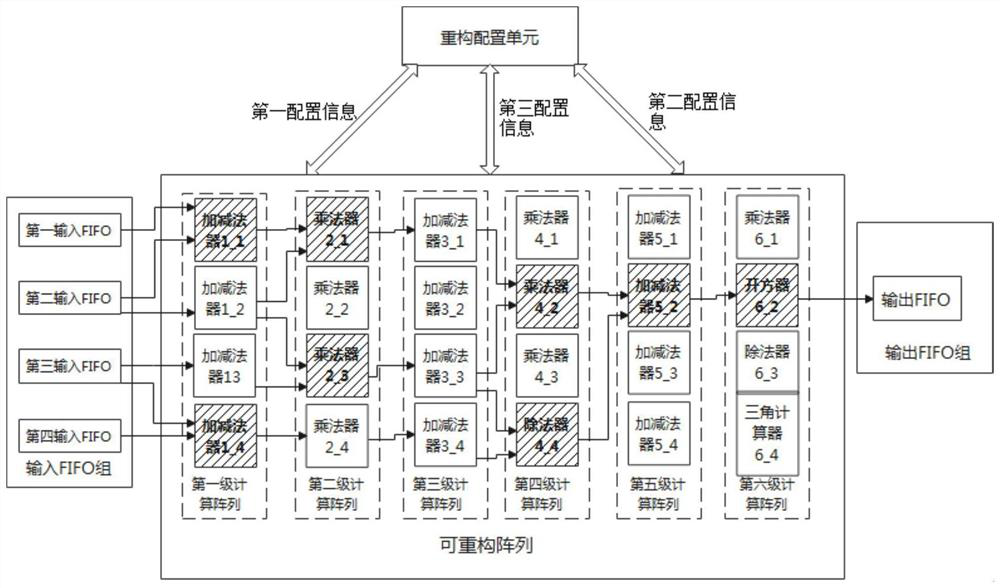

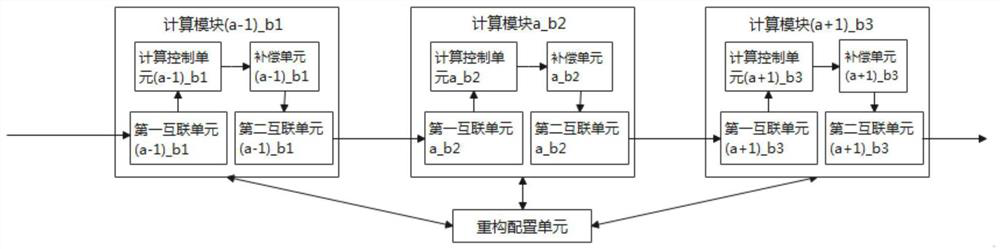

Reconfigurable processor and configuration method

Status: Active | Publication: CN113064852A | Tags: Guaranteed flexibility, Simplify complexity, Architecture with single central processing unit, Electric digital data processing, Computer architecture, Engineering

The invention discloses a reconfigurable processor and a configuration method. The reconfigurable processor comprises a reconfigurable configuration unit and a reconfigurable array. The reconfigurable configuration unit provides reconfiguration information for reconfiguring the computing structure of the reconfigurable array, according to an algorithm matched to the current application scenario. The reconfigurable array comprises at least two stages of computing arrays. Using that reconfiguration information, it connects adjacent stages of computing arrays into a datapath pipeline that meets the computing requirements of the algorithm. Within the same stage of computing array, the computing modules connected into the datapath pipeline have equal pipeline depths, so they output data synchronously. The reconfigurable processor can therefore configure a pipeline depth adapted to each algorithm. On this basis, the entire data processing operation of the reconfigurable array is pipelined, and the data throughput of the reconfigurable processor is improved.

Owner:AMICRO SEMICON CORP
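The abstract does not say how equal pipeline depths are obtained. A common technique is to pad shallower modules with delay registers up to the deepest module's latency. The sketch below illustrates that technique under this assumption: `DelayLine` models a register chain, and the module names and depths are hypothetical.

```python
from collections import deque

def balance_depths(module_depths):
    """Delay registers to add to each module so all match the deepest module's latency."""
    target = max(module_depths.values())
    return {name: target - depth for name, depth in module_depths.items()}

class DelayLine:
    """n-stage shift register: each push returns the value pushed n cycles earlier."""
    def __init__(self, n, fill=None):
        self.regs = deque([fill] * n)

    def push(self, value):
        if not self.regs:
            return value  # zero-depth delay is just a wire
        self.regs.append(value)
        return self.regs.popleft()

depths = {"mul": 3, "add": 1, "shift": 2}
pads = balance_depths(depths)  # {'mul': 0, 'add': 2, 'shift': 1}
# Model each module's own latency plus its padding as a single delay line.
lines = {m: DelayLine(depths[m] + pads[m]) for m in depths}
outs = {m: [lines[m].push(x) for x in [1, 2, 3, 4, 5]] for m in depths}
print(outs)  # every module now emits [None, None, None, 1, 2]
```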

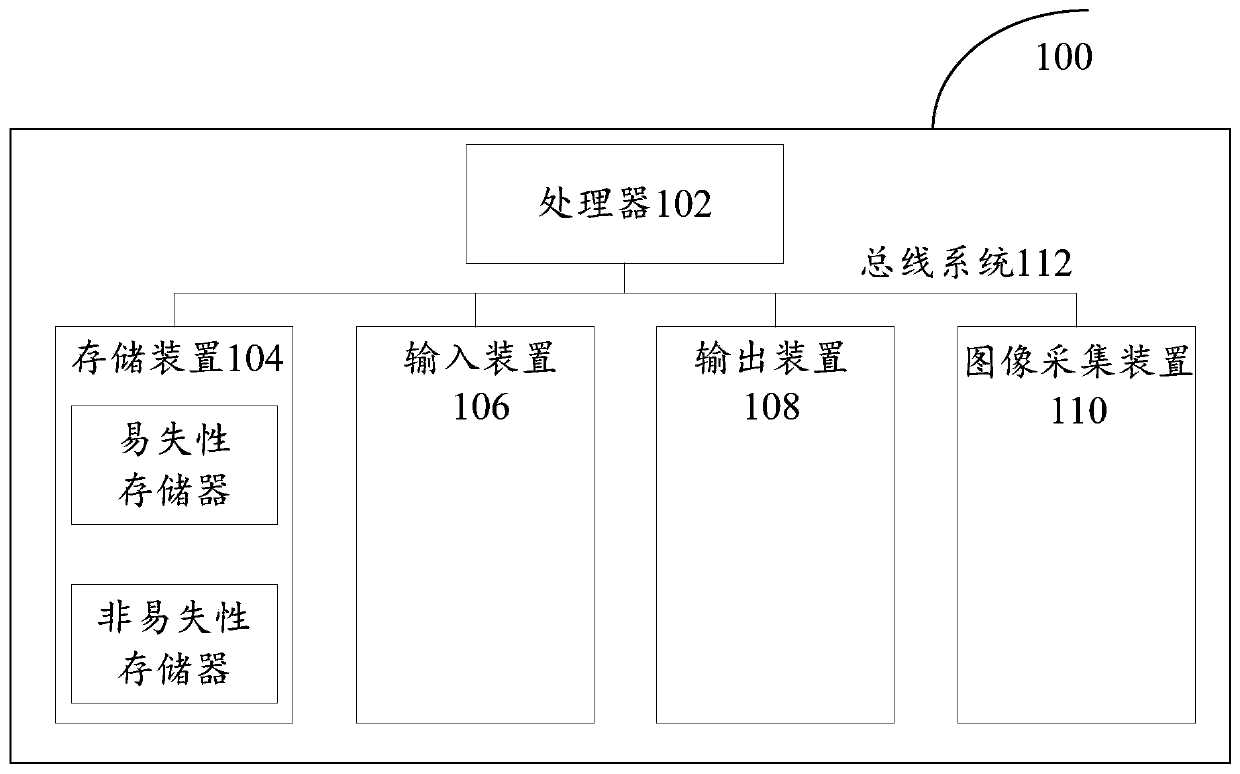

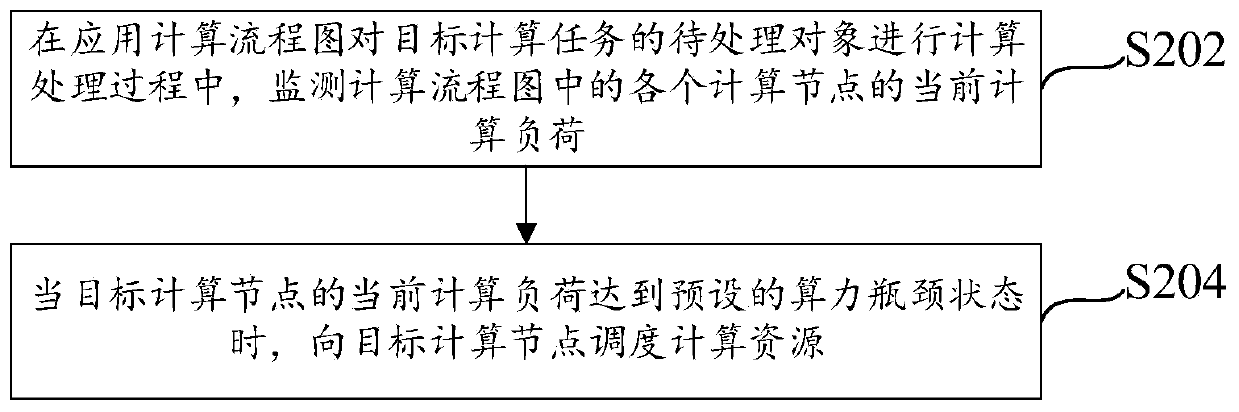

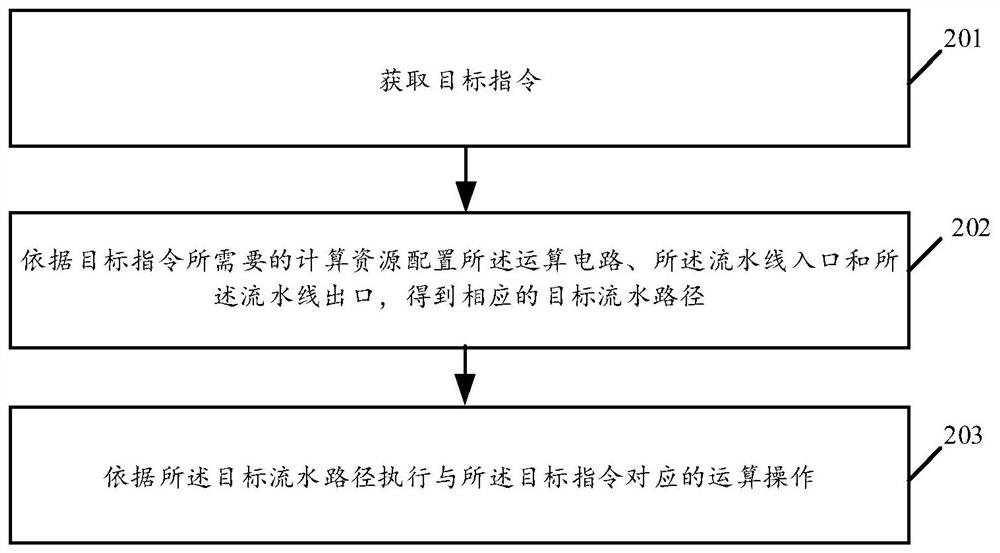

Computing resource scheduling method and device and electronic equipment

Status: Active | Publication: CN111400008A | Tags: Improve computing efficiency, Efficient scheduling, Program initiation/switching, Resource allocation, Data transmission, Distributed computing

The invention provides a computing resource scheduling method and device, and electronic equipment. The method is executed by a scheduling device. A computation flow graph is used to process a to-be-processed object of a target computing task. The graph comprises multiple computing nodes and data transmission pipelines between connected nodes. Each computing node executes a sub-task of the target computing task through a thread in the scheduling device, then sends the resulting data to the downstream computing node through a data transmission pipeline. During this processing, the method monitors the current computing load of each computing node in the graph. When the current computing load of a target computing node reaches a preset computing-power bottleneck state, the method schedules computing resources to that node. The invention can improve the computing efficiency of the scheduling device when computing resources are limited.

Owner:BEIJING KUANGSHI TECH
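A minimal sketch of the monitor-and-schedule step follows. It assumes load is reported as a fraction between 0 and 1, the "bottleneck state" is a fixed threshold, and resources are extra threads handed out one per bottleneck node, busiest first. The policy, names, and numbers are hypothetical, not the patented scheduler.

```python
def rebalance(loads, threads, threshold, spare_threads):
    """Give one spare thread to each node at or above the bottleneck threshold, busiest first.
    Returns (new thread counts, spare threads left); the input dict is not modified."""
    new_threads = dict(threads)
    bottlenecks = sorted((node for node, load in loads.items() if load >= threshold),
                         key=lambda node: loads[node], reverse=True)
    for node in bottlenecks:
        if spare_threads == 0:
            break
        new_threads[node] += 1
        spare_threads -= 1
    return new_threads, spare_threads

loads = {"decode": 0.40, "detect": 0.97, "track": 0.92}  # hypothetical flow-graph nodes
threads = {"decode": 1, "detect": 1, "track": 1}
print(rebalance(loads, threads, threshold=0.9, spare_threads=1))
# ({'decode': 1, 'detect': 2, 'track': 1}, 0)
```

Running the function again at each monitoring interval lets resources follow the bottleneck as it moves through the graph.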

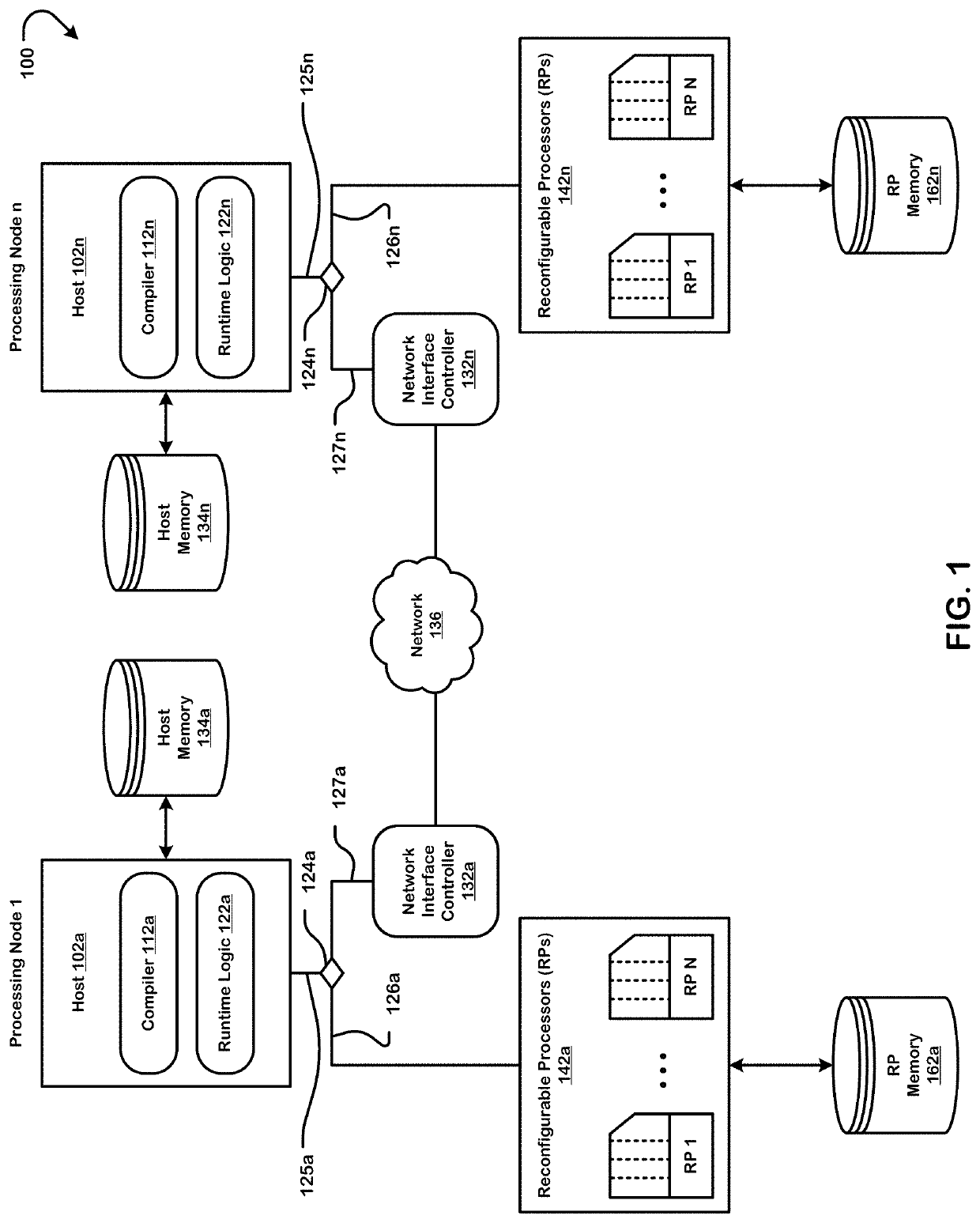

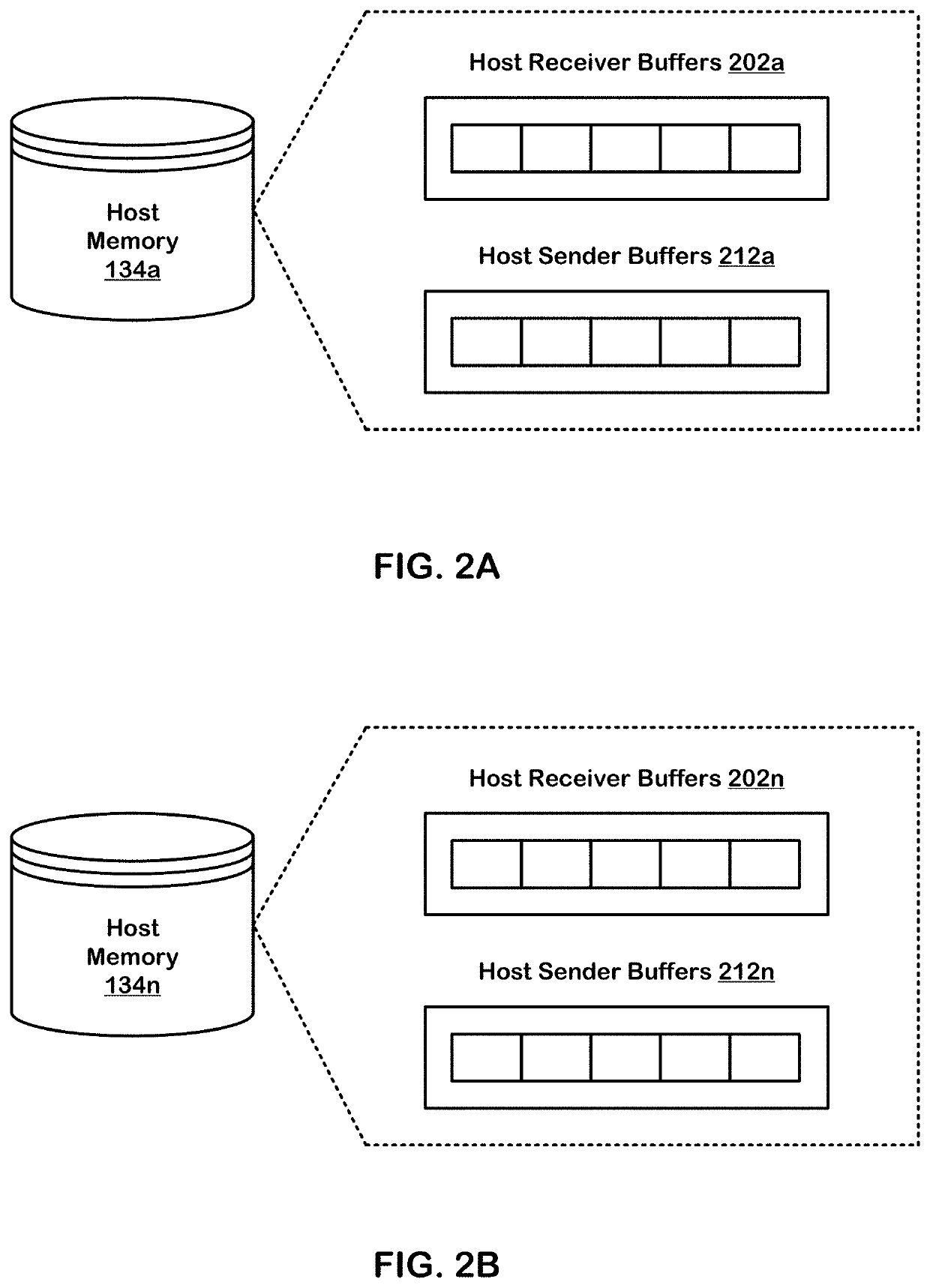

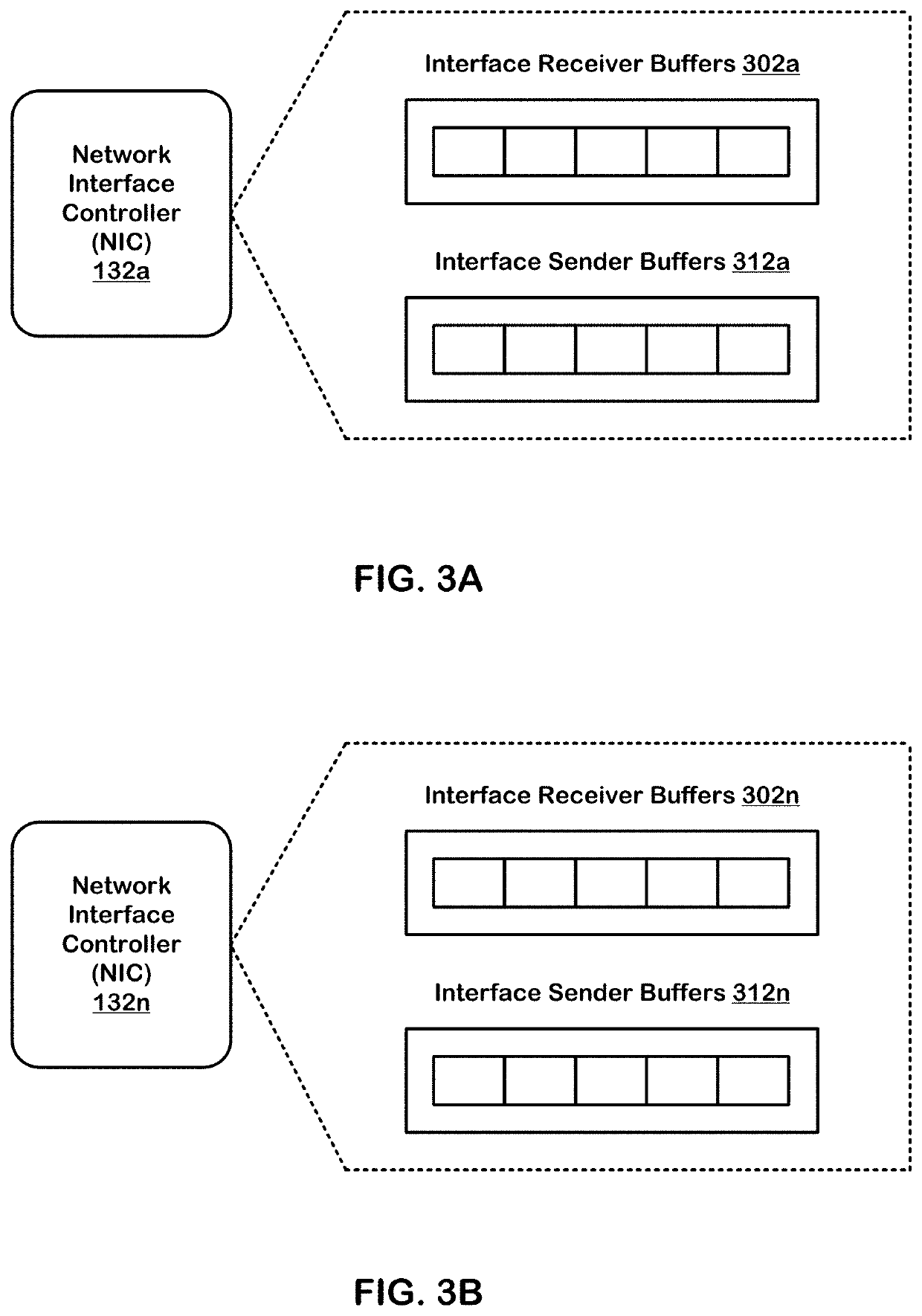

Executing a neural network graph using a non-homogenous set of reconfigurable processors

A system for executing a graph partitioned across a plurality of reconfigurable computing units includes a processing node that has a first computing unit reconfigurable at a first level of configuration granularity and a second computing unit reconfigurable at a second, finer, level of configuration granularity. The first computing unit is configured by a host system to execute a first dataflow segment of the graph using one or more dataflow pipelines to generate a first intermediate result and to provide the first intermediate result to the second computing unit without passing through the host system. The second computing unit is configured by the host system to execute a second dataflow segment of the graph, dependent upon the first intermediate result, to generate a second intermediate result and to send the second intermediate result to a third computing unit, without passing through the host system, to continue execution of the graph.

Owner:SAMBANOVA SYST INC

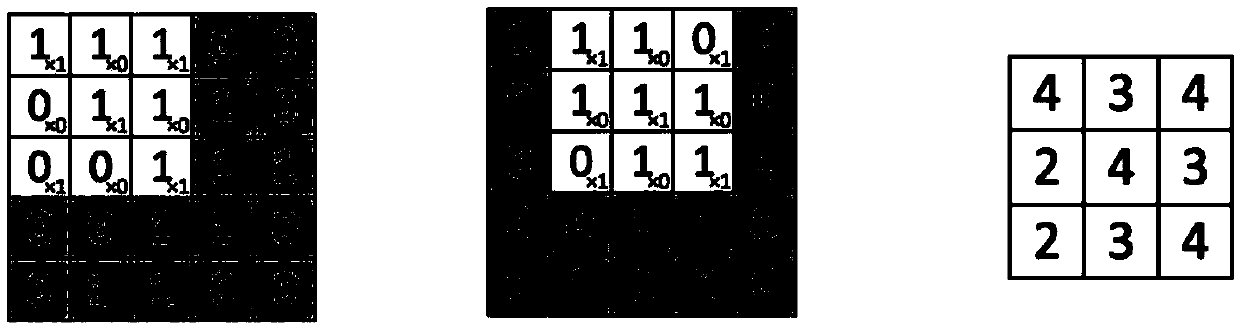

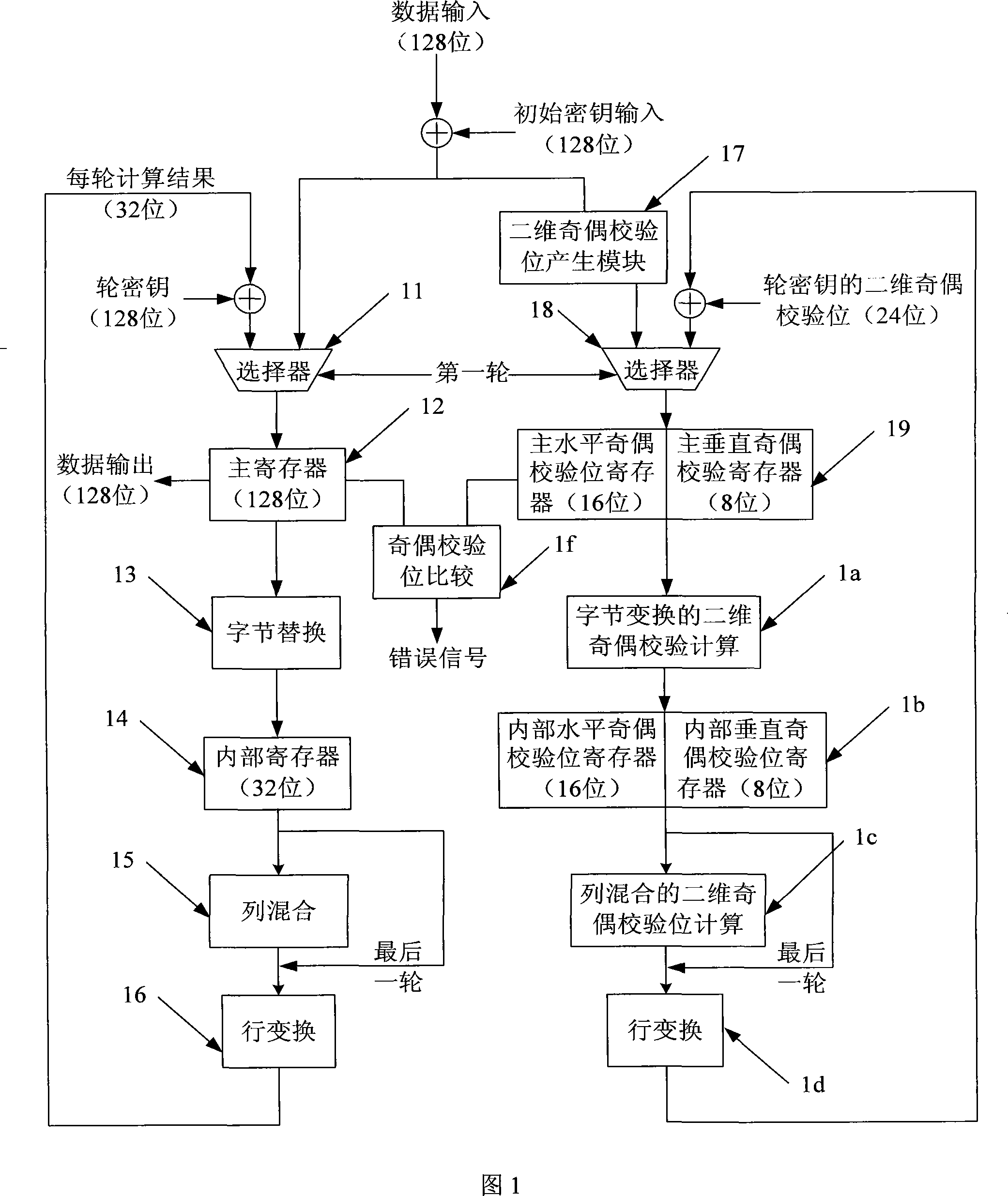

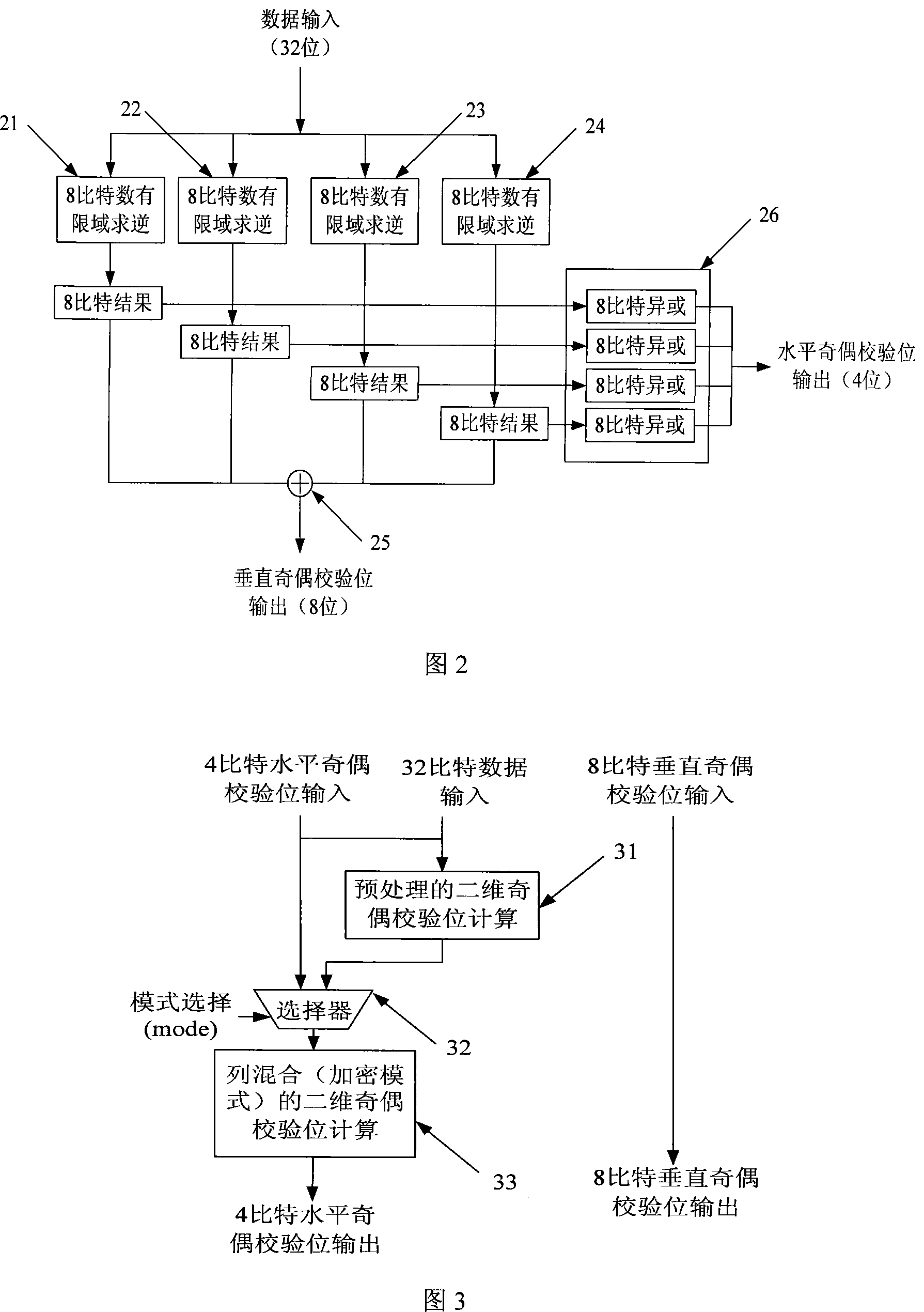

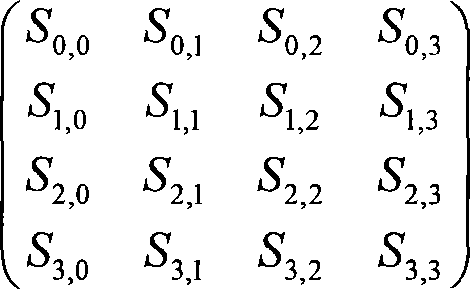

2-D parity checkup correction method and its realization hardware based on advanced encryption standard

Status: Inactive | Publication: CN101242262A | Tags: Throughput has no effect, Throughput impact, Encryption apparatus with shift registers/memories, Error correction/detection using multiple parity bits, Hardware structure, Check digit

The invention relates to integrated circuit design, specifically to a two-dimensional parity-check error detection method for the Advanced Encryption Standard (AES) and the hardware that implements it. The method performs parity checks on the AES data in both the horizontal and the vertical direction. This detects every odd number of errors and a very high percentage of even numbers of errors, and in particular it detects every double error, so the method effectively resists the impact of errors. The hardware uses a fully parallel structure between the main operation module and the two-dimensional parity-bit computation module. In the main operation module, the 128-bit data is divided into 4 groups of 32 bits and processed with a 2-stage pipeline architecture. The parity-bit computation module operates on 32-bit data. The hardware structure does not affect AES data throughput, and the additional hardware is low-cost. The invention therefore suits applications with high security requirements and strict hardware-area constraints.

Owner:FUDAN UNIV
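The coverage claims above follow from how 2-D parity works. The sketch treats a 128-bit block as four 32-bit words, as in the abstract, with one row-parity bit per word and a 32-bit column parity formed by XOR across the words. How the check bits are carried through the AES rounds is not modeled, and the function names are hypothetical. A single flipped bit changes exactly one row bit and one column bit, which locates it. Two flips in the same word or the same column are still detected. Four flips forming a rectangle are the case that escapes.

```python
from functools import reduce
from operator import xor

def row_parity(words):
    """Horizontal check: one parity bit per 32-bit word."""
    return [bin(w).count("1") & 1 for w in words]

def column_parity(words):
    """Vertical check: 32 parity bits, one per bit position, across the words."""
    return reduce(xor, words, 0)

def locate_single_error(words, rows_ref, cols_ref):
    """Compare recomputed and stored parities; return (word, bit) for a single flip,
    None if nothing changed, and raise if a multi-bit error is detected."""
    bad_rows = [i for i, (a, b) in enumerate(zip(row_parity(words), rows_ref)) if a != b]
    diff = column_parity(words) ^ cols_ref
    bad_cols = [b for b in range(32) if diff >> b & 1]
    if not bad_rows and not bad_cols:
        return None
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        return bad_rows[0], bad_cols[0]
    raise ValueError("multiple-bit error detected")

block = [0x00112233, 0x44556677, 0x8899AABB, 0xCCDDEEFF]
r, c = row_parity(block), column_parity(block)
corrupted = list(block)
corrupted[2] ^= 1 << 17  # flip one bit
print(locate_single_error(corrupted, r, c))  # (2, 17)
```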

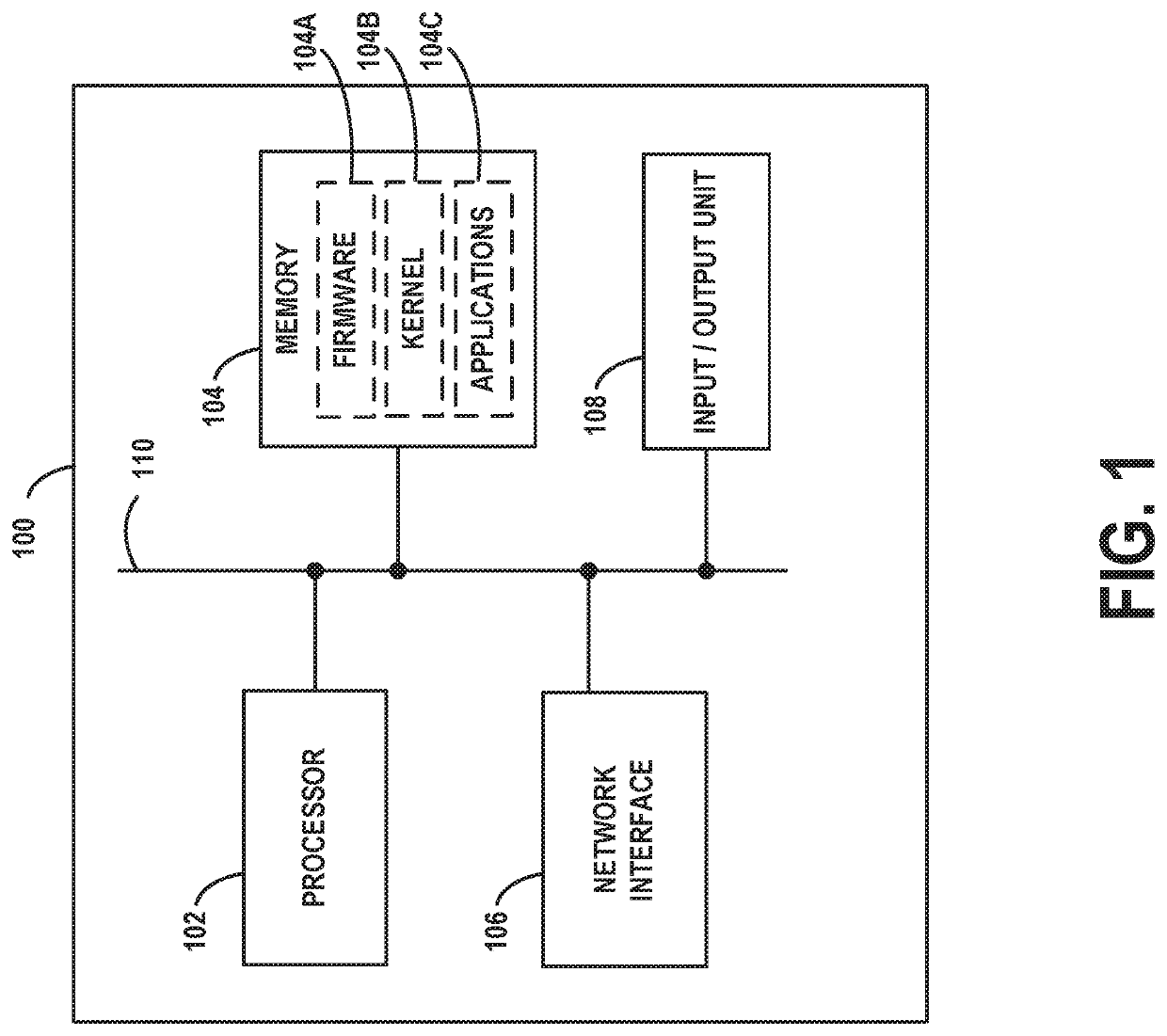

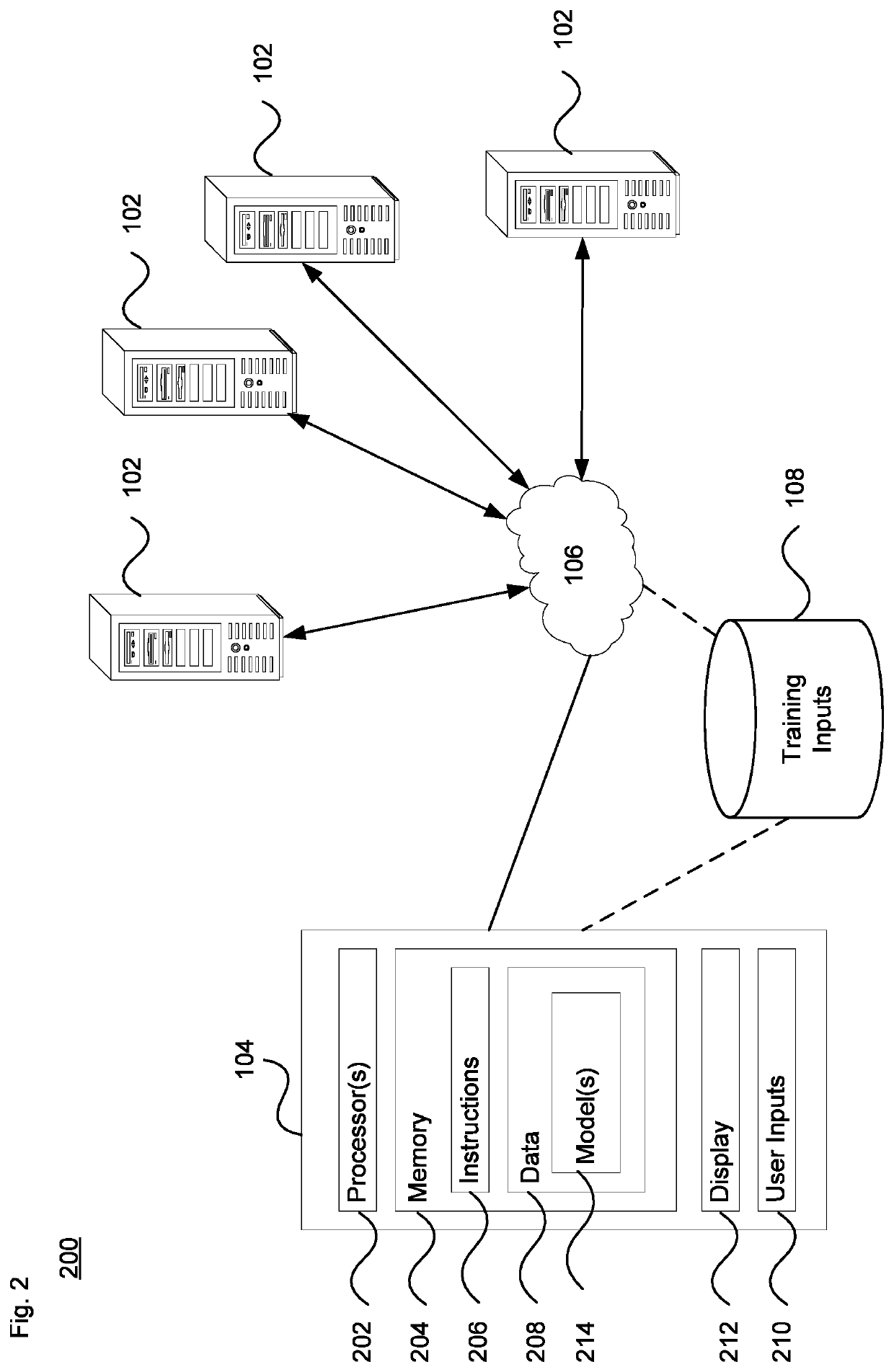

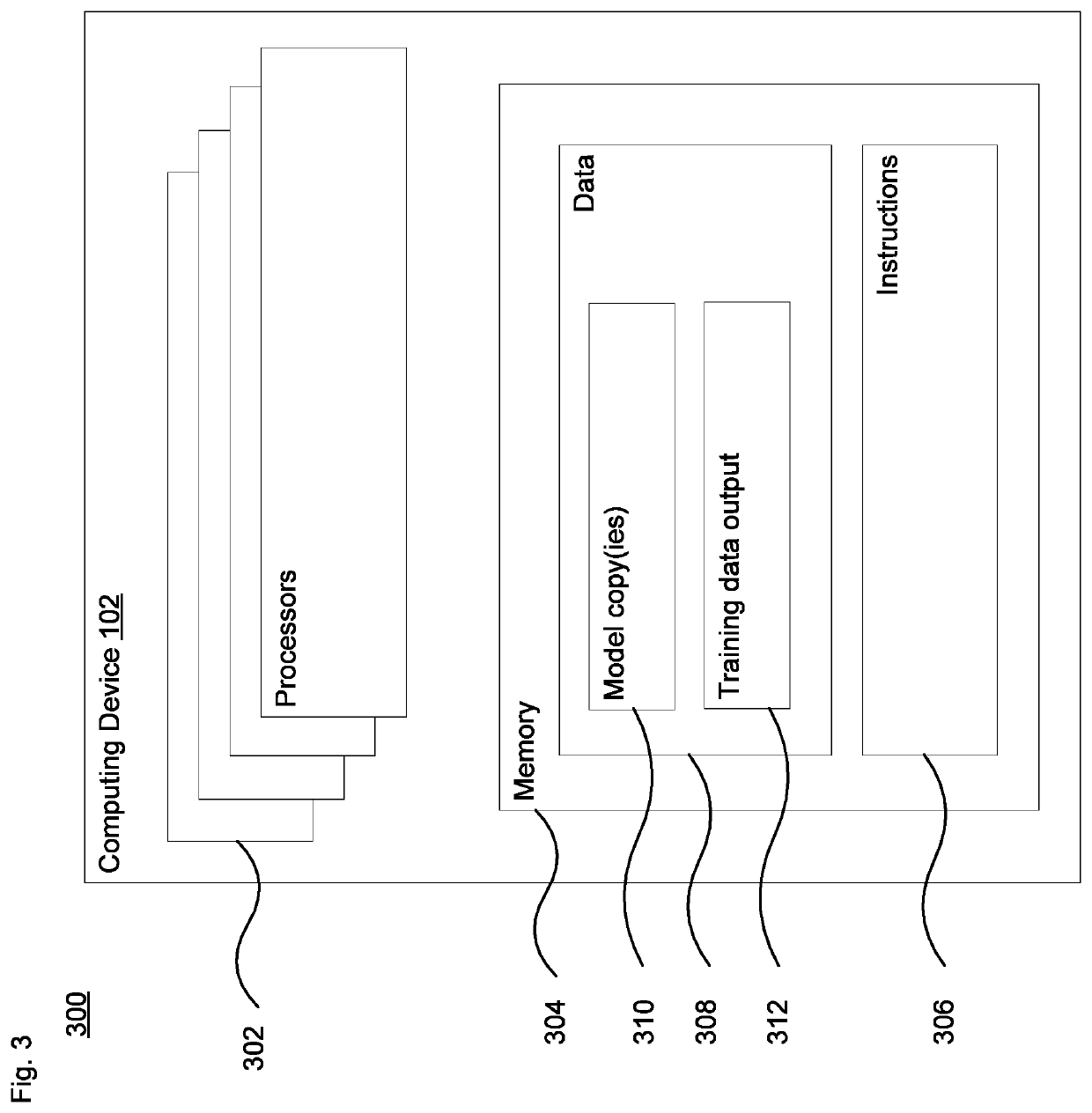

Machine learning training platform

Aspects of the disclosure relate to training a machine learning model on a distributed computing system. The model can be trained using selected processors of the training platform. The distributed system automatically modifies the model for instantiation on each processor, adjusts an input pipeline to accommodate the capabilities of selected processors, and coordinates the training between those processors. Simultaneous processing at each stage can be scaled to reduce or eliminate bottlenecks in the distributed system. In addition, autonomous monitoring and re-allocating of resources can further reduce or eliminate bottlenecks. The training results may be aggregated by the distributed system, and a final model may then be transmitted to a user device.

Owner:WAYMO LLC

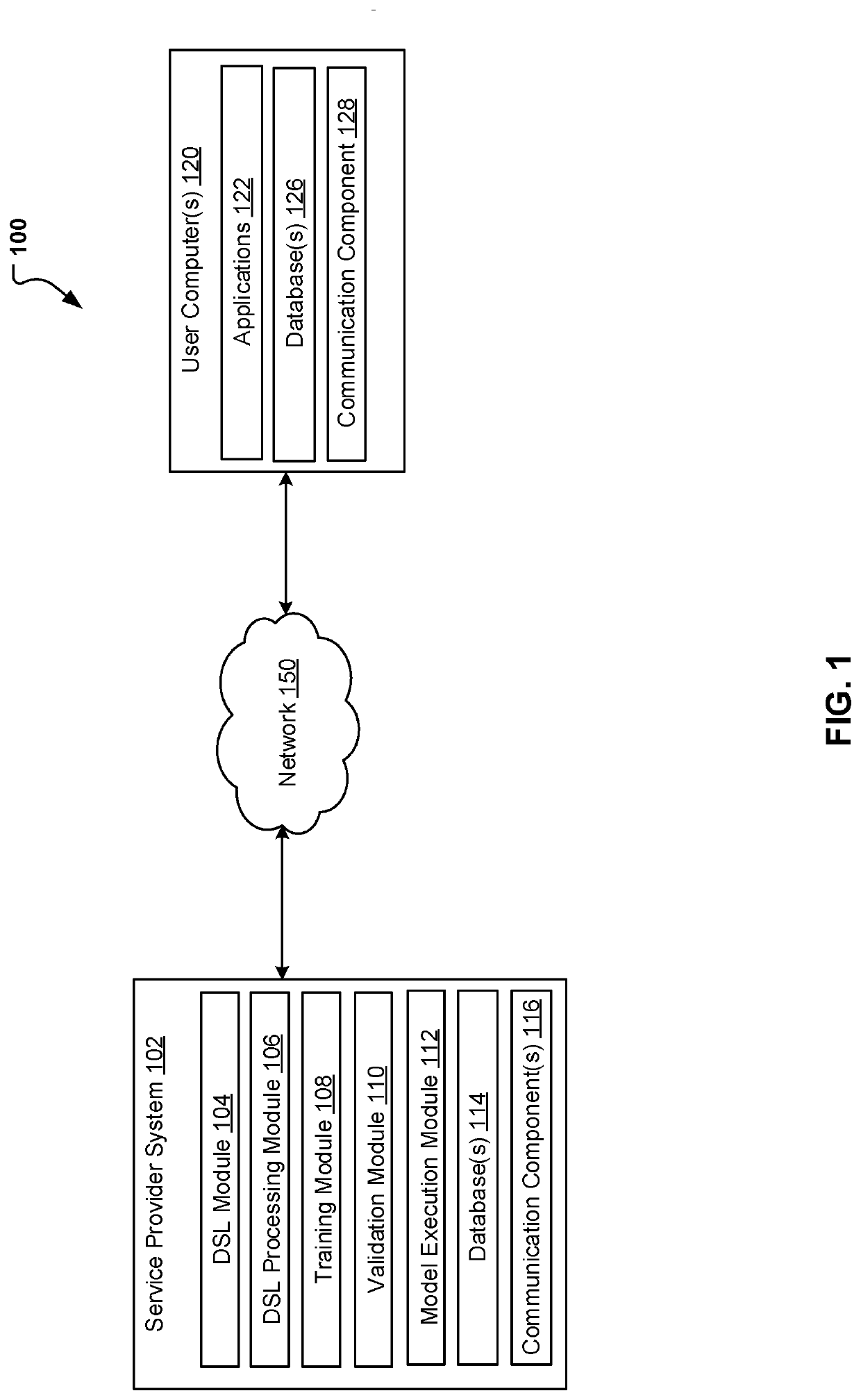

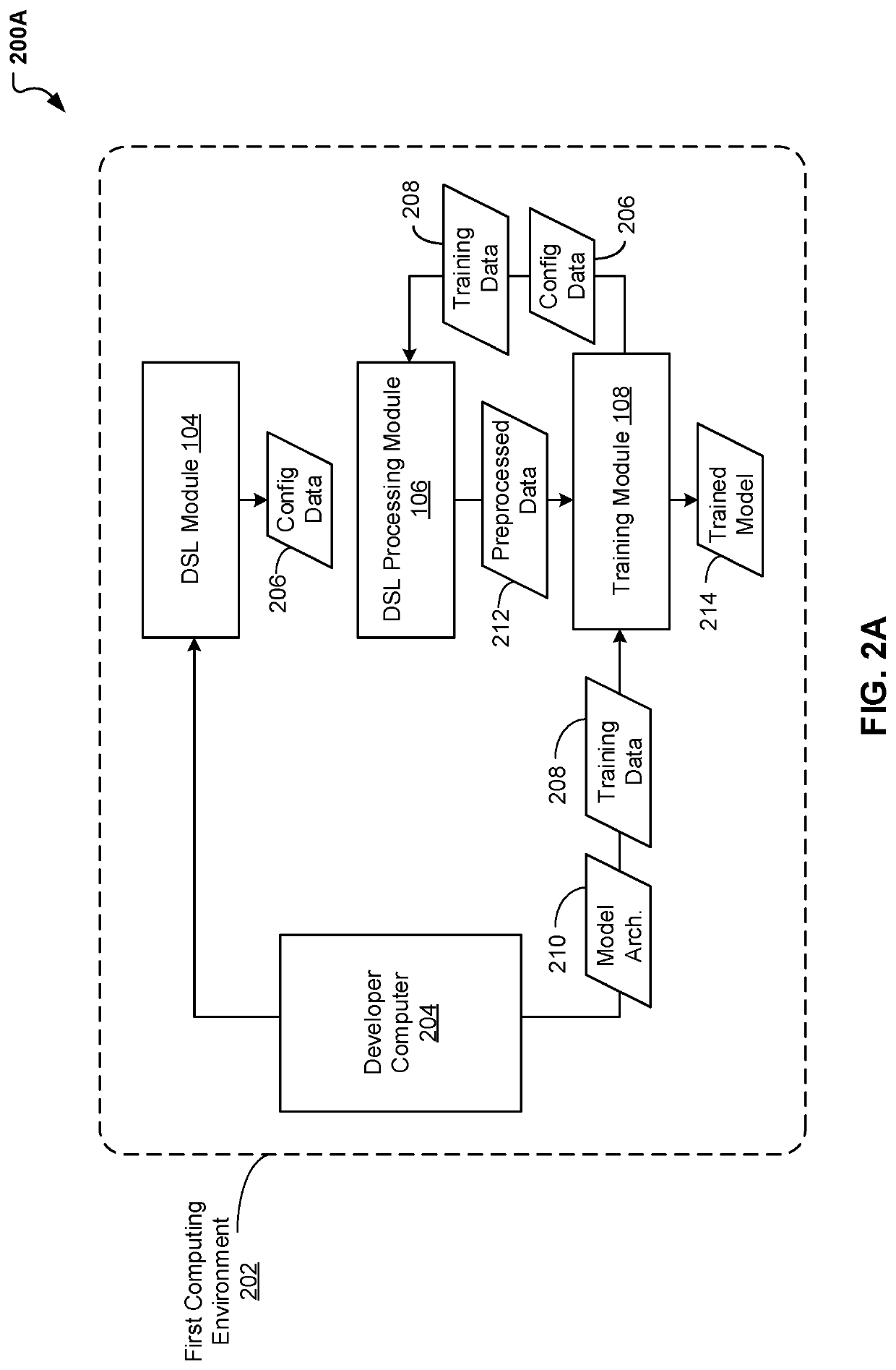

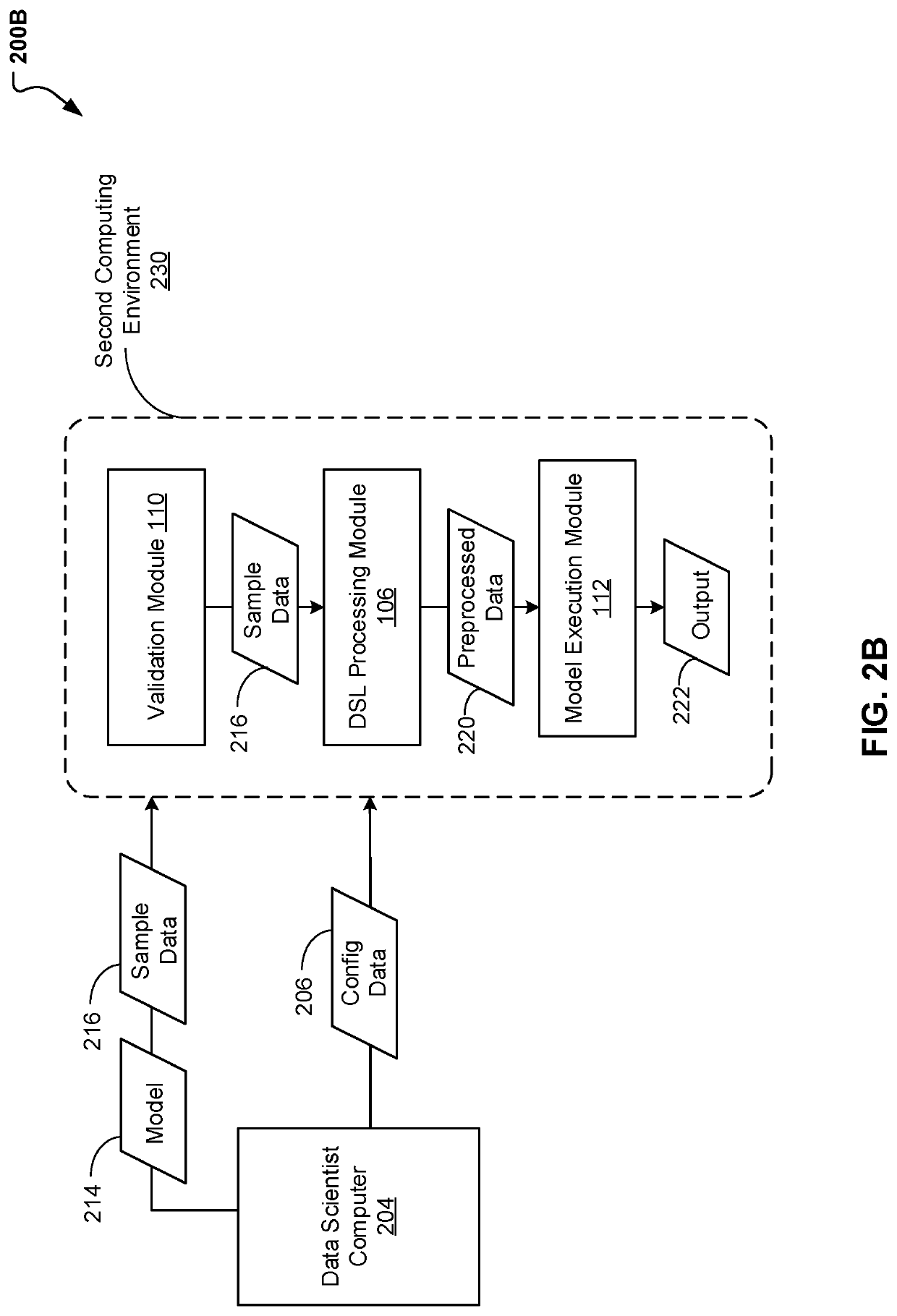

Framework for Managing Natural Language Processing Tools

A system performs operations that include receiving, via a first computing environment, a request to process text data using a first natural language processing (NLP) model. The operations further include accessing configuration data associated with the NLP model. The configuration data is generated using a domain-specific language that supports a plurality of preprocessing modules in a plurality of programming languages. The operations also include:

- selecting, based on the configuration data, one or more of the preprocessing modules;
- generating, based on the configuration data, a preprocessing pipeline from the selected modules;
- generating preprocessed text data by inputting the text data into the preprocessing pipeline.

The preprocessed text data is provided to the first NLP model.

Owner:PAYPAL INC
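The select-then-compose flow can be sketched as follows. The patent's domain-specific language is not shown in the abstract, so this sketch assumes the configuration has already been parsed into a list of `{"module": ..., "args": ...}` entries. The module registry and every name in it are hypothetical.

```python
import re
from typing import Callable, Dict, List

# Hypothetical registry: each factory takes config args and returns a str -> str step.
MODULES: Dict[str, Callable[..., Callable[[str], str]]] = {
    "lowercase": lambda: str.lower,
    "strip_urls": lambda: (lambda t: re.sub(r"https?://\S+", "", t)),
    "collapse_whitespace": lambda: (lambda t: " ".join(t.split())),
    "truncate": lambda max_chars: (lambda t: t[:max_chars]),
}

def build_pipeline(config: List[dict]) -> Callable[[str], str]:
    """Select the configured modules in order and chain them into one function."""
    steps = [MODULES[entry["module"]](**entry.get("args", {})) for entry in config]

    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run

config = [{"module": "strip_urls"}, {"module": "lowercase"},
          {"module": "collapse_whitespace"}, {"module": "truncate", "args": {"max_chars": 20}}]
preprocess = build_pipeline(config)
print(preprocess("Check  https://x.io NOW please"))  # check now please
```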

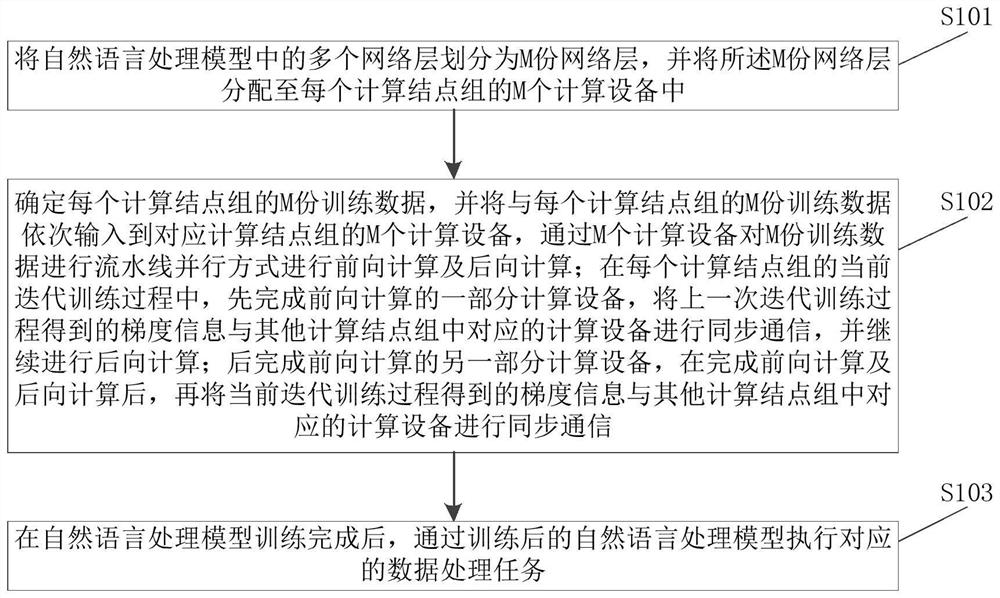

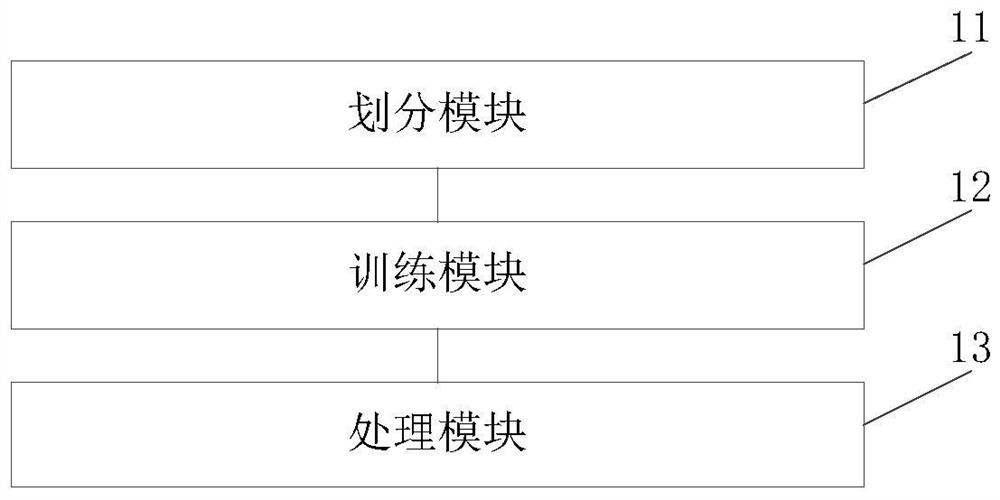

Parallel computing method and device for natural language processing model, equipment and medium

Status: Pending | Publication: CN114356578A | Tags: Guaranteed treatment effect, Reduce computing time, Resource allocation, Interprogram communication, Concurrent computation, Theoretical computer science

The invention discloses a parallel computing method and device for a natural language processing model, together with equipment and a medium. The computing devices within each computing node group are trained in pipeline-parallel mode, and different computing node groups share gradients in data-parallel mode. This confines each pipeline to a limited number of nodes, which avoids the problem of having too many nodes in a pipeline when training at large scale. The method is therefore well suited to parallel training of large network models on large numbers of computing nodes. The scheme also hides the synchronous communication between computing node groups inside the pipeline-parallel computation, so each group can start the next iteration as soon as it finishes the current one. This improves the processing efficiency of the natural language processing model while preserving its processing quality. As a result, training time is shortened and the efficiency of distributed training is improved.

Owner:NAT UNIV OF DEFENSE TECH

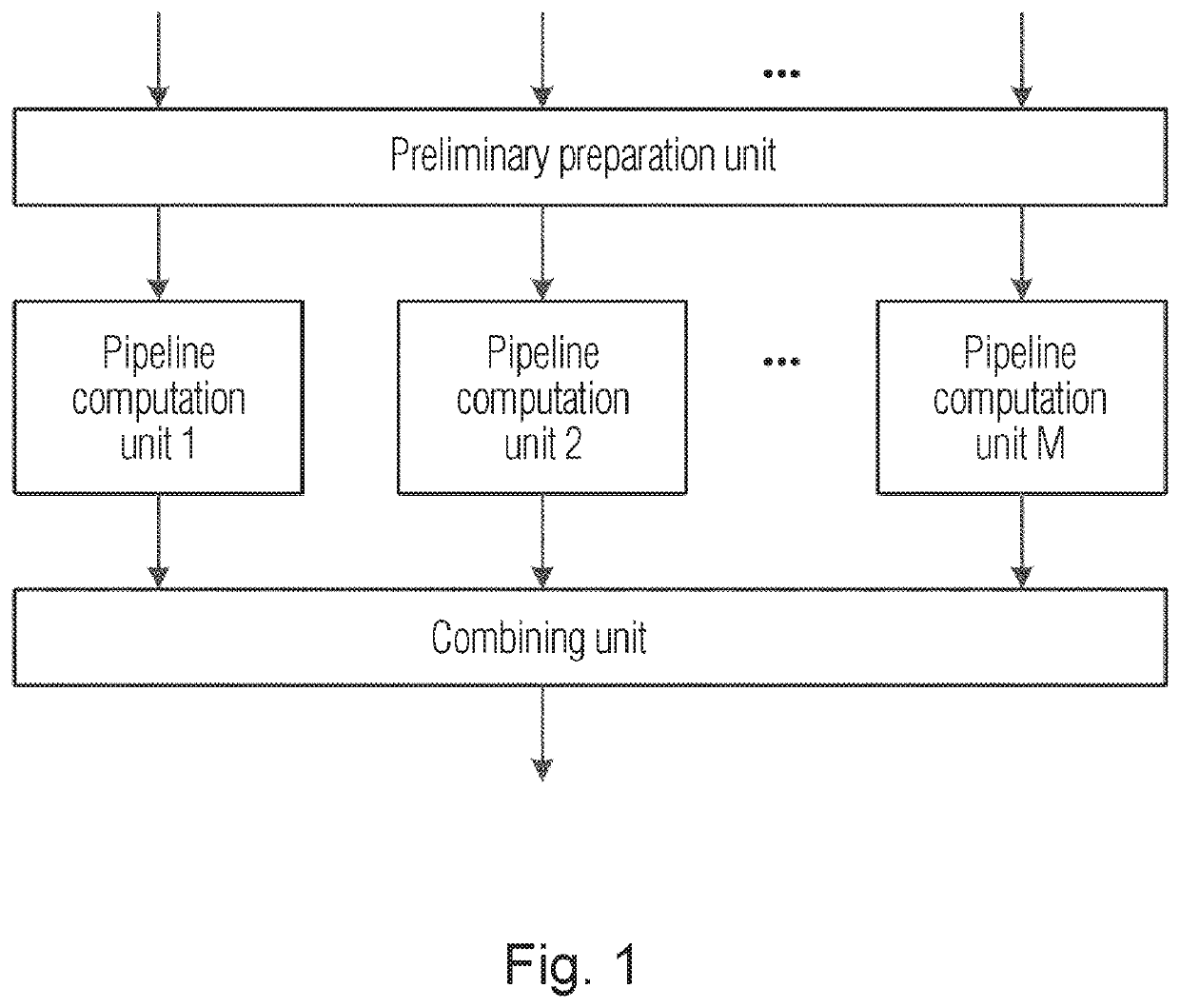

Method and apparatus for computing hash function

Status: Active | Publication: US20210167944A1 | Tags: Encryption apparatus with shift registers/memories, Digital data processing details, Computer architecture, Hash function

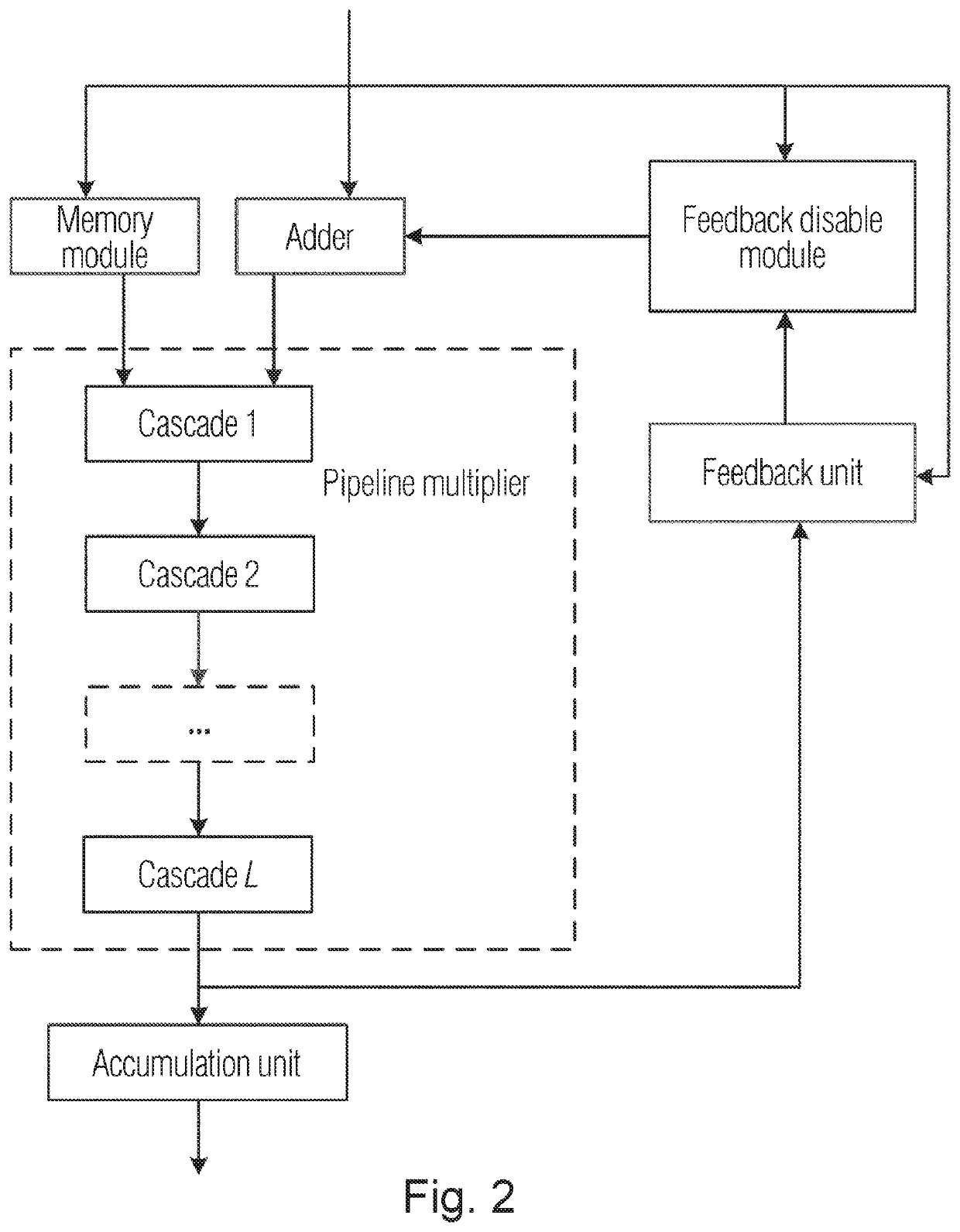

The group of inventions relates to computing techniques and can be used for computing a hash function. The technical effect relates to increased speed of computations and improved capability of selecting a configuration of an apparatus. The apparatus comprises: a preliminary preparation unit having M inputs with a size of k bits, where M>1; M pipelined computation units running in parallel, each comprising: a memory module, a feedback disable module, an adder, a pipeline multiplier having L stages, a feedback unit, and an accumulation unit; and a combining unit.

Owner:OTKRYTOE AKTSIONERNOE OBSHCHESTVO INFORMATSIONNYE TEKHNOLOGII I KOMMUNIKATSIONNYE SISTEMY
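The abstract does not name the hash function being computed. As an assumption only, a polynomial (Horner-type) hash modulo a prime fits the listed parts: adder, multiplier, feedback, accumulation, and combining unit. The sketch below shows how M independent lanes, each stepping by r**M, can compute the same value as one sequential Horner loop, with the partial results merged at the end. It illustrates lane splitting in general, not the patented apparatus, and all names are hypothetical.

```python
P = (1 << 61) - 1  # Mersenne prime modulus (assumption)

def horner_hash(msg, r, p=P):
    """Reference: H = sum(m_i * r**(n-1-i)) mod p, evaluated by Horner's rule."""
    h = 0
    for m in msg:
        h = (h * r + m) % p
    return h

def lane_hash(msg, r, lanes, p=P):
    """Same H from `lanes` independent accumulators, each stepping with r**lanes."""
    n = len(msg)
    r_step = pow(r, lanes, p)
    total = 0
    for j in range(lanes):
        idx = range(j, n, lanes)  # lane j takes every lanes-th word, starting at j
        if not idx:
            continue
        acc = 0
        for i in idx:  # each lane is its own Horner recurrence (independent of the others)
            acc = (acc * r_step + msg[i]) % p
        # Combining unit: align the lane's last word to its true power of r, then add.
        total = (total + acc * pow(r, n - 1 - idx[-1], p)) % p
    return total

msg, r = list(range(1, 12)), 123456789
print(all(lane_hash(msg, r, m) == horner_hash(msg, r) for m in range(1, 6)))  # True
```

Because the lanes never read each other's accumulators, each can sit behind its own deep multiplier pipeline. Only the final combining step needs results from all lanes.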

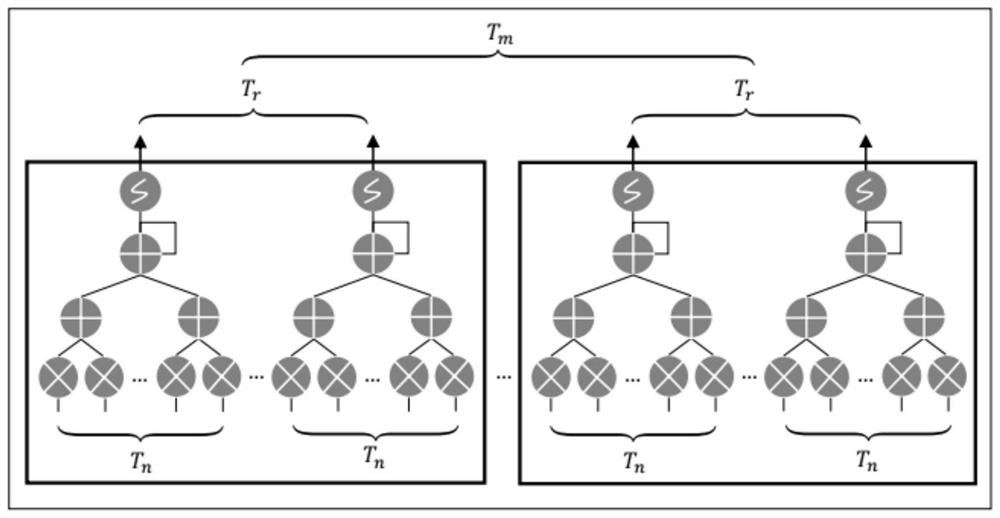

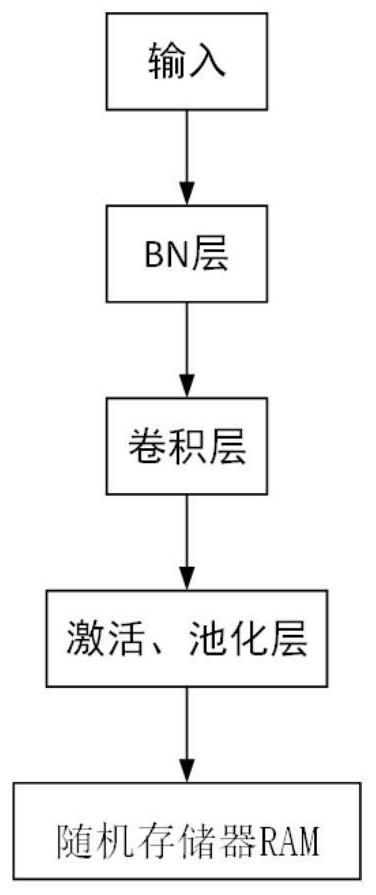

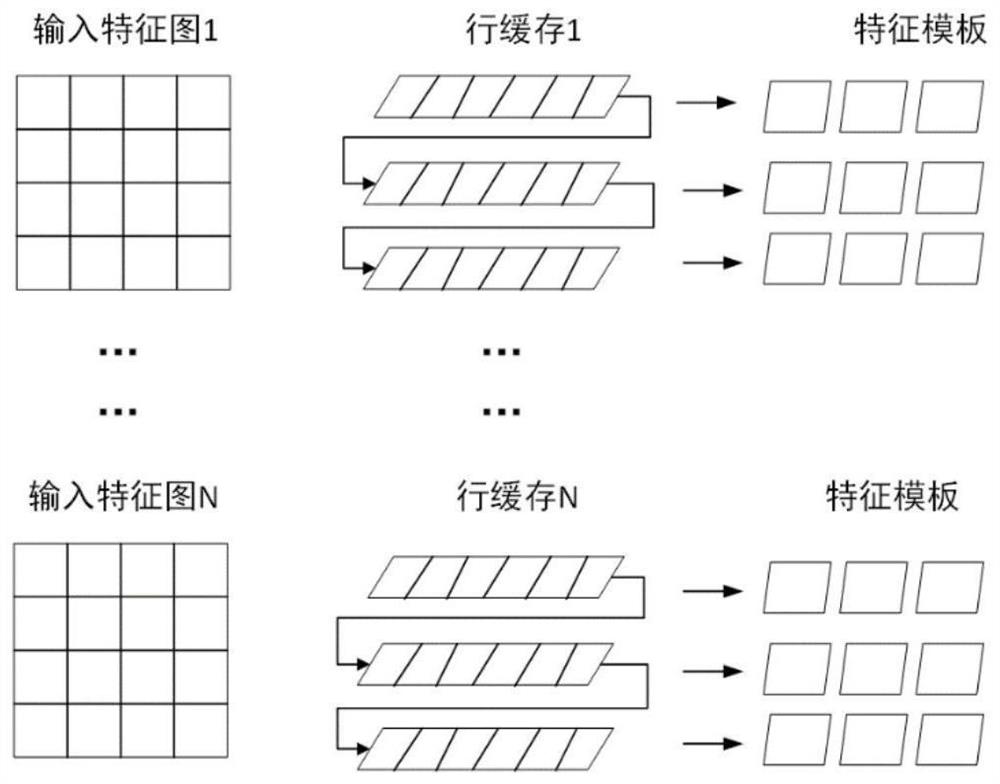

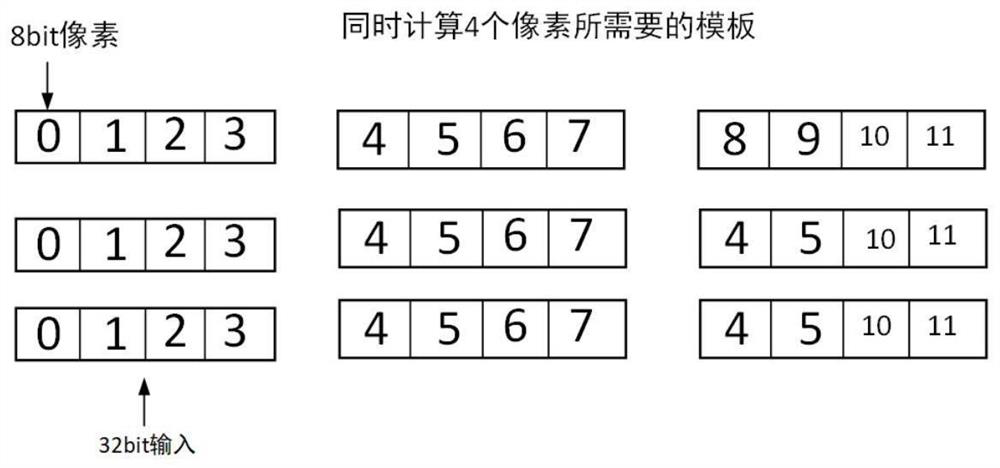

Multi-parallel strategy convolutional network accelerator based on FPGA

Pending | CN112070210A | Reduce usage | Solve the problem of computational redundancy | Neural architectures | Physical realisation | Computational science | Random access memory

The invention discloses a multi-parallel-strategy convolutional network accelerator based on an FPGA, in the field of network computing. The system comprises a single-layer network computing structure consisting of a BN (batch normalization) layer, a convolution layer, an activation layer, and a pooling layer, which together form a pipeline; the BN layer merges the input data. The convolution layer performs the bulk of the multiply and add operations; it comprises a first, a middle, and a last convolution layer, and performs convolution using one or more of input parallelism, pixel parallelism, and output parallelism. The activation layer and the pooling layer process the convolution output in a pipelined fashion, and the final pooled and activated result is stored in RAM (random access memory). Because the three parallel structures can be combined freely and their degrees of parallelism configured at will, the design is highly flexible and achieves high parallel processing efficiency.

Owner:REDNOVA INNOVATIONS INC
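In many FPGA accelerator designs, input, pixel, and output parallelism correspond to unrolling different loops of the convolution loop nest: input channels, output pixels, and output channels. The sketch below is our illustration, not the patent's design. It tiles the loop nest by hypothetical factors `p_in`, `p_pix`, and `p_out`; in hardware the three tile loops would be unrolled into `p_in * p_pix * p_out` multiply-accumulate units. It also checks that any combination of factors reproduces a plain reference convolution.

```python
def conv_reference(x, w):
    """Plain valid convolution (stride 1, no padding).
    x: [C_in][H][W], w: [C_out][C_in][K][K] -> y: [C_out][H-K+1][W-K+1]."""
    c_in, h, wd = len(x), len(x[0]), len(x[0][0])
    c_out, k = len(w), len(w[0][0])
    oh, ow = h - k + 1, wd - k + 1
    return [[[sum(x[ci][r + i][c + j] * w[co][ci][i][j]
                  for ci in range(c_in) for i in range(k) for j in range(k))
              for c in range(ow)] for r in range(oh)] for co in range(c_out)]


def conv_tiled(x, w, p_in, p_pix, p_out):
    """Same convolution with the loop nest tiled by the three parallelism factors."""
    c_in, h, wd = len(x), len(x[0]), len(x[0][0])
    c_out, k = len(w), len(w[0][0])
    oh, ow = h - k + 1, wd - k + 1
    y = [[[0] * ow for _ in range(oh)] for _ in range(c_out)]
    pixels = [(r, c) for r in range(oh) for c in range(ow)]
    for co0 in range(0, c_out, p_out):              # output-parallel tiles
        for px0 in range(0, len(pixels), p_pix):    # pixel-parallel tiles
            for ci0 in range(0, c_in, p_in):        # input-parallel tiles
                # Hardware would unroll the three loops below into parallel MAC units.
                for co in range(co0, min(co0 + p_out, c_out)):
                    for (r, c) in pixels[px0:px0 + p_pix]:
                        acc = 0
                        for ci in range(ci0, min(ci0 + p_in, c_in)):
                            for i in range(k):
                                for j in range(k):
                                    acc += x[ci][r + i][c + j] * w[co][ci][i][j]
                        y[co][r][c] += acc          # accumulate partial sums per input tile
    return y
```

The tiling factors only change how work is grouped and scheduled, not the arithmetic, which is why the degrees of parallelism can be configured freely without affecting results.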

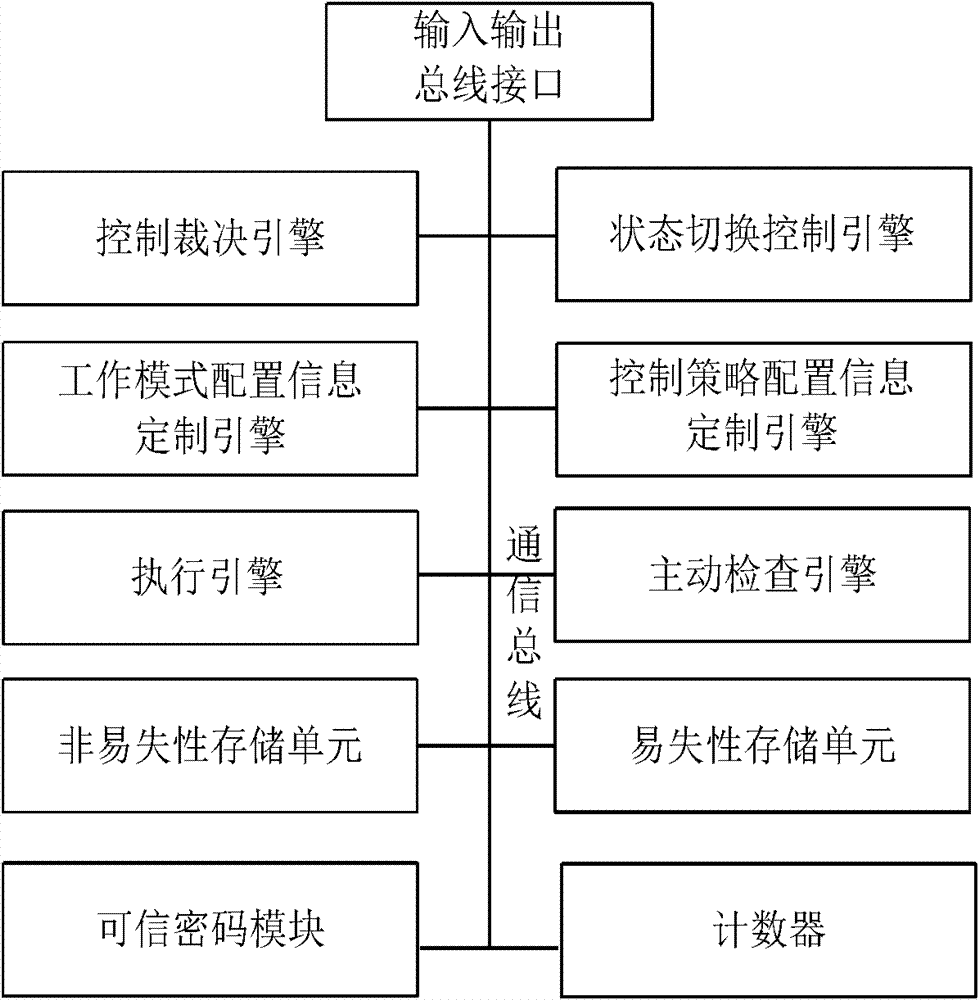

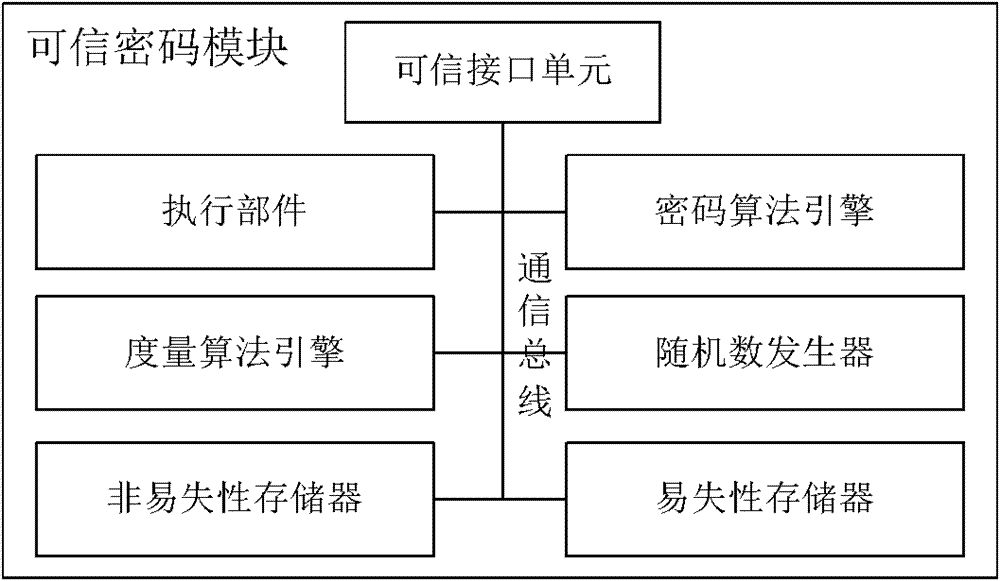

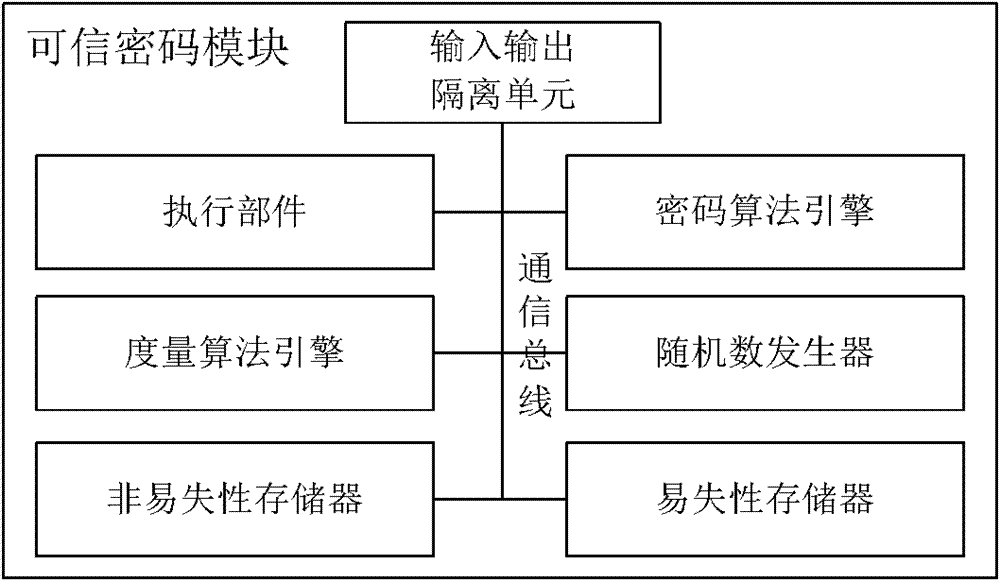

Credible platform and method for controlling hardware equipment by using same

Inactive | CN102063592B | Improve security assurance | Achieve isolation | Platform integrity maintenance | Work performance | Computer module

The invention relates to a credible platform and a method for controlling hardware equipment using it, in the computer field. The credible platform comprises the hardware equipment and a credible platform control module with an active control function. Hardware units such as an active measurement engine, a control ruling engine, a working-mode customization engine, and a credible control strategy configuration engine are arranged in the control module. They actively check the hardware equipment's working-mode configuration information, control strategy configuration information, firmware code, circuit working states, and so on. The platform combines identity legitimacy authentication and active control of credible hardware equipment, implemented with credible pipeline technology, with its active control and active checking functions. As a result, it can provide the platform's accessors with a security control system for credible hardware equipment that upper layers cannot bypass, even in an untrusted or low-credibility computing environment. This requires no modification of the computing platform's system structure and does not significantly reduce system performance.

Owner:BEIJING UNIV OF TECH
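The abstract says the control module actively checks firmware code and configuration before the hardware is used, but not how. A common way to implement such a check is to measure (hash) the firmware and compare it, together with the configuration, against reference values before granting access. The sketch below assumes SHA-256 measurements and a simple dictionary-based policy; `authorize_access` and every field name are hypothetical.

```python
import hashlib
import hmac


def measure(firmware: bytes) -> str:
    """Measurement = SHA-256 digest of the firmware image (assumed scheme)."""
    return hashlib.sha256(firmware).hexdigest()


def authorize_access(device: dict, reference: dict):
    """Check firmware, working mode, and control-policy version against reference
    values; access is granted only if every check passes."""
    checks = {
        # Constant-time comparison of the measured and expected digests.
        "firmware": hmac.compare_digest(measure(device["firmware"]),
                                        reference["firmware_sha256"]),
        "working_mode": device["working_mode"] in reference["allowed_modes"],
        "policy": device["policy_version"] == reference["policy_version"],
    }
    return all(checks.values()), checks
```

Returning the individual check results alongside the overall decision lets a control engine report which property failed, for example a modified firmware image.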

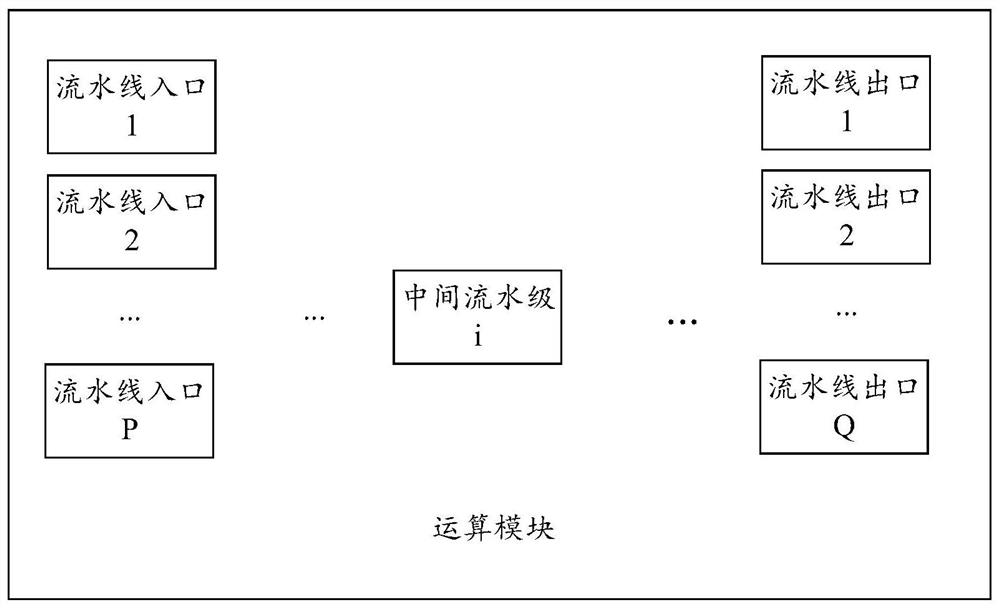

Operation module, pipeline optimization method, and related product

Pending | CN113918221A | Reduce computing latency | Improve computing efficiency | Concurrent instruction execution | Computer architecture | Engineering

The invention discloses an operation module, a pipeline optimization method, and a related product, applied to a combined processing device. The combined processing device comprises electronic equipment, an interface device, other processing devices, and a storage device; the electronic equipment can comprise one or more computing devices configured to execute the pipeline optimization method. According to the embodiments of the invention, computing resources can be saved.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

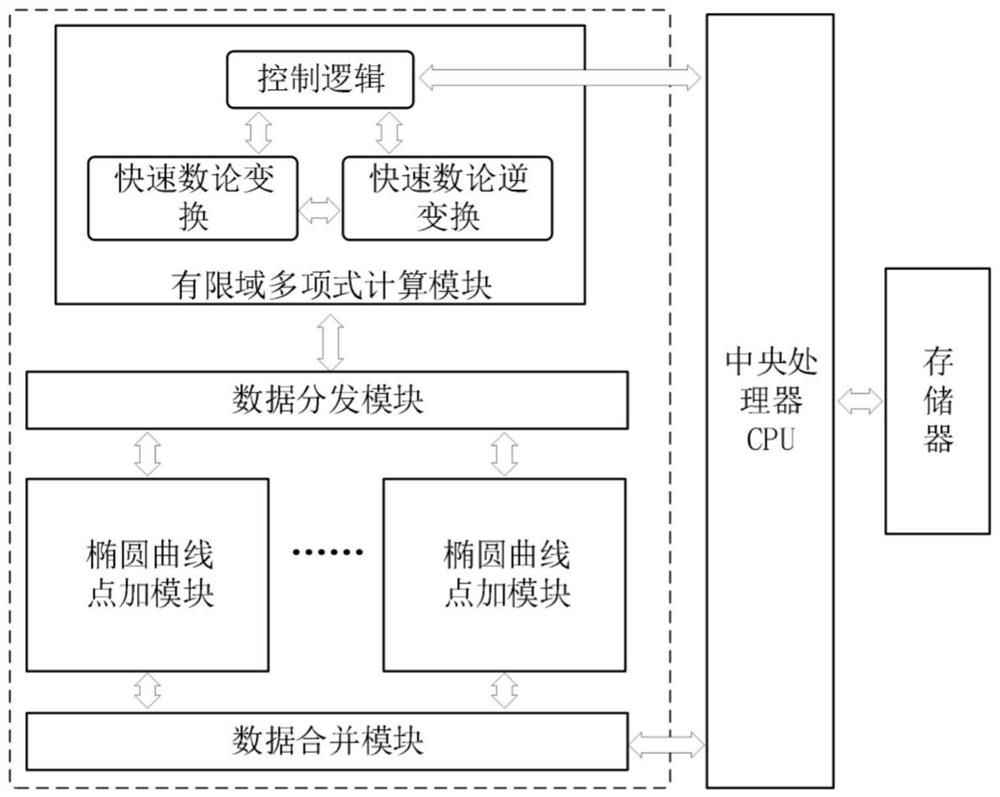

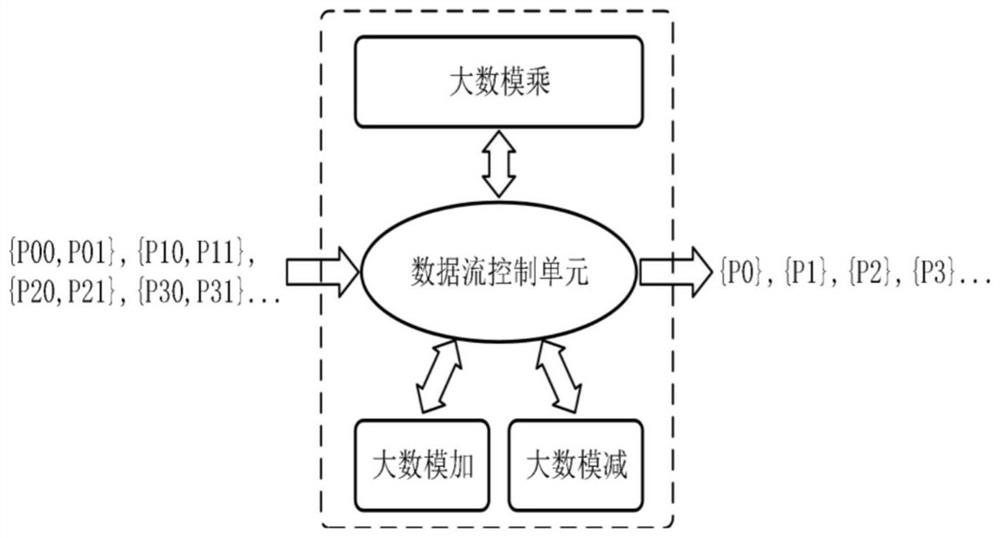

Efficient zero-knowledge proof accelerator and method

The invention relates to an efficient zero-knowledge proof accelerator that provides a high-performance, high-efficiency hardware carrier for zero-knowledge proof computation. The method adopts a fine-grained pipeline architecture for multi-scalar multiplication, allowing multiple elliptic curve point addition architectures to be integrated into one large-number modular multiplication hardware circuit without increasing chip area; that is, elliptic curve point additions can be pipelined using only a single large-number modular multiplication circuit. In addition, multiple such modular multiplication circuits are integrated so that multiple elliptic curve point-addition computations can be accelerated in parallel. Compared with the prior art, the method is therefore more flexible and suits ASICs and FPGAs of different scales.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
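Multi-scalar multiplication (MSM) computes the sum of k_i·P_i over many curve points, and nearly all of its cost is elliptic curve point additions. That is why a pipelined point-addition datapath pays off. The sketch below is a software illustration, not the patent's architecture. It assumes a toy curve y² = x³ + 7 over the prime field of size 10007 (far too small for real proofs), affine coordinates, and Pippenger's bucket method with a hypothetical window width `c`. It requires Python 3.8+ for `pow(x, -1, p)`. The many independent bucket additions are the kind of work a fine-grained hardware pipeline could keep in flight.

```python
P_FIELD = 10007        # toy prime, P_FIELD % 4 == 3 (assumption for illustration)
A_COEF, B_COEF = 0, 7  # toy curve y^2 = x^3 + 7


def point_add(p1, p2):
    """Affine point addition; None is the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P_FIELD == 0:
        return None                                   # P + (-P) = infinity
    if p1 == p2:                                      # doubling
        lam = (3 * x1 * x1 + A_COEF) * pow(2 * y1, -1, P_FIELD) % P_FIELD
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P_FIELD) % P_FIELD
    x3 = (lam * lam - x1 - x2) % P_FIELD
    return (x3, (lam * (x1 - x3) - y1) % P_FIELD)


def scalar_mul(k, pt):
    """Double-and-add."""
    acc, addend = None, pt
    while k:
        if k & 1:
            acc = point_add(acc, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return acc


def msm_naive(scalars, points):
    acc = None
    for k, pt in zip(scalars, points):
        acc = point_add(acc, scalar_mul(k, pt))
    return acc


def msm_pippenger(scalars, points, c=4):
    """Bucket method: per c-bit window, add points into buckets, then combine."""
    windows = (max(k.bit_length() for k in scalars) + c - 1) // c
    result = None
    for w in reversed(range(windows)):
        for _ in range(c):                            # shift result left by c bits
            result = point_add(result, result)
        buckets = [None] * (1 << c)
        for k, pt in zip(scalars, points):            # independent additions
            idx = (k >> (w * c)) & ((1 << c) - 1)
            if idx:
                buckets[idx] = point_add(buckets[idx], pt)
        running = window_sum = None                   # sum of i * bucket[i]
        for i in range(len(buckets) - 1, 0, -1):
            running = point_add(running, buckets[i])
            window_sum = point_add(window_sum, running)
        result = point_add(result, window_sum)
    return result


def find_points(n):
    """First n affine points found by scanning x (sqrt via p % 4 == 3)."""
    pts, x = [], 1
    while len(pts) < n:
        rhs = (x ** 3 + A_COEF * x + B_COEF) % P_FIELD
        y = pow(rhs, (P_FIELD + 1) // 4, P_FIELD)
        if y * y % P_FIELD == rhs:
            pts.append((x, y))
        x += 1
    return pts
```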

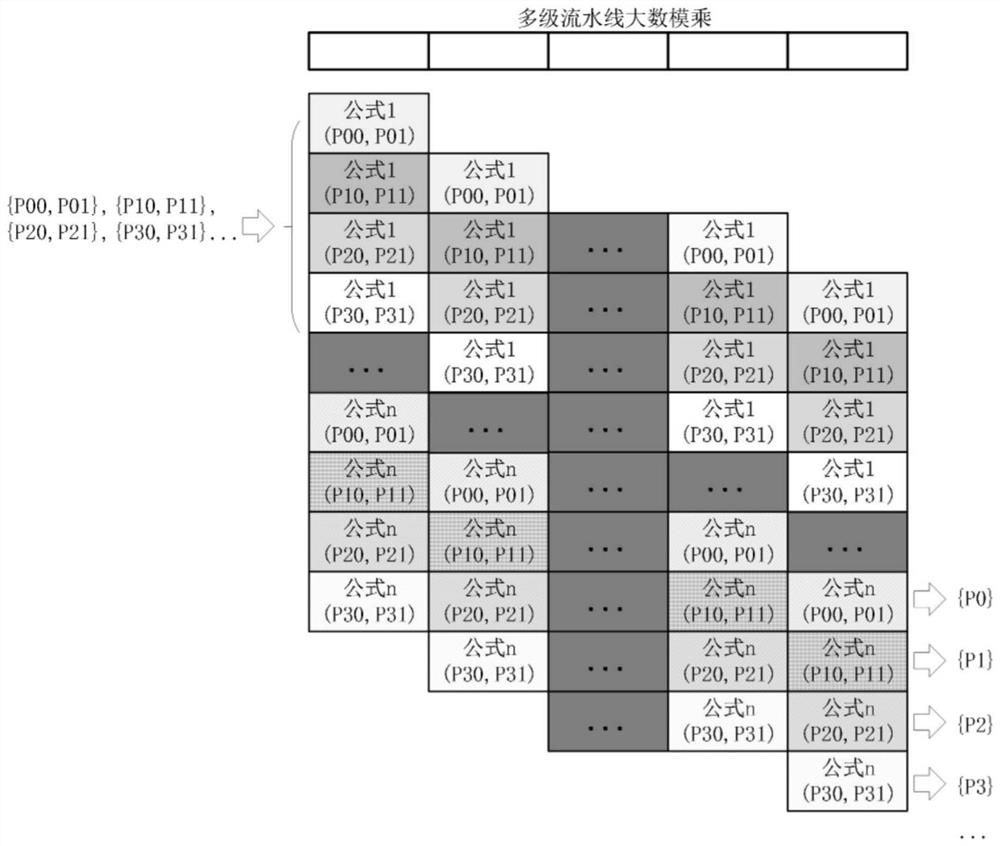

Parallel pipeline computing device of CNC interpolation

Active | CN102023840A | High precision results | High speed computing | Digital data processing details | Data memory | Reconfigurable computing

The invention provides a parallel pipeline computing device for CNC interpolation, comprising a data memory and a parallel/pipeline computing member CU3B formed by a plurality of computing units CU3. Each computing unit CU3 comprises six adders, two 1-bit right shifters, two 2-bit right shifters, and one 3-bit right shifter. The four left data outputs β0l, β1l, β2l, and β3l and the four right data outputs β0r, β1r, β2r, and β3r of a preceding computing unit CU3 are connected, respectively, to the four data inputs β0, β1, β2, and β3 of the next two computing units CU3, so that 2^n - 1 computing units CU3 form the parallel/pipeline computing member CU3B. The β(0.5) data output of each computing unit CU3 is connected to the data memory. Compared with the prior art, the device computes at high speed with accurate results, suits chip-level reconfigurable parallel pipeline computation, and meets evolving industrial requirements.

Owner:柏安美创新科技 (广州) 有限公司
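The abstract lists only each unit's resources: six adders, right shifters by 1, 2, and 3 bits, four β inputs, left and right β outputs, and a β(0.5) output. That inventory matches one step of de Casteljau subdivision of a cubic Bézier segment at t = 1/2, where division by 2, 4, and 8 becomes a right shift. The sketch below assumes that reading (the abstract does not name the curve type) and uses integer control points; `cu3`, `interpolate`, and `bezier_point` are hypothetical names. Feeding each unit's left and right outputs to two child units gives a binary tree of 2^n - 1 units whose β(0.5) outputs, read in order, are the curve points at t = i/2^n.

```python
from fractions import Fraction


def cu3(b0, b1, b2, b3):
    """One CU3-style unit (our reading): de Casteljau subdivision at t = 1/2
    using six additions, two >>1, two >>2, and one >>3."""
    s01, s12, s23 = b0 + b1, b1 + b2, b2 + b3   # adders 1-3
    s012, s123 = s01 + s12, s12 + s23           # adders 4-5
    s0123 = s012 + s123                         # adder 6
    mid = s0123 >> 3                            # beta(0.5): curve point at t = 1/2
    left = (b0, s01 >> 1, s012 >> 2, mid)       # control points of the [0, 1/2] half
    right = (mid, s123 >> 2, s23 >> 1, b3)      # control points of the [1/2, 1] half
    return left, right, mid


def interpolate(ctrl, depth):
    """Binary tree of 2**depth - 1 units; returns midpoints in parameter order."""
    if depth == 0:
        return []
    left, right, mid = cu3(*ctrl)
    return interpolate(left, depth - 1) + [mid] + interpolate(right, depth - 1)


def bezier_point(ctrl, t):
    """Exact cubic Bezier evaluation, used only as a reference check."""
    b0, b1, b2, b3 = ctrl
    u = 1 - t
    return u ** 3 * b0 + 3 * u ** 2 * t * b1 + 3 * u * t ** 2 * b2 + t ** 3 * b3
```

Integer right shifts truncate, so exact results need control points that are multiples of 8^n for a tree of depth n; a real CNC interpolator would choose a fixed-point format and apply the unit per axis (x, y, z).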

© 2025 PatSnap. All rights reserved.