High-performance pipeline parallel deep neural network training

Deep neural network (DNN) training technology, applied in the field of high-performance pipeline-parallel DNN training, that addresses problems such as the performance breakdown of earlier parallelization methods and achieves the effect of reducing the utilization of computing resources.

Embodiment Construction

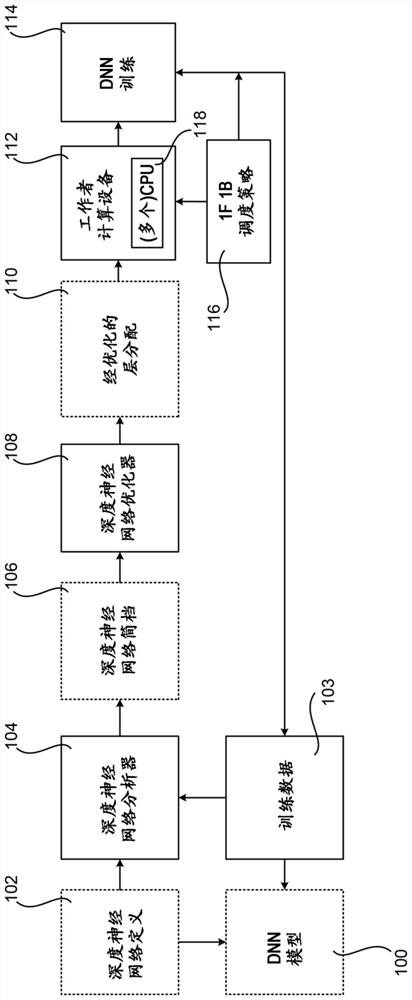

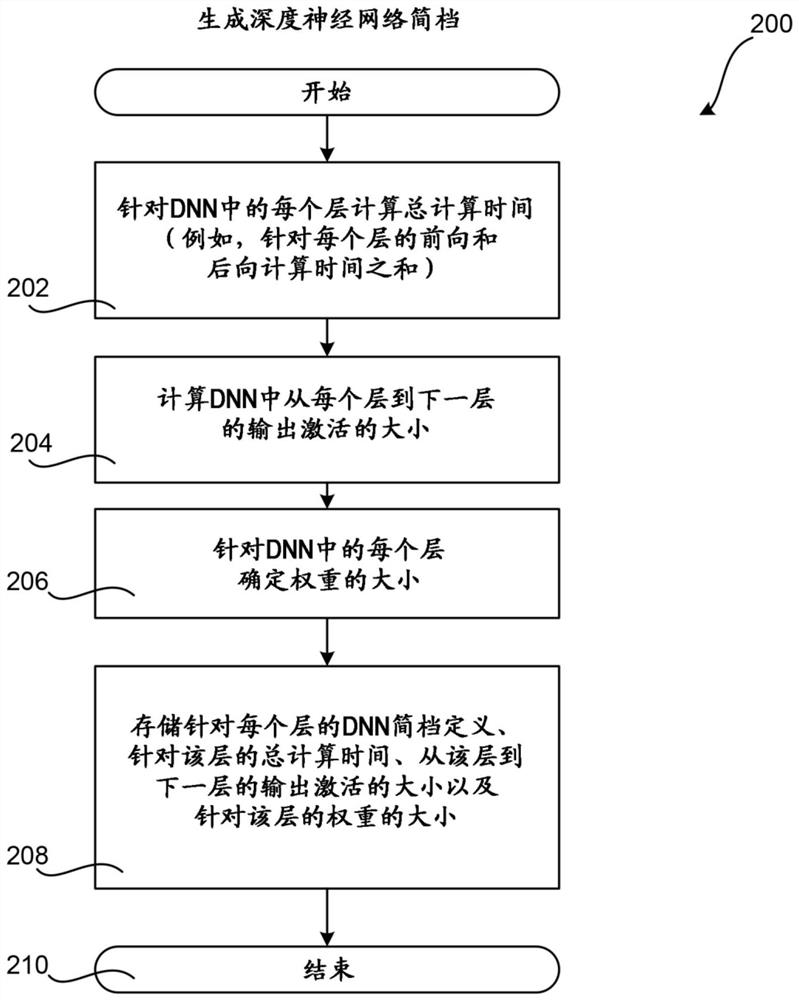

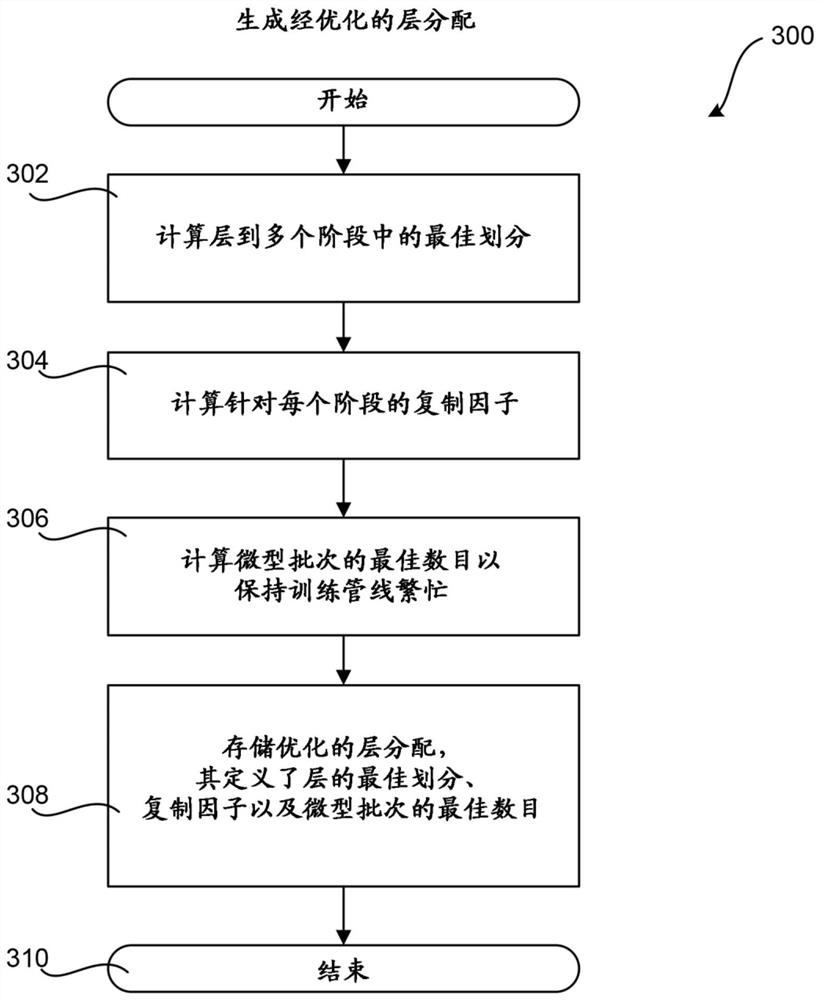

[0023] The following detailed description relates to techniques for high-performance pipeline-parallel DNN model training. Among other technical benefits, the disclosed techniques can eliminate the performance impact caused by previous parallelization techniques when training large DNN models or when limited network bandwidth induces a high communication-to-computation ratio. The disclosed techniques can also partition the layers of a DNN model among pipeline stages to balance work and minimize network communication, and can efficiently schedule the forward and backward passes of a bidirectional DNN training pipeline. These and other aspects of the disclosed technology can reduce utilization of various types of computing resources, including but not limited to memory, processor cycles, network bandwidth, and power. Other technical benefits not specifically identified herein may also be realized through implementations of the disclosed technology.
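To make the idea of balancing work across pipeline stages concrete, the following is a minimal sketch, not the partitioning method of the disclosure itself, which this excerpt does not spell out. It assumes each layer has a known per-minibatch compute time, that stages hold contiguous runs of layers, and that the goal is only to minimize the time of the slowest stage; communication cost is ignored. The function name `partition_layers` and the example timings are hypothetical.

```python
from functools import lru_cache
from typing import List, Tuple


def partition_layers(layer_times: List[float],
                     num_stages: int) -> Tuple[float, List[List[int]]]:
    """Split layers into contiguous stages, minimizing the slowest stage's time.

    Assumption: layer_times[i] is a measured compute time for layer i.
    Communication between stages is NOT modeled in this sketch.
    """
    n = len(layer_times)
    if not 1 <= num_stages <= n:
        raise ValueError("num_stages must be between 1 and the number of layers")

    # prefix[i] holds the total time of layers 0 .. i-1.
    prefix = [0.0]
    for t in layer_times:
        prefix.append(prefix[-1] + t)

    @lru_cache(maxsize=None)
    def best(end: int, stages: int) -> Tuple[float, Tuple[int, ...]]:
        # Best split of layers [0, end) into `stages` non-empty stages.
        # Returns (bottleneck time, first layer index of each stage).
        if stages == 1:
            return prefix[end], (0,)
        result: Tuple[float, Tuple[int, ...]] = (float("inf"), ())
        for cut in range(stages - 1, end):
            left_cost, left_starts = best(cut, stages - 1)
            cost = max(left_cost, prefix[end] - prefix[cut])
            if cost < result[0]:
                result = (cost, left_starts + (cut,))
        return result

    bottleneck, starts = best(n, num_stages)
    bounds = list(starts) + [n]
    stages = [list(range(bounds[i], bounds[i + 1])) for i in range(num_stages)]
    return bottleneck, stages


if __name__ == "__main__":
    # Hypothetical per-layer times for a six-layer model, split over three stages.
    bottleneck, stages = partition_layers([4, 2, 2, 4, 6, 6], 3)
    print(bottleneck, stages)
```

In a pipeline, steady-state throughput is limited by the slowest stage, which is why the sketch minimizes the bottleneck rather than, say, the variance of stage times. A fuller treatment would also add the cost of transferring activations and gradients across each stage boundary to the objective.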

[0024] Before describing the disclos...
