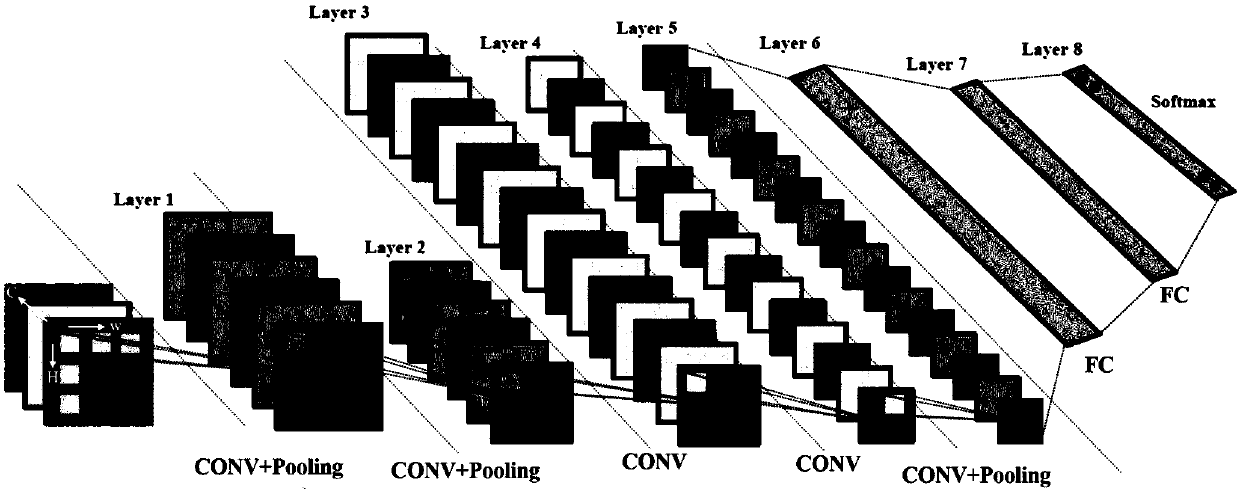

Neural network calculation special circuit and related calculation platform and implementation method thereof

A technology relating to neural networks and special circuits, applied in the field of special circuits for neural network calculation, which addresses problems such as failing to meet practical requirements and consuming large amounts of computing and memory resources.


AI Technical Summary

Problems solved by technology

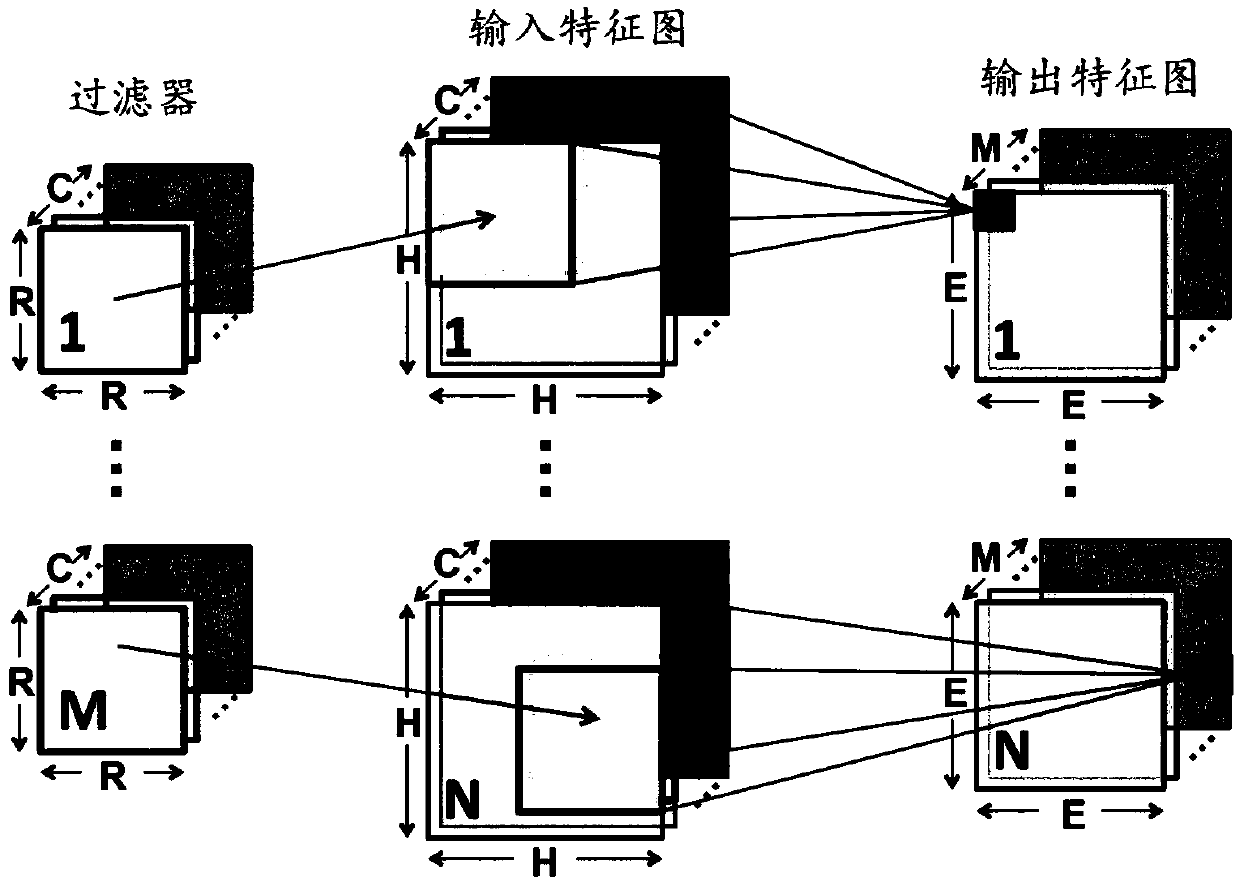

Method used

Image

Examples

example 1

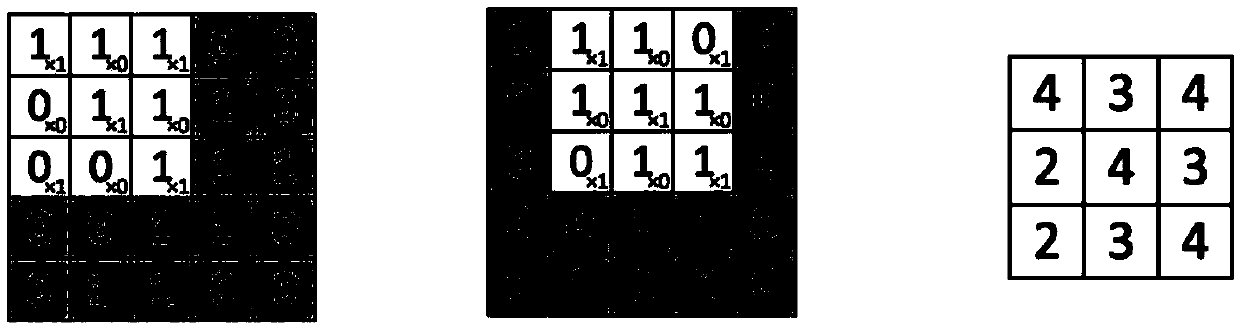

[0094] Example 1: Take a separable convolution layer of the Xception network as an example. A separable convolution begins with a depthwise convolution. In this layer, the number of channels is 128, the convolution kernel size is 3x3, and the stride is 1x1.

[0095] The depthwise convolution here can be implemented using the special circuit of the present invention. First, instructions are sent to the instruction control module; the number of channels, convolution kernel size, stride, data source address, result address, and other information are configured to each module; the instruction type is configured as depthwise convolution; and the instruction starts to execute. The data reading module reads the image, weight, and bias data from the cache as the instruction requires, the data calculation module performs the convolution operation according to the size of the convolution kernel, and the data write-back module writes the calculation result back to the on-chip cach...
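As a software reference for the data flow described in [0095], the following is a minimal sketch and not the circuit itself. The names `DepthwiseConvInstr` and `depthwise_conv` are hypothetical, the instruction fields only mirror the configuration items listed in the paragraph (the source and result addresses are omitted because the sketch works on in-memory lists), and the absence of padding is an assumption, since the text does not state how borders are handled.

```python
from dataclasses import dataclass


@dataclass
class DepthwiseConvInstr:
    # Hypothetical instruction fields, named after the items listed in [0095].
    channels: int
    kernel: tuple  # (kernel_height, kernel_width)
    stride: tuple  # (stride_height, stride_width)


def depthwise_conv(image, weights, bias, instr):
    """Reference depthwise convolution.

    image:   [C][H][W] input feature map
    weights: [C][kh][kw], one kernel per channel
    bias:    [C]
    No padding is applied (an assumption, not stated in the source).
    """
    kh, kw = instr.kernel
    sh, sw = instr.stride
    out = []
    for c in range(instr.channels):
        h, w = len(image[c]), len(image[c][0])
        out_h = (h - kh) // sh + 1
        out_w = (w - kw) // sw + 1
        plane = []
        for i in range(out_h):
            row = []
            for j in range(out_w):
                # Each channel is convolved only with its own kernel.
                acc = bias[c]
                for di in range(kh):
                    for dj in range(kw):
                        acc += image[c][i * sh + di][j * sw + dj] * weights[c][di][dj]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


# Layer parameters from Example 1: 128 channels, 3x3 kernel, 1x1 stride.
instr = DepthwiseConvInstr(channels=128, kernel=(3, 3), stride=(1, 1))
image = [[[1] * 5 for _ in range(5)] for _ in range(128)]    # toy 5x5 input
weights = [[[1] * 3 for _ in range(3)] for _ in range(128)]
bias = [0] * 128
result = depthwise_conv(image, weights, bias, instr)
print(len(result), result[0])  # 128 [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
```

The three nested stages of the loop correspond loosely to the modules named in the text: indexing into `image`, `weights`, and `bias` stands in for the data reading module, the accumulation for the data calculation module, and appending to `out` for the data write-back module.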

example 2

[0096] Example 2: Take a max pooling layer of Xception as an example. This layer performs a maximum pooling operation. The pooling size is 3x3, the stride is 2x2, and the number of channels is the same as that of the previous layer (128). This layer can be implemented using the special circuit of the present invention. First, instructions are sent to the instruction control module; the number of channels, pooling size, stride, data source address, result address, and other information are configured to each module; the instruction type is configured as maximum pooling; and the instruction starts to execute. The data reading module reads the image data from the cache according to the instruction and sends it to the calculation module. The calculation module takes the maximum value of the input data according to the pooling size and sends the result data to the data write-back module. The data write-back module writes the calculation results back to the on-chip cache, and t...
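The pooling step in [0096] can likewise be sketched in software. This is an illustrative reference model under stated assumptions, not the patented circuit: the function name `max_pool` is hypothetical, and windows that would extend past the input border are simply dropped, because the text does not say how borders are treated.

```python
def max_pool(image, pool, stride):
    """Reference max pooling over an [C][H][W] feature map.

    pool:   (pool_height, pool_width)
    stride: (stride_height, stride_width)
    Windows that do not fit inside the input are skipped (an assumption).
    """
    ph, pw = pool
    sh, sw = stride
    out = []
    for plane in image:
        h, w = len(plane), len(plane[0])
        out_h = (h - ph) // sh + 1
        out_w = (w - pw) // sw + 1
        pooled = [
            [
                # Take the maximum of the values inside the current window.
                max(
                    plane[i * sh + di][j * sw + dj]
                    for di in range(ph)
                    for dj in range(pw)
                )
                for j in range(out_w)
            ]
            for i in range(out_h)
        ]
        out.append(pooled)
    return out


# Pooling parameters from Example 2: 3x3 window, 2x2 stride.
# Toy single-channel 5x5 input whose value at (r, c) is r * 5 + c.
plane = [[r * 5 + c for c in range(5)] for r in range(5)]
pooled = max_pool([plane], pool=(3, 3), stride=(2, 2))
print(pooled)  # [[[12, 14], [22, 24]]]
```

With the 128-channel layer of the example, the same call would be made with a 128-entry `image` list; channels are processed independently, so the channel count only changes the length of the outer loop.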

