48 results about how to "Reduce computing latency" patented technology

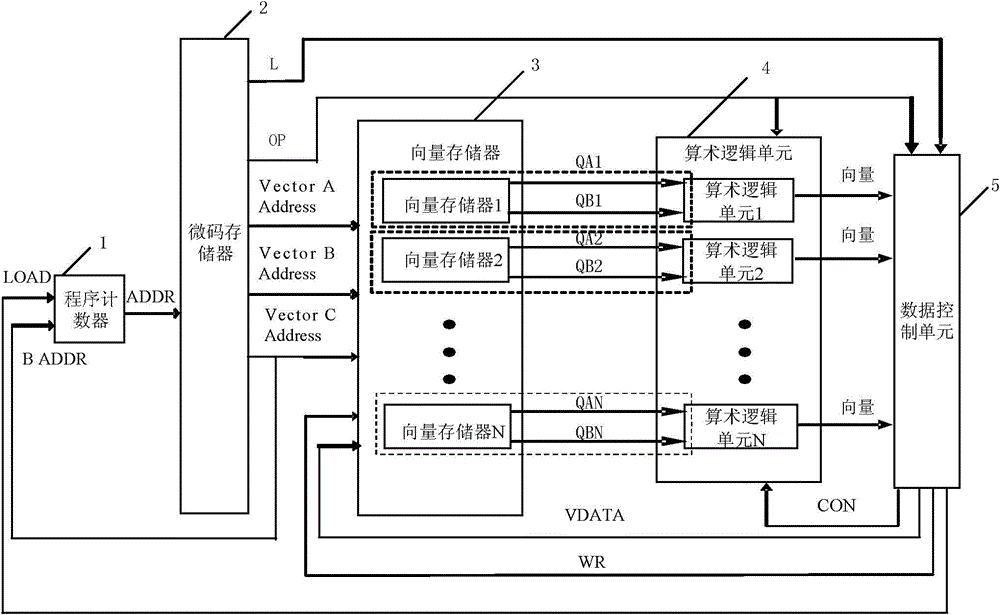

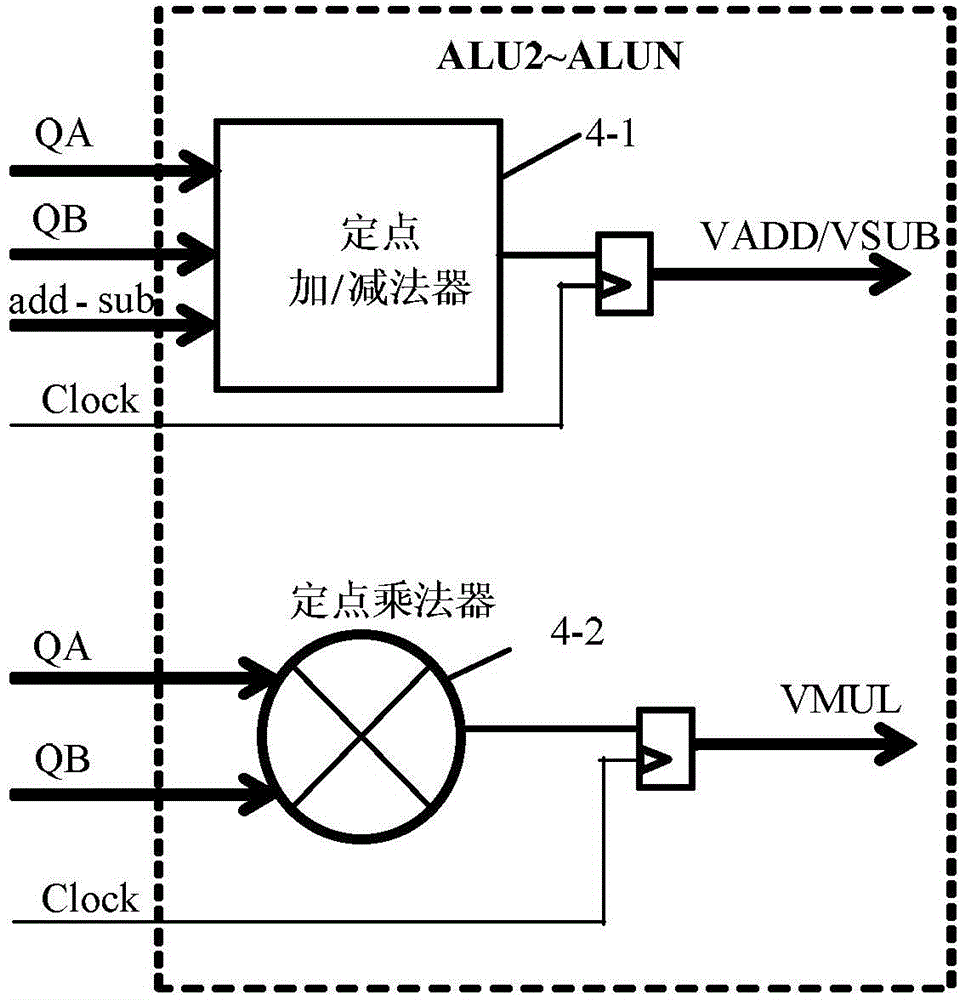

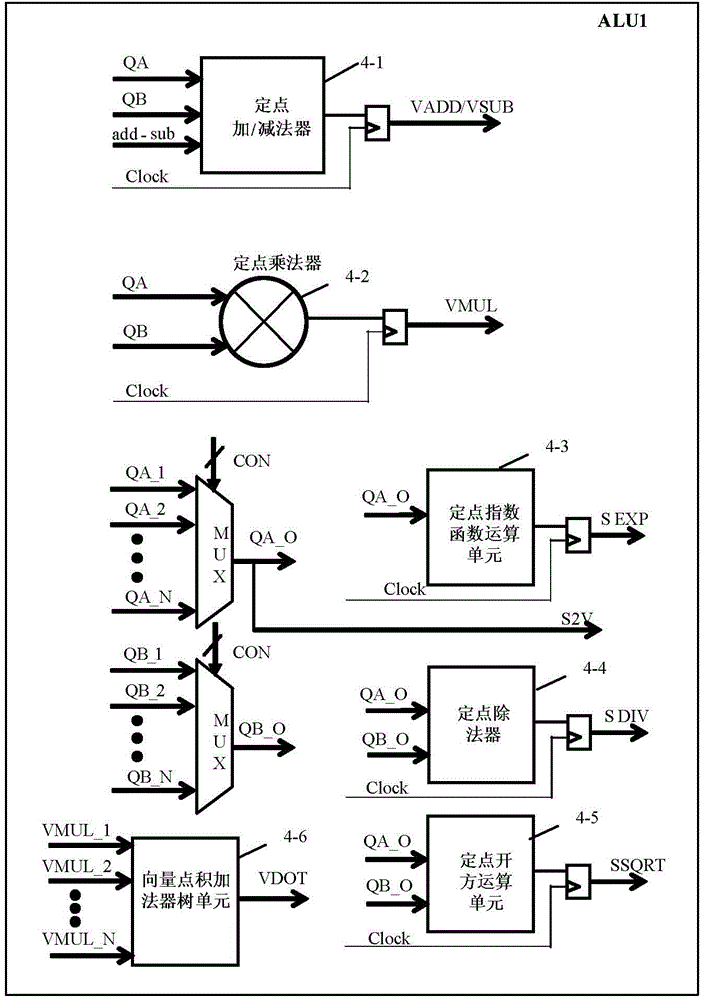

Fixed point vector processor and vector data access controlling method thereof

Inactive | CN104699458A | Improve computing performance | Flexible configuration | Machine execution arrangements | Instruction set | Vector processor

The invention discloses a fixed-point vector processor and a vector data access control method thereof. It relates to a vector processor used for online time series prediction and aims to solve the problems that an existing vector processor, which cannot be optimized for a specific method, has poor universality and cannot satisfy the requirements of online computation. The fixed-point vector processor comprises a program counter, a microcode memory, a vector memory, an arithmetic logic unit (ALU) and a data control unit. The signal processing procedures of the program counter, the microcode memory, the vector memory, the ALU and the data control unit together form a complete fixed-point vector processing procedure. Through the ALU design, the ALU structure of each data path can be changed flexibly according to computation needs, so that the instruction set can be configured flexibly. Accordingly, the fixed-point vector processor and the vector data access control method are applicable to occasions that require complex computation.

Owner:HARBIN INST OF TECH

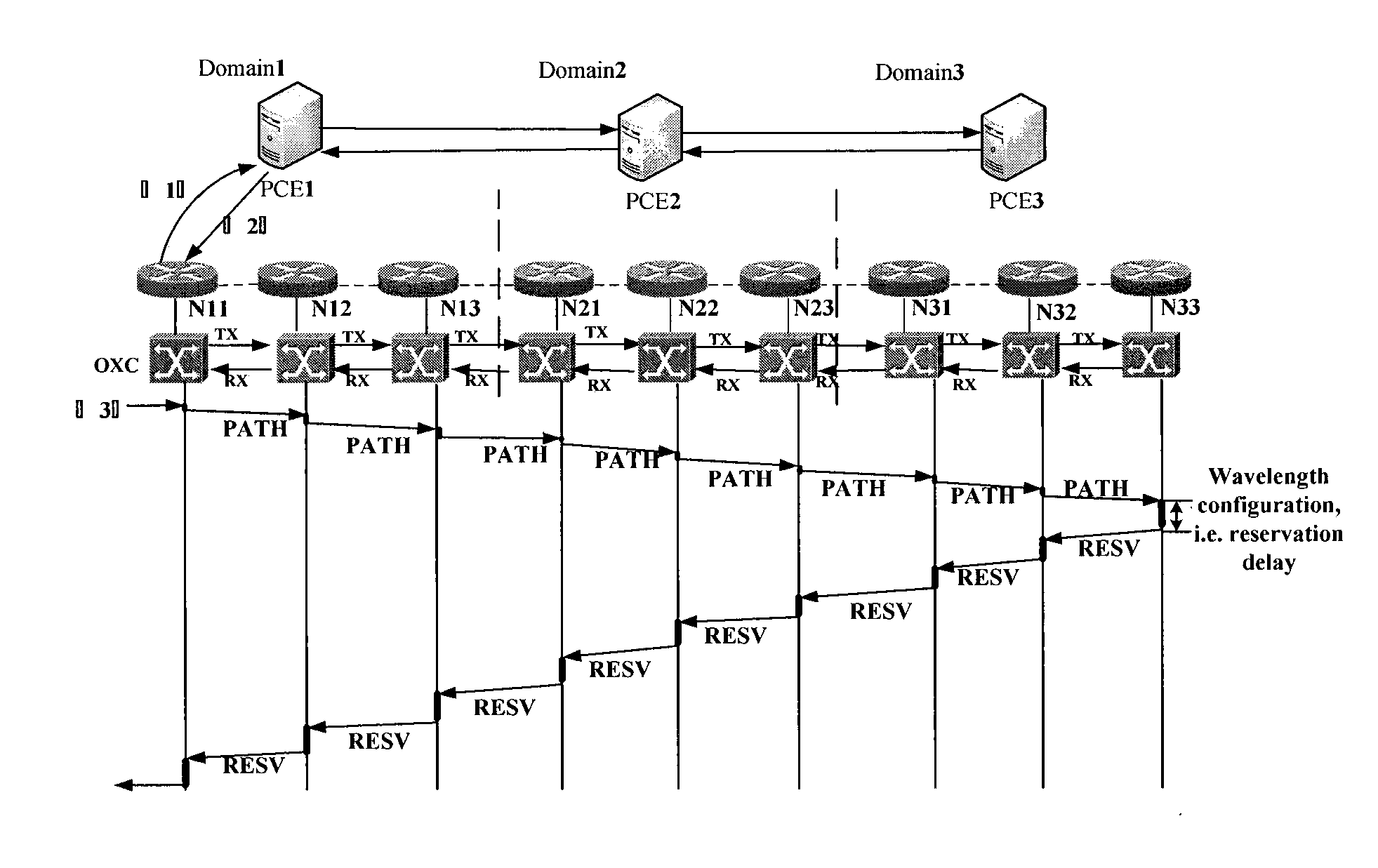

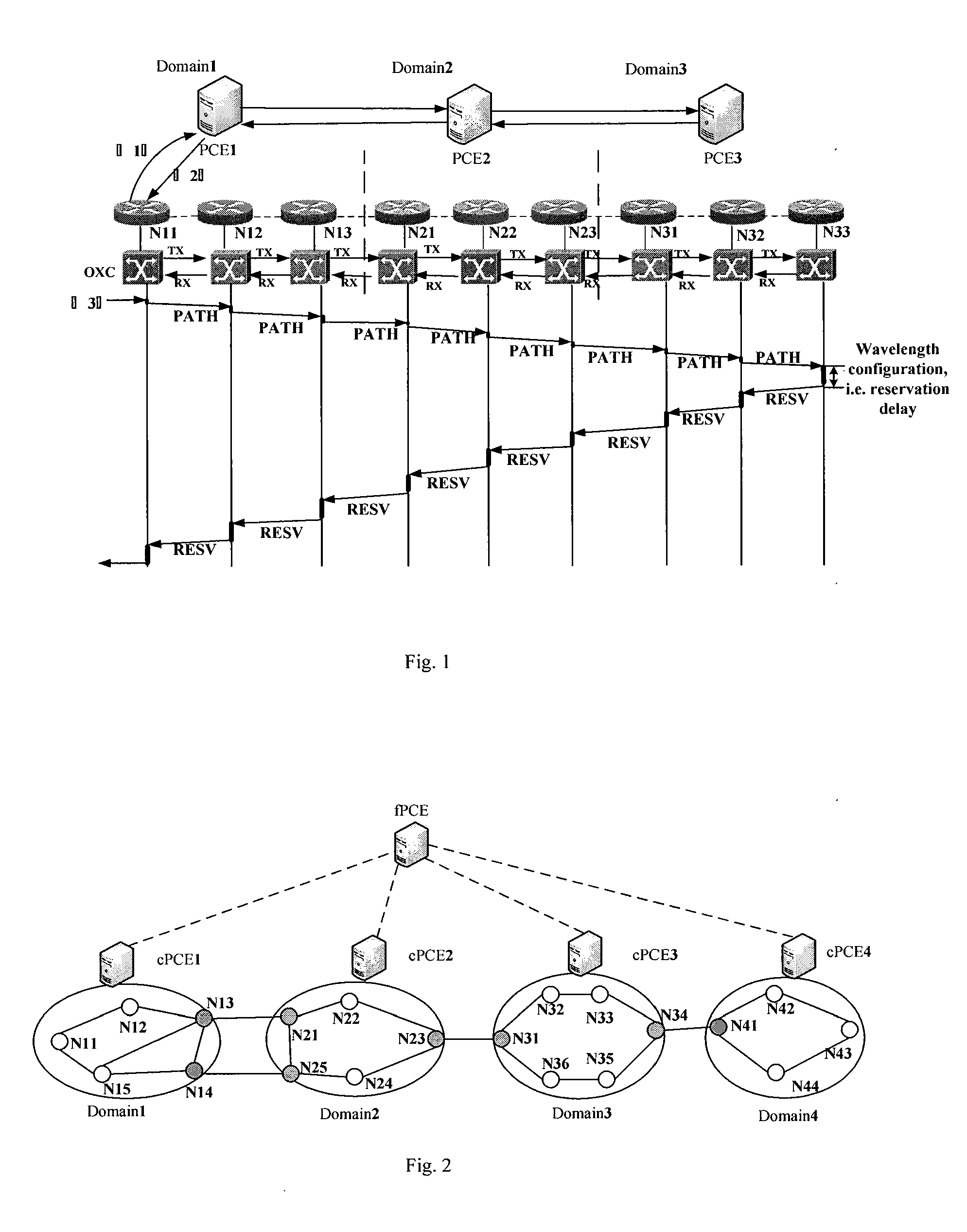

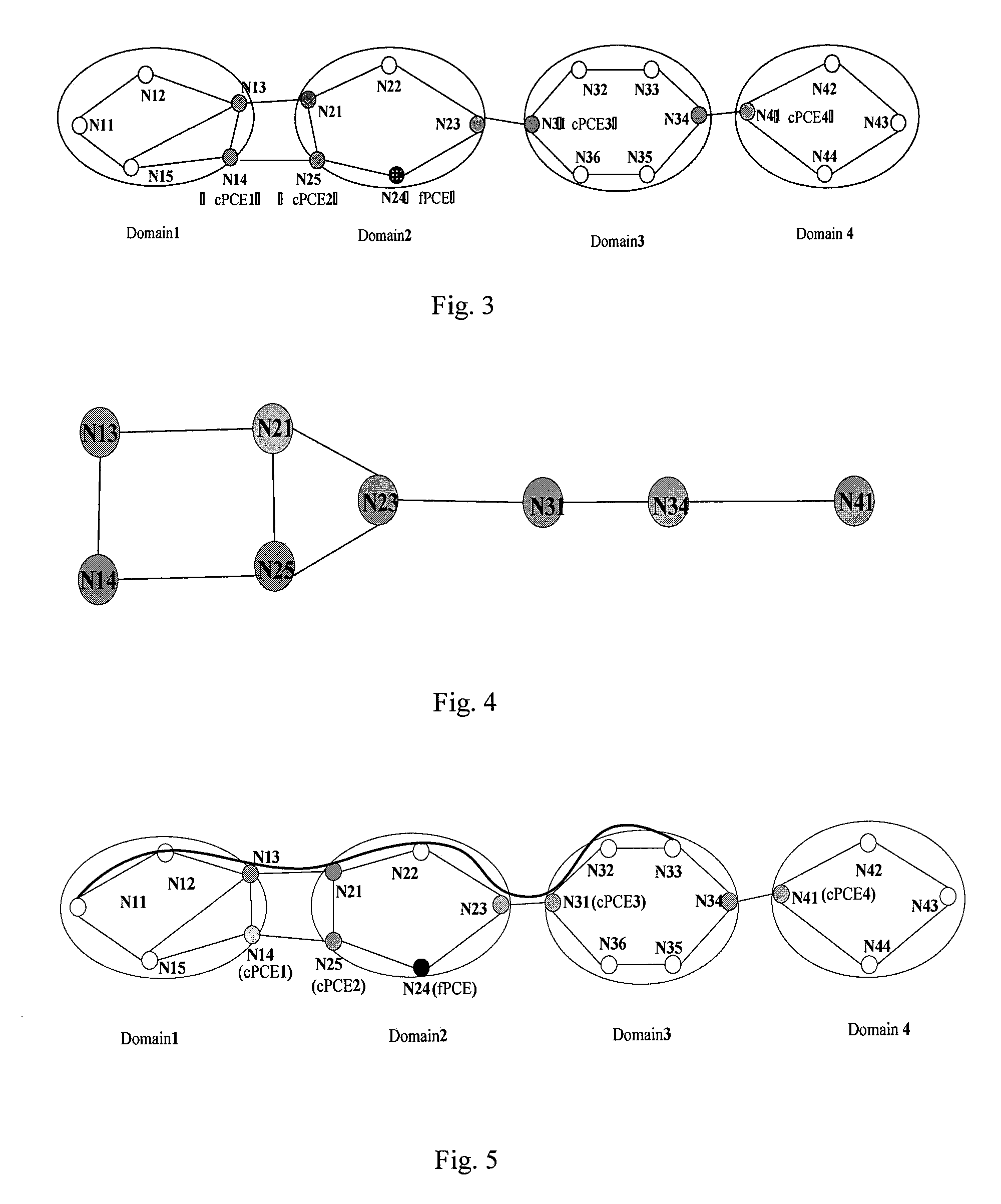

Method for Establishing an Inter-Domain Path that Satisfies Wavelength Continuity Constraint

Inactive | US20120308225A1 | Improve resource utilization | Reduce computing latency | Transmission monitoring | Optical multiplex | NODAL | Resource utilization

The present invention provides a method for establishing an inter-domain path that satisfies the wavelength continuity constraint (WCC). The fPCE stores a virtual topology composed of the border nodes of all domains. The invention uses a parallel inter-domain path establishment method to decrease the influence of the WCC. Compared with the sequential processing of the prior art, it improves resource utilization and decreases computation delay.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

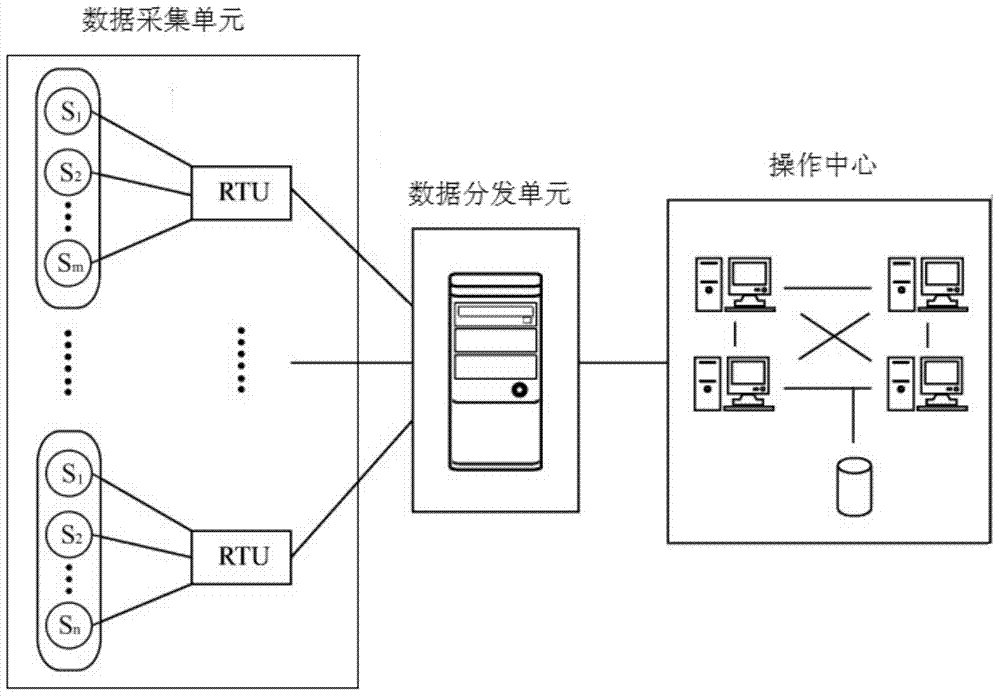

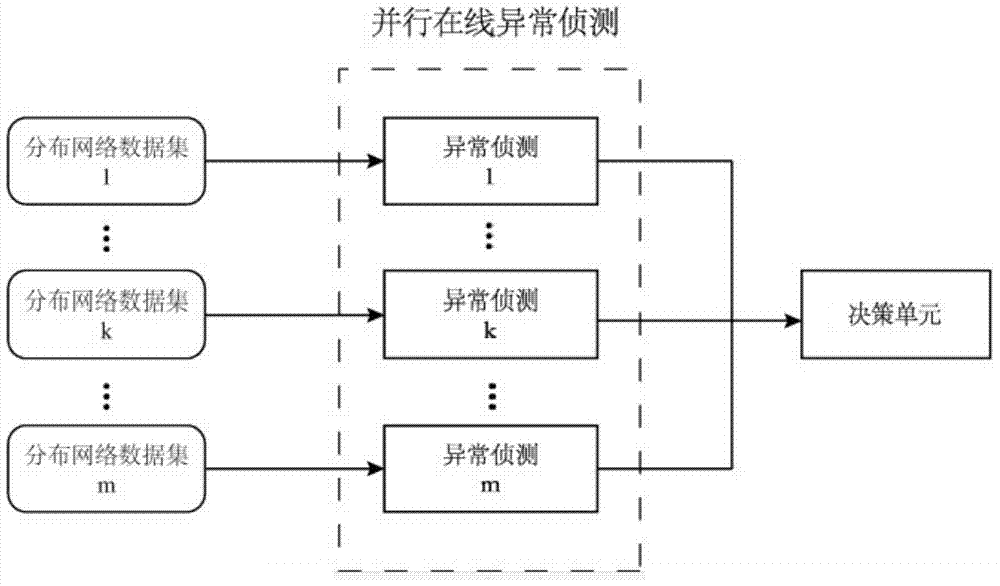

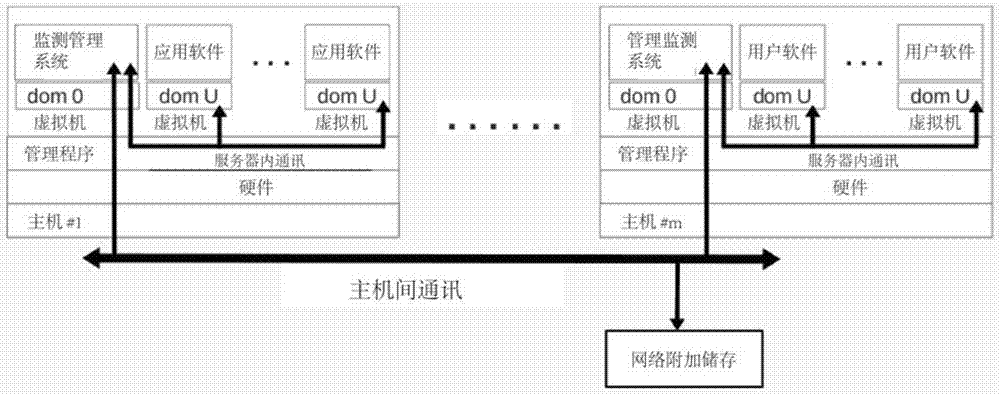

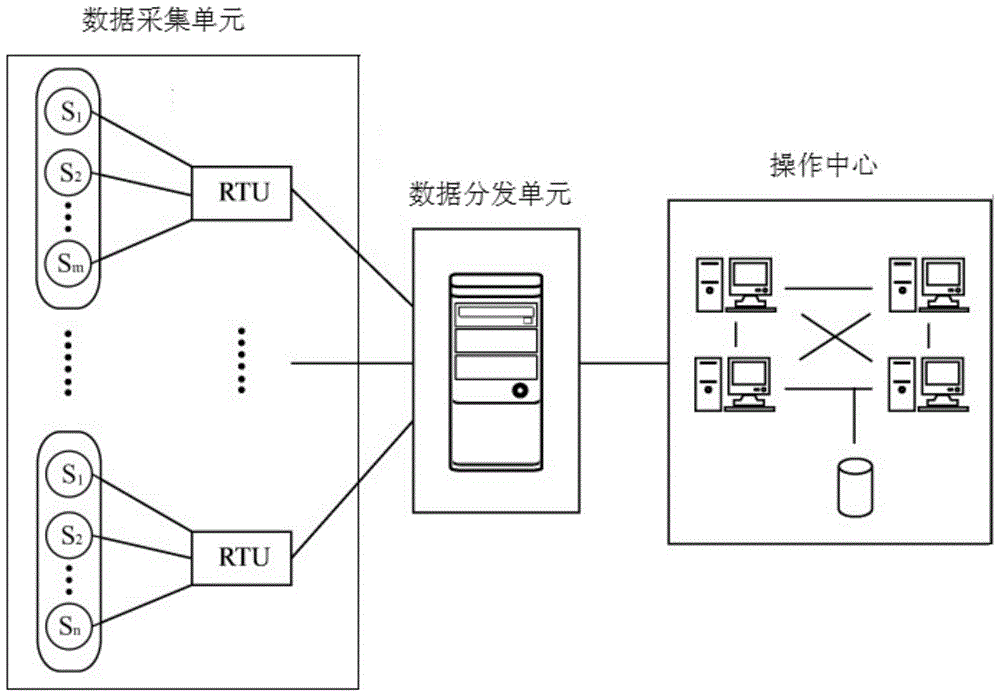

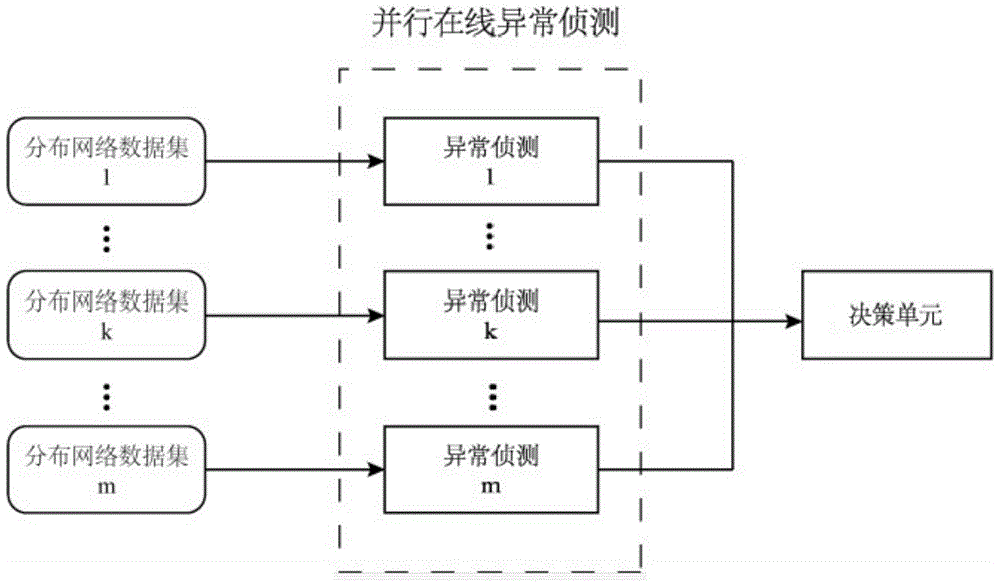

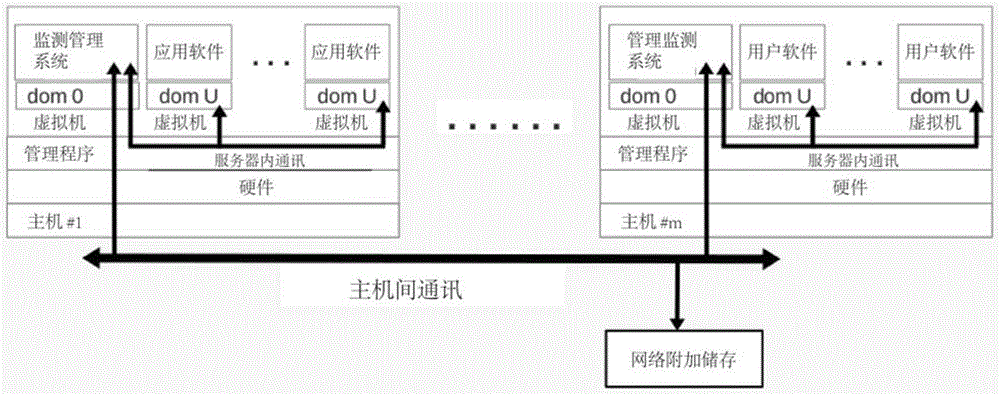

Online pipe network anomaly detection system based on machine learning

Active | CN103580960A | Improve exception recognition rate | Save human effort | Data switching networks | Real-time data | Data mining

The invention discloses an online pipe network anomaly detection system based on machine learning. The online pipe network anomaly detection system comprises a data collection unit, a data distribution unit and a plurality of anomaly detection units. The data collection unit is used for collecting real-time data of an online pipe network, merging the real-time data according to position areas and grouping the real-time data into different data packages. The data distribution unit is used for receiving the data packages, extracting data elements from the data packages and dividing the data packages into a plurality of data subsets after formatting the data packages. The anomaly detection units are used for receiving the data subsets in a one-to-one correspondence mode and predicting anomalies in the data subsets based on a semi-supervised machine learning framework. The anomaly detection units can process data in parallel, and data can be transmitted among the anomaly detection units through MPI. The online pipe network anomaly detection system can meet the requirements of online machine-learning-based anomaly detection units for usability of a server, and avoids introducing extra hardware that stands by in an idle state.

Owner:FOSHAN LUOSIXUN ENVIRONMENTAL PROTECTION TECH

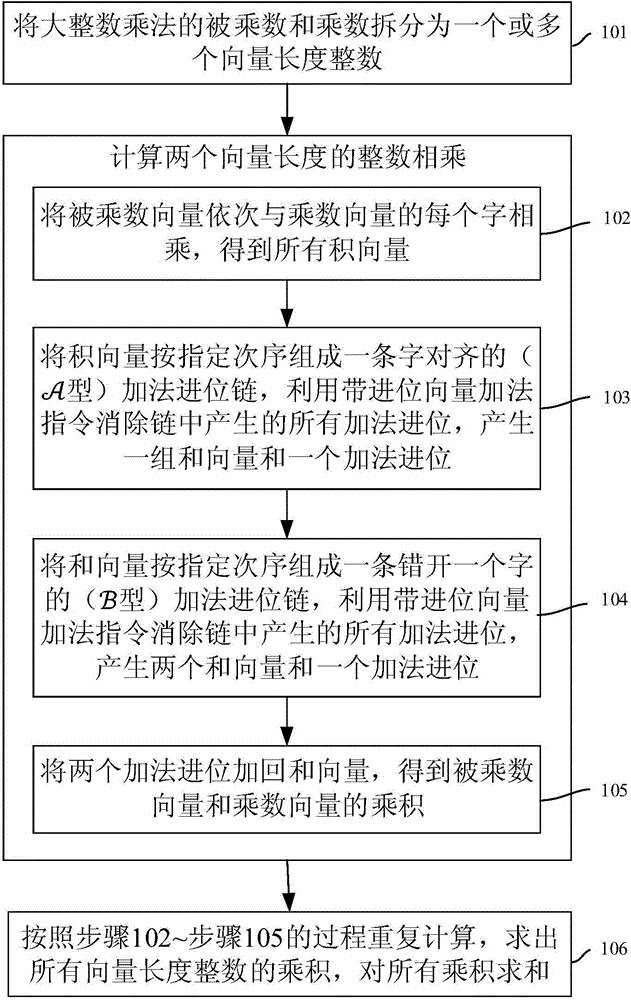

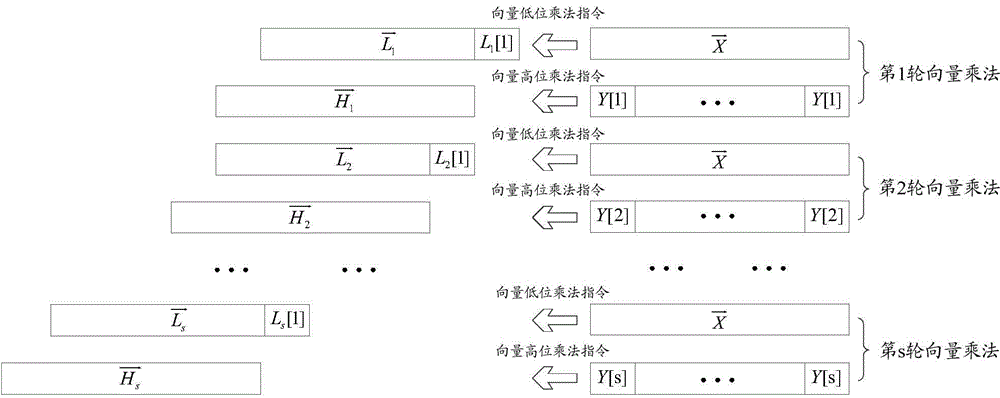

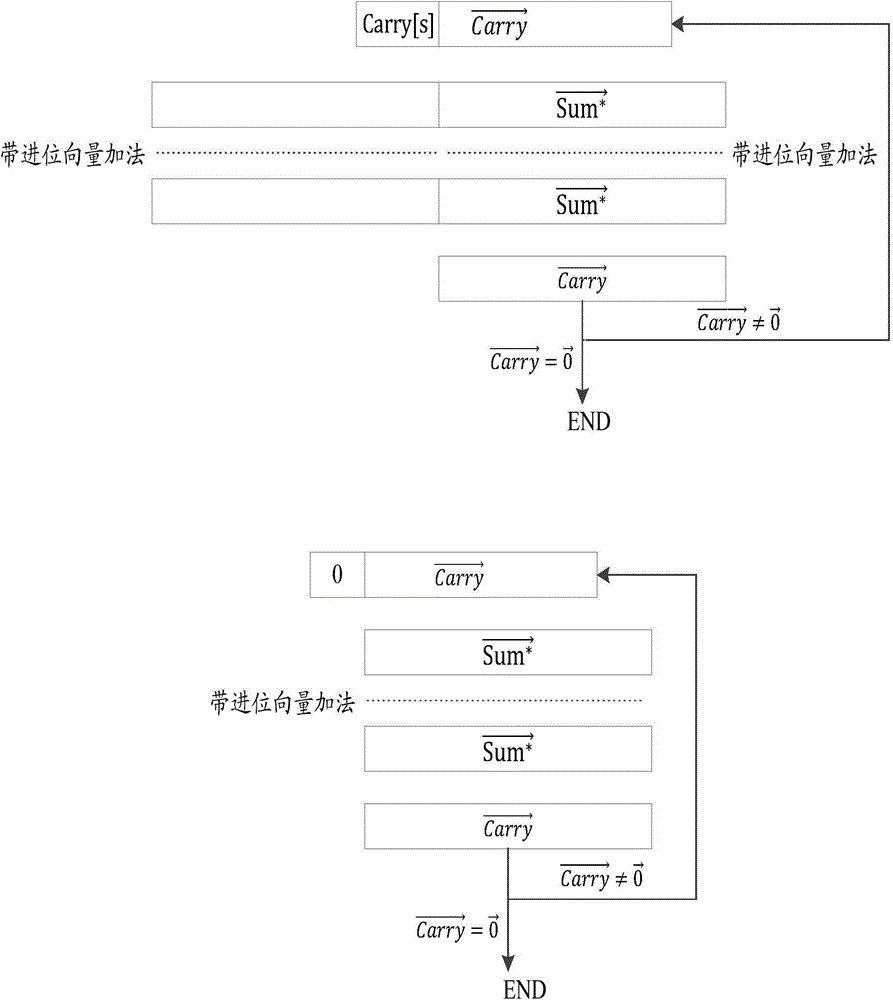

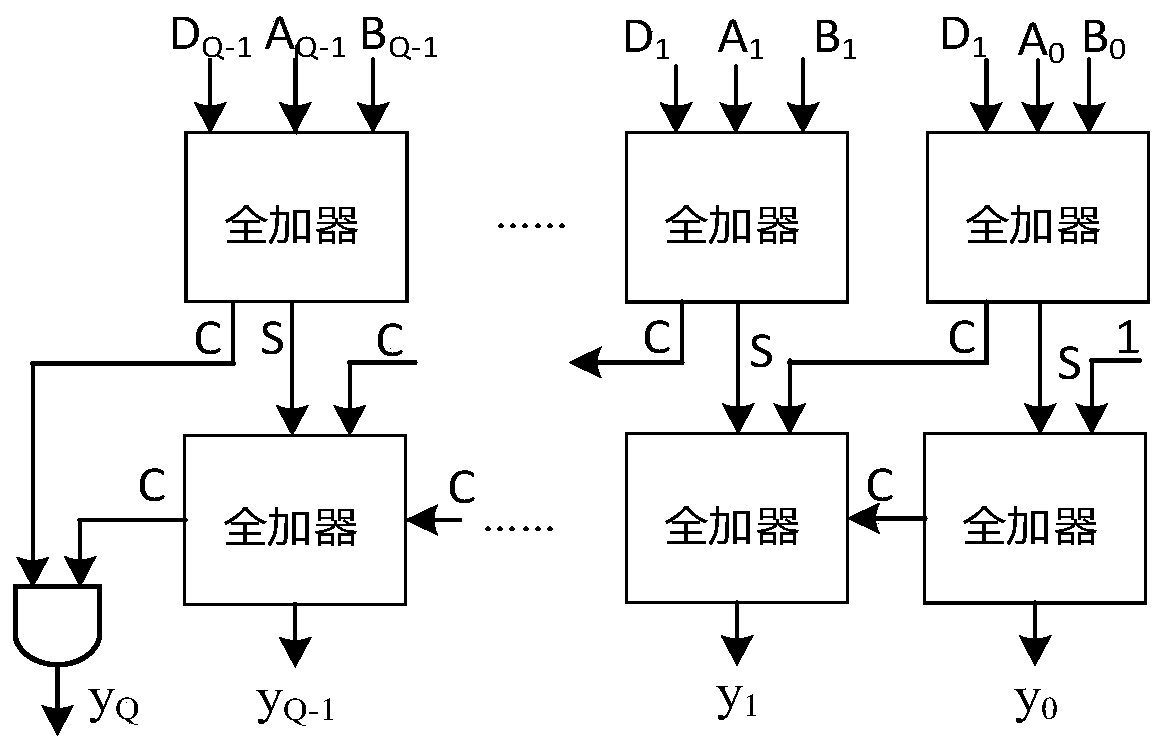

Large integer multiplication realizing method and device based on vector instructions

Active | CN104461449A | Reduce the number of instructions | Improve computing throughput | Digital data processing details | Xeon Phi | Two-vector

The invention provides a large integer multiplication realizing method and device based on vector instructions. The multiplicand and the multiplier of the large integer multiplication are each split into one or more vector-length integers, the integers are multiplied, and all products are summed. When two vector-length integers are multiplied, the product vectors generated by all the vector multiplication instructions form two addition carry chains in a specified order; vector add-with-carry instructions are used so that the carry generated by each vector addition serves as the input of the next vector addition instruction, all the addition carries inside the chains are eliminated, and only two addition carries are generated and added back to obtain the product of the two vector-length integers. Specifically, if the lengths of the multiplicand and the multiplier are smaller than 1/n of the vector length, n groups of integer multiplications are combined into a single multiplication of vector-length integers, and the computational throughput is increased n times. Based on the large integer multiplication method, the invention further discloses a high-speed large integer multiplication device based on an Intel Xeon Phi co-processor. The method reduces the number of instructions needed by large integer multiplication, reduces calculation delay, and improves computational throughput.

Owner:DATA ASSURANCE & COMM SECURITY CENT CHINESE ACADEMY OF SCI
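The split-multiply-sum structure described in the abstract above can be sketched in plain Python. This is only a scalar illustration under stated assumptions: the 32-bit limb width and the function names `to_limbs`, `from_limbs` and `multiply` are hypothetical, and the code does not use or emulate the Xeon Phi vector add-with-carry instructions or the two carry chains that the patent describes.

```python
LIMB_BITS = 32                 # assumed limb width, not taken from the patent
MASK = (1 << LIMB_BITS) - 1


def to_limbs(n):
    """Split a non-negative integer into little-endian limbs."""
    limbs = []
    while True:
        limbs.append(n & MASK)
        n >>= LIMB_BITS
        if n == 0:
            return limbs


def from_limbs(limbs):
    """Reassemble an integer from little-endian limbs (each below 2**LIMB_BITS)."""
    value = 0
    for i, limb in enumerate(limbs):
        value |= limb << (i * LIMB_BITS)
    return value


def multiply(a, b):
    """Schoolbook multiplication: sum partial products, then propagate carries."""
    x, y = to_limbs(a), to_limbs(b)
    columns = [0] * (len(x) + len(y))
    # Accumulate every limb product into its column without carrying yet.
    for i, xi in enumerate(x):
        for j, yj in enumerate(y):
            columns[i + j] += xi * yj
    # A single pass resolves all deferred carries.
    carry = 0
    for k in range(len(columns)):
        total = columns[k] + carry
        columns[k] = total & MASK
        carry = total >> LIMB_BITS
    return from_limbs(columns)
```

Deferring the carries to one final pass is the part that loosely mirrors the abstract's goal of keeping carry handling out of the inner multiply loop.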

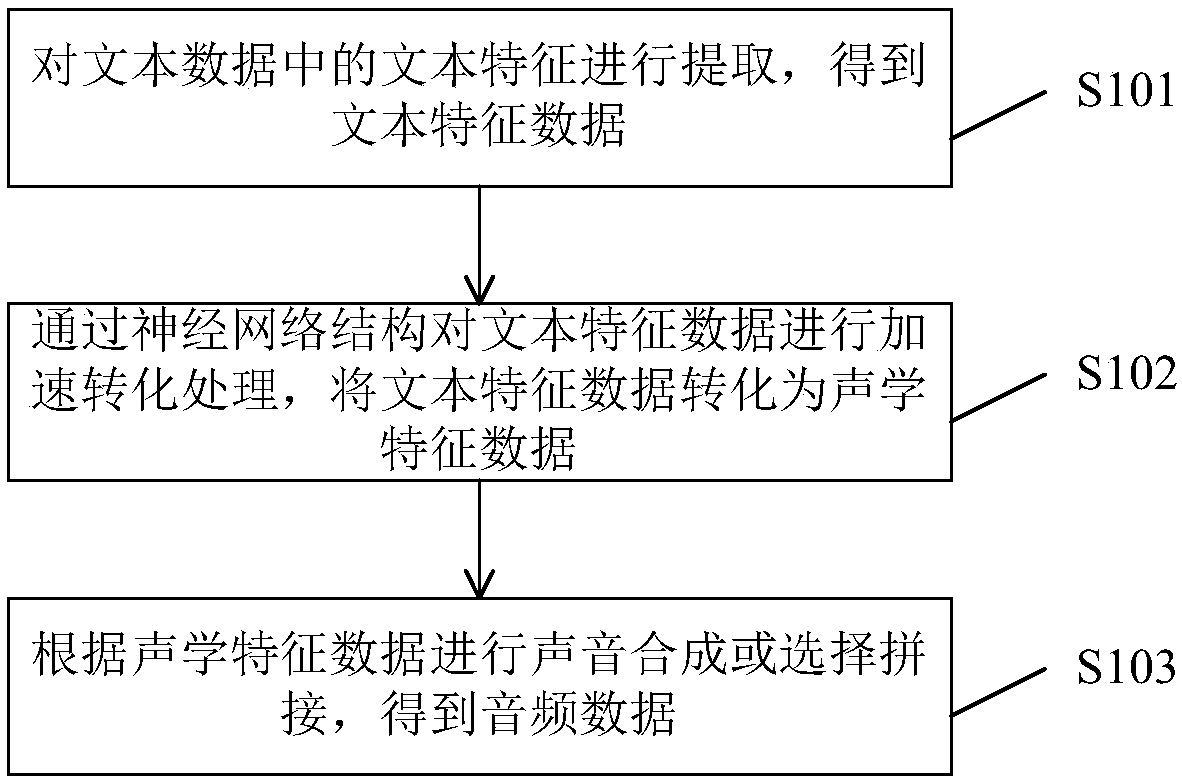

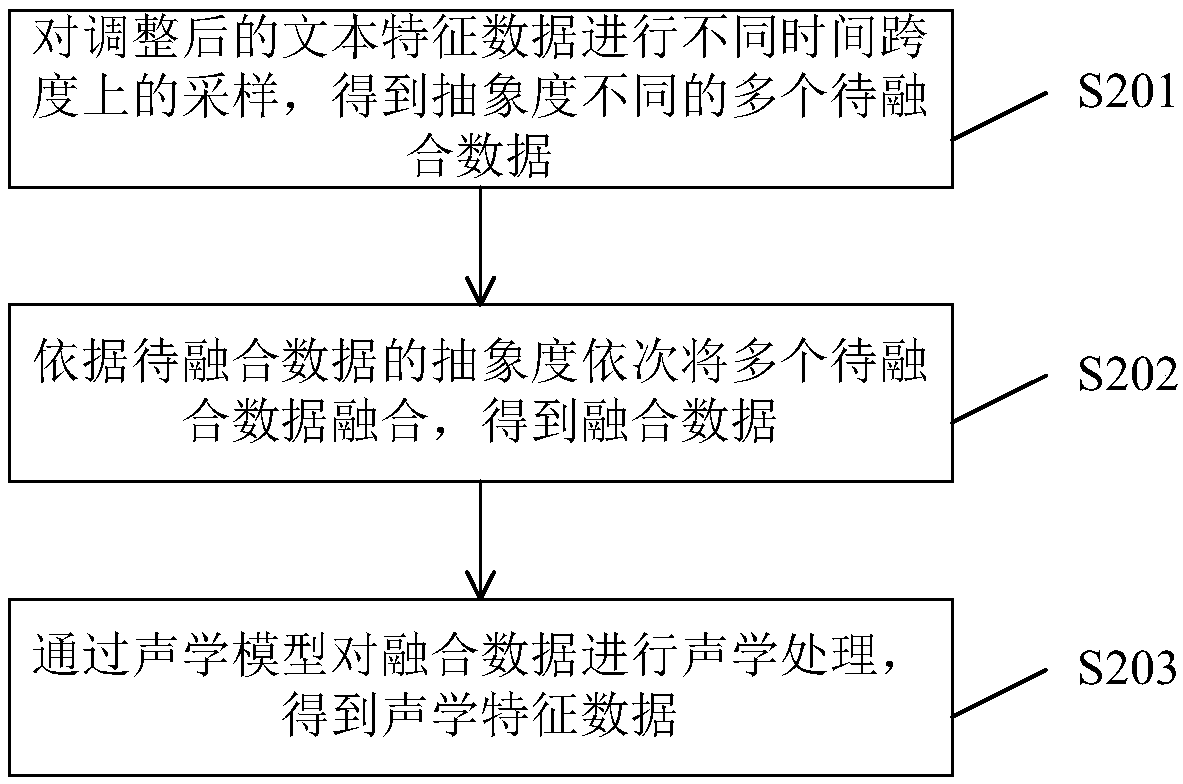

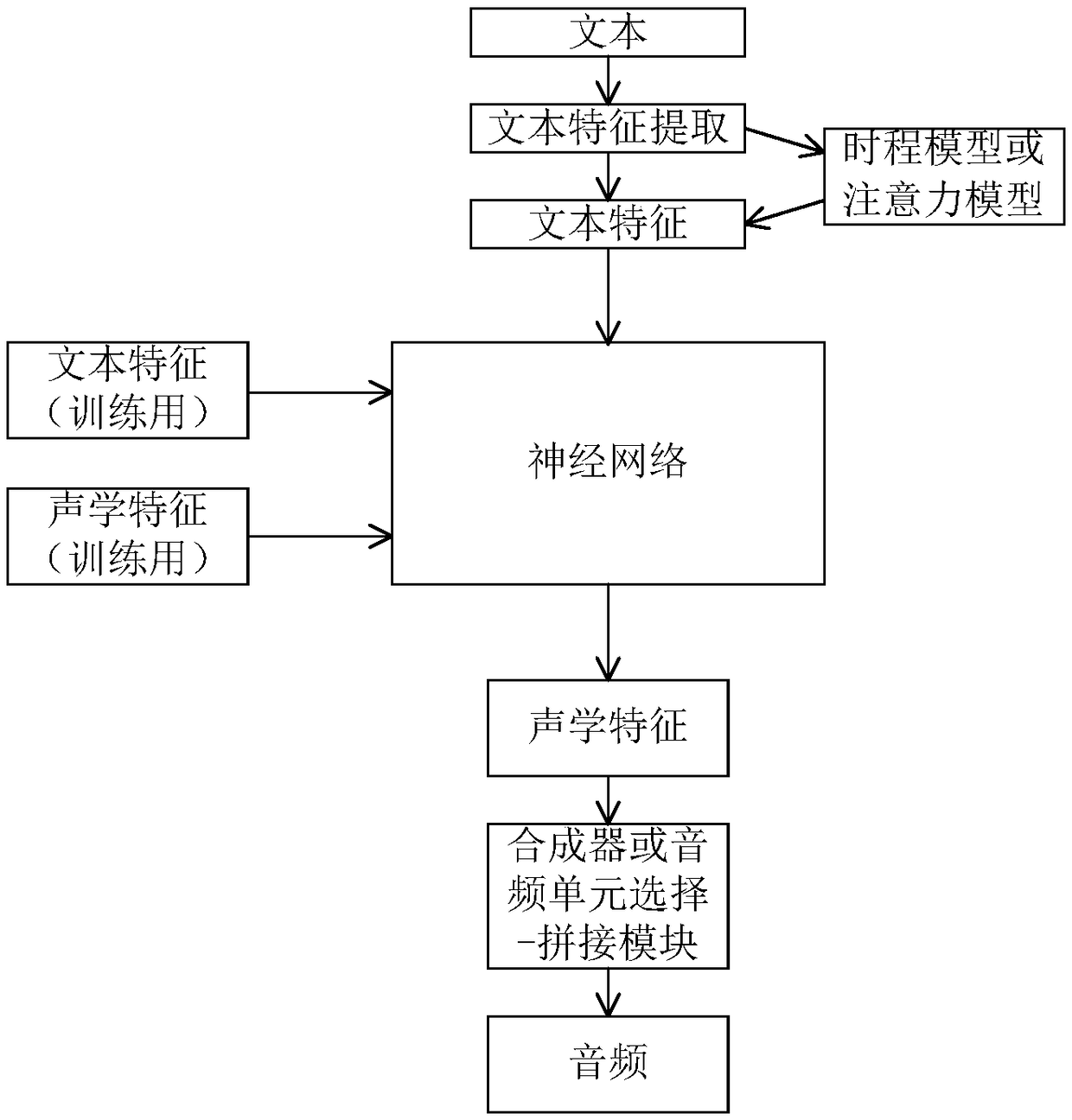

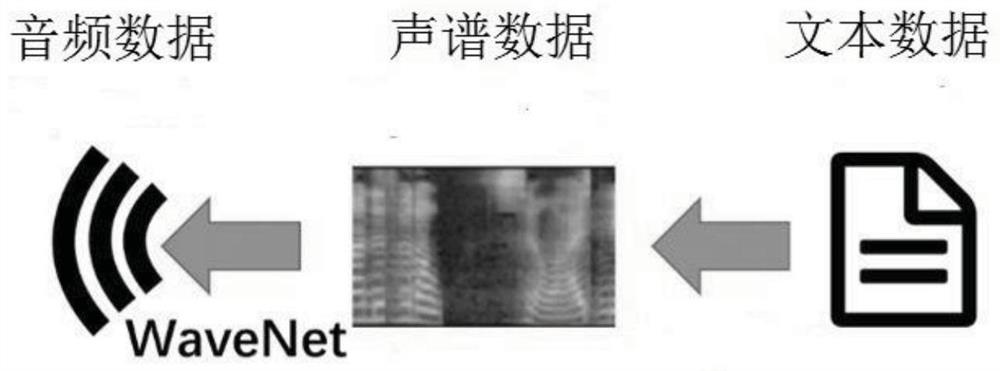

Audio data generating method and system for voice synthesis

Active | CN109036371A | Guaranteed prediction accuracy | Improve interactive experience | Speech synthesis | Network structure | Feature data

The invention provides an audio data generating method for voice synthesis. The audio data generating method comprises the following steps: extracting the text features in the text data to obtain text feature data; carrying out accelerated conversion processing on the text feature data through a neural network structure, converting the text feature data into acoustic feature data; and performing sound synthesis or selected splicing according to the acoustic feature data to obtain audio data. Because a special anti-convolution structure is adopted, a good voice synthesis effect can be achieved without any auto-regressive structure and with few parameters. The neural network structure reduces calculation delay while guaranteeing the prediction precision of the acoustic features; the requirement for computing resources is reduced, the concurrency is increased, the voice synthesis speed is increased, and the human-computer interaction experience is improved.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

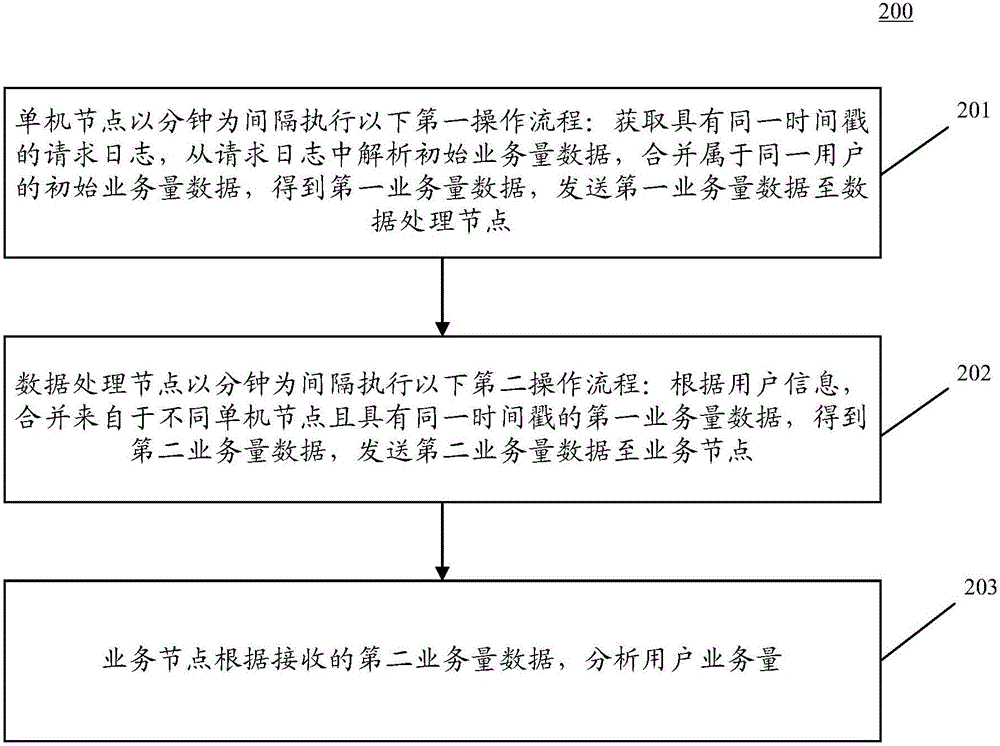

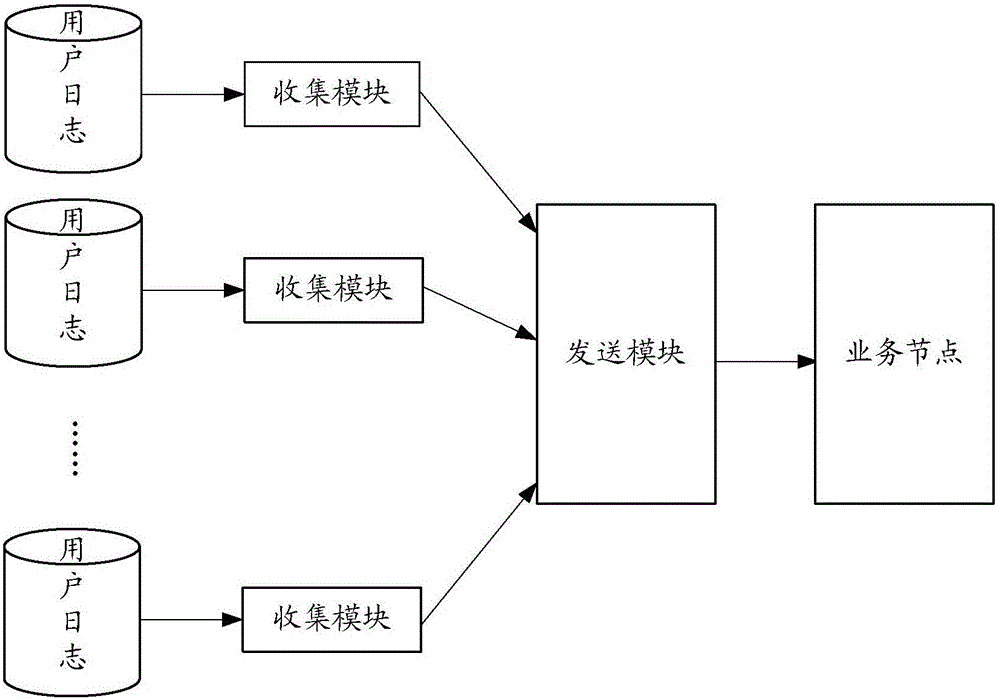

Method and system for analyzing user traffic

Active | CN105138691A | Reduce transmission | Reduce computing latency | Database distribution/replication | Website content management | Timestamp | Computer science

The invention discloses a method and system for analyzing user traffic. The method includes the steps that single nodes execute the following first operation process every other minute that request logs with the same timestamp are obtained, initial traffic data are analyzed from the request logs, the initial traffic data belonging to the same user are combined to obtain first traffic data, and the first traffic data are sent to a data processing node; the data processing node executes the following second operation process every other minute that according to user information, the first traffic data from different single nodes and with the same timestamp are combined, second traffic data are obtained, and the second traffic data are sent to a service node; the service node analyzes the user traffic according to the received second traffic data. The implementation mode reduces transmission of unnecessary repeated data, and computation delay is low.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
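The two-stage combination described in the abstract above (per-user merging on each single node, then merging across nodes on the data processing node) can be sketched as follows. The log record layout `(timestamp, user, bytes)` and the function names are assumptions made for illustration; the patent does not specify them here.

```python
from collections import Counter


def node_aggregate(request_logs, timestamp):
    """First stage, run on a single node: merge traffic per user for one timestamp.

    request_logs is assumed to be an iterable of (timestamp, user, nbytes) tuples.
    """
    per_user = Counter()
    for ts, user, nbytes in request_logs:
        if ts == timestamp:
            per_user[user] += nbytes
    return per_user


def merge_nodes(first_traffic):
    """Second stage, run on the data processing node: merge per-node results."""
    total = Counter()
    for node_result in first_traffic:
        total.update(node_result)  # Counter.update adds counts per key
    return total
```

Each node sends one record per user instead of one record per request, which is where the reduction in transmitted data comes from in this sketch.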

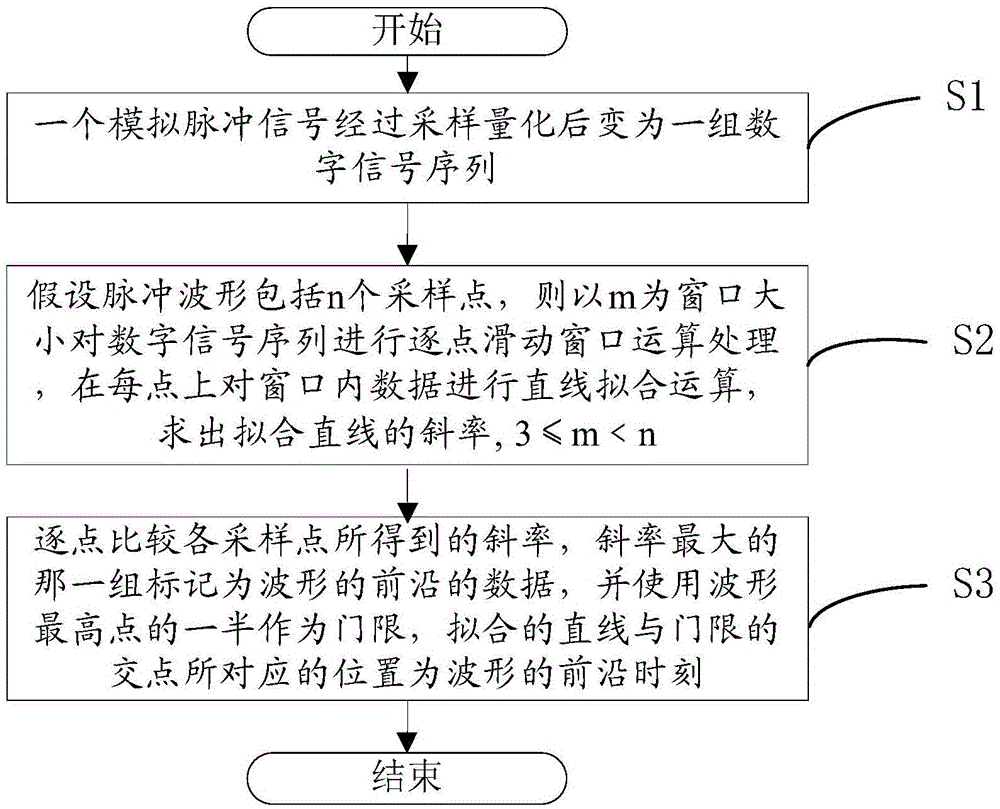

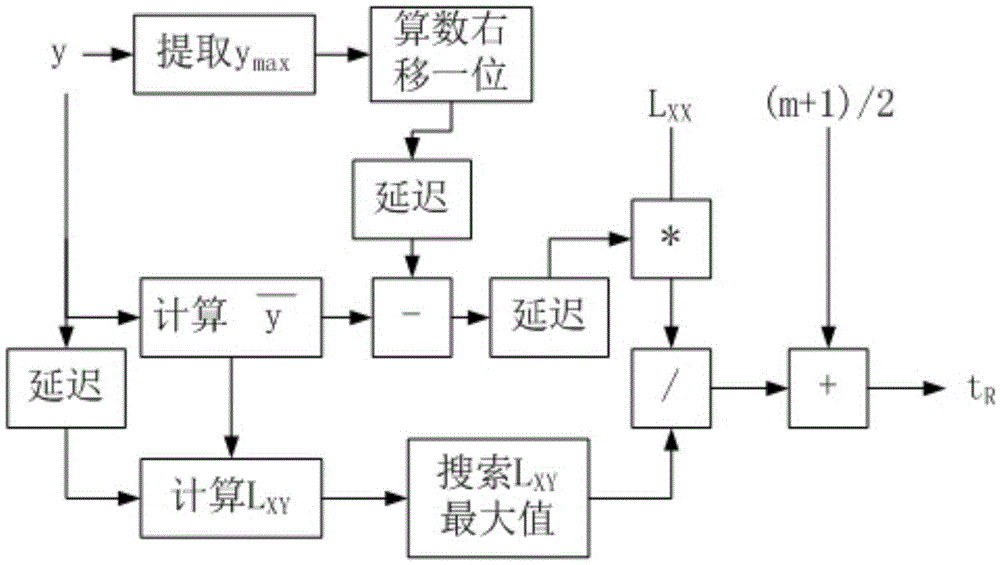

Method and system for detecting leading edge of pulse waveform based on straight line fitting

Active | CN105486934A | High precision | Reduce precision | Programme control | Computer control | Slide window | Pulse waveform

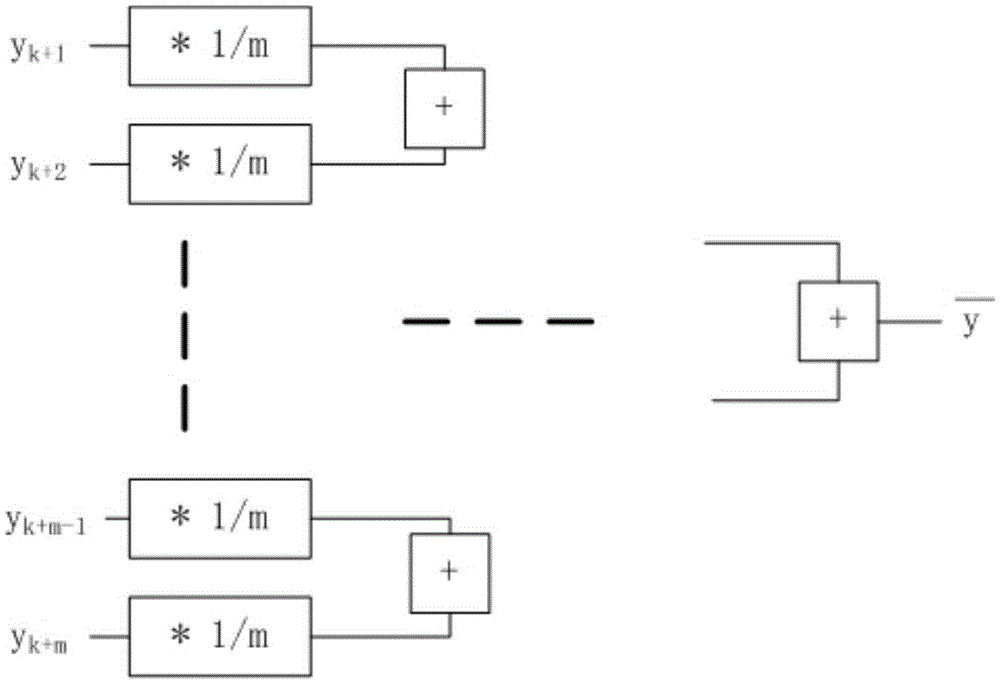

The invention relates to a method and a system for detecting a leading edge of a pulse shape based on straight line fitting, belonging to the waveform leading edge detection technology field. The method comprises steps of converting an analog pulse signal to a group of digital signal sequences after sampling quantization, supposing that the pulse waveform comprises n sampling points, using an m as a window size to perform point-by-point sliding window operation processing on the digital signal sequence, performing straight line fitting operation on the data inside the window on each point to solve an inclination rate of the fitted straight line, wherein 3<=m<n, comparing the inclination rates of the sampling points point by point, marking the group having the maximum inclination rate as the data of the waveform leading edge, and using the half of the highest point of the waveform as a threshold, wherein the position corresponding to the intersection point between fitted straight line and the threshold is the leading edge moment of the waveform. The invention is high in accuracy, small in calculation amount, fast in calculation speed and easy to implement.

Owner:WATCHDATA SYST
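The sliding-window procedure described in the abstract above can be sketched in plain Python. This is a minimal illustration, assuming sample indices serve as the time axis and ordinary least squares is the fitting method; the function names `fit_line` and `leading_edge` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def leading_edge(samples, m):
    """Return the (fractional) sample index of the pulse leading edge.

    samples: digitized pulse with n points; m: window size with 3 <= m < n.
    """
    n = len(samples)
    if not 3 <= m < n:
        raise ValueError("window size must satisfy 3 <= m < n")
    best = None
    # Slide the window point by point and keep the steepest fitted line.
    for start in range(n - m + 1):
        xs = list(range(start, start + m))
        slope, intercept = fit_line(xs, samples[start:start + m])
        if best is None or slope > best[0]:
            best = (slope, intercept)
    slope, intercept = best
    if slope <= 0:
        raise ValueError("no rising edge found")
    threshold = max(samples) / 2  # half of the waveform's highest point
    # Intersection of the steepest fitted line with the threshold.
    return (threshold - intercept) / slope
```

For example, `leading_edge([0, 0, 0, 2, 4, 6, 8, 10, 10, 10], 3)` fits the line `y = 2x - 4` on the rising segment and intersects it with the threshold 5.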

Target identification method and device

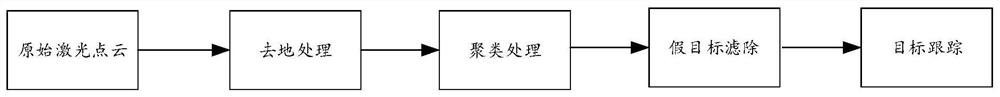

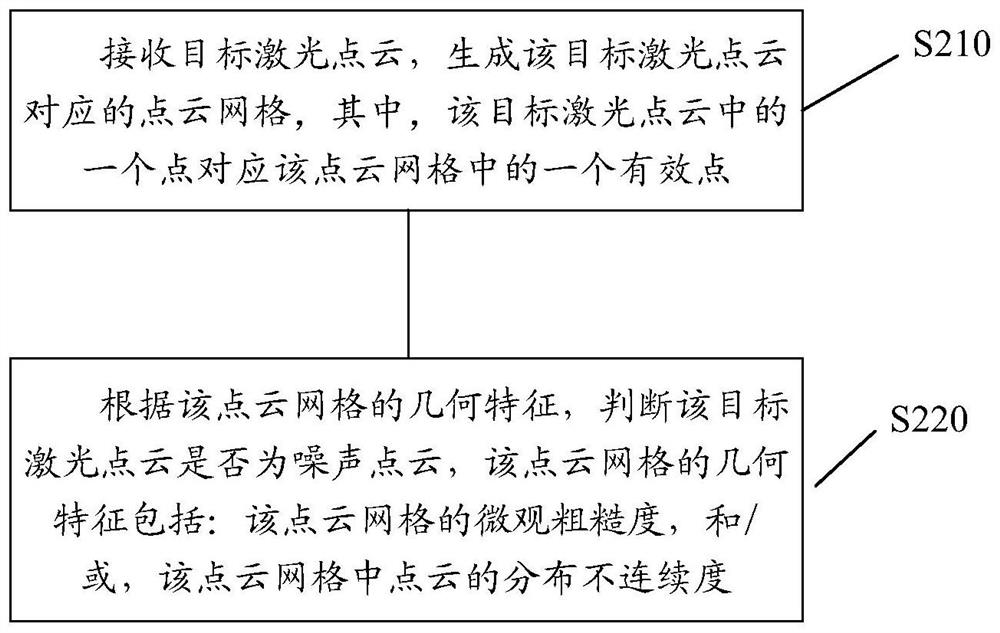

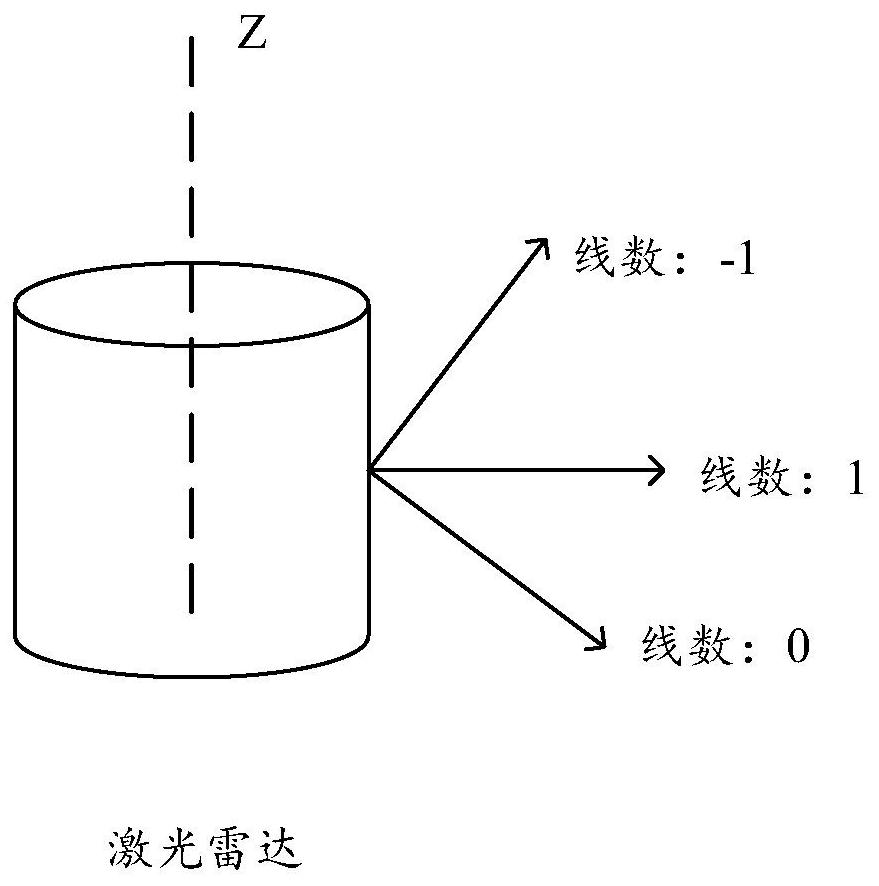

Active | CN112513679A | Reduce false detection rate | Improve the detection rate | Scene recognition | Acquisition of 3D object measurements | Point cloud | Simulation

The invention discloses a target identification method and device, which can be applied to the field of automatic driving or intelligent driving. The method comprises: receiving a target laser point cloud and generating a point cloud grid corresponding to the target laser point cloud, one point in the target laser point cloud corresponding to an effective point in the point cloud grid (S210); and, according to the geometrical characteristics of the point cloud grid, determining whether the target laser point cloud is a noise point cloud, the geometrical characteristics of the point cloud grid including the microscopic roughness of the point cloud grid and/or the distribution discontinuity of the point cloud in the point cloud grid (S220). The target identification method provided by the invention can determine whether the laser point cloud is a false target caused by water spray splashed from accumulated water on the road, flying road dust, automobile exhaust and the like; it effectively reduces the false detection rate, improves the detection rate of false targets, and is simple and convenient to implement.

Owner:HUAWEI TECH CO LTD

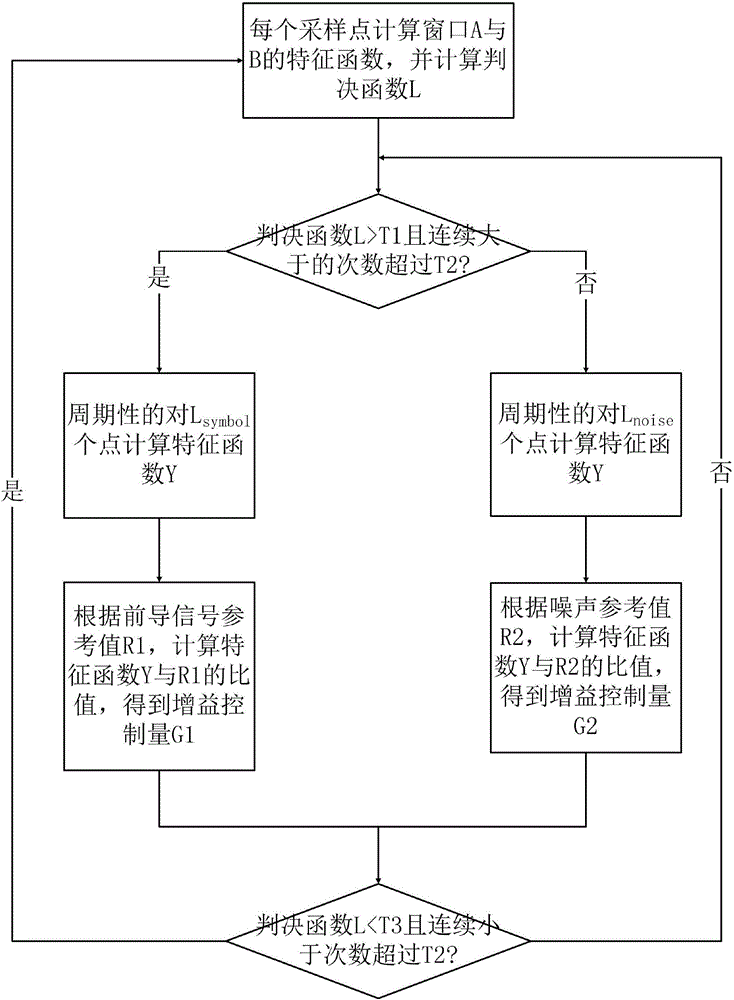

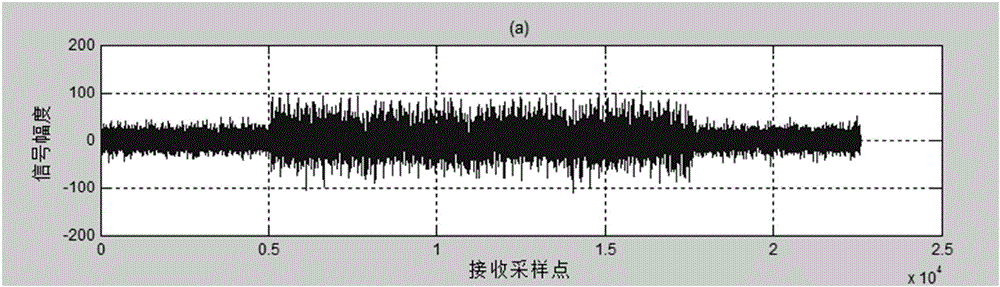

Power line carrier communication automatic gain control method

Inactive | CN106411363A | Anti-interference | Avoid interference | Power distribution line transmission | Computation complexity | Detection threshold

The invention discloses a power line carrier communication automatic gain control method, which comprises the following steps: calculating a characteristic function and a decision function; according to relation between the decision function and a detection threshold value and a pulse interference threshold value, calculating gain control amount; and during the process of calculating the gain control amount, according to the relation between the decision function and a preset threshold value and the pulse interference threshold value, stopping calculating the gain control amount and restarting the calculating process. According to the method, the received data can be used directly to carry out gain control judgment; the characteristic function is calculated through an iteration mode; difference between the current signal and the delay signal is calculated every time, and is subjected to add operation with the result obtained in the last calculation, thereby reducing calculation delay and complexity under the condition of ensuring gain calculation precision; and meanwhile, the pulse interference threshold value is set for resisting interference of noise to gain control. Therefore, the method is independent of frame synchronization or symbol synchronization information, can effectively prevent the interference of the noise on the gain control and simplifies gain control value computation complexity.

Owner:WILLFAR INFORMATION TECH CO LTD

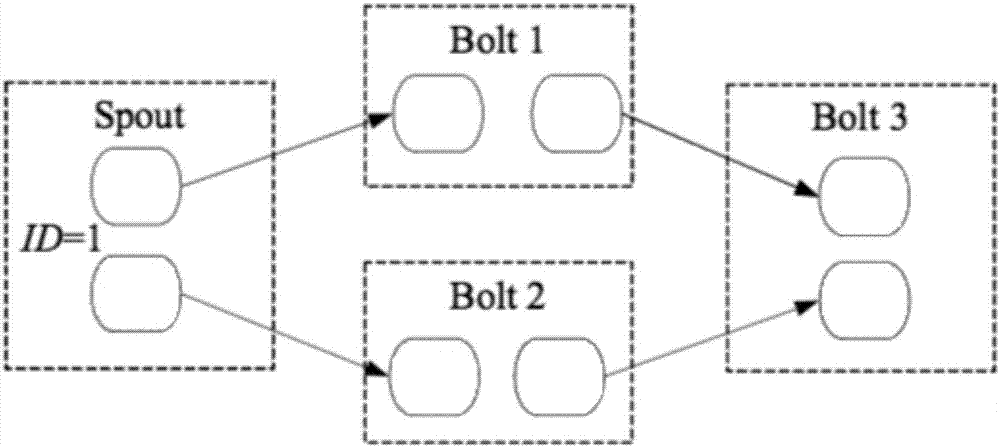

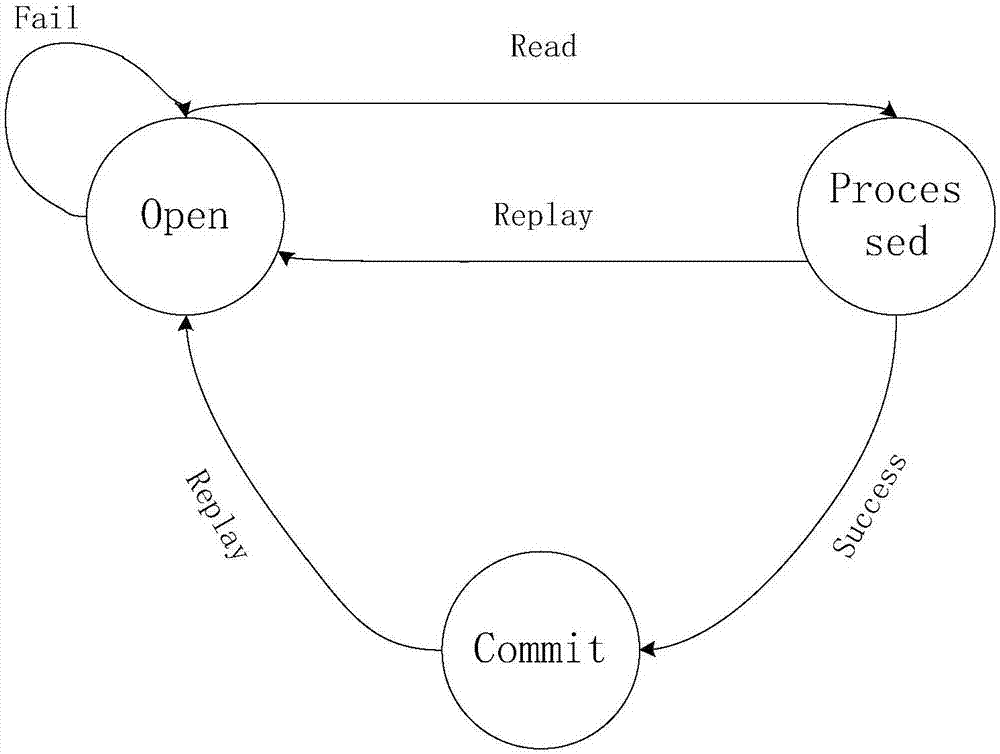

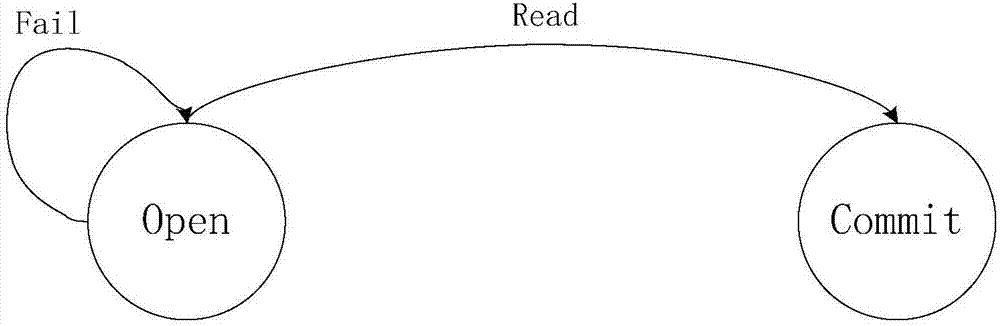

Parallel data reflow method under stream computing environment

Active | CN107153526A | Fault-tolerant | Reduce data calculation delay | Database distribution/replication | Concurrent instruction execution | Piping | Computer security

The invention provides a parallel data reflow method oriented to real-time stream computing. The method comprises: step 1, initializing three queues; step 2, initializing a pipe Data Queue; step 3, the Spout of the Topology initiates read requests to the Data Queue; step 4, the Data Queue reads the data in the three queues; step 5, determining whether the queue pointed to by ToP is empty, and proceeding to step 6 if it is empty or to step 7 if it is not; step 6, copying the data in the From queue to the To queue and clearing the From queue; step 7, the Topology obtains the data in the Data Queue, and the current Task sends a Tuple downstream; step 8, the current Task waits for the feedback of the Tuple, and if the sending fails or times out and no feedback is received, the Tuple is made to reflow; step 9, determining whether the Topology can be stopped, returning to step 4 if it cannot and ending otherwise. With this method the data is stateless and fault-tolerant, data computation latency is reduced, system responsiveness is improved, and reflowed data is processed with priority at the first possible chance.

Owner:ZHEJIANG UNIV OF TECH

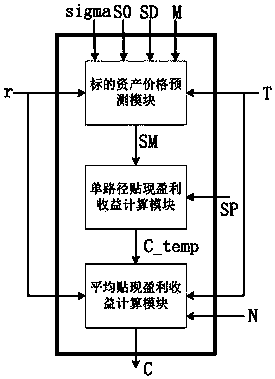

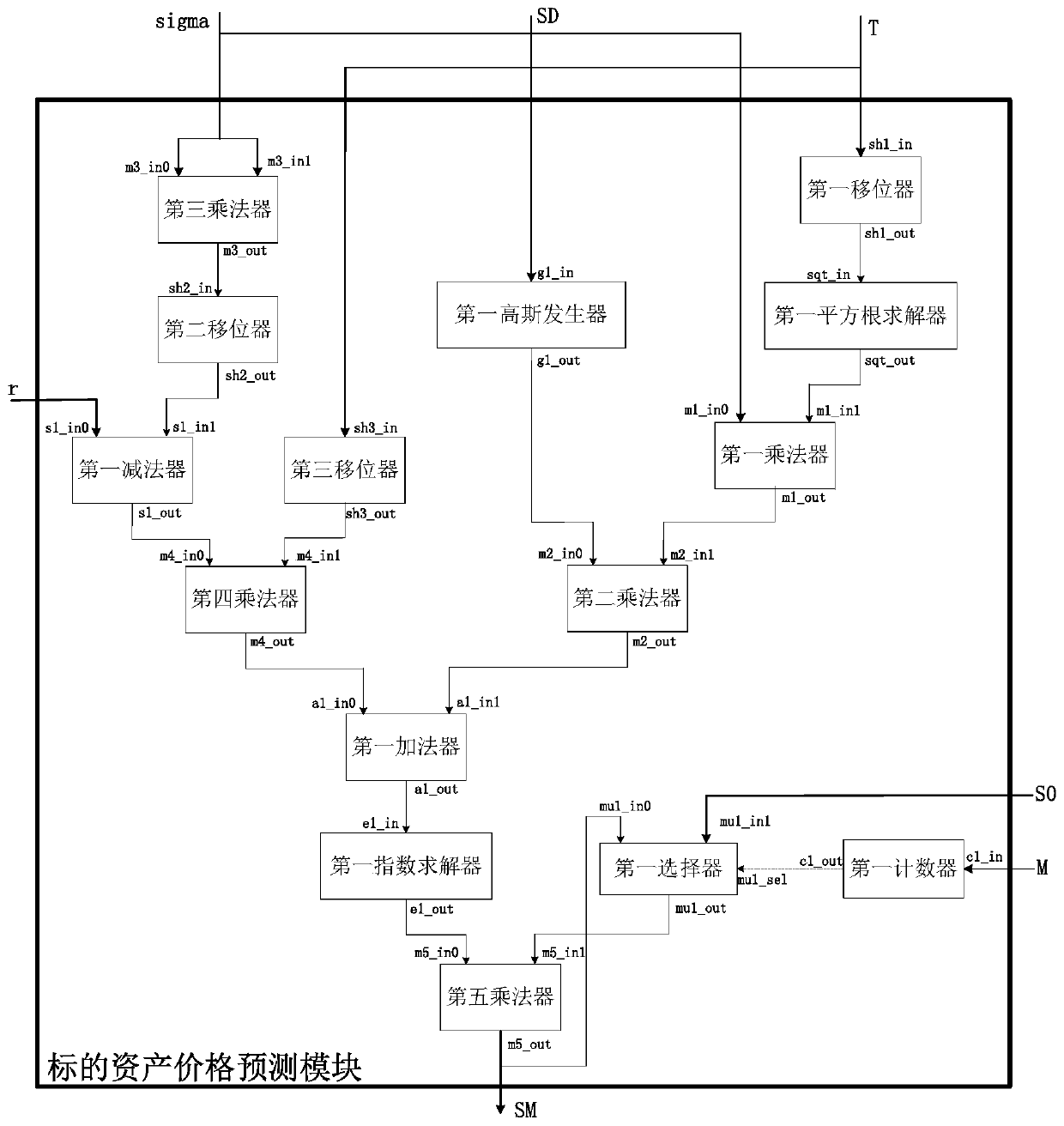

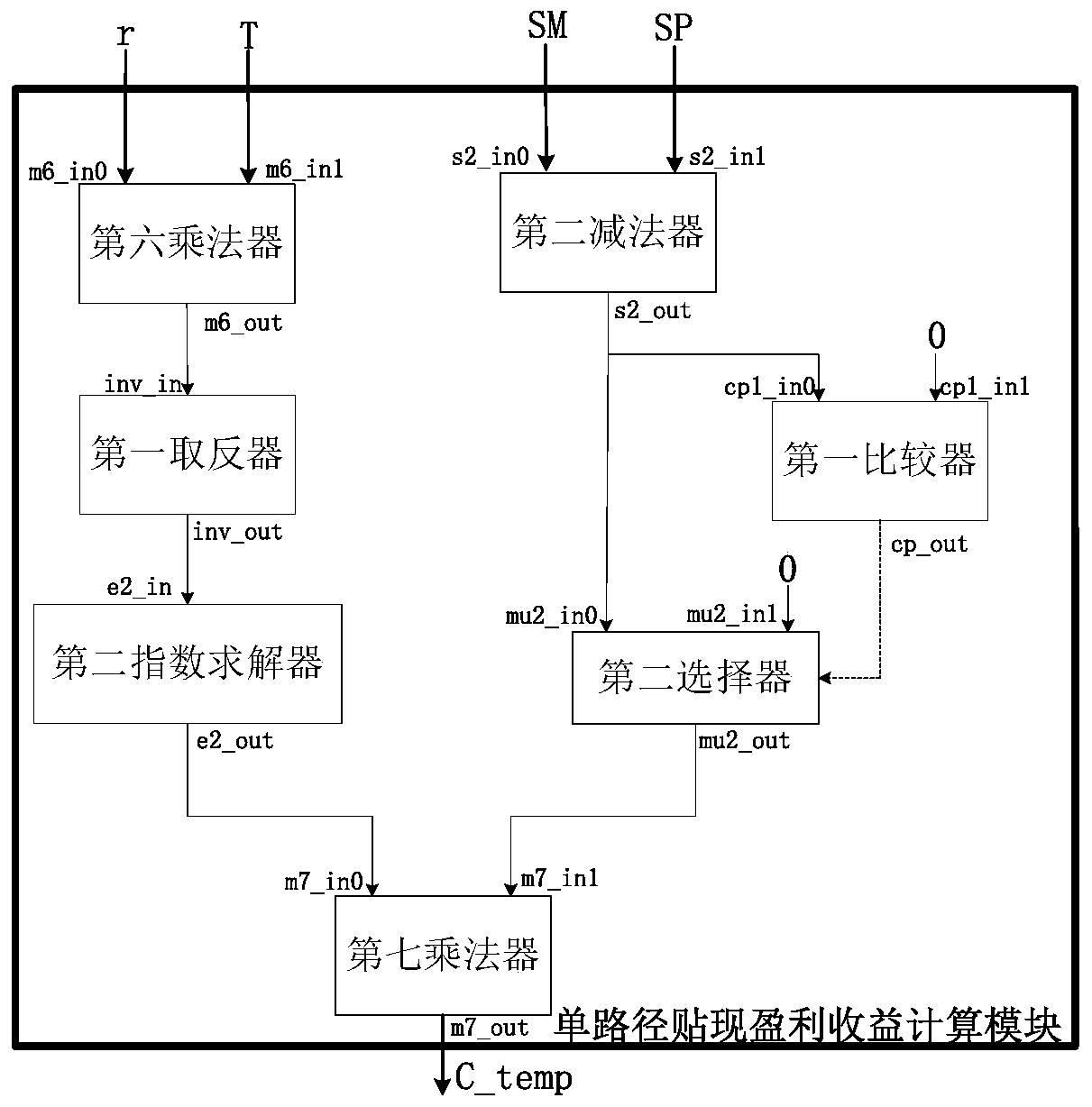

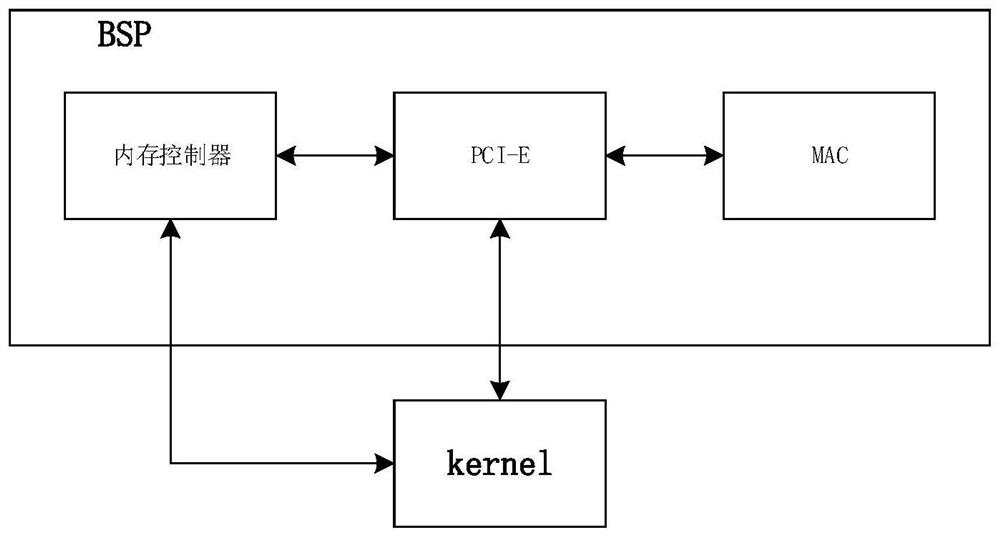

An option pricing hardware accelerator

Inactive | CN109741185A | Improve computing power | Reduce computing latency | Computations using contact-making devices | Finance | Resource consumption | Simulation

Aiming at the technical problem that a traditional option pricing method is implemented in software and its calculation performance is difficult to ensure, the invention provides an option pricing hardware accelerator, which consists of a target asset price prediction module, a single-path profit income calculation module and an average profit income calculation module. The target asset price prediction module is responsible for completing one Monte Carlo simulation of the target asset price to obtain a target asset price prediction value. The single-path profit income calculation module is responsible for calculating the profit income prediction value of that Monte Carlo simulation according to the target asset price prediction value. The average profit income calculation module is responsible for accumulating the profit incomes generated by each Monte Carlo simulation and then calculating their average value, which is the option profit income estimation value. Compared with a software implementation, the accelerator has the advantages of high performance, low hardware resource consumption and easy parallelization.

Owner:NAT UNIV OF DEFENSE TECH
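The three modules named in the abstract above map naturally onto three small functions. The sketch below is a software illustration only, not the hardware design: the geometric Brownian motion price model, the European call payoff, and every parameter name are assumptions, since the abstract does not state which model or option type the accelerator uses. Discounting is left out because the abstract only mentions averaging.

```python
import math
import random


def predict_price(s0, mu, sigma, t, rng):
    """Price prediction module: one simulated terminal price (assumed GBM model)."""
    z = rng.gauss(0.0, 1.0)
    return s0 * math.exp((mu - 0.5 * sigma ** 2) * t + sigma * math.sqrt(t) * z)


def path_payoff(price, strike):
    """Single-path module: profit of one path (assumed European call payoff)."""
    return max(price - strike, 0.0)


def average_payoff(s0, strike, mu, sigma, t, n_paths, seed=0):
    """Averaging module: accumulate per-path profits and return their mean."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        total += path_payoff(predict_price(s0, mu, sigma, t, rng), strike)
    return total / n_paths
```

Because each path is independent of the others, the loop body is the unit that could be replicated for parallel execution, which is consistent with the abstract's claim of easy parallelization.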

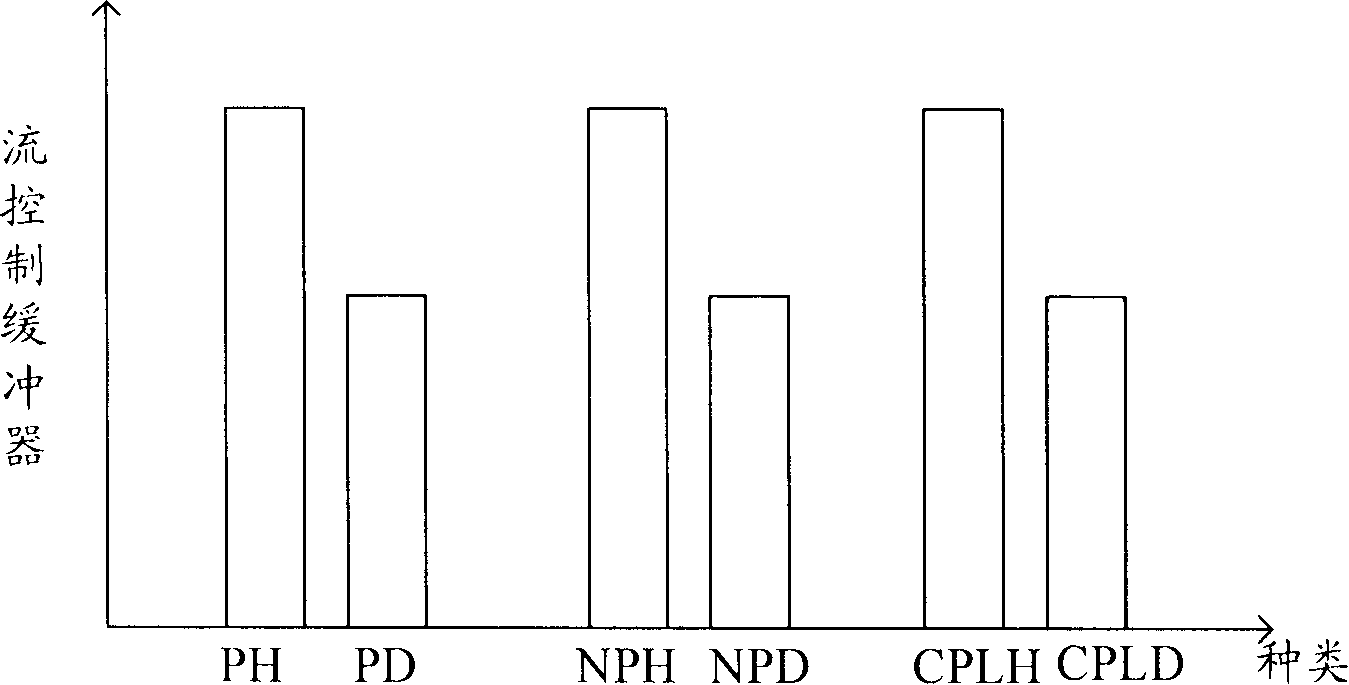

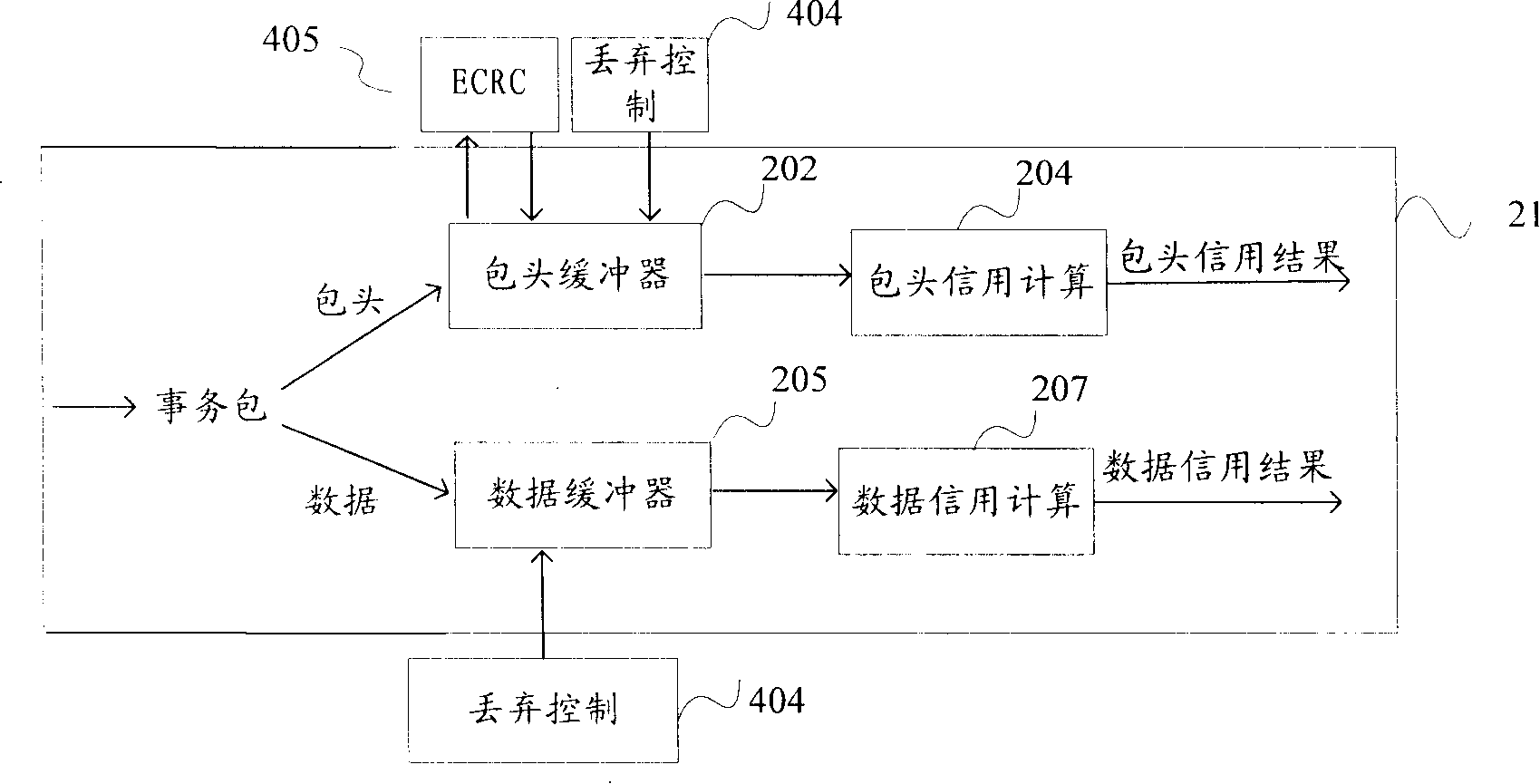

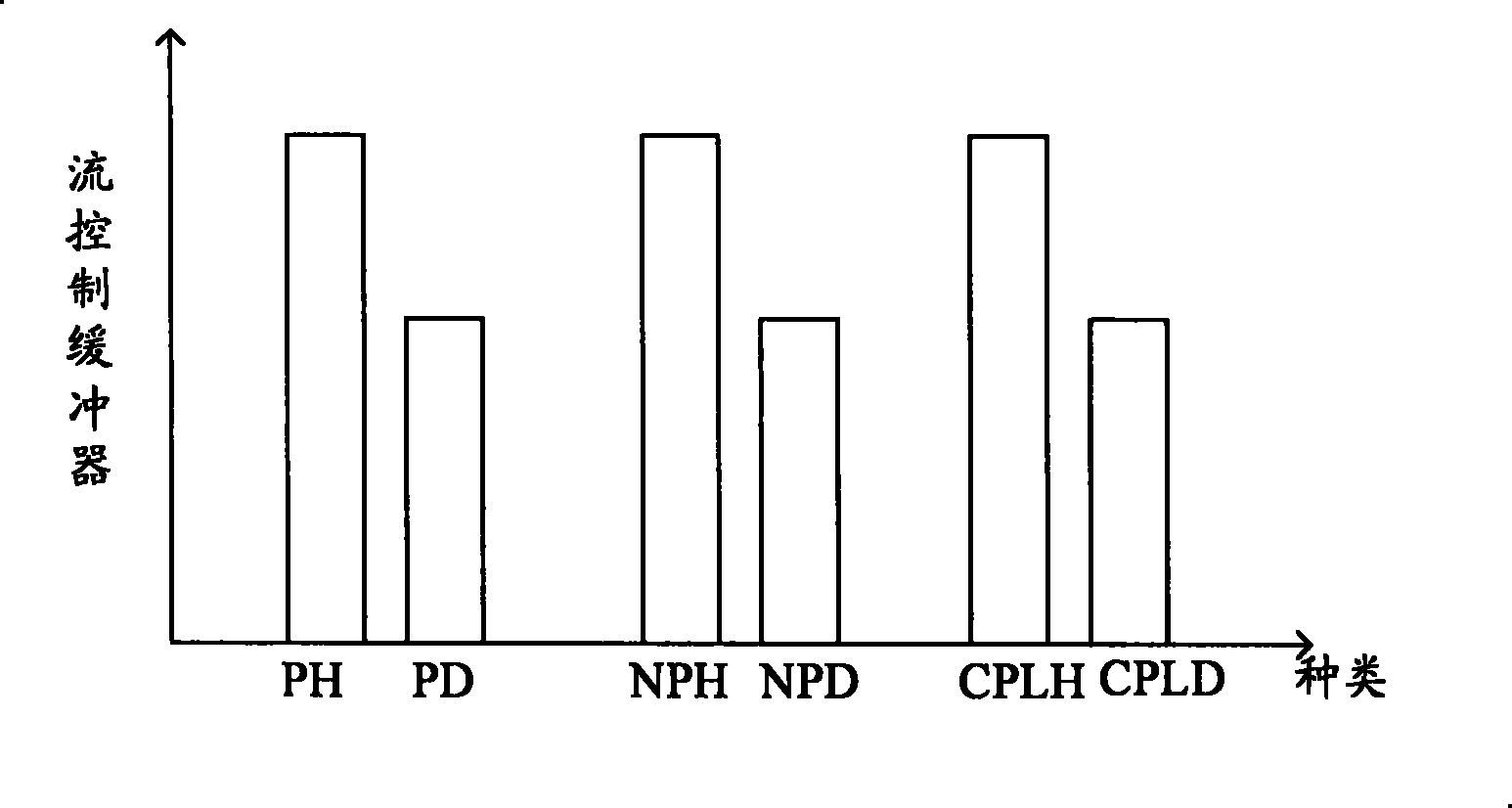

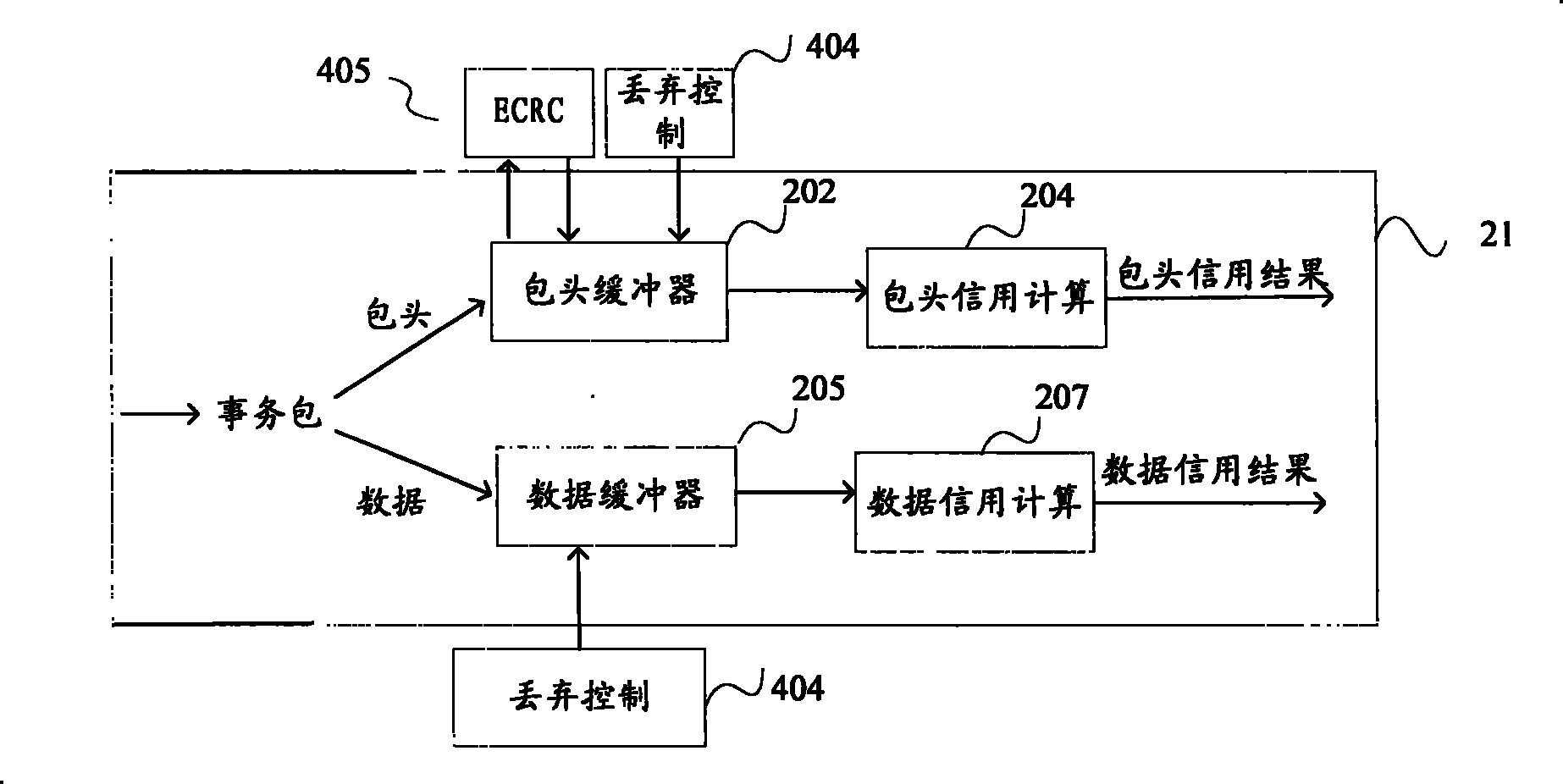

Credit processing equipment and flow control transmission apparatus and method thereof

Active | CN101184022A | Calculation speed | Reduce computing latency | Energy efficient ICT | Data switching networks | Ring counter | Transport system

The invention discloses a stream packet header credit processing device, comprising a packet header buffer unit and a packet header credit calculation unit, which is characterized in that: the packet header credit is calculated directly according to the amount of the packet header credit received, recorded and transmitted by a writing pointer and a reading pointer, so that delay-time is reduced; meanwhile, a credit processing device is provided in the invention and the credit processing device comprises the packet header credit processing device and a data credit calculation unit; wherein, in the data calculation circuit a ring counter control unit and a controlling table unit are used to choose different types of data; the data credit is calculated via the shared circuit, so that circuit area is saved and calculation speed is raised; the invention also provides a sending device, a receiving device, a transmission system and a processing and transmitting method using the receiving and sending devices and transmission system, so that credit processing is optimized; efficiency is increased and power consumption is reduced with the stream control device and stream control method in the invention.

Owner:SEMICON MFG INT (SHANGHAI) CORP
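One common way to derive a credit count directly from a write pointer and a read pointer is shown below. This is an interpretation offered as a sketch, not the patented circuit: the class name `HeaderCreditBuffer`, the buffer depth, and the use of pointers that count modulo twice the depth (so that full and empty states stay distinguishable) are all assumptions.

```python
class HeaderCreditBuffer:
    """Software model of a packet header buffer tracked by two pointers."""

    def __init__(self, depth):
        self.depth = depth
        self.write_ptr = 0  # advanced when a header is received
        self.read_ptr = 0   # advanced when a header is consumed

    def occupied(self):
        # Pointers wrap modulo 2 * depth, so the difference is unambiguous.
        return (self.write_ptr - self.read_ptr) % (2 * self.depth)

    def credits(self):
        # Free slots are computed directly from the pointers, with no counter.
        return self.depth - self.occupied()

    def receive(self):
        if self.credits() == 0:
            raise OverflowError("no header credit available")
        self.write_ptr = (self.write_ptr + 1) % (2 * self.depth)

    def release(self):
        if self.occupied() == 0:
            raise IndexError("header buffer is empty")
        self.read_ptr = (self.read_ptr + 1) % (2 * self.depth)
```

In this model the credit value is a pure function of the two pointers, so no separate increment or decrement step sits on the critical path.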

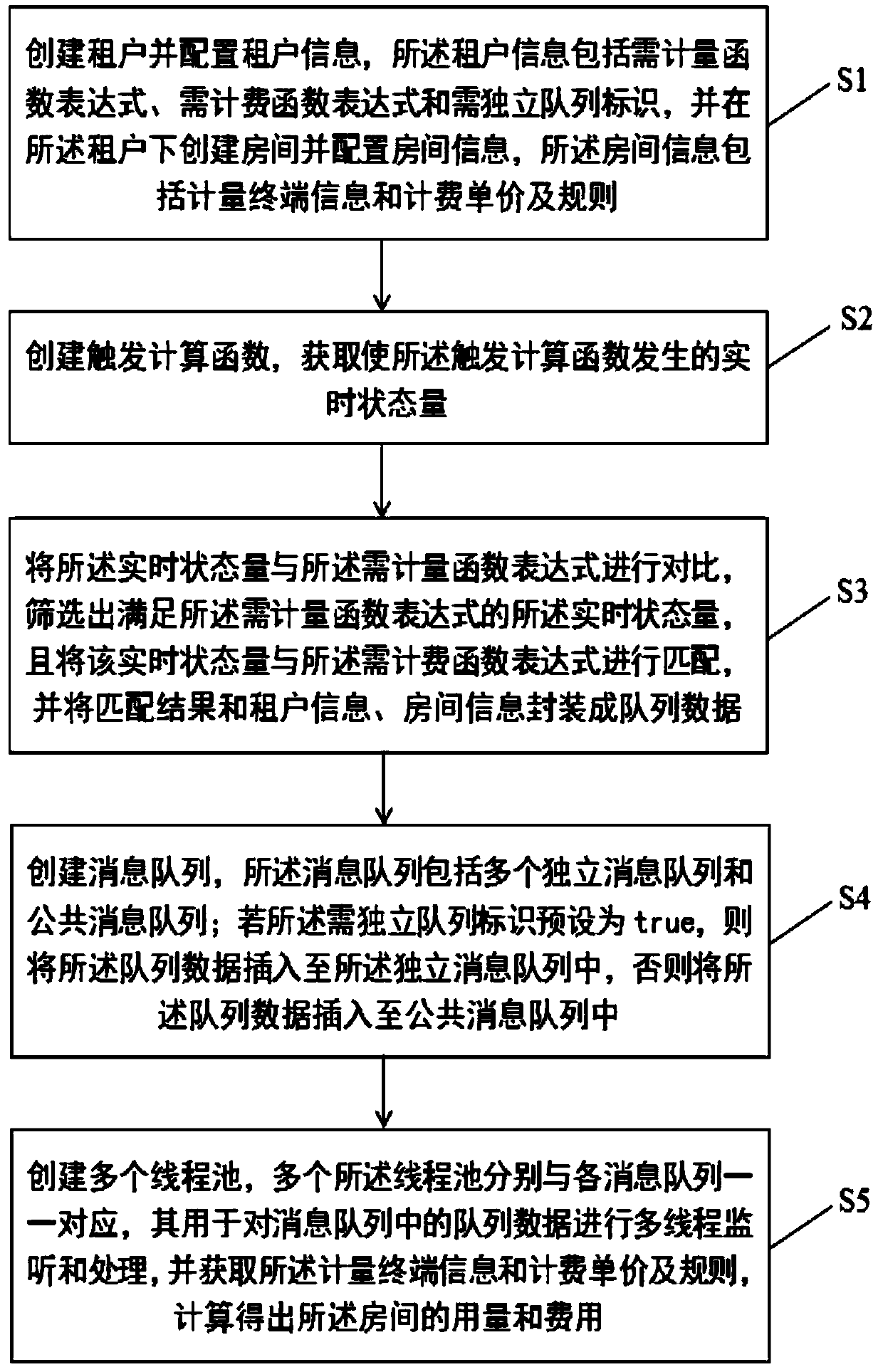

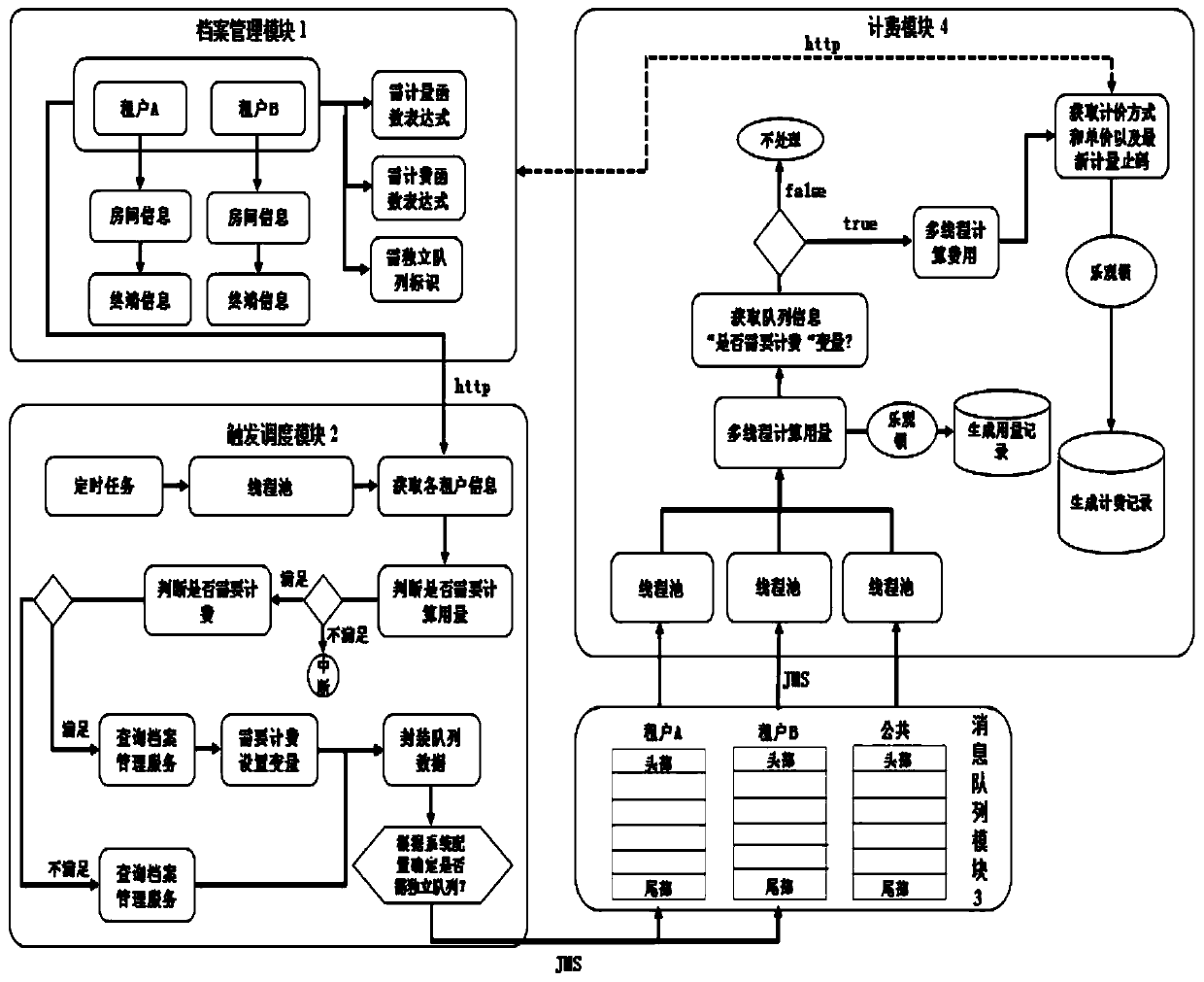

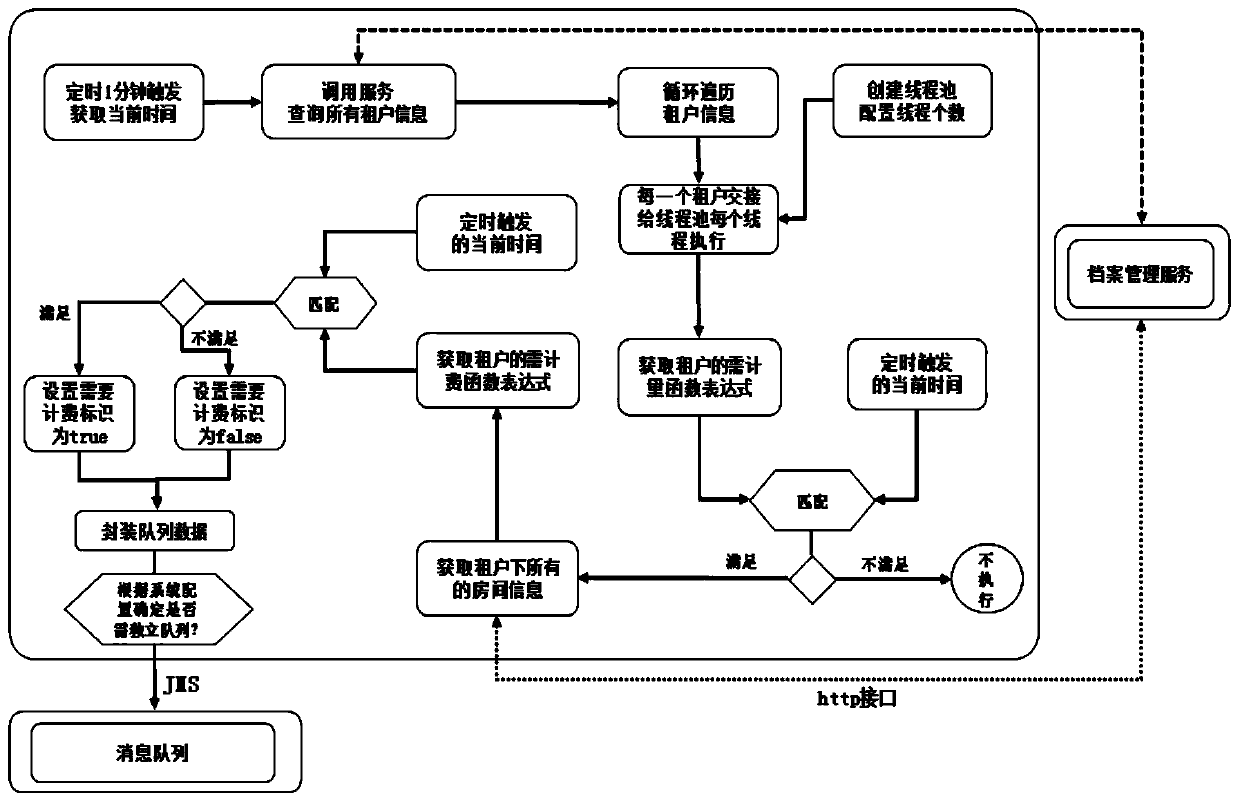

Distributed metering and charging method and system

Pending | CN111488224A | Easy maintenance | Improve computing efficiency | Interprogram communication | Buying/selling/leasing transactions | Message queue | Engineering

The invention relates to a distributed metering and charging method and system in the technical field of multi-tenant, multi-terminal metering and charging. Tenant and room information that needs metering and charging is encapsulated into queue data, and a plurality of independent message queues and public message queues are created to receive the queue data. Each independent message queue receives queue data that requires a dedicated thread for monitoring; each public message queue receives queue data that does not. A thread pool is created for each independent message queue and each public message queue to achieve multi-threaded monitoring and processing of the queue data. Finally, the usage amount and cost are calculated in a distributed manner with the room as the main body. As a result, calculation efficiency and timeliness are improved, calculation delay is reduced, later code maintenance is facilitated, and the metering and charging service can be extracted independently and applied to different metering systems, giving it very high universality.

Owner:武汉时波网络技术有限公司

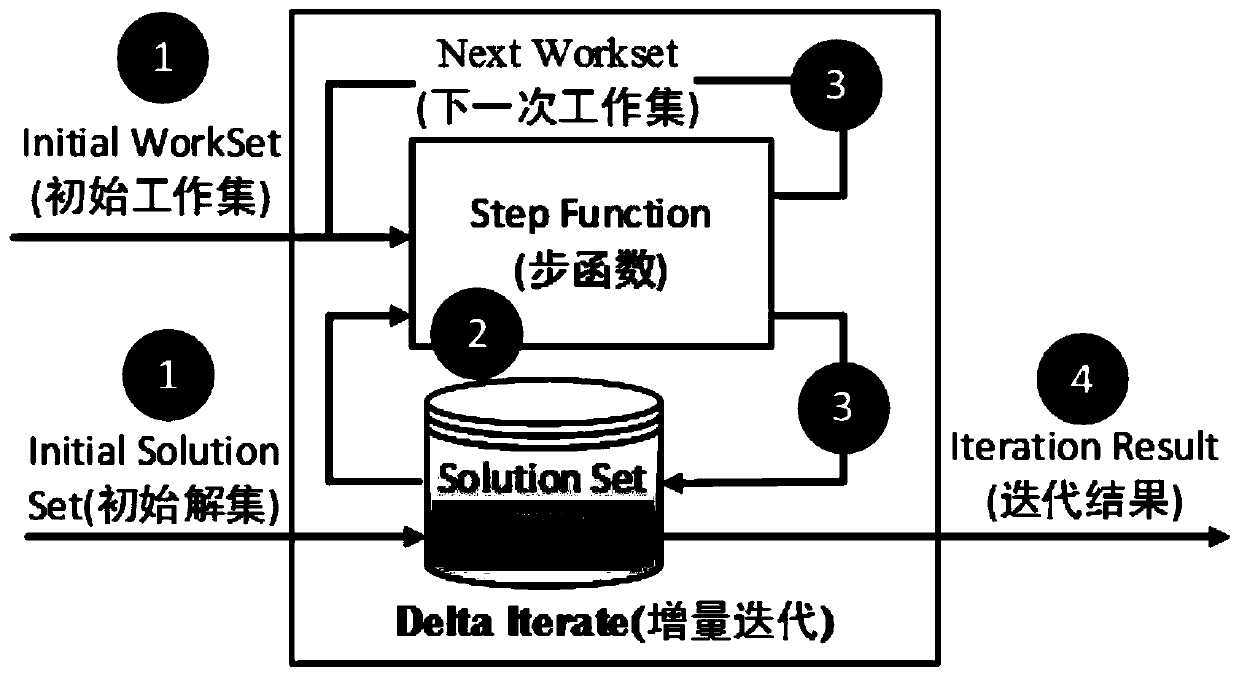

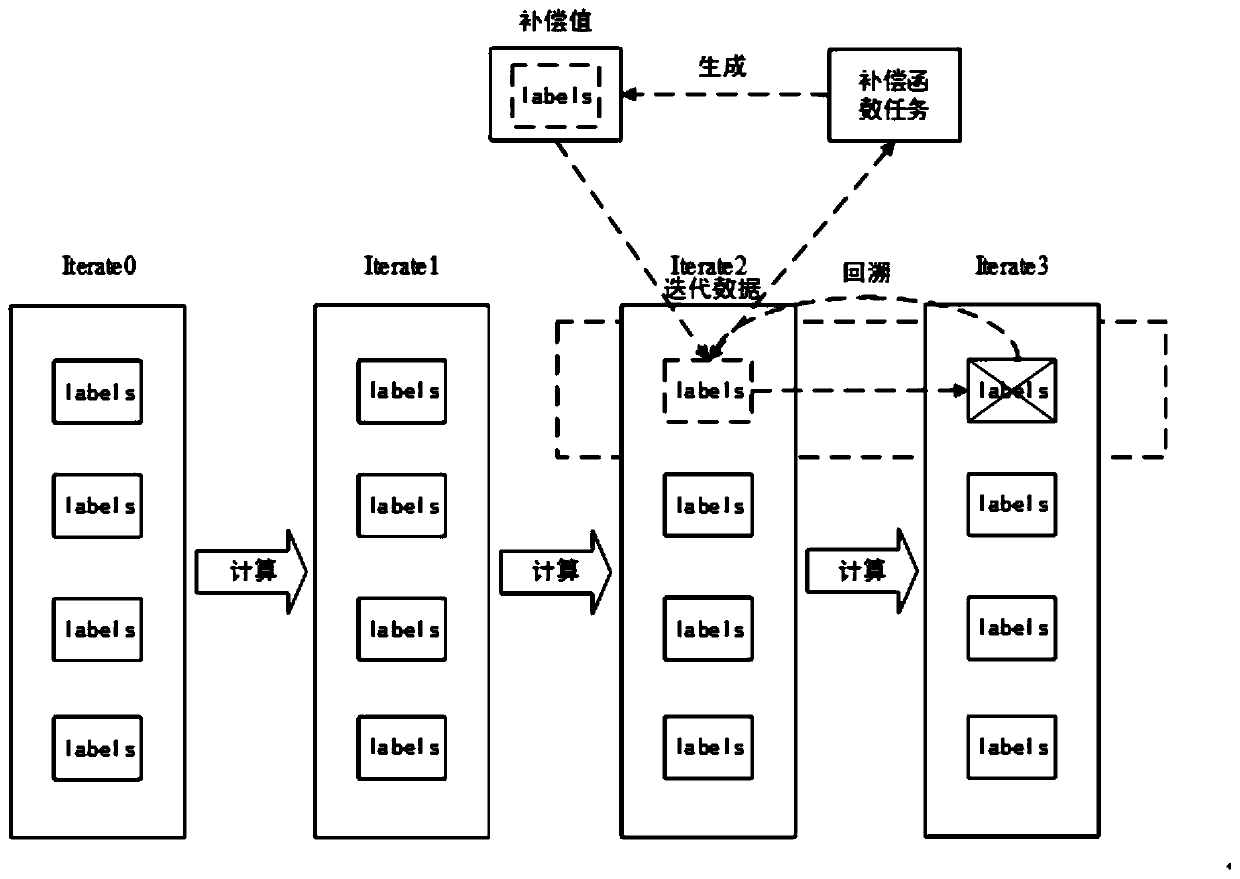

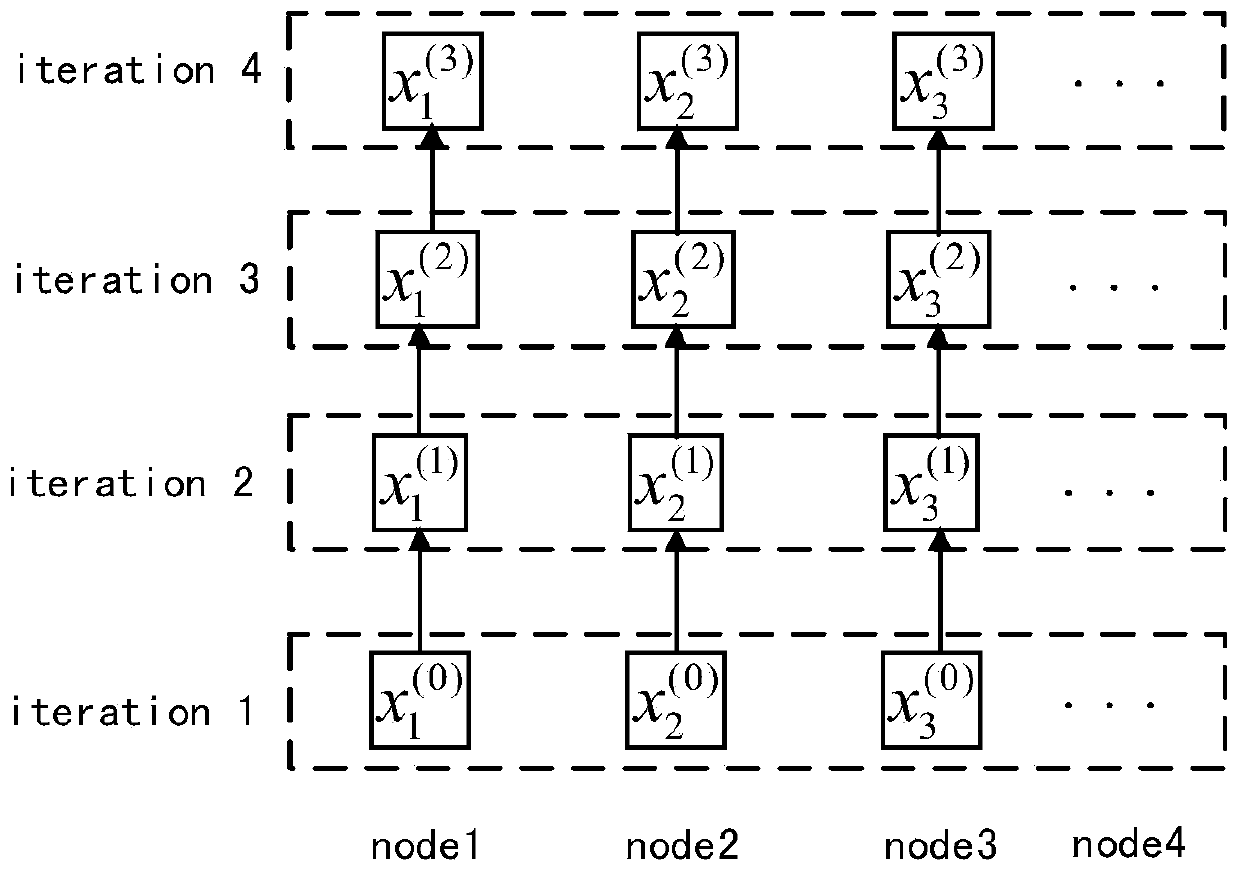

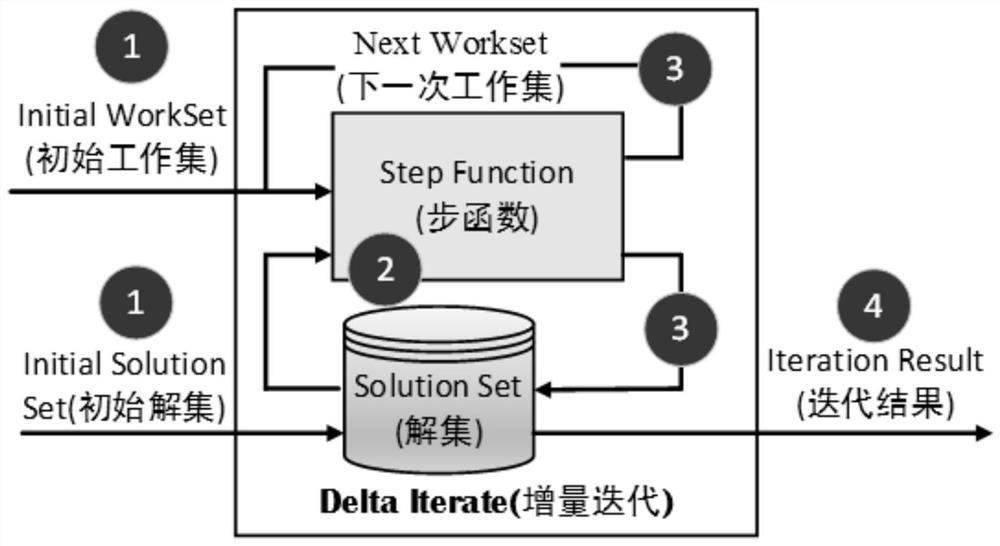

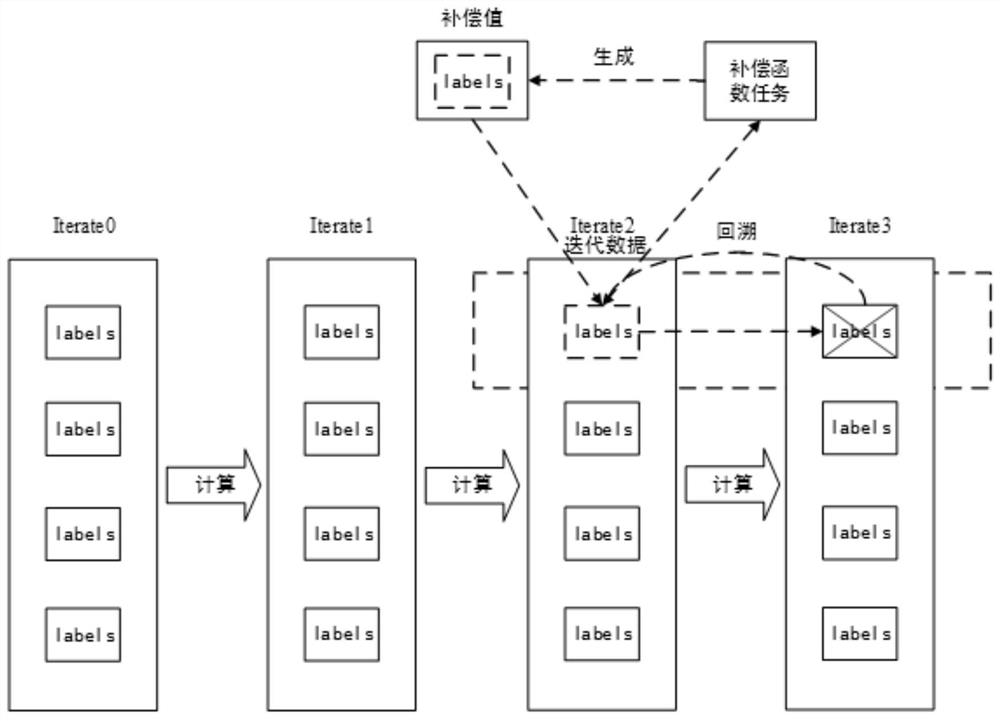

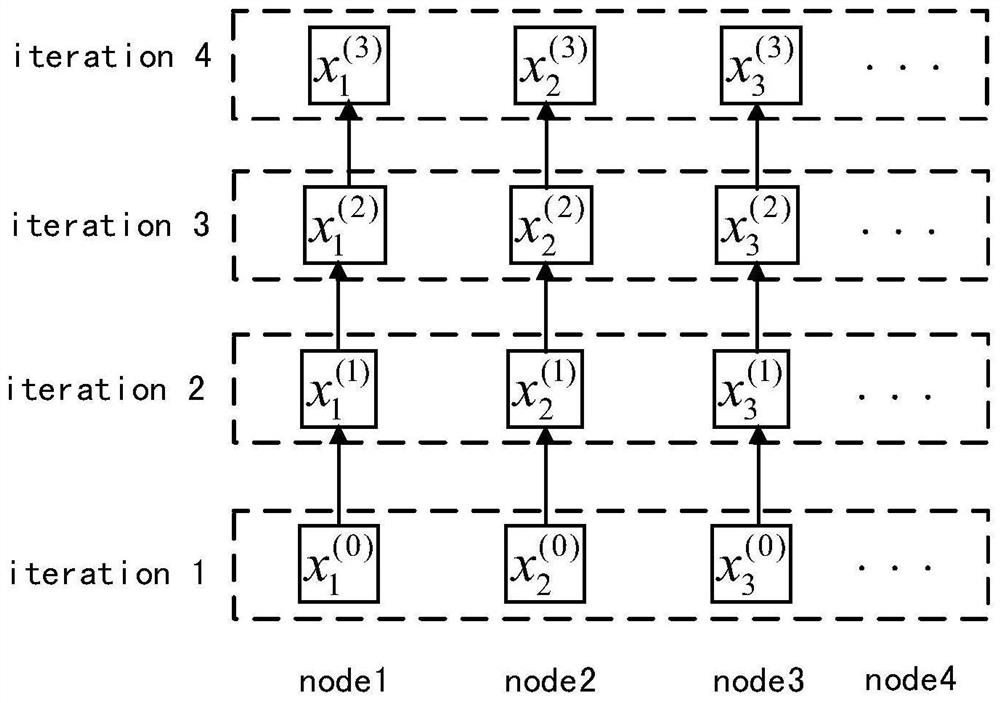

Iterator based on optimistic fault tolerant method

Active | CN110795265A | Best trouble-free performance | Reduce computing latency | Non-redundant fault processing | Execution paradigms | System usage | Real-time computing

The invention discloses an iterator based on an optimistic fault-tolerant method. The invention belongs to the technical field of distributed iterative computation in a big data environment. The iterator comprises an incremental iterator and a batch iterator, comprehensively considers iterative tasks of different sizes and iterative computation tasks of different failure rates, and introduces a compensation function that the system uses to re-initialize lost partitions. When a fault occurs, the system suspends the current iteration, ignores the failed task, reallocates the lost calculation to the newly obtained node, and calls the compensation function on the partition to restore a consistent state and resume execution. When the fault frequency is relatively low, the calculation delay is greatly reduced and the iterative processing efficiency is improved. When the fault frequency is higher, the iterator ensures that the iterative processing efficiency is not lower than that of the iterator before optimization. The iterator does not need to add additional task operations, thus effectively reducing the fault-tolerance overhead.

Owner:NORTHEASTERN UNIV +1

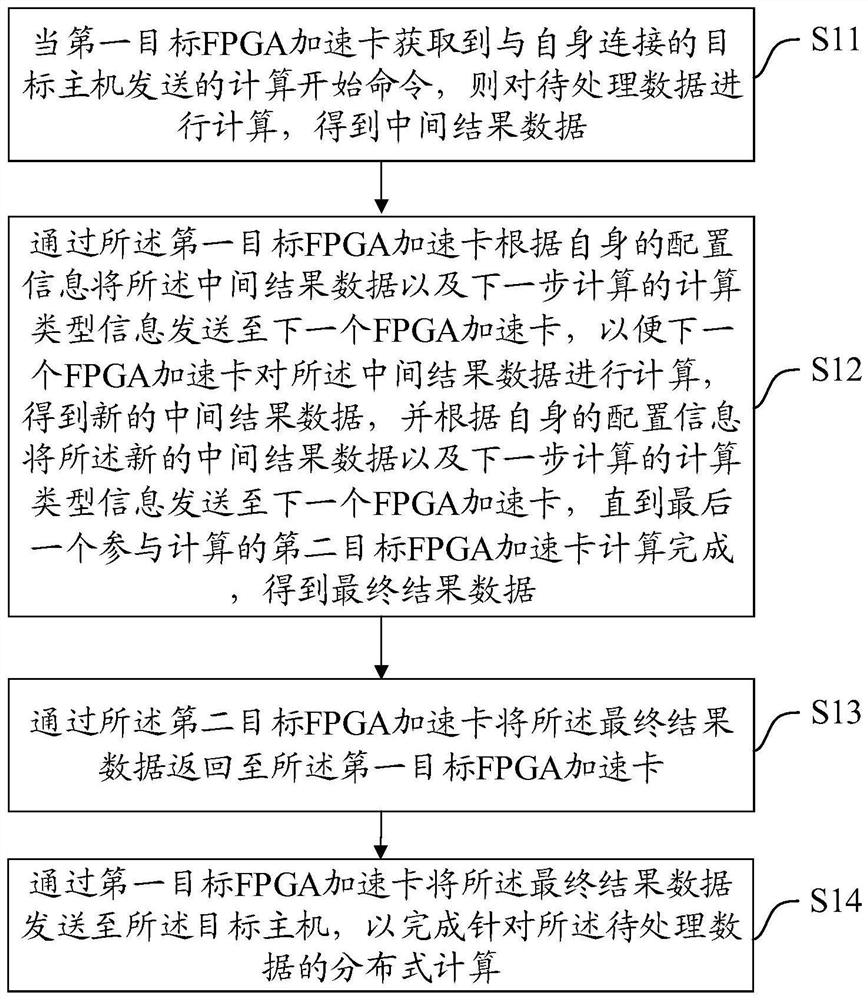

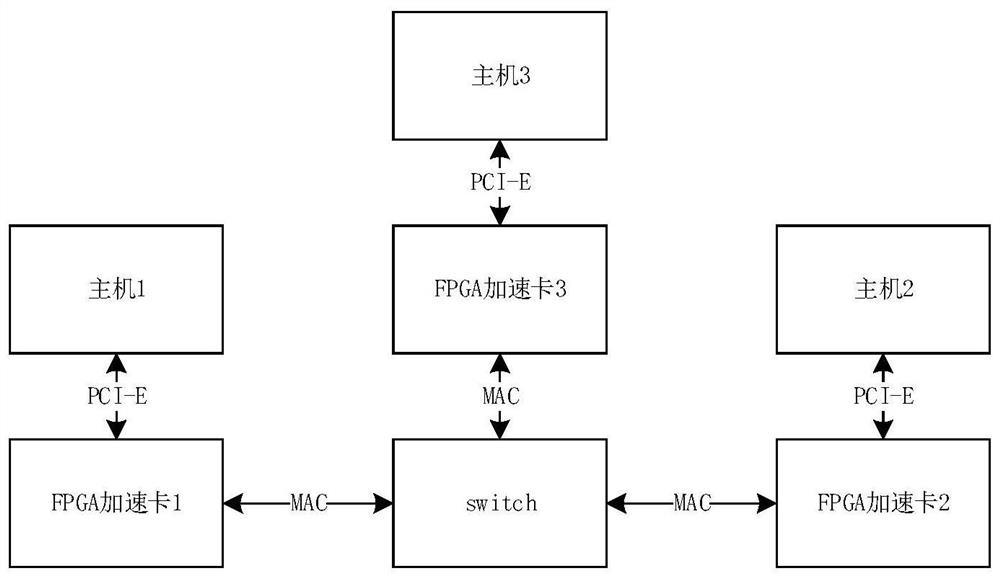

Data processing method and device and medium

Pending | CN114138481A | Realize automatic transmission | Realize automatic calculation | Resource allocation | Architecture with single central processing unit | Computer hardware | Parallel computing

The invention discloses a data processing method, device and medium. In the method, a first target FPGA acceleration card obtains a calculation start command sent by the target host connected to it, performs a calculation on the to-be-processed data, and obtains intermediate result data. According to its own configuration information, it sends the intermediate result data and the next-step calculation type information to the next FPGA acceleration card, which calculates on the intermediate result data to obtain new intermediate result data and in turn sends the new intermediate result data and the next-step calculation type information to the following FPGA acceleration card. This continues until the last participating card, a second target FPGA acceleration card, completes its calculation and the final result data is obtained. The final result data is returned to the target host through the second target FPGA acceleration card, completing the distributed calculation for the to-be-processed data. The invention can reduce the calculation delay of distributed calculation across a plurality of FPGA acceleration cards, thereby improving calculation efficiency.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

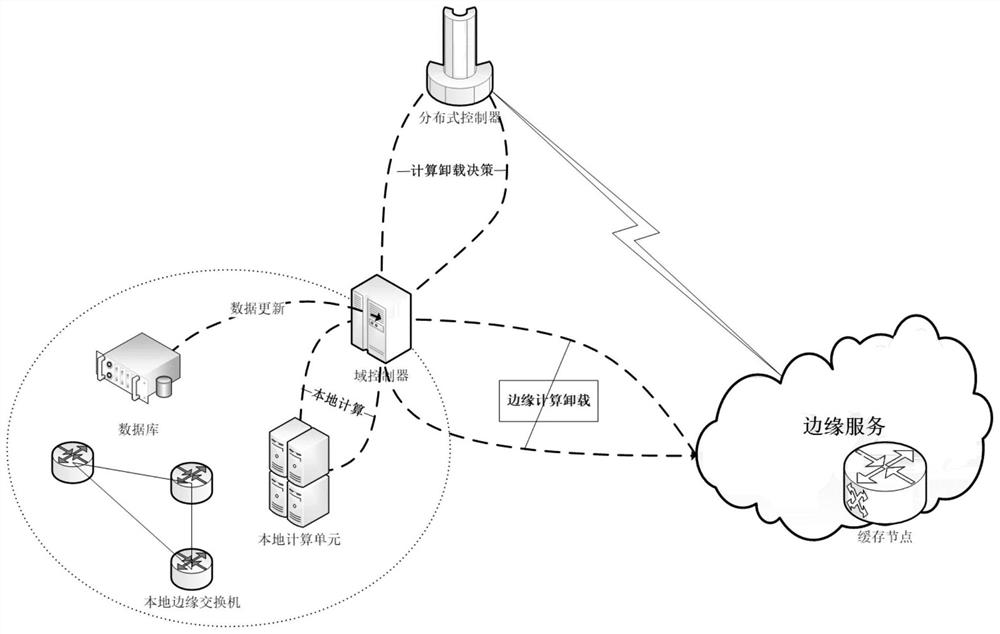

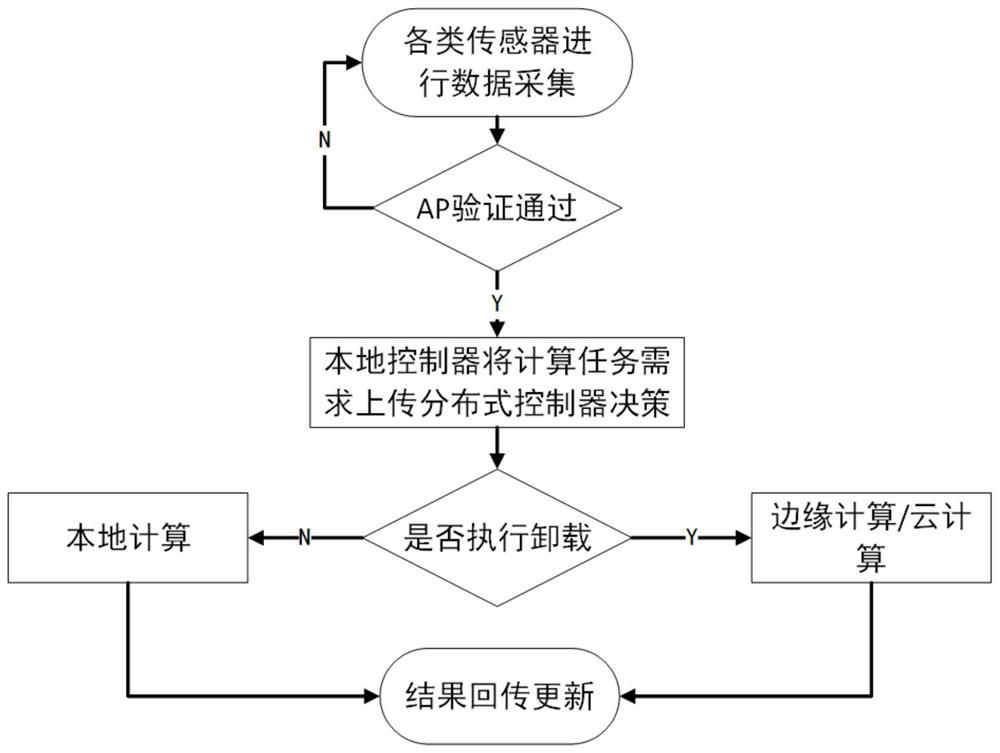

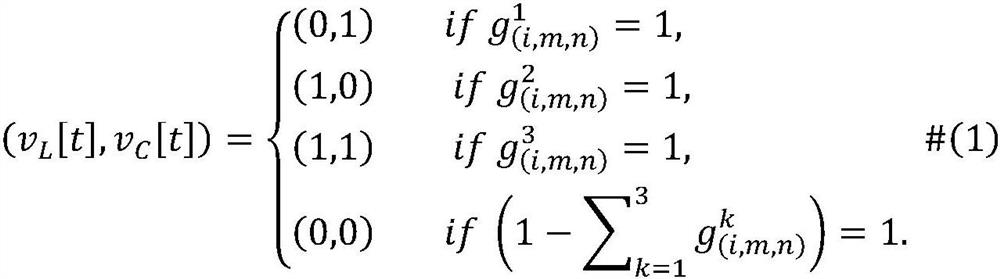

Edge computing scheduling method and system based on software definition

Active | CN112162837A | Easy to use | Convenient Arrangement | Program initiation/switching | Resource allocation | Data pack | Edge computing

The invention discloses an edge computing scheduling method and system based on software definition. The edge computing scheduling method comprises the following steps: 1) a local computing unit collects a local computing task i and uploads the local computing task i to a distributed controller; 2) the distributed controller determines whether i is computed locally or offloaded to an edge computing service node for execution according to the value of a two-tuple (v<L>[t], v<C>[t]), wherein v<L>[t]=1 represents that i is executed locally in the t-th time slot, and v<C>[t]=1 represents that i is offloaded to the edge computing service node for execution in the t-th time slot; and introducing a value for determining the two-tuple (v<L>[t], v<C>[t]), wherein S={0, 1,..., Q}*{0, 1,..., M}*{0, 1,..., N1}, Q represents the maximum capacity of the task queue buffer area, M represents the number of data packets contained in i, and N represents the number of time slots required by local calculation of i.

Owner:COMP NETWORK INFORMATION CENT CHINESE ACADEMY OF SCI

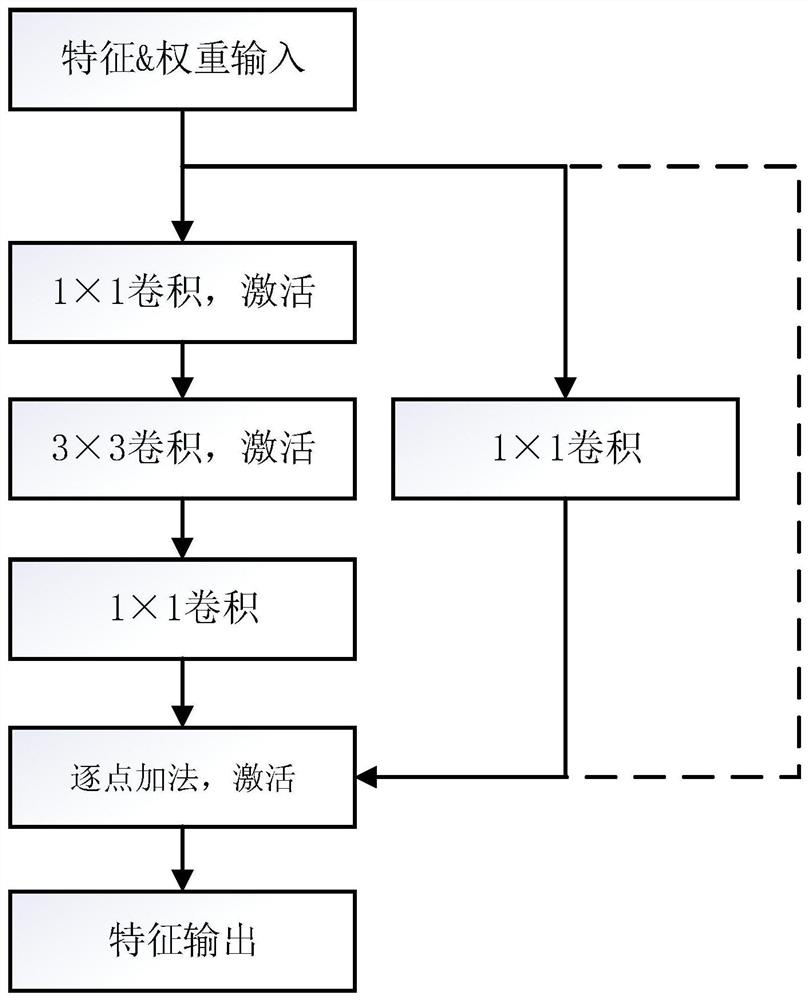

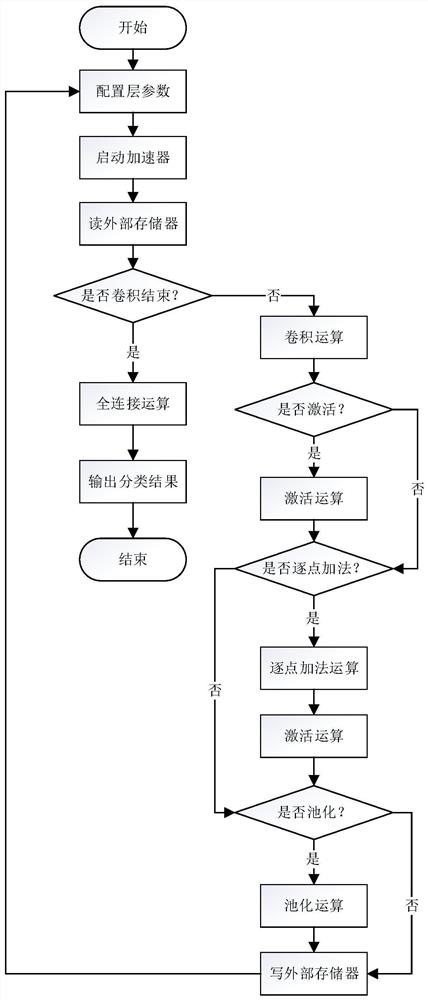

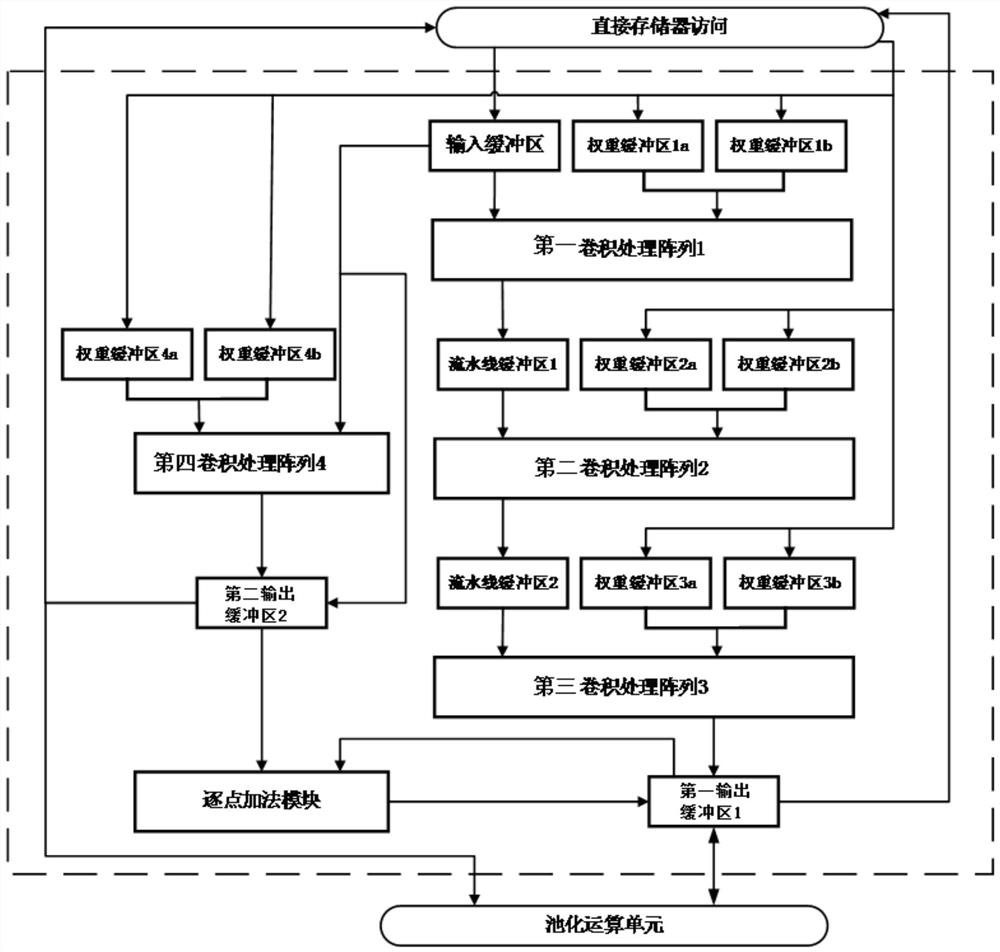

Streamlined convolution computing architecture design method and residual network acceleration system

Active | CN112862079A | Improve parallelism | Reduce computing latency | Neural architectures | Energy efficient computing | Computer architecture | Engineering

The invention provides a streamlined convolution computing architecture design method and a residual network acceleration system. According to the method, a hardware acceleration architecture is divided into an on-chip buffer area, a convolution processing array and a point-by-point addition module. The main path of the hardware acceleration architecture is composed of three convolution processing arrays arranged in series, and two pipeline buffer areas are inserted among the three convolution processing arrays to implement inter-layer pipelining of the three convolution layers of the main path. A fourth convolution processing array is provided to process, in parallel, the convolution layers with kernel size 1 * 1 on the branches of the residual building blocks. By configuring a register in the fourth convolution processing array, its working mode can be changed so that it computes the head convolution layer or the fully connected layer of the residual network; when the branch of a residual building block has no convolution, the fourth convolution processing array is skipped and no convolution is executed. A point-by-point addition module adds, element by element, the corresponding output feature pixels of the main path of the residual building block and of the branch shortcut connection.

Owner:SUN YAT SEN UNIV

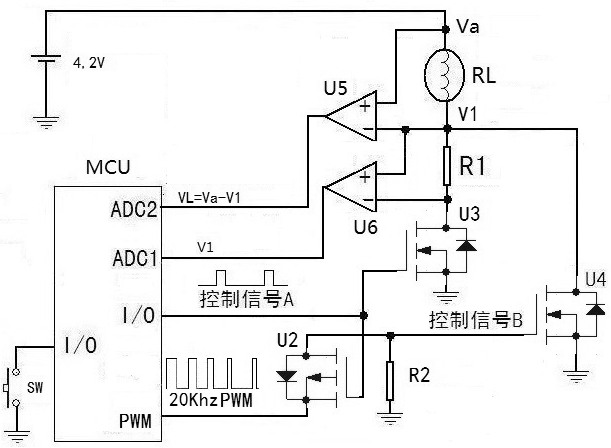

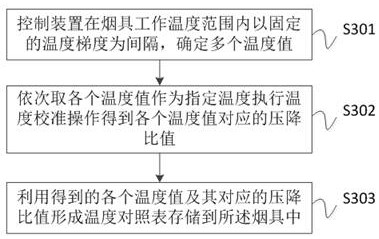

Heating smoking set calibration method and system

The invention discloses a heating smoking set calibration method and system. The smoking set is used for obtaining the voltage drop of a sampling resistor and the voltage drop of a heating device during temperature measurement, calculating the voltage drop ratio, and finding out the smoking set temperature corresponding to the voltage drop ratio from a pre-stored temperature comparison table through table look-up. According to the invention, the smoking set directly locks the temperature through a table look-up mode, so that calculation delay is reduced, and the detection precision is improved; the temperature comparison table has great influence on a temperature checking result, so that in order to ensure the accuracy of the result, the temperature comparison table is calibrated so as to greatly improve the precision and the reliability of the temperature measurement result; and the calibration of the suction capacity is achieved.

Owner:SHENZHEN TOBACCO IND
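The table look-up step described in the abstract above can be sketched with a sorted table and a binary search. All table values here are made up for illustration, the direction of the ratio (heater voltage drop divided by sampling-resistor voltage drop) is an assumption, and choosing the nearest table entry is one possible look-up policy rather than the patented one.

```python
from bisect import bisect_left

# Hypothetical comparison table: (voltage drop ratio, temperature in deg C),
# sorted by ratio. A real device would store calibrated values instead.
TABLE = [(1.00, 25), (1.20, 100), (1.40, 175), (1.60, 250), (1.80, 325)]
RATIOS = [ratio for ratio, _ in TABLE]


def lookup_temperature(v_heater, v_sample):
    """Return the table temperature whose ratio is nearest to the measured one."""
    ratio = v_heater / v_sample
    i = bisect_left(RATIOS, ratio)
    if i == 0:
        return TABLE[0][1]       # below the table: clamp to the first entry
    if i == len(TABLE):
        return TABLE[-1][1]      # above the table: clamp to the last entry
    low, high = TABLE[i - 1], TABLE[i]
    # Pick whichever neighbouring entry is closer to the measured ratio.
    return low[1] if ratio - low[0] <= high[0] - ratio else high[1]
```

The look-up costs one division and a binary search, with no temperature formula evaluated at run time; its accuracy depends entirely on the stored table, which is why the abstract pairs it with a calibration step.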

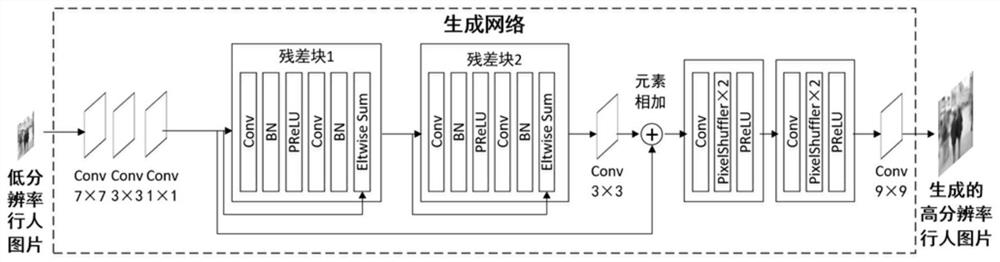

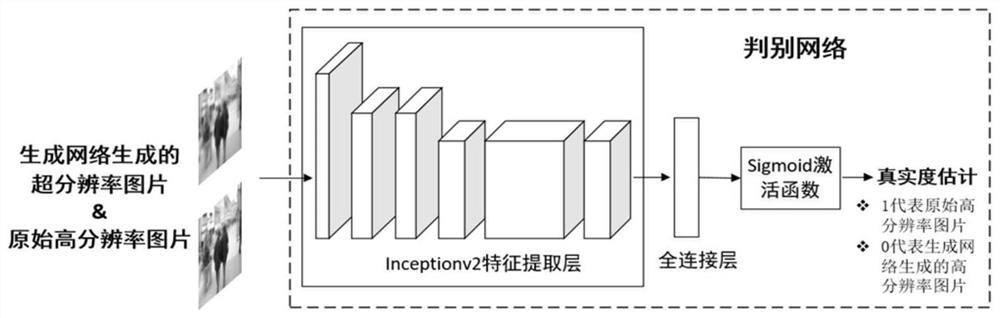

Small-scale pedestrian target rapid super-resolution method for intelligent roadside equipment

Active | CN112132746A | Reduce the number of training iterations | Quality improvement | Image enhancement | Image analysis | Image resolution | Simulation

The invention discloses a small-scale pedestrian target rapid super-resolution method for intelligent roadside equipment. The method comprises the steps of collecting and constructing a small-scale pedestrian high/low-resolution training data set, and, based on the generative adversarial idea, building a lightweight generative network for low-resolution small-scale pedestrian images. The network first uses separable convolution to extract preliminary image features, then uses a residual module to fit high-frequency information, and finally uses a pixel recombination module to perform high-resolution reconstruction of the low-resolution pedestrian image. A discrimination network is built, and discrimination training is performed on the parameters of the generation network to obtain an optimal generation network; super-resolution is then performed on the low-resolution small-scale pedestrian picture using the optimal generation network to obtain a high-resolution pedestrian target. The lightweight super-resolution generation network designed by the invention has the remarkable advantages of short training time and low inference delay, and fills the technical gap of small-scale pedestrian real-time super-resolution in the field of intelligent roadsides.

Owner:SOUTHEAST UNIV

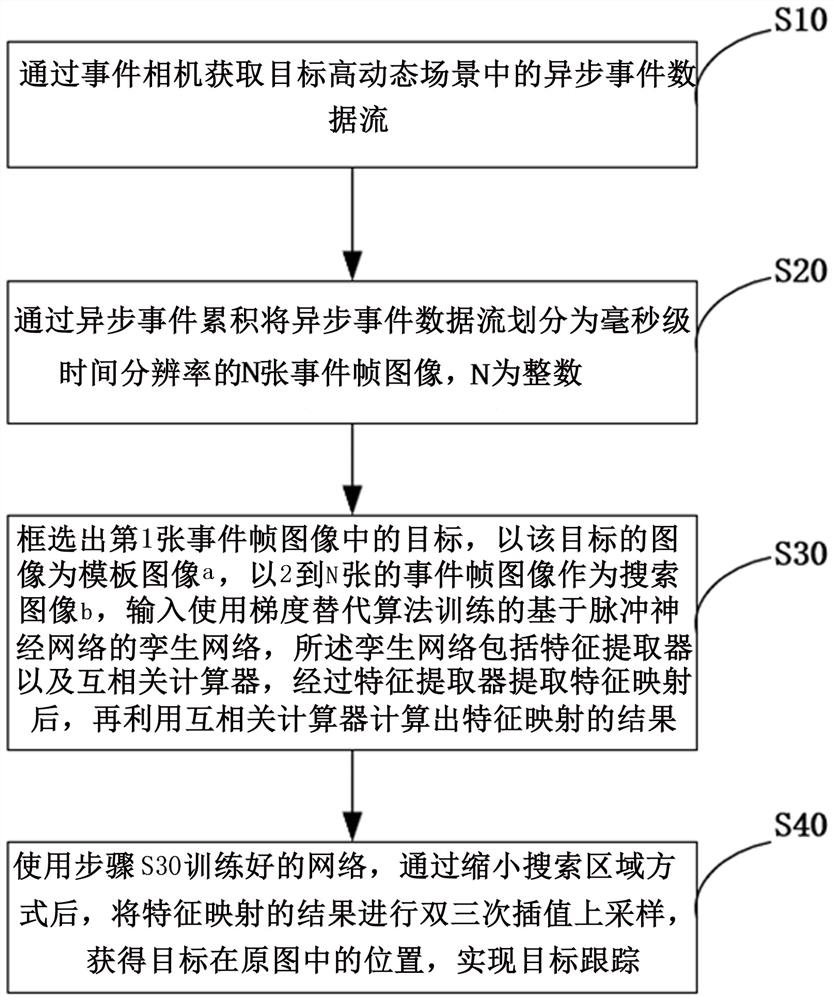

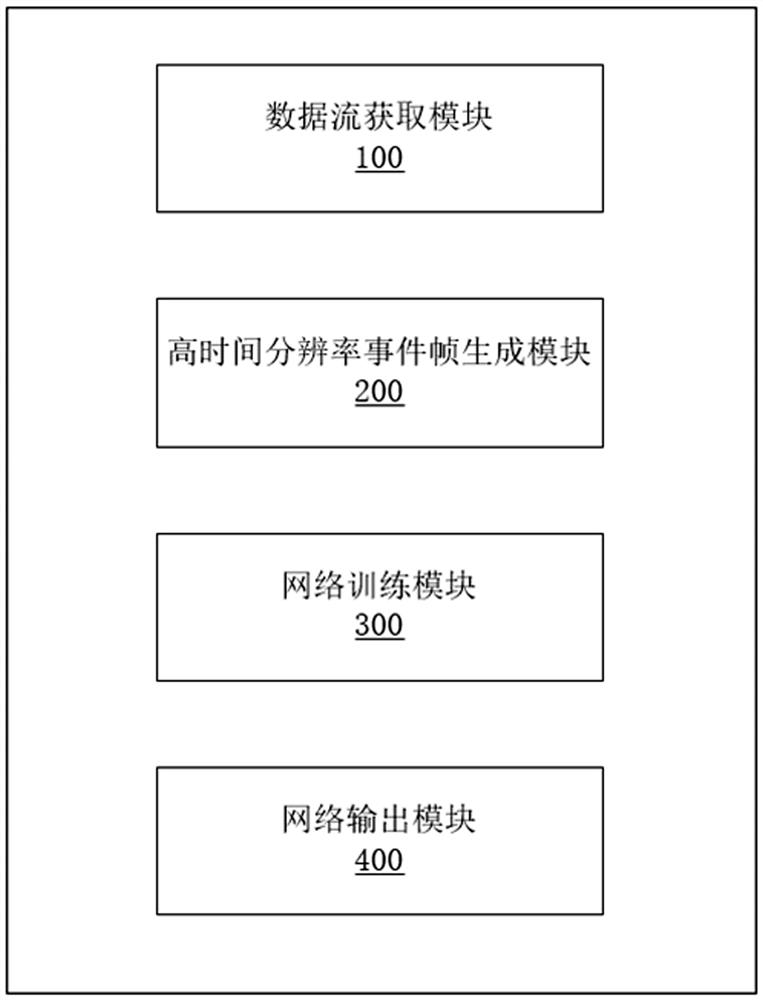

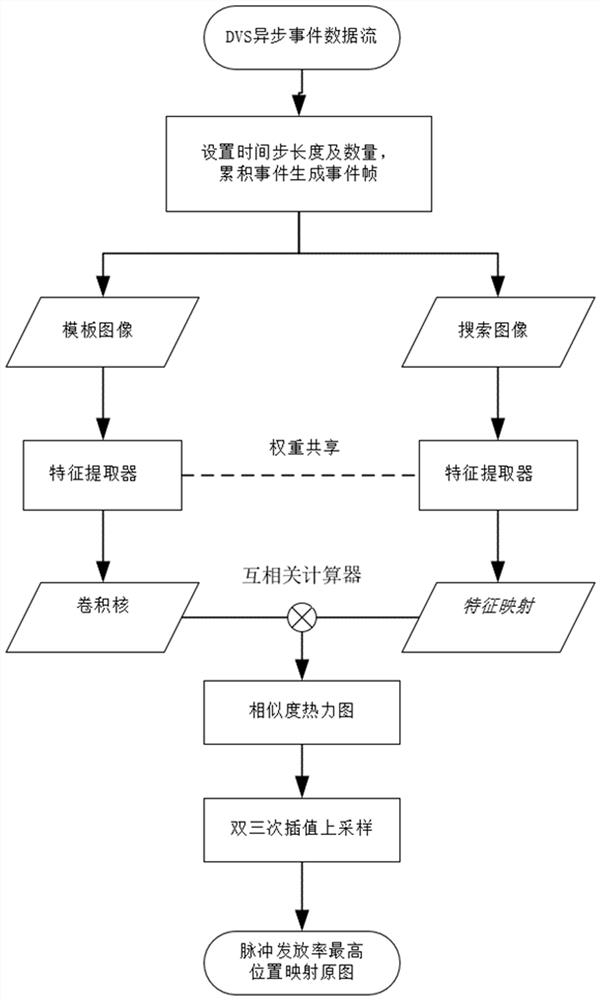

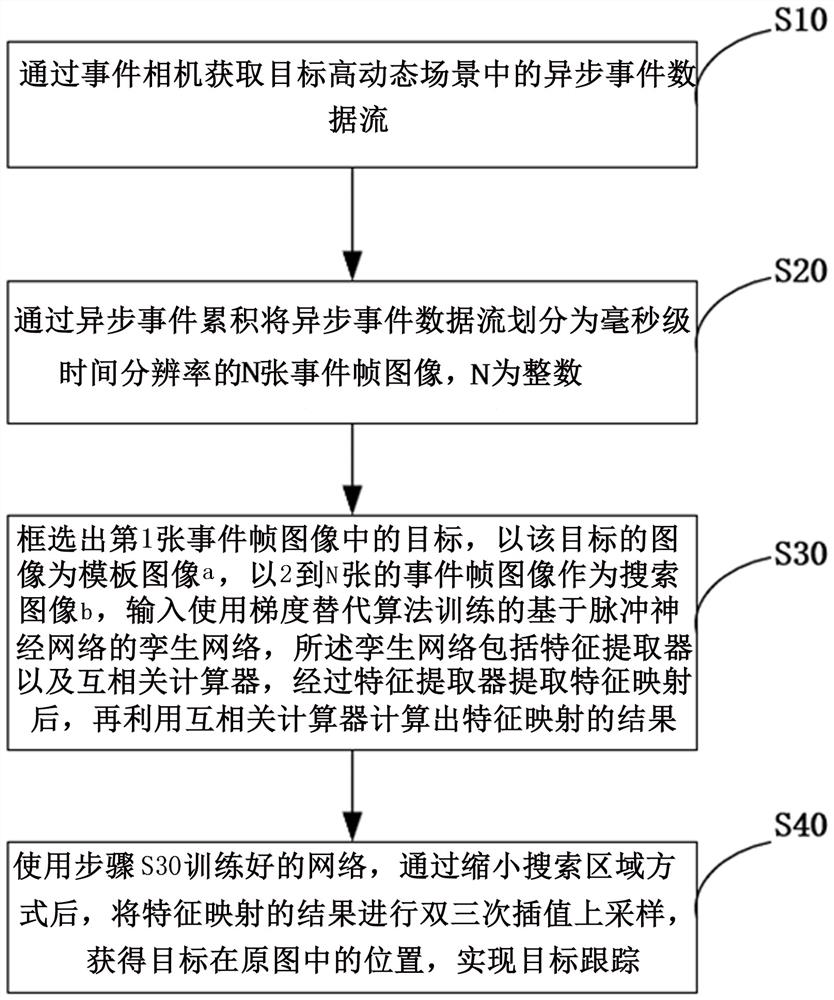

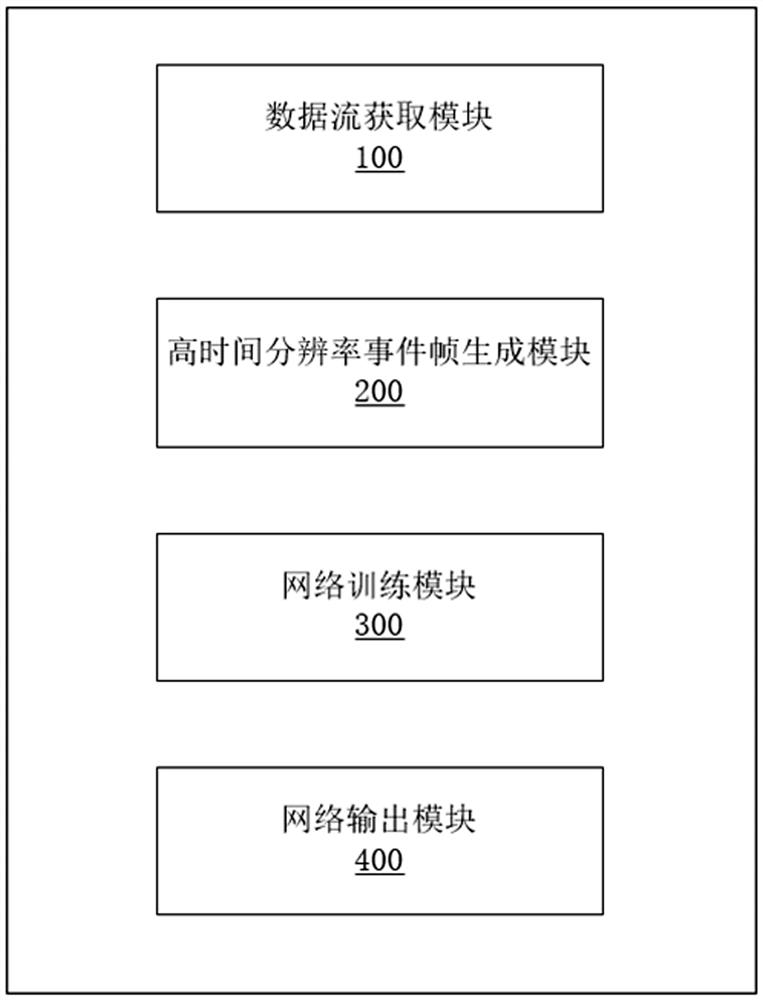

Pulse neural network target tracking method and system based on event camera

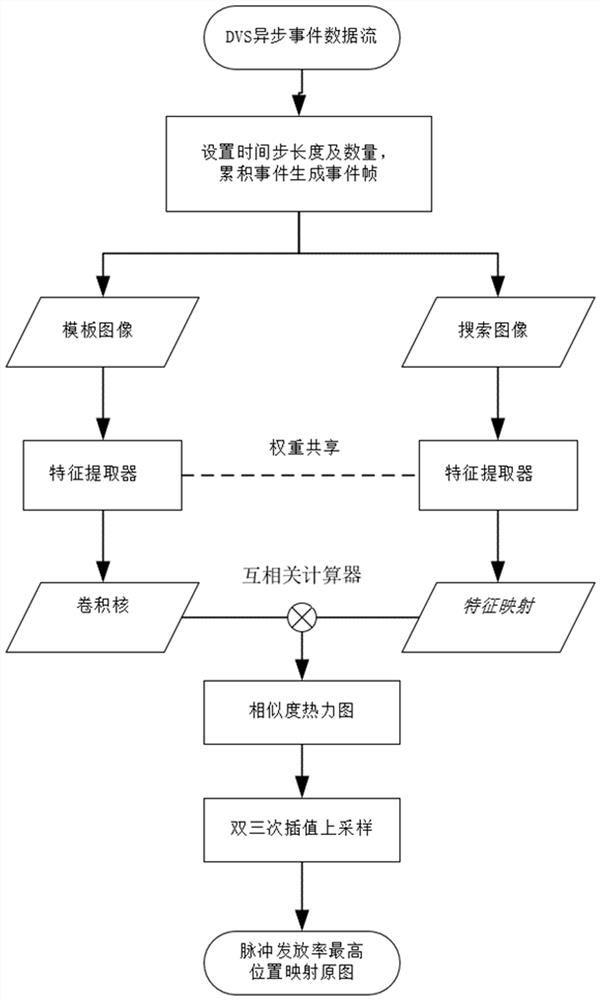

Active | CN114429491A | Fix high latency | Solution range | Image enhancement | Image analysis | Data stream | Feature extraction

The invention belongs to the field of target tracking, and particularly relates to a pulse neural network target tracking method and system based on an event camera, and the method comprises the steps: obtaining an asynchronous event data flow in a target high-dynamic scene through the event camera; dividing the asynchronous event data stream into event frame images with millisecond-level time resolution; a target image is used as a template image, a complete image is used as a search image, a twin network based on a pulse neural network is trained, the network comprises a feature extractor and a cross-correlation calculator, and after feature mapping of the image is extracted through the feature extractor, the cross-correlation calculator is used for calculating a feature mapping result; and performing interpolation up-sampling on a feature mapping result by using the trained network to obtain the position of the target in the original image so as to realize target tracking. According to the invention, the transmission delay of image data and the calculation delay of a target tracking algorithm are reduced, and the precision of target tracking in a high-dynamic scene is improved.

Owner:ZHEJIANG LAB +1

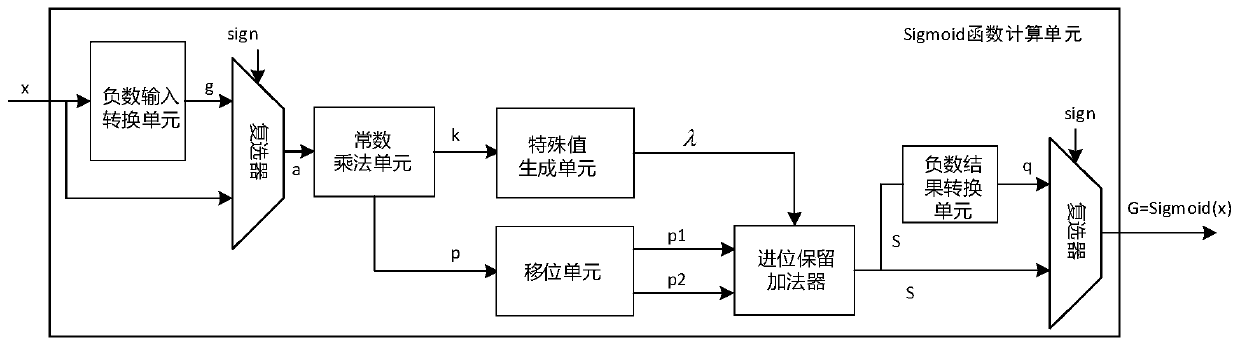

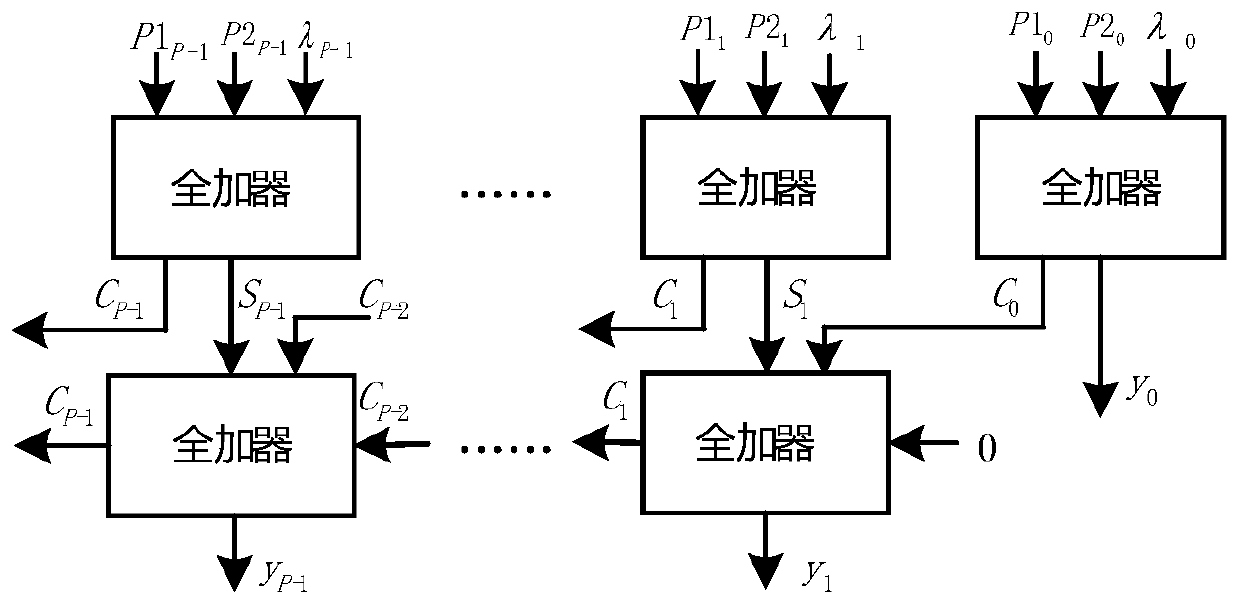

Approximate calculation device for sigmoid function

Active | CN110837624A | Reduce power consumption | Lower latency | Energy efficient computing | Complex mathematical operations | Sigmoid function | Engineering

The invention discloses an approximate calculation device for a sigmoid function. The approximate calculation device comprises a negative number input conversion unit, a constant multiplication unit, a shift unit, a special value generation unit, a carry-save adder and a negative number result conversion unit. The negative number input conversion unit is used for taking the absolute value of a negative input value x and outputting a binary source code of the absolute value; the constant multiplication unit calculates the value of 1.4375 * x and outputs the integer part k and the fractional part p of the result; the shift unit is used for shifting the input p; the special value generation unit is used for generating an approximate value lambda = sigmoid(k ln2); the carry-save adder is used for adding three numbers; and the negative number result conversion unit converts the corresponding result when the input x is a negative number. The device achieves approximate calculation of the sigmoid function and, while keeping high approximation precision, greatly reduces operation delay and power consumption and reduces area overhead.

Owner:NANJING UNIV

An online pipe network anomaly detection system based on machine learning

Active | CN103580960B | Improve exception recognition rate | Save human effort | Data switching networks | Real-time data | Network packet

The invention discloses an online pipe network anomaly detection system based on machine learning. The system comprises a data collection unit, a data distribution unit and a plurality of anomaly detection units. The data collection unit collects real-time data of an online pipe network, merges the real-time data by position area and groups it into different data packages. The data distribution unit receives the data packages, extracts data elements from them and, after formatting, divides them into a plurality of data subsets. The anomaly detection units receive the data subsets in one-to-one correspondence and predict anomalies in the data subsets based on a semi-supervised machine learning framework. The anomaly detection units can process data in parallel and can exchange data with one another through MPI. The system meets the server availability requirements of machine-learning-based online anomaly detection and avoids introducing extra standby hardware that would sit idle.

Owner:FOSHAN LUOSIXUN ENVIRONMENTAL PROTECTION TECH

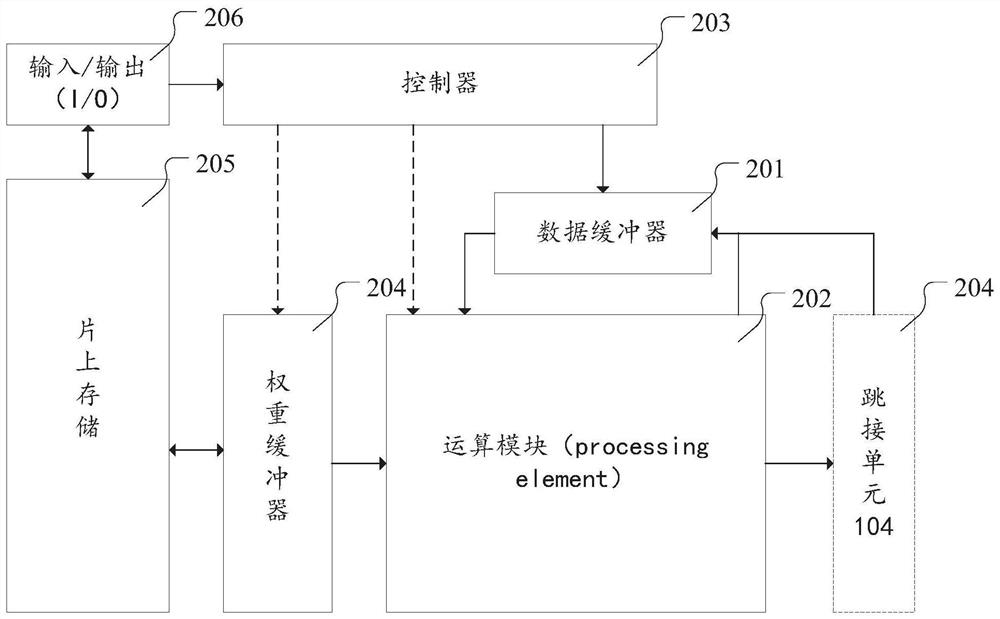

Acceleration device for deep learning and computing device

Pending · CN112396153A · Improve data processing efficiency · Reduce computing latency · Neural architectures · Physical realisation · Neural network nn · Computer engineering

The invention discloses an acceleration device for deep learning and a computing device for deep learning. The acceleration device comprises a data buffer and an operation module connected with the data buffer. The data buffer caches intermediate calculation results of the network layers of a vocoder neural network. The operation module obtains an intermediate calculation result of a first network layer of the vocoder neural network from the data buffer according to a received control instruction, and sends the intermediate calculation result of the first network layer to the data buffer. The acceleration device provided by the invention improves the processing efficiency of the vocoder neural network.

Owner:ALIBABA GRP HLDG LTD

An Iterator Based on Optimistic Fault Tolerance

Active · CN110795265B · Improve processing efficiency · Best trouble-free performance · Non-redundant fault processing · Execution paradigms · Failure rate · Parallel computing

The invention discloses an iterator based on an optimistic fault-tolerance method, which belongs to the technical field of distributed iterative computing in a big data environment. The iterator includes an incremental iterator and a batch iterator, and takes into account iterative computing tasks of different sizes and different failure rates. A compensation function is introduced, which the system uses to re-initialize lost partitions. When a failure occurs, the system pauses the current iteration, ignores the failed tasks, and redistributes the lost computations to newly acquired nodes, calling the compensation function on the affected partitions to restore a consistent state and resume execution. When the failure frequency is low, the calculation delay is greatly reduced and the iterative processing efficiency is improved. When the failure frequency is high, the iterator ensures that the iterative processing efficiency is not lower than that of the iterator before optimization. The optimistic fault-tolerant iterator does not need to add extra operations to the task, which effectively reduces the fault-tolerance overhead.

Owner:NORTHEASTERN UNIV LIAONING +1
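
The compensation idea can be illustrated with a small, single-process Python sketch. It uses connected components by minimum-label propagation as the iterative task, which is an assumption chosen for illustration and is not mentioned in the abstract; the function names and the `fail_at` / `lost` failure-injection parameters are likewise hypothetical. The compensation function resets the lost vertices to their initial labels, and the iteration still converges to the same result without any checkpoint.

```python
def propagate(labels, edges):
    """One synchronous iteration: each vertex takes the minimum label among its neighbours."""
    updated = dict(labels)
    for u, v in edges:
        smallest = min(labels[u], labels[v])
        updated[u] = min(updated[u], smallest)
        updated[v] = min(updated[v], smallest)
    return updated


def compensate(labels, lost):
    """Compensation function: re-initialise the lost vertices to their own ids."""
    repaired = dict(labels)
    for v in lost:
        repaired[v] = v
    return repaired


def connected_components(vertices, edges, fail_at=None, lost=()):
    """Iterate to a fixed point; optionally simulate losing a partition at step `fail_at`."""
    labels = {v: v for v in vertices}
    step = 0
    while True:
        if step == fail_at:
            # Simulated failure: the state of `lost` is gone, so compensate and carry on
            labels = compensate(labels, lost)
        updated = propagate(labels, edges)
        if updated == labels:
            return labels
        labels = updated
        step += 1
```

For example, `connected_components(range(5), [(0, 1), (1, 2), (3, 4)], fail_at=1, lost=(1, 2))` returns the same labelling as the failure-free run, at the cost of one extra iteration.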

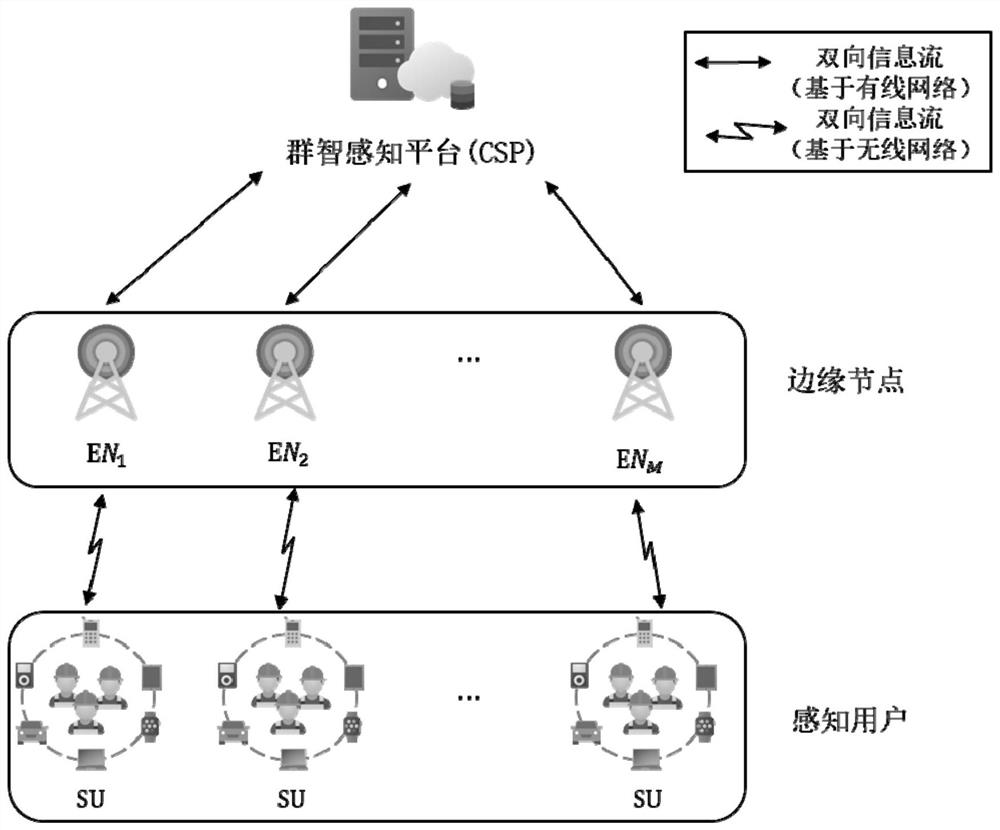

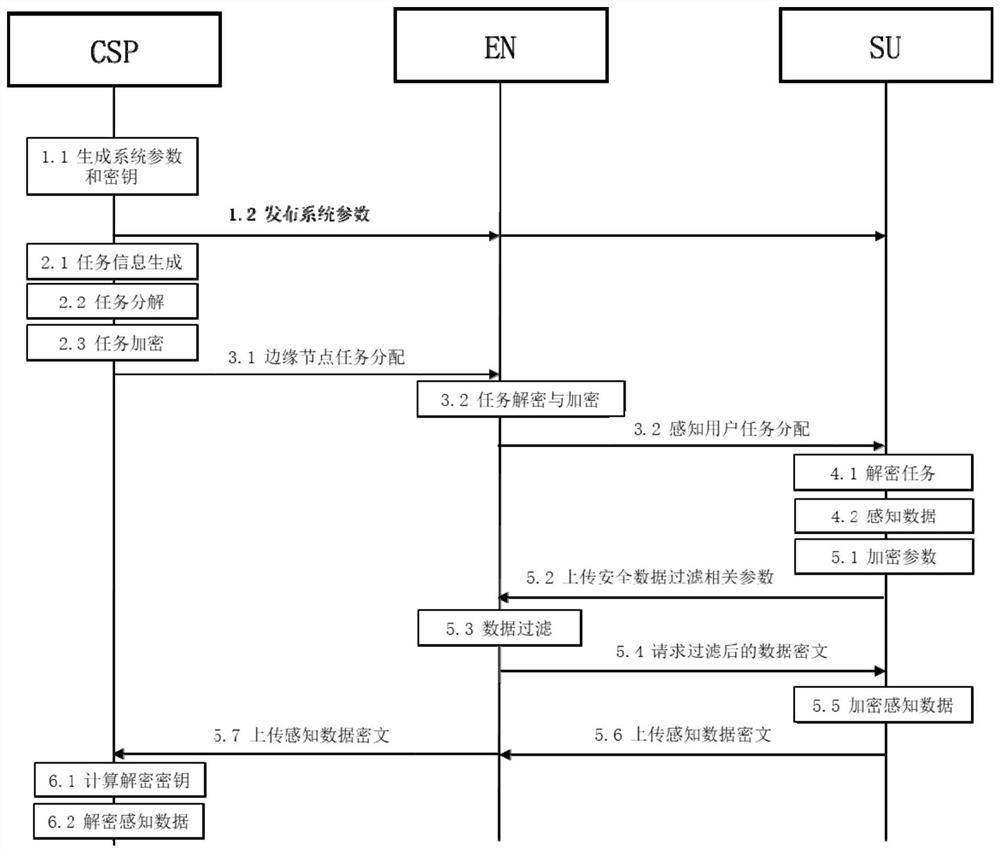

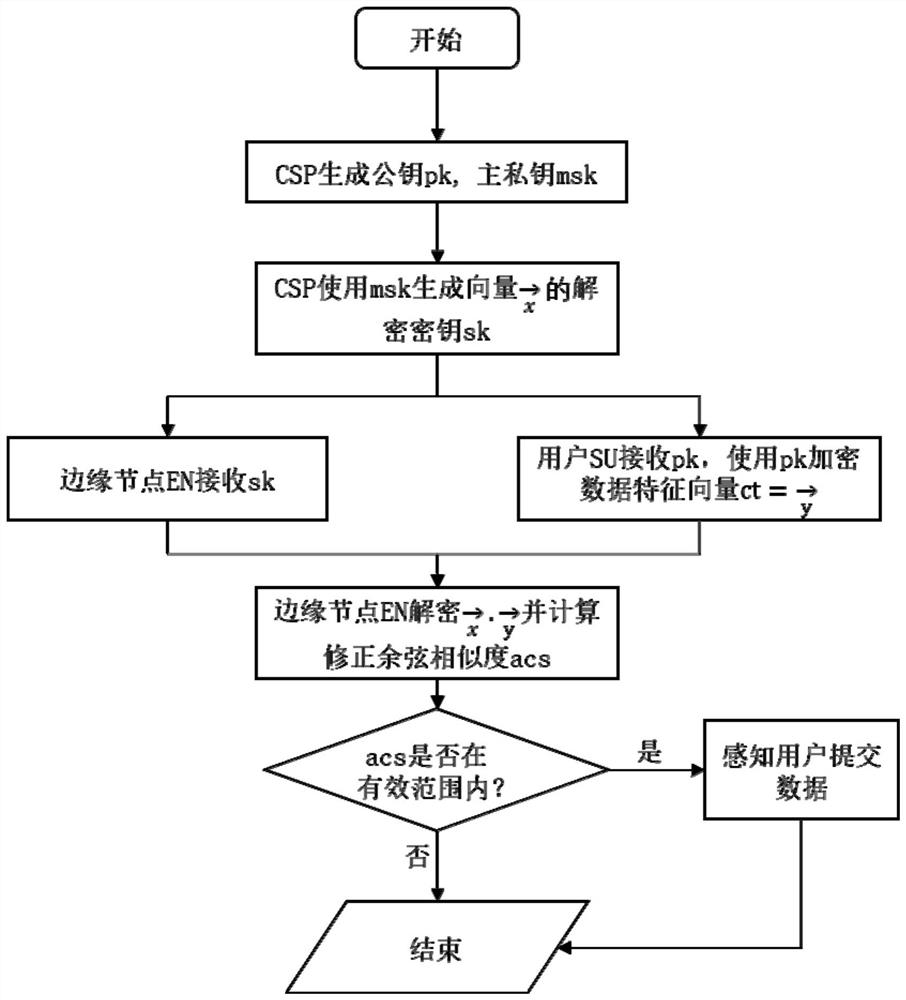

Data security filtering system and method in crowd sensing

Pending · CN114491596A · Guaranteed privacy · Reduce overhead · Digital data protection · Edge node · Privacy protection

The invention discloses a data security filtering system and method in crowd sensing. The method comprises six parts: initialization and key generation, task generation, task release, data sensing, data security filtering and data decryption. A sensing user submits a feature vector encrypted with the public key of the crowd sensing platform (CSP) to an edge node for filtering. The edge node can obtain only the corrected cosine similarity between the sensing user's data feature vector and a data sample provided by the CSP, and cannot obtain any other information about the sensing user. The filtered submitted data is also encrypted with the public key of the crowd sensing platform, so the edge node cannot mine the user's effective information, and the user's privacy is ensured. Meanwhile, the edge node can calculate the corrected cosine similarity with only one round of interaction with the sensing user, which greatly reduces communication overhead. The method offers strong privacy protection, high communication efficiency and high data availability.

Owner:HUBEI UNIV OF TECH

A spiking neural network target tracking method and system based on event camera

Active · CN114429491B · Fix high latency · Reduce data transfer volume · Image enhancement · Image analysis · Data stream · Feature extraction

The invention belongs to the field of target tracking, and in particular relates to a spiking neural network target tracking method and system based on an event camera. The method includes: acquiring an asynchronous event data stream of a target in a high-dynamic scene through an event camera; dividing the asynchronous event data stream into event frame images with millisecond-level temporal resolution; taking the target image as the template image and the complete image as the search image, and training a twin (Siamese) network based on a spiking neural network, which includes a feature extractor and a cross-correlation calculator, wherein after the feature extractor extracts feature maps from the images, the cross-correlation calculator computes a result from the feature maps; and using the trained network to interpolate and upsample the feature mapping result to obtain the position of the target in the original image, thereby achieving target tracking. The invention reduces the transmission delay of the image data and the calculation delay of the target tracking algorithm, and improves the accuracy of target tracking in high-dynamic scenes.

Owner:ZHEJIANG LAB +1
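
The step of dividing the asynchronous event stream into millisecond-level event frames can be sketched in Python as follows. The event layout `(timestamp_us, x, y, polarity)`, the time-sorted input, the signed accumulation of polarities and the name `events_to_frames` are all assumptions made for illustration; the abstract does not specify how events are accumulated within a frame.

```python
def events_to_frames(events, width, height, window_us=1000):
    """Bin time-sorted events into fixed-duration frames (1000 us = 1 ms by default).

    events: list of (timestamp_us, x, y, polarity) tuples with integer timestamps.
    Returns a list of height x width frames; each pixel holds the signed event count.
    """
    if not events:
        return []
    t0 = events[0][0]
    frame_count = (events[-1][0] - t0) // window_us + 1
    frames = [[[0] * width for _ in range(height)] for _ in range(frame_count)]
    for t, x, y, polarity in events:
        index = (t - t0) // window_us
        # Assumption: ON events add 1 and OFF events subtract 1
        frames[index][y][x] += 1 if polarity else -1
    return frames
```

Each returned frame could then be fed to a feature extractor as a search image; a shorter `window_us` gives finer temporal resolution at the cost of sparser frames.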

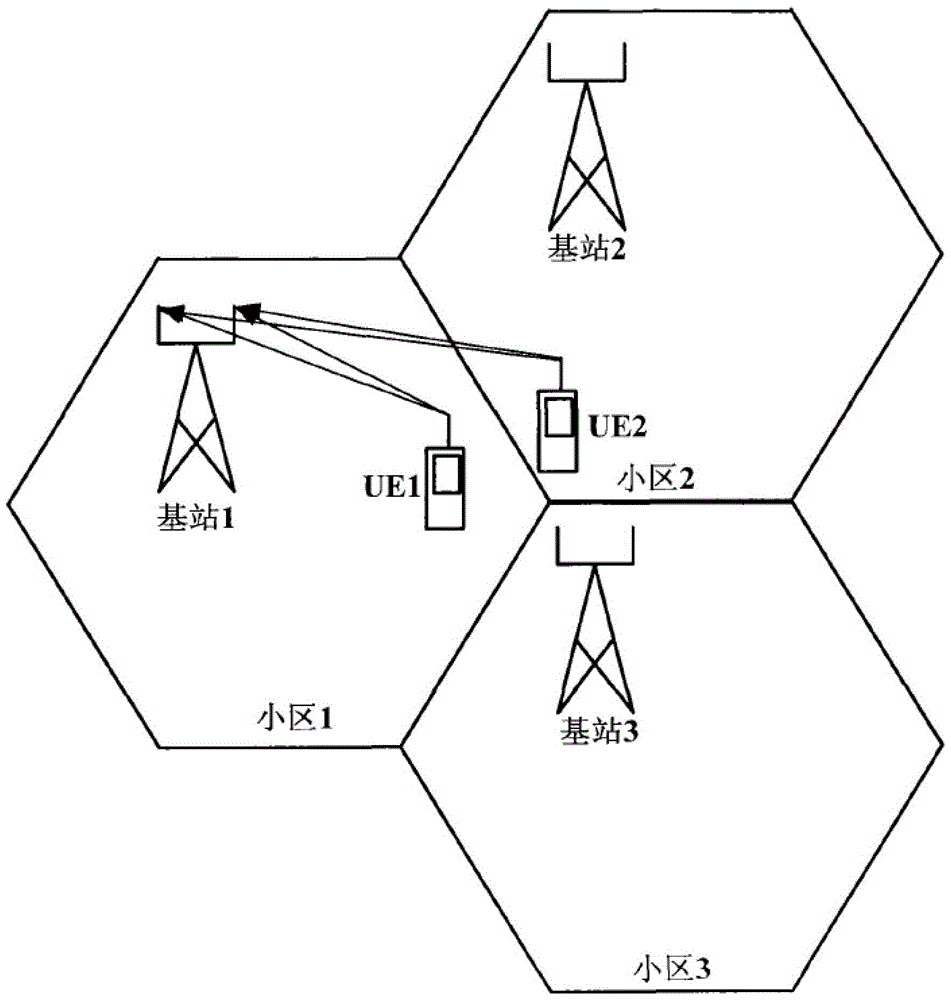

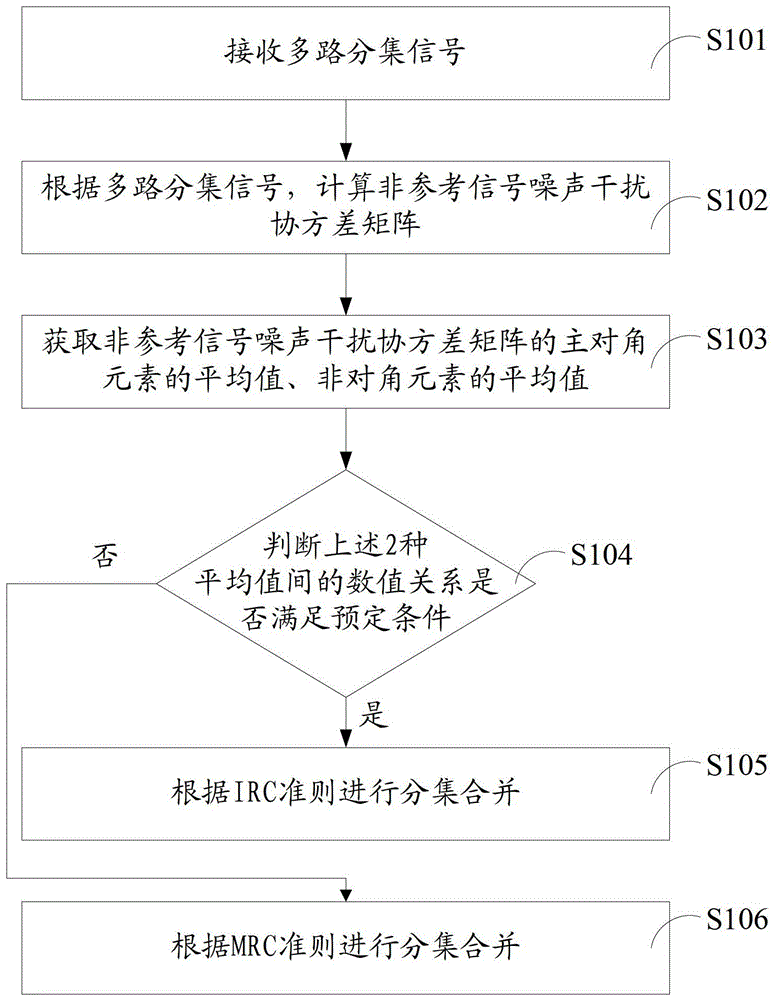

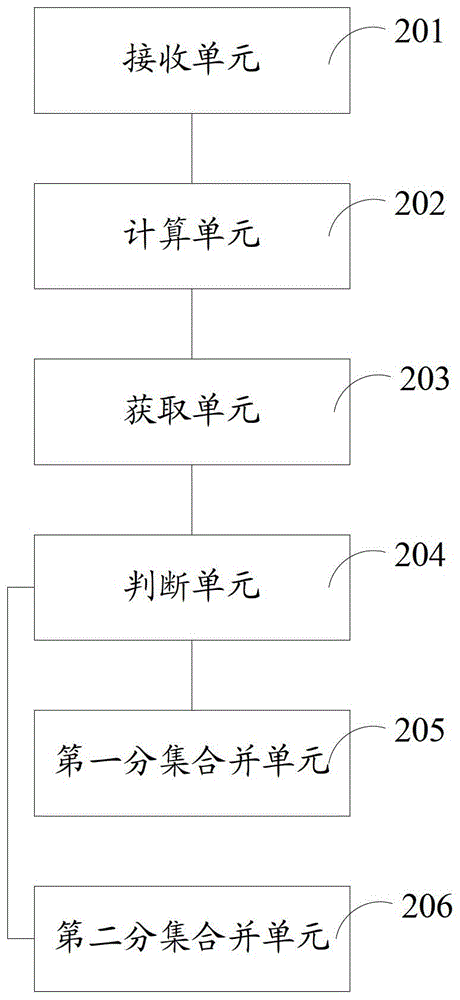

Adaptive diversity combining method and apparatus

Active · CN103873129B · Reduce complexity · Reduce computing latency · Spatial transmit diversity · Main diagonal · Self adaptive

The invention provides an adaptive diversity combining method comprising the following steps: receiving multiple paths of diversity signals; calculating a non-reference-signal noise and interference covariance matrix from the diversity signals; obtaining the average value of the main diagonal elements and the average value of the off-diagonal elements of the covariance matrix; judging whether the numerical relation between the off-diagonal average and the main diagonal average meets a preset condition; if so, carrying out diversity combining according to the IRC (Interference Rejection Combining) principle; if not, carrying out diversity combining according to the MRC (Maximum Ratio Combining) principle. The invention further provides an adaptive diversity combining device. The method is simple and effective to implement, reduces the complexity of system calculation and widens the application range of diversity combining.

Owner:COMBA TELECOM SYST CHINA LTD
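
The selection step can be sketched in Python as below. The abstract does not state the preset condition, so the rule used here (off-diagonal average magnitude greater than a fraction of the main diagonal average magnitude) and the default `ratio_threshold` are assumptions, and `choose_combiner` is a hypothetical name. The intuition is that large off-diagonal terms indicate interference correlated across branches, which IRC can exploit, while a nearly diagonal matrix is handled well enough by the cheaper MRC.

```python
def choose_combiner(cov, ratio_threshold=0.1):
    """Pick 'IRC' or 'MRC' from a square noise-and-interference covariance matrix.

    cov: list of rows holding real or complex entries.
    ratio_threshold: hypothetical tuning parameter, not taken from the patent.
    """
    n = len(cov)
    diagonal = [abs(cov[i][i]) for i in range(n)]
    off_diagonal = [abs(cov[i][j]) for i in range(n) for j in range(n) if i != j]
    mean_diagonal = sum(diagonal) / n
    mean_off_diagonal = sum(off_diagonal) / len(off_diagonal) if off_diagonal else 0.0
    # Assumed condition: significant cross-branch correlation selects IRC
    if mean_off_diagonal > ratio_threshold * mean_diagonal:
        return "IRC"
    return "MRC"
```

Because MRC avoids inverting the covariance matrix, falling back to it whenever the matrix is close to diagonal is where the saving in computation would come from.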

Credit processing equipment and flow control transmission apparatus and method thereof

Active · CN101184022B · Calculation speed · Reduce computing latency · Energy efficient ICT · Data switching networks · Ring counter · Control table

The invention discloses a flow-control packet header credit processing device comprising a packet header buffer unit and a packet header credit calculation unit, characterized in that the packet header credit is calculated directly from the number of packet header credits received, recorded and transmitted, as tracked by a write pointer and a read pointer, so that delay is reduced. The invention also provides a credit processing device comprising the packet header credit processing device and a data credit calculation unit, wherein the data credit calculation circuit uses a ring counter control unit and a control table unit to select among different types of data, and the data credit is calculated by a shared circuit, so that circuit area is saved and calculation speed is raised. The invention further provides a sending device, a receiving device, a transmission system, and a processing and transmitting method using them, so that credit processing is optimized. With the flow control device and method of the invention, efficiency is increased and power consumption is reduced.

Owner:SEMICON MFG INT (SHANGHAI) CORP
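
Deriving a credit count directly from a write pointer and a read pointer can be sketched in Python as below. This is a generic ring-buffer model and not the patented circuit: the class name, the method names and the choice of pointers that wrap at twice the buffer depth (the usual way to tell a full buffer from an empty one) are all assumptions.

```python
class HeaderCreditCounter:
    """Model of a header buffer whose free credits follow from two pointers."""

    def __init__(self, depth):
        self.depth = depth
        self.wr = 0  # write pointer, advanced when a header is received
        self.rd = 0  # read pointer, advanced when a header is forwarded

    def _advance(self, pointer):
        # Pointers wrap at 2 * depth so that full and empty are distinguishable
        return (pointer + 1) % (2 * self.depth)

    def occupancy(self):
        return (self.wr - self.rd) % (2 * self.depth)

    def credits(self):
        # Free slots that can be advertised to the sender
        return self.depth - self.occupancy()

    def receive_header(self):
        if self.credits() == 0:
            raise OverflowError("no header credit available")
        self.wr = self._advance(self.wr)

    def forward_header(self):
        if self.occupancy() == 0:
            raise IndexError("header buffer is empty")
        self.rd = self._advance(self.rd)
```

The credit value is a single subtraction of the two pointers, so no separate running counter has to be updated on every receive and forward event.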

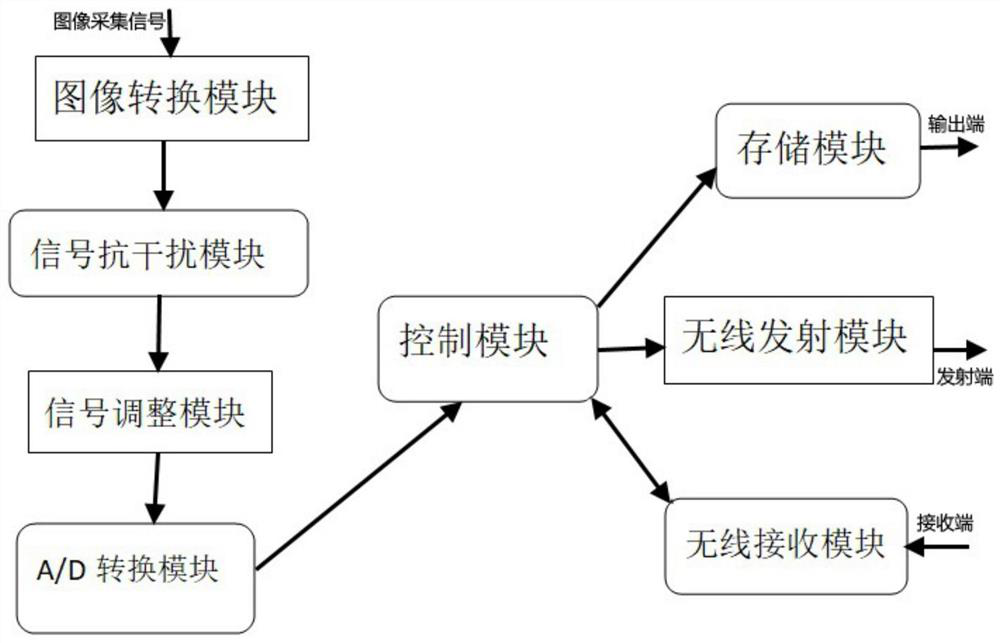

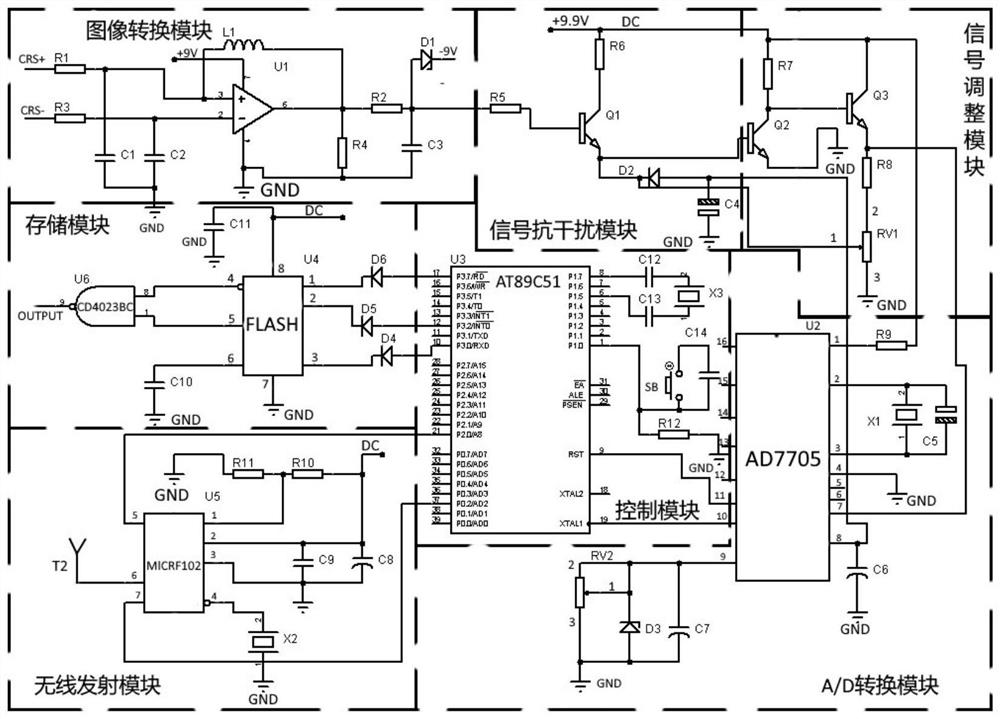

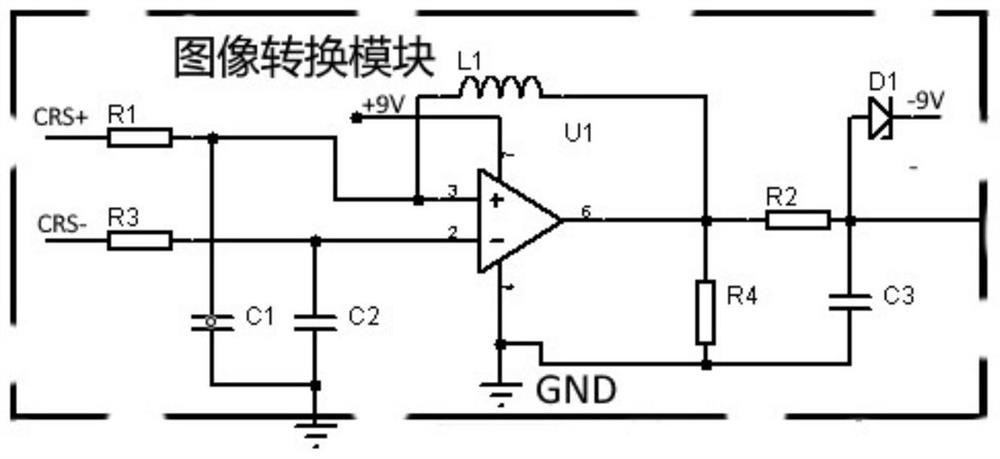

A crop disease and insect pest identification system and identification method based on edge computing

Active · CN111918030B · Easy access · Accurate acquisition · Television system details · Character and pattern recognition · Computer hardware · Insect pest

The invention discloses an edge-computing-based crop disease and insect pest identification system and identification method. The system comprises an image conversion module, a signal anti-interference module, a signal adjustment module, an A/D conversion module, a control module, a storage module, a wireless transmission module and a wireless receiving module. The image conversion module converts the received image acquisition signal into an electrical signal; the signal anti-interference module filters out the interference generated during the conversion; the signal adjustment module adjusts the signal detected by the image acquisition component; the A/D conversion module converts the analog signal into a digital signal; the control module obtains the detection signal so that the next-level module operates; the storage module stores the collected image information; the wireless transmission module converts the received image signal into a wireless signal, enabling remote monitoring; and the wireless receiving module receives the transmitted digital image. The detection signal is thereby transmitted quickly and the calculation delay is reduced.

Owner:NANJING COREWELL CLOUD COMPUTING INFORMATION TECH CO LTD

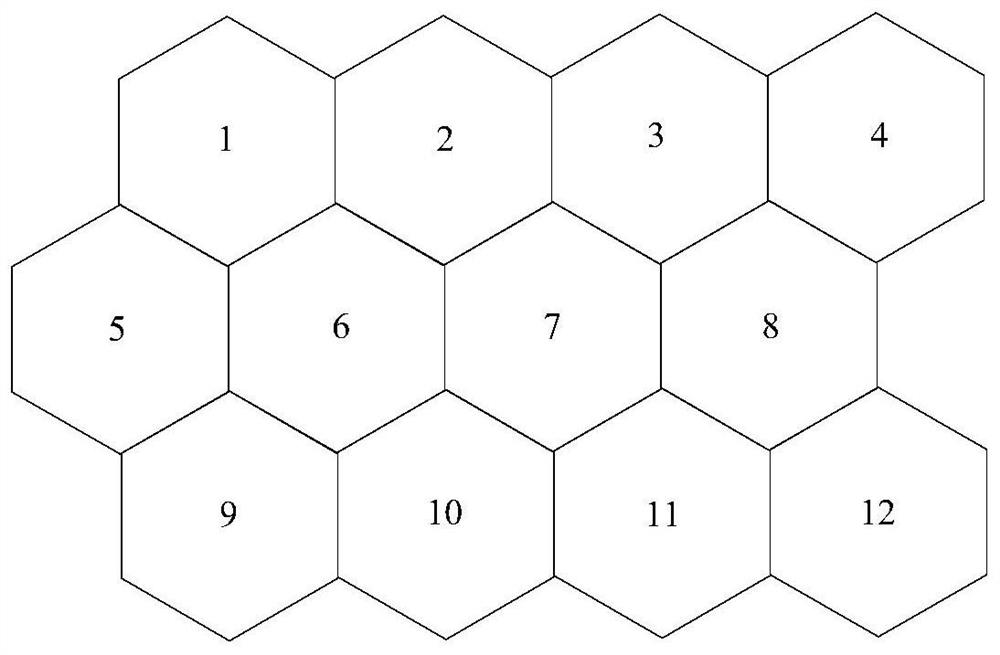

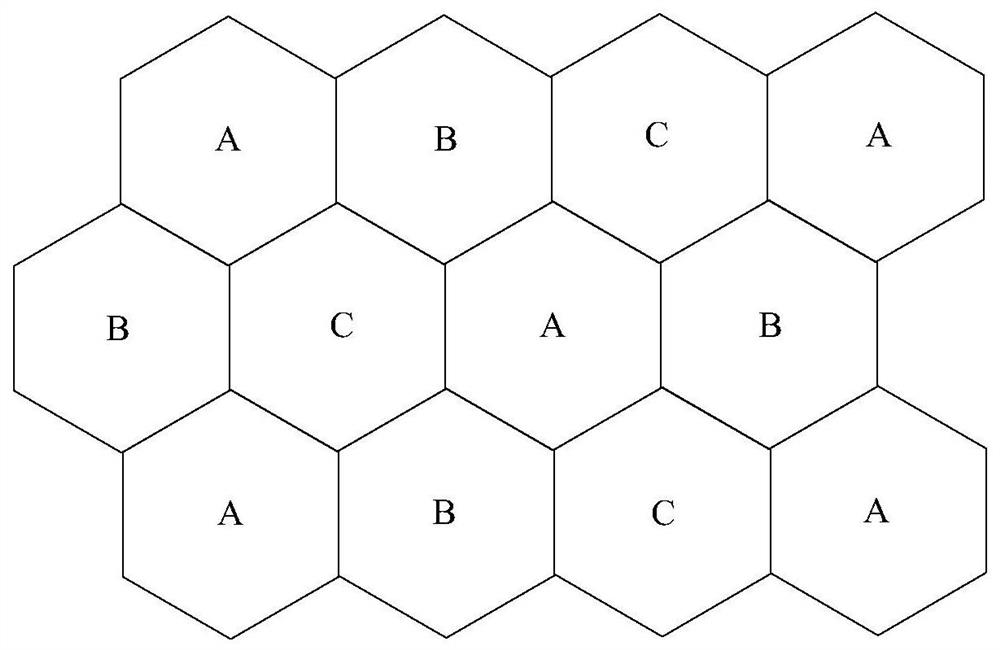

A dynamic channel allocation method for OFDMA cellular networks

Active · CN108964812B · Small amount of calculation · Meet channel requirements · Transmission monitoring · Data switching networks · Nerve network · Engineering

This patent proposes a channel allocation method for OFDMA cellular networks, which belongs to the technical field of mobile communication. The implementation steps are: (1) color the cellular network with three colors, and find the three mutually adjacent cells of different colors whose channel demands have the largest sum; (2) according to the channel demands of the three cells found, generate consecutive OFDMA channels numbered from 1; (3) use the generated OFDMA channels to perform the first OFDMA channel allocation for the cellular network; (4) if the first OFDMA channel allocation cannot satisfy the channel demands of all cells, use a hysteretic noisy chaotic neural network for the second OFDMA channel allocation. Through the two OFDMA channel allocations, this patent effectively reduces the total number of OFDMA channels required and the amount of calculation for dynamic channel allocation, thereby improving the utilization of OFDMA channel resources and ensuring the real-time performance of wireless mobile communications.

Owner:QIQIHAR UNIVERSITY
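
The first two steps can be sketched in Python as below, assuming the 3-coloring is already given. The data layout (an adjacency dictionary of sets plus color and demand dictionaries), the function names, and the reading that the channel pool size equals the largest demand sum are assumptions based on the abstract alone. The first allocation and the neural-network-based second allocation are not covered.

```python
from itertools import combinations


def max_demand_triple(adjacency, color, demand):
    """Find three mutually adjacent, differently colored cells with the largest total demand.

    Returns (total_demand, (cell_a, cell_b, cell_c)), or None if no such triple exists.
    """
    best = None
    for a, b, c in combinations(sorted(adjacency), 3):
        mutually_adjacent = (
            b in adjacency[a] and c in adjacency[a] and c in adjacency[b]
        )
        if mutually_adjacent and len({color[a], color[b], color[c]}) == 3:
            total = demand[a] + demand[b] + demand[c]
            if best is None or total > best[0]:
                best = (total, (a, b, c))
    return best


def channel_pool(total_demand):
    """Generate consecutive channel numbers starting from 1."""
    return list(range(1, total_demand + 1))
```

Sizing the pool from the most demanding triple means that any three mutually interfering cells can in principle be served from it without reusing a channel among them.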


© 2025 PatSnap. All rights reserved.