Pure-vision checkout method for an unmanned store without access control

This store and vision technology is applied to pure-vision checkout in unmanned stores without access control. It addresses problems such as the large amount of manpower required, and has the effects of extending business hours, reducing financial cost, and saving time.


Examples

Specific Embodiment 1

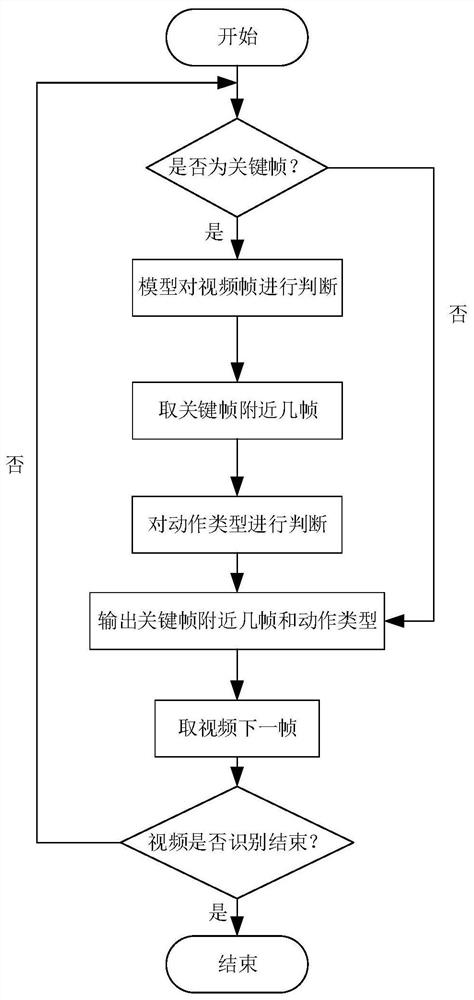

[0038] As shown in Figures 1 to 4, the present invention provides a pure-vision checkout method for an unmanned store without access control, comprising the following steps:

[0039] A pure-vision checkout method for an unmanned store without access control, comprising the following steps:

[0040] Step 1: Train an action discrimination model and, for each scene, determine whether the customer takes an item or puts it back;

[0041] Step 1 specifically comprises:

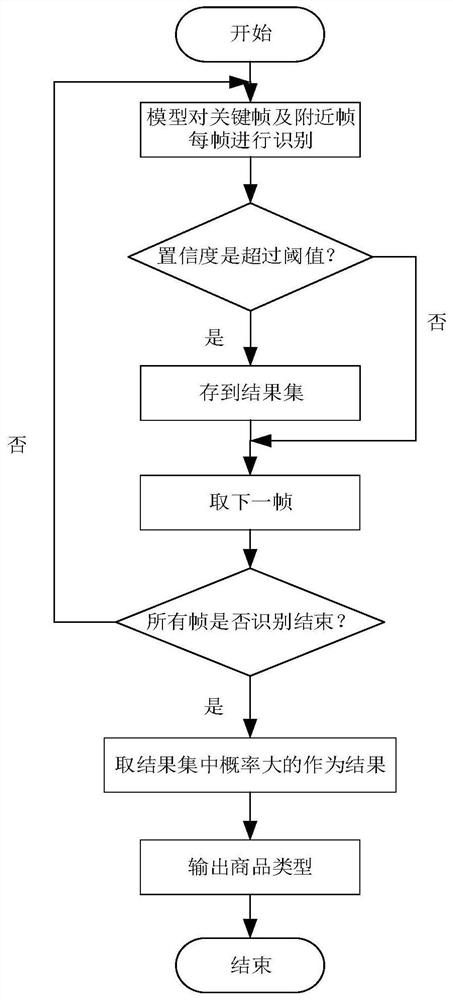

[0042] Step 1.1: Train an action discrimination model. Obtain a continuous stream of RGB video frames and the corresponding optical-flow information from the video recorded by the camera, extract features with a neural network, detect actions from the extracted features, and judge for each frame of the video whether a pick-or-place action occurs. Each frame in which such an action occurs is recorded as a key frame;
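The key-frame selection at the end of Step 1.1 can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes the trained RGB + optical-flow model (not shown) already outputs, for each frame, a score for "pick-or-place action present". The threshold value and the rule of keeping only the highest-scoring frame of each run of consecutive positive frames are assumptions.

```python
from typing import List, Optional, Sequence


def detect_key_frames(action_scores: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Return indices of key frames from per-frame pick/place scores.

    action_scores[i] is assumed to be the score, produced by a trained
    RGB + optical-flow action model (not shown), that frame i contains a
    pick-or-place action. Consecutive frames at or above `threshold` are
    treated as one action, and the highest-scoring frame of each run is
    kept as its key frame (an assumed rule; `threshold` is hypothetical).
    """
    key_frames: List[int] = []
    run_start: Optional[int] = None
    # A trailing -inf sentinel guarantees that a run ending on the last frame is closed.
    for i, score in enumerate(list(action_scores) + [float("-inf")]):
        if score >= threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            run = range(run_start, i)
            key_frames.append(max(run, key=lambda j: action_scores[j]))
            run_start = None
    return key_frames
```

For example, `detect_key_frames([0.1, 0.7, 0.9, 0.6, 0.2, 0.8, 0.1])` returns `[2, 5]`: one key frame for the run of frames 1 to 3 and one for frame 5. Collapsing each run to one frame keeps a single timestamp per physical action, which matters later when timestamps are counted as actions.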

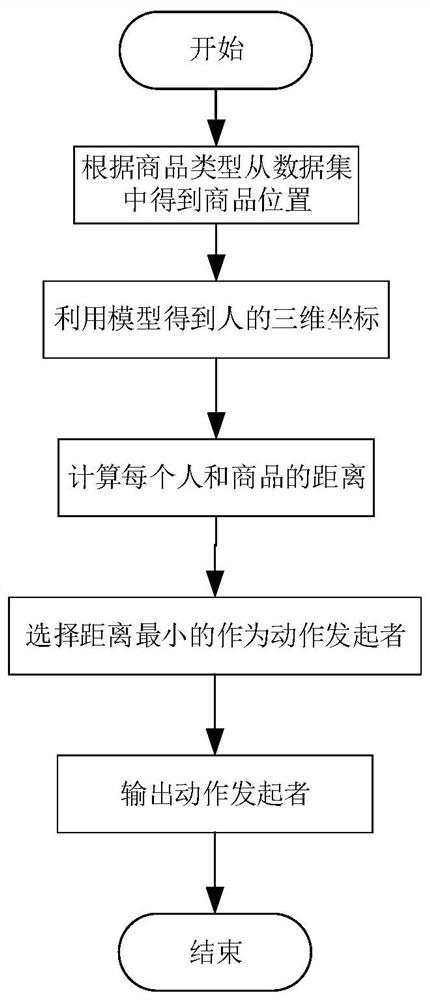

[0043] Step 1.2: For a scene, determine the timestamps of the key frames in which items are taken or put bac...
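Collecting the key-frame timestamps of all camera videos into one list for the scene can be sketched as below. This is an illustrative sketch only. It assumes timestamps are given in seconds per video, and it assumes that one action seen by several cameras yields nearly identical timestamps that should be merged; the `tolerance` parameter and the merge rule are hypothetical, not taken from the source.

```python
from typing import Iterable, List


def collect_scene_timestamps(per_video_timestamps: Iterable[Iterable[float]],
                             tolerance: float = 0.5) -> List[float]:
    """Merge key-frame timestamps (seconds) from several camera videos.

    All timestamps are pooled and sorted. A timestamp within `tolerance`
    seconds of the current group's first timestamp is treated as the same
    action (assumed rule, hypothetical tolerance); otherwise it starts a
    new group. Each group is represented by its earliest timestamp.
    """
    all_ts = sorted(t for video in per_video_timestamps for t in video)
    scene: List[float] = []
    for t in all_ts:
        if not scene or t - scene[-1] > tolerance:
            scene.append(t)
    return scene
```

With three cameras reporting `[[1.0, 5.0], [1.2, 9.0], [5.3]]`, the function returns `[1.0, 5.0, 9.0]`, i.e. three actions for the scene.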

Specific Embodiment 2

[0058] Take and put-back judgment: Train an action discrimination model to judge, for each frame of the video, whether a take or put-back action occurs; the frame where such an action occurs is recorded as a key frame. For a scene, find the timestamps of the key frames in which items are picked up or put back, collect them as the timestamps of the entire scene, and then take the frames near each timestamp from the 12 videos: 3 frames before and 10 frames after. The number of collected timestamps corresponds to the number of take or put-back actions, so that as many actions as possible are found. The purpose of taking 3 frames before and 10 frames after the same timestamp in all 12 videos is to detect the goods in the hand more reliably. It must then be judged whether the item was taken or put back: if there is a product in the hand in the first few frames around the key frame and no product in the hand in the following few frames, it is a put-back operation; in the few frames before th...
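The frame-window extraction and the take/put-back rule of paragraph [0058] can be sketched as follows. Only the 3-before/10-after window and the put-back rule come from the source. The following are assumptions: the window includes the key frame itself; the per-frame "product in hand" flags come from a hypothetical detector; the 12 camera views are fused with a per-frame OR; and the "take" branch is the assumed mirror of the put-back rule, because the source text is truncated at that point.

```python
from typing import List, Sequence

FRAMES_BEFORE = 3   # frames taken before each key-frame timestamp (from the source)
FRAMES_AFTER = 10   # frames taken after each key-frame timestamp (from the source)


def frame_window(key_index: int, num_frames: int) -> List[int]:
    """Frame indices around a key frame, clipped to the video bounds.

    Assumes the key frame itself is included in the window.
    """
    start = max(0, key_index - FRAMES_BEFORE)
    end = min(num_frames, key_index + FRAMES_AFTER + 1)
    return list(range(start, end))


def fuse_cameras(flags_per_camera: Sequence[Sequence[bool]]) -> List[bool]:
    """Per-frame OR across camera views (assumed fusion rule).

    flags_per_camera[c][i] is True if a hypothetical product-in-hand
    detector sees a product in the hand in frame i of camera c's window.
    """
    return [any(frame_flags) for frame_flags in zip(*flags_per_camera)]


def judge_action(hand_has_product: Sequence[bool], key_pos: int) -> str:
    """Classify one action from fused "product in hand" flags.

    key_pos is the position of the key frame inside the window.
    """
    held_before = any(hand_has_product[:key_pos])
    held_after = any(hand_has_product[key_pos + 1:])
    if held_before and not held_after:
        return "put_back"   # rule stated in the source
    if not held_before and held_after:
        return "take"       # assumed mirror rule; the source text is truncated here
    return "unknown"
```

For a key frame at index 5 of a 100-frame video, `frame_window(5, 100)` yields frames 2 through 15. Using `any` over the frames before and after the key frame is a deliberately simple aggregation; a majority vote over those frames would be more robust to single-frame detector errors.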

© 2025 PatSnap. All rights reserved.