Chinese named entity recognition method based on multilevel residual convolution and attention mechanism

A Chinese named entity recognition technique that combines multilevel residual convolution with an attention mechanism. It is classified under neural learning methods, methods based on specific mathematical models, instruments, etc., and it aims to increase entity recognition speed, improve efficiency, and reduce overfitting.

Embodiment Construction

[0023] The technical solutions of the present invention will be further described below according to the embodiments and the accompanying drawings.

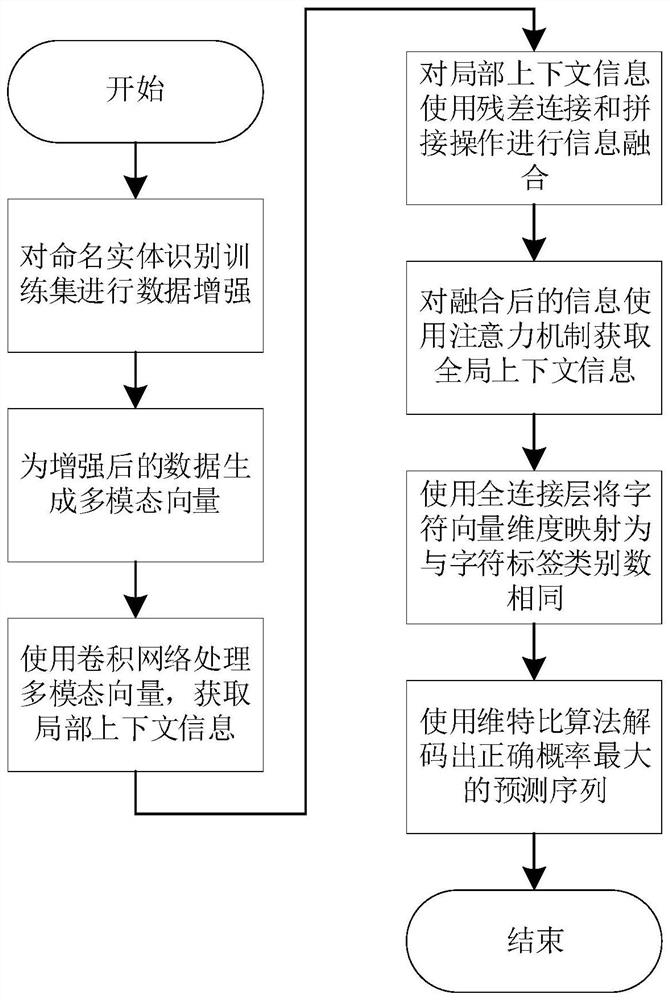

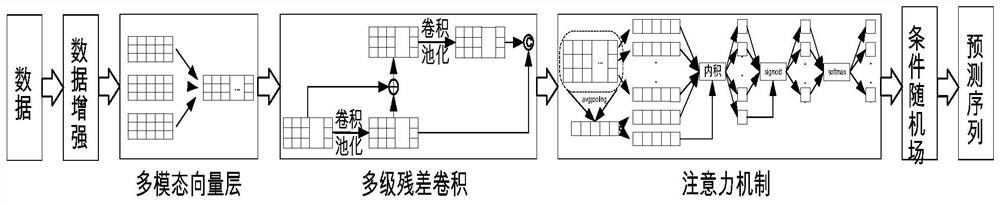

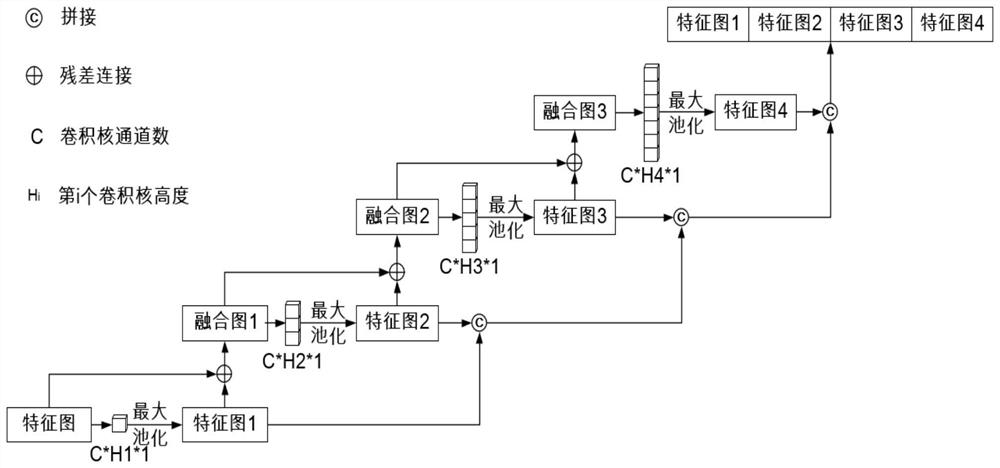

[0024] Figure 2 shows the algorithm model of the present invention. The model has five key parts: data enhancement, a multi-modal vector layer, multi-level residual convolution, an attention mechanism, and a conditional random field. To better illustrate the present invention, the public Chinese named entity recognition dataset Resume is used as an example below.

[0025] The data enhancement algorithm of step 1 in the above technical solution is as follows:

[0026] Entities of the same type are swapped among the samples of the training set to generate a new training set. The original training set and the newly generated one are then merged into a single training set, which expands the amount of data. For example, there are two samples in the training set that contain "National People's Congress re...
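The entity swap described in paragraph [0026] can be sketched roughly as follows. This is a minimal illustration and not the patent's implementation: it assumes character-level samples with BMES-style tags (`B-`, `M-`, `E-`, `S-`, `O`), and the helper names `extract_entities`, `tag_entity`, and `augment`, as well as the two toy sentences, are hypothetical and chosen only for the example.

```python
import random
from collections import defaultdict


def extract_entities(chars, tags):
    """Return (start, end_exclusive, type) spans, assuming BMES-style tags."""
    spans, start = [], None
    for i, tag in enumerate(tags):
        if tag == "O":
            start = None
            continue
        prefix, etype = tag.split("-", 1)
        if prefix == "S":
            spans.append((i, i + 1, etype))
            start = None
        elif prefix == "B":
            start = i
        elif prefix == "E" and start is not None:
            spans.append((start, i + 1, etype))
            start = None
    return spans


def tag_entity(length, etype):
    """Build BMES tags for an entity mention of the given length."""
    if length == 1:
        return [f"S-{etype}"]
    return [f"B-{etype}"] + [f"M-{etype}"] * (length - 2) + [f"E-{etype}"]


def augment(samples, seed=0):
    """Swap same-type entities across samples and append the new samples."""
    rng = random.Random(seed)

    # Collect every entity mention in the training set, grouped by type.
    pool = defaultdict(list)
    for chars, tags in samples:
        for s, e, t in extract_entities(chars, tags):
            pool[t].append(chars[s:e])

    new_samples = []
    for chars, tags in samples:
        out_chars, out_tags, cursor = [], [], 0
        for s, e, t in extract_entities(chars, tags):
            # Copy the text between the previous entity and this one unchanged.
            out_chars += chars[cursor:s]
            out_tags += tags[cursor:s]
            # Prefer a different mention of the same type; keep the original
            # mention if the pool offers no alternative.
            candidates = [m for m in pool[t] if m != chars[s:e]]
            mention = rng.choice(candidates) if candidates else chars[s:e]
            out_chars += mention
            out_tags += tag_entity(len(mention), t)
            cursor = e
        out_chars += chars[cursor:]
        out_tags += tags[cursor:]
        new_samples.append((out_chars, out_tags))

    # Original set plus generated set forms the expanded training set.
    return samples + new_samples


# Hypothetical toy samples (not taken from the Resume dataset).
samples = [
    (list("张三任经理"), ["B-NAME", "E-NAME", "O", "B-TITLE", "E-TITLE"]),
    (list("李四是工程师"),
     ["B-NAME", "E-NAME", "O", "B-TITLE", "M-TITLE", "E-TITLE"]),
]
augmented = augment(samples)
```

With these two toy samples each entity type has exactly one alternative mention, so the swap is deterministic: the names and titles of the two sentences are exchanged, the tags are rebuilt to match the new mention lengths, and the returned set is twice the size of the original.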
