Dialogue generation method and device based on two-stage decoding, medium and computing equipment

A technology based on staged decoding, applied in computing, biological neural network models, special data processing applications, etc. It addresses the problem that model-generated replies are monotonous and lack information, and it improves the relevance and informativeness of replies while remaining easy to control and interpretable.

Examples

Embodiment 1

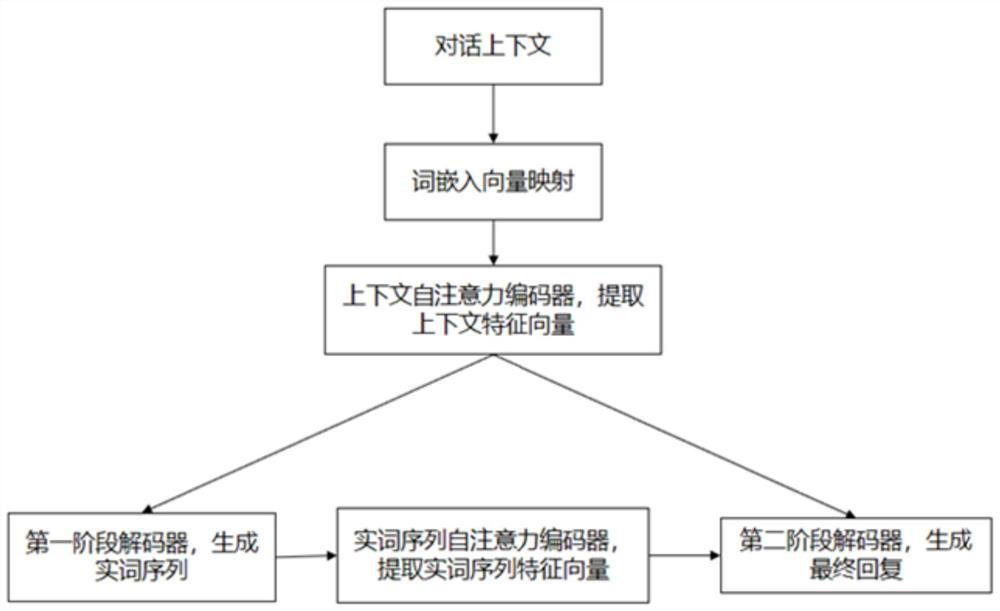

[0074] This embodiment discloses a dialogue generation method based on two-stage decoding. The method generates dialogue through a two-stage decoding dialogue generation model and can be applied to various language response systems in the field of human-computer interaction, such as chatbots and voice-controlled smart home products.

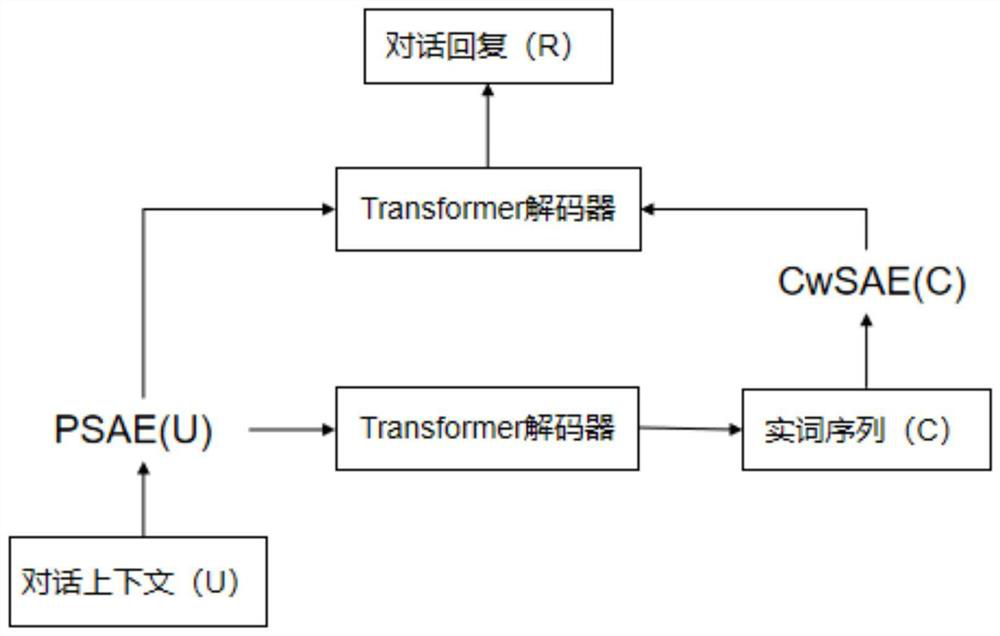

[0075] The structure of the dialogue generation model is shown in Figure 2. It mainly includes two self-attention encoders (Self-Attention Encoder, SAE), namely a context self-attention encoder (Post Self-Attention Encoder, PSAE) and a content-word sequence self-attention encoder (Content-words Self-Attention Encoder, CwSAE), which extract the features of the context sentences U and of the content word sequence C, respectively. It also includes two Transformer decoders: a first-stage Transformer decoder and a second-stage Transformer decoder. The context self-attention encoder is connected to the ...
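The patent text available here names PSAE and CwSAE as self-attention encoders but does not give their internals. The following is a minimal sketch of single-head scaled dot-product self-attention, the standard Transformer building block such encoders are typically built on. Multi-head projections, feed-forward layers, residual connections and layer normalization are omitted. The projection matrices `Wq`, `Wk`, `Wv` and the `mean_pool` step that turns token outputs into one sentence feature vector are illustrative assumptions, not details taken from the patent.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a sequence X.

    X is a list of d-dimensional token vectors; Wq, Wk, Wv are d_k x d
    projection matrices (hypothetical parameters). Returns one output
    vector per token, each a softmax-weighted mix of the value vectors.
    """
    Q = [matvec(Wq, x) for x in X]
    K = [matvec(Wk, x) for x in X]
    V = [matvec(Wv, x) for x in X]
    d_k = len(Q[0])
    out = []
    for q in Q:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


def mean_pool(H):
    # Hypothetical pooling: average the token outputs into one sentence vector.
    n = len(H)
    return [sum(h[j] for h in H) / n for j in range(len(H[0]))]
```

With identity projections and one-hot inputs, each token attends most strongly to itself, and every output row is a convex combination of the value vectors.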

Embodiment 2

[0125] This embodiment discloses a dialogue generation device based on two-stage decoding, which can implement the dialogue generation method of Embodiment 1. The device includes a mapping module, a context self-attention encoder, a first-stage Transformer decoder, a content word sequence self-attention encoder and a second-stage Transformer decoder, connected in sequence; the context self-attention encoder is also connected to the second-stage Transformer decoder.

[0126] The mapping module takes the text of the dialogue context as input and maps each word in the text to a word embedding vector;

[0127] The context self-attention encoder takes the word embedding vectors as input, one sentence at a time, and extracts the feature vector of the context;

[0128] The first-stage Transformer decoder takes the context feature vector as input and is used to decode ...
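The mapping module described above is essentially an embedding lookup. Below is a minimal sketch, assuming a fixed vocabulary, an `<unk>` entry at index 0 for out-of-vocabulary words, whitespace tokenization, and a randomly initialized table standing in for learned weights. The class name `MappingModule` and all of its parameters are hypothetical. The output keeps the per-sentence grouping that the context self-attention encoder expects.

```python
import random


class MappingModule:
    """Hypothetical mapping module: maps each word to an embedding vector."""

    UNK = "<unk>"

    def __init__(self, vocabulary, dim, seed=0):
        rng = random.Random(seed)
        # Index 0 is reserved for unknown words; real systems learn this table.
        self.index = {self.UNK: 0}
        for word in vocabulary:
            if word not in self.index:
                self.index[word] = len(self.index)
        self.table = [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
                      for _ in range(len(self.index))]

    def embed_sentence(self, sentence):
        # Whitespace tokenization is an assumption; Chinese text would need a segmenter.
        ids = [self.index.get(w, 0) for w in sentence.split()]
        return [self.table[i] for i in ids]

    def embed_context(self, sentences):
        # Keep sentence-level grouping, matching the encoder's per-sentence input.
        return [self.embed_sentence(s) for s in sentences]


# Usage: two context sentences; "today" is out of vocabulary and maps to <unk>.
mapper = MappingModule(["hello", "how", "are", "you"], dim=4)
context_embeddings = mapper.embed_context(["hello", "how are you today"])
```

Seeding the generator makes the toy table reproducible. In a trained model, the table would instead be a learned parameter shared with the rest of the network.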

Embodiment 3

[0133] This embodiment discloses a computer-readable storage medium storing a program. When executed by a processor, the program implements the dialogue generation method based on two-stage decoding described in Embodiment 1, specifically:

[0134] (1) Input the text of the dialogue context into the model, and map each word in the text to a word embedding vector;

[0135] (2) Taking each sentence as a unit, input the word embedding vectors into the context self-attention encoder, which extracts the context feature vector;

[0136] (3) Input the obtained context feature vector into the first-stage Transformer decoder, which decodes it to generate a content word sequence expressing the main semantic information of the final reply;

[0137] (4) Input the obtained content word sequence into the content word sequence self-attention encoder to obtain the feature vector of the content word sequence;

[0138] (5) Input the enc...
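The steps above can be sketched as a data-flow function in which each component is passed in as a callable. Step (5) is truncated in the source. This sketch assumes, based on the device description in [0125] (the context self-attention encoder is also connected to the second-stage decoder), that the second-stage decoder receives both the context features and the content-word features. The function name `two_stage_generate` and its parameter names are hypothetical, and the components themselves are left abstract.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def two_stage_generate(
    context: Sequence[str],
    embed: Callable[[str], List[Vector]],
    context_encoder: Callable[[List[List[Vector]]], List[Vector]],
    stage1_decoder: Callable[[List[Vector]], List[str]],
    content_encoder: Callable[[List[str]], List[Vector]],
    stage2_decoder: Callable[[List[Vector], List[Vector]], List[str]],
) -> Tuple[List[str], List[str]]:
    """Hypothetical wiring of the two-stage decoding pipeline."""
    # (1) Map each word of every context sentence to an embedding vector.
    embedded = [embed(sentence) for sentence in context]
    # (2) Extract context features, one sentence at a time.
    context_features = context_encoder(embedded)
    # (3) First stage: decode the content word sequence (main semantics of the reply).
    content_words = stage1_decoder(context_features)
    # (4) Encode the content word sequence.
    content_features = content_encoder(content_words)
    # (5) Assumed: the second stage conditions on both context and content features.
    reply = stage2_decoder(context_features, content_features)
    return content_words, reply
```

Returning the intermediate content words alongside the reply reflects the interpretability claim in the summary: the first-stage output shows which key words the model committed to before it generated the full reply. Swapping in toy lambdas for each callable is enough to exercise the wiring.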
