Image out-of-focus deblurring method based on deep perception network

A depth perception and deblurring technology applied in image enhancement, image analysis, image data processing, and related fields, with the stated effect of low time complexity.

Embodiment Construction

[0069] The present invention will be described in further detail below in conjunction with examples and accompanying drawings, but the embodiments of the present invention are not limited thereto.

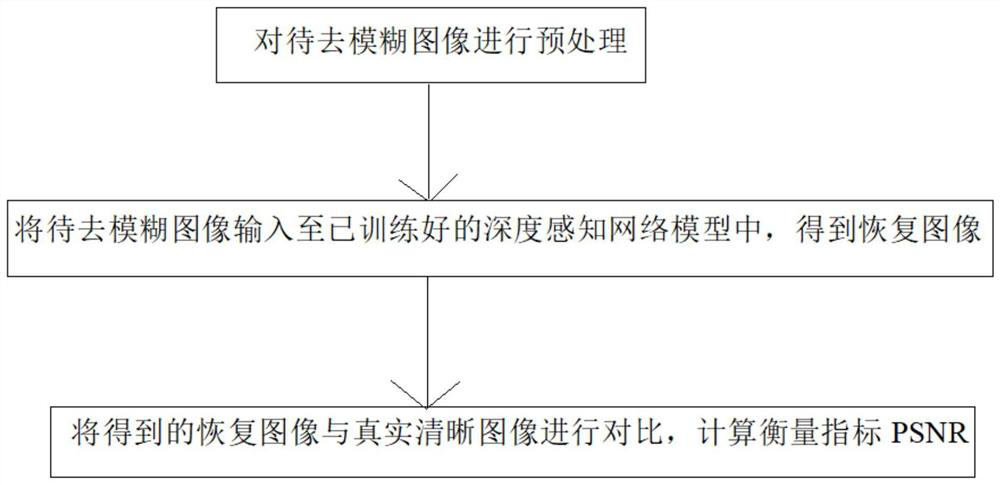

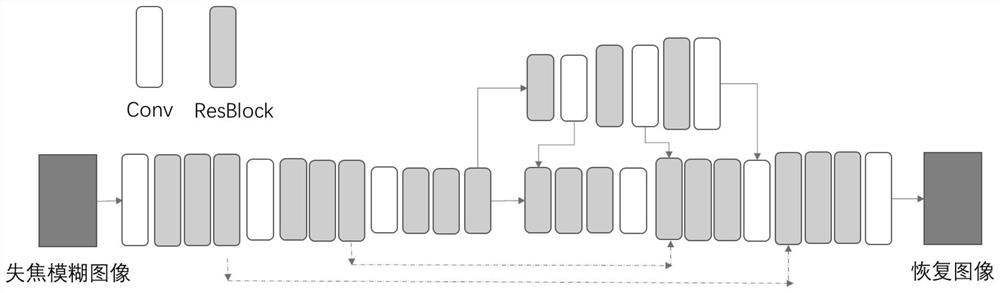

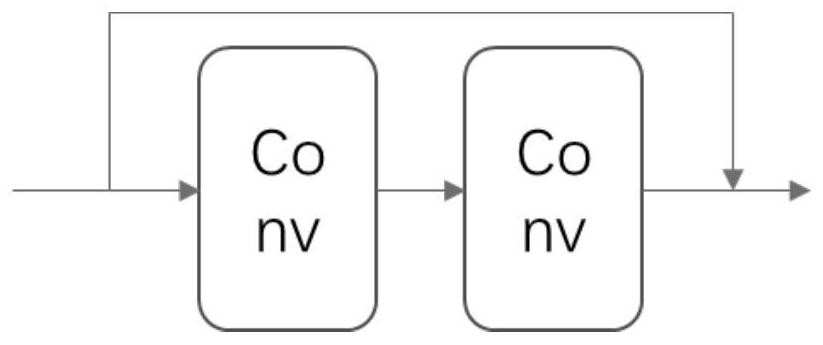

[0070] Referring to Figures 1-3, an image out-of-focus deblurring method based on a depth perception network comprises the following steps:

[0071] S1, preprocessing the image to be deblurred, where the preprocessing includes size cropping, random flipping, and normalization;

[0072] S2, inputting the image to be deblurred into the trained depth perception network model to obtain the restored image;

[0073] S3, comparing the restored image with the real clear image and calculating the evaluation metric PSNR (peak signal-to-noise ratio).
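Steps S1 to S3 can be sketched in Python with NumPy as below. This is a minimal illustration under stated assumptions, not the patented implementation: the crop size (256), the horizontal-only flip, and normalization of 8-bit values to [0, 1] are assumed because the text does not specify them, and `identity_model` is a hypothetical placeholder for the trained depth perception network. Because S3 compares the output against the real clear image, the sketch applies the same crop window and flip to that reference so the comparison stays pixel-aligned. PSNR is computed in dB as 10·log10(MAX²/MSE).

```python
import numpy as np


def preprocess_pair(blurred, sharp, crop_size=256, rng=None):
    """S1 sketch: crop, randomly flip and normalize a blurred image.

    The same crop window and flip are applied to the clear reference image so
    that the S3 comparison stays pixel-aligned. crop_size and the [0, 1]
    normalization are assumptions, not values taken from the patent.
    """
    if blurred.shape != sharp.shape:
        raise ValueError("blurred and sharp images must have the same shape")
    rng = np.random.default_rng() if rng is None else rng
    h, w = blurred.shape[:2]
    if h < crop_size or w < crop_size:
        raise ValueError("image is smaller than the crop size")
    top = int(rng.integers(0, h - crop_size + 1))
    left = int(rng.integers(0, w - crop_size + 1))
    window = (slice(top, top + crop_size), slice(left, left + crop_size))
    b, s = blurred[window], sharp[window]
    if rng.random() < 0.5:  # random horizontal flip, shared by both images
        b, s = b[:, ::-1], s[:, ::-1]
    # Normalize 8-bit pixel values to float32 in [0, 1].
    return b.astype(np.float32) / 255.0, s.astype(np.float32) / 255.0


def psnr(restored, reference, max_val=1.0):
    """S3: peak signal-to-noise ratio in dB, PSNR = 10 * log10(MAX^2 / MSE)."""
    diff = restored.astype(np.float64) - reference.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")
    return 10.0 * np.log10(max_val ** 2 / mse)


def identity_model(x):
    """Hypothetical stand-in for the trained depth perception network (S2)."""
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.integers(0, 256, size=(300, 400, 3), dtype=np.uint8)
    # Synthetic perturbed copy (additive noise, not real defocus blur).
    noise = rng.integers(-10, 11, size=sharp.shape)
    blurred = np.clip(sharp.astype(np.int64) + noise, 0, 255).astype(np.uint8)
    x, y = preprocess_pair(blurred, sharp, crop_size=256, rng=rng)  # S1
    restored = identity_model(x)                                    # S2
    print(f"PSNR: {psnr(restored, y):.2f} dB")                      # S3
```

In practice the identity stub would be replaced by the trained network's forward pass; the PSNR printed here only measures the synthetic noise.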

[0074] The training of the depth perception network model includes the following steps:

[0075] (1) Obtain a database of out-of-focus blurred images; specifically, select and download a high-resolution out-of-focus blurred image dataset collected in real scenes. T...
