A text summarization method based on the BERT pre-trained model

A technique based on a pre-trained model, applied in fields such as neural learning methods, biological neural network models, and instruments. It addresses obstacles in the process of knowledge acquisition and improves the quality, accuracy, and fluency of the generated text.

Embodiment Construction

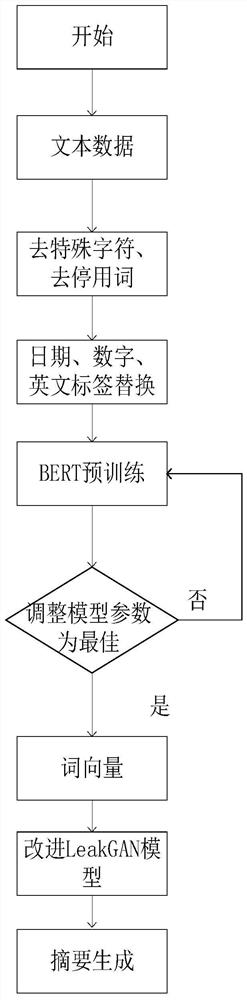

[0061] The technical solutions in the embodiments of the present invention will be described clearly and in detail below with reference to the drawings in the embodiments of the present invention. The described embodiments are only some of the embodiments of the invention.

[0062] The technical solution by which the present invention solves the above technical problems is as follows:

[0063] In this embodiment, a method for generating abstracts based on the BERT pre-trained model is performed in the following steps.

[0064] Step 1: Preprocess the text data set (remove special characters, convert emoticon labels into words, and replace dates, hyperlink URLs, numbers, and English text with tags);

[0065] (1) Special characters: remove special characters, mainly punctuation marks and commonly used stop words such as particles and transition words, including: 「, ", ¥, ..., "ah", "hey", and "and";

[0066] (2) Convert the label content in brackets into words, such as [happy],...
