Emotional music generation method based on deep neural network and music element driving

A technique combining deep neural networks and music elements, classified under neural learning methods, biological neural network models, and neural architectures, that aims to enhance the artistic appeal and emotional expressiveness of generated music.

Embodiment 1

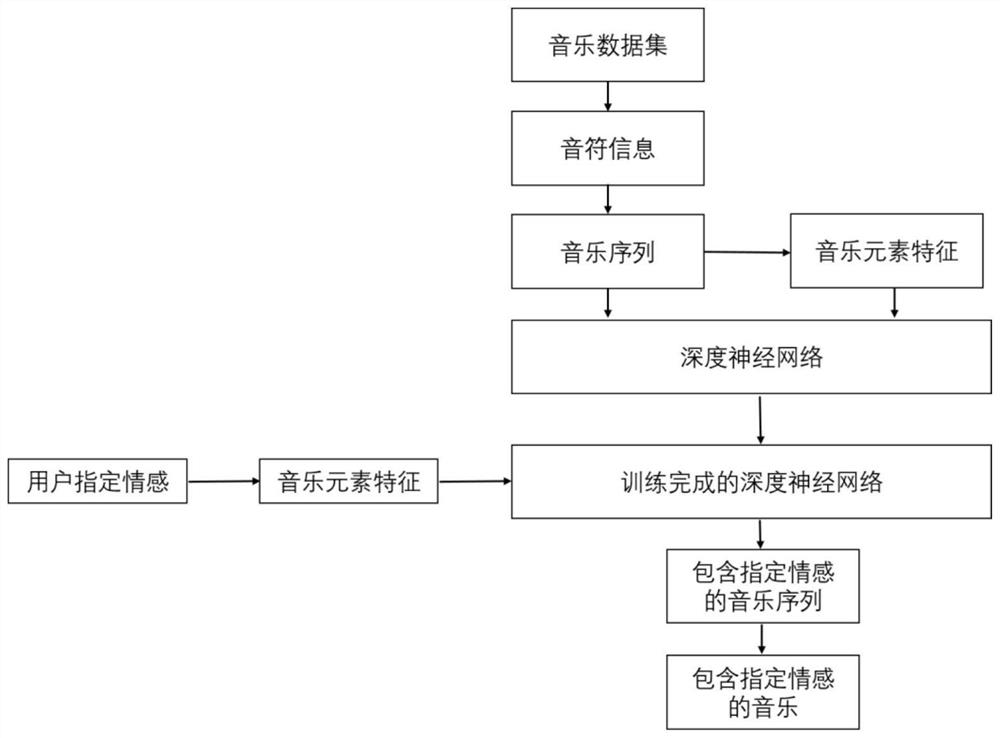

[0057] As shown in Figure 1, Embodiment 1 of the present invention provides a method for generating emotional music based on a deep neural network and music elements. The music dataset is read, preprocessed, and encoded. Music element features are extracted, and the music sequences together with the music element features are used as input to train the deep neural network. Once training is complete, the network generates a music sequence carrying the emotion specified by the user, and decoding that sequence outputs music with the specified emotion.
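The encode and decode steps described above can be sketched as follows. This is a minimal illustration, not the patent's actual encoding: the `Note` tuple, the flat (pitch, start, duration) token layout, and the two example features (mean pitch and note density) are all assumptions chosen for brevity.

```python
from typing import List, NamedTuple


class Note(NamedTuple):
    pitch: int     # MIDI pitch number (0-127)
    start: int     # onset in quantized time steps (hypothetical grid)
    duration: int  # length in quantized time steps


def encode(notes: List[Note]) -> List[int]:
    """Flatten notes into an integer sequence of (pitch, start, duration) triples."""
    ordered = sorted(notes, key=lambda n: (n.start, n.pitch))
    return [value for note in ordered for value in note]


def decode(tokens: List[int]) -> List[Note]:
    """Invert encode(): regroup the flat sequence into notes."""
    if len(tokens) % 3 != 0:
        raise ValueError("token sequence length must be a multiple of 3")
    return [Note(*tokens[i:i + 3]) for i in range(0, len(tokens), 3)]


def element_features(notes: List[Note]) -> dict:
    """Two illustrative music-element features (assumed, not from the source)."""
    if not notes:
        return {"mean_pitch": 0.0, "note_density": 0.0}
    span = max(n.start + n.duration for n in notes) - min(n.start for n in notes)
    return {
        "mean_pitch": sum(n.pitch for n in notes) / len(notes),
        "note_density": len(notes) / span if span else 0.0,
    }


# A three-note example: encode it, then compute the illustrative features.
melody = [Note(60, 0, 2), Note(64, 2, 2), Note(67, 4, 4)]
tokens = encode(melody)
features = element_features(melody)
```

In this sketch, `decode(tokens)` returns the original notes, so a sequence produced by a trained network in the same token layout could be turned back into notes the same way.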

[0058] Step 1: Prepare a music dataset in MIDI format as the training data. This embodiment uses 329 piano pieces from 23 classical pianists. The pieces cover a variety of styles, rhythms, and modes, which makes them suitable for training a generative model for emotional music.

[0059] Step 2: Use Python's pretty-midi ...

Embodiment 2

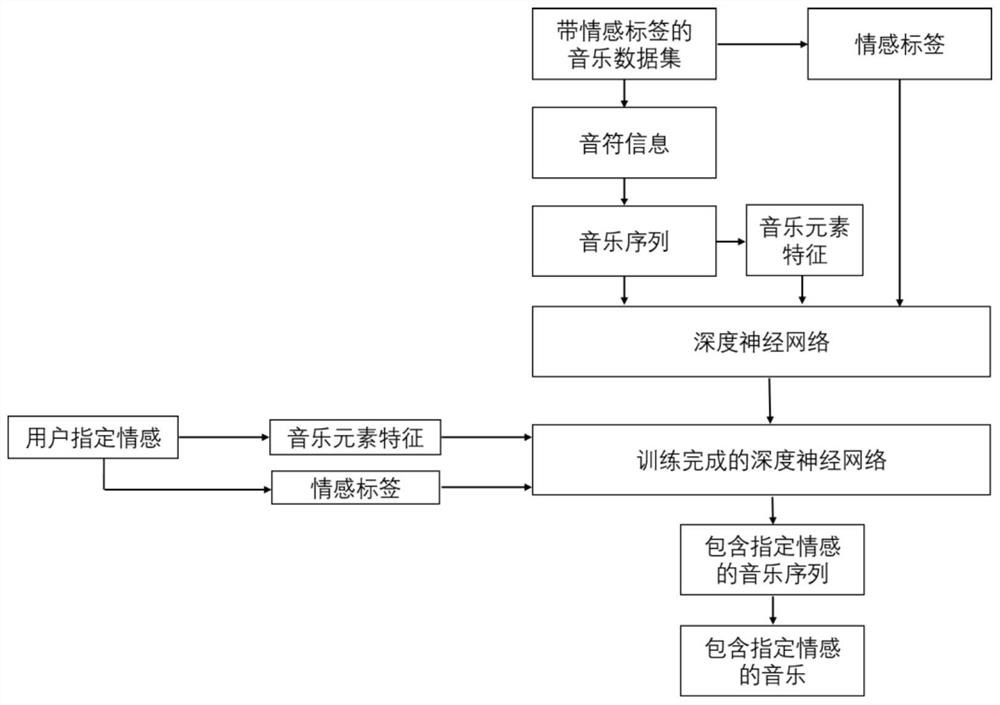

[0088] As shown in Figure 3, Embodiment 2 of the present invention proposes another method for generating emotional music. A music dataset with emotion labels is preprocessed and encoded, and the music element features and corresponding emotion labels are extracted. The deep neural network is trained with the music sequences, music element features, and emotion labels as input. Once training is complete, the network generates a music sequence carrying the emotion specified by the user, and decoding that sequence outputs music with the specified emotion.
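One common way to feed an emotion label into a sequence model is to append a one-hot emotion vector to the input at every time step. The sketch below illustrates only that idea. The source does not state how the labels enter the network, and the names `EMOTIONS`, `one_hot`, and `condition_sequence` are hypothetical.

```python
from typing import List

# The four emotion categories named in this embodiment's dataset description.
EMOTIONS = ["happy", "calm", "sad", "tense"]


def one_hot(emotion: str) -> List[float]:
    """Return a one-hot vector for the given emotion label."""
    if emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion!r}")
    return [1.0 if name == emotion else 0.0 for name in EMOTIONS]


def condition_sequence(steps: List[List[float]], emotion: str) -> List[List[float]]:
    """Append the emotion vector to every per-step feature vector."""
    label = one_hot(emotion)
    return [step + label for step in steps]


# Two time steps, each with two made-up feature values (assumed for illustration).
steps = [[0.5, 0.1], [0.7, 0.3]]
conditioned = condition_sequence(steps, "sad")
```

At generation time the same vector would be built from the emotion the user specifies, so the training and generation inputs share one layout.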

[0089] Step 1: Prepare a manually annotated emotional music dataset in MIDI format as training data. This embodiment uses piano pieces covering 4 different emotions: 56 happy, 58 calm, 40 sad, and 47 tense. These piano compos...
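The four counts stated in this step add up to 201 labeled pieces, with the sad class being the smallest. A quick check of the class proportions, using only those stated numbers (the variable names are illustrative):

```python
# Piece counts per emotion, as stated in Step 1 of Embodiment 2.
counts = {"happy": 56, "calm": 58, "sad": 40, "tense": 47}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}

# Print each class with its share of the dataset.
for name, share in shares.items():
    print(f"{name}: {counts[name]} pieces ({share:.1%})")

smallest = min(counts, key=counts.get)
```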
