Action recognition method and device based on a first-person view angle

A first-person action recognition technology, applied in the field of action recognition, that addresses problems such as heavy computation, interference, and loss of original information, and achieves strong robustness while eliminating dependence.


Examples

Embodiment 1

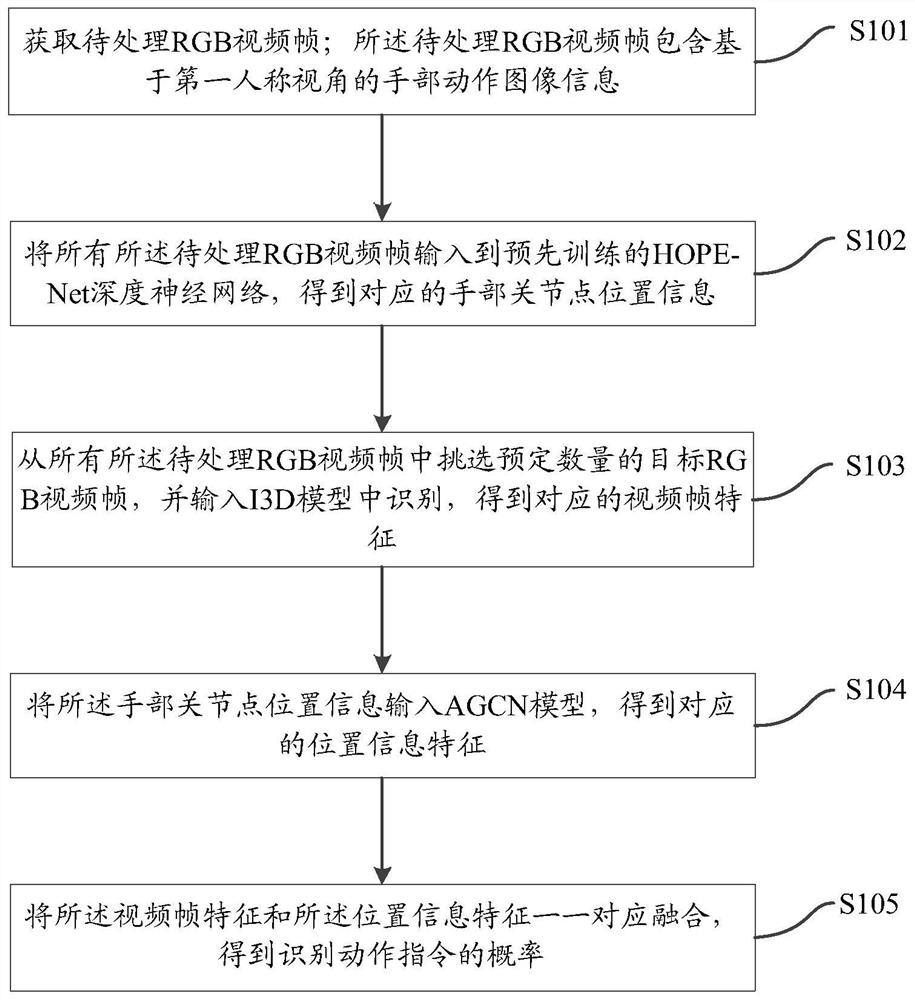

[0057] Example 1: Referring to Figure 1, which is a flow chart of the steps of Embodiment 1 of the action recognition method based on a first-person perspective according to the present invention, the method includes:

[0058] S101: Obtain the RGB video frames to be processed; these frames contain hand-movement image information captured from a first-person perspective;

[0059] S102: Input all the RGB video frames to be processed into the pre-trained HOPE-Net deep neural network to obtain the corresponding hand joint position information;

[0060] S103: Select a predetermined number of target RGB video frames from all the RGB video frames to be processed and input them into the I3D model for recognition to obtain the corresponding video frame features;

[0061] S104: Input the hand joint position information into the AGCN model to obtain the corresponding position information features;

[0062] S105: Merge the video frame features and the positio...
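The flow of steps S101 to S105 above can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the patented implementation: `pose_model`, `video_model` and `graph_model` are hypothetical placeholders standing in for HOPE-Net, I3D and AGCN; the "predetermined number" of frames in S103 is assumed to be chosen by uniform segment sampling (the source does not say how frames are selected); and because the description of S105 is truncated, the merge is assumed to be plain concatenation followed by a hypothetical linear classifier head (`weights`, `bias`) with softmax.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def sample_frame_indices(num_frames: int, num_samples: int) -> List[int]:
    """S103 (assumed strategy): centre index of each of num_samples equal segments."""
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    segment = num_frames / num_samples
    # Short clips repeat indices so the video model still receives a fixed-length input.
    return [min(int(segment * i + segment / 2), num_frames - 1) for i in range(num_samples)]


def softmax(logits: Sequence[float]) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def recognize(frames: Sequence, num_samples: int,
              pose_model: Callable, video_model: Callable, graph_model: Callable,
              weights: Sequence[Sequence[float]], bias: Sequence[float]) -> int:
    """Return the index of the most probable action class for one clip.

    pose_model, video_model and graph_model are hypothetical stand-ins for
    HOPE-Net, I3D and AGCN; weights/bias form a hypothetical linear head.
    """
    joints = pose_model(frames)                                    # S102: joint positions
    picked = [frames[i] for i in sample_frame_indices(len(frames), num_samples)]
    video_feat = list(video_model(picked))                         # S103: video features
    pose_feat = list(graph_model(joints))                          # S104: joint features
    fused = video_feat + pose_feat                                 # S105: assumed concatenation
    logits = []
    for row, b in zip(weights, bias):
        if len(row) != len(fused):
            raise ValueError("classifier row length must match fused feature length")
        logits.append(sum(w * x for w, x in zip(row, fused)) + b)
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)
```

Concatenation keeps both feature streams intact and leaves it to the classifier to weigh them; other fusion schemes (score averaging, weighted sums) would slot into the same place in `recognize`.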
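Step S104 above feeds hand joint positions into a graph convolutional network. As a rough illustration of what such a network operates on, the sketch below builds a fixed hand-skeleton graph and applies one graph-convolution aggregation step with the standard symmetric normalization $D^{-1/2}(A + I)D^{-1/2}$. Assumptions: a 21-joint layout (wrist at index 0, then four joints per finger in order) that may not match the joint ordering HOPE-Net or any particular dataset actually uses; AGCN-style models additionally learn adaptive graph terms on top of a fixed skeleton and multiply by learned weights, and those parts are omitted here.

```python
import math
from typing import List, Tuple

NUM_JOINTS = 21  # assumed: wrist (0) + 4 joints for each of 5 fingers


def hand_edges() -> List[Tuple[int, int]]:
    """Bone list for the assumed layout: finger f uses joints 4f+1 .. 4f+4."""
    edges = []
    for finger in range(5):
        base = 4 * finger + 1
        edges.append((0, base))                                 # wrist -> finger root
        edges += [(base + k, base + k + 1) for k in range(3)]   # along the finger
    return edges


def normalized_adjacency(num_nodes: int, edges: List[Tuple[int, int]]) -> List[List[float]]:
    """Return D^-1/2 (A + I) D^-1/2 for an undirected graph with self-loops."""
    a = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_nodes)]
            for i in range(num_nodes)]


def propagate(adj: List[List[float]], features: List[List[float]]) -> List[List[float]]:
    """One aggregation step: each joint mixes in its neighbours' features.

    features is [V][C] (joints x coordinates); a full layer would also apply
    a learned weight matrix and a nonlinearity.
    """
    channels = len(features[0])
    return [[sum(adj[i][j] * features[j][c] for j in range(len(adj)))
             for c in range(channels)]
            for i in range(len(adj))]
```

For example, `propagate(normalized_adjacency(NUM_JOINTS, hand_edges()), joints_xyz)` would smooth one frame's joint coordinates over the skeleton, where `joints_xyz` is a hypothetical 21 x 3 list of positions.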

Embodiment 2

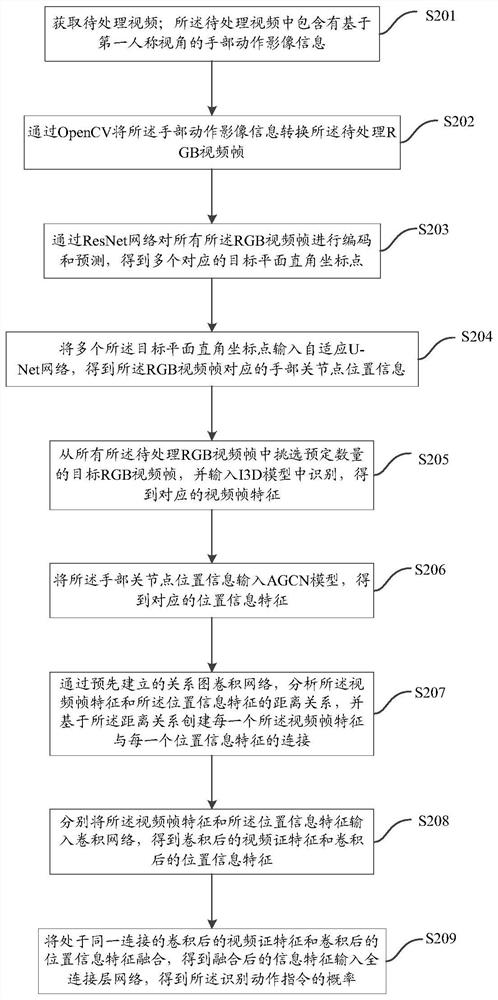

[0064] Example 2: Referring to Figure 2, which is a flow chart of the steps of Embodiment 2 of the action recognition method based on a first-person perspective according to the present invention, the method specifically includes:

[0065] Step S201: Acquire the video to be processed; the video contains hand-movement image information captured from a first-person perspective;

[0066] Step S202: Convert the hand-movement image information into the RGB video frames to be processed using OpenCV;

[0067] In this embodiment of the present invention, the video to be processed is first converted into a plurality of RGB video frames to be processed using OpenCV.
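The video-to-RGB-frame conversion described in Step S202 could look roughly like the sketch below. One detail worth making explicit: OpenCV's `VideoCapture.read()` returns frames in BGR channel order, so an explicit `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` is needed to obtain RGB frames. The function names and the optional `convert`/`max_frames` parameters are illustrative assumptions, not part of the source; `convert` exists only so the read loop can be exercised without OpenCV installed.

```python
from typing import Any, Callable, List, Optional


def read_rgb_frames(capture: Any,
                    convert: Optional[Callable[[Any], Any]] = None,
                    max_frames: Optional[int] = None) -> List[Any]:
    """Drain a cv2.VideoCapture-like object and return its frames in RGB order.

    `capture` only needs a .read() method returning (ok, frame), which is the
    contract of cv2.VideoCapture.read(). By default each BGR frame is converted
    with cv2.cvtColor(..., cv2.COLOR_BGR2RGB).
    """
    if convert is None:
        import cv2  # lazy import: OpenCV is only needed when no converter is given

        def convert(frame: Any) -> Any:
            return cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    frames = []
    while max_frames is None or len(frames) < max_frames:
        ok, frame = capture.read()
        if not ok:  # end of stream or a decode failure
            break
        frames.append(convert(frame))
    return frames


def video_file_to_rgb_frames(path: str) -> List[Any]:
    """Open a video file with OpenCV and return all of its frames as RGB arrays."""
    import cv2
    capture = cv2.VideoCapture(path)
    if not capture.isOpened():
        raise IOError(f"could not open video: {path}")
    try:
        return read_rgb_frames(capture)
    finally:
        capture.release()  # always free the decoder, even if conversion fails
```

Loading every frame into memory is the simplest choice for short clips; for long recordings, `max_frames` or a generator-based variant would keep memory bounded.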

[0068] It should be noted that OpenCV is a cross-platform computer vision and machine learning software library that runs on Linux, Windows, Android and Mac OS. OpenCV is lightweight and efficient: it consists of a series of C functions and a small number of C++ classes, and provid...
