Image matching method based on deep semantic alignment network model

A network model and matching method, applicable to biological neural network models, neural learning methods, character and pattern recognition, and related fields. It addresses two problems: labeling image data with dense correspondences is time-consuming and labor-intensive, and matching accuracy is low. The stated effects are improved image alignment, higher accuracy, and improved robustness.

Embodiment Construction

[0078] The present invention is further described below through embodiments, with reference to the accompanying drawings, without limiting the scope of the invention in any way.

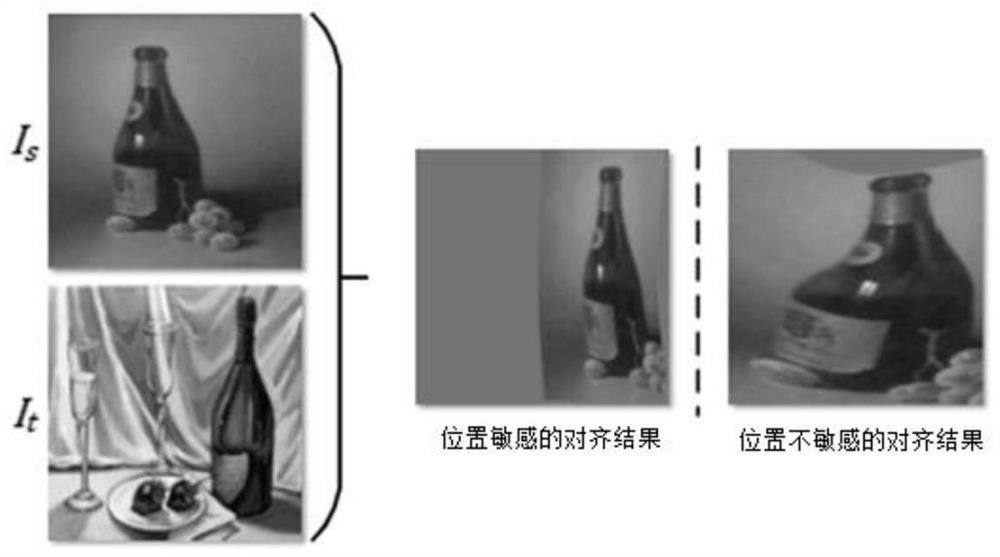

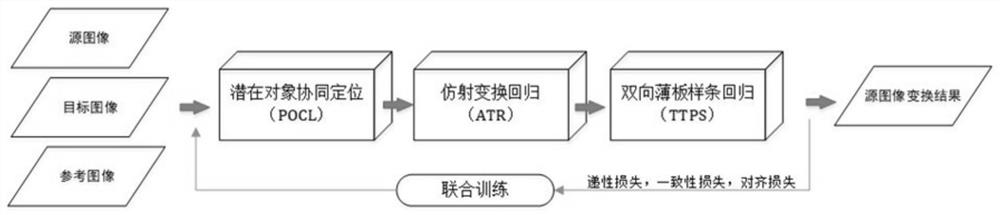

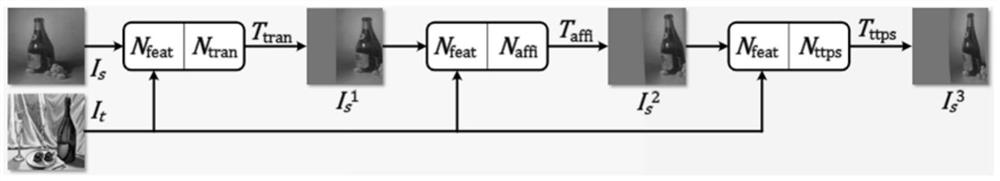

[0079] The image matching method proposed by the present invention is built on OLASA, a deep neural network model based on semantic alignment. The model takes three inputs: the source image, the target image, and the reference image. Through deep semantic analysis, it estimates the deformation parameters of the source image according to their internal alignment relationship. The deformed source image is the output of the method, and the target objects it contains can be matched to the corresponding objects in the target image. Internally, OLASA is implemented through the joint learning of three sub-networks: potential object co-localization (POCL), affine transformation regression (ATR), and two-way thin plate spline regression (TTPS)...
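As a rough illustration of what "deforming the source image" with estimated parameters can look like, the sketch below applies a 2x3 affine transformation to a small image by inverse mapping with nearest-neighbour sampling. It is not the patent's implementation. The function name `affine_warp`, the parameter layout `theta`, and the use of raw pixel coordinates are assumptions made for this example. The regression that would produce the parameters (the role the text assigns to ATR) is not shown, and the thin plate spline stage is omitted.

```python
from typing import List, Sequence

Image = List[List[float]]  # row-major grid of pixel values


def affine_warp(source: Image, theta: Sequence[float], fill: float = 0.0) -> Image:
    """Warp `source` with six affine parameters (hypothetical layout).

    theta = (a11, a12, tx, a21, a22, ty) maps each OUTPUT pixel (x, y)
    to the source location (a11*x + a12*y + tx, a21*x + a22*y + ty).
    Output pixels whose source location falls outside the image get `fill`.
    """
    height, width = len(source), len(source[0])
    a11, a12, tx, a21, a22, ty = theta
    warped = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Inverse mapping: find where this output pixel comes from.
            sx = round(a11 * x + a12 * y + tx)
            sy = round(a21 * x + a22 * y + ty)
            if 0 <= sx < width and 0 <= sy < height:
                warped[y][x] = source[sy][sx]
    return warped


source = [[1, 2, 3],
          [4, 5, 6]]

identity = (1, 0, 0, 0, 1, 0)
shift_left = (1, 0, 1, 0, 1, 0)  # each output pixel samples one column to its right

same = affine_warp(source, identity)
shifted = affine_warp(source, shift_left)
```

Inverse mapping is used here so that every output pixel receives exactly one value. Learned alignment models typically replace the nearest-neighbour lookup with differentiable bilinear sampling so that the warp can be trained end to end.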
