Method and hardware structure capable of realizing convolution calculation in multiple neural networks

A technology combining neural networks and hardware structures, applied in fields such as biological neural network models, neural architectures, and physical realization. It addresses problems such as the low computing efficiency of traditional CPUs, long hardware iteration cycles, and high deployment costs.



Examples

Embodiment 1

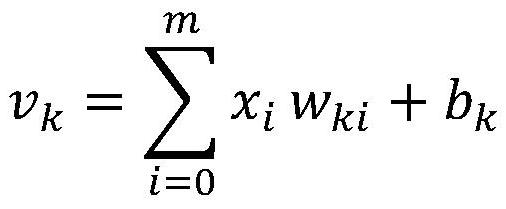

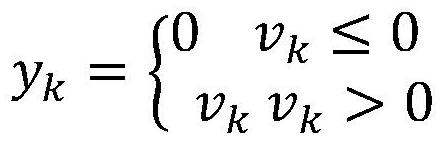

[0044] Embodiment 1 is a method capable of realizing convolution calculation in various neural networks. The convolution operation process in various neural networks is analyzed, the common features of the ways the convolution calculation varies within that process are extracted, and these common features are parameterized. The hardware structure then parses and applies the processed parameters as its configuration, so that different convolution algorithms can be handled by the same hardware.
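As a minimal sketch of what parameterizing the common features could look like in software, assume the common features are the input and output channel counts, kernel height and width, stride, and zero padding (the visible patent text does not list them). The Python below drives one generic convolution loop nest from a parameter record; `ConvParams` and `conv2d` are hypothetical names for illustration, not the patent's interface.

```python
from dataclasses import dataclass
from typing import List, Tuple

Tensor3 = List[List[List[float]]]        # [channel][row][col]
Tensor4 = List[List[List[List[float]]]]  # [out_ch][in_ch][kh][kw]


@dataclass(frozen=True)
class ConvParams:
    """Hypothetical parameter record: the per-layer values a configurable
    convolution engine would be loaded with (assumed feature set)."""
    in_channels: int
    out_channels: int
    kernel_h: int
    kernel_w: int
    stride: int = 1
    padding: int = 0

    def output_size(self, h: int, w: int) -> Tuple[int, int]:
        oh = (h + 2 * self.padding - self.kernel_h) // self.stride + 1
        ow = (w + 2 * self.padding - self.kernel_w) // self.stride + 1
        return oh, ow


def conv2d(x: Tensor3, weights: Tensor4, p: ConvParams) -> Tensor3:
    """A single generic loop nest; different layers differ only in `p`."""
    h, w = len(x[0]), len(x[0][0])
    oh, ow = p.output_size(h, w)
    out = [[[0.0] * ow for _ in range(oh)] for _ in range(p.out_channels)]
    for o in range(p.out_channels):
        for i in range(oh):
            for j in range(ow):
                acc = 0.0
                for c in range(p.in_channels):
                    for ki in range(p.kernel_h):
                        for kj in range(p.kernel_w):
                            r = i * p.stride + ki - p.padding
                            s = j * p.stride + kj - p.padding
                            if 0 <= r < h and 0 <= s < w:  # outside = zero padding
                                acc += x[c][r][s] * weights[o][c][ki][kj]
                out[o][i][j] = acc
    return out


# Same function, two different "layers" selected purely by parameters.
x = [[[float(4 * r + c + 1) for c in range(4)] for r in range(4)]]  # 1x4x4, values 1..16
k2s2 = conv2d(x, [[[[1.0, 1.0], [1.0, 1.0]]]], ConvParams(1, 1, 2, 2, stride=2))
k3p1 = conv2d(x, [[[[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]]],
              ConvParams(1, 1, 3, 3, stride=1, padding=1))
print(k2s2)  # [[[14.0, 22.0], [46.0, 54.0]]]
```

Switching between a 2x2 stride-2 layer and a 3x3 "same"-padded layer changes only the `ConvParams` values. A hardware realization might instead load comparable values into configuration registers; that mapping is an assumption, not something the excerpt specifies.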

[0045] The basic operations of different CNN networks are roughly the same. The differences lie mainly in the size of the input feature maps, the number of input feature maps (which varies widely), the dimensions of the convolution kernels, and the position of the fully connected layers in the network. Despite these differences, the operation of every CNN network is composed of convolution, activation, pooling, full connection, and other calculation processes; ther...
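The observation that CNNs differ in input size, feature map count, kernel dimensions, and fully connected layer position while sharing the same small set of operations can be illustrated by describing each network as a list of parameterized layer records. This is a sketch under assumptions of its own: the layer classes `Conv`, `Act`, `Pool`, and `FC` and the function `infer_shapes` are hypothetical, kernels and pooling windows are square, pooling is unpadded, and an FC layer flattens its input. It propagates the (channels, height, width) shape through each layer, which is the kind of information a configurable accelerator would need, for example to size its feature map buffers.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Shape = Tuple[int, int, int]  # (channels, height, width)


@dataclass(frozen=True)
class Conv:
    out_channels: int
    kernel: int          # square kernel assumed
    stride: int = 1
    padding: int = 0


@dataclass(frozen=True)
class Act:
    kind: str = "relu"   # activations preserve shape


@dataclass(frozen=True)
class Pool:
    size: int            # square, unpadded window assumed
    stride: int


@dataclass(frozen=True)
class FC:
    out_features: int


Layer = Union[Conv, Act, Pool, FC]


def infer_shapes(input_shape: Shape, layers: List[Layer]) -> List[Shape]:
    """Return the output (C, H, W) of every layer; FC output is (N, 1, 1)."""
    c, h, w = input_shape
    shapes: List[Shape] = []
    for layer in layers:
        if isinstance(layer, Conv):
            h = (h + 2 * layer.padding - layer.kernel) // layer.stride + 1
            w = (w + 2 * layer.padding - layer.kernel) // layer.stride + 1
            c = layer.out_channels
        elif isinstance(layer, Pool):
            h = (h - layer.size) // layer.stride + 1
            w = (w - layer.size) // layer.stride + 1
        elif isinstance(layer, FC):
            c, h, w = layer.out_features, 1, 1  # flattens its input
        elif not isinstance(layer, Act):
            raise TypeError(f"unsupported layer: {layer!r}")
        if h <= 0 or w <= 0:
            raise ValueError(f"{layer!r} yields an empty feature map")
        shapes.append((c, h, w))
    return shapes


# Two networks that differ in input size, channel counts, kernel sizes and
# where the FC layers sit, yet use the same four layer types.
lenet_style = [Conv(6, 5), Act(), Pool(2, 2), Conv(16, 5), Act(), Pool(2, 2),
               FC(120), Act(), FC(84), Act(), FC(10)]
small_net = [Conv(16, 3, padding=1), Act(), Pool(2, 2),
             Conv(32, 3, stride=2, padding=1), Act(), FC(10)]

print(infer_shapes((1, 32, 32), lenet_style)[5])  # (16, 5, 5)
print(infer_shapes((3, 64, 64), small_net)[3])    # (32, 16, 16)
```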

Embodiment 2

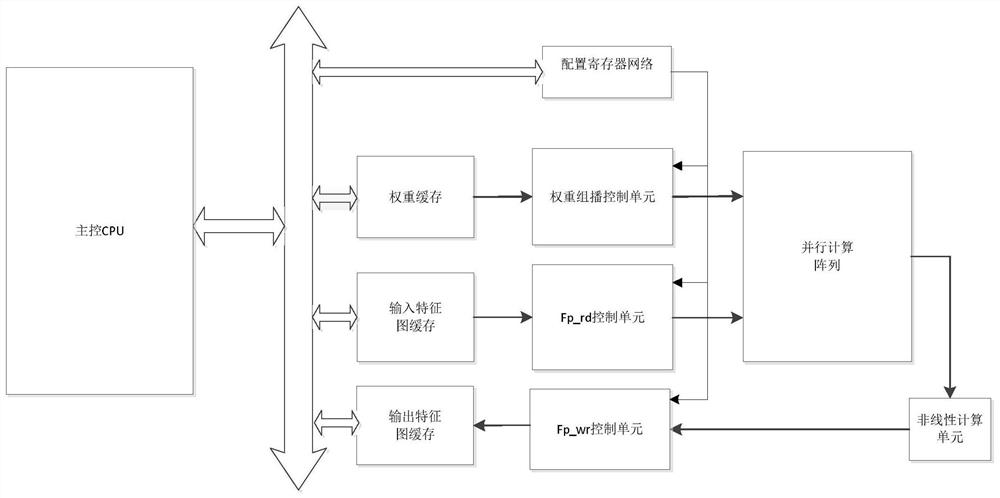

[0069] Embodiment 2 provides a hardware structure (as shown in Figure 1), comprising a main control CPU and, connected to the main control CPU, a weight cache unit, an input feature map cache unit, and an output feature map cache unit. The weight cache unit is used to cache weight data, and the input feature map cache unit and the output feature map cache unit are used to cache feature map data;

[0070] The output end of the weight cache unit is connected to the weight multicast control unit, which is used to broadcast weight data to the parallel computing array unit;

[0071] The output end of the input feature map cache unit is connected to the FP_rd unit, and the output end of the FP_rd unit is connected to the parallel computing array unit;

[0072] The input end of the output feature map cache unit is connected to the Fp_wr unit, and the input end of the Fp_wr unit is connected to the nonlinear computing unit;

[0073] The output end o...
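A behavioral Python model can make the dataflow of paragraphs [0069] to [0072] concrete. It is a sketch under several assumptions not stated in the visible text: each processing element (PE) of the parallel computing array owns one output pixel of the current output channel; the weight multicast control unit streams one weight at a time to all PEs; FP_rd supplies each PE with the matching input value; the array's accumulators feed the nonlinear computing unit (that connection would be described in the truncated paragraph [0073]); the nonlinearity is ReLU; the main control CPU sequences the units; and convolution uses stride 1 without padding. All function names are hypothetical and merely echo the unit names.

```python
from typing import Iterator, List, Tuple

Fmap = List[List[List[float]]]           # [channel][row][col]
Kernels = List[List[List[List[float]]]]  # [out_ch][in_ch][kh][kw]


def weight_multicast(weight_cache: Kernels, o: int) -> Iterator[Tuple[int, int, int, float]]:
    """Stream the weights of output channel `o`, one value per cycle; each
    value is broadcast to every PE in the array."""
    for c, plane in enumerate(weight_cache[o]):
        for ki, row in enumerate(plane):
            for kj, wt in enumerate(row):
                yield c, ki, kj, wt


def fp_rd(in_cache: Fmap, c: int, ki: int, kj: int, oh: int, ow: int) -> List[float]:
    """For weight position (c, ki, kj), read the input value each PE needs;
    PE index = i * ow + j for output pixel (i, j). Stride 1, no padding (assumed)."""
    return [in_cache[c][i + ki][j + kj] for i in range(oh) for j in range(ow)]


def pe_array_mac(acc: List[float], wt: float, acts: List[float]) -> None:
    """All PEs multiply-accumulate in the same cycle with the shared weight."""
    for pe, a in enumerate(acts):
        acc[pe] += wt * a


def nonlinear(acc: List[float]) -> List[float]:
    """Nonlinear computing unit; ReLU is an assumption here."""
    return [max(0.0, v) for v in acc]


def fp_wr(out_cache: Fmap, o: int, values: List[float], ow: int) -> None:
    """Write one finished output channel back as rows of width `ow`."""
    out_cache[o] = [values[r * ow:(r + 1) * ow] for r in range(len(values) // ow)]


def run_conv_layer(in_cache: Fmap, weight_cache: Kernels) -> Fmap:
    """Sequence the units for one layer (assumed role of the main control CPU)."""
    kh, kw = len(weight_cache[0][0]), len(weight_cache[0][0][0])
    oh, ow = len(in_cache[0]) - kh + 1, len(in_cache[0][0]) - kw + 1
    out_cache: Fmap = [[] for _ in weight_cache]
    for o in range(len(weight_cache)):
        acc = [0.0] * (oh * ow)  # one accumulator per PE
        for c, ki, kj, wt in weight_multicast(weight_cache, o):
            pe_array_mac(acc, wt, fp_rd(in_cache, c, ki, kj, oh, ow))
        fp_wr(out_cache, o, nonlinear(acc), ow)
    return out_cache


in_cache = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]]  # 1 channel, 3x3
weight_cache = [
    [[[1.0, 1.0], [1.0, 1.0]]],      # output channel 0: 2x2 window sum
    [[[-1.0, -1.0], [-1.0, -1.0]]],  # output channel 1: negated sum, clipped by ReLU
]
print(run_conv_layer(in_cache, weight_cache))
# [[[12.0, 16.0], [24.0, 28.0]], [[0.0, 0.0], [0.0, 0.0]]]
```

In this model every PE receives the same weight in a given cycle, so the weight cache is read once per cycle regardless of how many PEs there are; that is one plausible motivation for broadcasting weights rather than giving each PE its own copy.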

