Human body posture classification method based on computer vision

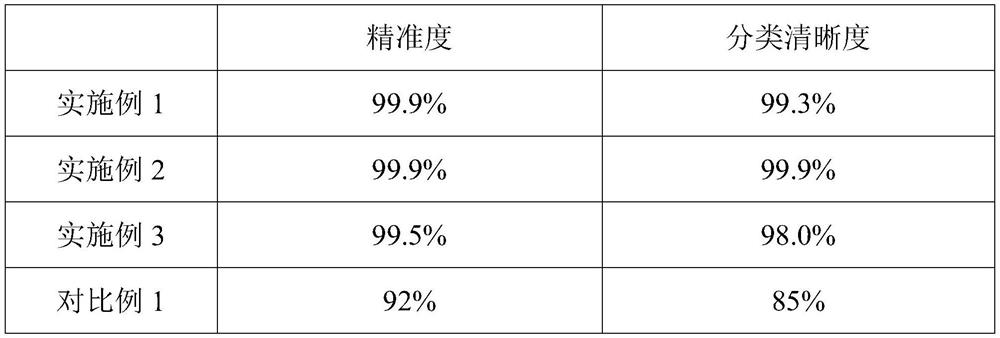

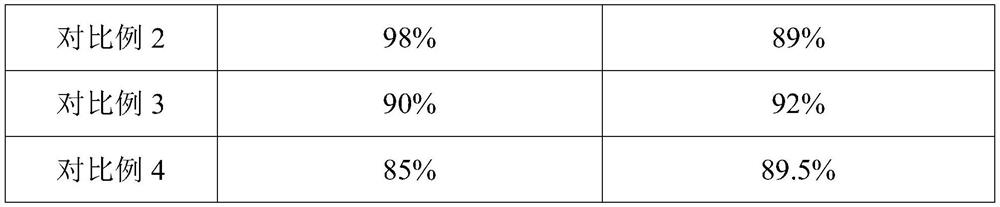

A technology combining computer vision and human body posture analysis, applied in the fields of computer components, computing, and image analysis. It addresses problems such as the lack of length extraction, blurring, and low accuracy and clarity, and achieves high accuracy.

Examples

Embodiment 1

[0053] A computer vision-based human posture classification method, comprising the following steps:

[0054] S1. Skeleton data acquisition: a human body representation is constructed from 3D skeleton data. The purpose is to extract compact, discriminative feature descriptors that represent human body postures or actions;

[0055] 1) The light projector of a structured-light color-depth sensor emits structured light. After being projected onto the surface of the measured object, this light is modulated by the height profile of that surface;

[0056] 2) The modulated structured light is captured by the receiver and sent to the computer for analysis and calculation, yielding the three-dimensional surface data of the measured object;

[0057] 3) The depth information of the object is then recovered by a specific algorithm. In addition, the color camera on the color-depth sensor can be used to obtain the color image frame correspo...
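The acquisition stages of S1 can be sketched in Python as follows. This is a minimal, hypothetical illustration and not the patent's method. The text only refers to "a specific algorithm", so the sketch makes three assumptions:

- Depth comes from triangulation on a rectified projector-camera pair, Z = f·b/d.
- Depth pixels are turned into 3D surface points with a pinhole camera model using intrinsics `fx`, `fy`, `cx`, `cy`.
- The "compact and discriminative" skeleton descriptor consists of root-centred, bone-length-normalised joint coordinates plus all pairwise joint distances.

All function and parameter names are illustrative. The skeleton joints themselves would come from a skeleton-extraction step that this excerpt does not describe.

```python
import math
from itertools import combinations

Point3 = tuple[float, float, float]


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * b / d, ASSUMING a rectified projector-camera pair.

    disparity_px: shift (pixels) between the projected and observed pattern position.
    focal_px: focal length in pixels; baseline_m: projector-camera distance in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (no valid pattern match otherwise)")
    return focal_px * baseline_m / disparity_px


def depth_to_points(depth: list[list[float]], fx: float, fy: float,
                    cx: float, cy: float) -> list[Point3]:
    """Back-project a depth map (metres) into camera-frame 3D points (pinhole model)."""
    points: list[Point3] = []
    for v, row in enumerate(depth):          # v = pixel row index
        for u, z in enumerate(row):          # u = pixel column index
            if z <= 0:                       # zero depth marks pixels with no measurement
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def skeleton_descriptor(joints: list[Point3], root: int = 0,
                        scale_pair: tuple[int, int] = (0, 1)) -> list[float]:
    """HYPOTHETICAL posture descriptor built from 3D skeleton joints.

    Joints are translated so the root joint sits at the origin (position invariance)
    and divided by one reference bone length (body-size invariance). All pairwise
    joint distances are appended because they do not change under rotation.
    """
    a, b = scale_pair
    scale = math.dist(joints[a], joints[b])
    if scale == 0:
        raise ValueError("reference joints coincide; cannot normalise")
    rx, ry, rz = joints[root]
    centred = [((x - rx) / scale, (y - ry) / scale, (z - rz) / scale)
               for x, y, z in joints]
    coords = [c for joint in centred for c in joint]          # 3 values per joint
    dists = [math.dist(p, q) for p, q in combinations(centred, 2)]
    return coords + dists


if __name__ == "__main__":
    z = depth_from_disparity(40.0, focal_px=600.0, baseline_m=0.1)  # about 1.5 m
    pts = depth_to_points([[0.0, z], [z, 0.0]], fx=600.0, fy=600.0, cx=0.5, cy=0.5)
    desc = skeleton_descriptor([(0.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 0.0, 0.0)])
    print(z, len(pts), len(desc))
```

Under this model, a larger disparity means a nearer surface. For J joints the descriptor has 3J + J(J-1)/2 entries. That length is fixed, which is what a downstream posture classifier would need.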

Embodiment 2

[0083] This embodiment is substantially the same as the method of Embodiment 1. The main difference is that in step S5022, marking is performed every 15 cm of length.

Embodiment 3

[0085] This embodiment is substantially the same as the method of Embodiment 1. The main difference is that in step S5022, marking is performed every 20 cm of length.
