Shareable and updatable Deepfake video content supervision method and system

A video content supervision technology in the field of shareable and updatable Deepfake video content supervision. It addresses problems such as reduced detection accuracy, failure of detection methods, and declining detection performance. It achieves fast detection, a high degree of interpretability, and mitigation of the overfitting problem.

Examples

Embodiment 1

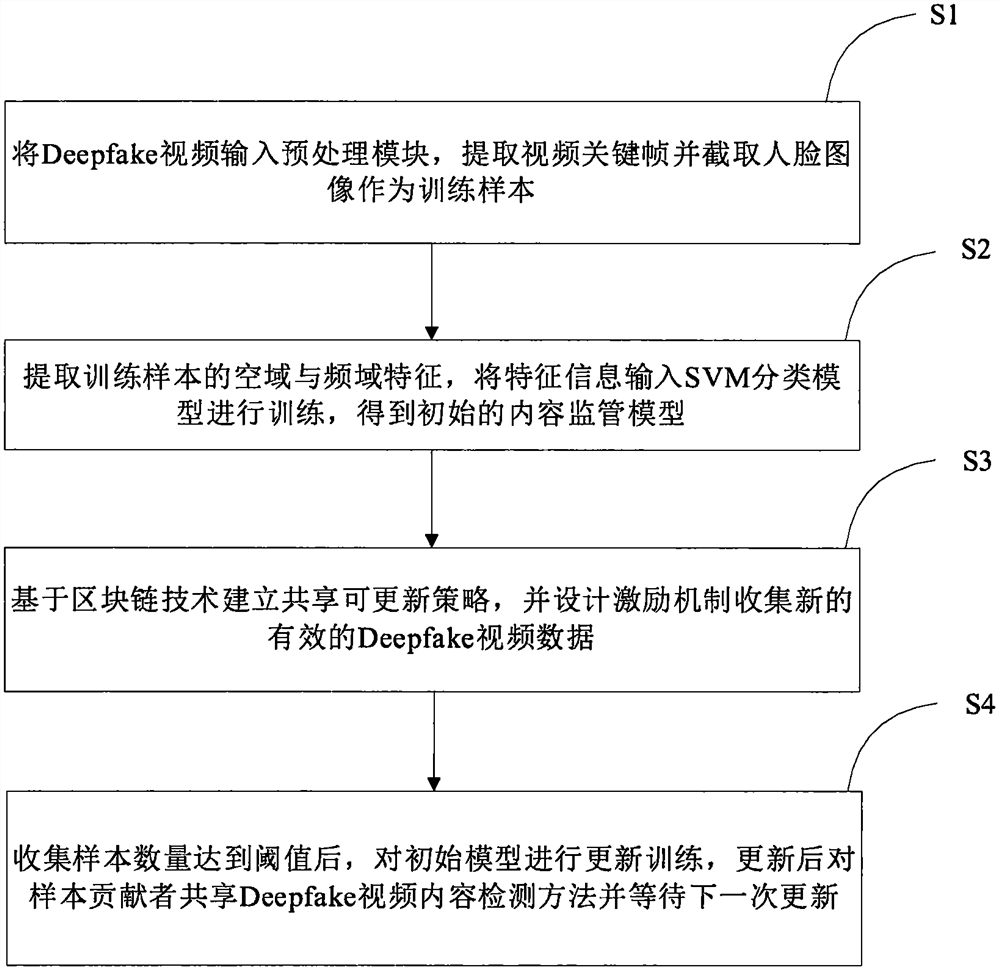

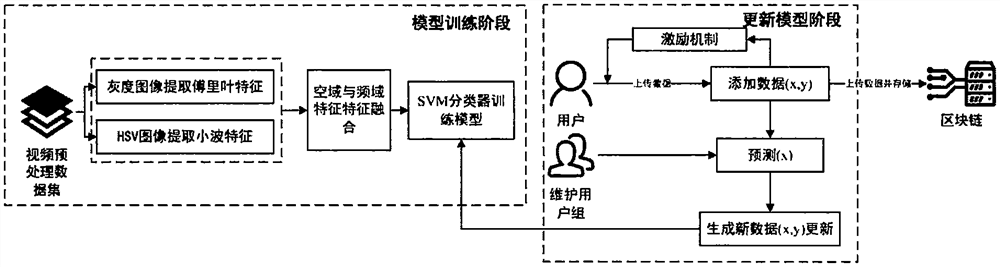

[0026] As shown in Figure 1 and Figure 2, in one embodiment, the shareable and updatable Deepfake video content supervision method provided by the embodiment of the present invention comprises the following steps:

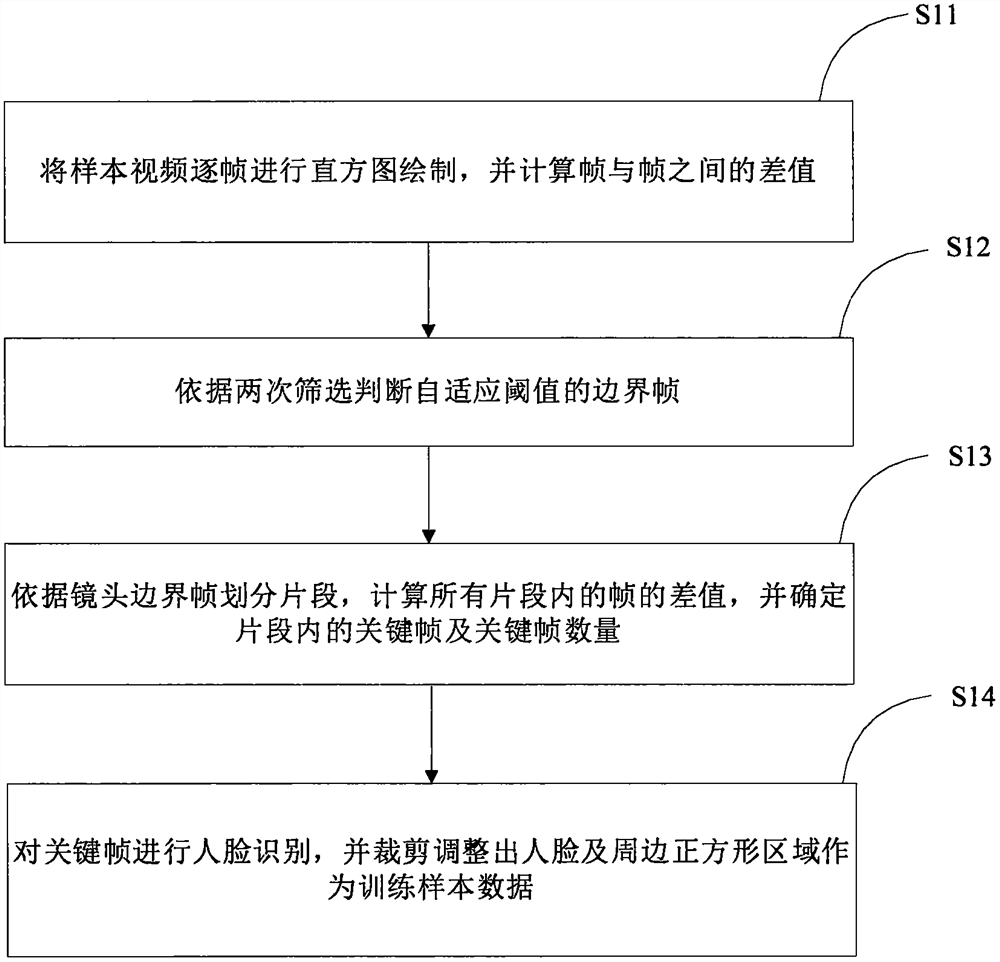

[0027] Step S1: Input the Deepfake video into the preprocessing module, extract key frames of the video and capture face images as training samples;
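Step S1 does not say how key frames are chosen. As a minimal sketch, assuming each frame has already been reduced to a feature vector (for example a grey-level histogram), the video can be cut into fixed-length segments and the frame nearest each segment's mean kept as that segment's key frame. The function `select_key_frames` and its `segment_len` parameter are hypothetical, and this centroid rule is a stand-in for, not a reproduction of, the segment-classification method named for the preprocessing module.

```python
from typing import List, Sequence


def _mean(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def select_key_frames(frame_features: Sequence[Sequence[float]], segment_len: int) -> List[int]:
    """Return one key-frame index per segment: the frame closest to its segment mean.

    frame_features: one feature vector per frame (assumed precomputed).
    segment_len: frames per segment (hypothetical parameter).
    """
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    key_frames = []
    for start in range(0, len(frame_features), segment_len):
        segment = frame_features[start:start + segment_len]
        centre = _mean(segment)
        # Keep the frame that best represents the segment as a whole.
        best = min(range(len(segment)), key=lambda i: _sq_dist(segment[i], centre))
        key_frames.append(start + best)
    return key_frames
```

For example, `select_key_frames([[0.0], [0.2], [0.1], [5.0], [5.1], [9.0]], 3)` returns `[2, 4]`; a shorter final segment still yields one key frame.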

[0028] Step S2: Extract the spatial domain and frequency domain features of the training samples, input the feature information into the SVM classification model for training, and obtain the initial content supervision model;
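The source names an SVM classifier but gives no kernel, solver, or hyperparameters. The sketch below assumes a linear SVM trained with the Pegasos stochastic sub-gradient method, written in plain Python so it runs without dependencies. Labels are assumed to be +1 for Deepfake and -1 for real faces; `lam`, `epochs`, and the function names are illustrative choices, and a production system would more likely call an established SVM library.

```python
import random
from typing import List, Sequence


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 200, seed: int = 0) -> List[float]:
    """Pegasos sub-gradient training of a linear SVM (hinge loss + L2 penalty).

    X: feature vectors; y: labels in {+1, -1} (assumed: +1 = Deepfake, -1 = real).
    A constant 1.0 is appended to each sample so the last weight acts as a bias.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            # Shrink the weights (regularization step) ...
            w = [(1.0 - eta * lam) * wj for wj in w]
            # ... then add the hinge-loss sub-gradient if the margin is violated.
            if margin < 1.0:
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Classify one feature vector with the trained weights."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1
```

Appending a constant feature keeps the code short; note that this simple variant also regularizes the bias term.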

[0029] Step S3: Establish a shareable and updatable strategy based on blockchain technology, and design an incentive mechanism to collect new, valid Deepfake video data;
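The source does not name a blockchain platform or consensus protocol. To show only the tamper-evidence property that a shared record of contributions relies on, the sketch below models the chain as a local, append-only list of SHA-256-linked blocks, each recording a contributor and a fingerprint of the uploaded sample. It is not a distributed ledger; `Block`, `SampleLedger`, and their fields are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    index: int
    contributor: str
    sample_hash: str  # SHA-256 fingerprint of the uploaded video sample
    prev_hash: str
    hash: str = ""

    def compute_hash(self) -> str:
        payload = json.dumps([self.index, self.contributor, self.sample_hash, self.prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class SampleLedger:
    """Append-only, hash-chained record of contributed samples (toy model)."""

    def __init__(self) -> None:
        genesis = Block(0, "genesis", "", "0" * 64)
        genesis.hash = genesis.compute_hash()
        self.chain: List[Block] = [genesis]

    def add_sample(self, contributor: str, video_bytes: bytes) -> Block:
        prev = self.chain[-1]
        block = Block(prev.index + 1, contributor,
                      hashlib.sha256(video_bytes).hexdigest(), prev.hash)
        block.hash = block.compute_hash()
        self.chain.append(block)
        return block

    def is_valid(self) -> bool:
        """Check that every block's own hash and its back-link are intact."""
        for prev, cur in zip(self.chain, self.chain[1:]):
            if cur.prev_hash != prev.hash or cur.hash != cur.compute_hash():
                return False
        return True
```

Editing any recorded field, such as the contributor of an earlier block, makes `is_valid()` return `False`.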

[0030] Step S4: After the number of collected samples reaches the threshold, retrain and update the initial model; after the update, share the Deepfake video content detection method with the sample contributors ...
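The control flow of Step S4 can be sketched as a small coordinator that buffers new samples, retrains once the buffer reaches a threshold, and records which contributors receive the updated detector. The threshold value, the `retrain` callback, and the class `UpdateCoordinator` are assumptions for illustration; the source does not specify how the updated method is distributed, so "sharing" here only records the recipients.

```python
from typing import Callable, List, Optional, Sequence, Set, Tuple

Sample = Tuple[Sequence[float], int]  # (feature vector, label)


class UpdateCoordinator:
    """Buffers contributed samples and retrains once a threshold is reached (toy model)."""

    def __init__(self, threshold: int, retrain: Callable[[List[Sample]], object],
                 initial_data: Optional[List[Sample]] = None) -> None:
        self.threshold = threshold  # hypothetical update threshold
        self.retrain = retrain      # e.g. an SVM training routine
        self.training_data: List[Sample] = list(initial_data or [])
        self.pending: List[Sample] = []
        self.round_contributors: Set[str] = set()
        self.shared_with: Set[str] = set()
        self.model: object = None
        self.version = 0

    def submit(self, contributor: str, sample: Sample) -> bool:
        """Queue a sample; return True if this submission triggered a model update."""
        self.pending.append(sample)
        self.round_contributors.add(contributor)
        if len(self.pending) < self.threshold:
            return False
        # Retrain on the original data plus all newly collected samples.
        self.training_data.extend(self.pending)
        self.model = self.retrain(self.training_data)
        self.version += 1
        # Record that everyone who contributed this round receives the update.
        self.shared_with |= self.round_contributors
        self.pending = []
        self.round_contributors = set()
        return True
```

Retraining on the accumulated data, rather than on the new samples alone, keeps the updated model from forgetting the initial training set.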

Embodiment 2

[0075] As shown in Figure 8, the embodiment of the present invention provides a shareable and updatable Deepfake video content supervision system, comprising the following modules:

[0076] The data preprocessing module processes the video data on the blockchain into sample data suitable for model training: it extracts key frames from the video using a segment-classification-based method and, after face recognition, crops each frame into a square image of fixed size;
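The fixed-size square crop can be sketched as follows, assuming a face detector has already returned a bounding box `(x, y, w, h)` and the frame is a grey-level image stored as rows of pixel values. The square is centered on the face box, shifted back inside the frame if needed, and resized with nearest-neighbor sampling. The function name and the output `size` are hypothetical; the source only says the size is fixed.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]


def crop_square_face(frame: Sequence[Sequence[float]],
                     box: Tuple[int, int, int, int], size: int) -> Image:
    """Crop a square around a detected face and resize it to size x size.

    frame: grey-level image as rows of pixel values.
    box: (x, y, w, h) face bounding box from any face detector (assumed input).
    size: output side length (hypothetical; the source only says "fixed").
    """
    height, width = len(frame), len(frame[0])
    x, y, w, h = box
    side = min(max(w, h), width, height)
    # Center the square on the face box, then clamp it inside the frame.
    cx, cy = x + w / 2, y + h / 2
    left = int(min(max(cx - side / 2, 0), width - side))
    top = int(min(max(cy - side / 2, 0), height - side))
    # Nearest-neighbor resampling of the side x side crop to size x size.
    return [
        [frame[top + (r * side) // size][left + (c * side) // size] for c in range(size)]
        for r in range(size)
    ]
```

Using the longer side of the box keeps the whole face in view, and clamping avoids indexing outside the frame when a face sits near the border.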

[0077] The supervision model training module obtains the initial content supervision model: it extracts the spatial-domain and frequency-domain features of each sample image separately, cascades (concatenates) and normalizes them into a global discriminant feature, and feeds these features into the SVM model for training;
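The source does not state which spatial or frequency features are used. As an assumption for illustration only, the sketch takes a normalized grey-level histogram as the spatial-domain feature and an azimuthally averaged 2-D DFT magnitude spectrum (a frequency cue that has been used in Deepfake-detection work) as the frequency-domain feature, then cascades the two and L2-normalizes the result into one global feature vector. The naive DFT is O(N^4) and only suits tiny images; all function names are hypothetical.

```python
import cmath
import math
from typing import Dict, List, Sequence


def spatial_features(img: Sequence[Sequence[float]], bins: int = 8) -> List[float]:
    """Normalized grey-level histogram; pixel values assumed in [0, 1]."""
    hist = [0.0] * bins
    pixels = [p for row in img for p in row]
    for p in pixels:
        hist[min(int(p * bins), bins - 1)] += 1
    return [h / len(pixels) for h in hist]


def frequency_features(img: Sequence[Sequence[float]]) -> List[float]:
    """Azimuthally averaged DFT magnitude (naive DFT, small images only)."""
    n, m = len(img), len(img[0])
    radius_sum: Dict[int, float] = {}
    radius_cnt: Dict[int, int] = {}
    for u in range(n):
        for v in range(m):
            coeff = sum(
                img[x][y] * cmath.exp(-2j * math.pi * (u * x / n + v * y / m))
                for x in range(n) for y in range(m)
            )
            # Distance from the zero-frequency term, with frequencies wrapped around.
            du, dv = min(u, n - u), min(v, m - v)
            r = int(round(math.hypot(du, dv)))
            radius_sum[r] = radius_sum.get(r, 0.0) + abs(coeff)
            radius_cnt[r] = radius_cnt.get(r, 0) + 1
    return [radius_sum[r] / radius_cnt[r] for r in sorted(radius_sum)]


def global_feature(img: Sequence[Sequence[float]], bins: int = 8) -> List[float]:
    """Cascade (concatenate) both feature groups and L2-normalize the result."""
    feat = spatial_features(img, bins) + frequency_features(img)
    norm = math.sqrt(sum(f * f for f in feat)) or 1.0
    return [f / norm for f in feat]
```

Normalizing after concatenation puts every sample on the same scale before SVM training; for images of equal size the feature length is constant.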

[0078] The incentive mechanism module encourages sample contributors to upload high-quality new data while preventing malicious a...
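Paragraph [0078] is truncated, so the actual incentive rules are not available. The sketch below is a purely hypothetical scheme: contributors must hold a minimum stake, a sample earns a reward when its SHA-256 fingerprint is new and it passes a caller-supplied validation check, and duplicates or failing samples cost a penalty. `IncentiveLedger`, its parameters, and the `is_valid` callback are all assumptions, not the patented mechanism.

```python
import hashlib
from typing import Callable, Dict, Set


class IncentiveLedger:
    """Toy reward/penalty bookkeeping for sample contributors (all parameters hypothetical)."""

    def __init__(self, reward: float, penalty: float, min_stake: float) -> None:
        self.reward = reward
        self.penalty = penalty
        self.min_stake = min_stake
        self.balances: Dict[str, float] = {}
        self.seen: Set[str] = set()  # fingerprints of accepted samples

    def deposit(self, contributor: str, amount: float) -> None:
        self.balances[contributor] = self.balances.get(contributor, 0.0) + amount

    def contribute(self, contributor: str, sample: bytes,
                   is_valid: Callable[[bytes], bool]) -> bool:
        """Reward new, valid samples; penalize duplicates and samples that fail validation."""
        if self.balances.get(contributor, 0.0) < self.min_stake:
            raise PermissionError("stake below minimum; contribution refused")
        digest = hashlib.sha256(sample).hexdigest()
        accepted = digest not in self.seen and is_valid(sample)
        if accepted:
            self.seen.add(digest)
            self.balances[contributor] += self.reward
        else:
            self.balances[contributor] -= self.penalty
        return accepted
```

Setting the penalty above the reward makes repeated low-quality uploads drain a contributor's stake until further contributions are refused.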
