Mask robust face recognition network and method, electronic equipment and storage medium

A face recognition network technique, applied in the field of computer vision, that improves the robustness of face recognition to masks


Examples

Embodiment 1

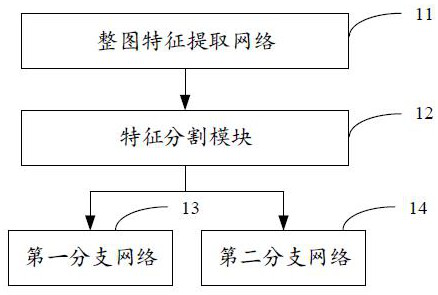

[0038] Figure 1 shows a schematic structural diagram of a mask robust face recognition network provided by Embodiment 1 of the present invention. For convenience of description, only the parts related to the embodiment of the present invention are shown, and the details are as follows:

[0039] The mask robust face recognition network 1 provided by the embodiment of the present invention includes a whole image feature extraction network 11, a feature segmentation module 12 connected to the whole image feature extraction network, and a first branch network 13 and a second branch network 14, each connected to the feature segmentation module. The whole image feature extraction network extracts shallow whole image features from the input face image, and the feature segmentation module spatially segments the shallow whole image features according to the position of a preset segmentation point to obtain the a...
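The spatial segmentation step described above can be illustrated with a minimal sketch. It assumes the shallow features form a (channels, height, width) array and that the preset segmentation point is a row index that divides the map into an upper and a lower part. The function name `split_features`, the array shape, and the value `split_row=5` are hypothetical; the source text is truncated before it states what the two parts are or how the branch networks consume them.

```python
import numpy as np


def split_features(features: np.ndarray, split_row: int):
    """Split a (C, H, W) feature map into upper and lower parts.

    Assumption (not stated in the source): the preset segmentation
    point is a row index along the height axis.
    """
    if features.ndim != 3:
        raise ValueError("expected a (channels, height, width) array")
    height = features.shape[1]
    if not 0 < split_row < height:
        raise ValueError("split_row must lie strictly inside the feature map")
    upper = features[:, :split_row, :]   # rows above the segmentation point
    lower = features[:, split_row:, :]   # rows at and below the segmentation point
    return upper, lower


# Hypothetical 2-channel, 8x8 shallow feature map.
features = np.arange(2 * 8 * 8, dtype=np.float32).reshape(2, 8, 8)
upper, lower = split_features(features, split_row=5)
```

In this sketch each part would then be passed to its own branch network; concatenating `upper` and `lower` along the height axis recovers the original feature map, so the split itself loses no information.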

Embodiment 2

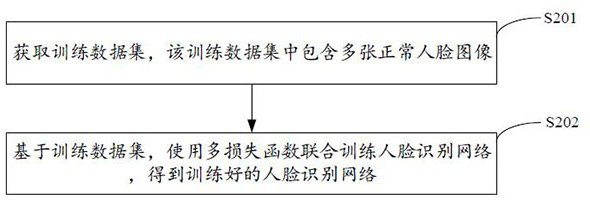

[0045] Embodiment 2 of the present invention is based on Embodiment 1. Figure 2 shows the implementation process of the mask robust face recognition network training method provided by Embodiment 2 of the present invention. For convenience of explanation, only the parts related to the embodiment of the present invention are shown, and the details are as follows:

[0046] In step S201, a training data set is obtained, and the training data set includes a plurality of normal face images.

[0047] In the embodiment of the present invention, a basic data set can be obtained first; the basic data set includes multiple normal face images. The basic data set can be image data selected from a general data set, such as MegaFace, which is not limited here. After the basic data set is obtained, key point detection is performed on each face image in the basic data set, and the detected key points are aligned with the standard key points. Specifically, the face key poin...

Embodiment 3

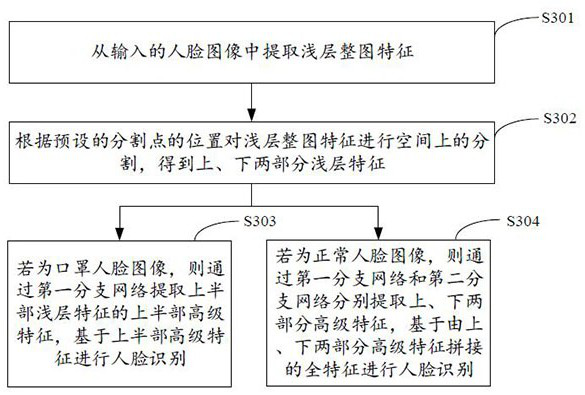

[0057] Embodiment 3 of the present invention is based on Embodiment 1. Figure 3 shows the implementation process of the mask robust face recognition method provided by Embodiment 3 of the present invention. For convenience of explanation, only the parts related to the embodiment of the present invention are shown, and the details are as follows:

[0058] In step S301, shallow whole image features are extracted from the input face image.

[0059] In the embodiment of the present invention, the input face image is an aligned face image. Before the face image is input, the acquired face image can be aligned with the preset face key points (for example, five face key points: the two eyes, the nose, and the left and right mouth corners), and the aligned face image can then be input into the trained face recognition network. The face recognition network can be trained using the method described in Embodiment 2.
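The key point alignment mentioned above can be sketched as a least-squares 2D similarity transform (rotation, uniform scale, translation) that maps detected key points onto preset ones. This is one common way to align faces and is an assumption here; the source does not say which transform is used. The names `estimate_similarity`, `apply_similarity`, and the coordinates in `STANDARD_KEYPOINTS` are illustrative placeholders, not values from the source.

```python
import numpy as np

# Placeholder preset key points (x, y): left eye, right eye, nose,
# left mouth corner, right mouth corner. Illustrative values only.
STANDARD_KEYPOINTS = np.array(
    [[38.0, 52.0], [74.0, 52.0], [56.0, 72.0], [42.0, 92.0], [70.0, 92.0]]
)


def _to_complex(points):
    """Represent (x, y) points as complex numbers x + iy."""
    points = np.asarray(points, dtype=np.float64)
    return points[:, 0] + 1j * points[:, 1]


def estimate_similarity(src, dst):
    """Least-squares fit of w = a * z + b mapping src points to dst points.

    The complex number a encodes rotation and uniform scale,
    and b encodes translation.
    """
    z, w = _to_complex(src), _to_complex(dst)
    z_mean, w_mean = z.mean(), w.mean()
    zc, wc = z - z_mean, w - w_mean
    denom = np.sum(np.abs(zc) ** 2)
    if denom == 0:
        raise ValueError("source key points must not all coincide")
    a = np.sum(np.conj(zc) * wc) / denom
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply w = a * z + b to an array of (x, y) points."""
    w = a * _to_complex(points) + b
    return np.stack([w.real, w.imag], axis=1)


# Simulate detected key points: the standard ones rotated, scaled, shifted.
a_true = 1.3 * np.exp(1j * np.deg2rad(12.0))
detected = apply_similarity(a_true, 20.0 - 5.0j, STANDARD_KEYPOINTS)

a, b = estimate_similarity(detected, STANDARD_KEYPOINTS)
aligned = apply_similarity(a, b, detected)
```

The sketch only maps key point coordinates. Producing the aligned face image would additionally require warping the pixels with the same transform using an image library, which is omitted here.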

[0060] In step S302, the shallow whole image features are spatia...
