Universal visual SLAM (Simultaneous Localization and Mapping) method

A visual SLAM technology, applicable in fields such as road network navigation, that aims to achieve low computational complexity

Embodiment Construction

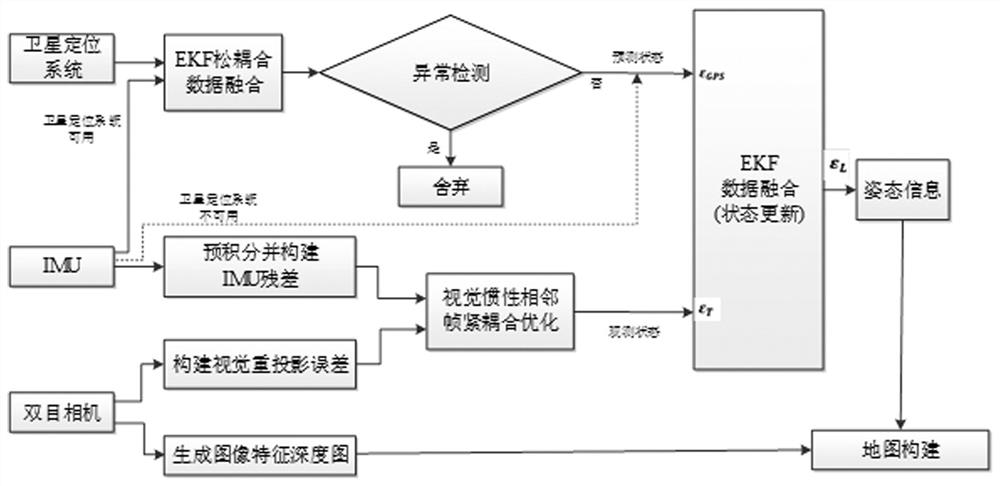

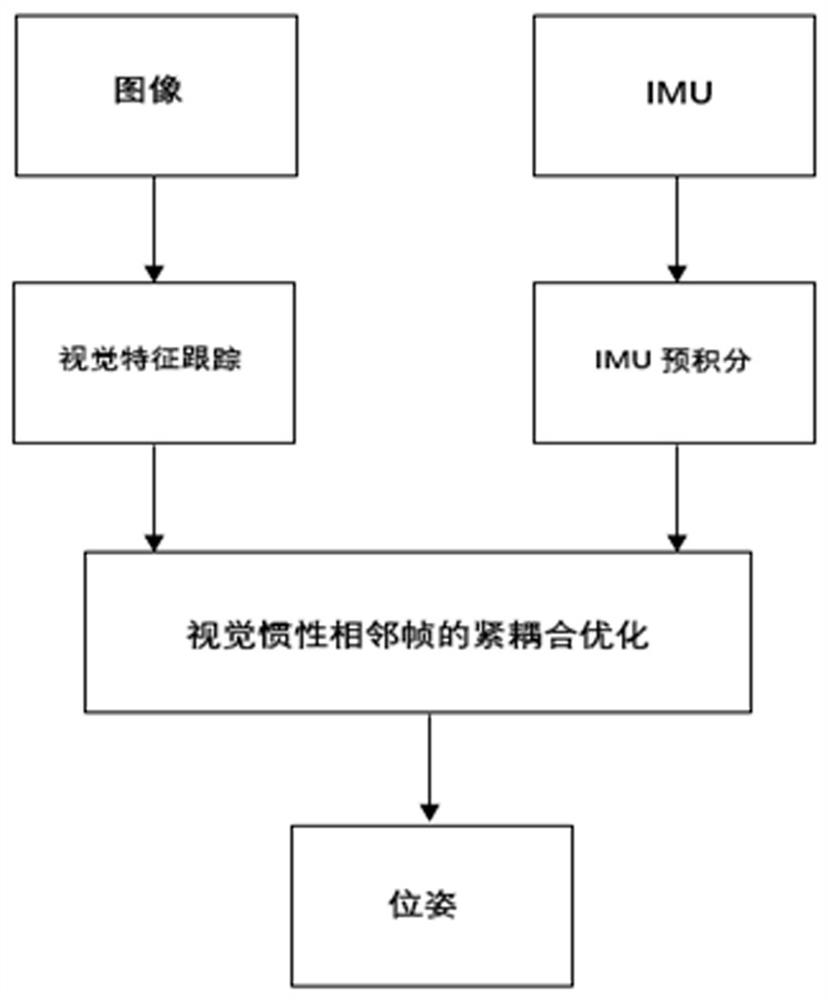

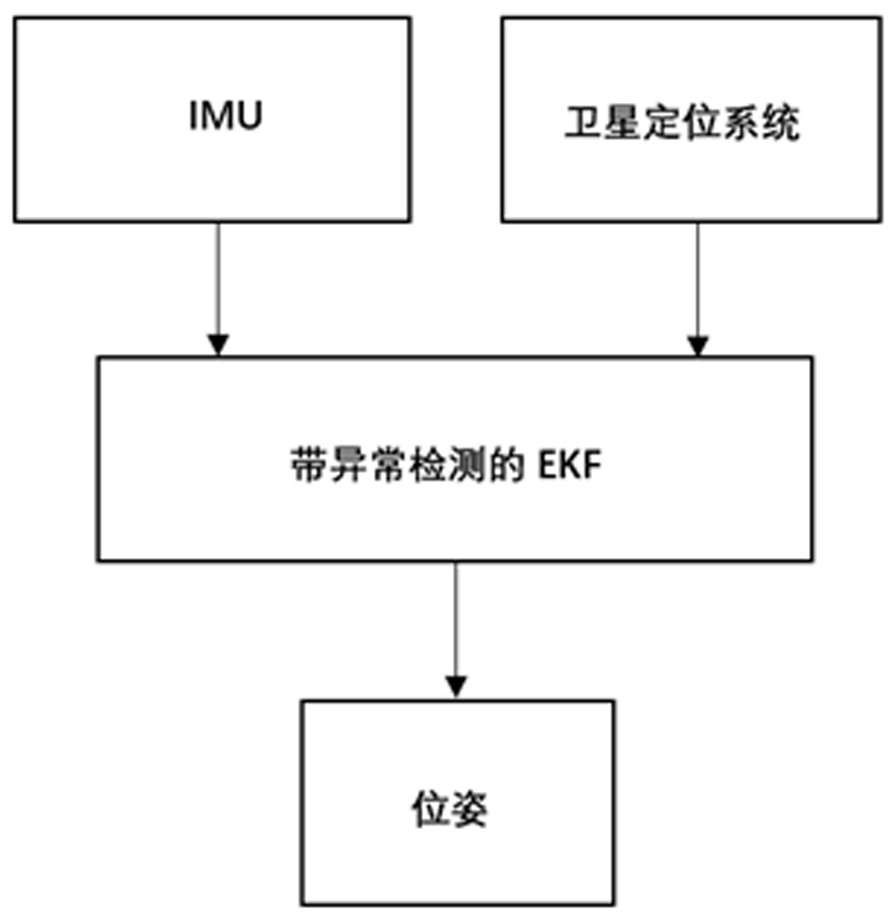

[0134] Step 1: First, use the binocular camera to acquire image data, extract and match visual features, and construct the visual reprojection error. At the same time, pre-integrate the data from the inertial measurement unit (IMU) and construct the IMU residual. Then combine the visual reprojection error with the IMU residual to perform tightly coupled visual-inertial optimization over adjacent frames, and take the resulting preliminary attitude estimate as the observation state;
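
As an illustration of the visual reprojection error mentioned in Step 1, the following is a minimal sketch, not the patent's implementation. It assumes a rectified binocular pair with a pinhole model, where the right camera is offset from the left by a baseline along the x-axis. The function names (`project`, `stereo_reprojection_residual`) and the parameters `fx`, `fy`, `cx`, `cy`, and `baseline` are hypothetical and do not appear in the source.

```python
from typing import Sequence, Tuple


def project(point_cam: Sequence[float], fx: float, fy: float,
            cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a 3D point expressed in the camera frame."""
    x, y, z = point_cam
    if z <= 0.0:
        raise ValueError("point must lie in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def stereo_reprojection_residual(point_left: Sequence[float],
                                 obs_left: Sequence[float],
                                 obs_right: Sequence[float],
                                 fx: float, fy: float,
                                 cx: float, cy: float,
                                 baseline: float) -> Tuple[float, float, float, float]:
    """Predicted minus observed pixel coordinates in both images.

    Assumption (not from the source): the pair is rectified, so the same
    point seen from the right camera is shifted by -baseline along x.
    """
    x, y, z = point_left
    u_l, v_l = project((x, y, z), fx, fy, cx, cy)
    u_r, v_r = project((x - baseline, y, z), fx, fy, cx, cy)
    return (u_l - obs_left[0], v_l - obs_left[1],
            u_r - obs_right[0], v_r - obs_right[1])
```

In an optimizer, one such 4-vector would be stacked per matched feature, and the landmark position in the camera frame would itself depend on the pose being estimated.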

[0135] The specific process is:

[0136] First, the IMU measures the angular velocity and acceleration of the carrier; these measurements are pre-integrated, and a residual function is constructed from the pre-integration result. The binocular camera acquires the image data; features are then extracted from the images and matched, and a residual function is constructed from the visual reprojection error. Jointly construct a tightly coupled optimized residual ...
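
The pre-integration step described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the patent's formulation: it uses the common discrete pre-integration recursion with constant biases, omits noise covariance propagation and the rotation residual, and builds only the position and velocity parts of the IMU residual. All function names and the `gravity` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
Mat = List[List[float]]


def mat_vec(m: Mat, v: Sequence[float]) -> Vec:
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def mat_mul(a: Mat, b: Mat) -> Mat:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def so3_exp(phi: Sequence[float]) -> Mat:
    """Rodrigues formula: rotation vector -> rotation matrix."""
    x, y, z = phi
    theta = math.sqrt(x * x + y * y + z * z)
    K = [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]  # skew matrix of phi
    K2 = mat_mul(K, K)
    if theta < 1e-8:
        a, b = 1.0, 0.5  # small-angle limits of the coefficients
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / (theta * theta)
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j]
             for j in range(3)] for i in range(3)]


def preintegrate(gyro: Sequence[Sequence[float]],
                 accel: Sequence[Sequence[float]],
                 dt: float,
                 gyro_bias: Sequence[float] = (0.0, 0.0, 0.0),
                 accel_bias: Sequence[float] = (0.0, 0.0, 0.0)) -> Tuple[Mat, Vec, Vec]:
    """Accumulate relative rotation dR, velocity dv and position dp
    between two frames, expressed in the first frame's body axes."""
    dR: Mat = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    dv: Vec = [0.0, 0.0, 0.0]
    dp: Vec = [0.0, 0.0, 0.0]
    for w, a in zip(gyro, accel):
        a_ref = mat_vec(dR, [a[k] - accel_bias[k] for k in range(3)])
        # Position and velocity use the rotation from before this sample.
        dp = [dp[k] + dv[k] * dt + 0.5 * a_ref[k] * dt * dt for k in range(3)]
        dv = [dv[k] + a_ref[k] * dt for k in range(3)]
        dR = mat_mul(dR, so3_exp([(w[k] - gyro_bias[k]) * dt for k in range(3)]))
    return dR, dv, dp


def imu_residual(R_i: Mat, p_i: Sequence[float], v_i: Sequence[float],
                 p_j: Sequence[float], v_j: Sequence[float],
                 gravity: Sequence[float], T: float,
                 dv: Sequence[float], dp: Sequence[float]) -> Vec:
    """Position and velocity residual (6 values) between the states of two
    frames and the pre-integrated measurement; rotation part omitted."""
    R_i_T = [[R_i[j][i] for j in range(3)] for i in range(3)]
    pred_p = mat_vec(R_i_T, [p_j[k] - p_i[k] - v_i[k] * T
                             - 0.5 * gravity[k] * T * T for k in range(3)])
    pred_v = mat_vec(R_i_T, [v_j[k] - v_i[k] - gravity[k] * T for k in range(3)])
    return ([pred_p[k] - dp[k] for k in range(3)]
            + [pred_v[k] - dv[k] for k in range(3)])
```

Because the pre-integrated terms depend only on the raw IMU samples and the biases, they need not be recomputed each time the optimizer updates the frame states, which is the usual motivation for pre-integrating.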
