Cache-based dynamic load allocation method for a network processor, and apparatus thereof

Network processor and load distribution technology, applied in data exchange networks, digital transmission systems, electrical components, etc. It addresses problems such as out-of-order packets and unbalanced load across processing units. Its effects are avoiding packet-processing bottlenecks, avoiding severe load oscillation, and reducing the probability of out-of-order packets.


Embodiment Construction

[0026] The present invention will be described in further detail below in conjunction with the accompanying drawings.

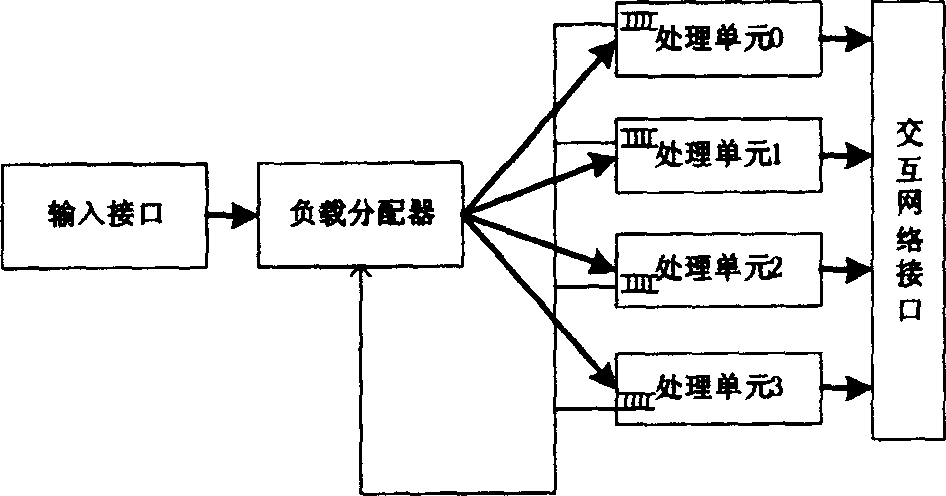

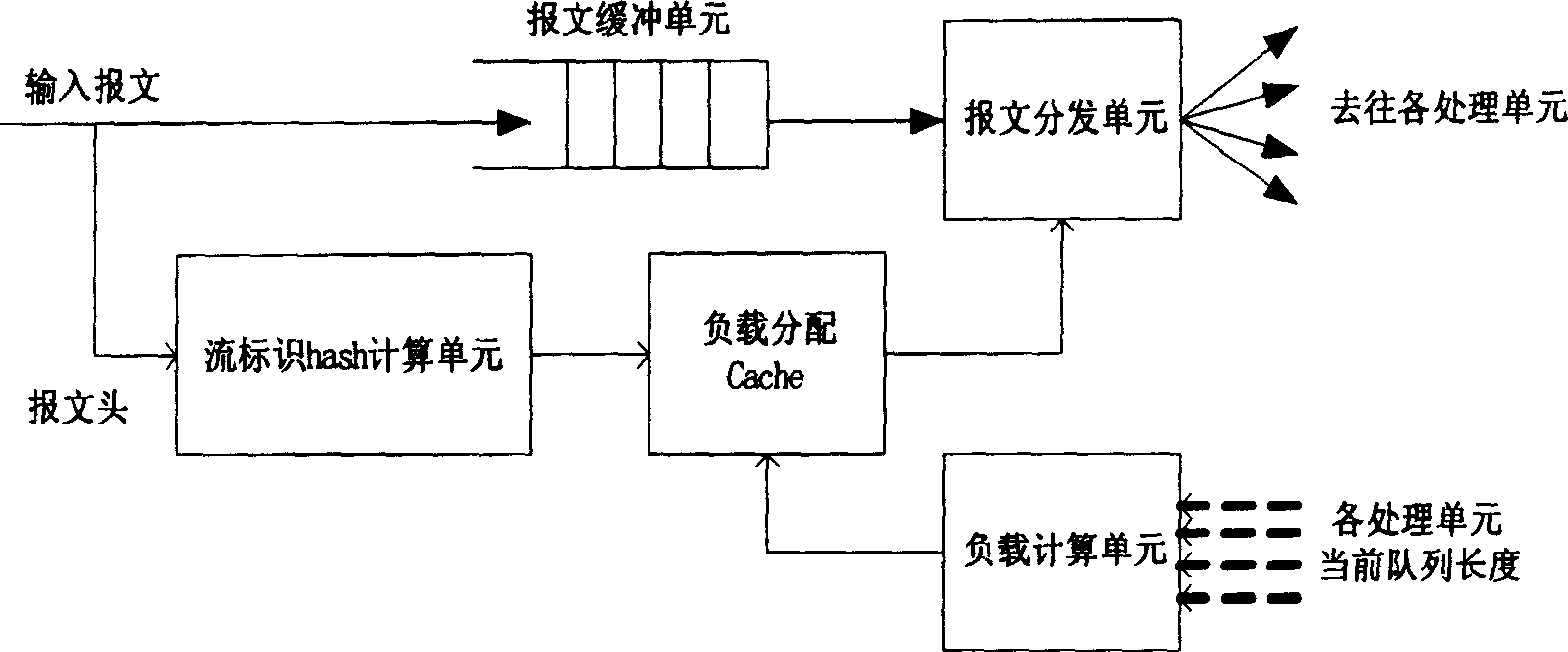

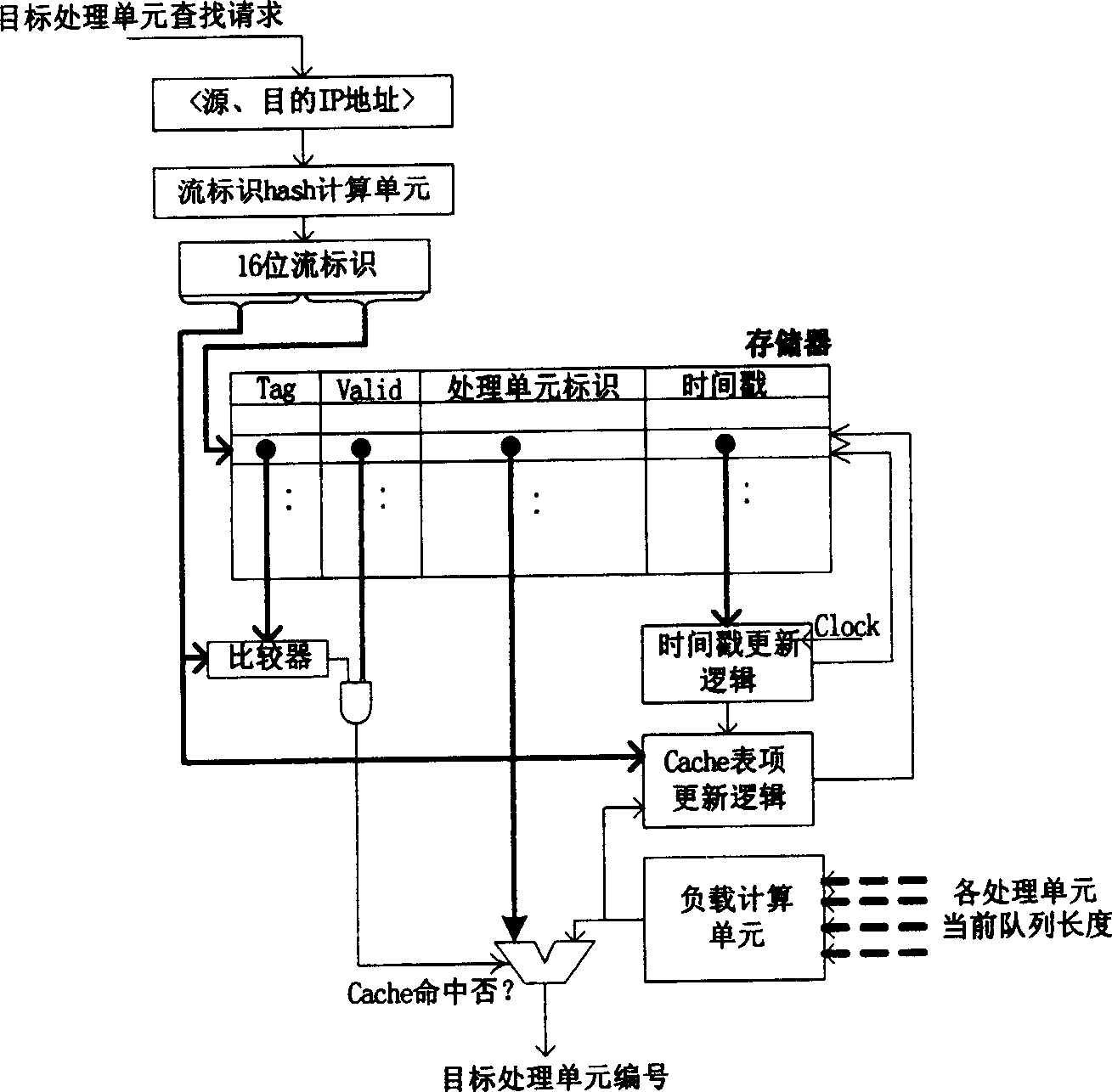

[0027] The technical solution of the present invention dynamically monitors the load status of each processing unit in the network processor and, according to that load status, adaptively adjusts how the wire-speed packet stream is distributed across the processing units. At the same time, it uses a cache structure to maintain the allocation status of packets to each processing unit, and updates that allocation status by adjusting when cache entries are invalidated and replaced. The distribution method of the present invention is applied in the load distributor inside the network processor chip. As shown in Figure 1, IP packets arriving from the input interface of the network processor undergo load adjustment in the load distribution unit and are then sent to the individual processing units for processing, and the processed packets are sent to the o...
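The mechanism in [0027] (per-unit load monitoring, plus a cache that remembers which processing unit each flow was assigned to, with reassignment driven by entry invalidation and replacement) can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration and is not taken from the patent: the class and method names (`CacheLoadDistributor`, `dispatch`, `complete`), the use of an LRU replacement policy, picking the least-loaded unit on a miss, and the rule that entries pointing at an overloaded unit get a shorter idle timeout (`short_timeout`, `high_water`) so their flows are rebalanced sooner.

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass
class Entry:
    pu: int           # processing unit currently assigned to this flow
    last_seen: float  # arrival time of the flow's most recent packet


class CacheLoadDistributor:
    """Hypothetical sketch: flow-to-PU assignments kept in an LRU cache."""

    def __init__(self, num_pus, capacity, base_timeout, short_timeout, high_water):
        self.loads = [0] * num_pus          # packets queued or in progress per PU
        self.cache = OrderedDict()          # flow key -> Entry, oldest first (LRU order)
        self.capacity = capacity            # maximum number of cached flows
        self.base_timeout = base_timeout    # idle time after which an entry is invalid
        self.short_timeout = short_timeout  # assumed shorter idle timeout for overloaded PUs
        self.high_water = high_water        # assumed load above which a PU is "overloaded"

    def _timeout(self, pu):
        # Assumption: entries that point at an overloaded PU are invalidated
        # sooner, so their flows move at the next idle gap rather than mid-burst.
        return self.short_timeout if self.loads[pu] > self.high_water else self.base_timeout

    def _least_loaded(self):
        # Ties go to the lowest-numbered PU.
        return min(range(len(self.loads)), key=lambda i: self.loads[i])

    def dispatch(self, flow_key, now):
        """Return the PU index for a packet of `flow_key` arriving at time `now`."""
        entry = self.cache.get(flow_key)
        if entry is not None and now - entry.last_seen <= self._timeout(entry.pu):
            # Cache hit on a valid entry: keep the flow on the same PU (preserves order).
            entry.last_seen = now
        else:
            # Miss or invalidated entry: (re)assign the flow to the least-loaded PU.
            if entry is None and len(self.cache) >= self.capacity:
                self.cache.popitem(last=False)  # replace the least recently used entry
            entry = Entry(self._least_loaded(), now)
            self.cache[flow_key] = entry
        self.cache.move_to_end(flow_key)  # mark as most recently used
        self.loads[entry.pu] += 1
        return entry.pu

    def complete(self, pu):
        # Called when a PU finishes a packet, keeping the monitored load current.
        self.loads[pu] -= 1
```

In this sketch a flow can only change processing unit when its entry is invalidated after an idle gap or evicted as the least recently used entry; a valid hit always pins it to the same unit, which is what limits reordering. Moving flows only at these points, rather than re-balancing on every packet, is one way to keep load shifts gradual; the excerpt does not state the patent's exact invalidation and replacement policy.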
