Memory system and method for controlling the same, and method for maintaining data coherency

A memory system and a control method for the memory system, applied in the field of memory technology. The invention addresses the problems of increased device and memory system latency, slower access performance, and reduced bus utilization, so as to improve the efficiency of memory access.

Embodiment Construction

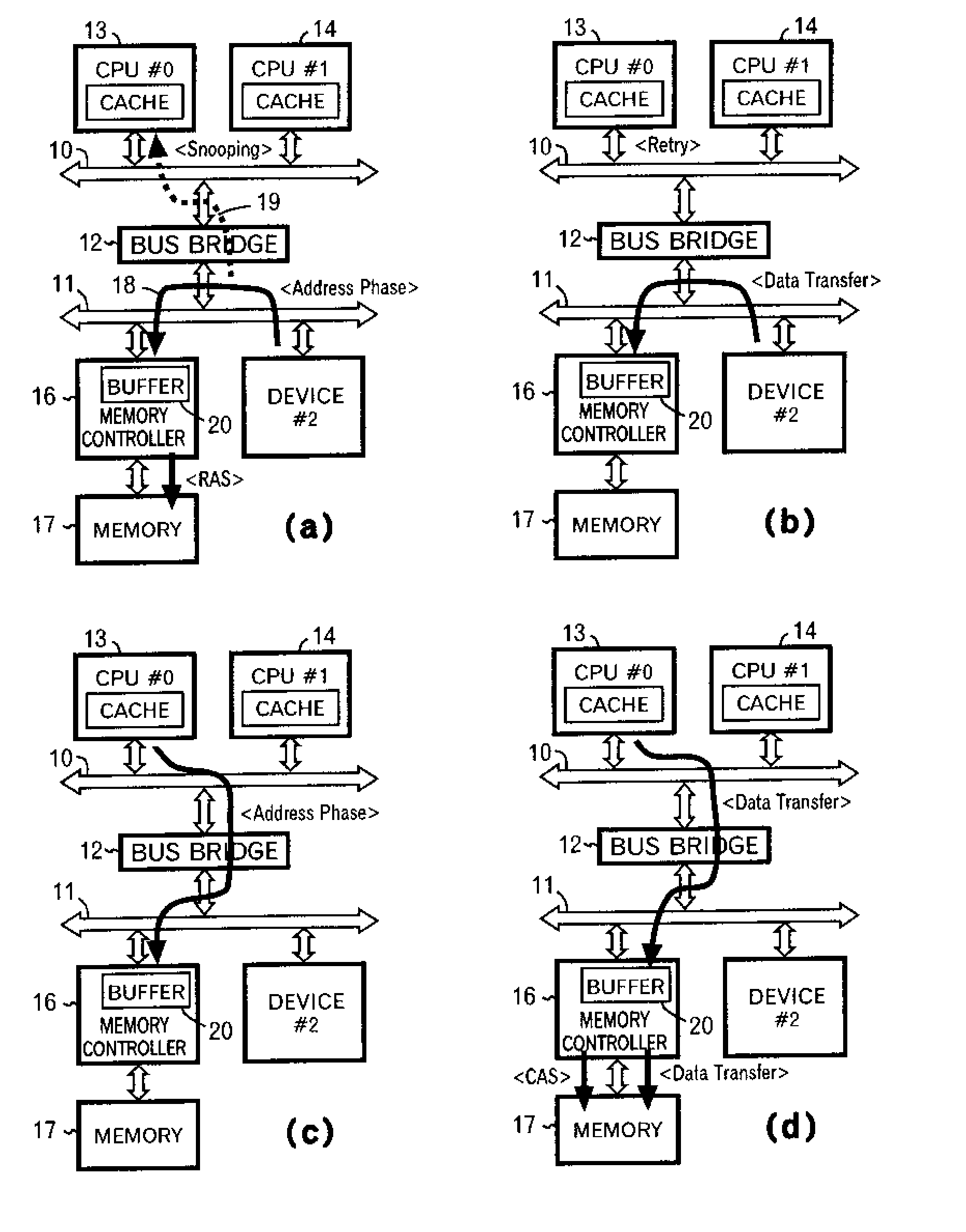

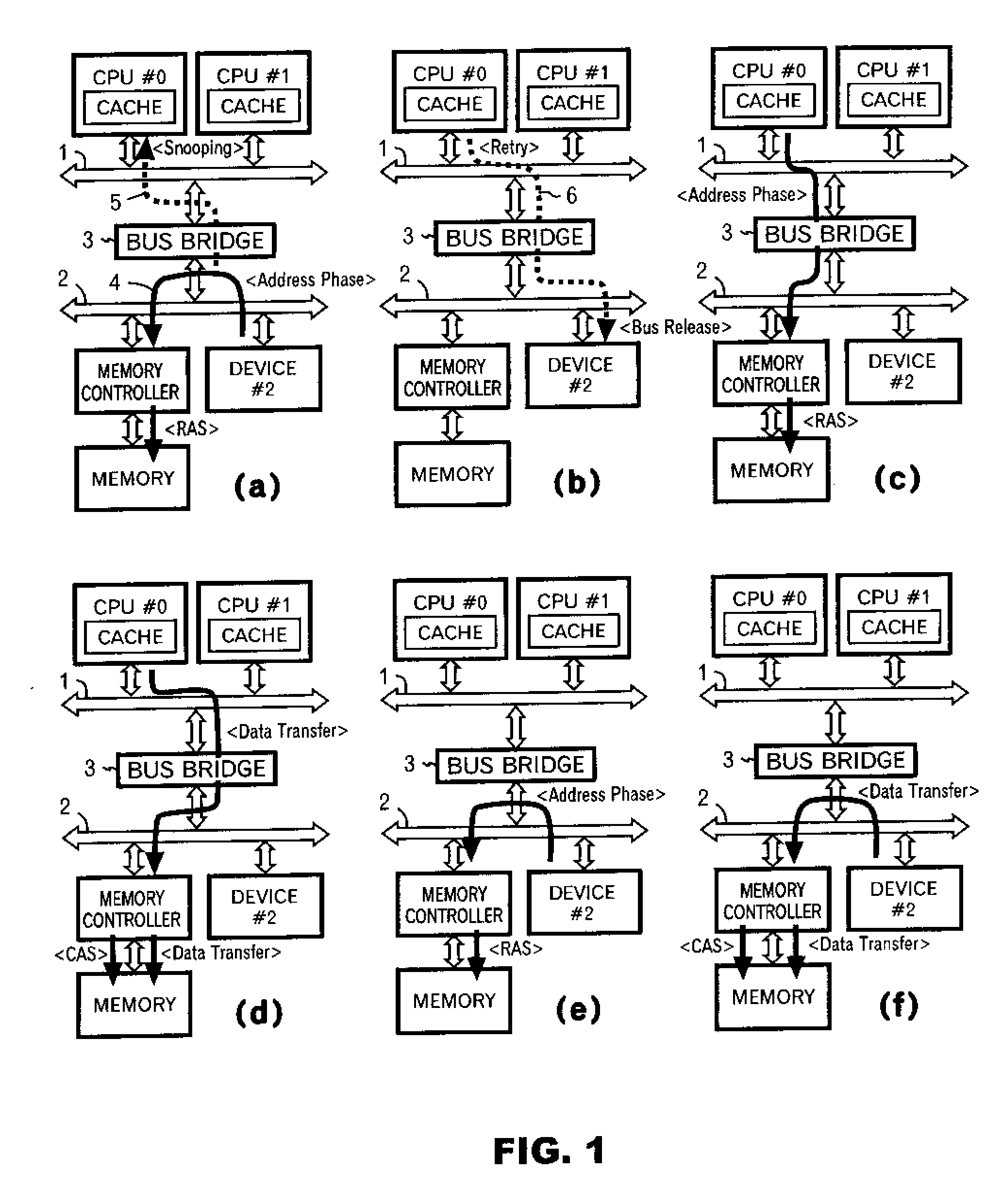

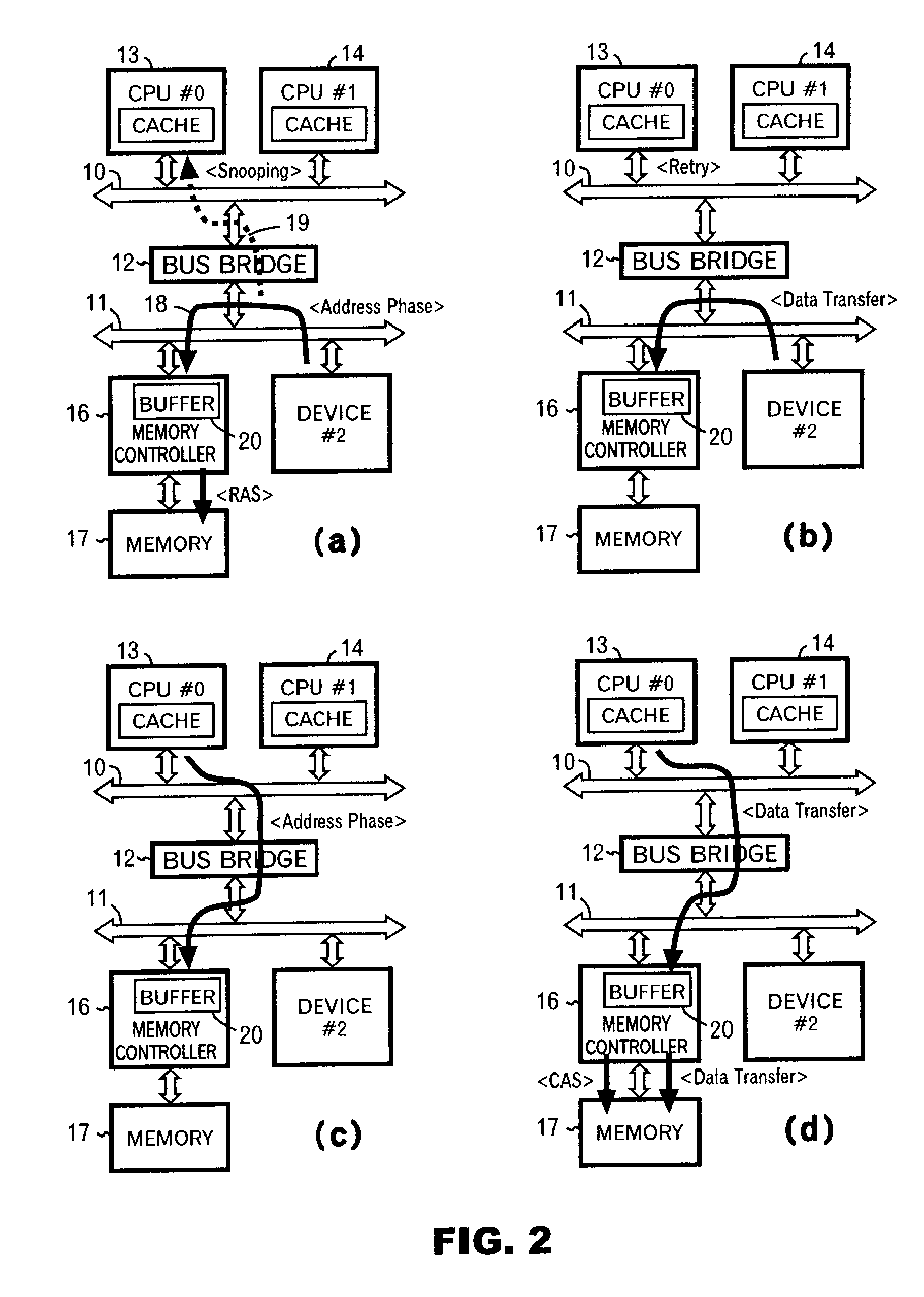

[0016] The present invention will be described with reference to the accompanying drawings. FIG. 2 is a diagram illustrating an overview of a method (operation) of the present invention. In FIG. 2, a CPU bus 10 and a system bus 12 are interconnected through a bus bridge 13. Coupled onto the CPU bus 10 are CPU #0 (13) and CPU #2 (14). Each of the two CPUs has a cache. Coupled onto the system bus 12 are a device #2 (15), a memory controller (16), and a memory (17). The memory (17) is a system memory such as a DRAM. The memory controller (16) has a buffer 20 for temporarily storing data. While the configuration in FIG. 2 includes two buses, the CPU bus 10 and the system bus 12, a configuration in which all devices are coupled onto a single system bus may also be used. Furthermore, any number of devices may be connected to a bus, provided that at least two master devices capable of occupying the bus are connected to it.
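The topology described above can be sketched as a small data model. This is an illustrative sketch only, not part of the patent: the class names `Device` and `Bus`, the `is_master` flag, and the helper `build_fig2` are hypothetical, and which components count as bus masters is an assumption (here the CPUs, device #2, and the bus bridge are treated as masters, while the memory controller and memory are not).

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    is_master: bool = False  # assumption: True if the device can occupy the bus
    has_cache: bool = False


@dataclass
class Bus:
    name: str
    devices: list = field(default_factory=list)

    def attach(self, device):
        """Couple a device onto this bus and return it."""
        self.devices.append(device)
        return device

    def master_count(self):
        """Number of attached devices that can occupy the bus."""
        return sum(1 for d in self.devices if d.is_master)

    def is_valid(self):
        # The description requires at least two master devices per bus.
        return self.master_count() >= 2


def build_fig2():
    """Build the two-bus configuration of FIG. 2 (names follow the text)."""
    cpu_bus = Bus("CPU bus 10")
    system_bus = Bus("system bus 12")

    # Assumption: the bridge acts as a master on both buses it joins.
    bridge = Device("bus bridge", is_master=True)
    cpu_bus.attach(bridge)
    system_bus.attach(bridge)

    cpu_bus.attach(Device("CPU #0", is_master=True, has_cache=True))
    cpu_bus.attach(Device("CPU #2", is_master=True, has_cache=True))

    system_bus.attach(Device("device #2", is_master=True))
    system_bus.attach(Device("memory controller 16"))  # holds buffer 20
    system_bus.attach(Device("memory 17"))             # e.g. DRAM
    return cpu_bus, system_bus


if __name__ == "__main__":
    for bus in build_fig2():
        print(bus.name, "masters:", bus.master_count(), "valid:", bus.is_valid())
```

The `is_valid` check encodes only the one constraint stated in the text: any number of devices may be attached, as long as at least two of them are masters.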

[0017] In a snooping algorithm, CPU #0 (13) having a cache monitors (snoops 19)...
