Automated voice and speech labeling

Automated voice and speech labeling technology, applied in the field of speech data analysis systems, addresses the large amount of training required, the limitations on applying existing approaches, and the substantial barrier to adoption by new users, and aims to reduce the difficulty of speech analysis.

Embodiment Construction

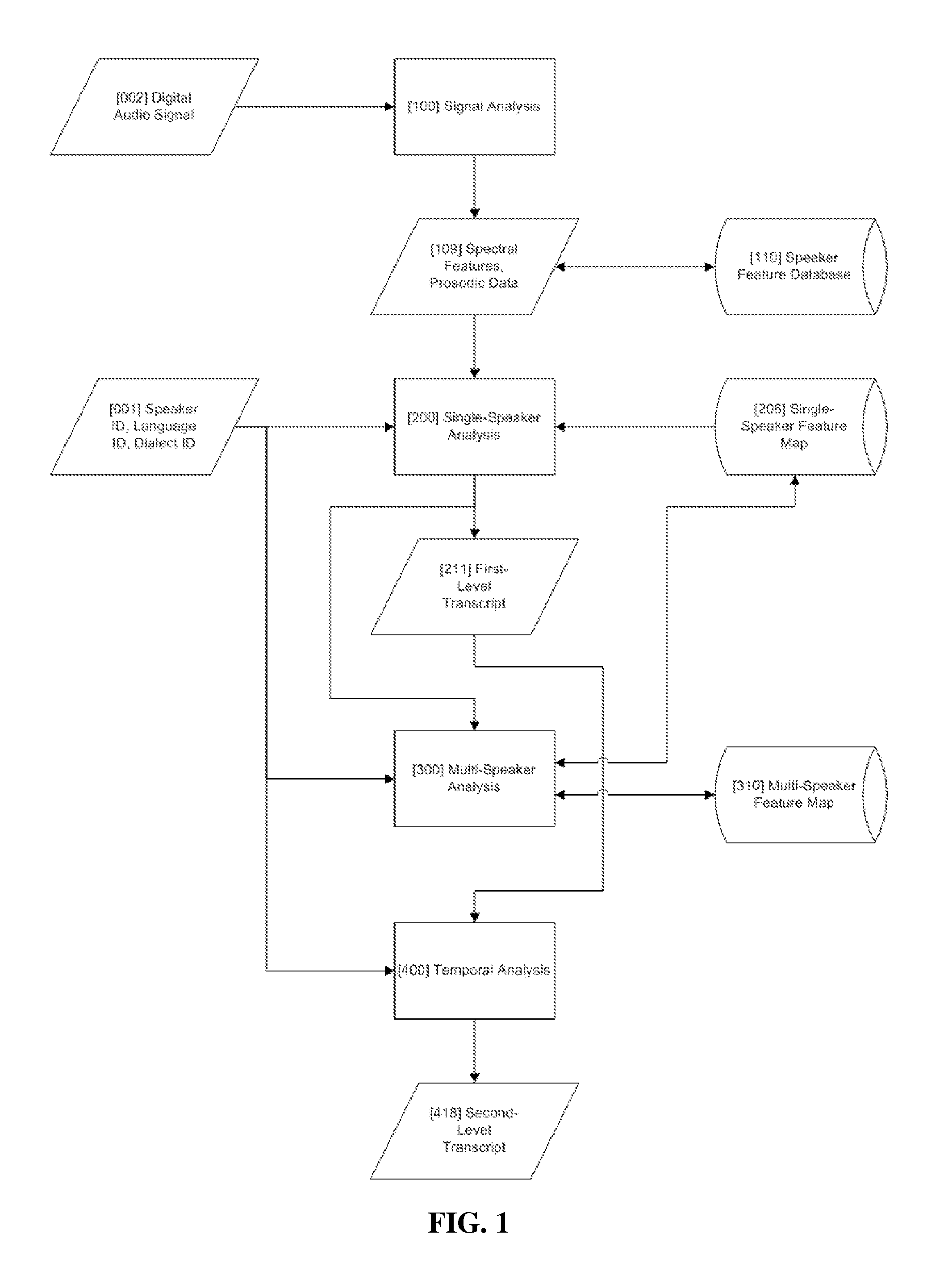

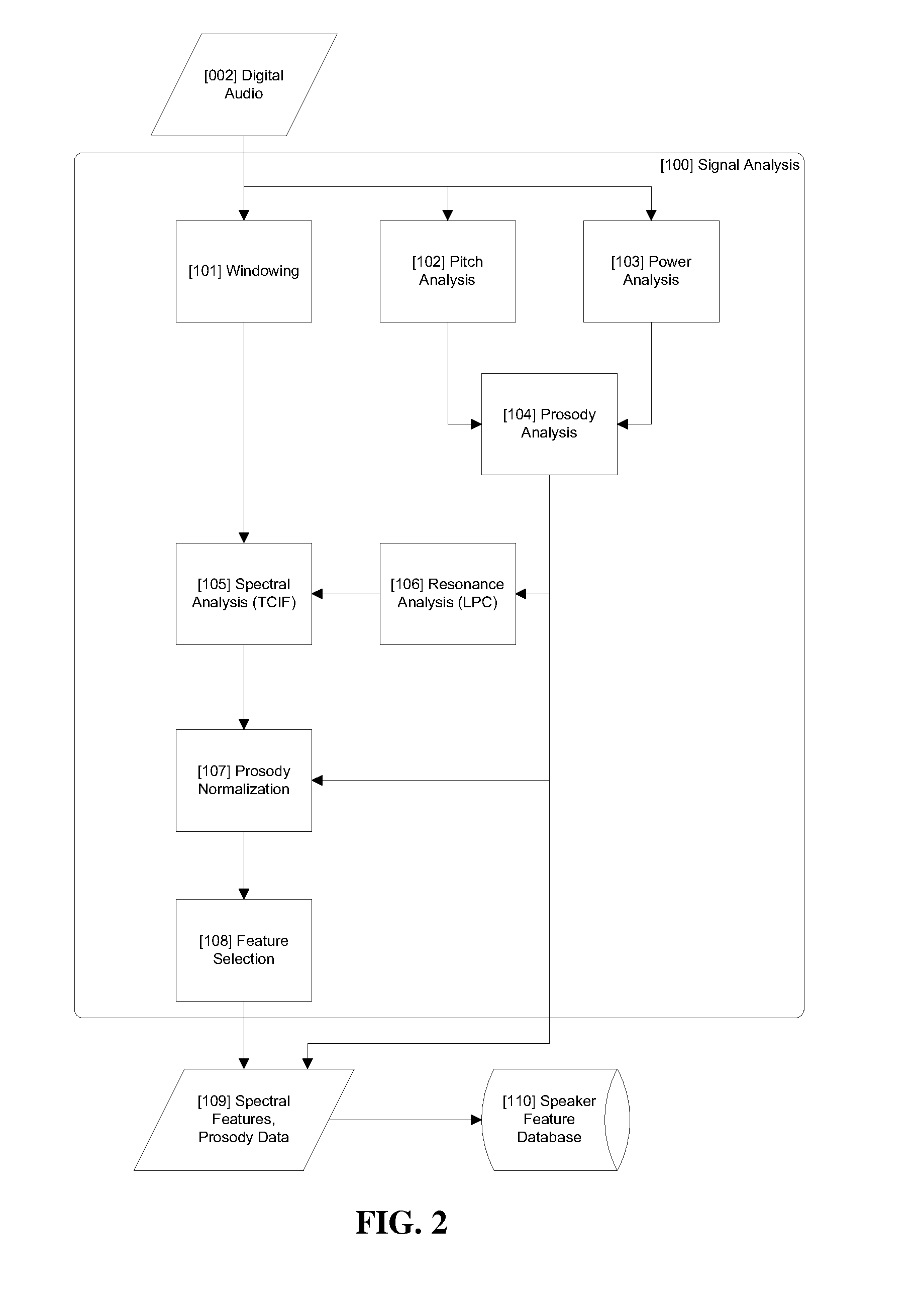

[0020] Referring now to the drawings, wherein like reference numerals refer to like parts throughout, FIG. 1 depicts a flowchart overview of a method of automated voice and speech labeling according to one embodiment of the present invention. The initial input comprises digital audio signal 2, which can be any digital audio signal but preferably comprises words spoken aloud by a human being or computerized device. As a pre-processing step, an analog waveform, for example, may be digitized according to any method of analog-to-digital conversion known in the art, including through the use of a commercially available analog-to-digital converter. A digital signal that is either received by the system for analysis or created by digitizing an analog signal can be further prepared for downstream analysis by any process of digital manipulation known in the art, including but not limited to storage of the signal in a database until it is needed or the system is ready to a...
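The digitization pre-processing step described above can be illustrated with a minimal sketch. It is not the patent's implementation: the function name `digitize`, the 16 kHz sample rate, the 16-bit depth, and the 440 Hz test tone are all assumptions chosen for illustration. The sketch samples a continuous waveform at fixed intervals and quantizes each sample to a signed integer, which is the basic operation an analog-to-digital converter performs.

```python
import math

def digitize(analog, duration_s, sample_rate_hz=16000, bit_depth=16):
    """Sample a continuous signal analog(t) and quantize it to signed integers.

    Hypothetical helper for illustration only; `analog` is any function of
    time (in seconds) whose values are expected to lie in [-1.0, 1.0].
    """
    max_level = 2 ** (bit_depth - 1) - 1          # 32767 for 16-bit audio
    n_samples = round(duration_s * sample_rate_hz)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz                    # time of the n-th sample
        value = max(-1.0, min(1.0, analog(t)))    # clip out-of-range input
        samples.append(round(value * max_level))  # quantize to an integer
    return samples

def tone(t):
    """Assumed stand-in for an analog waveform: a 440 Hz sine at half amplitude."""
    return 0.5 * math.sin(2 * math.pi * 440.0 * t)

# Digitize 10 ms of the test tone into 16-bit PCM sample values.
pcm = digitize(tone, duration_s=0.01)
```

The resulting list of integers plays the role of the digital audio signal that would then be stored or passed on for downstream analysis.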
