Automatic generation of training data for anomaly detection using other user's data samples

A training-data and data-sample technology, applied in the field of computer security. Given the importance of anomaly detection, it addresses problems such as the difficulty of detecting anomalies and the lack of labeled samples from real applications.

Embodiment Construction

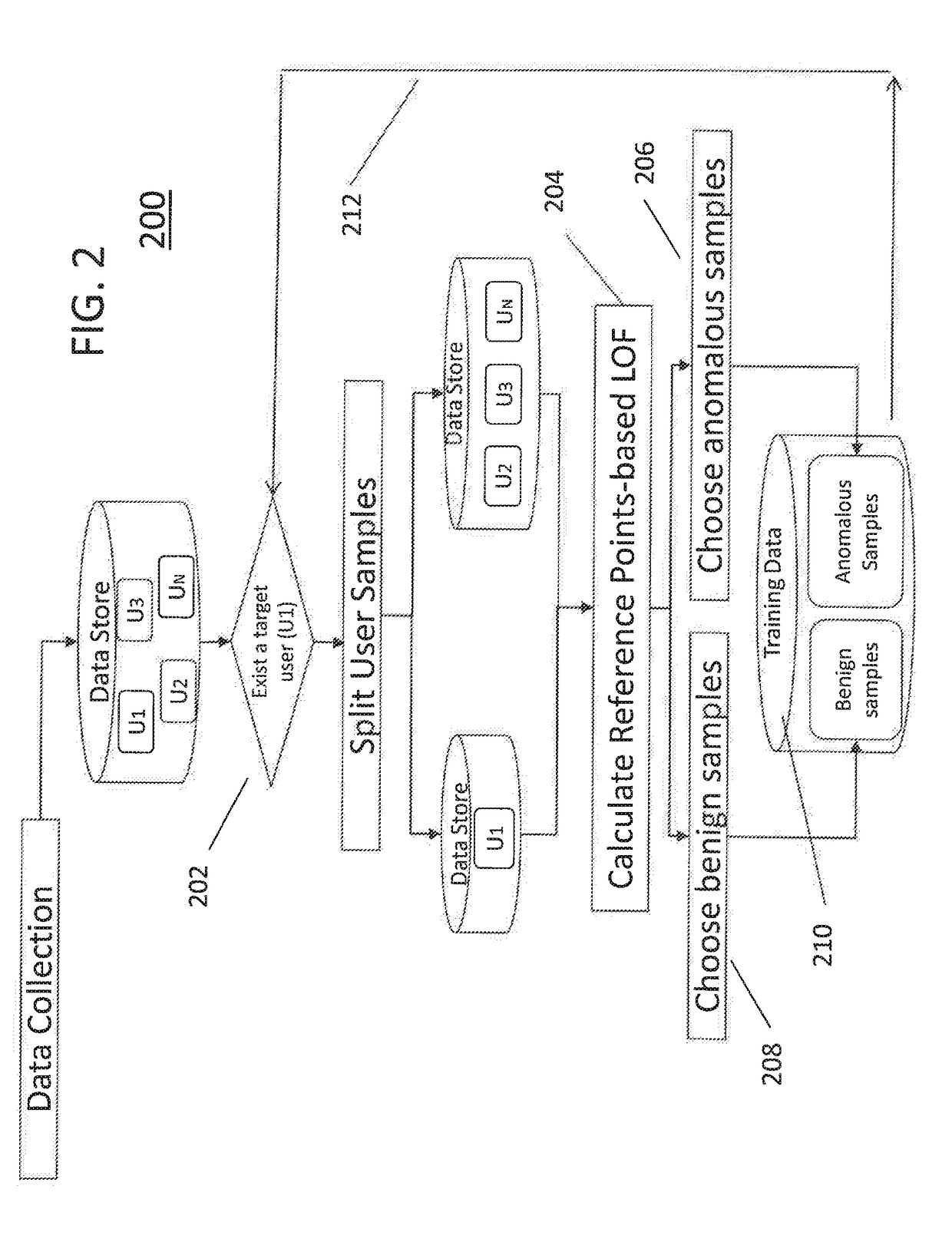

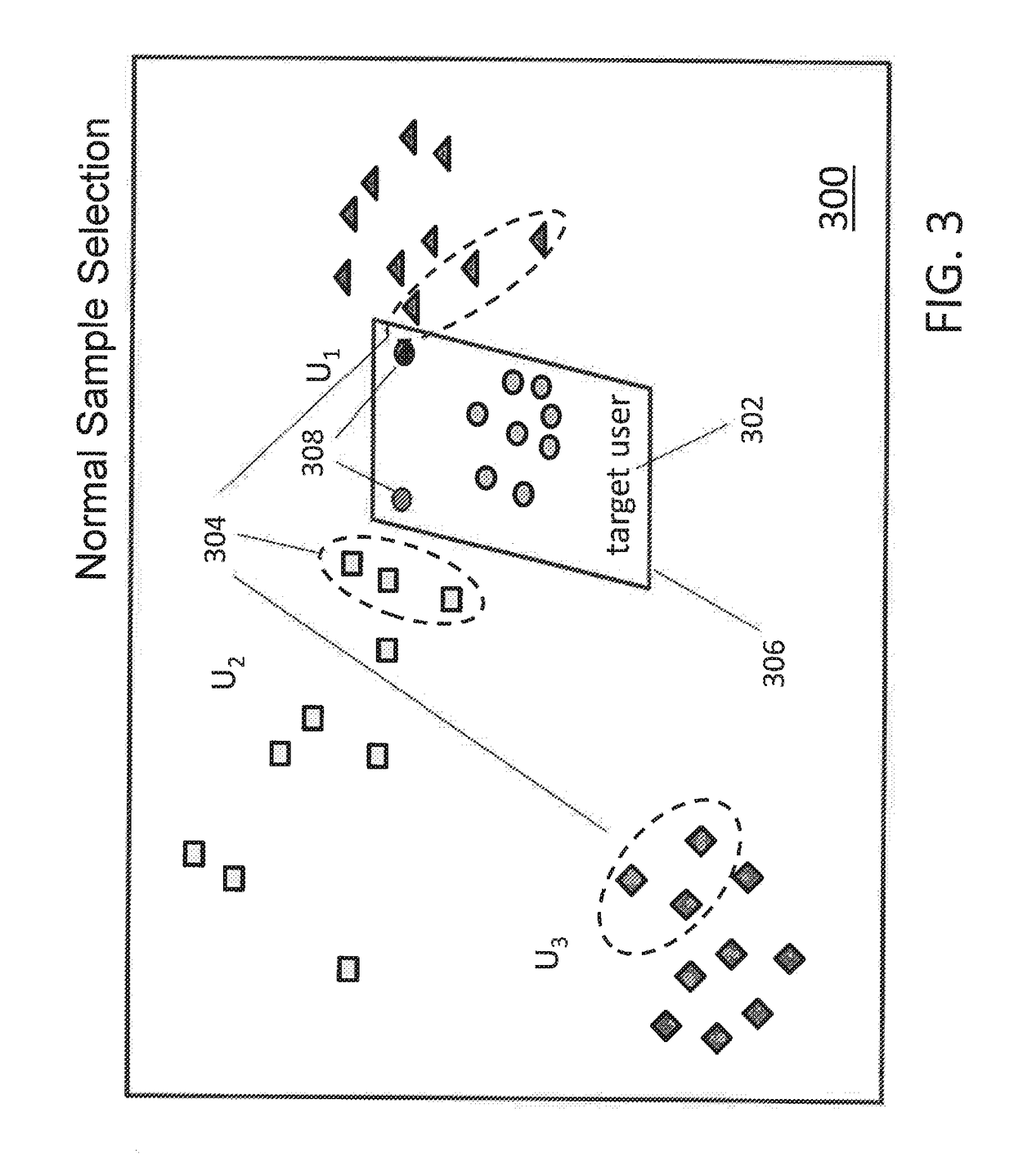

[0031] The present invention focuses on a method of providing abnormal-behavior samples for a target user. These samples can be used, for example, to develop a machine-learning (ML) classifier for a normal/abnormal behavioral pattern detector in a system or application shared by multiple users. According to the present invention, in such scenarios the target user's normal behavior is learned from training samples of the target user's own past behavior. The target user's possible abnormal behavioral patterns, in contrast, can be learned from other users' training samples, because other users can be expected to exhibit behavioral patterns quite different from the target user's.
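
The labeling idea in [0031] can be sketched as follows. This is a minimal illustration, not the patented implementation. It assumes that each user's behavior has already been encoded as fixed-length numeric feature vectors. The target user's own samples are labeled normal, and all other users' samples are labeled abnormal. A simple nearest-centroid classifier is used here as a stand-in for whatever ML classifier a real detector would use. The names `build_training_set`, `train_nearest_centroid`, and `predict` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]
Model = Tuple[List[float], List[float]]  # (normal centroid, abnormal centroid)

NORMAL, ABNORMAL = 0, 1


def build_training_set(
    samples_by_user: Dict[str, List[Vector]], target_user: str
) -> List[Tuple[Vector, int]]:
    """Label the target user's own past samples NORMAL and every other
    user's samples ABNORMAL (from the target user's point of view)."""
    if not samples_by_user.get(target_user):
        raise ValueError(f"no samples for target user {target_user!r}")
    training: List[Tuple[Vector, int]] = []
    for user, samples in samples_by_user.items():
        label = NORMAL if user == target_user else ABNORMAL
        training.extend((sample, label) for sample in samples)
    return training


def _centroid(vectors: List[Vector]) -> List[float]:
    """Component-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]


def _sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def train_nearest_centroid(training: List[Tuple[Vector, int]]) -> Model:
    """Stand-in classifier (an assumption): one centroid per class."""
    normal = [x for x, y in training if y == NORMAL]
    abnormal = [x for x, y in training if y == ABNORMAL]
    if not normal or not abnormal:
        raise ValueError("need samples from the target user and at least one other user")
    return _centroid(normal), _centroid(abnormal)


def predict(model: Model, x: Vector) -> int:
    """Return ABNORMAL if x lies closer to the other-users centroid."""
    normal_c, abnormal_c = model
    return ABNORMAL if _sq_dist(x, abnormal_c) < _sq_dist(x, normal_c) else NORMAL


if __name__ == "__main__":
    samples = {
        "alice": [[1.0, 1.0], [1.2, 0.9]],  # target user's own history
        "bob": [[5.0, 5.0]],                # other users supply abnormal examples
        "carol": [[6.0, 4.5]],
    }
    model = train_nearest_centroid(build_training_set(samples, "alice"))
    print(predict(model, [1.1, 1.0]))  # 0: resembles alice's own behavior
    print(predict(model, [5.2, 4.8]))  # 1: resembles other users' behavior
```

The point of the sketch is that no manually labeled anomalies are needed. The abnormal class for one user is simply everyone else's normal behavior. The same function can be called once per user to obtain a per-user training set.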

[0032] Standard anomaly detection techniques, such as statistical analysis or one-class classification, aim to rank new samples by their similarity to a model of the negative samples, assuming that all previously known samples are negative (benign). Many approaches use the distance or density of the points as a measure of similarity, in ...
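
The distance-based baseline mentioned in [0032] can be illustrated with a k-nearest-neighbor score. This is one common distance measure, chosen here for illustration only; the source does not specify which measure is meant. All known samples are treated as benign. Each new sample is scored by its mean distance to its `k` nearest known samples, and new samples are ranked from least to most similar. The function names and the default `k=1` are assumptions.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def knn_anomaly_score(x: Vector, known: List[Vector], k: int = 1) -> float:
    """Mean Euclidean distance from x to its k nearest known (benign) samples.
    A larger score means x is less similar to the benign data."""
    if not known:
        raise ValueError("need at least one known sample")
    distances = sorted(math.dist(x, s) for s in known)
    k = max(1, min(k, len(distances)))  # clamp k to the available samples
    return sum(distances[:k]) / k


def rank_by_anomaly(
    new_samples: List[Vector], known: List[Vector], k: int = 1
) -> List[Vector]:
    """Order new samples from most to least anomalous."""
    return sorted(new_samples, key=lambda x: knn_anomaly_score(x, known, k), reverse=True)


if __name__ == "__main__":
    benign = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # all assumed negative
    incoming = [[0.1, 0.1], [5.0, 5.0], [2.0, 2.0]]
    print(rank_by_anomaly(incoming, benign))
    # [[5.0, 5.0], [2.0, 2.0], [0.1, 0.1]]
```

Such a scorer only models the negative (benign) class. This is the limitation that the approach in [0031] addresses by borrowing other users' samples as examples of the abnormal class.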
