Methods and systems for representing a pre-modeled object within virtual reality data

This technology, applied to methods and systems for representing pre-modeled objects within virtual reality data, addresses the problem of inaccuracies entering the resultant virtual reality data: rendered depictions of objects may become distorted, lost, or otherwise reproduced inaccurately, and such inaccuracies may distract users.

Embodiment Construction

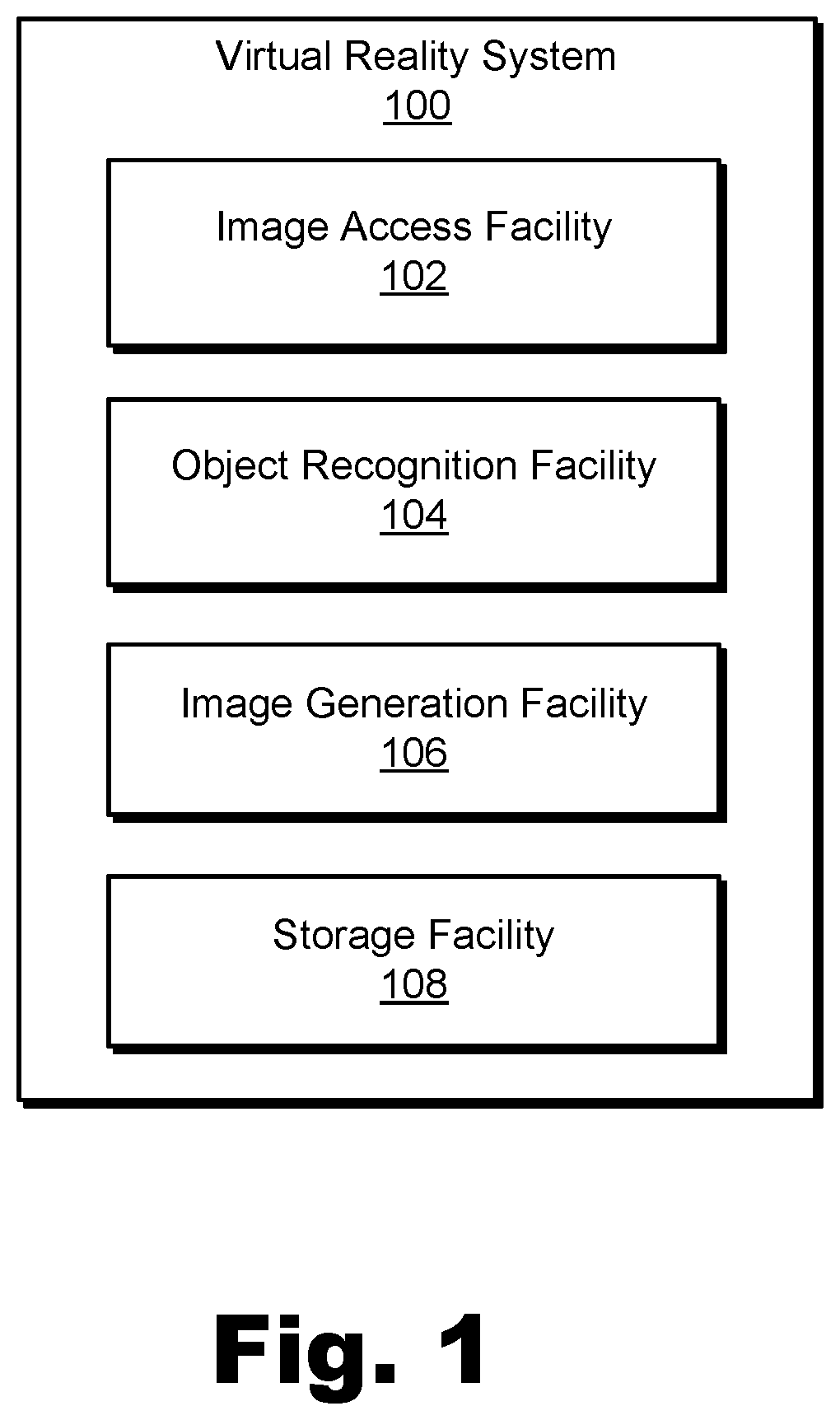

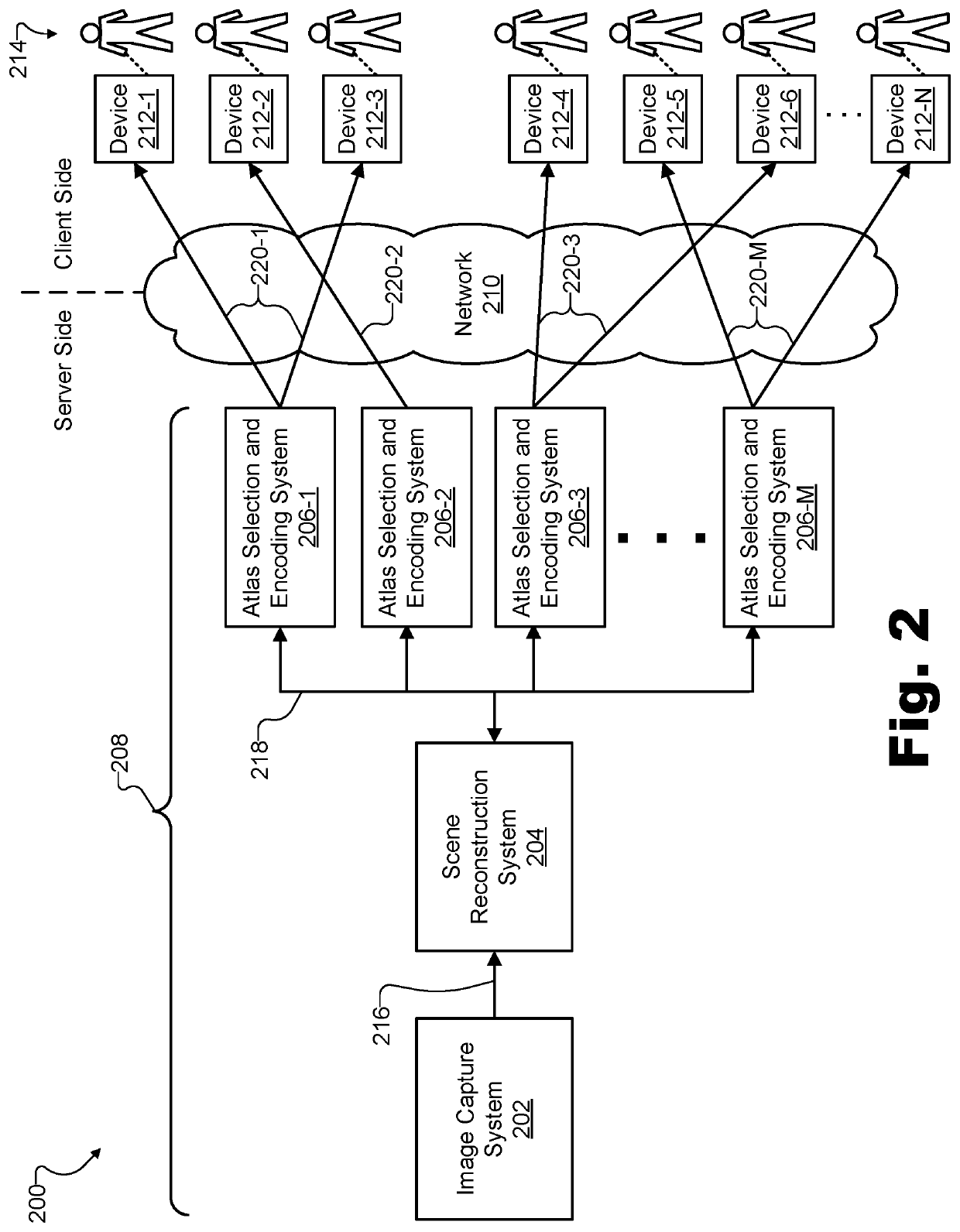

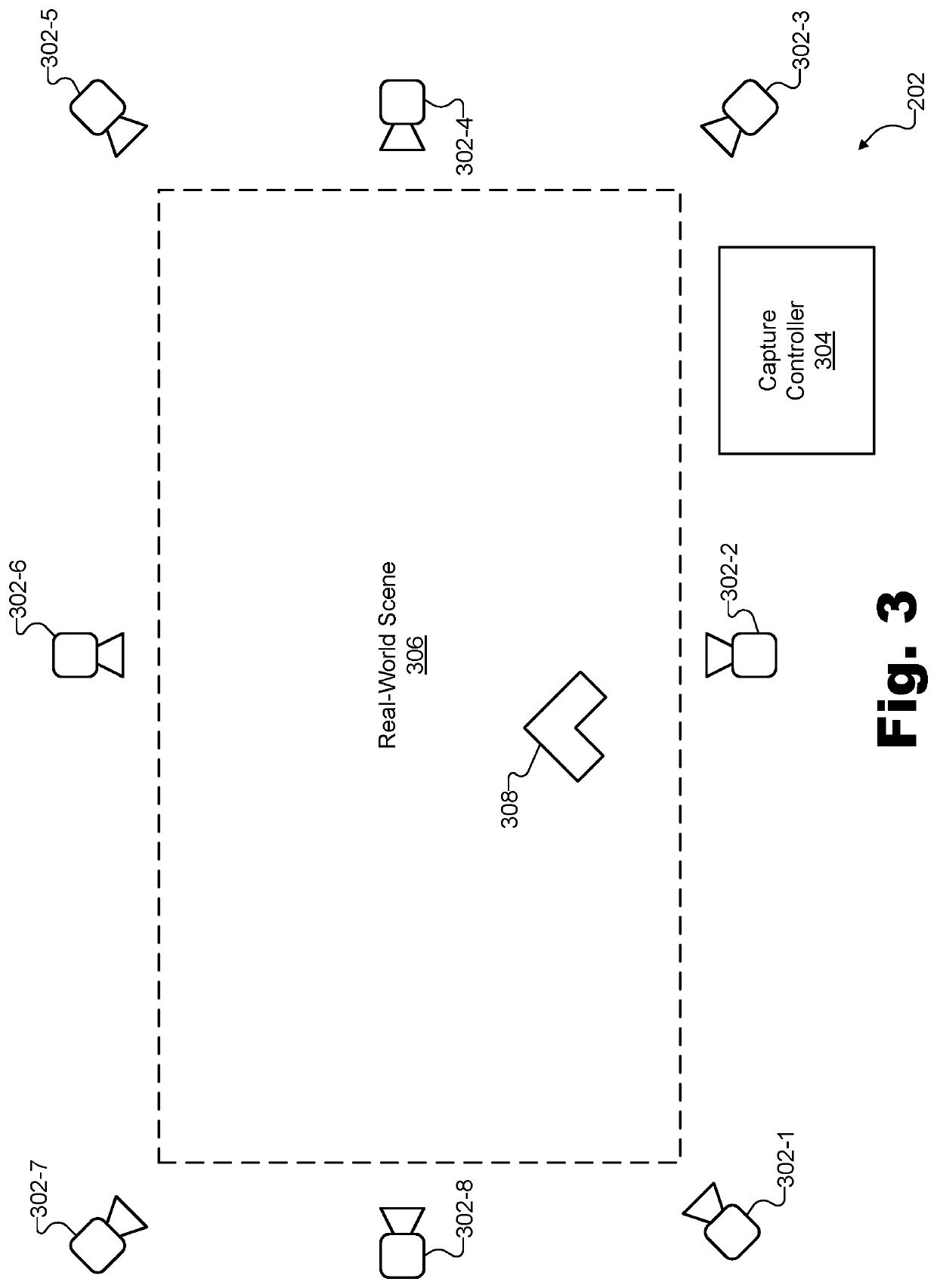

[0019] Methods and systems for representing a pre-modeled object within virtual reality data are described herein. For example, in certain implementations, a virtual reality system may access (e.g., receive, retrieve, load, transfer, etc.) a first image dataset and a second image dataset. An image dataset may be implemented by one or more files, data streams, and/or other types of data structures that contain data representative of an image (e.g., a two-dimensional image that has been captured or rendered in any of the ways described herein). The first image dataset accessed by the virtual reality system may be representative of a first captured image depicting a real-world scene from a first vantage point at a particular time, and the second image dataset may be representative of a second captured image depicting the real-world scene from a second vantage point distinct from the first vantage point at the particular time. These image datasets may be accessed from any suitable source...
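As an illustrative sketch only, the relationship between the first and second image datasets might be modeled as follows. The `ImageDataset` class, its field names, and the `is_valid_pair` helper are hypothetical and do not appear in the source; the sketch simply assumes that each dataset records which scene it depicts, the vantage point it was captured from, and its capture time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageDataset:
    """Hypothetical container for data representative of one 2D image."""
    scene_id: str        # assumed identifier for the real-world scene
    vantage_point: str   # assumed label for the capture position
    capture_time: float  # assumed timestamp, in seconds
    pixels: bytes        # raw image data (file, stream, or other source)


def is_valid_pair(first: ImageDataset, second: ImageDataset) -> bool:
    """Check the relationship described in the paragraph above:
    same scene, same capture time, distinct vantage points."""
    return (
        first.scene_id == second.scene_id
        and first.capture_time == second.capture_time
        and first.vantage_point != second.vantage_point
    )


# Two captures of the same scene at the same time from different positions.
first = ImageDataset("scene-a", "vantage-1", 12.0, b"\x00" * 4)
second = ImageDataset("scene-a", "vantage-2", 12.0, b"\xff" * 4)
pair_ok = is_valid_pair(first, second)
```

A real system would likely compare capture times within a tolerance rather than for exact equality; exact comparison is used here only to keep the sketch short.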
