36 results about "Vantage point" patented technology

Eye-tap for electronic newsgathering, documentary video, photojournalism, and personal safety

Inactive | US6614408B1 | Augment and diminish | Easy to catch | Television system details | Cathode-ray tube indicators | Communications media | Computer science

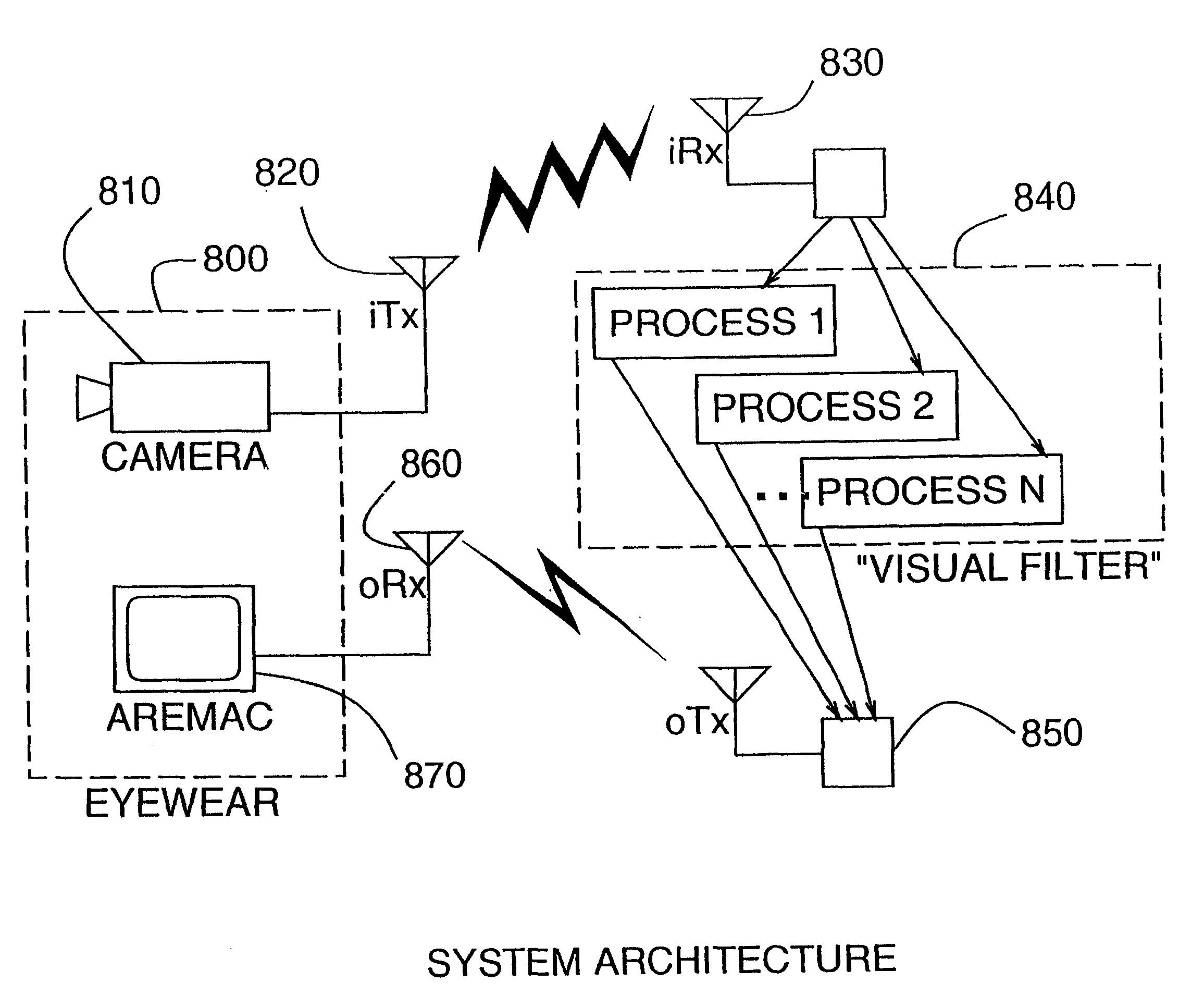

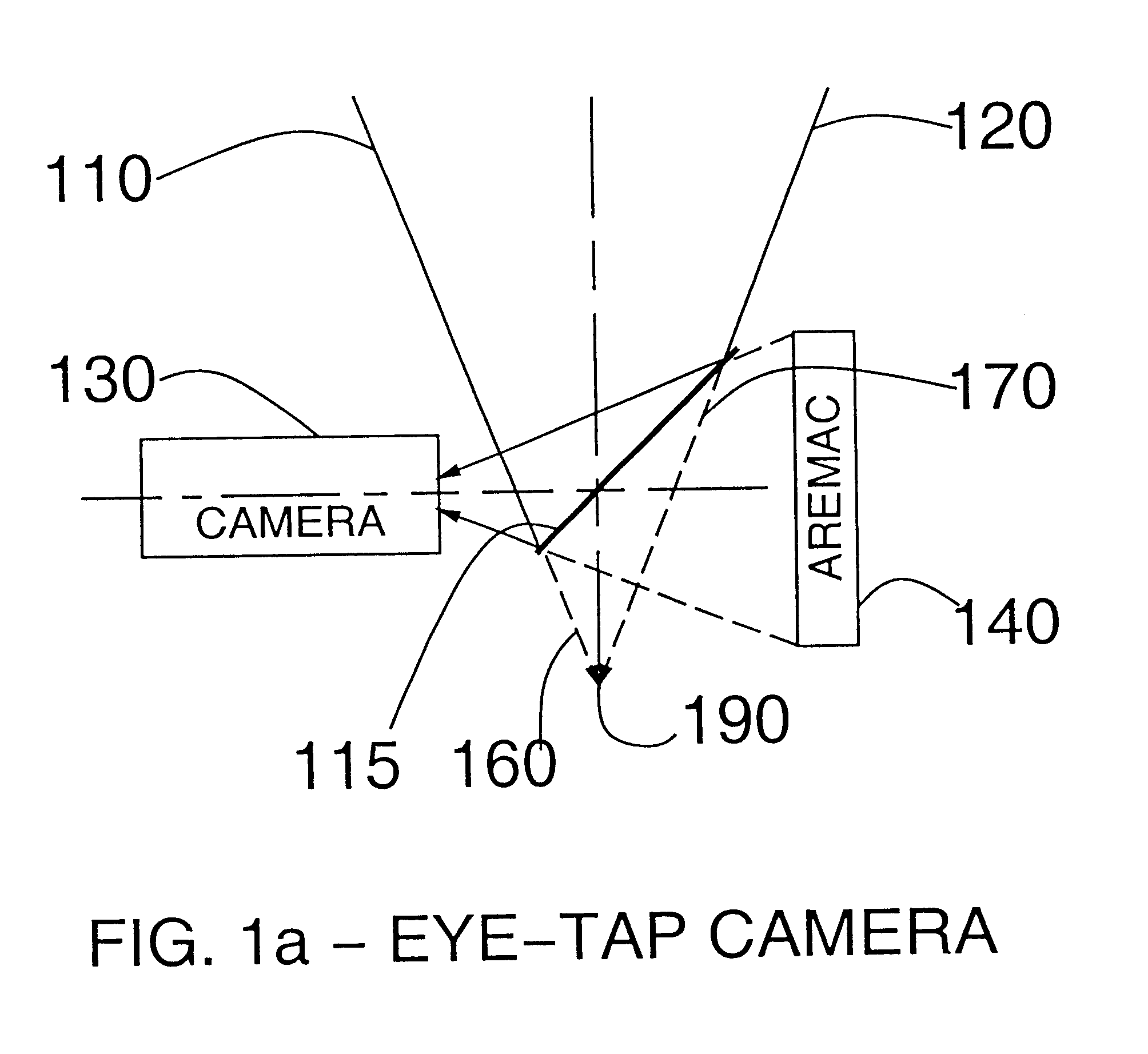

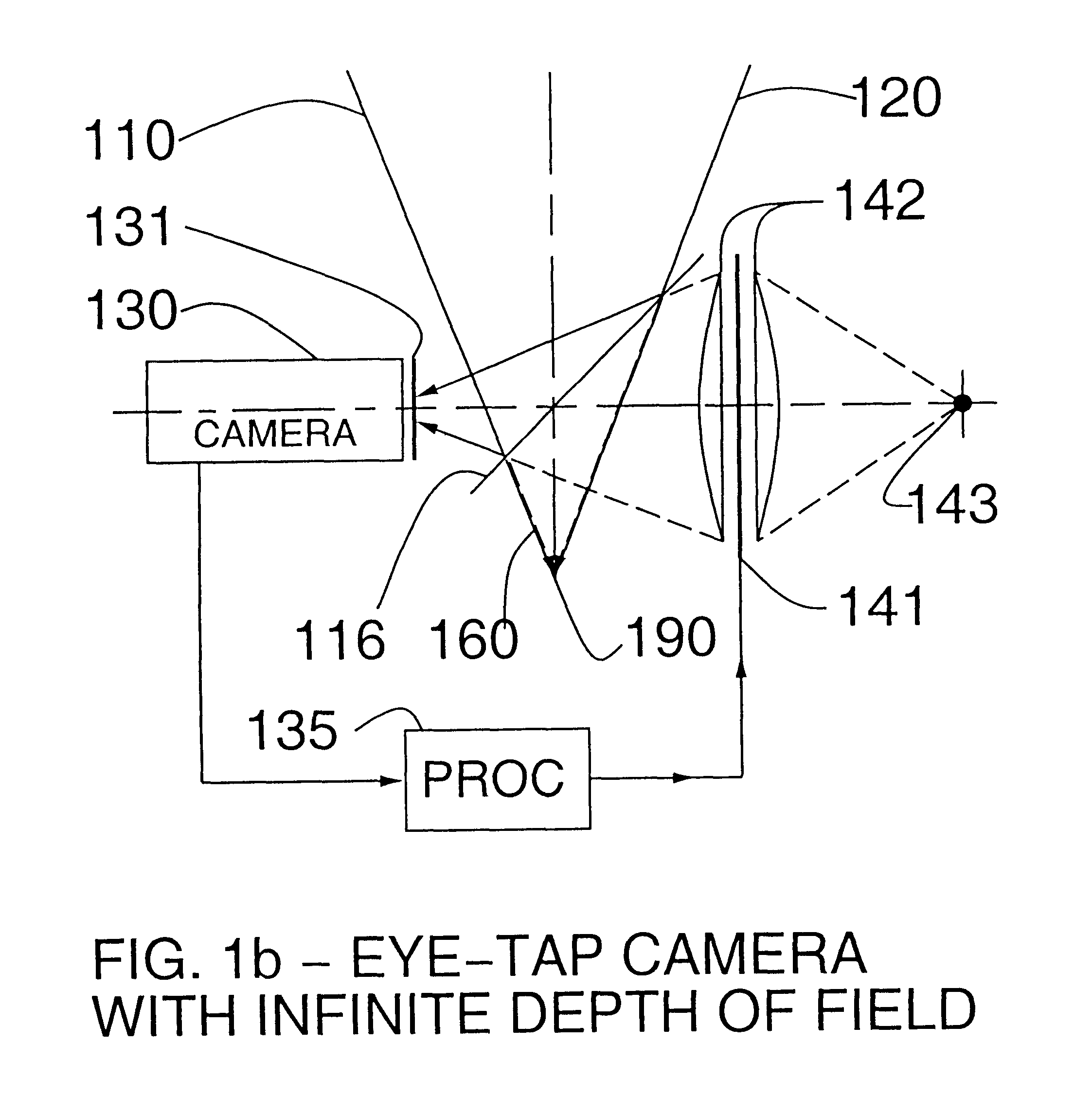

A novel system for a new kind of electronic news gathering and videography is described. In particular, a camera that captures light passing through the center of a lens of an eye of the user is described. Such an electronic newsgathering system allows the eye itself to, in effect, function as a camera. In wearable embodiments of the invention, a journalist wearing the apparatus becomes, after adaptation, an entity that seeks, without conscious thought or effort, an optimal point of vantage and camera orientation. Moreover, the journalist can easily become part of a human intelligence network, and draw upon the intellectual resources and technical photographic skills of a large community. Because of the journalist's ability to constantly see the world through the apparatus of the invention, which may also function as an image enhancement device, the apparatus behaves as a true extension of the journalist's mind and body, giving rise to a new genre of documentary video. In this way, it functions as a seamless communications medium that uses a reality-based user-interface.

Owner:MANN W STEPHEN G

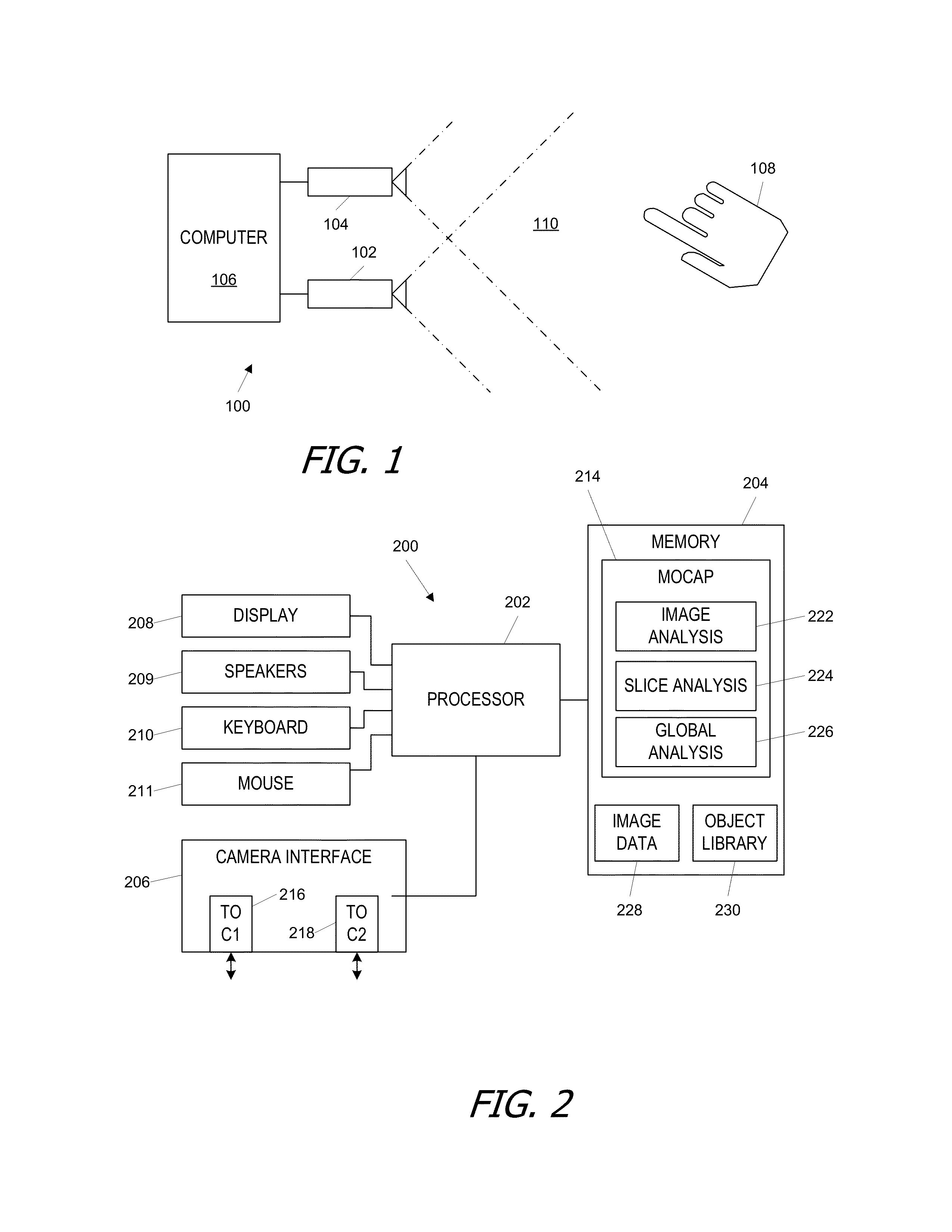

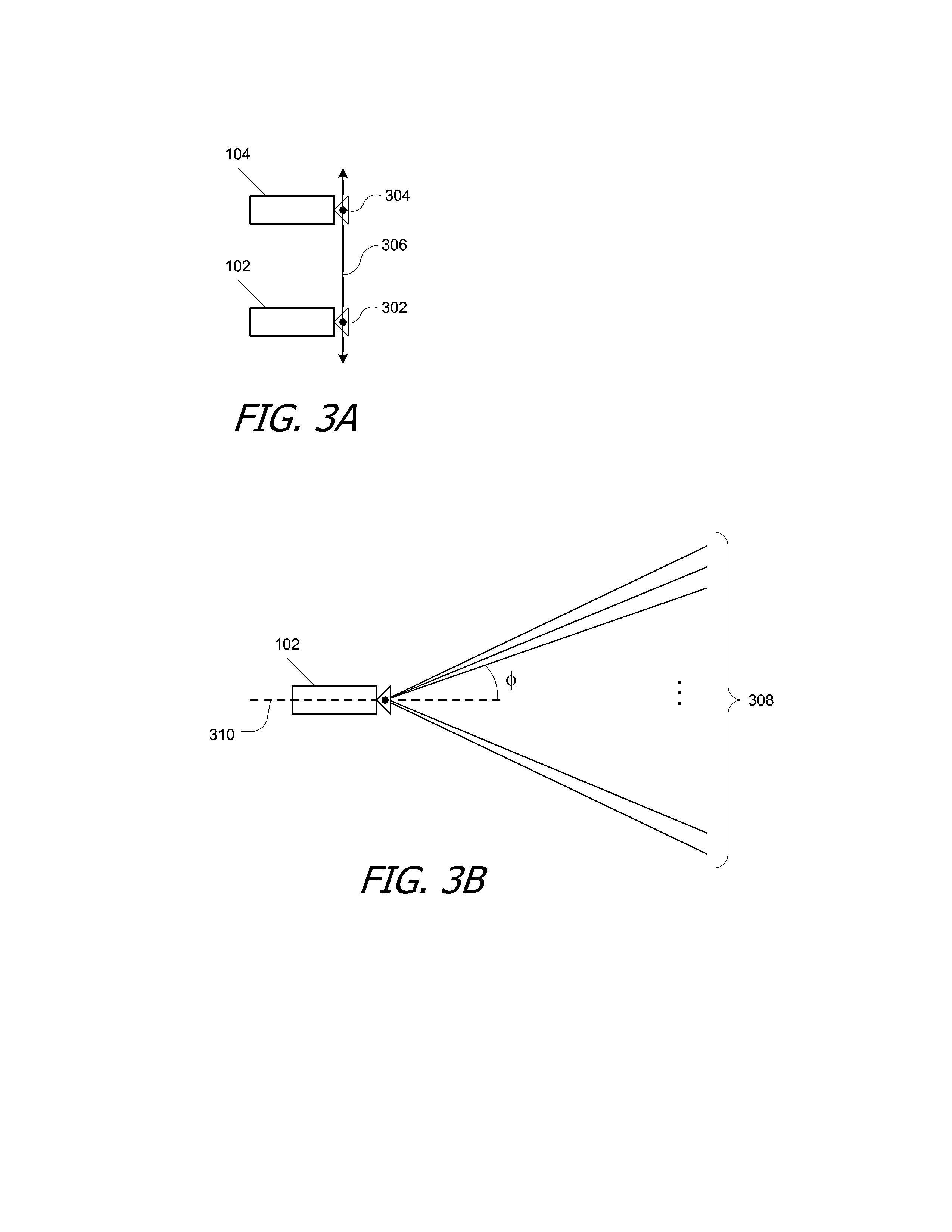

Motion capture using cross-sections of an object

Inactive | US20130182079A1 | Image enhancement | Details involving processing steps | Ellipse | Three-dimensional space

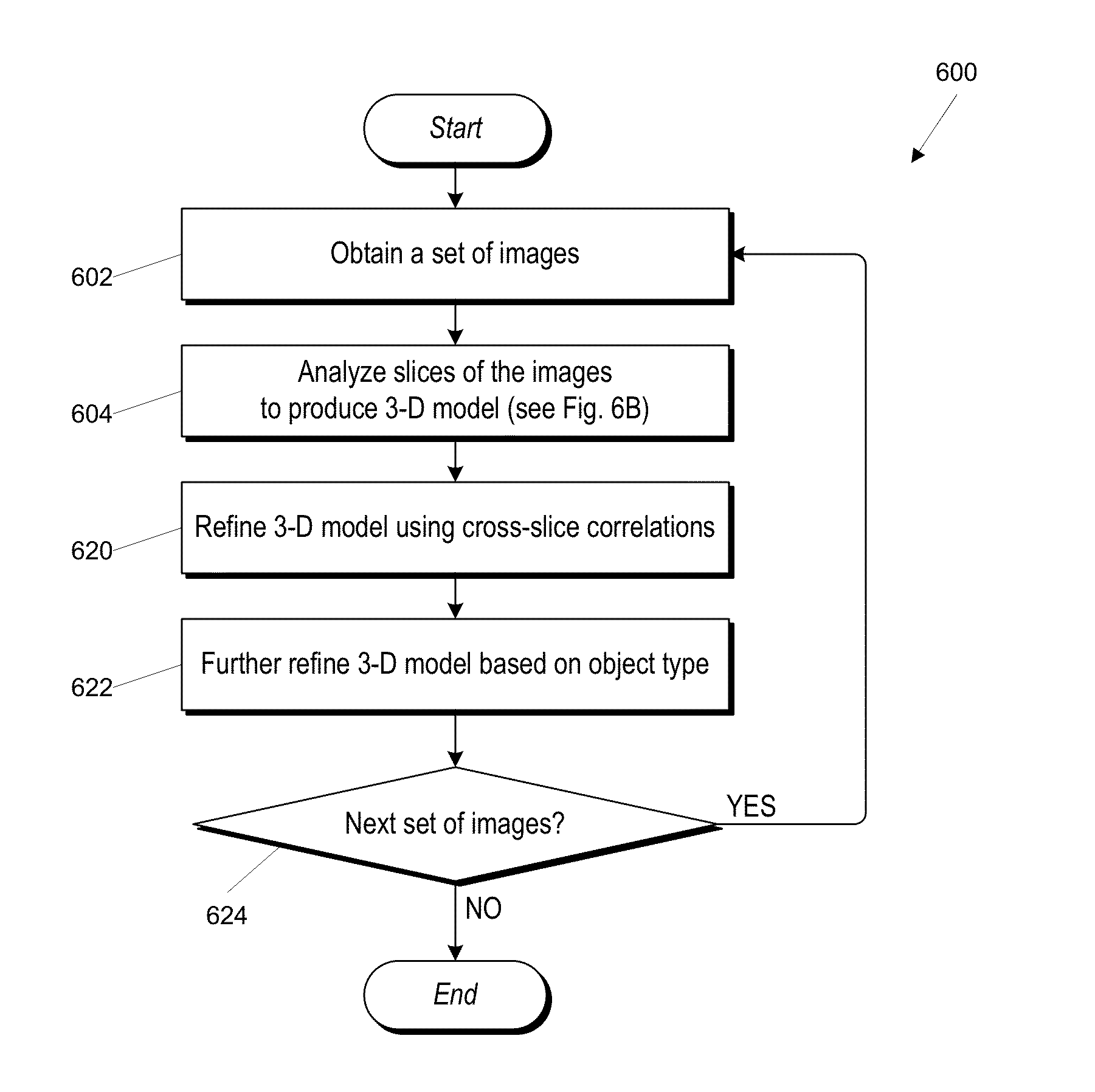

An object's position and / or motion in three-dimensional space can be captured. For example, a silhouette of an object as seen from a vantage point can be used to define tangent lines to the object in various planes (“slices”). From the tangent lines, the cross section of the object is approximated using a simple closed curve (e.g., an ellipse). Alternatively, locations of points on an object's surface in a particular slice can also be determined directly, and the object's cross-section in the slice can be approximated by fitting a simple closed curve to the points. Positions and cross sections determined for different slices can be correlated to construct a 3D model of the object, including its position and shape. A succession of images can be analyzed to capture motion of the object.

Owner:ULTRAHAPTICS IP TWO LTD

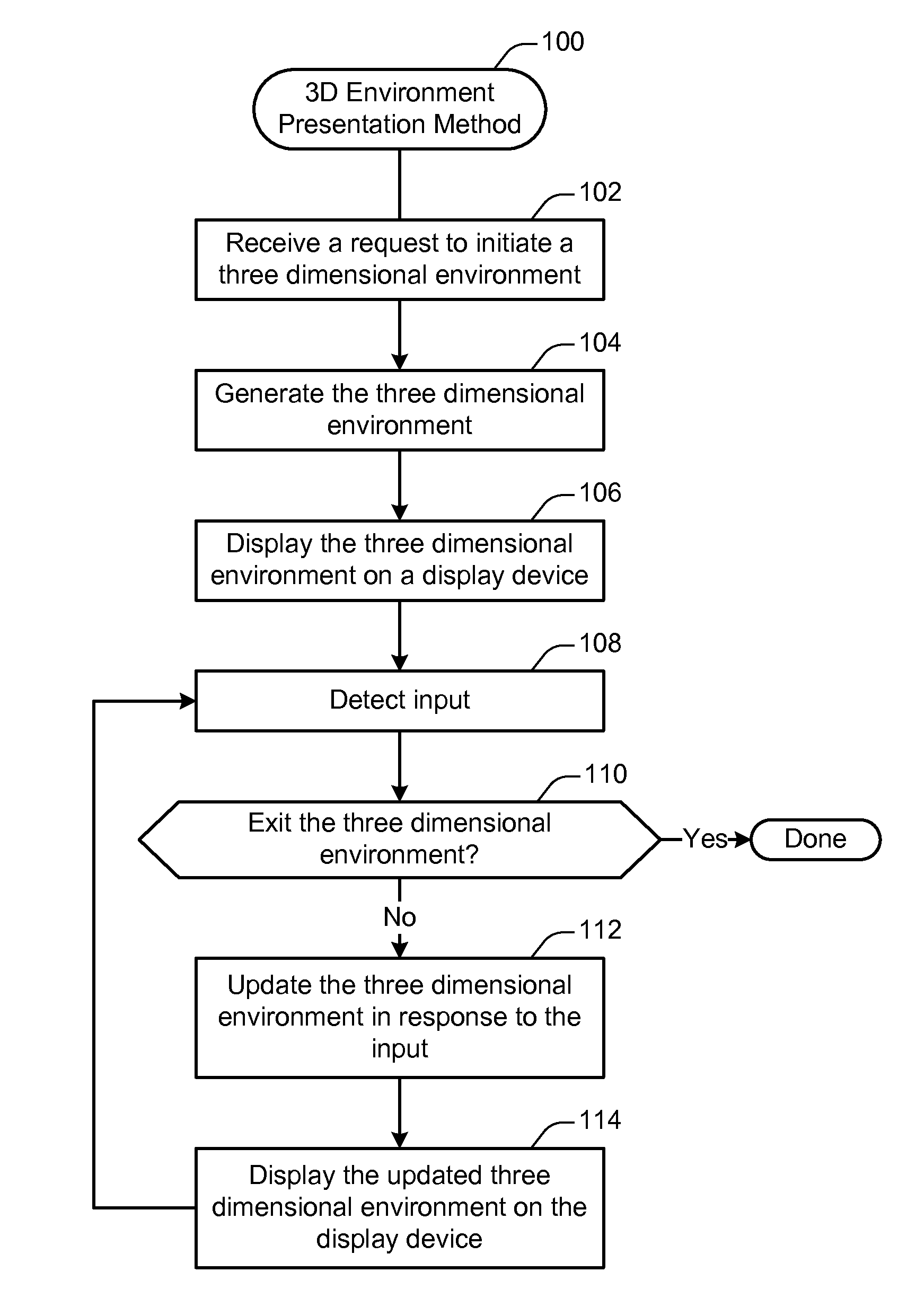

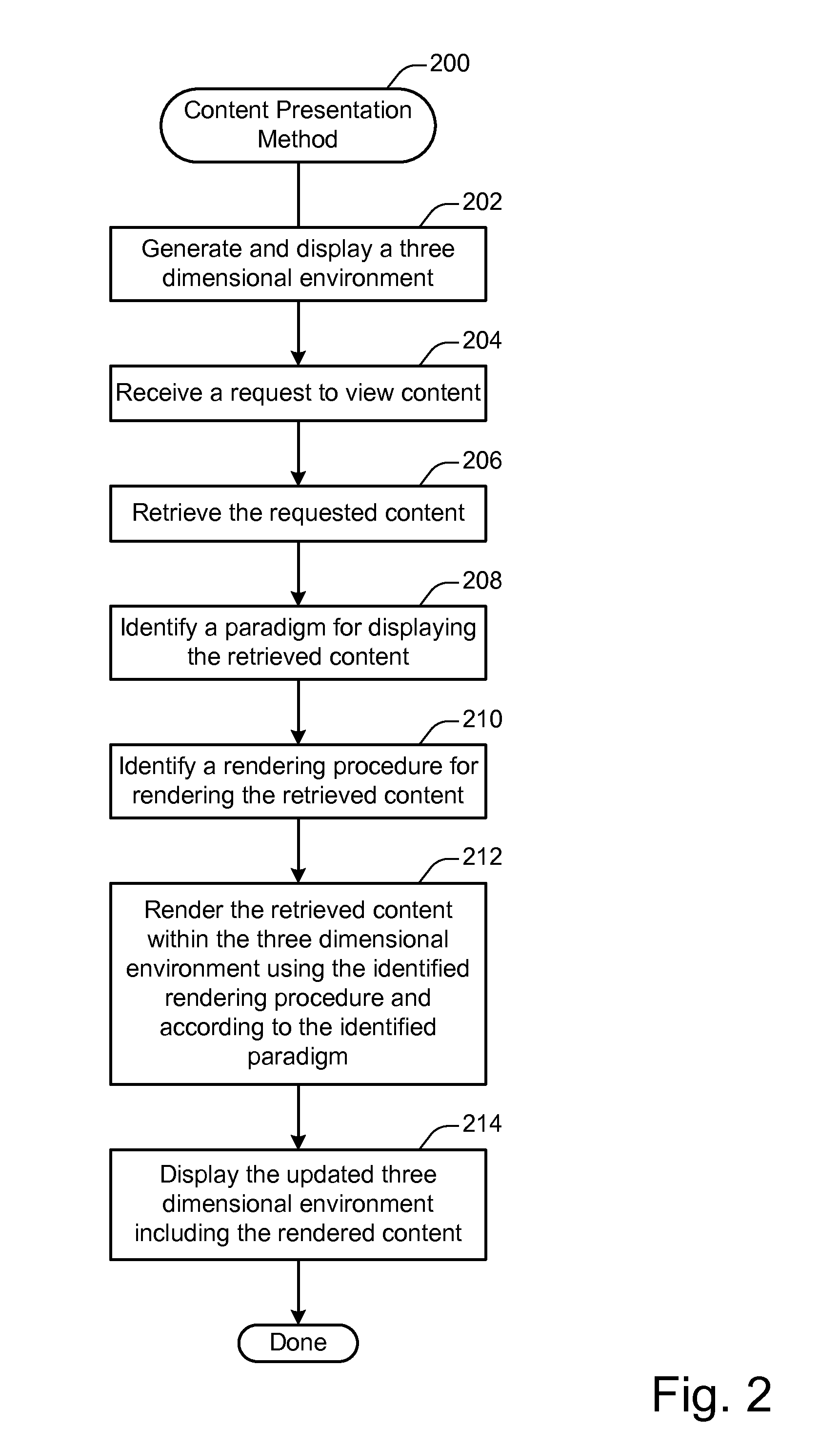

Content Presentation in a Three Dimensional Environment

Inactive | US20110169927A1 | Stereoscopic systems | Input/output processes for data processing | Display device | Media content

Systems, devices, and methods for displaying media content are described. In some embodiments, media content for display in a virtual three dimensional environment may be identified. The virtual three dimensional environment including a representation of the identified media content may be generated. The generated virtual three dimensional environment may be displayed on a display device in communication with the first computing device. The virtual three dimensional environment may be displayed from a vantage point at a first location within the virtual three dimensional environment. Input modifying the virtual three dimensional environment may be detected. The virtual three dimensional environment may be updated in accordance with the detected input. The updated virtual three dimensional environment may be displayed on the display device.

Owner:HALOSNAP STUDIOS

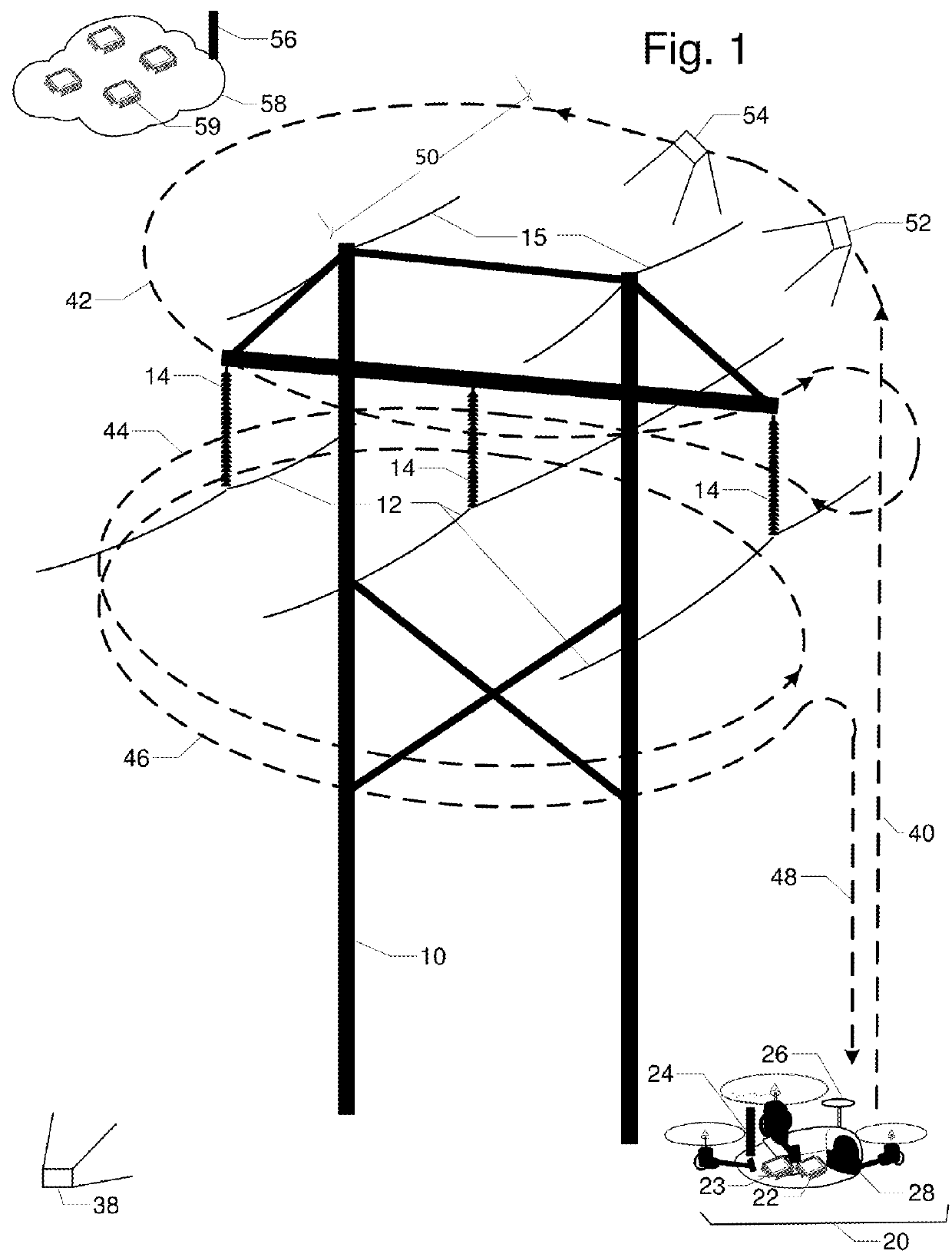

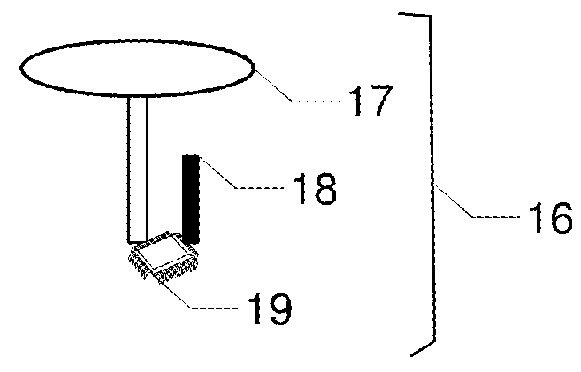

Flight Planning for Unmanned Aerial Tower Inspection with Long Baseline Positioning

Active | US20180095478A1 | Accurate calculation | Full coverage | Satellite radio beaconing | Electromagnetic wave reradiation | Transmission tower | Radio equipment

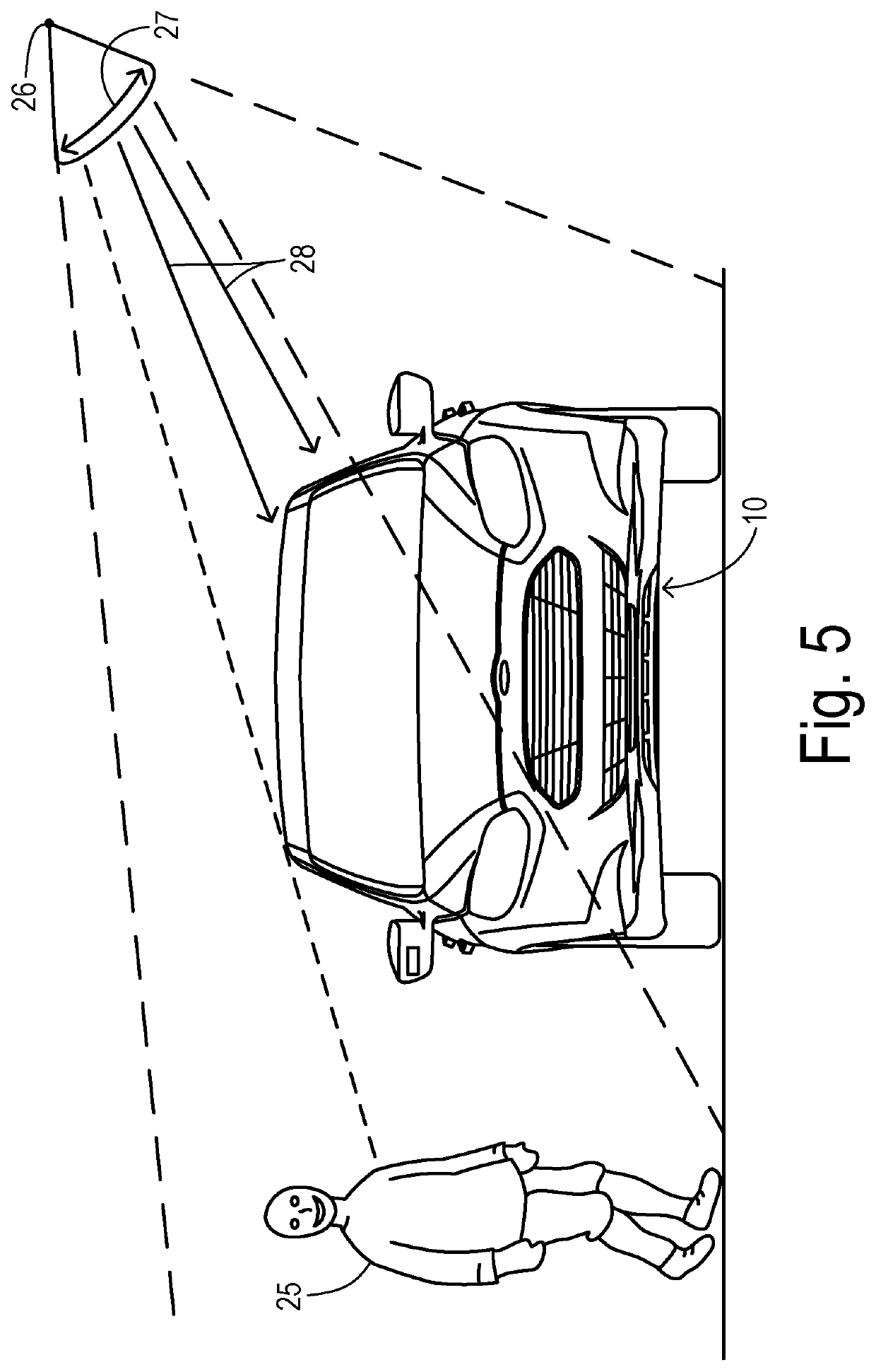

FIG. 1 is a perspective view of transmission tower 10, phase conductors 12, insulators 14, and shield wires 15. They are to be inspected by unmanned aerial vehicle UAV 20 with embedded processor and memory 22, radio 24, location rover 26, and camera 28. Continuously operating reference station 16 has GNSS antenna 17, GNSS receiver 19, and radio 18. The relative location between UAV 20 and reference station 16 can be accurately calculated using location corrections sent by radio 18 to radio 24. Camera 28 on UAV 20 is first used to capture two or more orientation images 38 and 39 of tower 10; lines 12 and 15; and insulators 14 from different vantage points. Terrestrial or close-range photogrammetry techniques are used to create a three dimensional model of tower 10; lines 12 and 15; and insulators 14. Based on inspection resolution and safety objectives, a standoff distance 50 is determined. Then a flight path with segments for ascent 40, one or more loops 42, 44, 46, and a descent 48 is designed to ensure full inspection coverage via inspection images like 52 and 54.

Owner:VAN CRUYNINGEN IZAK
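The ascent, loop, and descent segments described in this abstract can be illustrated with a minimal Python sketch. It is not the patented planner: the circular loop shape, the fixed number of waypoints per loop, the local metric coordinate frame, and the names `loop_waypoints` and `plan_flight` are all assumptions made for illustration, and the standoff distance is simply taken as an input rather than derived from resolution and safety objectives.

```python
import math


def loop_waypoints(center_x, center_y, altitude, standoff, count):
    """Return `count` waypoints on a horizontal circle of radius `standoff`.

    Assumption: each inspection loop is a circle centered on the tower axis.
    """
    points = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        x = center_x + standoff * math.cos(angle)
        y = center_y + standoff * math.sin(angle)
        points.append((x, y, altitude))
    return points


def plan_flight(center, standoff, loop_altitudes, points_per_loop=8):
    """Build a path: ground start, one closed loop per altitude, return to start."""
    center_x, center_y = center
    # Hypothetical launch point: on the ground at the standoff distance.
    start = (center_x + standoff, center_y, 0.0)
    path = [start]
    for altitude in loop_altitudes:
        loop = loop_waypoints(center_x, center_y, altitude, standoff, points_per_loop)
        path.extend(loop)
        path.append(loop[0])  # close the loop before climbing to the next one
    path.append(start)  # descent back to the launch point
    return path


# Three loops at hypothetical altitudes, 10 m away from a tower at the origin.
path = plan_flight((0.0, 0.0), standoff=10.0, loop_altitudes=[15.0, 30.0, 45.0])
```

Every waypoint in this sketch stays at the standoff distance from the tower axis, which is the property the abstract ties to inspection resolution and safety.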

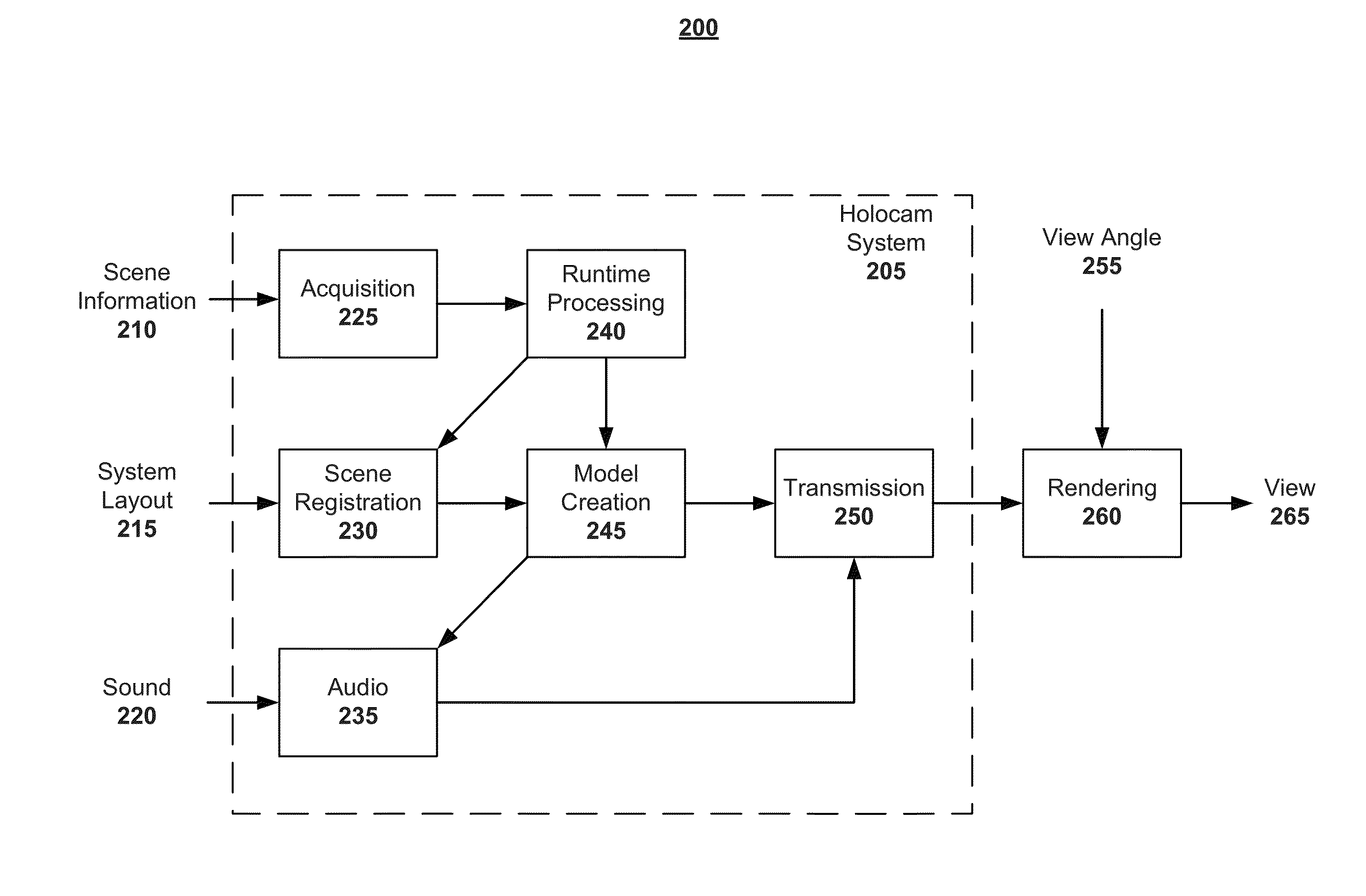

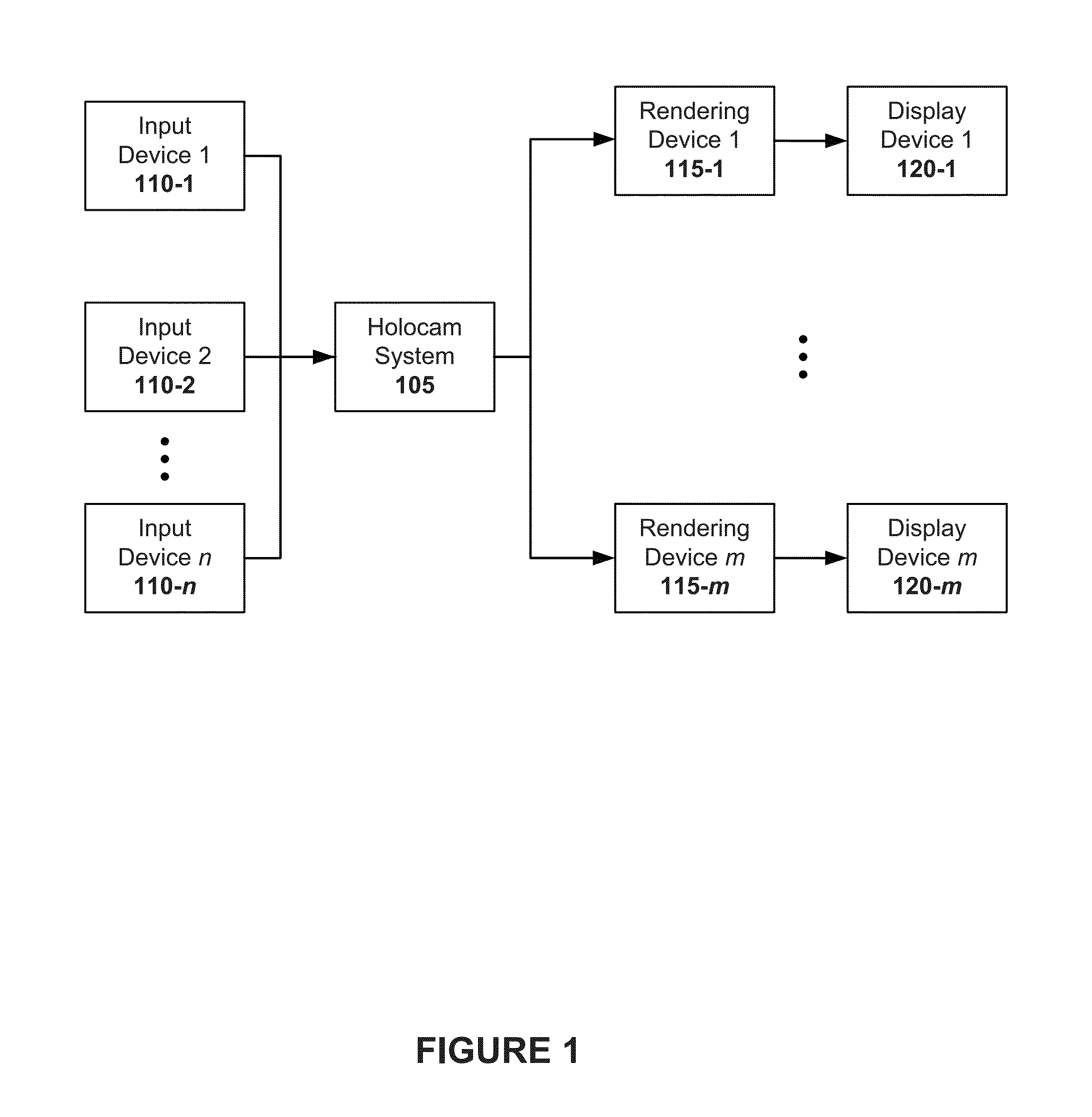

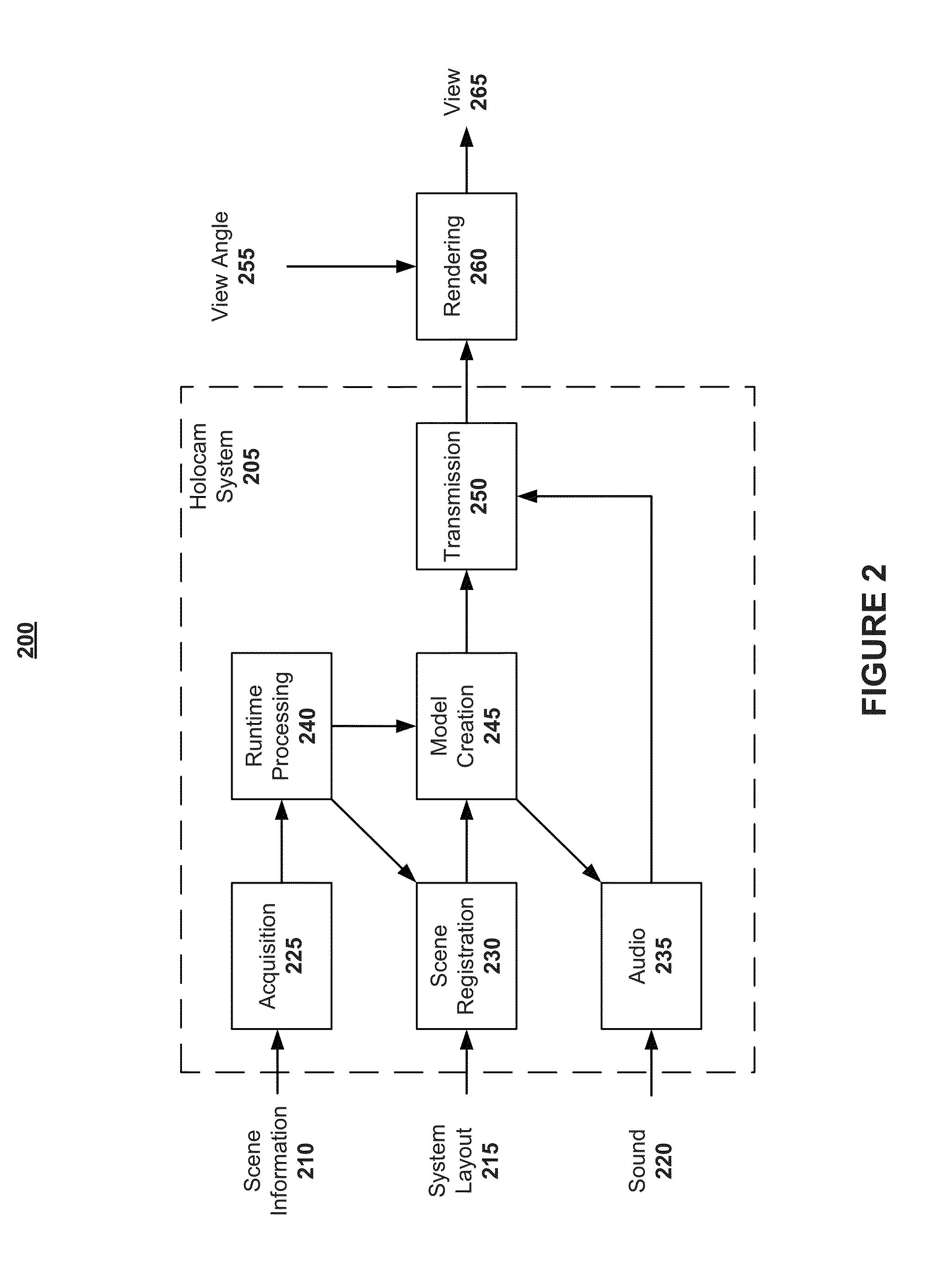

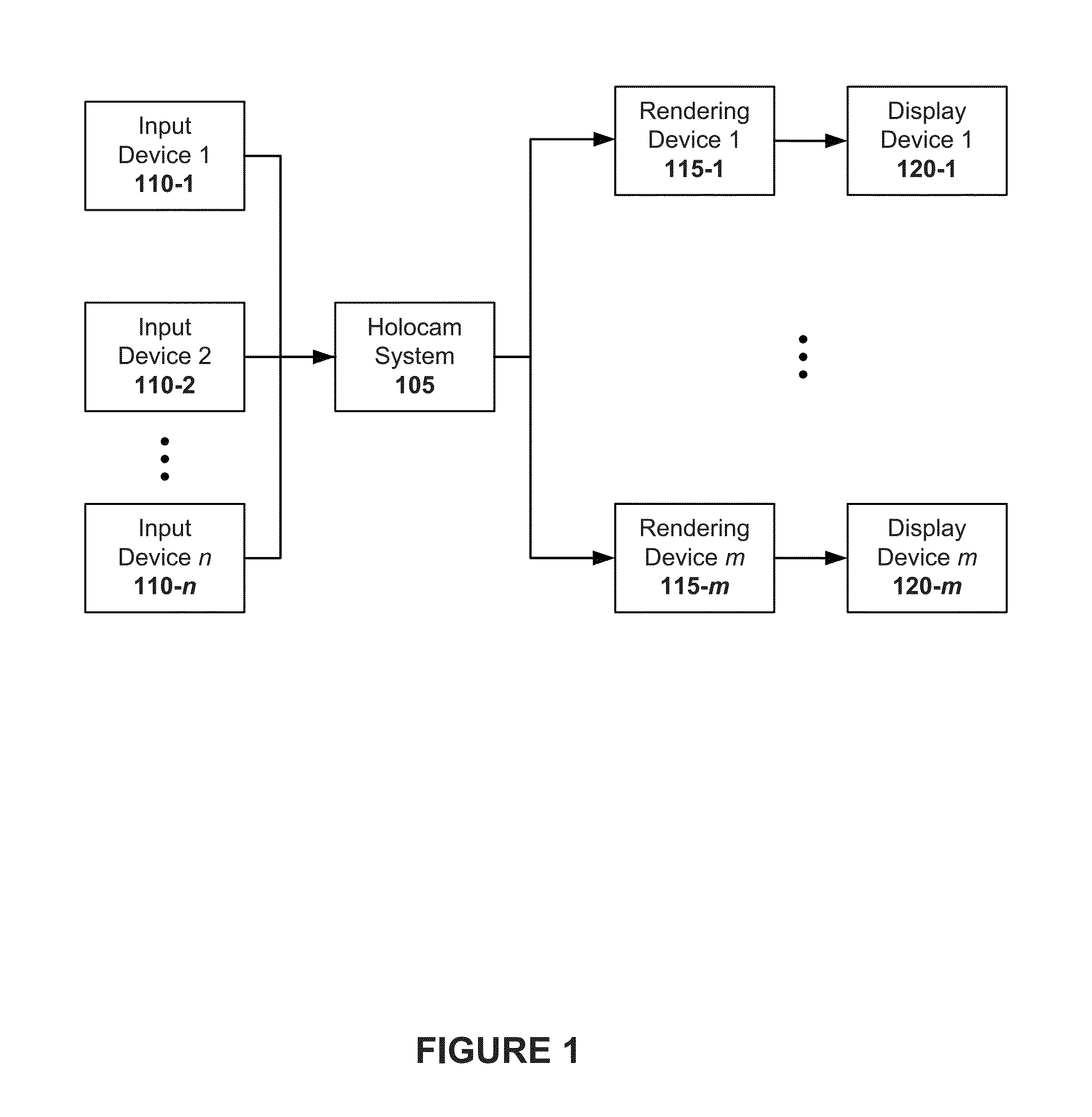

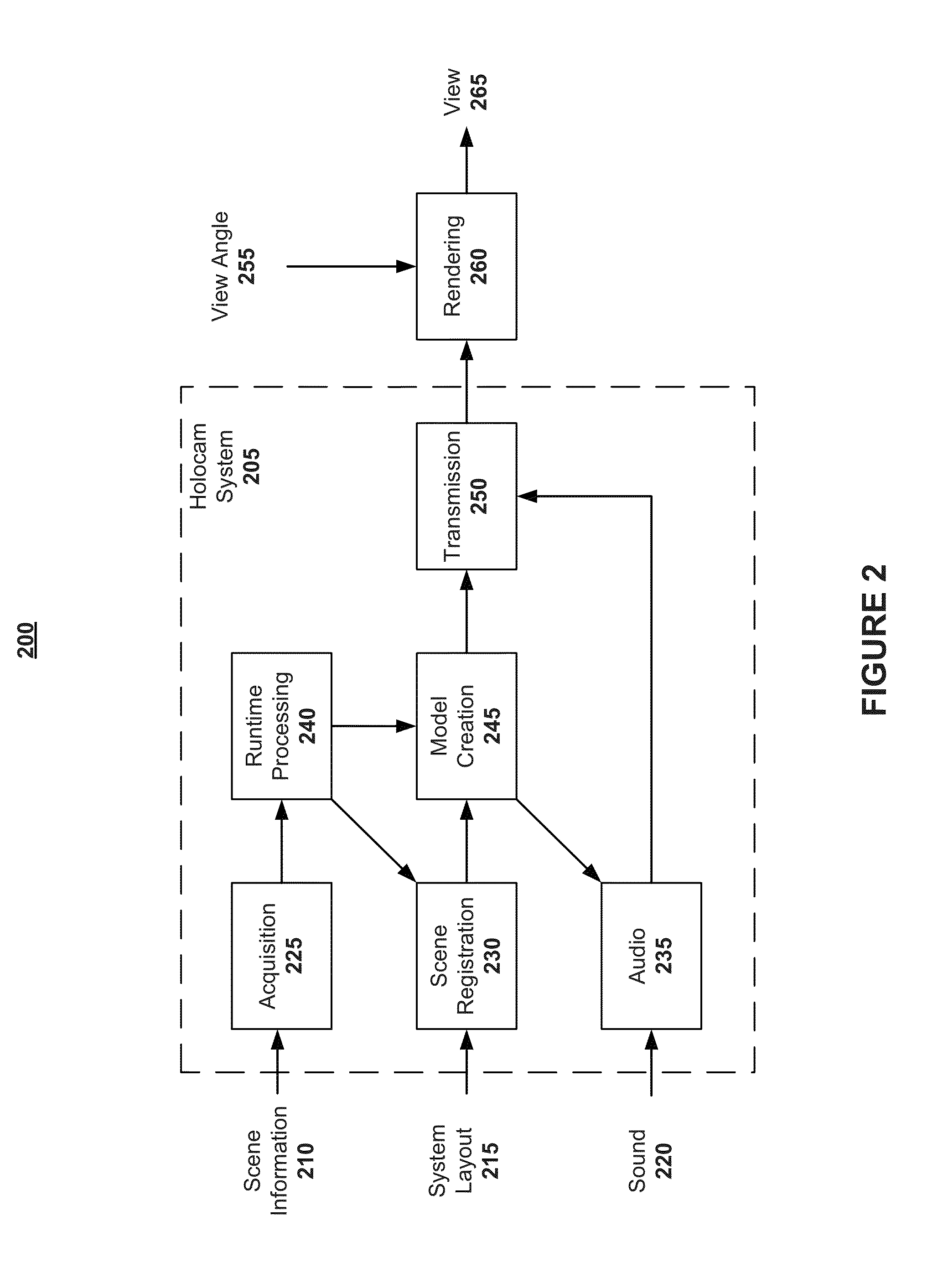

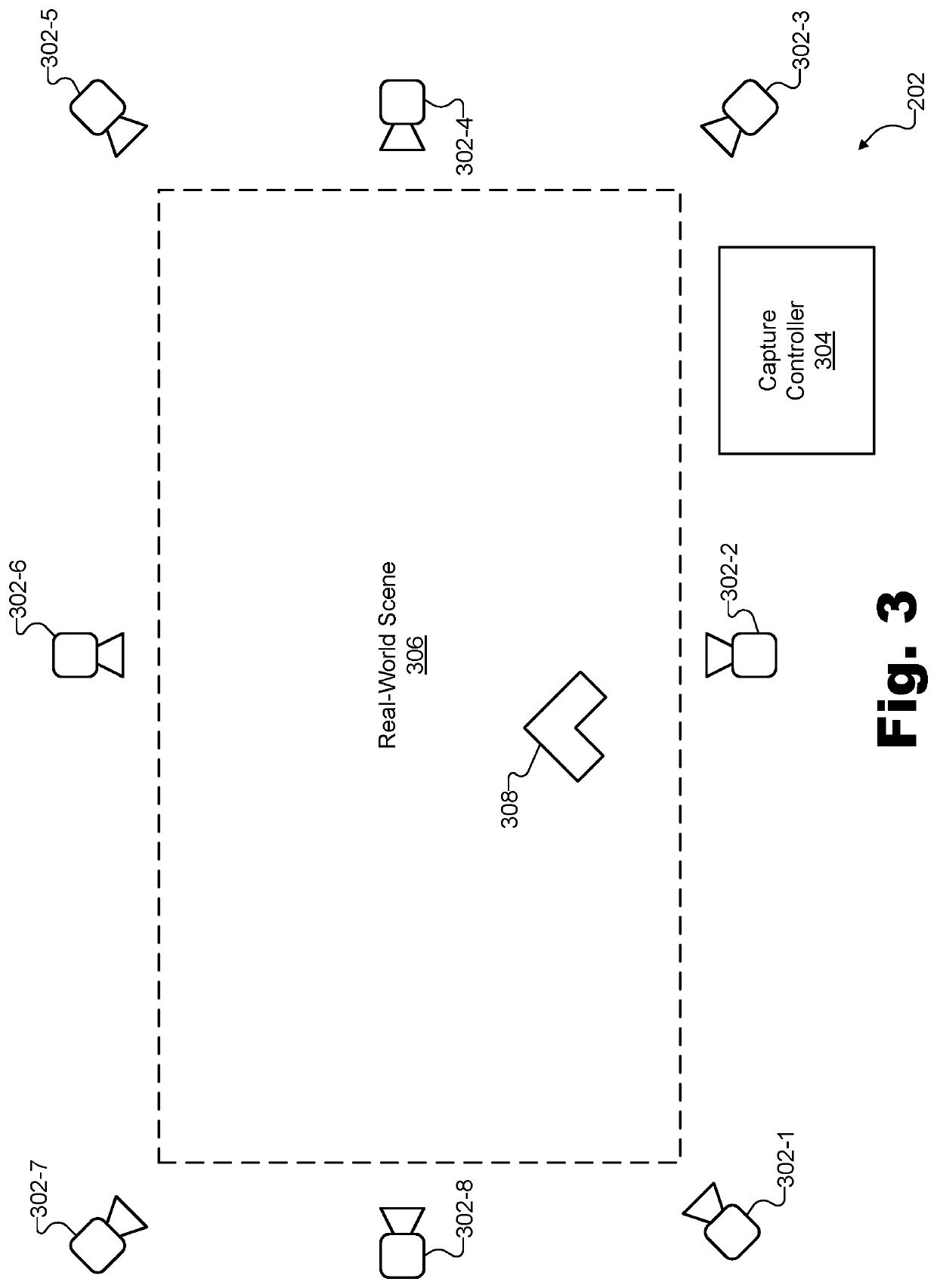

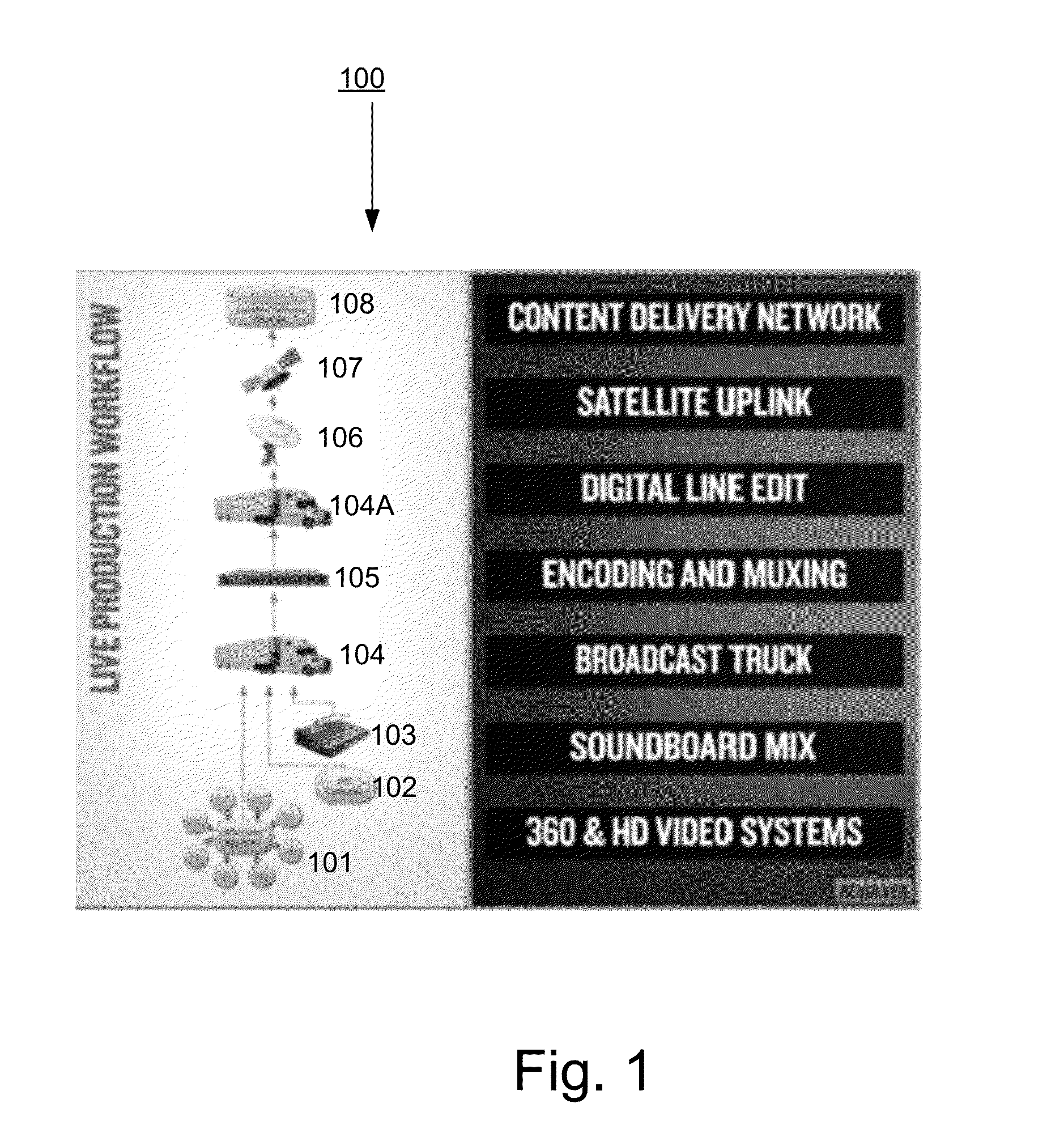

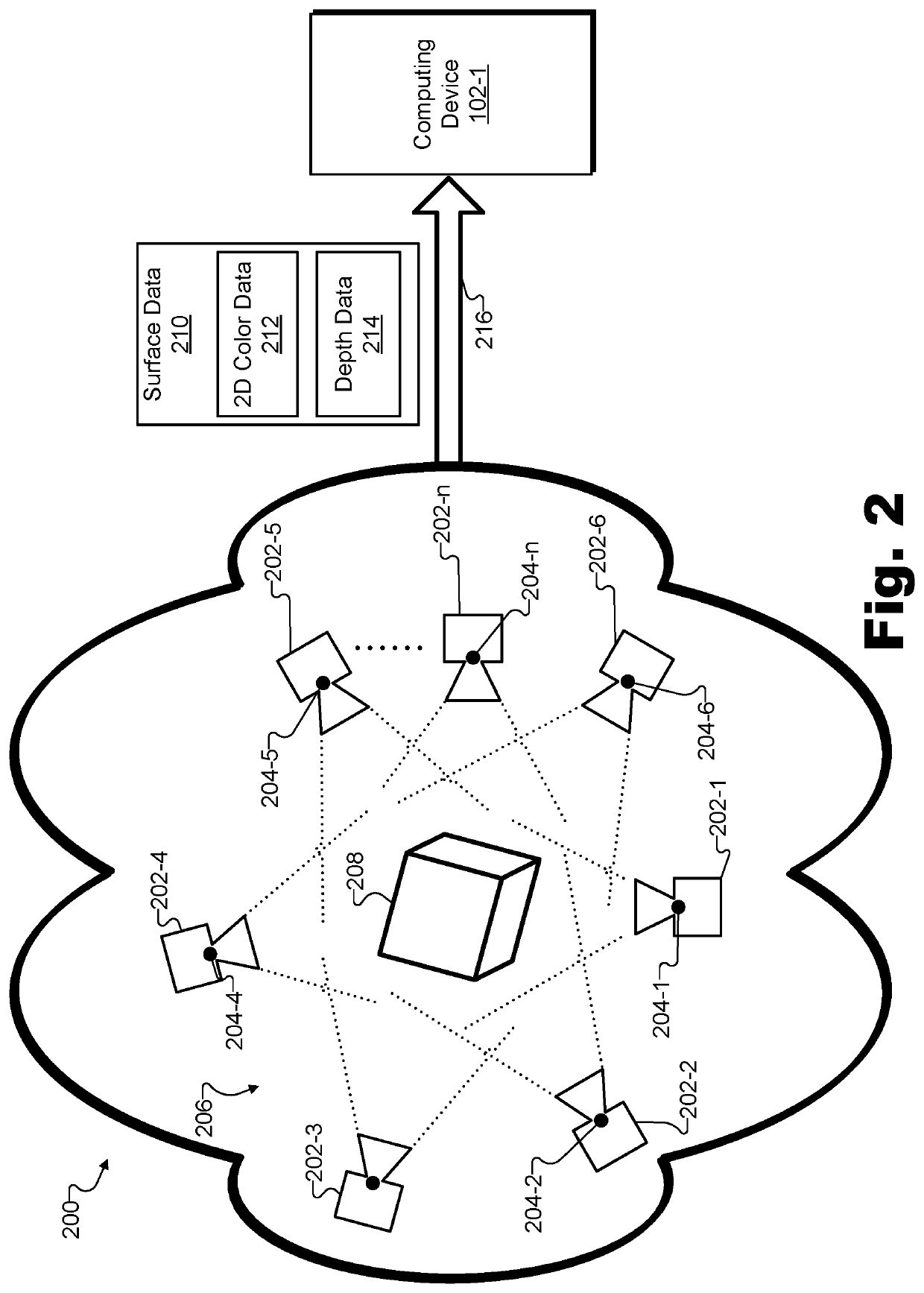

Holocam Systems and Methods

Aspects of the present invention comprise holocam systems and methods that enable the capture and streaming of scenes. In embodiments, multiple image capture devices, which may be referred to as “orbs,” are used to capture images of a scene from different vantage points or frames of reference. In embodiments, each orb captures three-dimensional (3D) information, which is preferably in the form of a depth map and visible images (such as stereo image pairs and regular images). Aspects of the present invention also include mechanisms by which data captured by two or more orbs may be combined to create one composite 3D model of the scene. A viewer may then, in embodiments, use the 3D model to generate a view from a different frame of reference than was originally created by any single orb.

Owner:SEIKO EPSON CORP

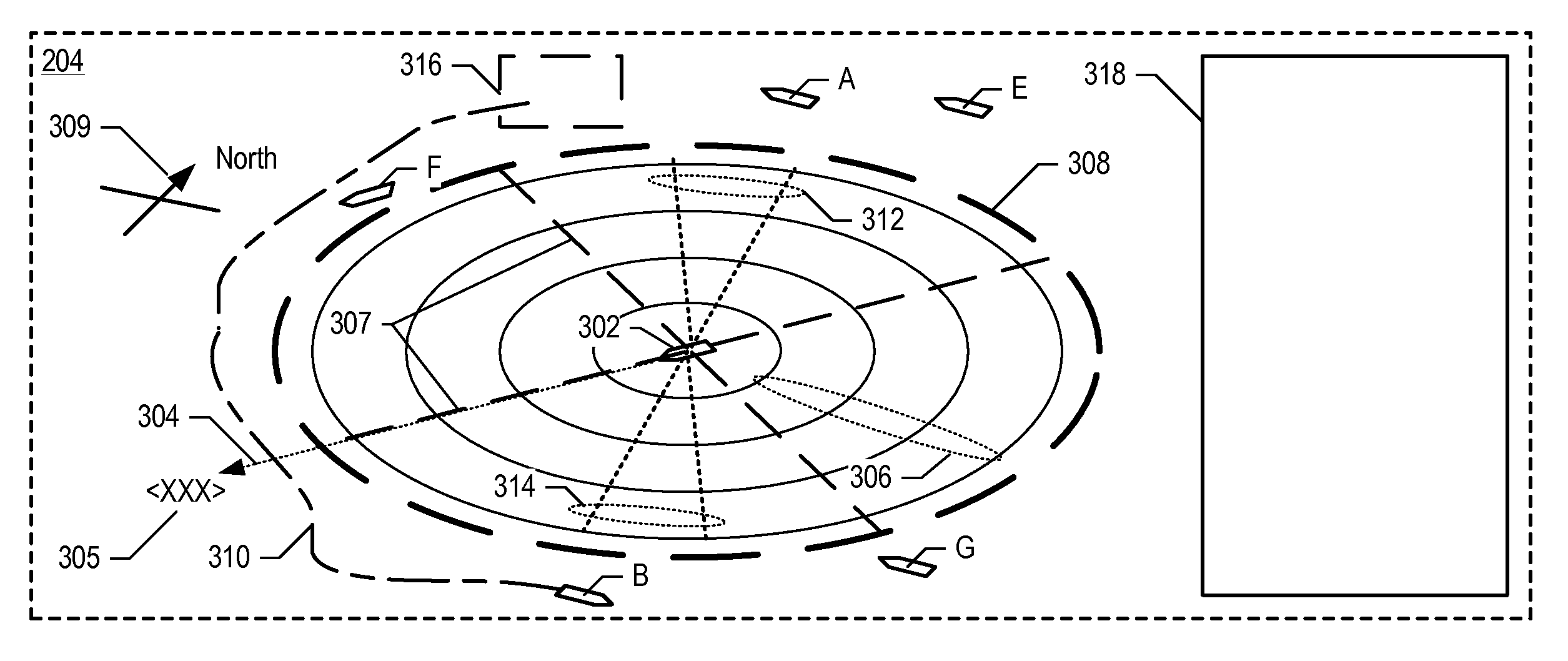

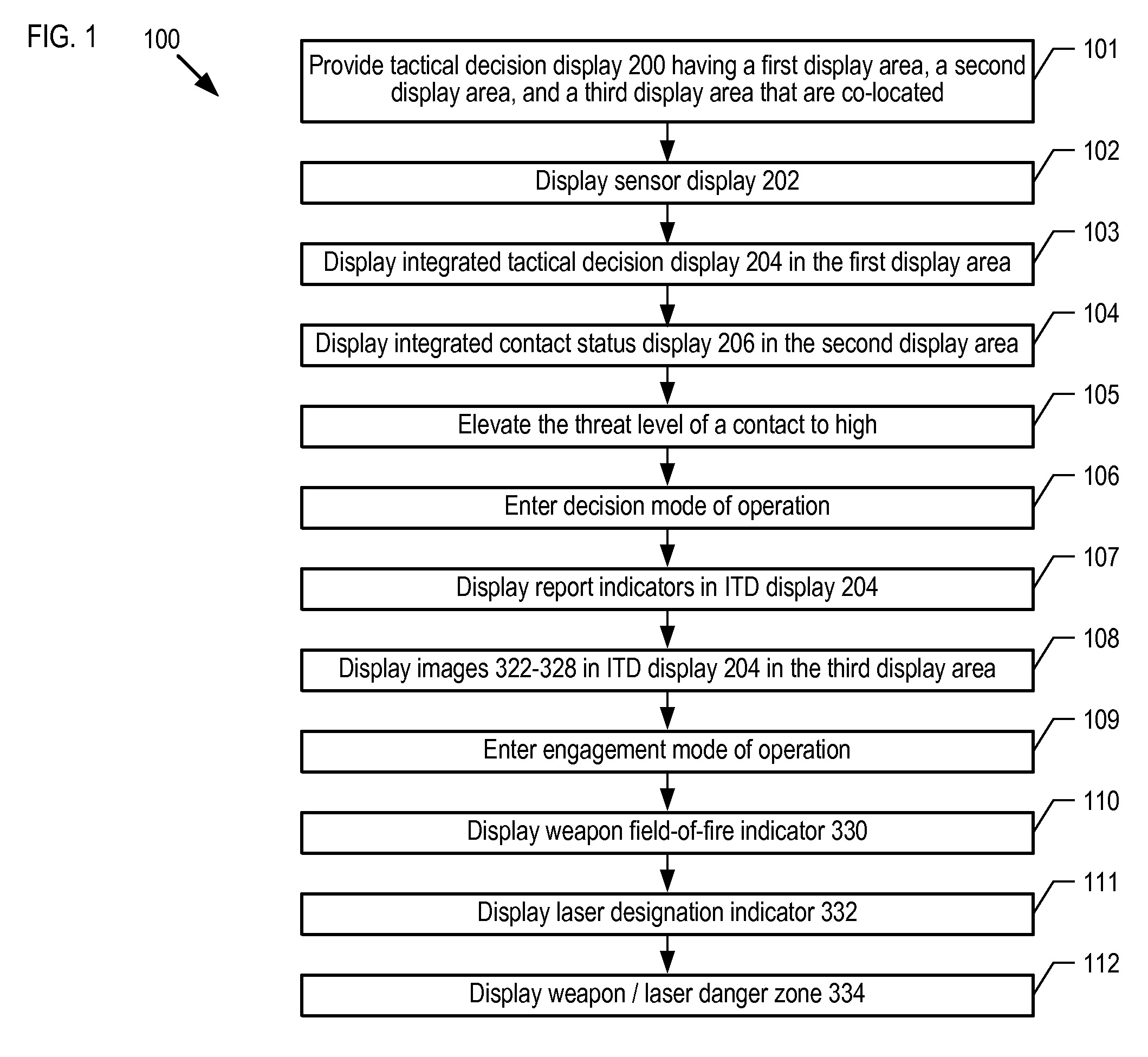

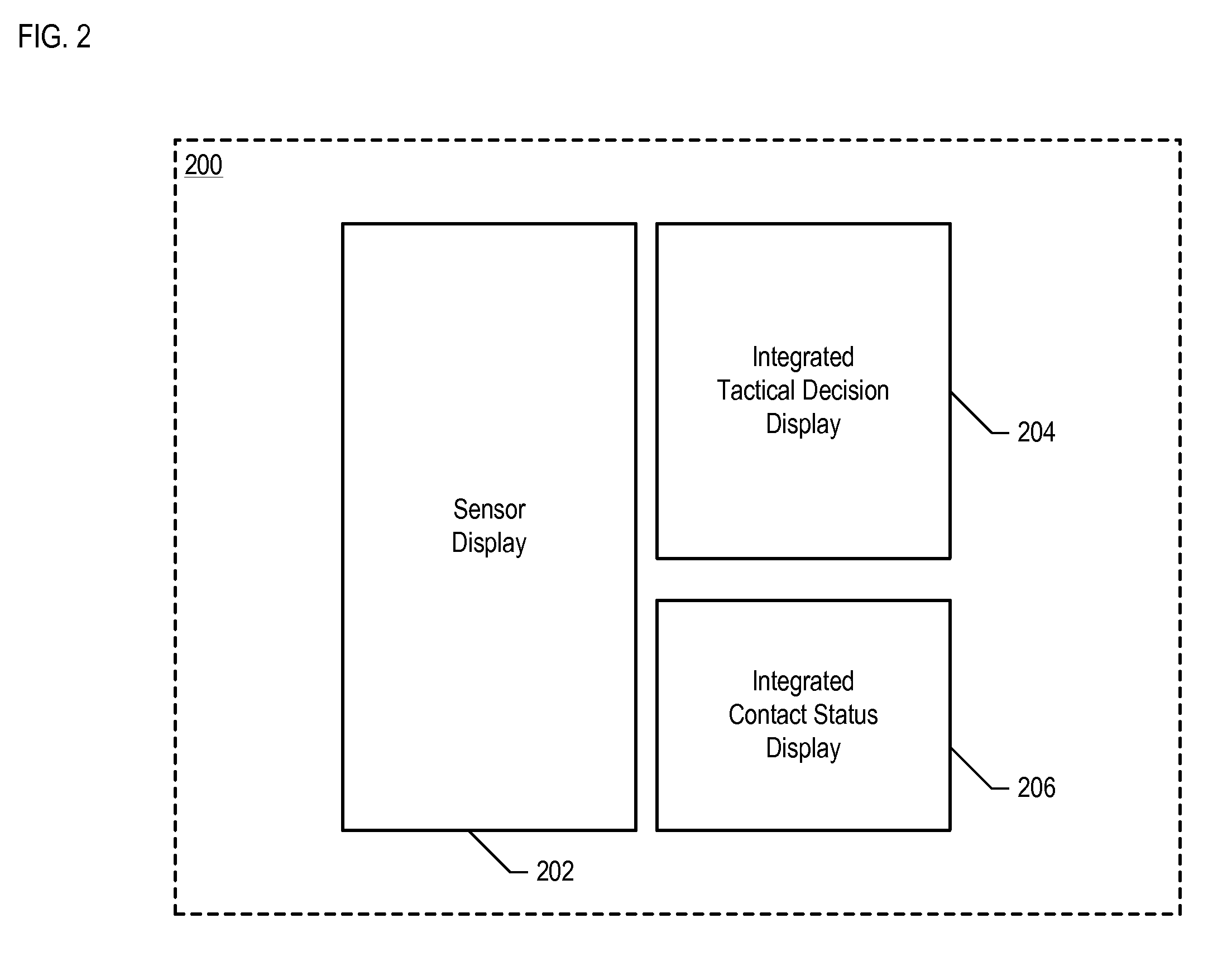

Intuitive tactical decision display

Active | US20090223354A1 | Confidence | Reduce restrictions | Wave based measurement systems | Static indicating devices | Engineering | Hostility

Owner:LOCKHEED MARTIN CORP

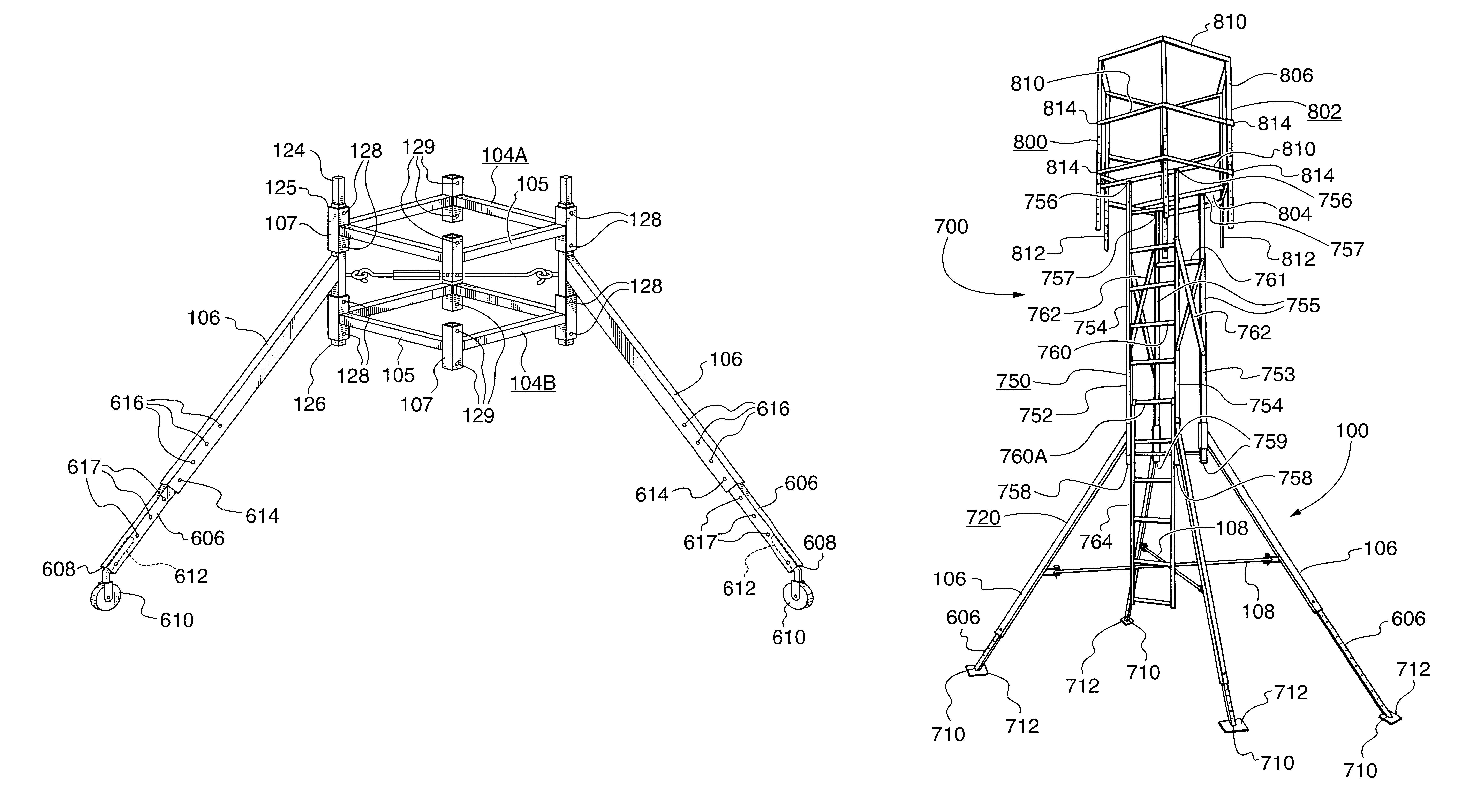

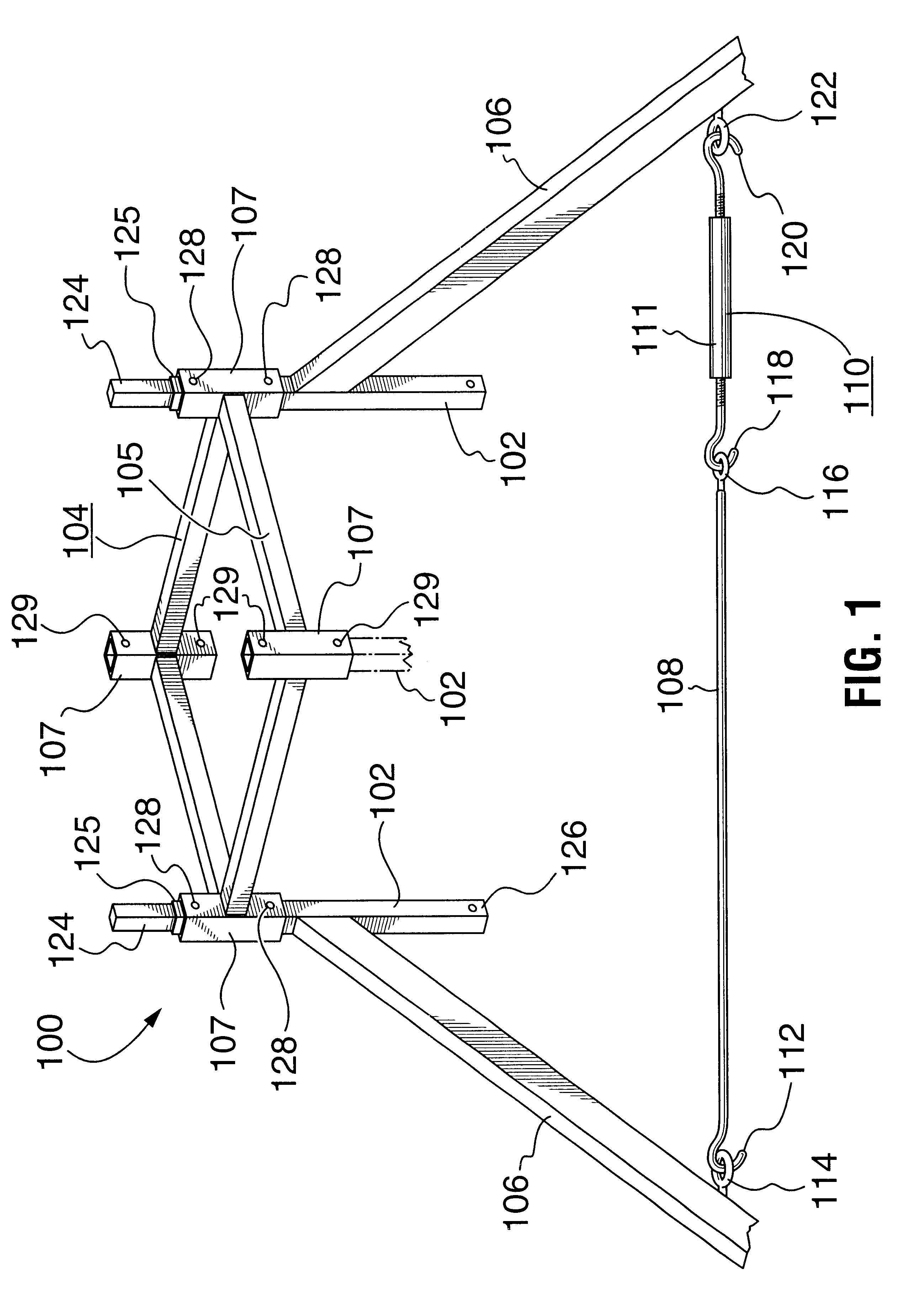

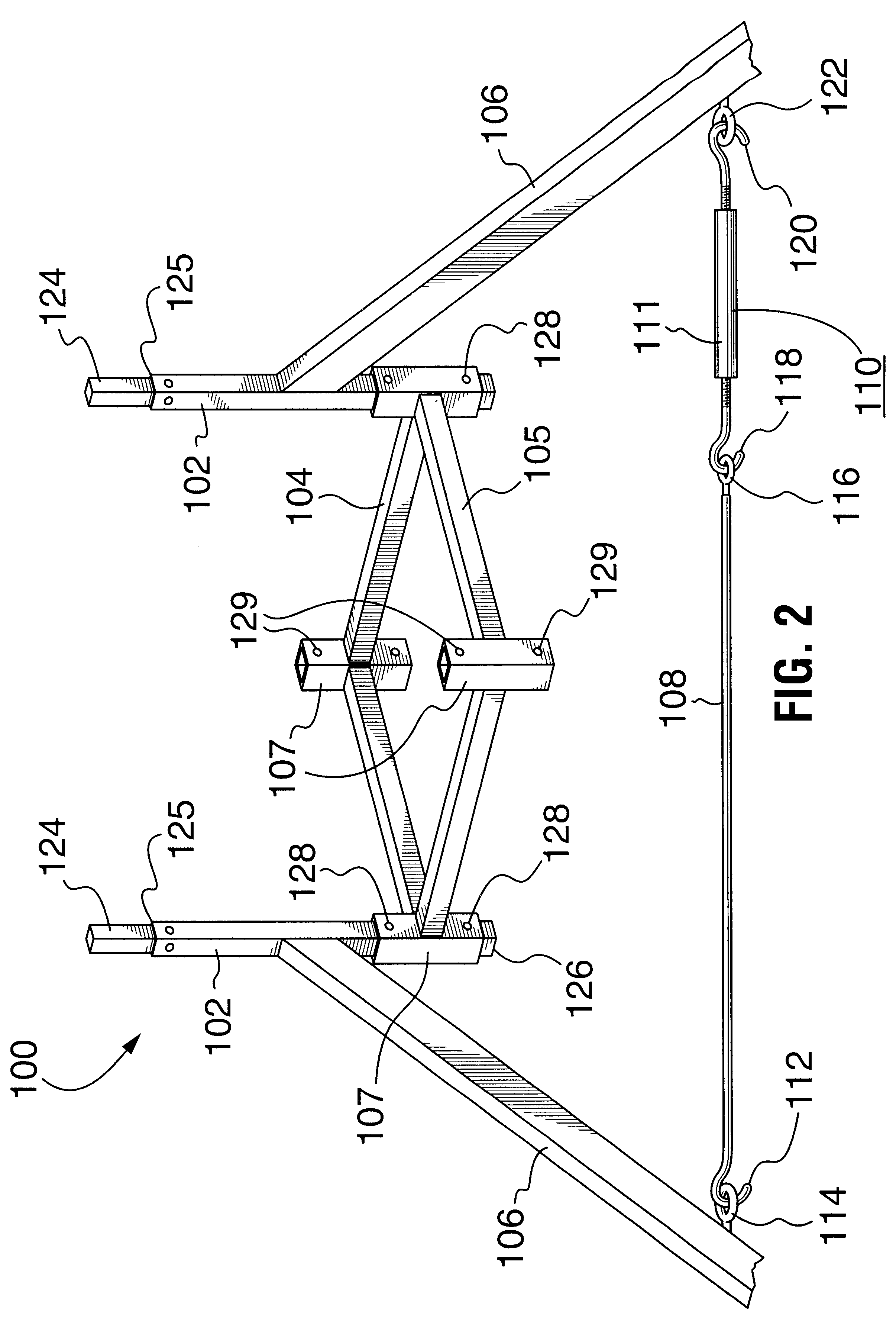

Stability alignment frame for erecting a portable multi-purpose stand

Inactive | US6725970B2 | Safe and cost-effective | Weight optimization | Animal hunting | Building support scaffolds | Engineering | Control engineering

Owner:GAROFALO TONY

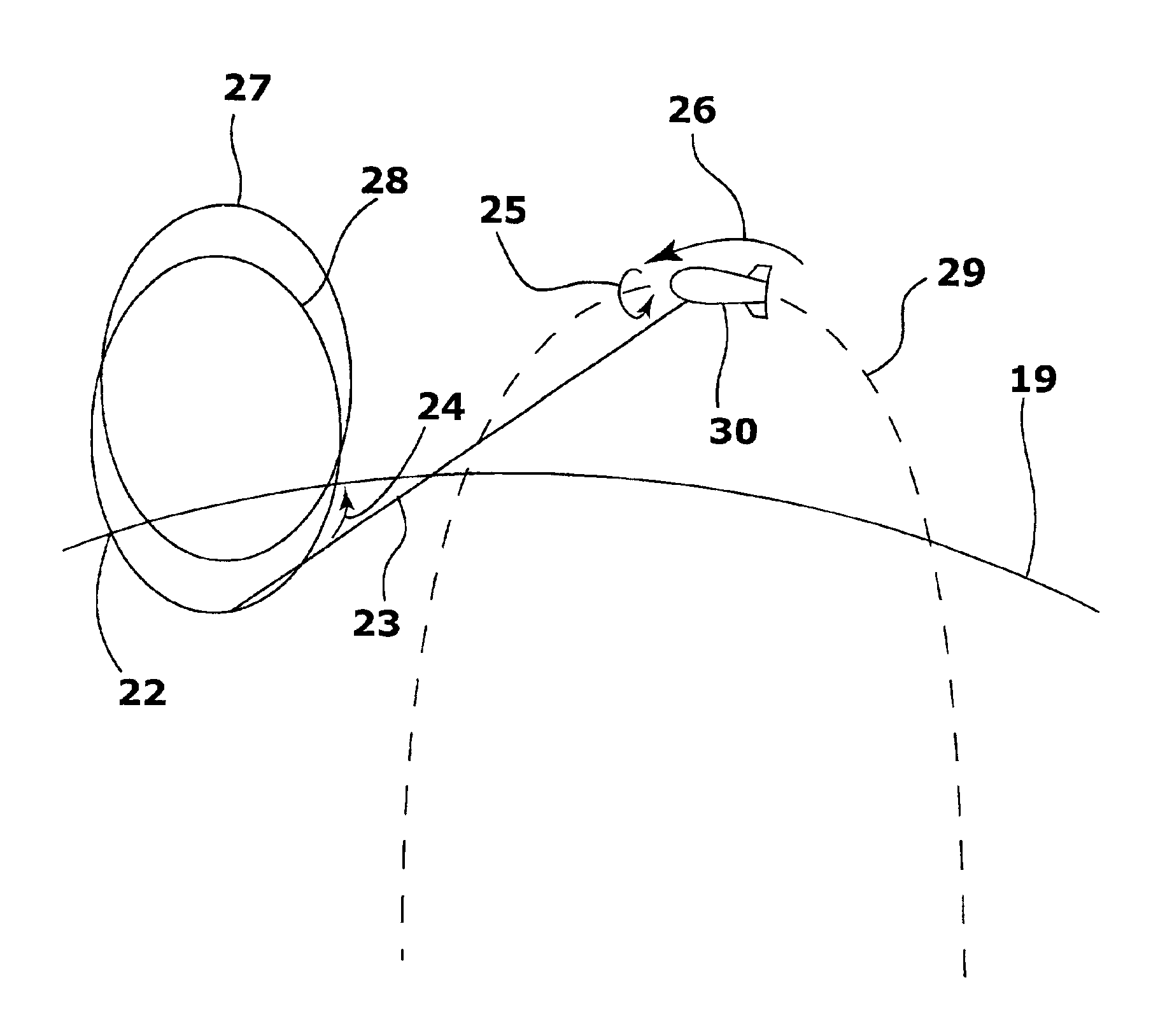

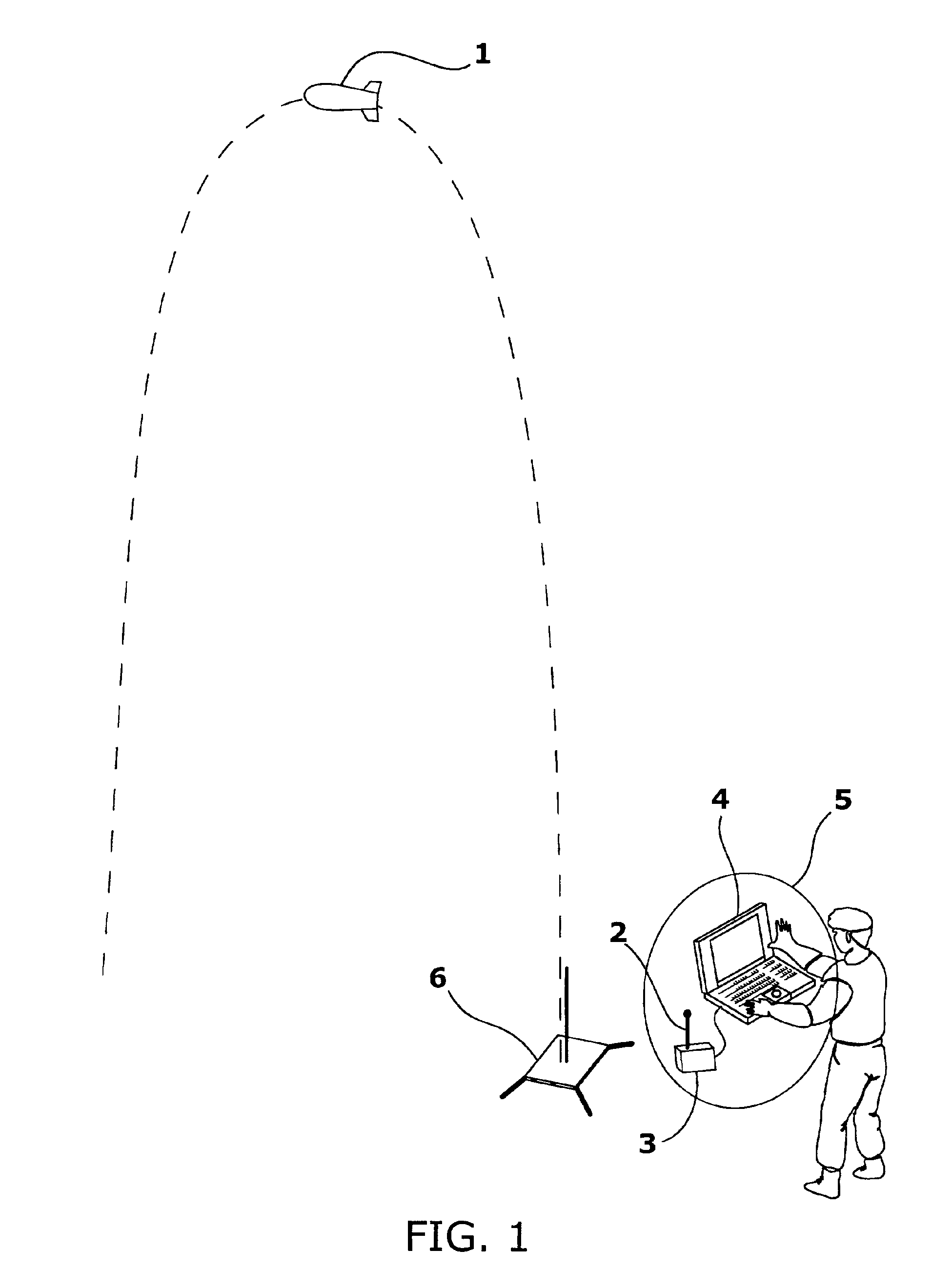

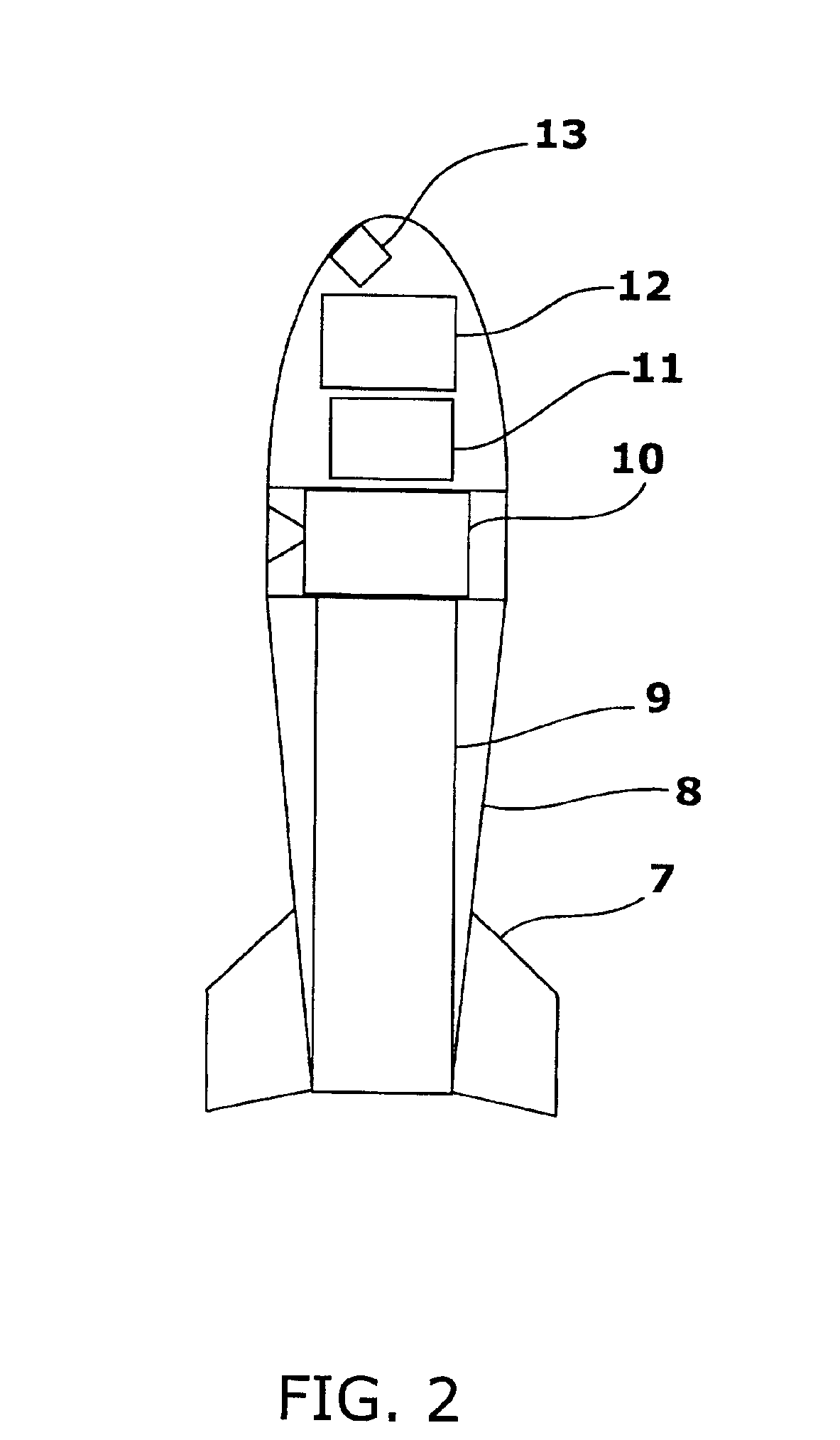

Panoramic aerial imaging device

An imaging device that gives a ground based user immediate access to a detailed aerial photograph of the entire area for a given radius about his present position. The device can be launched into the air, and rotates in a predictable pattern to scan an imager over every point of the ground, from a vantage point high in the air. These pictures can be stored or transmitted to the ground and assembled on a computer to form a spherical picture of everything surrounding the imaging device in the air.

Owner:TACSHOT

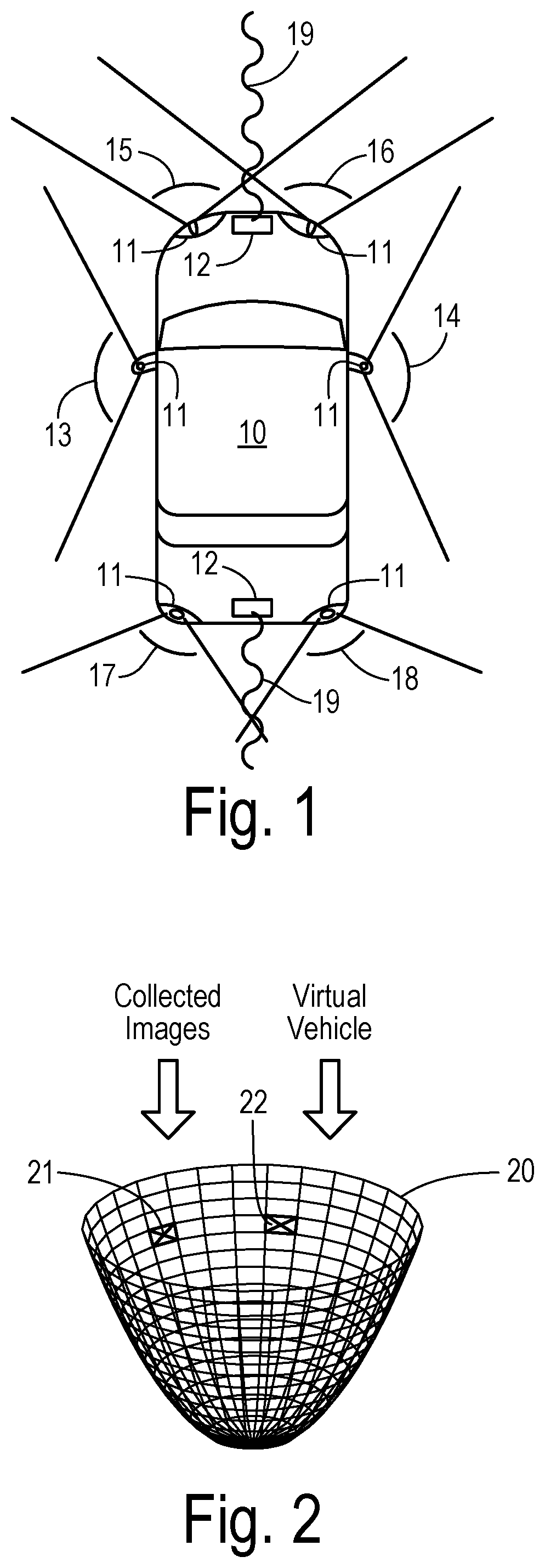

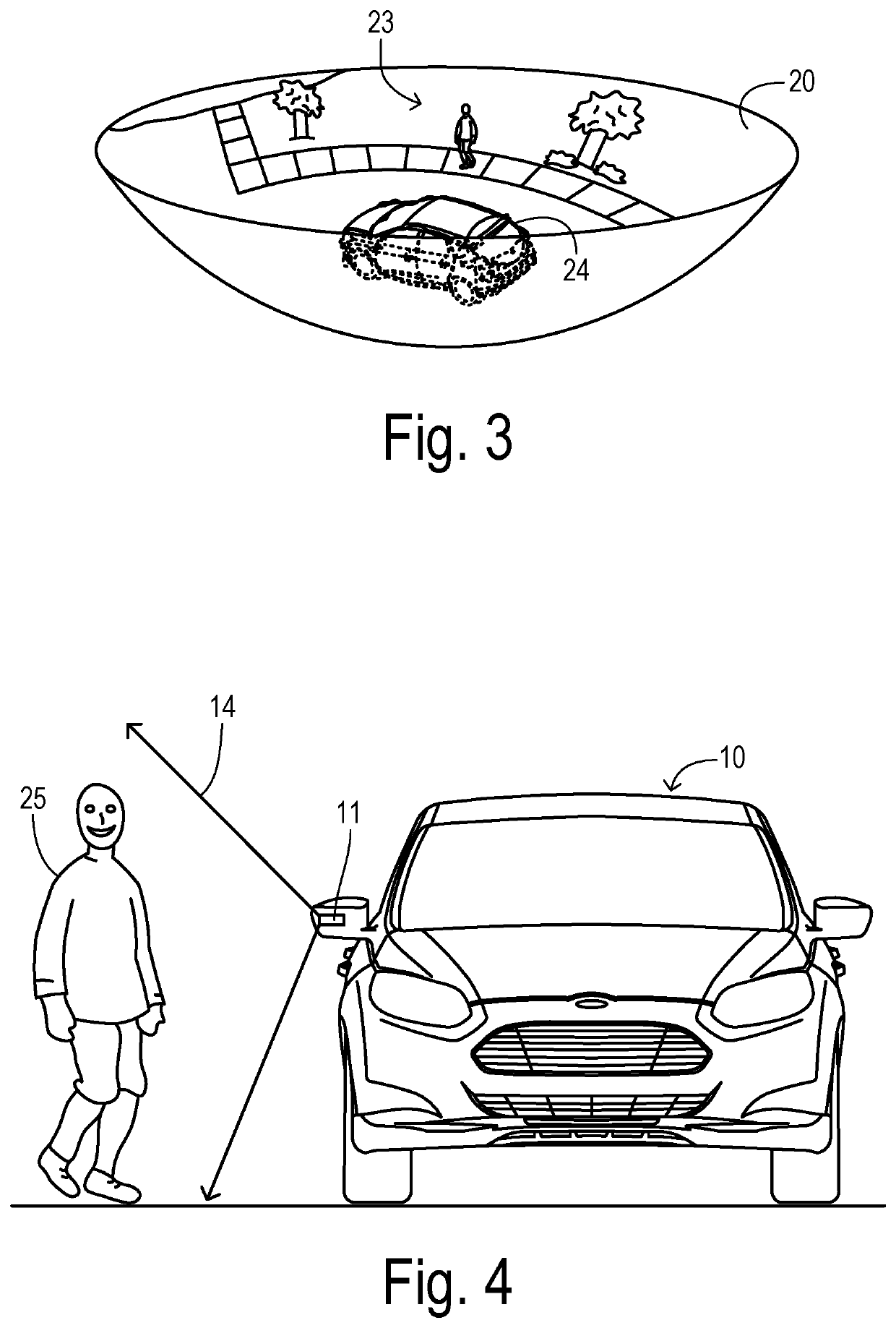

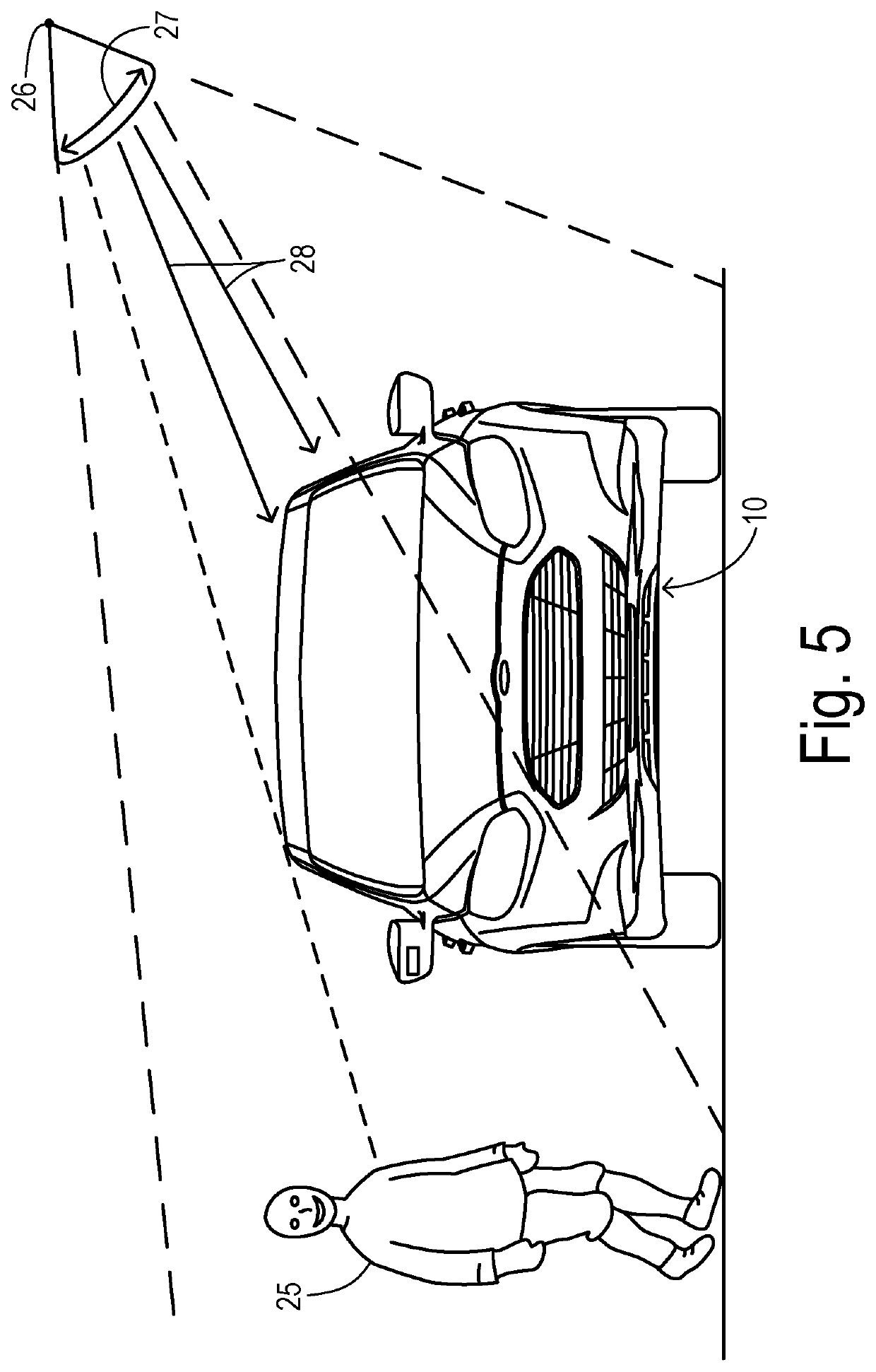

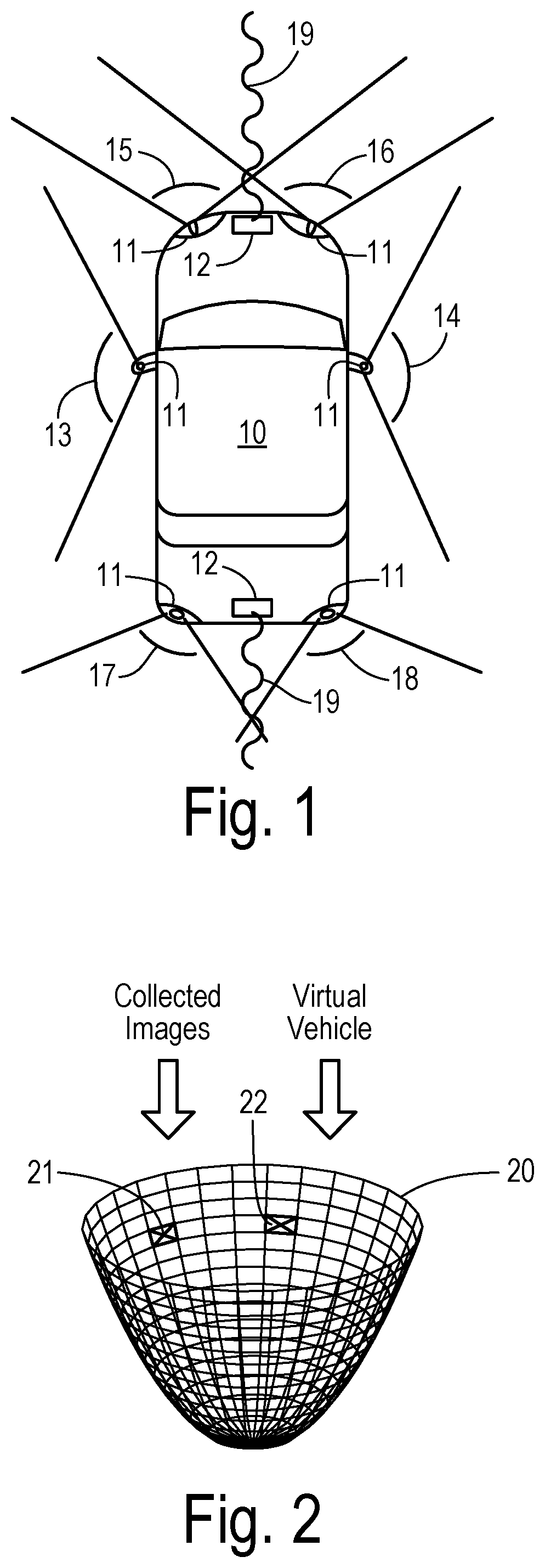

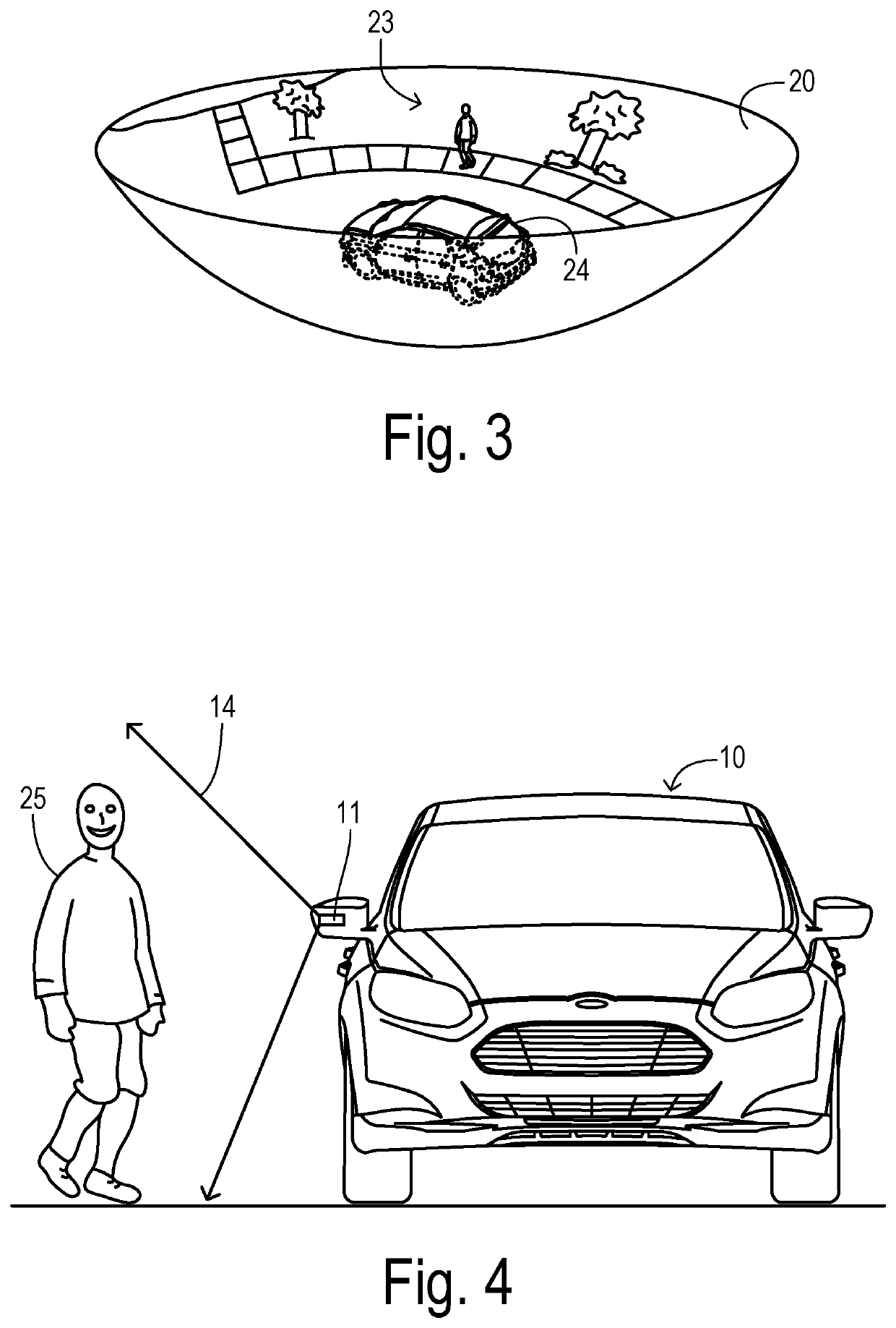

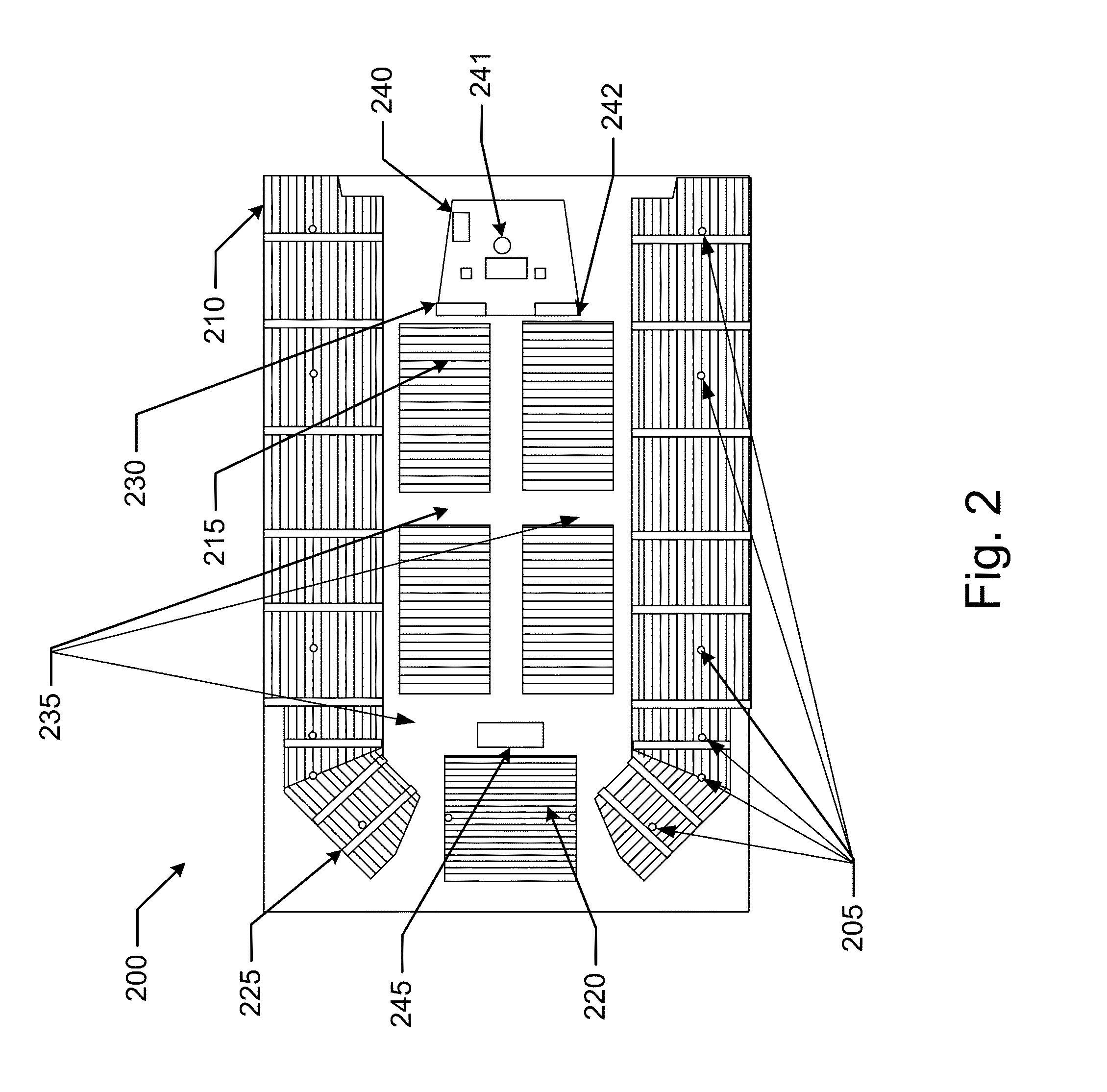

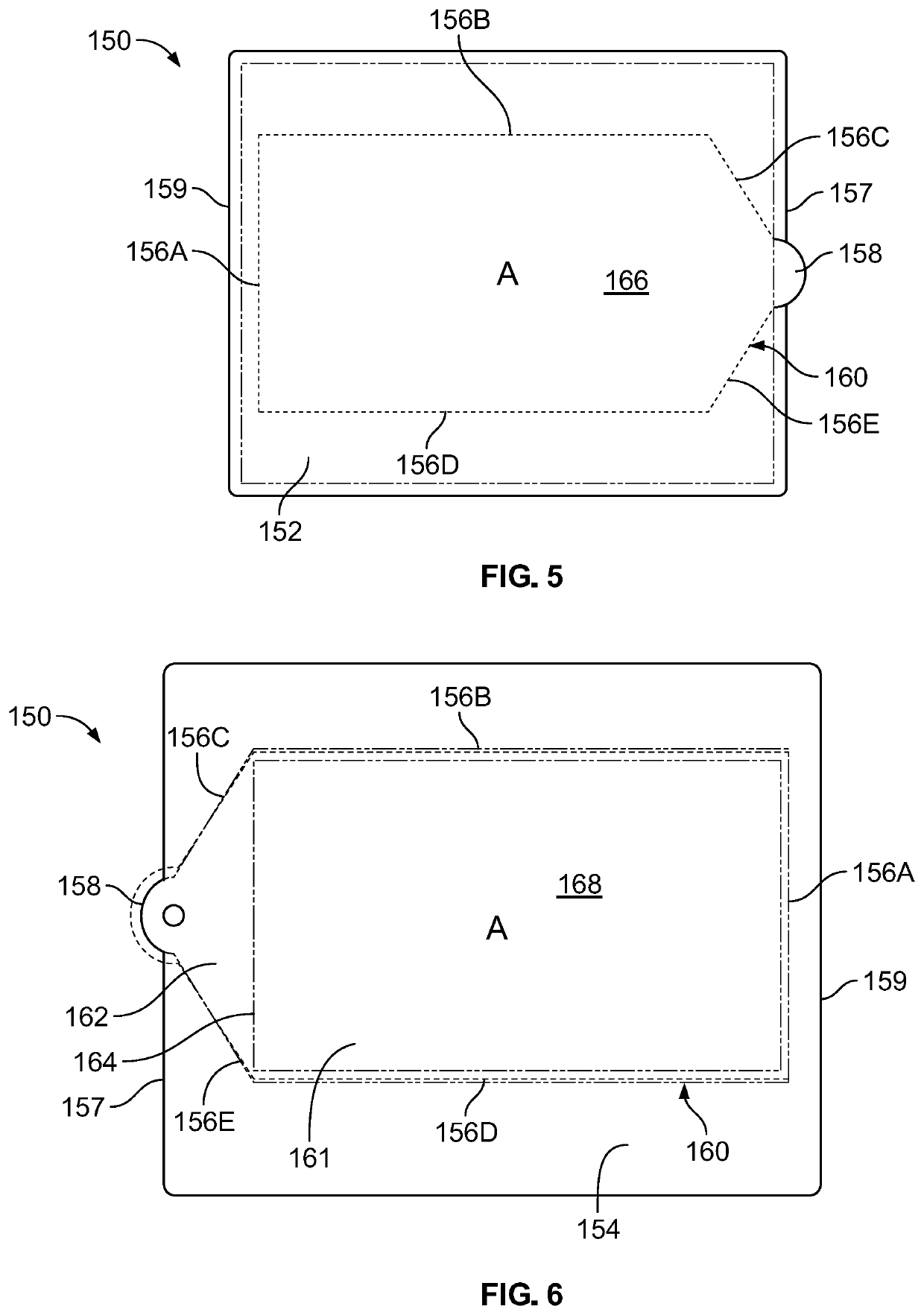

Adaptive transparency of virtual vehicle in simulated imaging system

Active | US20200218910A1 | Easy to identify | Image analysis | Road vehicles traffic control | Physics | Vantage point

A visual scene around a vehicle is displayed to an occupant of the vehicle on a display panel as a virtual three-dimensional image from an adjustable point of view outside the vehicle. A simulated image is assembled corresponding to a selected vantage point on an imaginary parabolic surface outside the vehicle from exterior image data and a virtual vehicle image superimposed on a part of the image data. Objects are detected at respective locations around the vehicle subject to potential impact. An obstruction ratio is quantified for a detected object having corresponding image data in the simulated image obscured by the vehicle image. When the detected object has an obstruction ratio above an obstruction threshold, a corresponding bounding zone of the vehicle image is rendered at least partially transparent in the simulated image to unobscure the corresponding image data.

Owner:FORD GLOBAL TECH LLC
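The obstruction-ratio test in this abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions: objects and the virtual vehicle image are modeled as axis-aligned rectangles in screen pixels, the ratio is taken as the obscured fraction of the object's rectangle, and the `Box` class, the function names, and the 0.5 threshold are hypothetical rather than taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in image pixels (an assumed representation)."""
    left: int
    top: int
    right: int
    bottom: int

    def area(self):
        return max(0, self.right - self.left) * max(0, self.bottom - self.top)


def overlap_area(a, b):
    """Area of the intersection of two boxes, or 0 if they do not overlap."""
    width = min(a.right, b.right) - max(a.left, b.left)
    height = min(a.bottom, b.bottom) - max(a.top, b.top)
    return max(0, width) * max(0, height)


def obstruction_ratio(detected_object, vehicle_image):
    """Fraction of the detected object's box hidden behind the vehicle image."""
    if detected_object.area() == 0:
        return 0.0
    return overlap_area(detected_object, vehicle_image) / detected_object.area()


def should_make_transparent(detected_object, vehicle_image, threshold=0.5):
    """True when the object is obscured beyond the (hypothetical) threshold."""
    return obstruction_ratio(detected_object, vehicle_image) > threshold


vehicle = Box(100, 100, 300, 200)
mostly_visible = Box(250, 150, 350, 250)  # a quarter of this box is covered
mostly_hidden = Box(120, 120, 180, 220)   # most of this box is covered
```

With these boxes, only `mostly_hidden` exceeds the threshold, so only its bounding zone of the vehicle image would be rendered partially transparent.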

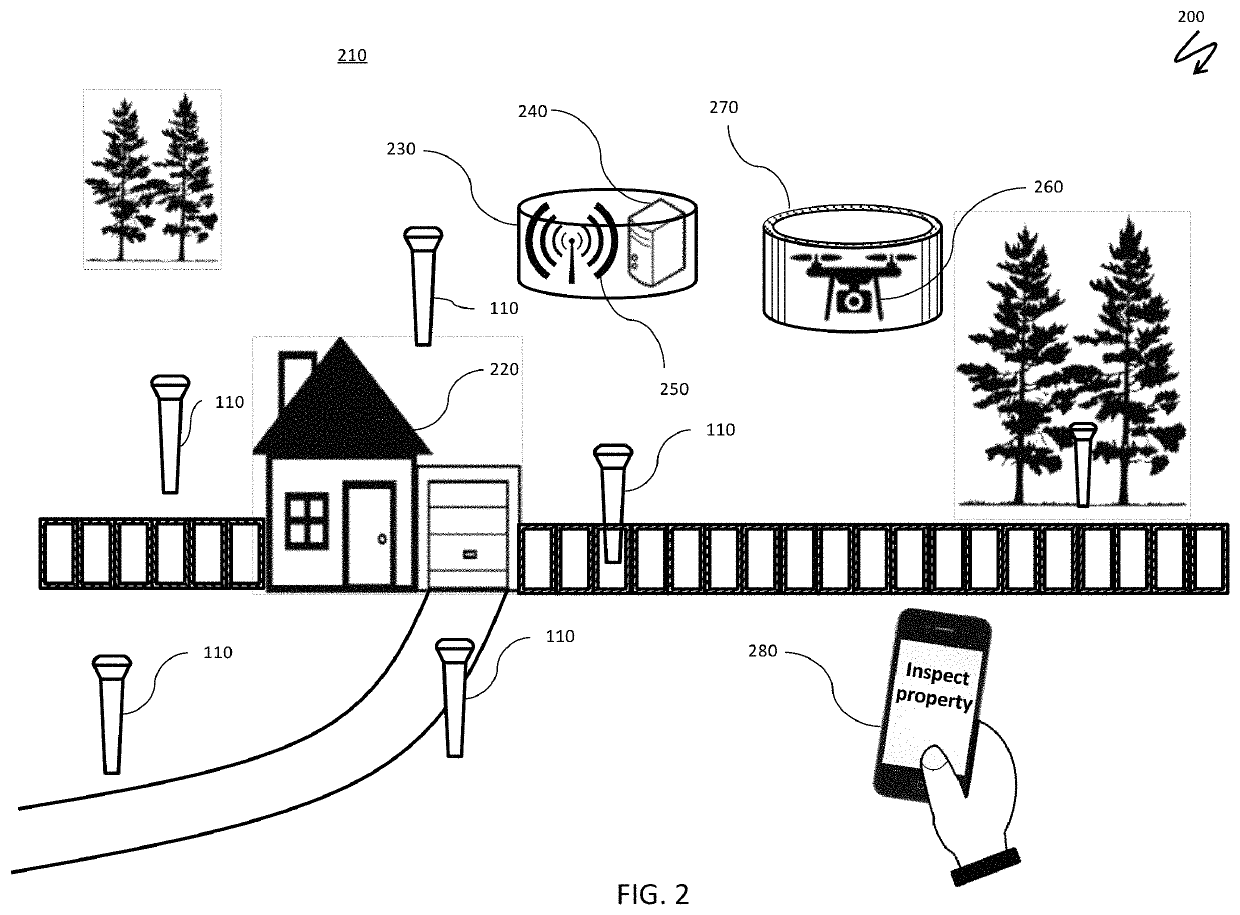

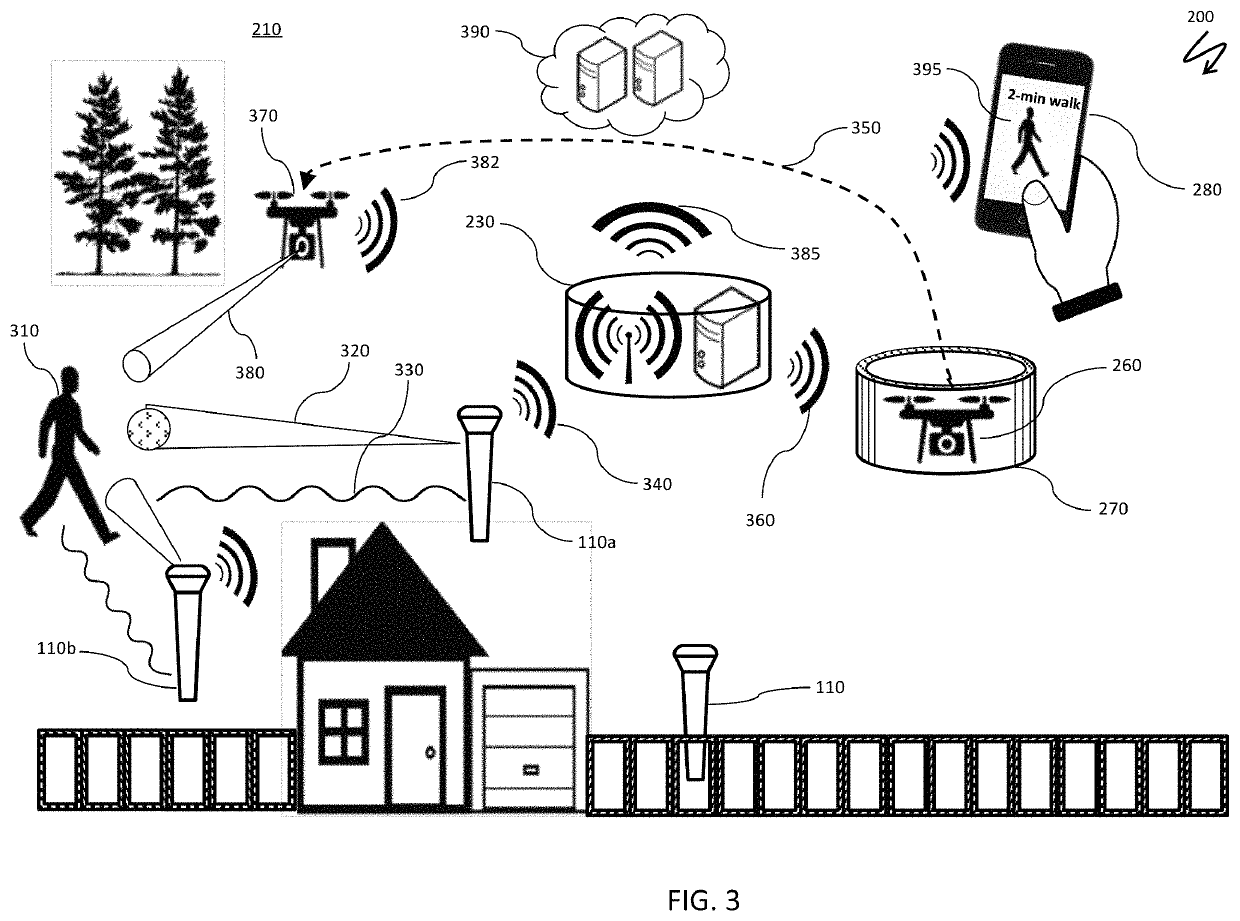

Security system with distributed sensor units and autonomous camera vehicle

Active | US10706696B1 | Good for observation | Unobstructed view | Animal hunting | Unmanned aerial vehicles | Vantage point | Field of view

A security system for monitoring a property includes a plurality of sensor units disposed at different locations throughout the property, an autonomous vehicle that travels throughout the property and contains a camera, and a central station, in communication with the sensor units and the autonomous vehicle, that dispatches the autonomous vehicle to a location corresponding to an unknown object detected by at least some of the sensor units and determined by a processing module of the central station to be a potential intrusion, wherein the autonomous vehicle provides video data of the potential intrusion to the central station. The autonomous vehicle may be a flying vehicle. The autonomous vehicle may be dispatched to a vantage point that is clear of any obstacles and provides an unobstructed view of the location of the potential intruder. A user of the device may approve dispatching the autonomous vehicle.

Owner:SUNFLOWER LABS INC

Holocam systems and methods

Aspects of the present invention comprise holocam systems and methods that enable the capture and streaming of scenes. In embodiments, multiple image capture devices, which may be referred to as “orbs,” are used to capture images of a scene from different vantage points or frames of reference. In embodiments, each orb captures three-dimensional (3D) information, which is preferably in the form of a depth map and visible images (such as stereo image pairs and regular images). Aspects of the present invention also include mechanisms by which data captured by two or more orbs may be combined to create one composite 3D model of the scene. A viewer may then, in embodiments, use the 3D model to generate a view from a different frame of reference than was originally created by any single orb.

Owner:SEIKO EPSON CORP

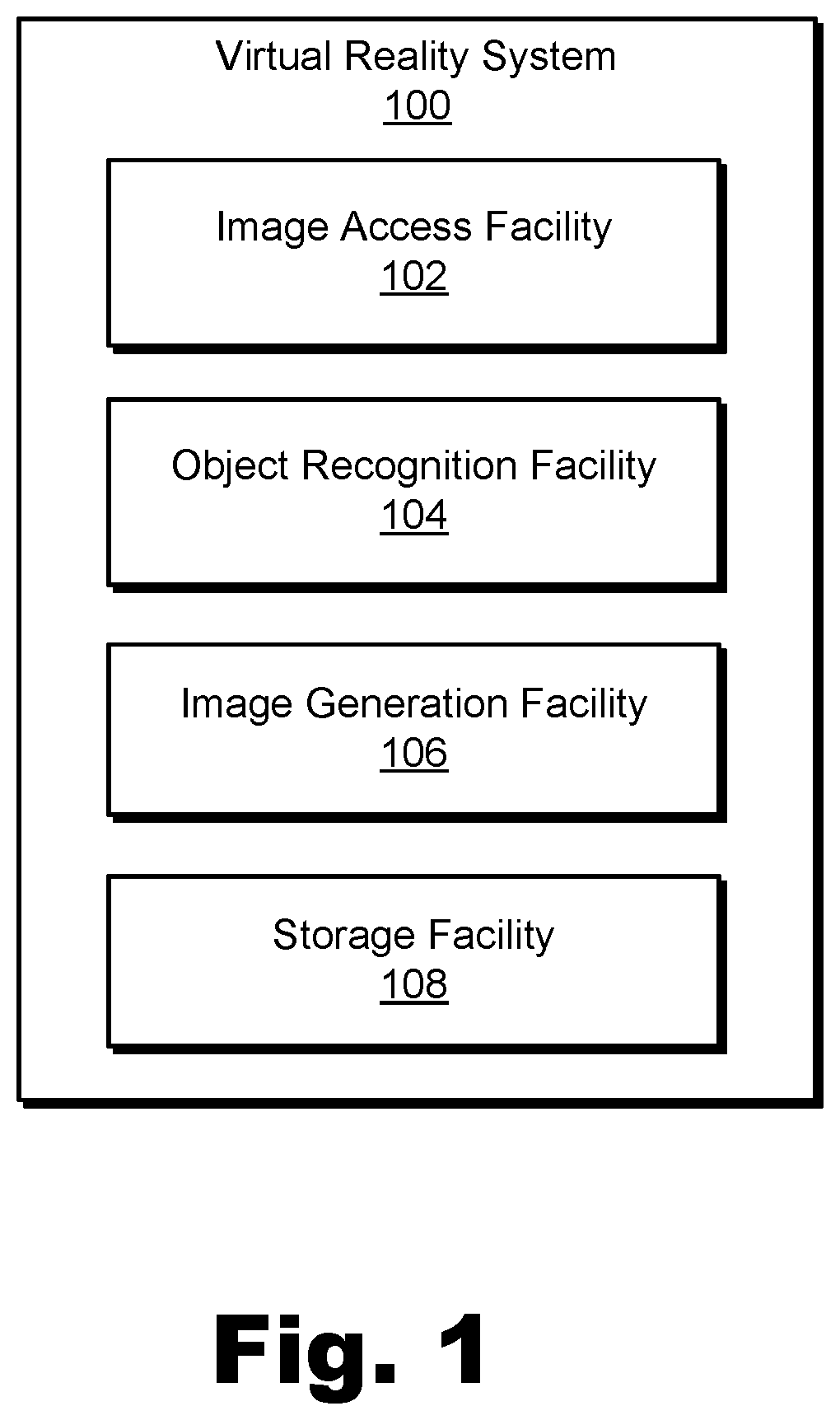

Methods and systems for representing a pre-modeled object within virtual reality data

An exemplary virtual reality system accesses first and second image datasets representative of first and second captured images depicting a real-world scene from first and second vantage points. The system recognizes a pre-modeled object within both the first and second captured images, and determines first and second confidence metrics representative of objective degrees to which the system accurately recognizes the pre-modeled object within the first and second captured images, respectively. The system further generates a third image dataset representative of a rendered image based on the first and second image datasets. The rendered image includes a depiction of the pre-modeled object within the real-world scene from a third vantage point, and the generating comprises prioritizing, based on a determination that the second confidence metric is greater than the first confidence metric, the second image dataset over the first image dataset for the depiction of the pre-modeled object.

Owner:VERIZON PATENT & LICENSING INC

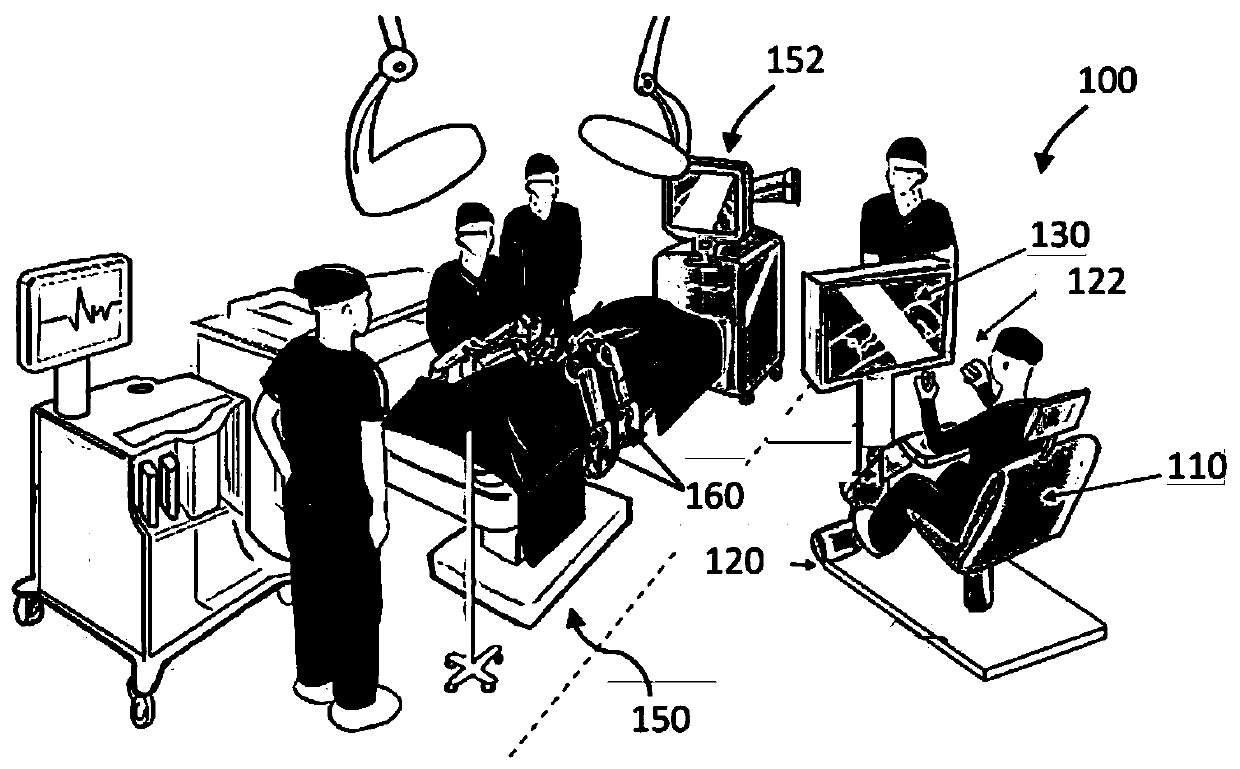

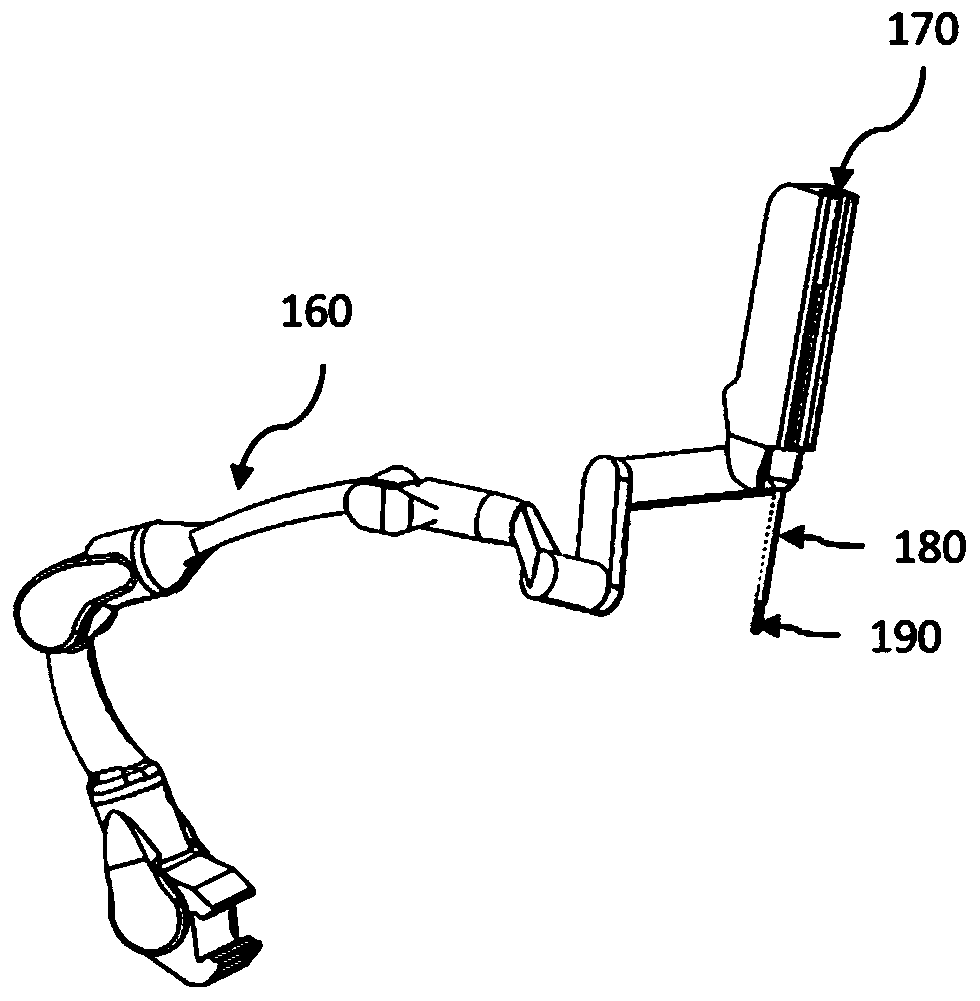

Virtual reality training, simulation, and collaboration in a robotic surgical system

Active | CN109791801A | Mechanical/radiation/invasive therapies | Geometric image transformation | Computer graphics (images) | Virtual robot

The invention relates to virtual reality training, simulation, and collaboration in a robotic surgical system. A virtual reality system providing a virtual robotic surgical environment, and methods for using the virtual reality system, are described herein. Within the virtual reality system, various user modes enable different kinds of interactions between a user and the virtual robotic surgical environment. For example, one variation of a method for facilitating navigation of a virtual robotic surgical environment includes displaying a first-person perspective view of the virtual robotic surgical environment from a first vantage point, displaying a first window view of the virtual robotic surgical environment from a second vantage point, and displaying a second window view of the virtual robotic surgical environment from a third vantage point. Additionally, in response to a user input associating the first and second window views, a trajectory between the second and third vantage points can be generated, sequentially linking the first and second window views.

Owner:VERB SURGICAL INC

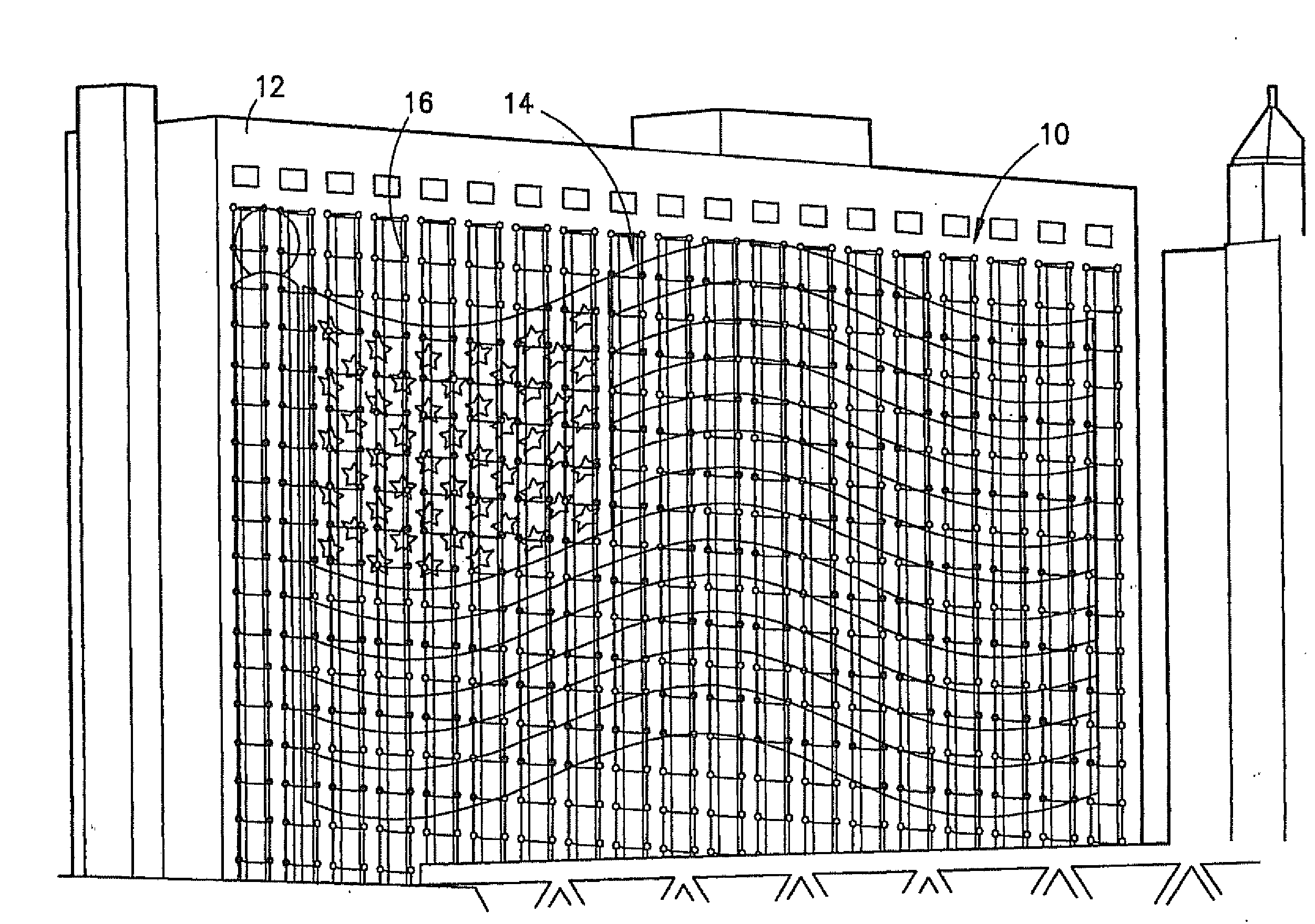

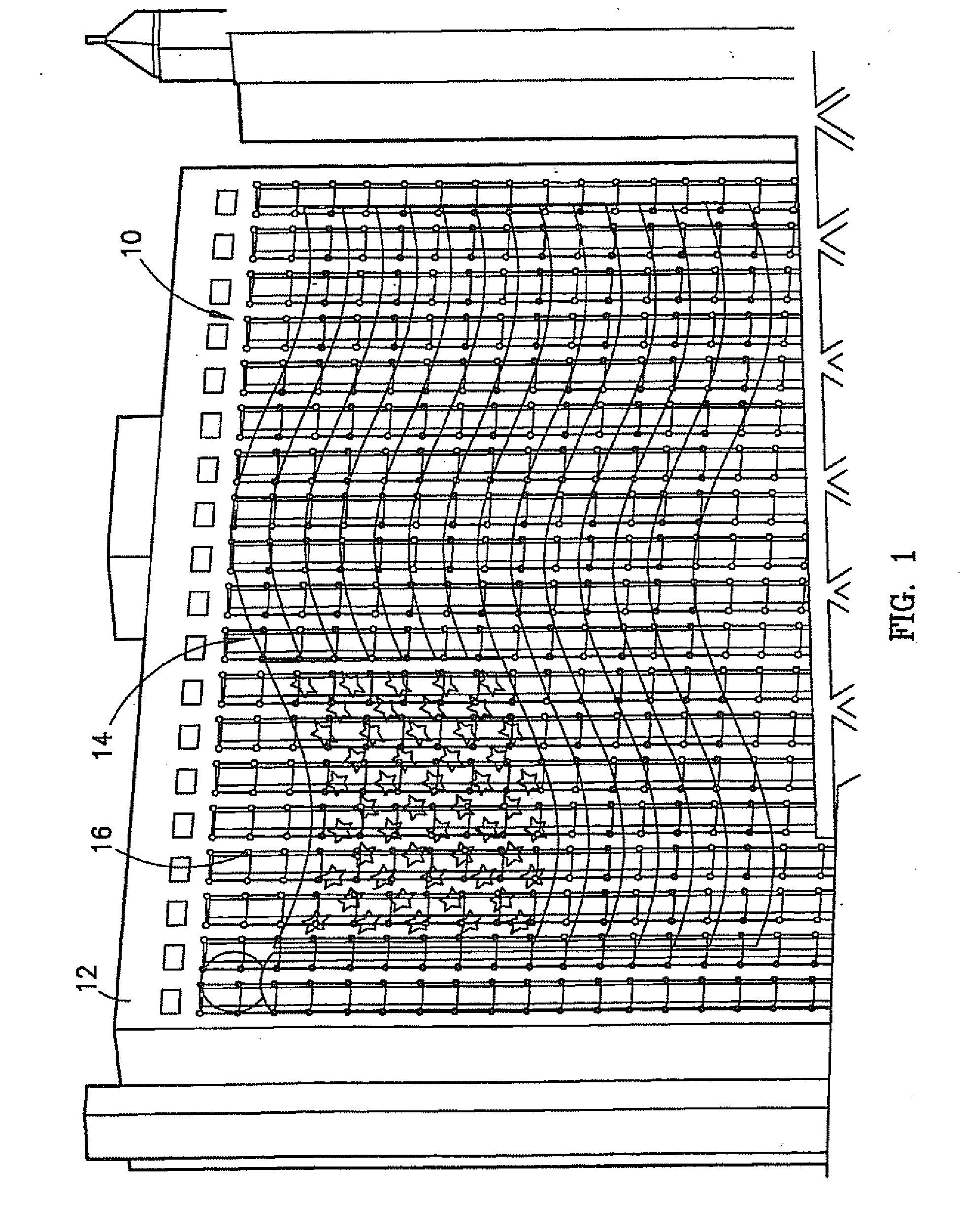

Expanded bit map display for mounting on a building surface and a method of creating same

Inactive | US20090040137A1 | Low cost | Easy to install lamps | Electrical apparatus | Lighting support devices | Graphics | Display device

An expanded bit map display (“EBMD”) (10) for displaying an image (14), and a method of creating such a display for mounting to a building surface (12), are provided. The EBMD (10) is a large-scale colored light display comprising a plurality of light fixtures (16) mounted to the building surface (12). The building surface (12) may include a plurality of irregularities (18) that interfere with the uniformity of a substrate (36) of the building surface (12). The method broadly comprises the steps of (a) selecting the building surface on which to locate the display (20); (b) selecting at least one graphical image to be displayed (22), such as an animated picture or scrolling text; (c) selecting a type of light fixture to mount to the surface (24); (d) determining a plurality of locations on the surface where a plurality of light fixtures can be mounted (26); (e) selecting from the plurality of locations where the light fixtures can be mounted a plurality of optimal locations at which to mount the light fixtures for producing the selected image (28); (f) mounting the light fixtures to the surface (30); (g) assigning lighting characteristics to each light fixture (32); and (h) determining an angular orientation of each light fixture for optimal viewing of the image from a pre-determined vantage point (34).

Owner:FLEXTRONICS IND

Adaptive transparency of virtual vehicle in simulated imaging system

Active | US10896335B2 | Easy to identify | Image analysis | Road vehicles traffic control | Location detection | Virtual vehicle

A visual scene around a vehicle is displayed to an occupant of the vehicle on a display panel as a virtual three-dimensional image from an adjustable point of view outside the vehicle. A simulated image is assembled corresponding to a selected vantage point on an imaginary parabolic surface outside the vehicle from exterior image data and a virtual vehicle image superimposed on a part of the image data. Objects are detected at respective locations around the vehicle subject to potential impact. An obstruction ratio is quantified for a detected object having corresponding image data in the simulated image obscured by the vehicle image. When the detected object has an obstruction ratio above an obstruction threshold, a corresponding bounding zone of the vehicle image is rendered at least partially transparent in the simulated image to unobscure the corresponding image data.

Owner:FORD GLOBAL TECH LLC

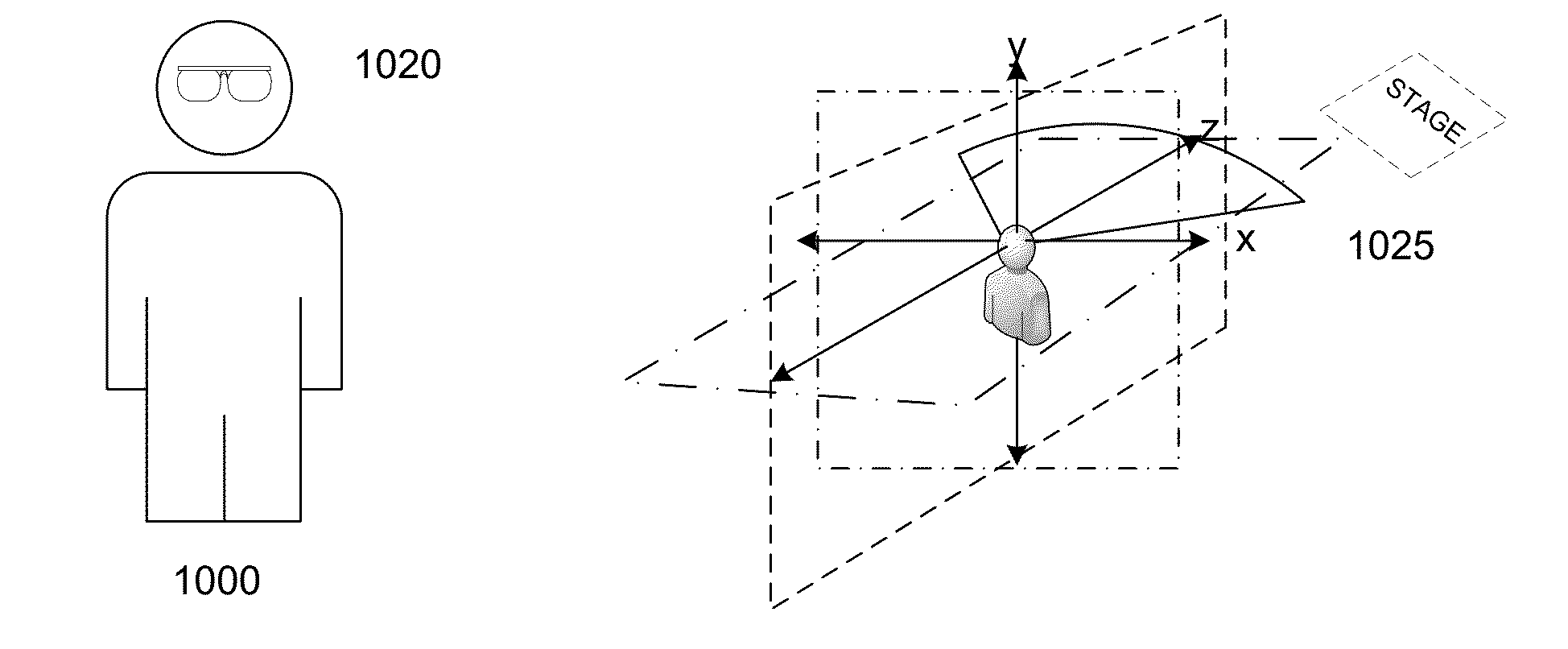

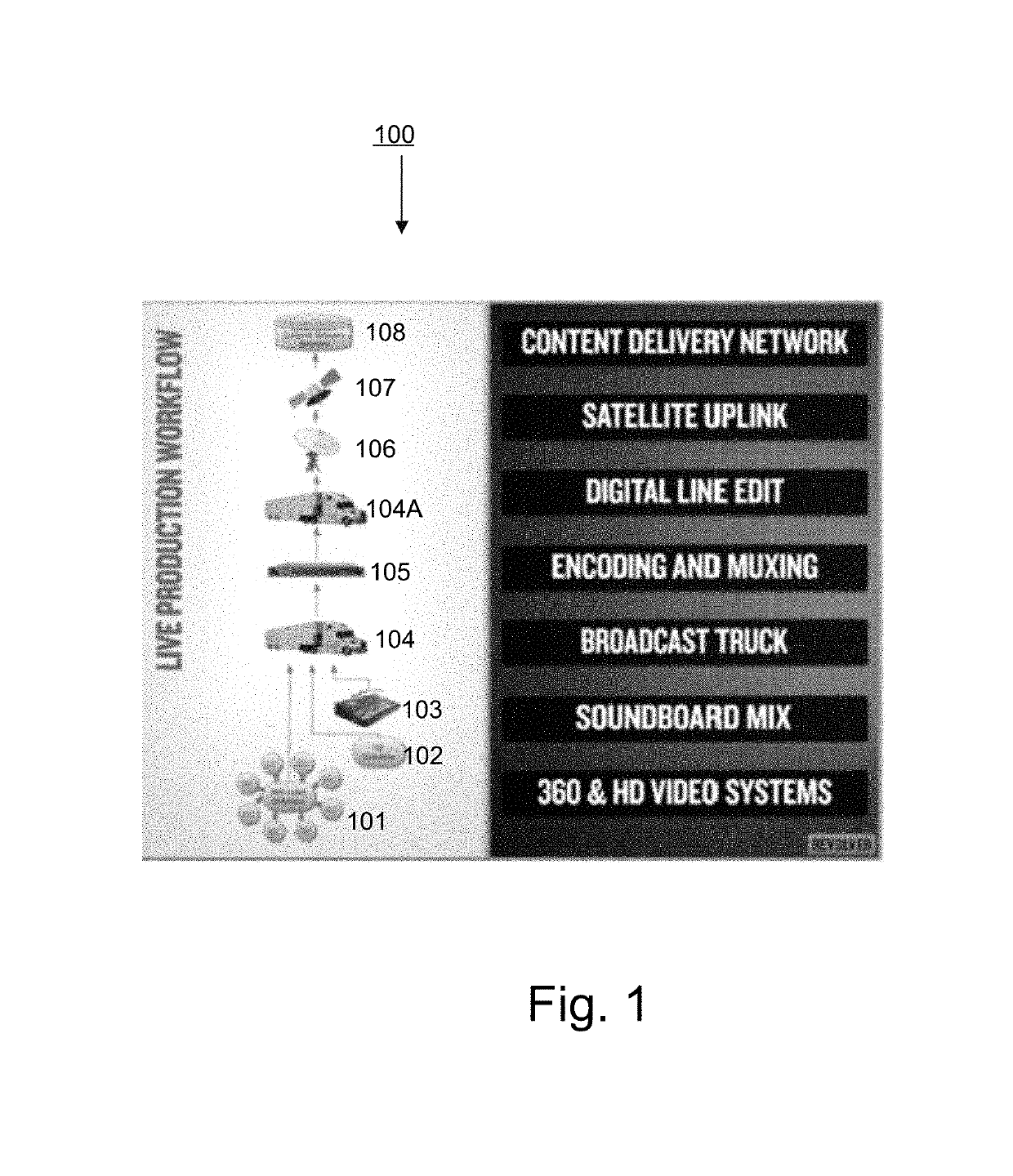

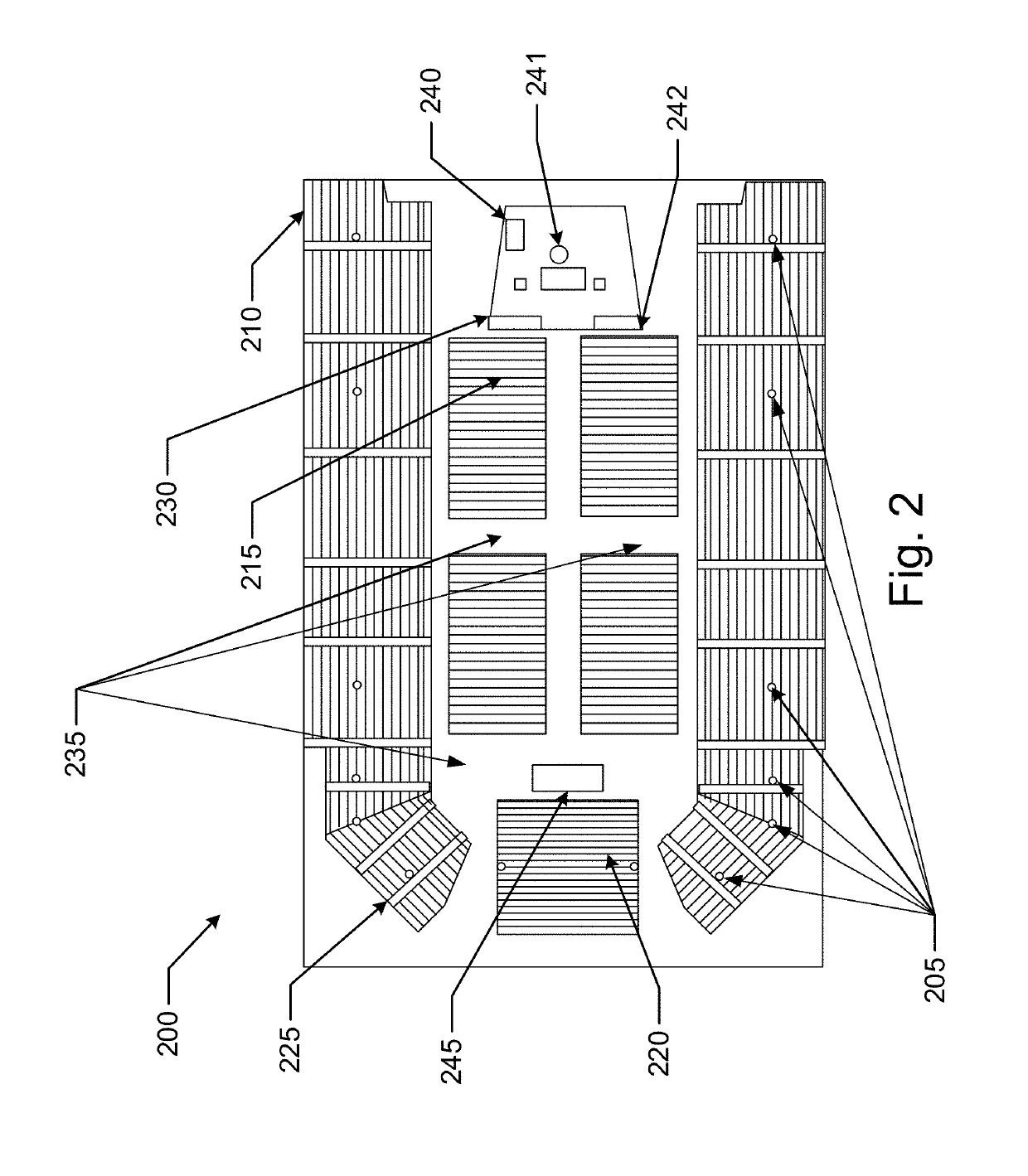

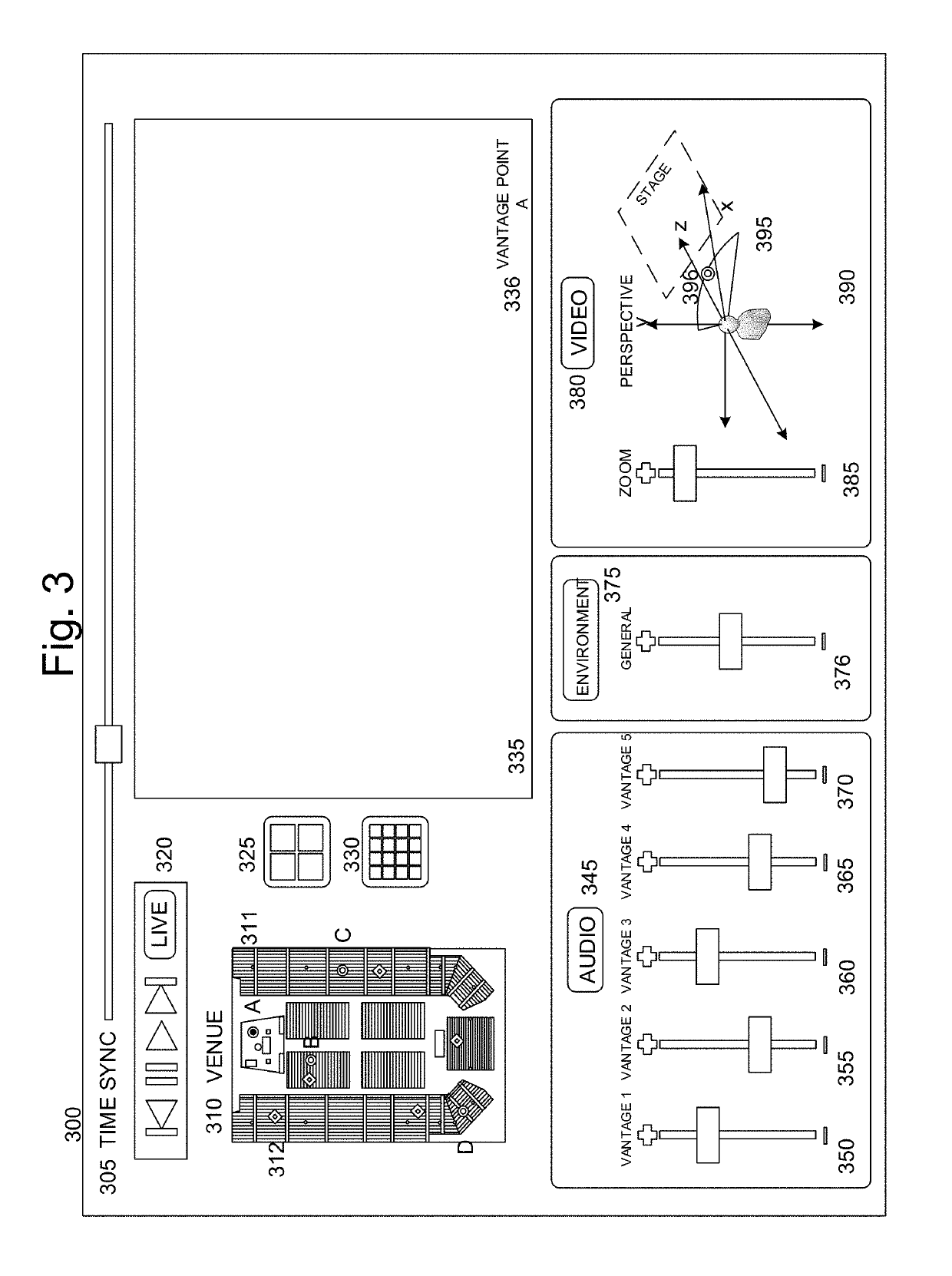

Multi vantage point player with wearable display

Active | US20160070346A1 | Deeper immersion into the event performance | Input/output for user-computer interaction | Television system details | Vantage point | Engineering

The present invention relates to methods and apparatus for utilizing a wearable display device for viewing streaming video captured from multiple vantage points. More specifically, the present invention presents methods and apparatus for controlling the immersive experience through player configurations for viewing image data captured in two dimensional or three dimensional data formats and from multiple disparate points of capture based on venue specific characteristics, wherein the viewing experience may emulate observance of an event from at least two of the multiple points of capture in specifically chosen locations of a particular venue.

Owner:LIVESTAGE

Multi vantage point audio player

Active | US10664225B2 | Computer control | Closed circuit television systems | Computer graphics (images) | Engineering

Owner:LIVESTAGE

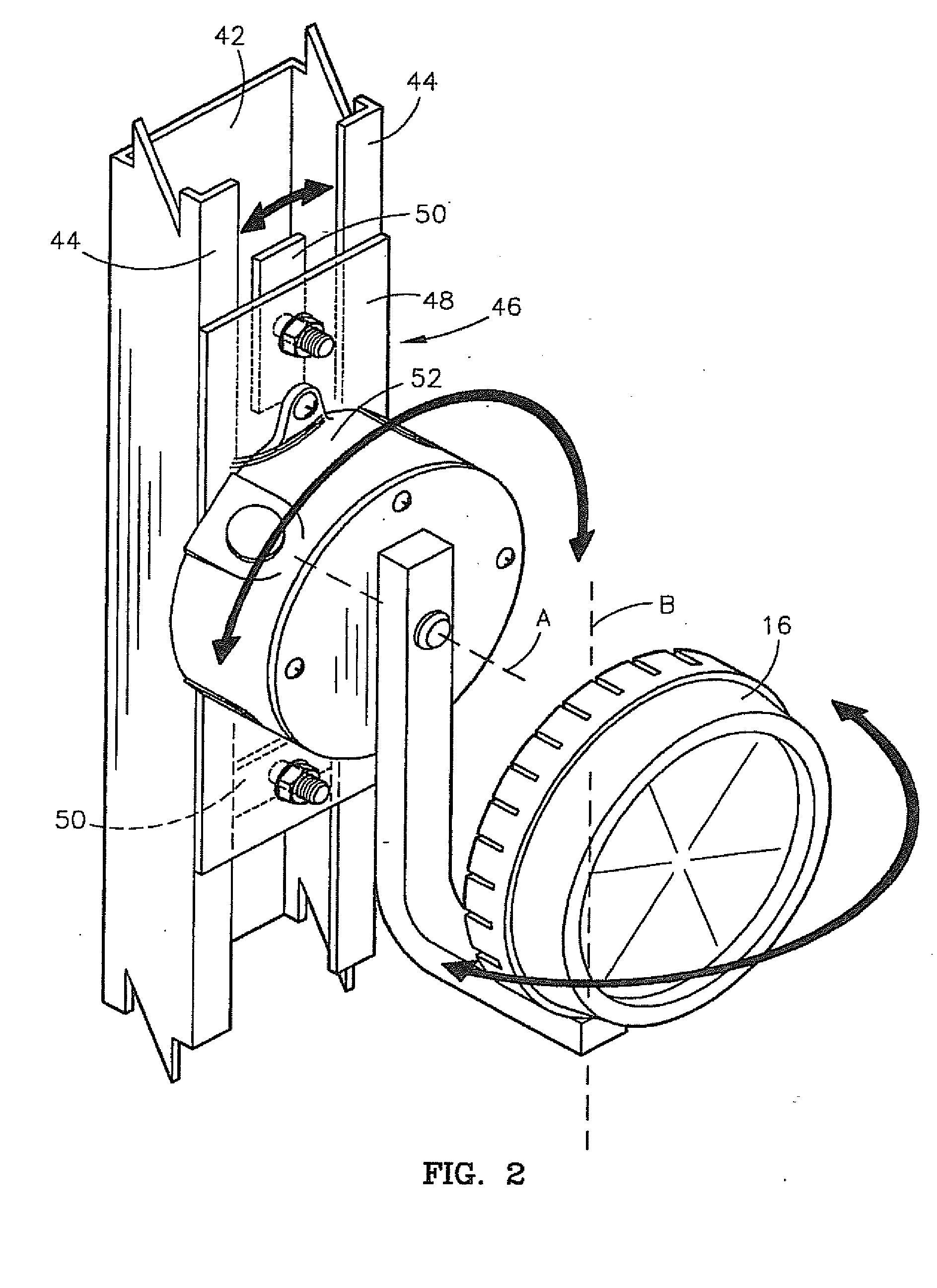

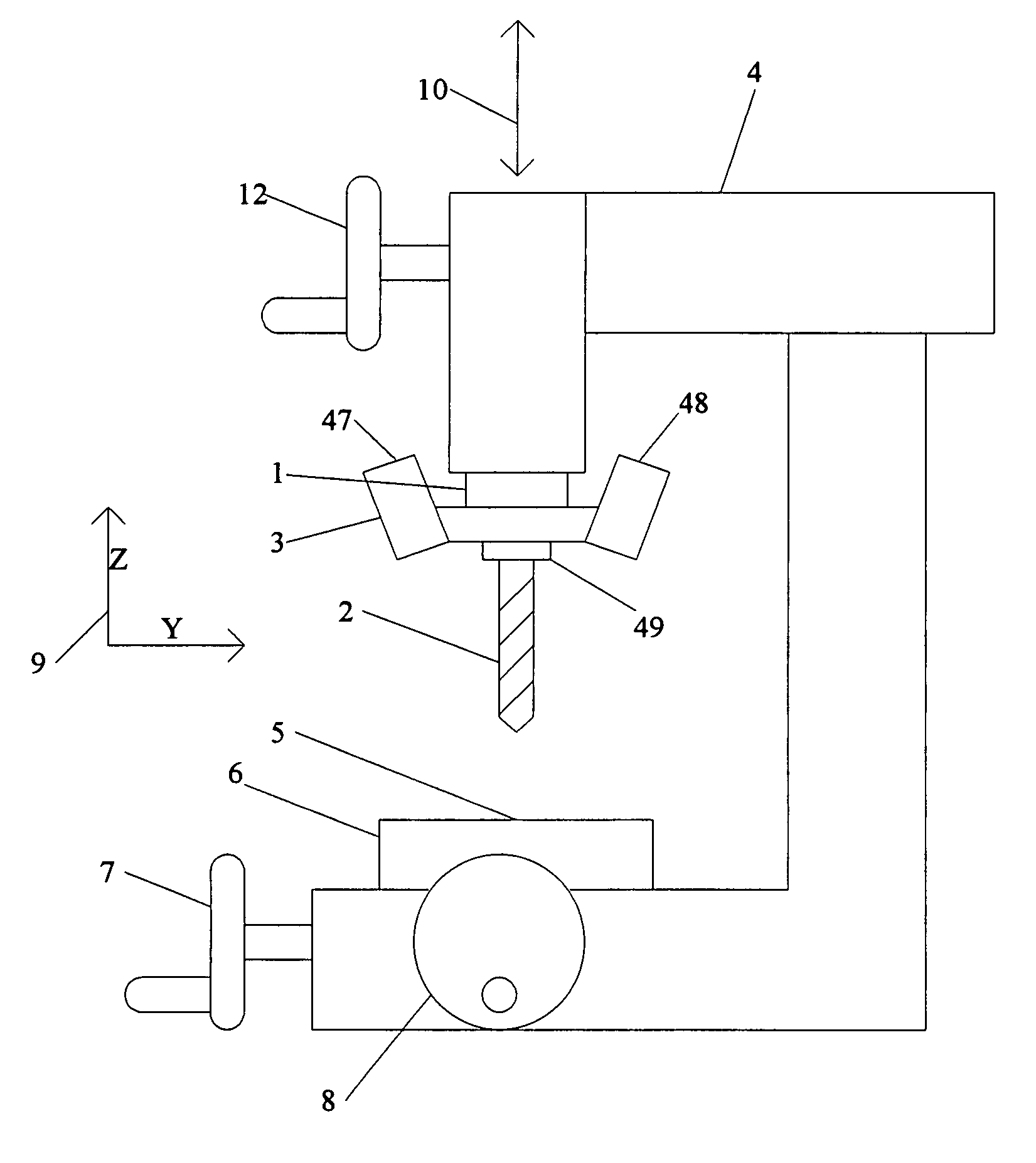

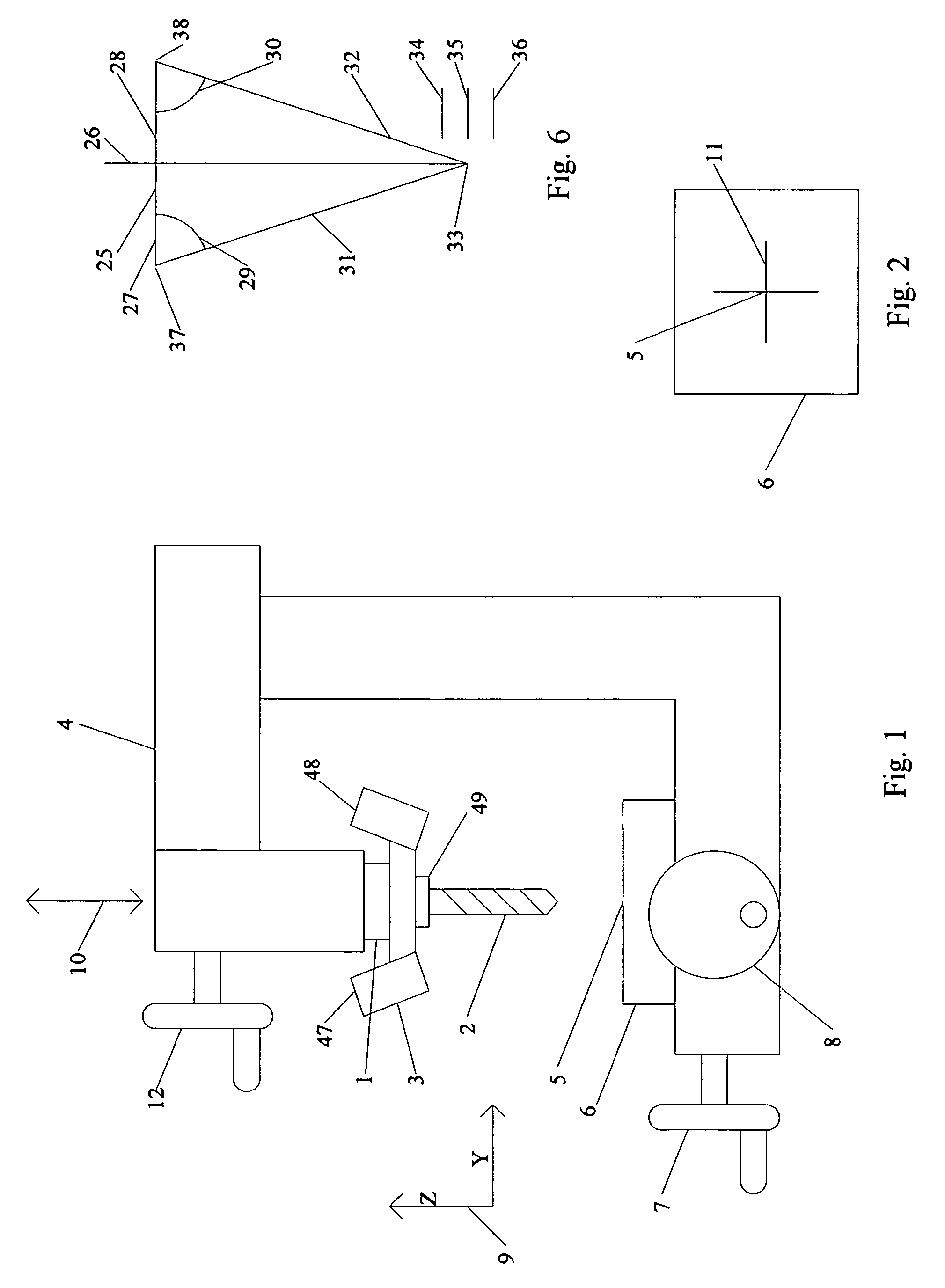

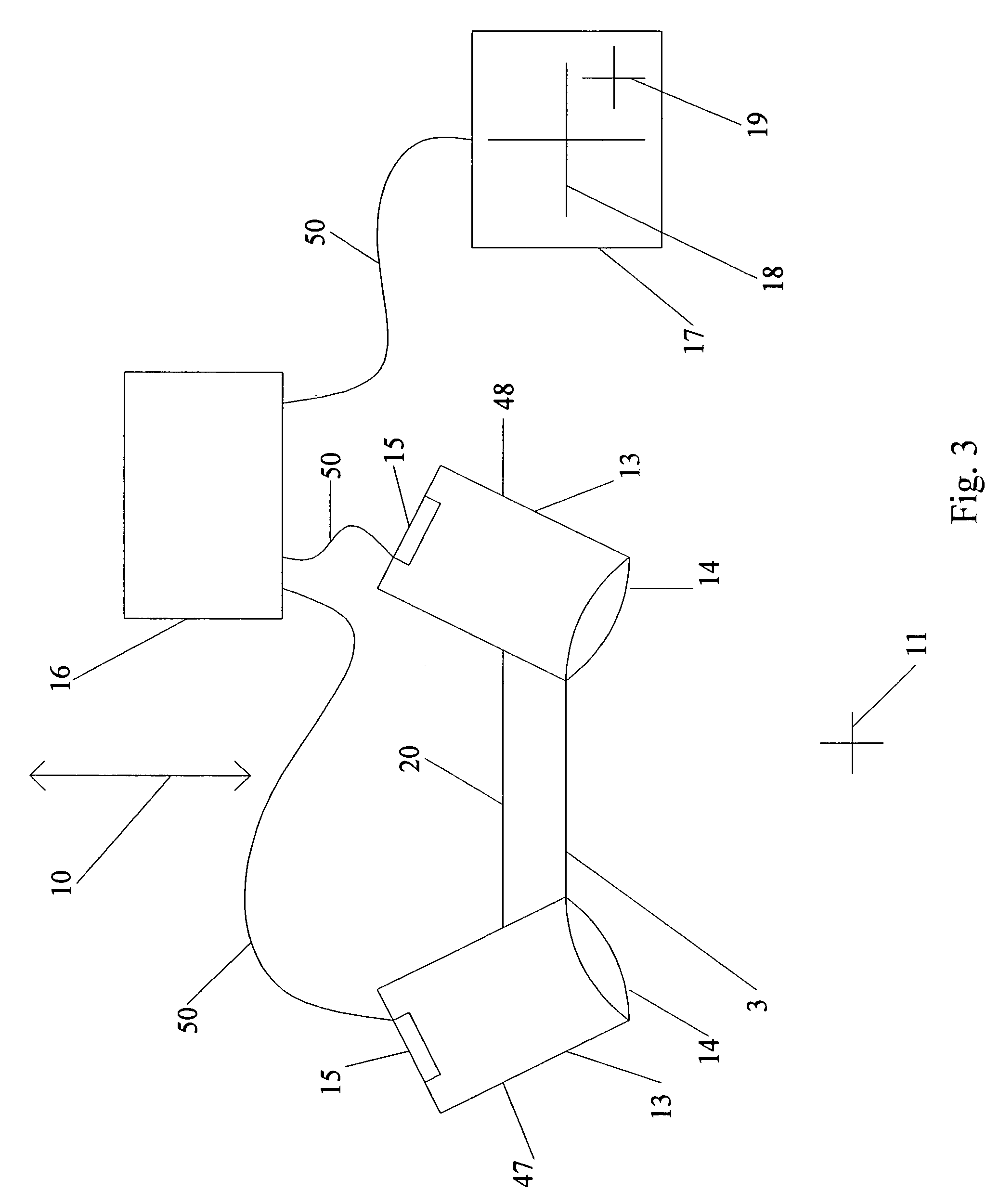

Video centerscope for machine alignment

Inactive | US7405388B2 | Investigating moving sheets | Counting objects on conveyors | Display device | Computer science

This invention can be attached to the head of a milling machine and performs the functions of a center scope and an edge finder. Unlike a center scope or an edge finder, it does not have to be removed from the machine before the machine is used. The invention performs this function by processing images from two cameras that are shot from off-axis vantage points. The two images are processed to create a synthetic image that appears to be shot from the on-axis vantage point. The processor adds a cross hair to the image to indicate the point on the workpiece that is exactly at the center axis of the quill. This allows the machine operator to position the spindle precisely over a target point on the workpiece in a convenient and safe manner.

Owner:REILLEY PETER V

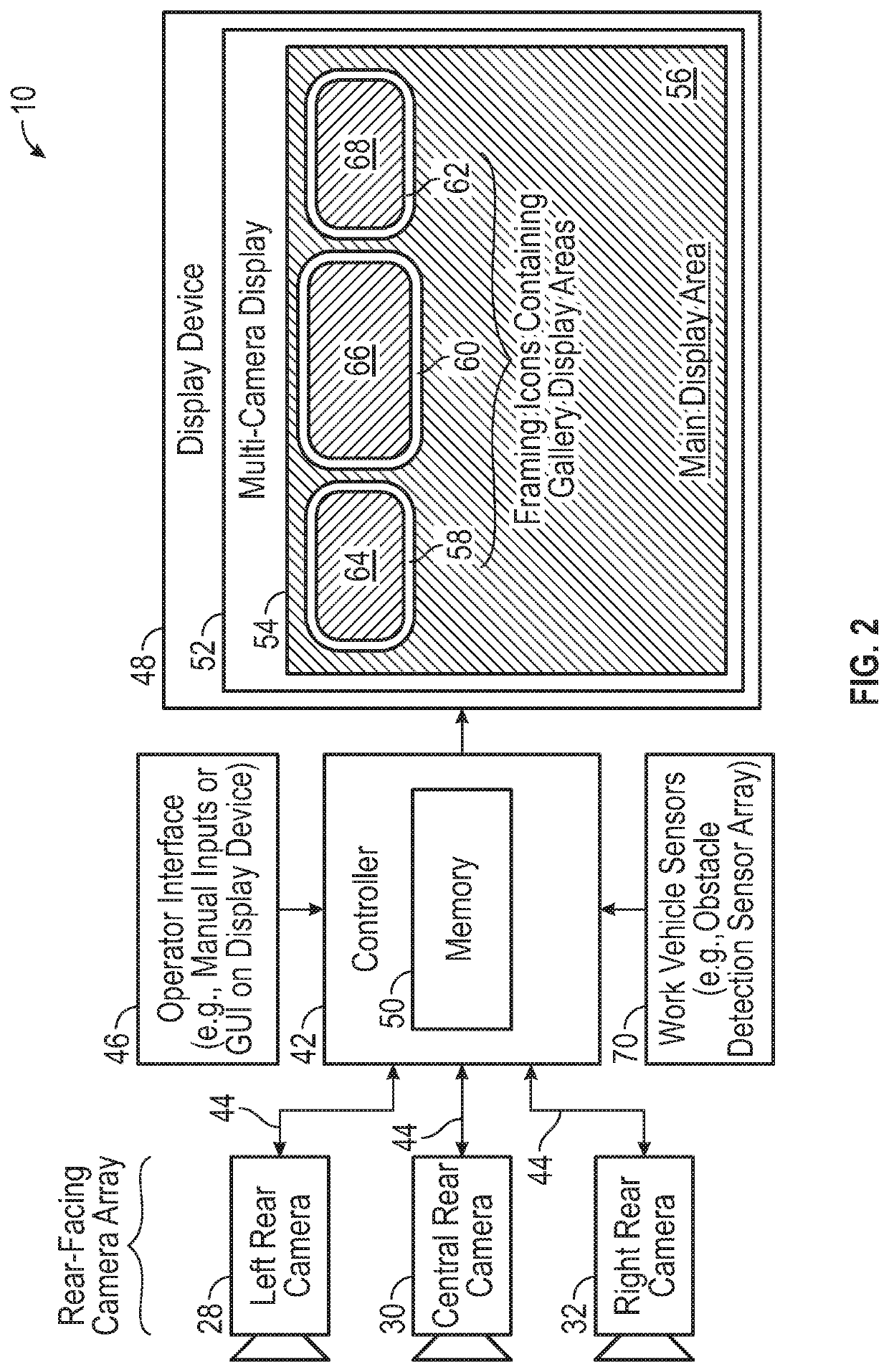

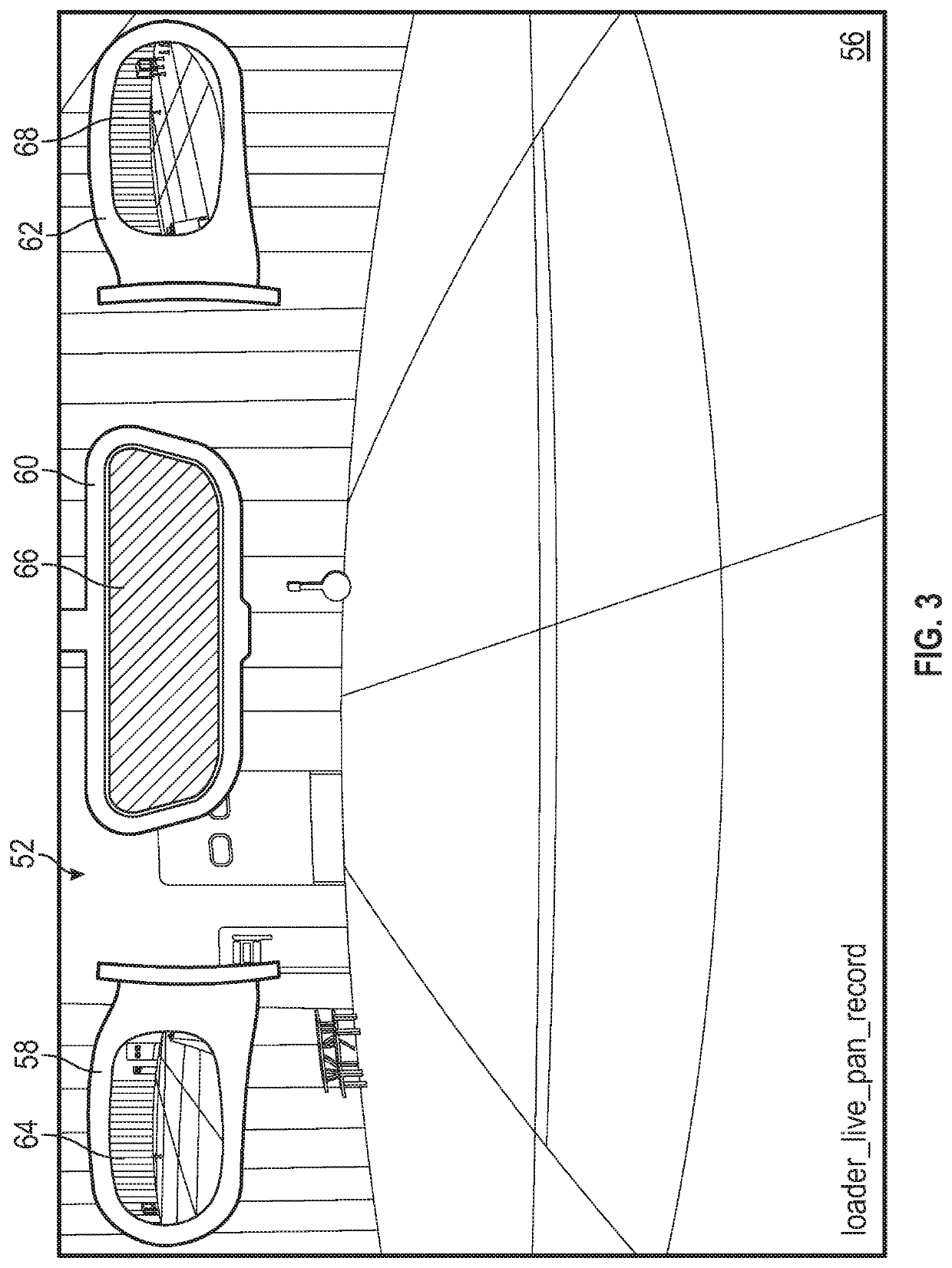

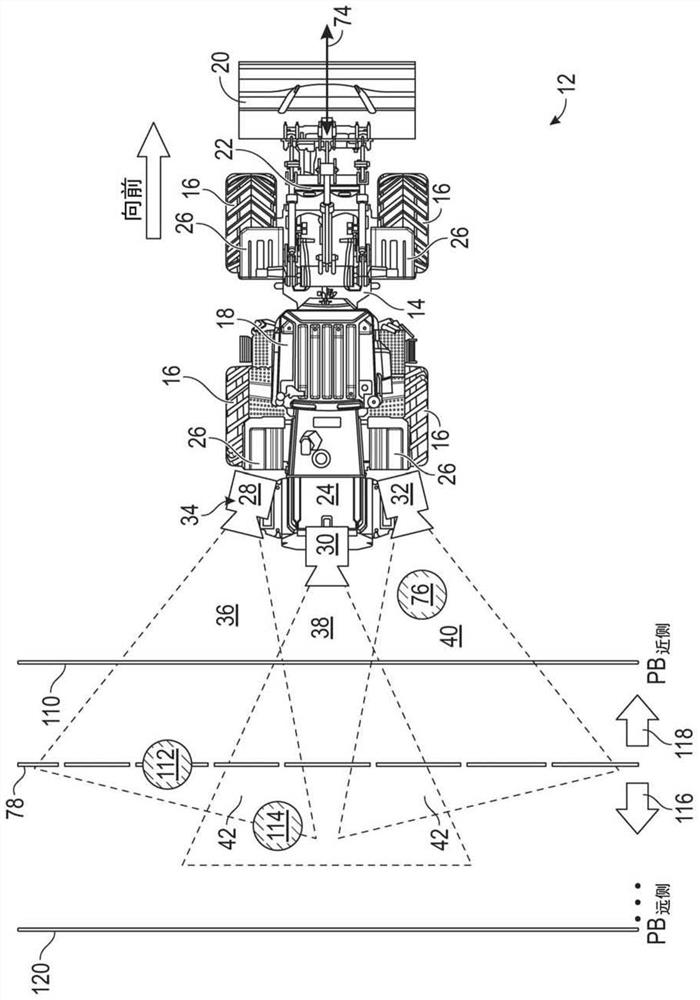

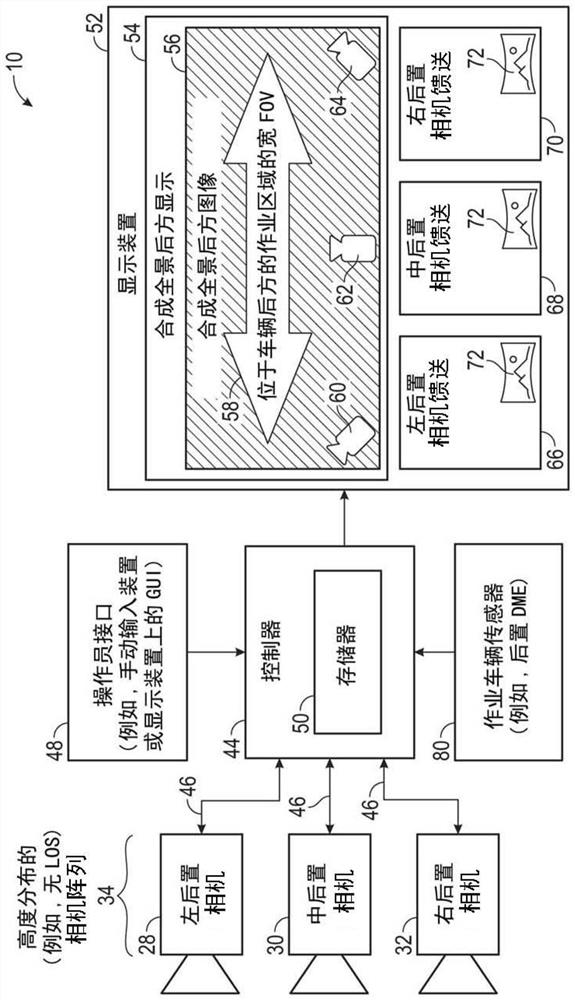

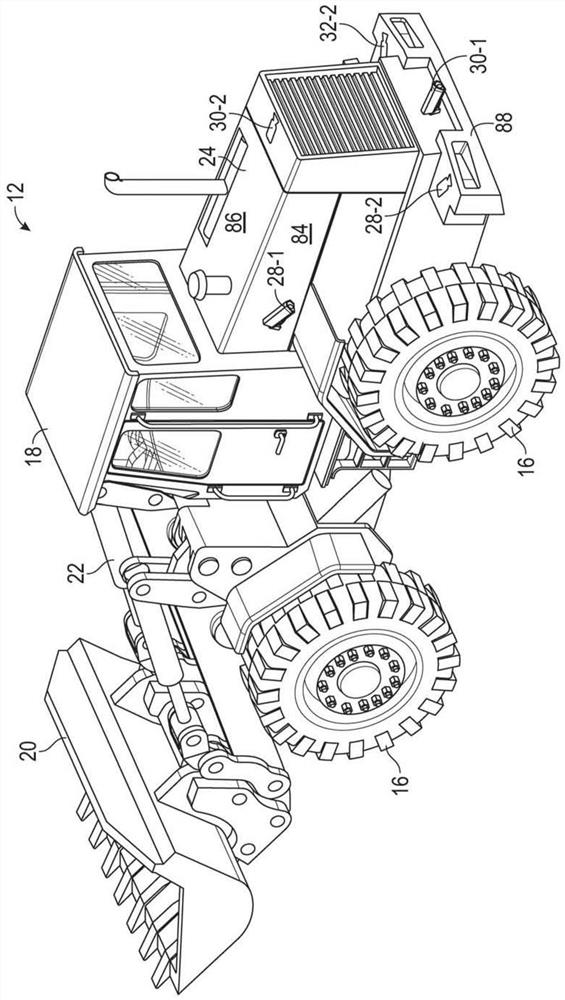

Work vehicle multi-camera vision systems

A multi-camera vision system utilized onboard a work vehicle includes, among other components, vehicle cameras and a display device utilized within an operator station of the work vehicle. The vehicle cameras provide vehicle camera feeds of the work vehicle's surrounding environment, as captured from different vantage points. A controller, which is coupled to the vehicle cameras and to the display device, is configured to: (i) generate, on the display device, a multi-camera display including framing icons, gallery display areas within the framing icons, and a main display area; (ii) identify a currently-selected vehicle camera feed and one or more non-selected vehicle camera feeds from the multiple vehicle camera feeds; and (iii) present the currently-selected vehicle camera feed in the main display area of the multi-camera display, while concurrently presenting the one or more non-selected vehicle camera feeds in a corresponding number of the gallery display areas.

Owner:DEERE & CO

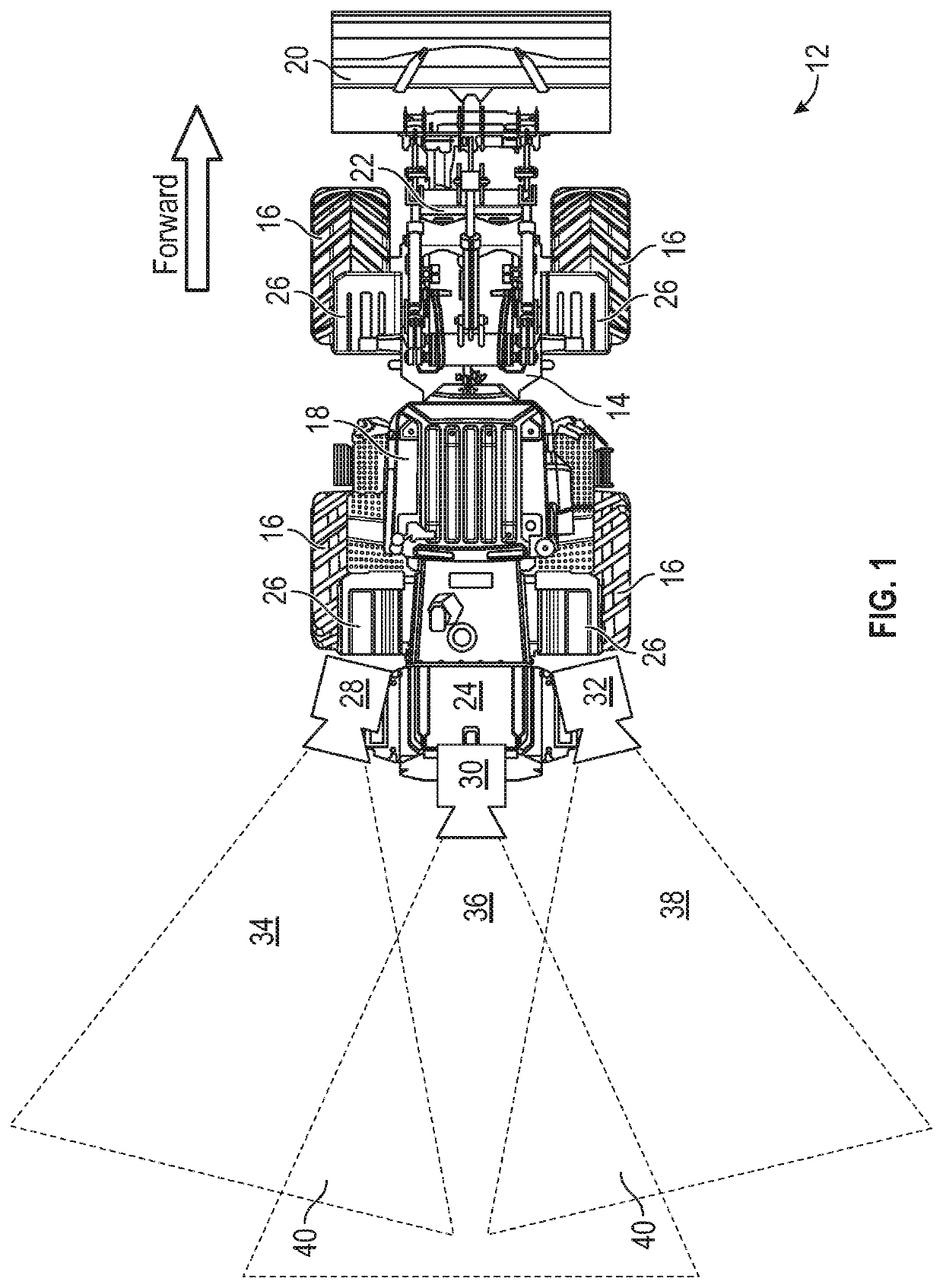

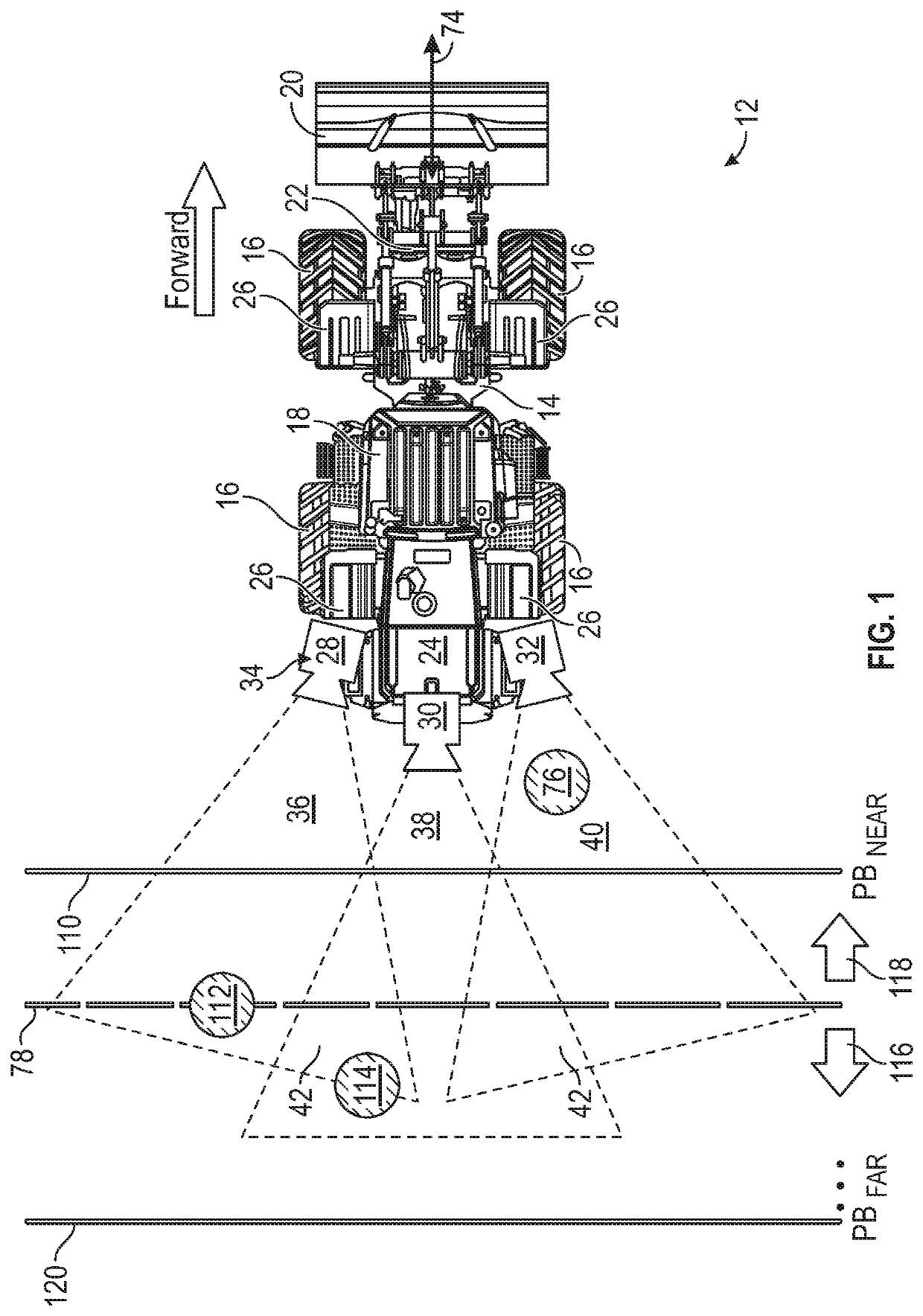

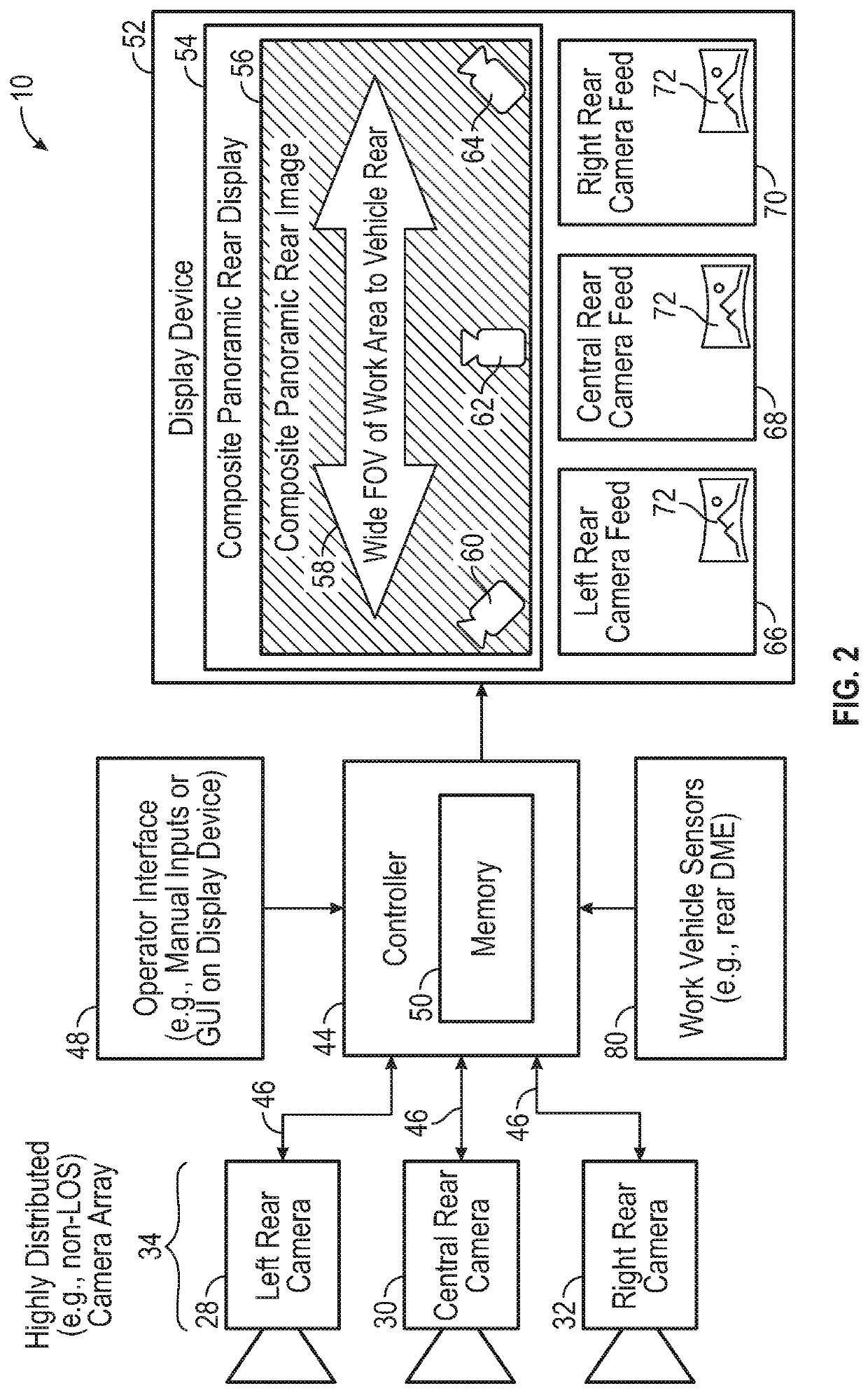

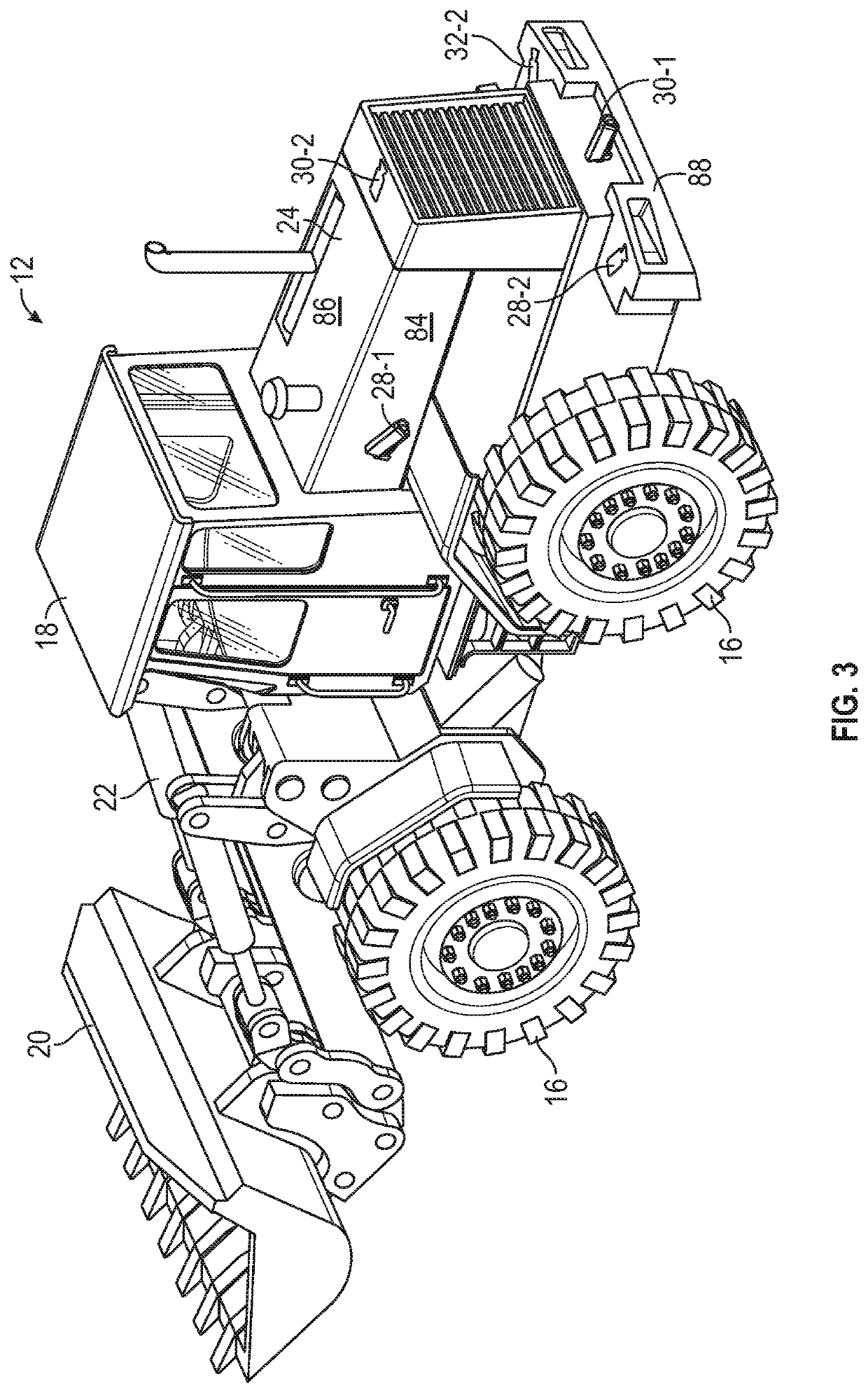

Work vehicle composite panoramic vision systems

Active | US10882468B1 | Television system details | Pedestrian/occupant safety arrangement | Computer graphics (images) | In vehicle

A composite panoramic vision system utilized onboard a work vehicle includes a display device, a vehicle-mounted camera array, and a controller. The vehicle-mounted camera array includes, in turn, first and second vehicle cameras having partially overlapping fields of view and positioned to capture first and second camera feeds, respectively, of the work vehicle's exterior environment from different vantage points. During operation of the composite panoramic vision system, the controller receives the first and second camera feeds from the first and second cameras, respectively; generates a composite panoramic image of the work vehicle's exterior environment from at least the first and second camera feeds; and then presents the composite panoramic image on the display device for viewing by an operator of the work vehicle.

Owner:DEERE & CO

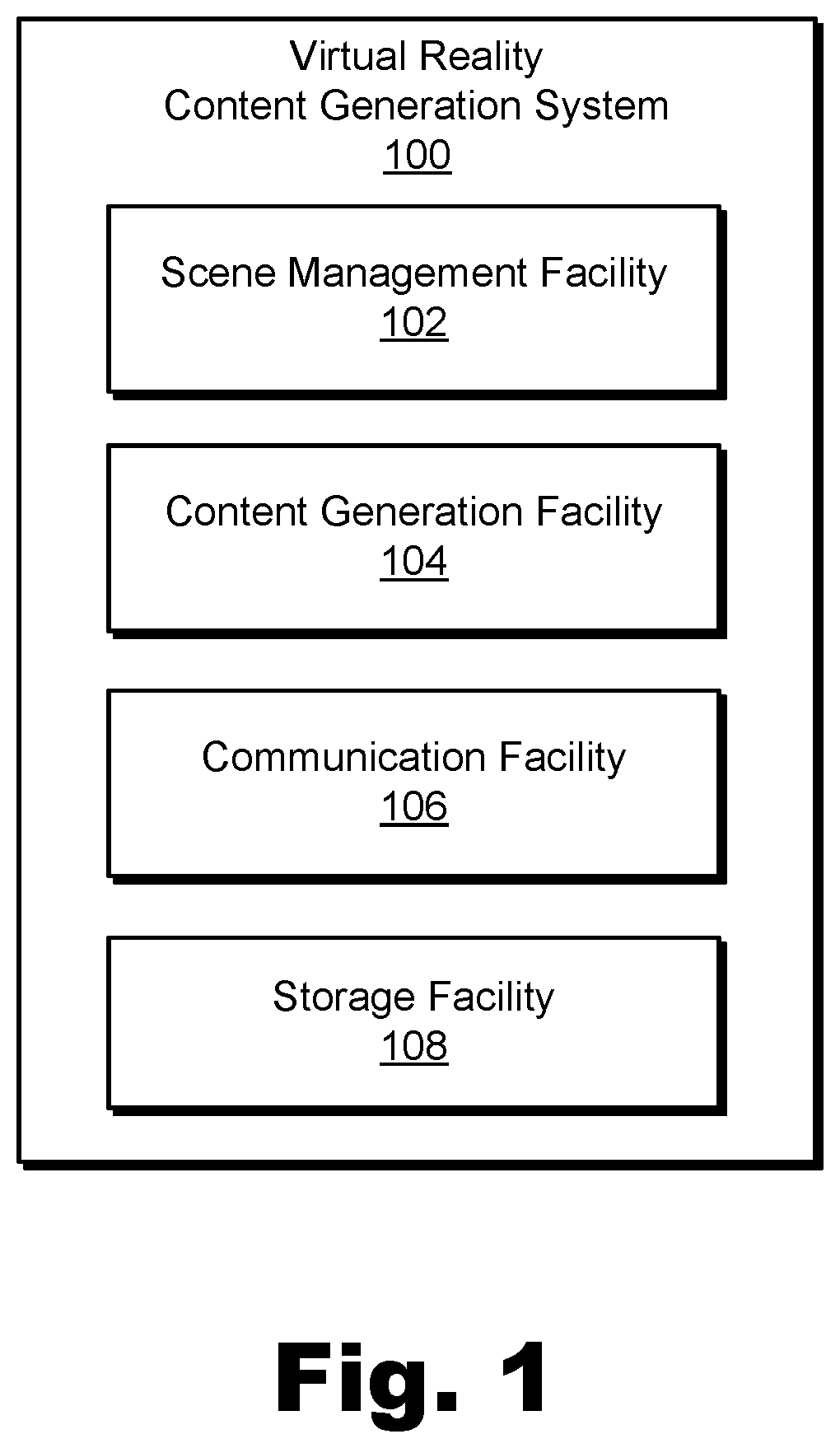

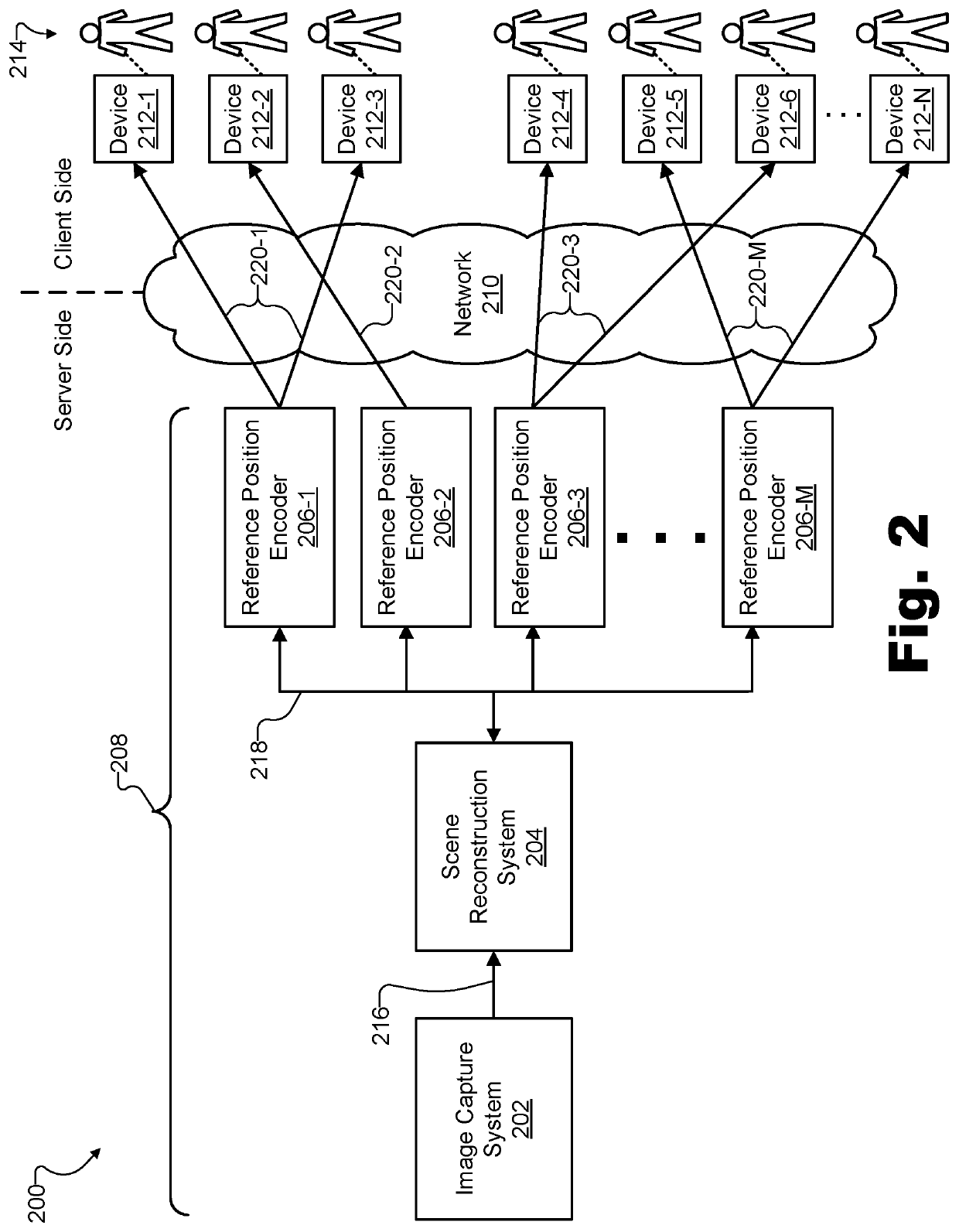

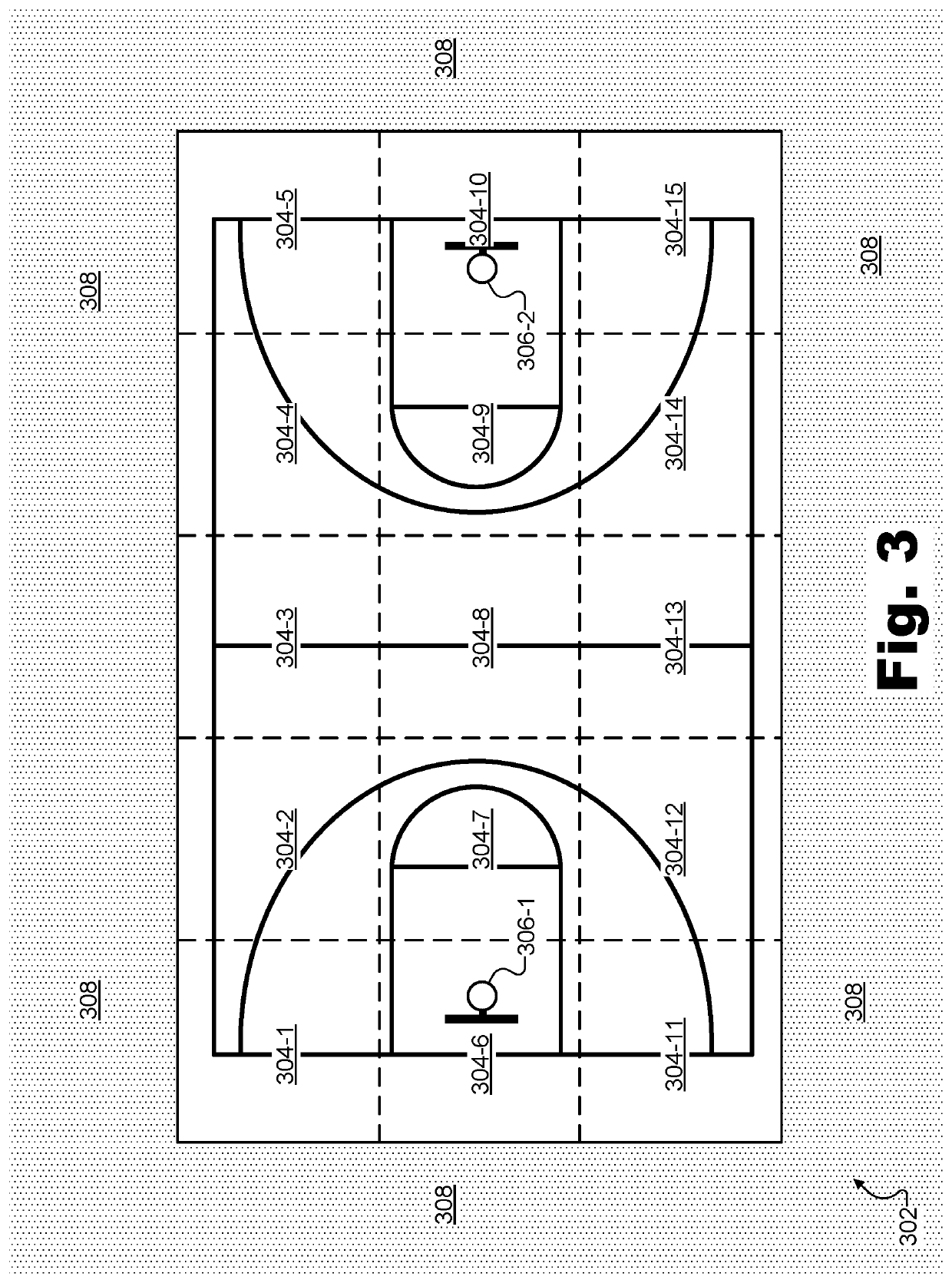

Methods and Systems for Representing a Scene By Combining Perspective and Orthographic Projections

An exemplary virtual reality content generation system manages state data representing a virtual reality scene. Based on the state data, the system generates a scene representation of the virtual reality scene that includes a set of surface data frame sequences each depicting a different projection of the virtual reality scene from a different vantage point. The different projections include a plurality of orthographic projections that are generated based on orthographic vantage points and are representative of a core portion of the virtual reality scene. The different projections also include a plurality of perspective projections that are generated based on perspective vantage points and are representative of a peripheral portion of the virtual reality scene external to the core portion. The system further provides the scene representation to a media player device by way of a network.

Owner:VERIZON PATENT & LICENSING INC

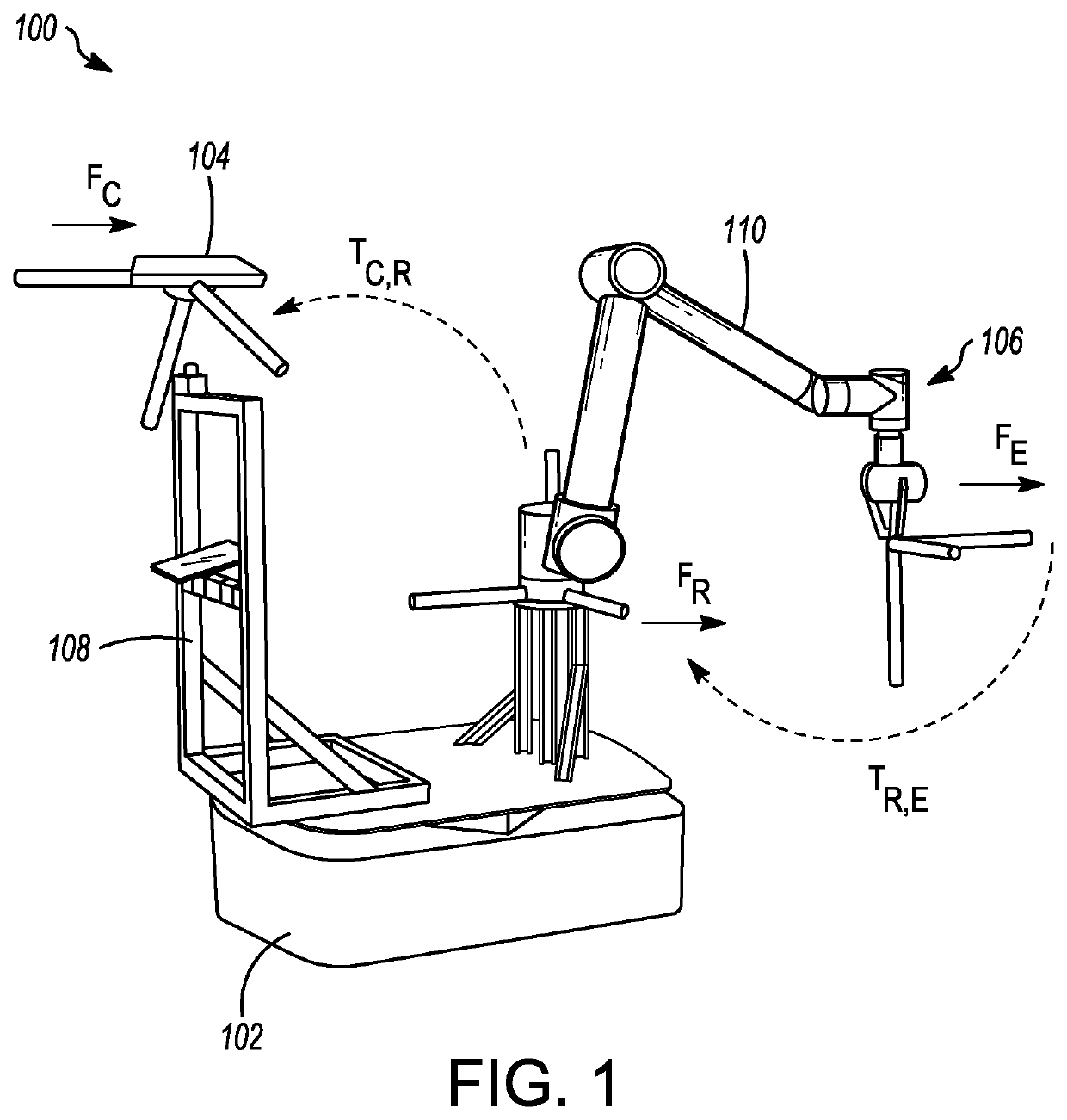

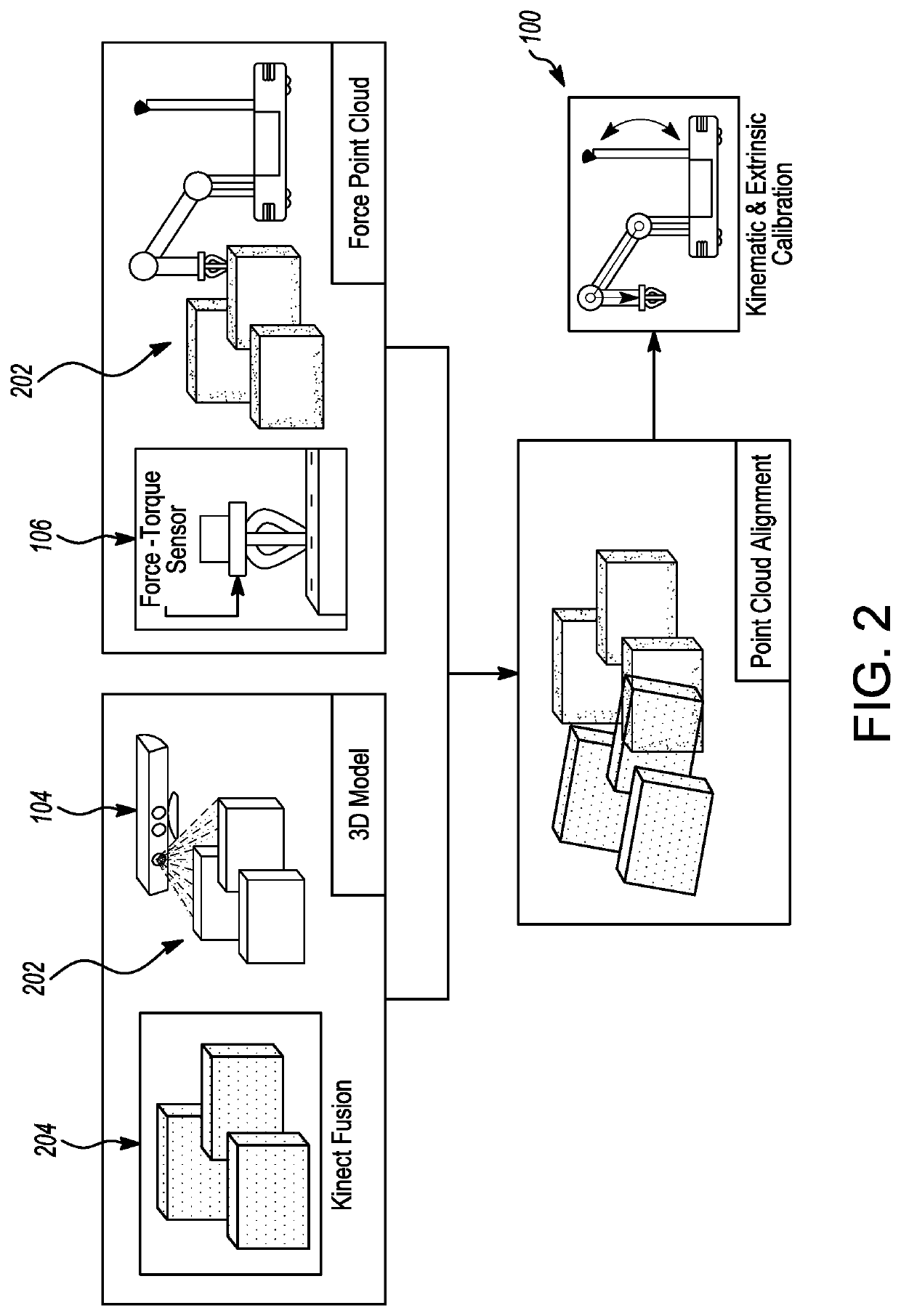

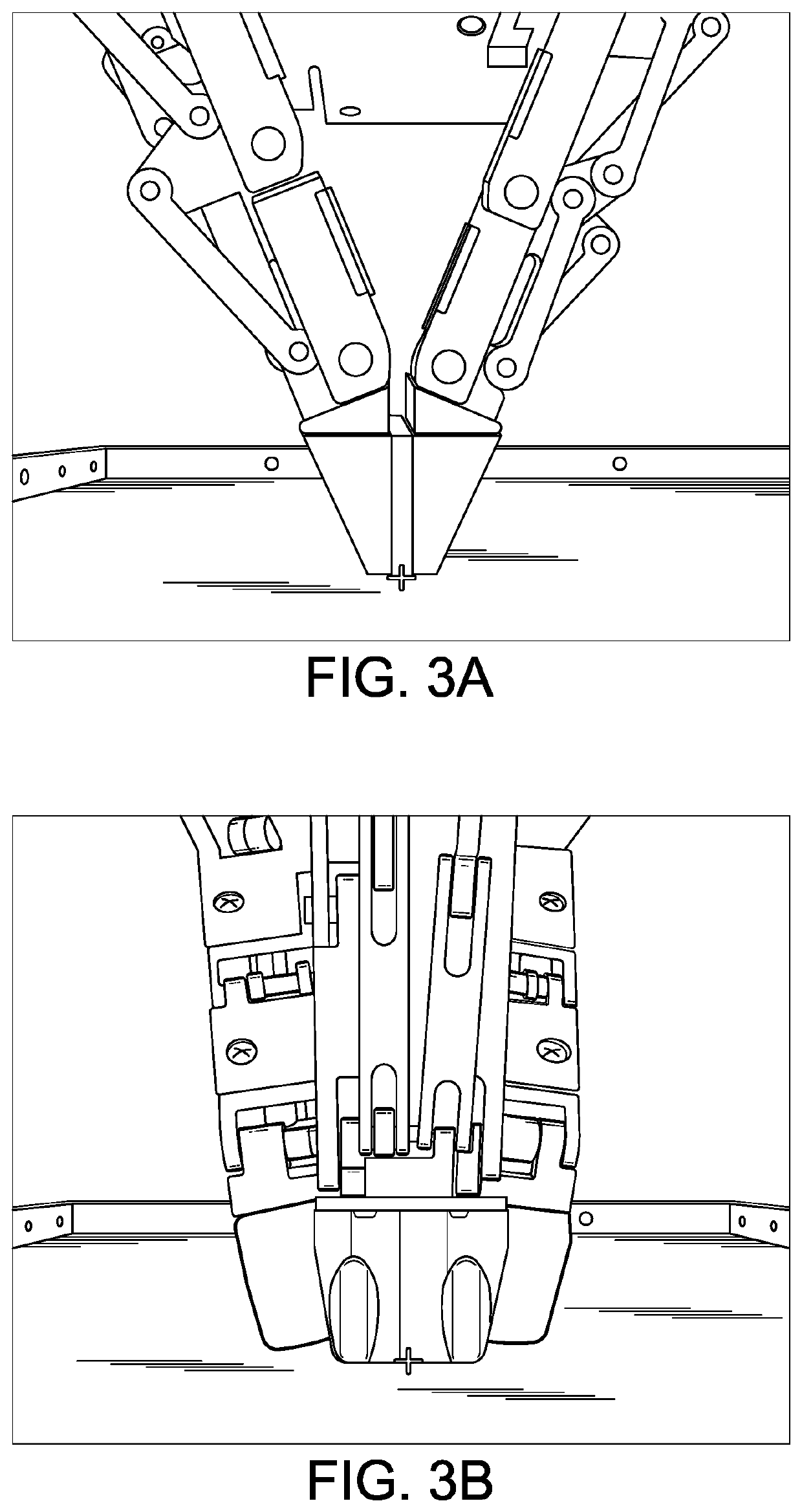

Method of calibrating a mobile manipulator

A method is provided for calibrating a manipulator and an external sensor. The method includes generating a first cloud map using depth information collected using a depth sensor, and generating a second cloud map using contact information collected using a contact sensor, which is coupled to an end effector of the manipulator. Thereafter, the first cloud map and the second cloud map are aligned to recover extrinsic parameters using the iterative closest point algorithm. The depth sensor is stationary relative to a base frame of the manipulator, and the depth information corresponding to a rigid structure is collected by capturing depth information from multiple vantage points by navigating the manipulator. The contact information is collected by moving an end effector of the manipulator over the rigid structure.

Owner:THE GOVERNING COUNCIL OF THE UNIV OF TORONTO

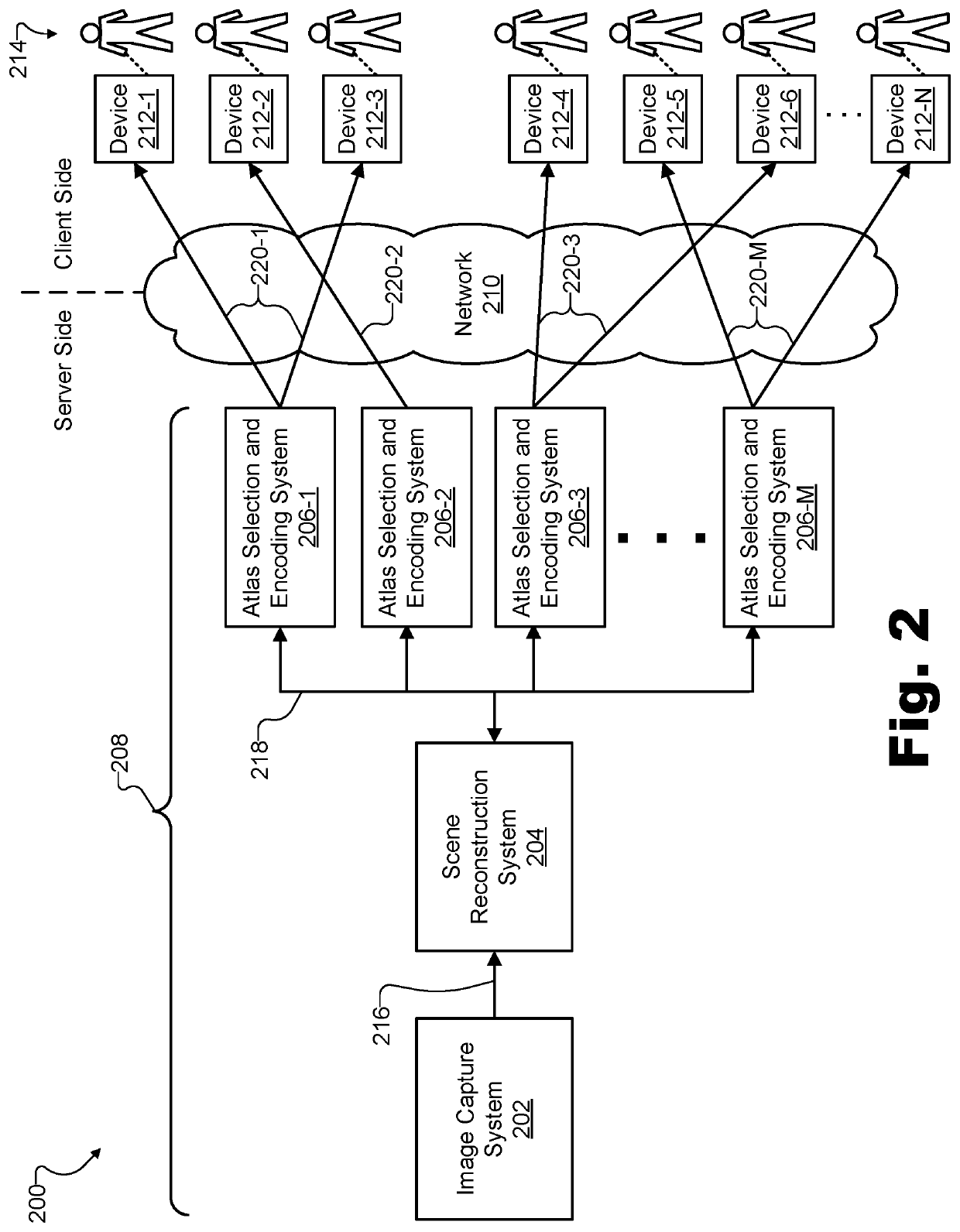

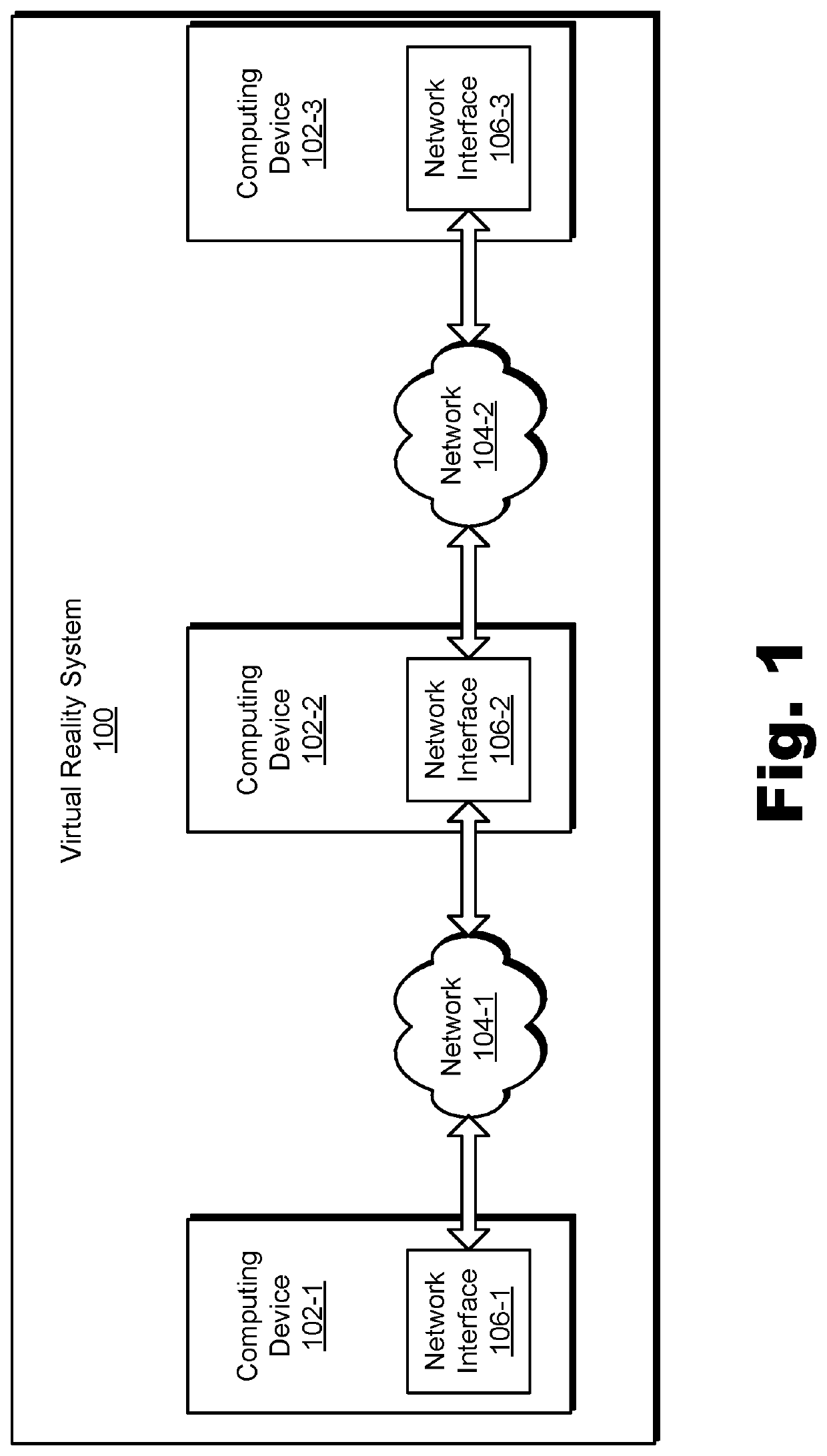

Methods and systems for transmitting data in a virtual reality system

Active | US10645360B2 | Television system details | Static indicating devices | Data pack | Computer graphics (images)

An exemplary method includes a first physical computing device of a virtual reality system acquiring, from a capture device physically disposed at a vantage point in relation to a three-dimensional (“3D”) scene, surface data for the 3D scene, the surface data including a first instance of a multi-bit frame, separating the first instance of the multi-bit frame into a most significant byte (“MSB”) frame and a least significant byte (“LSB”) frame, compressing the MSB frame, and transmitting the LSB frame and the compressed MSB frame to a second physical computing device of the virtual reality system by way of the network interface.

Owner:VERIZON PATENT & LICENSING INC
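The byte-splitting step in this abstract lends itself to a short Python sketch. It assumes 16-bit samples (for example, depth values) and uses `zlib` as a stand-in compressor, since the abstract does not name a compression scheme; the function names are hypothetical and the network transmission itself is left out.

```python
import zlib


def split_frame(frame):
    """Split 16-bit samples into an MSB frame and an LSB frame (one byte each)."""
    msb = bytes((value >> 8) & 0xFF for value in frame)
    lsb = bytes(value & 0xFF for value in frame)
    return msb, lsb


def pack_for_transmission(frame):
    """Compress only the MSB frame; the LSB frame is passed through as-is."""
    msb, lsb = split_frame(frame)
    return zlib.compress(msb), lsb


def unpack(compressed_msb, lsb):
    """Receiver side: decompress the MSB frame and recombine with the LSB frame."""
    msb = zlib.decompress(compressed_msb)
    return [(high << 8) | low for high, low in zip(msb, lsb)]


# A made-up 64-sample frame whose values vary slowly, as depth data often does.
depth_frame = [1000 + (i % 7) for i in range(64)]
compressed_msb, lsb_frame = pack_for_transmission(depth_frame)
restored = unpack(compressed_msb, lsb_frame)
```

One plausible motivation for the split is that the high bytes of neighboring samples tend to repeat and therefore compress well, while the low bytes behave more like noise; the abstract itself does not state this rationale.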

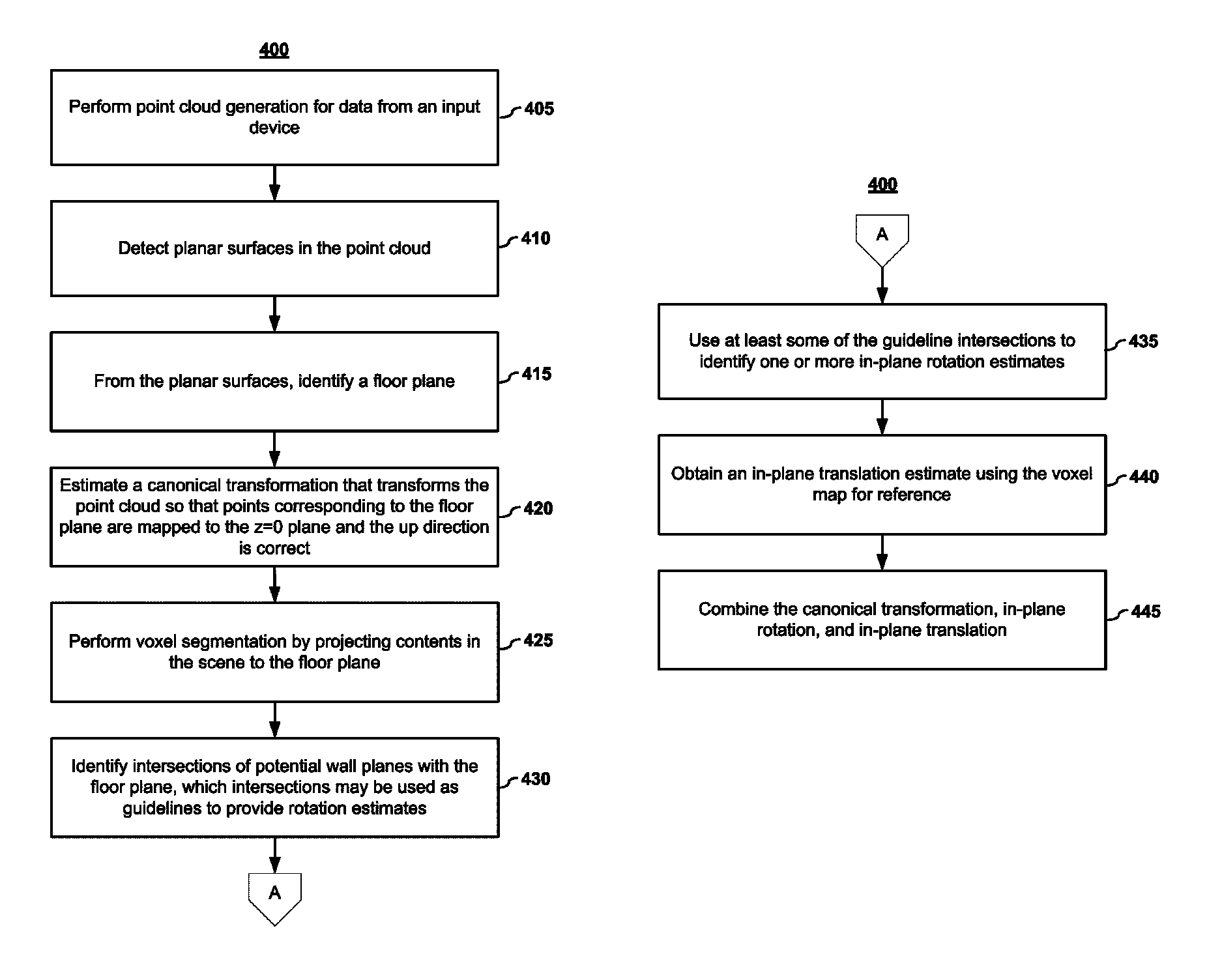

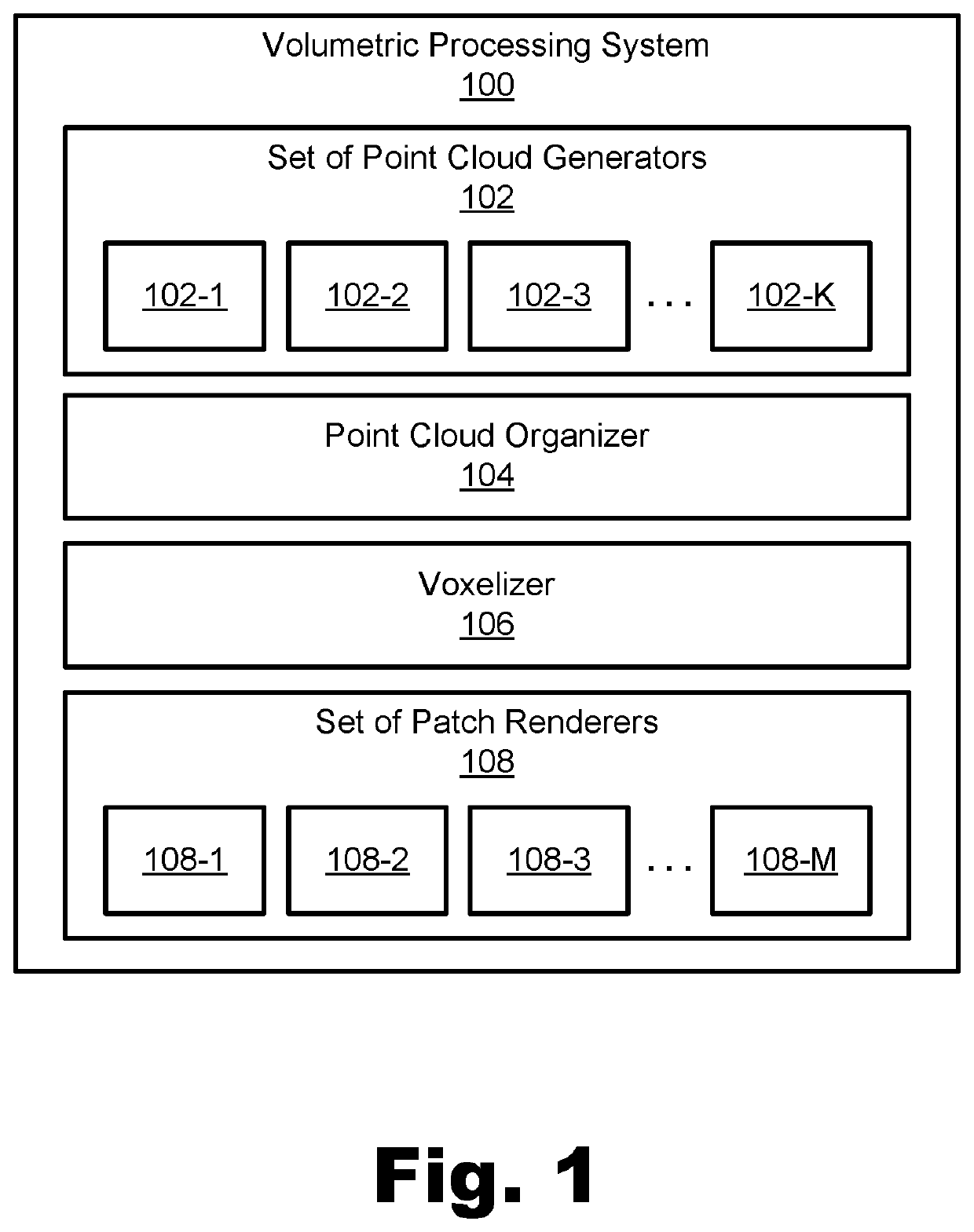

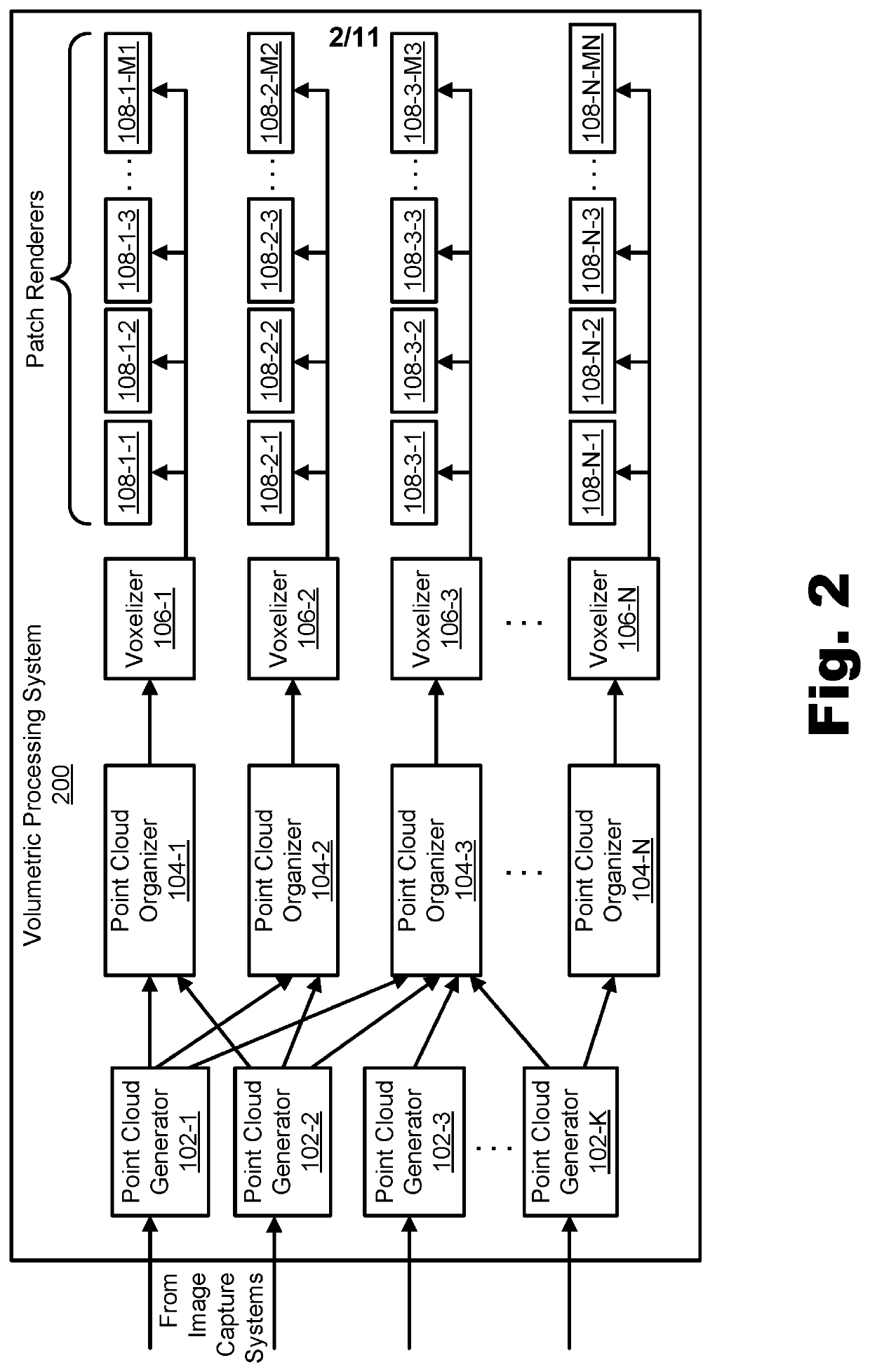

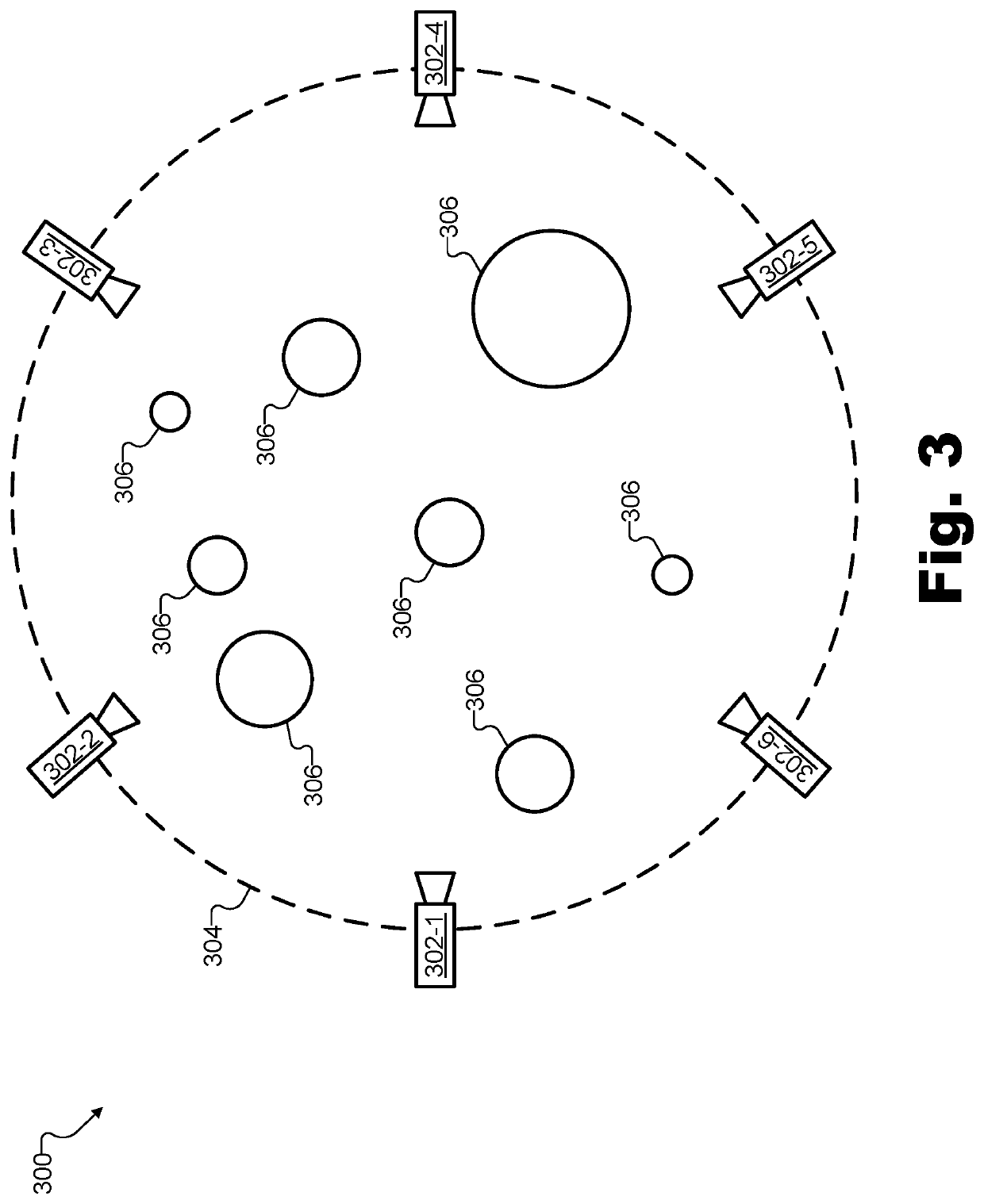

Systems and methods for processing volumetric data using a modular network architecture

An exemplary volumetric processing system includes a set of point cloud generators, a point cloud organizer, a voxelizer, and a set of patch renderers. The point cloud generators correspond to image capture systems disposed at different vantage points to capture color and depth data for an object located within a capture area. The point cloud generators generate respective point clouds for the different vantage points based on the captured surface data. The point cloud organizer consolidates point cloud data that corresponds to a surface of the object. The voxelizer generates a voxel grid representative of the object based on the consolidated point cloud data. The set of patch renderers generates, based on the voxel grid, a set of rendered patches each depicting at least a part of the surface of the object. Corresponding systems and methods are also disclosed.

Owner:VERIZON PATENT & LICENSING INC
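The consolidate-then-voxelize stages of this pipeline can be sketched as follows. This is a simplified illustration, not the described system: points are plain `(x, y, z)` tuples with color omitted, consolidation is a simple merge, the voxel grid is a set of occupied integer cells, and the names `consolidate` and `voxelize` are hypothetical.

```python
import math


def consolidate(point_clouds):
    """Merge the per-vantage-point clouds into a single list of points."""
    merged = []
    for cloud in point_clouds:
        merged.extend(cloud)
    return merged


def voxelize(points, voxel_size):
    """Return the set of integer voxel indices that contain at least one point."""
    occupied = set()
    for x, y, z in points:
        index = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        occupied.add(index)
    return occupied


# Two made-up clouds, as if captured from two different vantage points.
cloud_a = [(0.05, 0.05, 0.05), (0.12, 0.01, 0.0)]
cloud_b = [(0.06, 0.04, 0.05), (-0.01, 0.0, 0.0)]
grid = voxelize(consolidate([cloud_a, cloud_b]), voxel_size=0.1)
```

Points from different vantage points that fall in the same cell collapse into one voxel, which is how overlapping captures of the same surface are reconciled in this sketch.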

Work vehicle composite panoramic vision systems

Pending | CN112752068A | Television system details | Pedestrian/occupant safety arrangement | Computer graphics (images) | In vehicle

A composite panoramic vision system utilized onboard a work vehicle includes a display device, a vehicle-mounted camera array, and a controller. The vehicle-mounted camera array includes, in turn, first and second vehicle cameras having partially overlapping fields of view and positioned to capture first and second camera feeds, respectively, of the work vehicle's exterior environment from different vantage points. During operation of the composite panoramic vision system, the controller receives the first and second camera feeds from the first and second cameras, respectively; generates a composite panoramic image of the work vehicle's exterior environment from at least the first and second camera feeds; and then presents the composite panoramic image on the display device for viewing by an operator of the work vehicle.

Owner:DEERE & CO

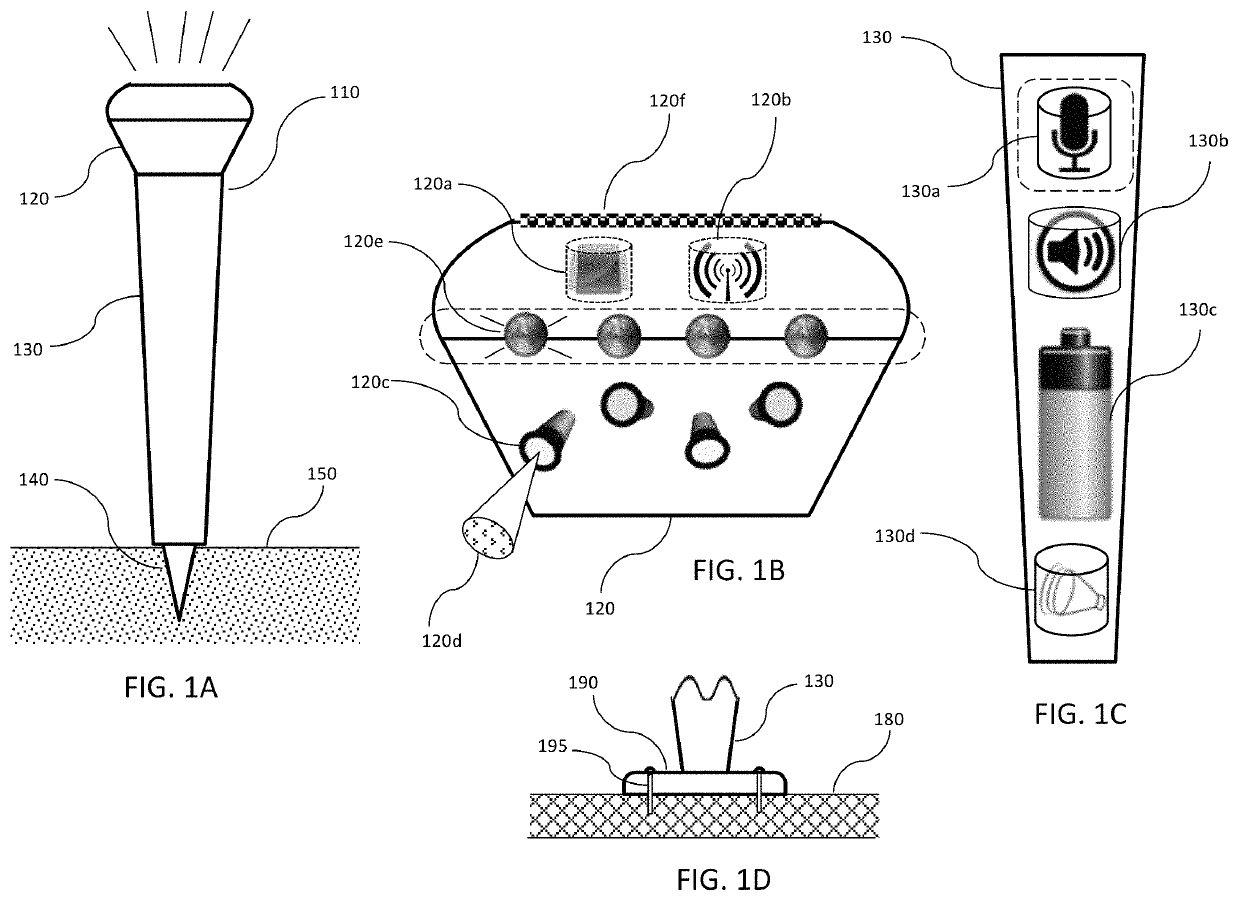

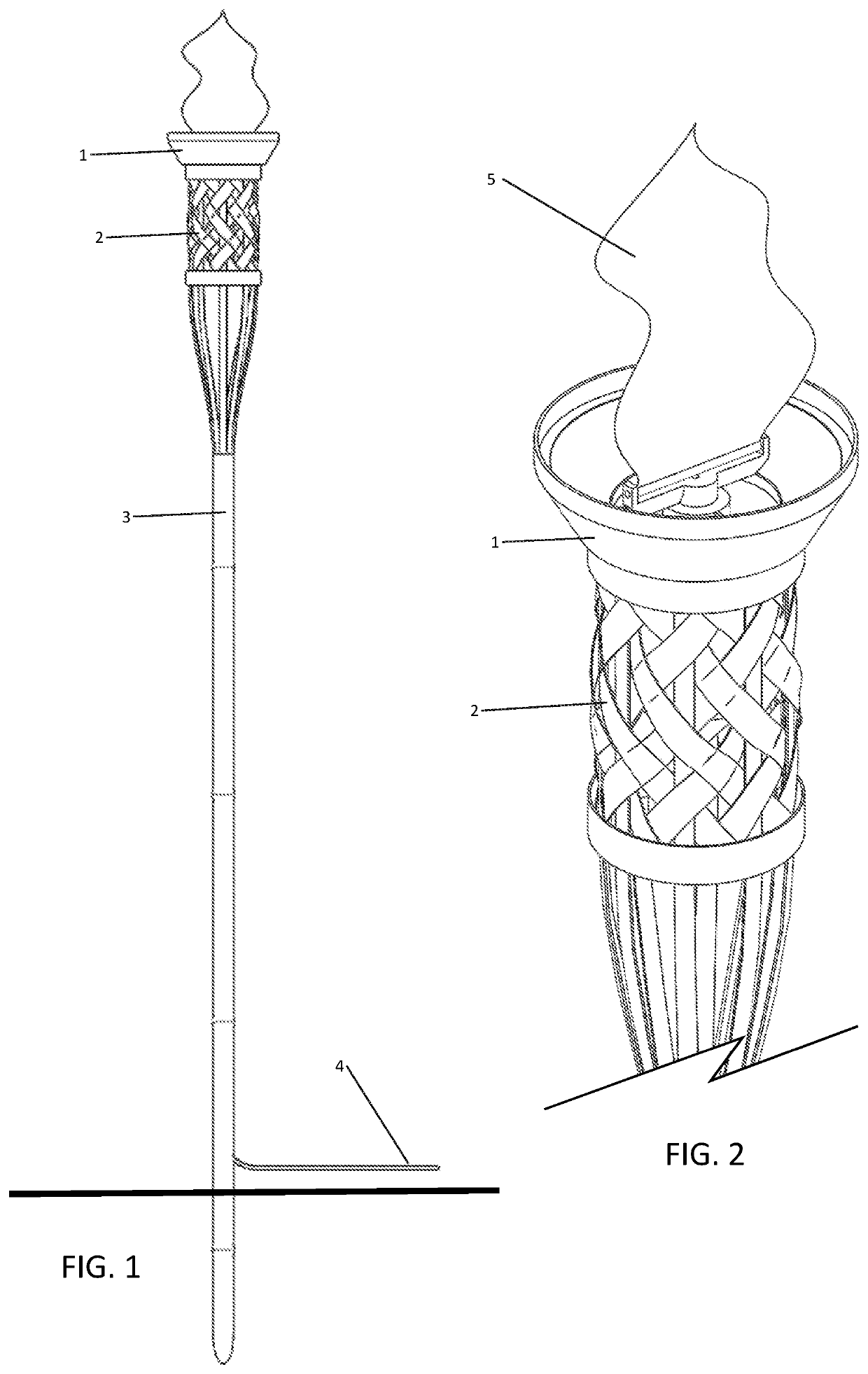

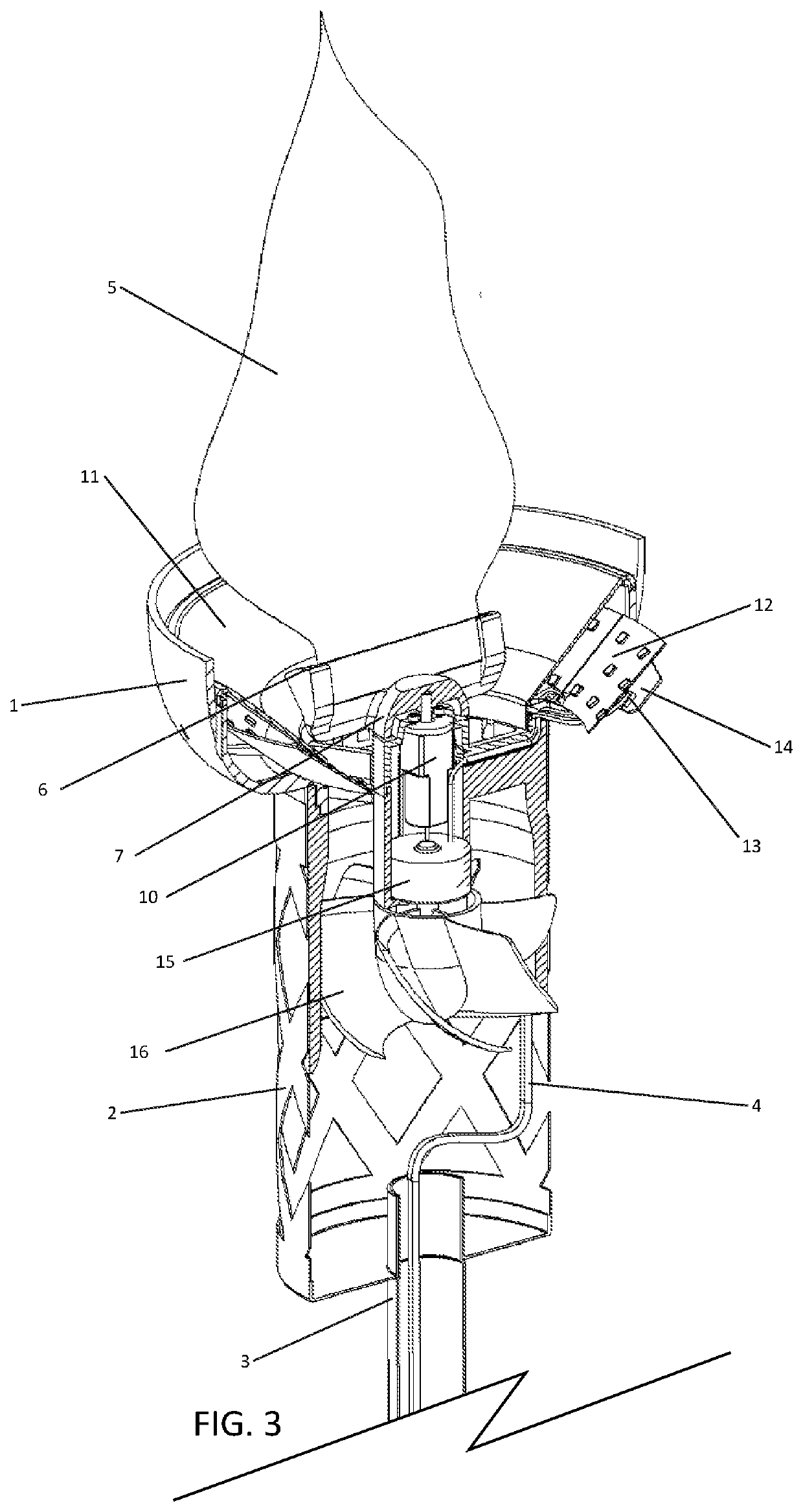

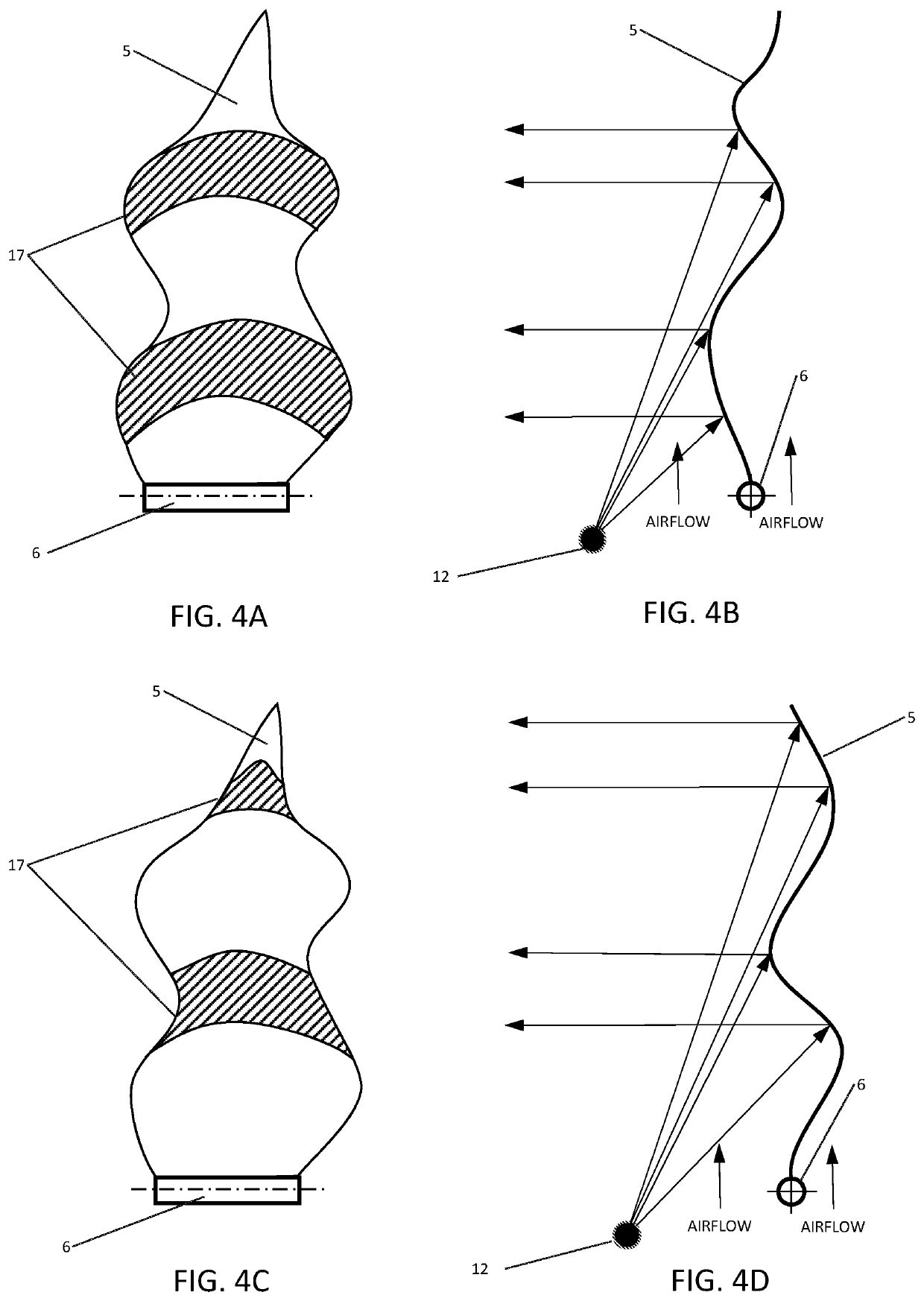

Simulated Torch Novelty Device

Active | US20220178508A1 | Safe can be operated outdoors | Reduce carbon footprint | Electric circuit arrangements | Semiconductor devices for light sources | Microcontroller | Flashlight

An indoor-outdoor novelty device which simulates a moving open flame from the upper end of a standing torch. The device provides a realistic illusion of a bright flame changing its shape and brightness pseudorandomly through a unique combination of lighting, motion, and airflow under microcontroller control. The device can be viewed from a vantage point in a 360-degree perimeter around the device without compromise to the effect.

Owner:BIASOTTI MARK ANDREW

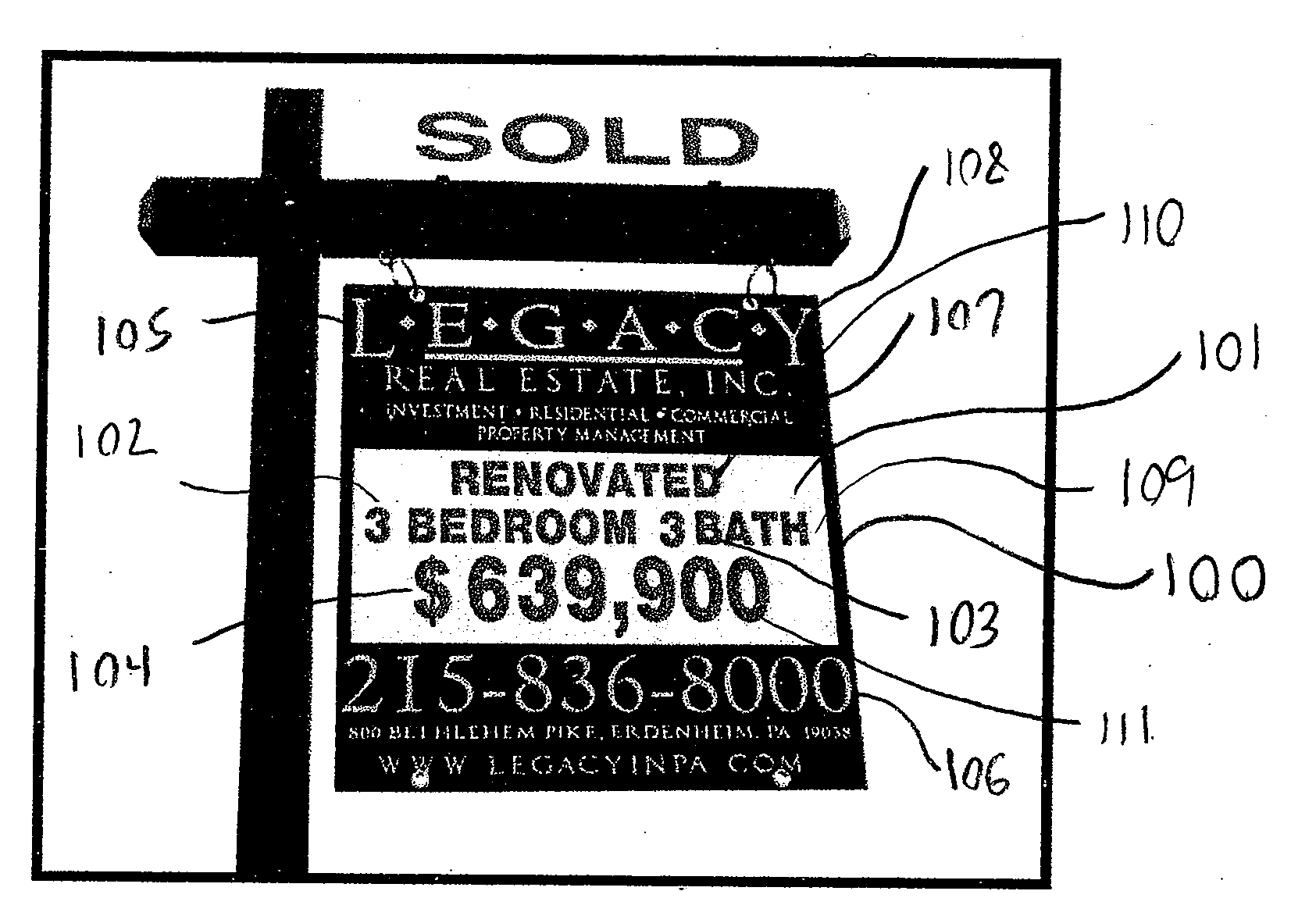

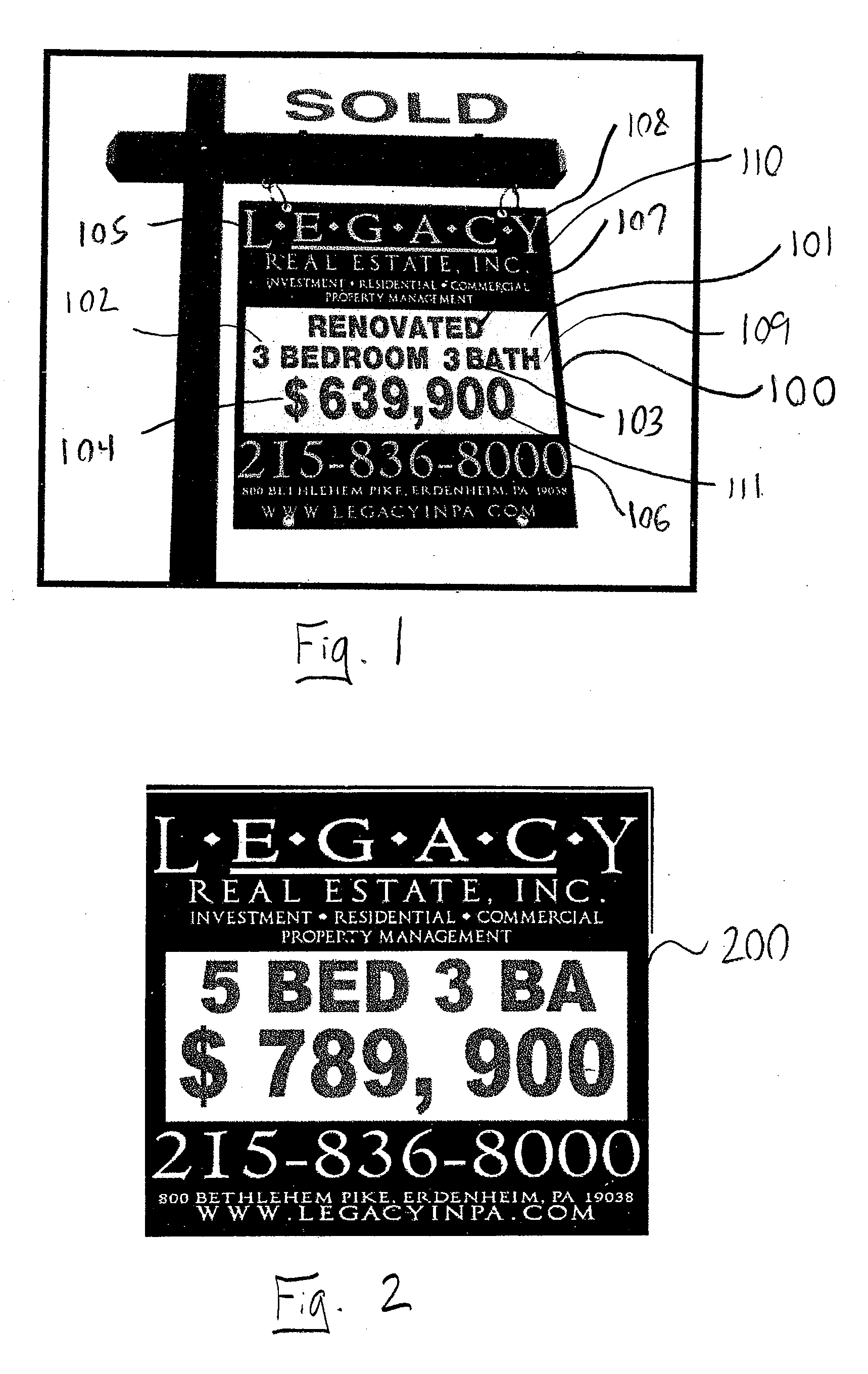

Method of advertising real estate and signage for same

A method of advertising a particular property of real estate comprising preparing a sign particular to the particular property, the sign containing critical information which is unique to the particular property, not readily ascertainable by a person observing the property from a public vantage point, and critical to a potential buyer's decision to purchase the particular property and posting the sign proximate to the particular property.

Owner:JAIN SANJIV K

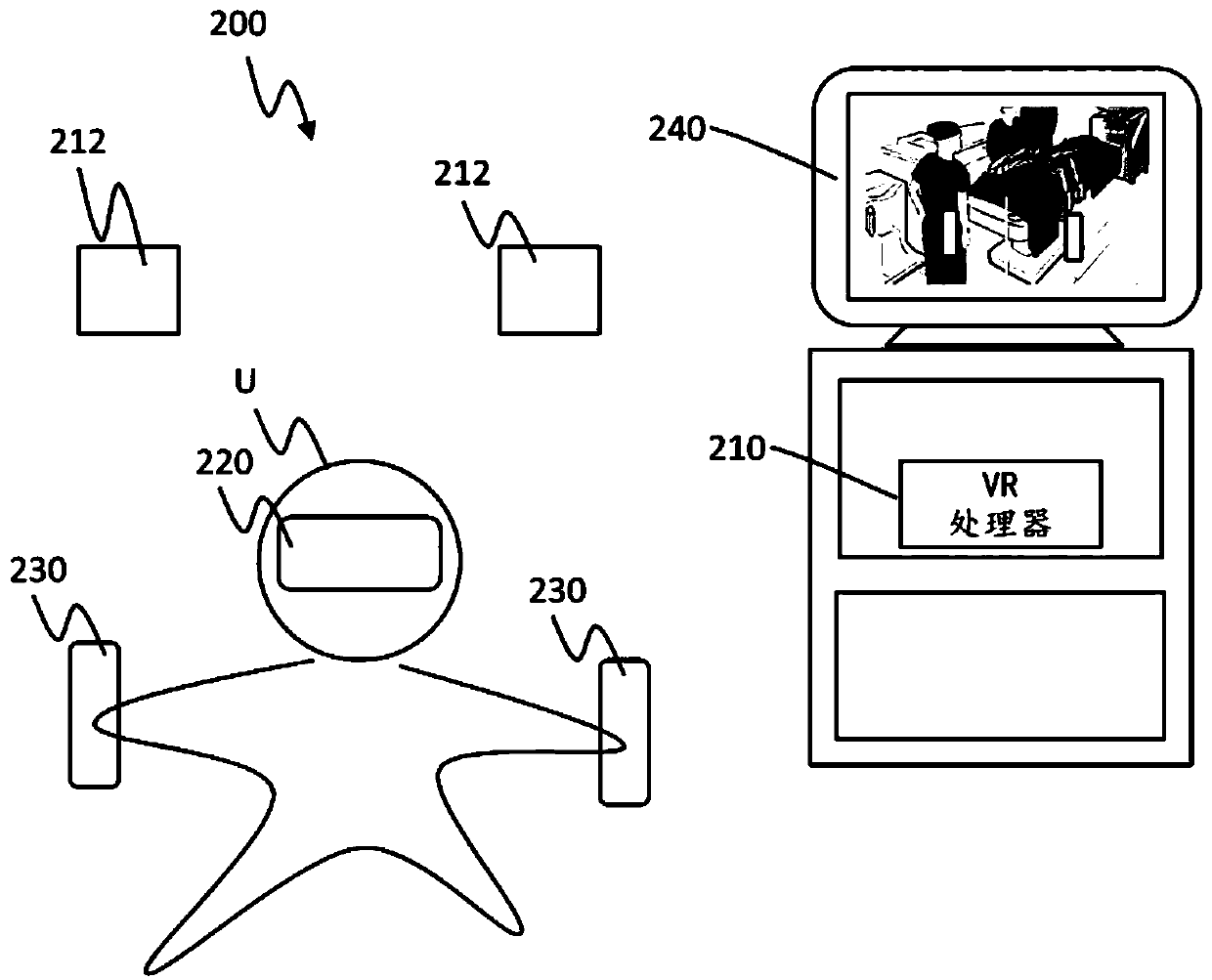

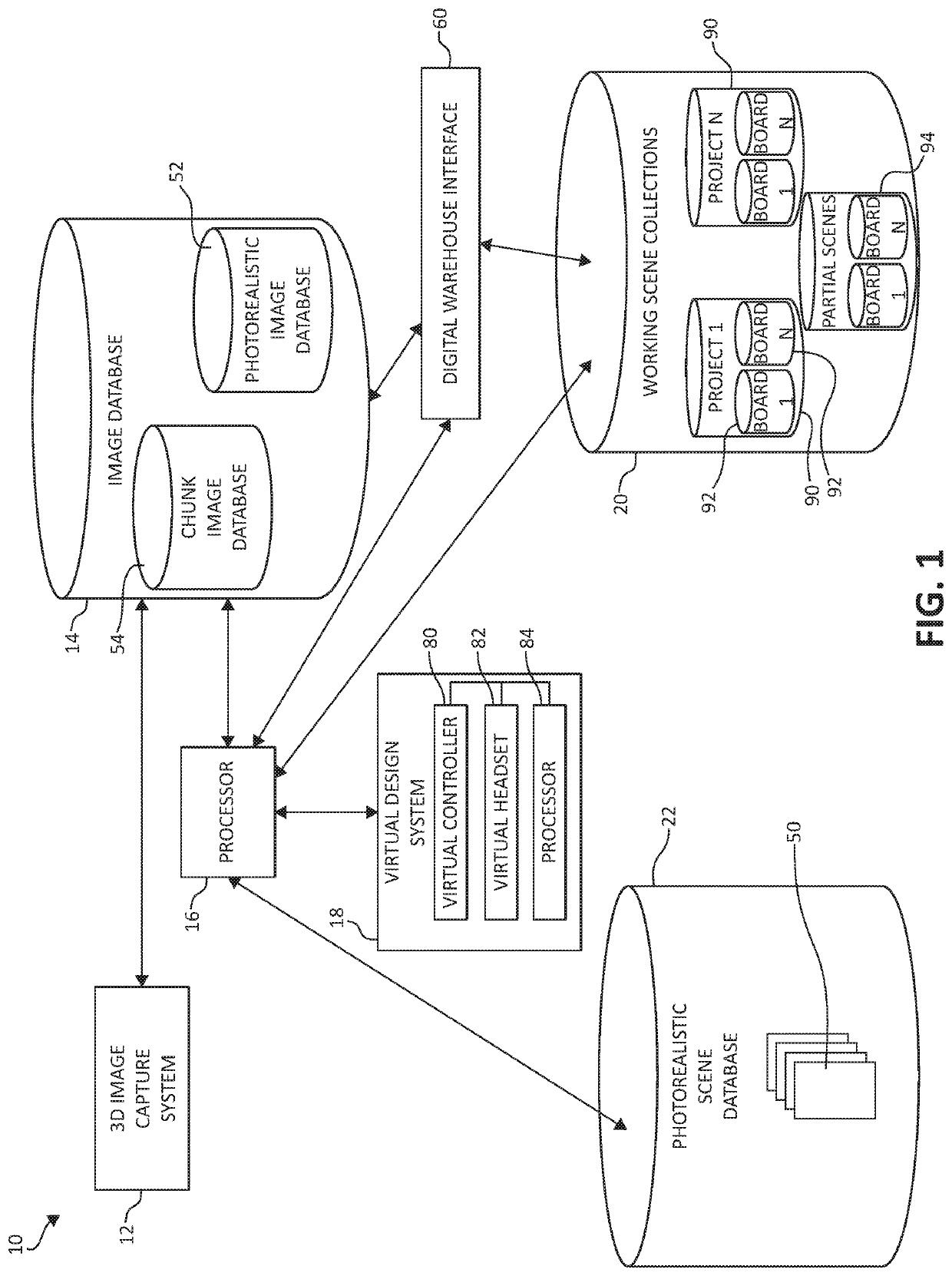

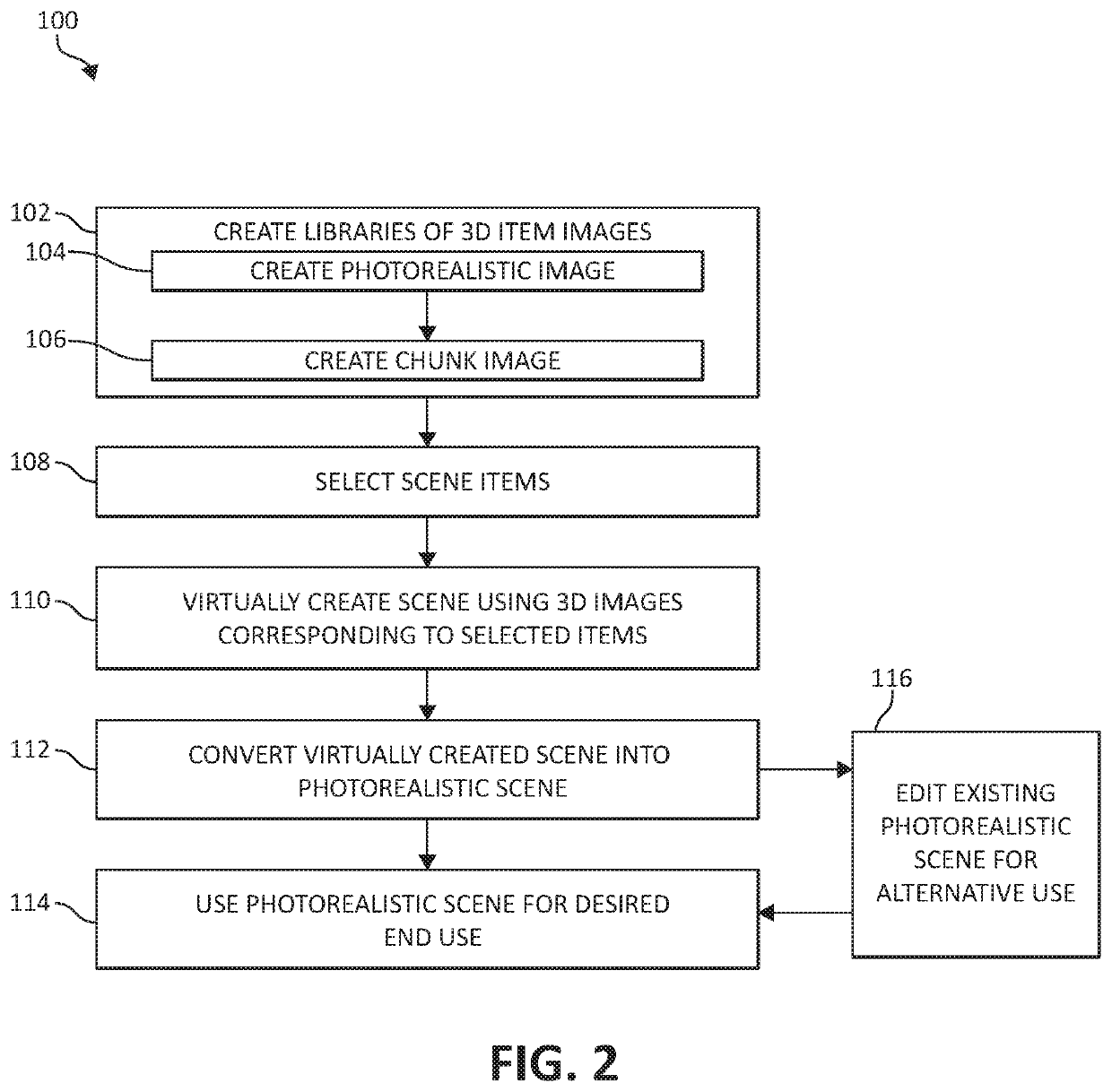

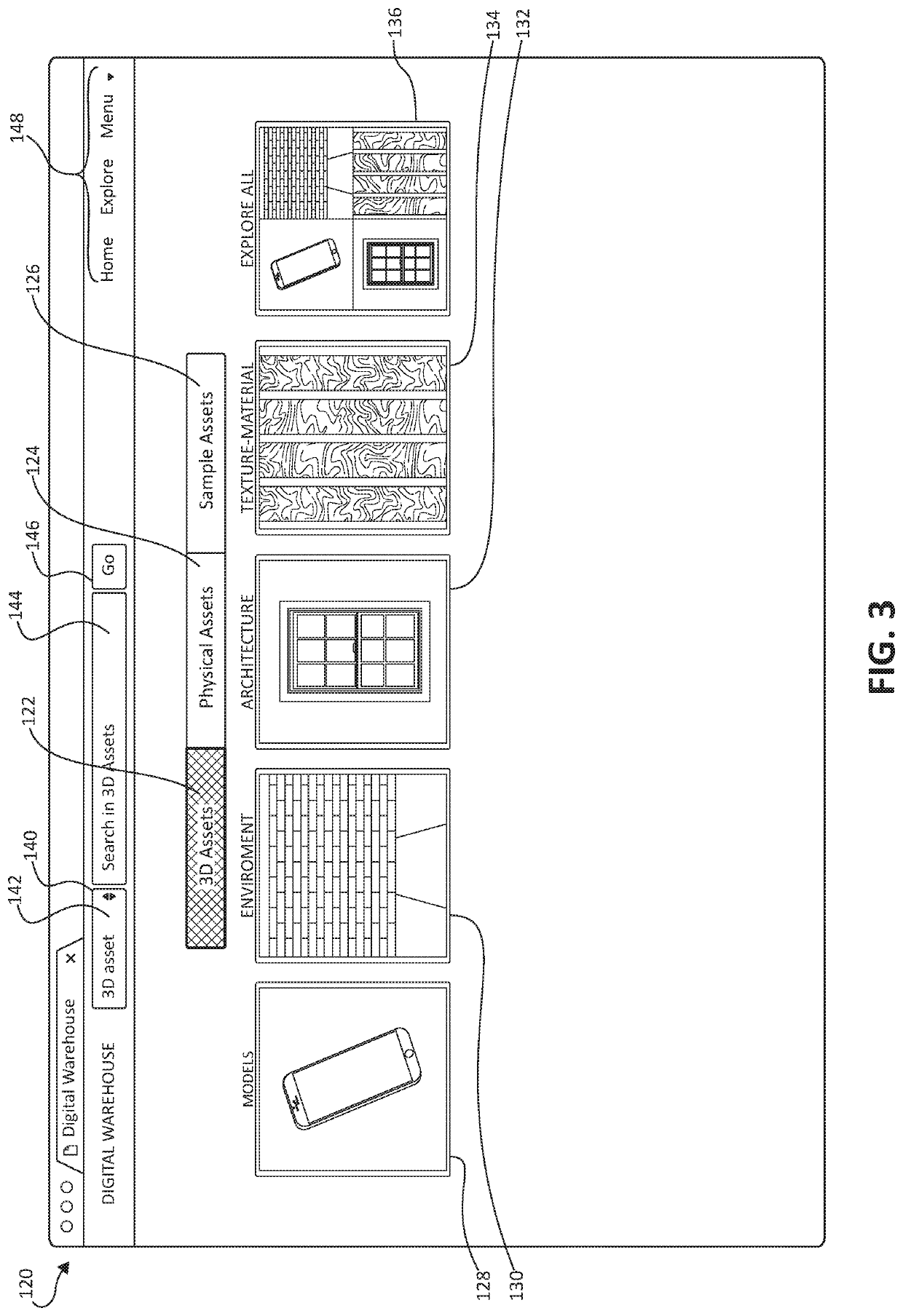

Photorealistic scene generation system and method

Active | US10643399B2 | Input/output for user-computer interaction | Graph reading | Computer graphics (images) | Engineering

A photorealistic scene generation system includes a virtual reality (VR) headset, a VR controller, and a VR processor. The VR headset visually presents a user with a 3D virtual environment. The VR controller receives input from the user. The VR processor communicates with the VR headset and the VR controller and instructs the VR headset to display the 3D virtual environment having an interactive vantage point based on movement of the user based on positional data received from the VR headset and instructs the VR headset to display a source zone superimposed over the 3D virtual environment. The source zone provides visual representations of physical items previously selected for use in styling the 3D virtual environment. The VR processor is programmed to direct movement of one of the visual representations from the source zone and to move the selected one of the visual representations into the 3D virtual environment.

Owner:TARGET BRANDS

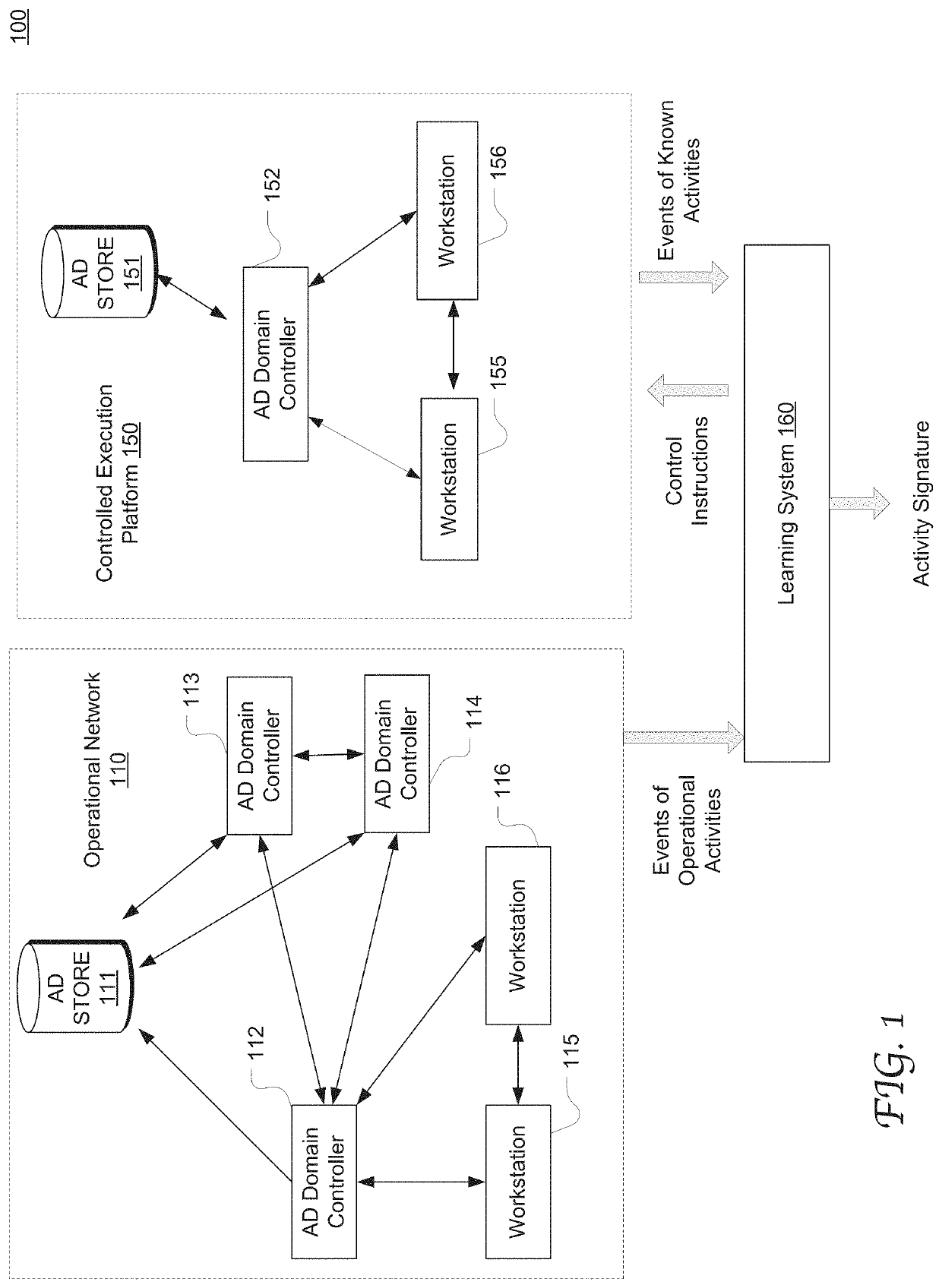

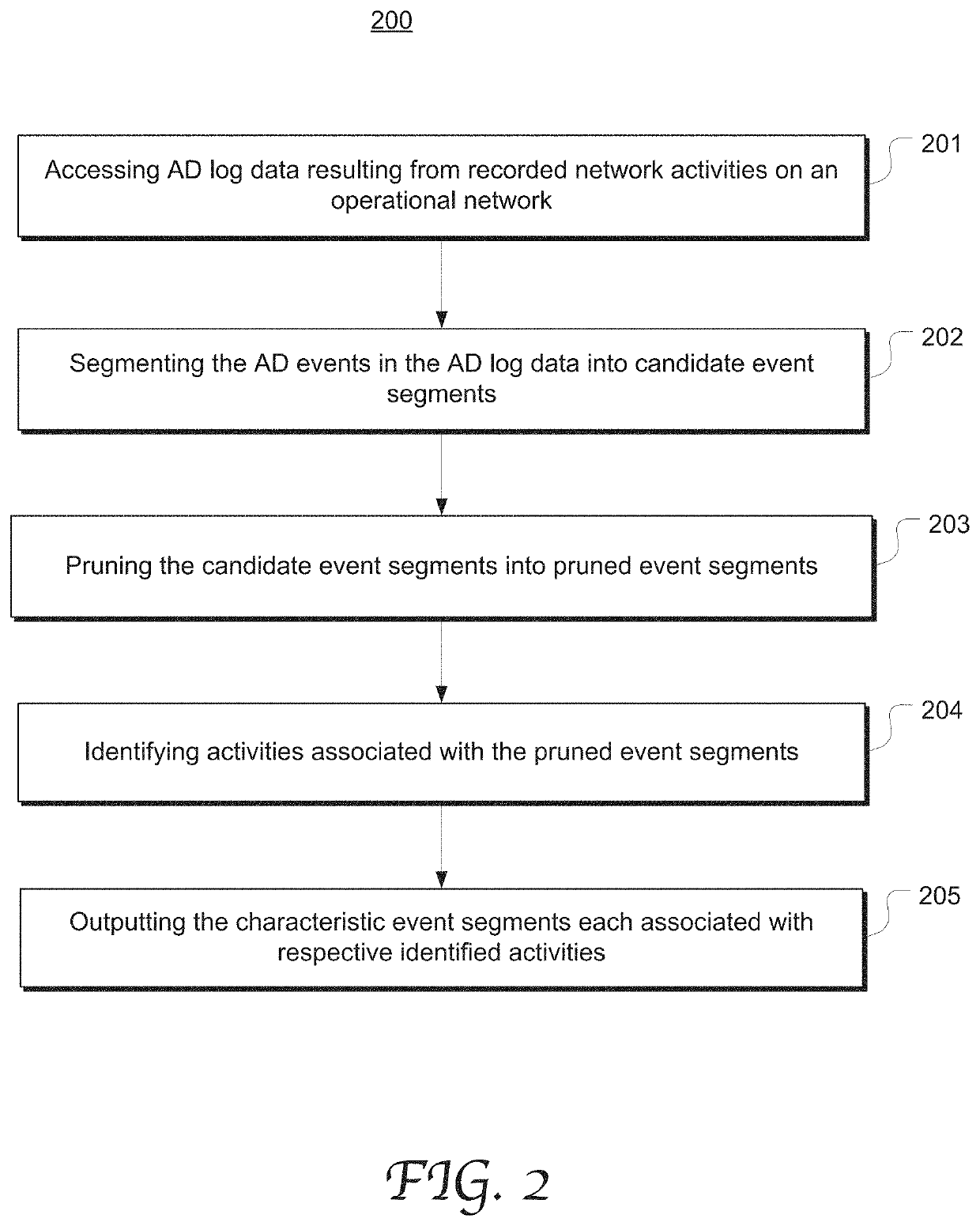

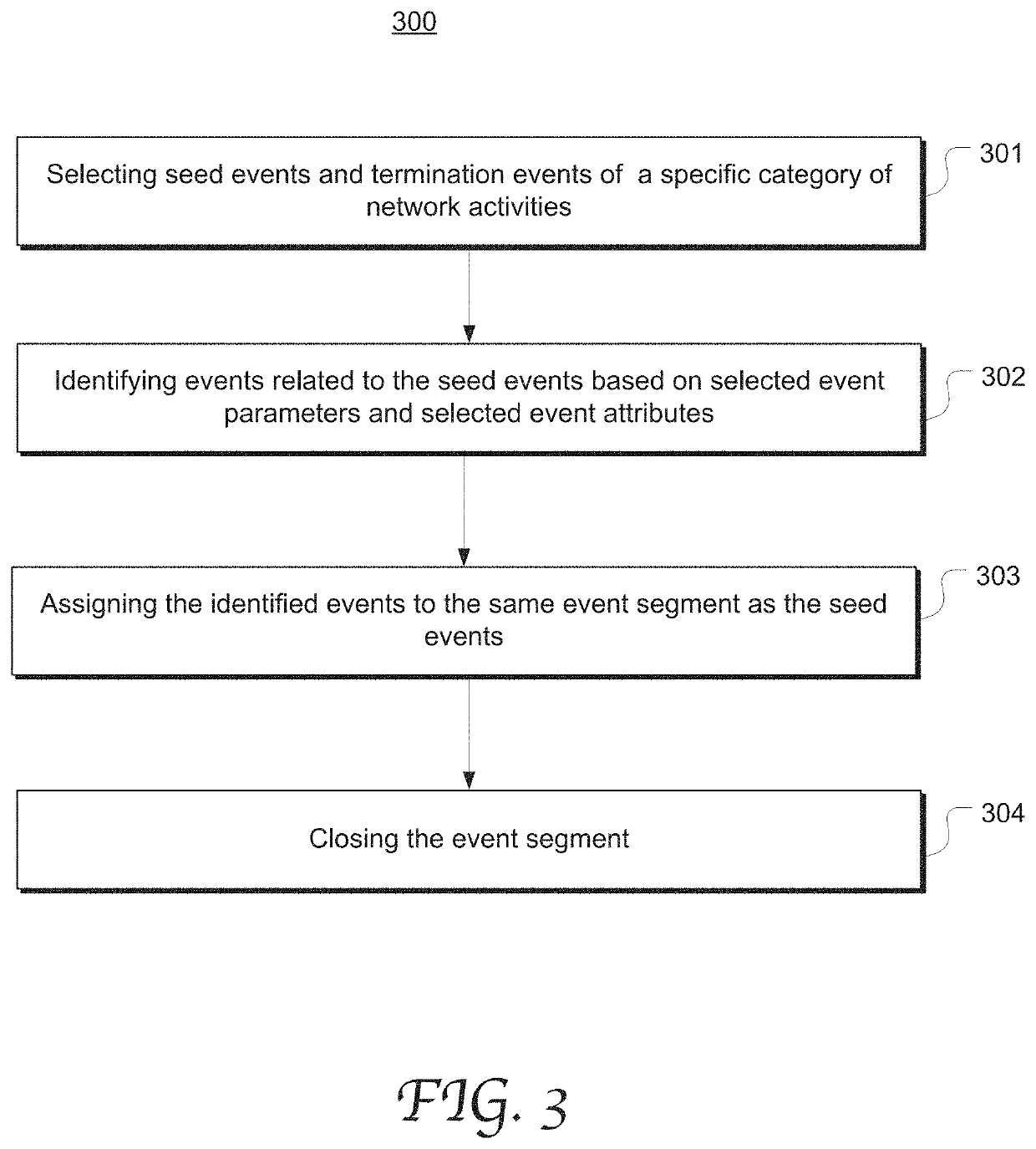

Network activity identification and characterization based on characteristic active directory (AD) event segments

Active | US11010342B2 | Visibility and other reporting limitation | Efficient analysis | Machine learning | Knowledge representation | Network activity | Engineering

A system and method of obtaining and utilizing an activity signature that is representative of a specific category of network activities based on directory service (DS) log data. The activity signature may be determined by a learning process, including segmenting and pruning a training dataset into a plurality of event segments and matching them with activities based on DS log data of known activities. Once obtained, the activity signature can advantageously be utilized to analyze any DS log data and activities in actual deployment. Using activity signatures to analyze DS event logs can reveal roles of event-collection machines, aggregate information dispersed across their component events to reveal actors involved in particular AD activities, augment visibility of DS by enabling various vantage points to better infer activities at other domain machines, and reveal macro activities so that logged information becomes easily interpretable to human analysts.

Owner:SPLUNK INC

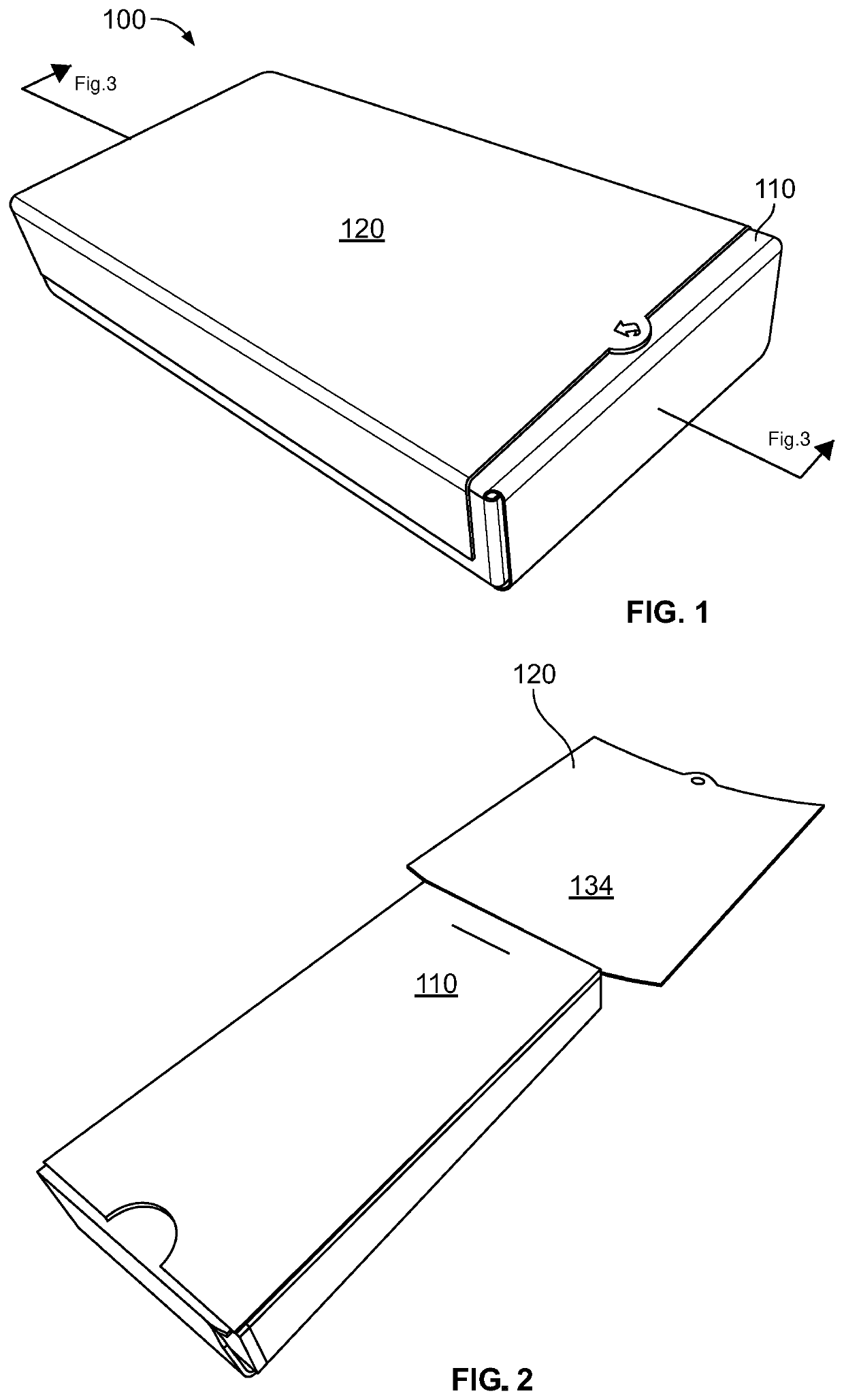

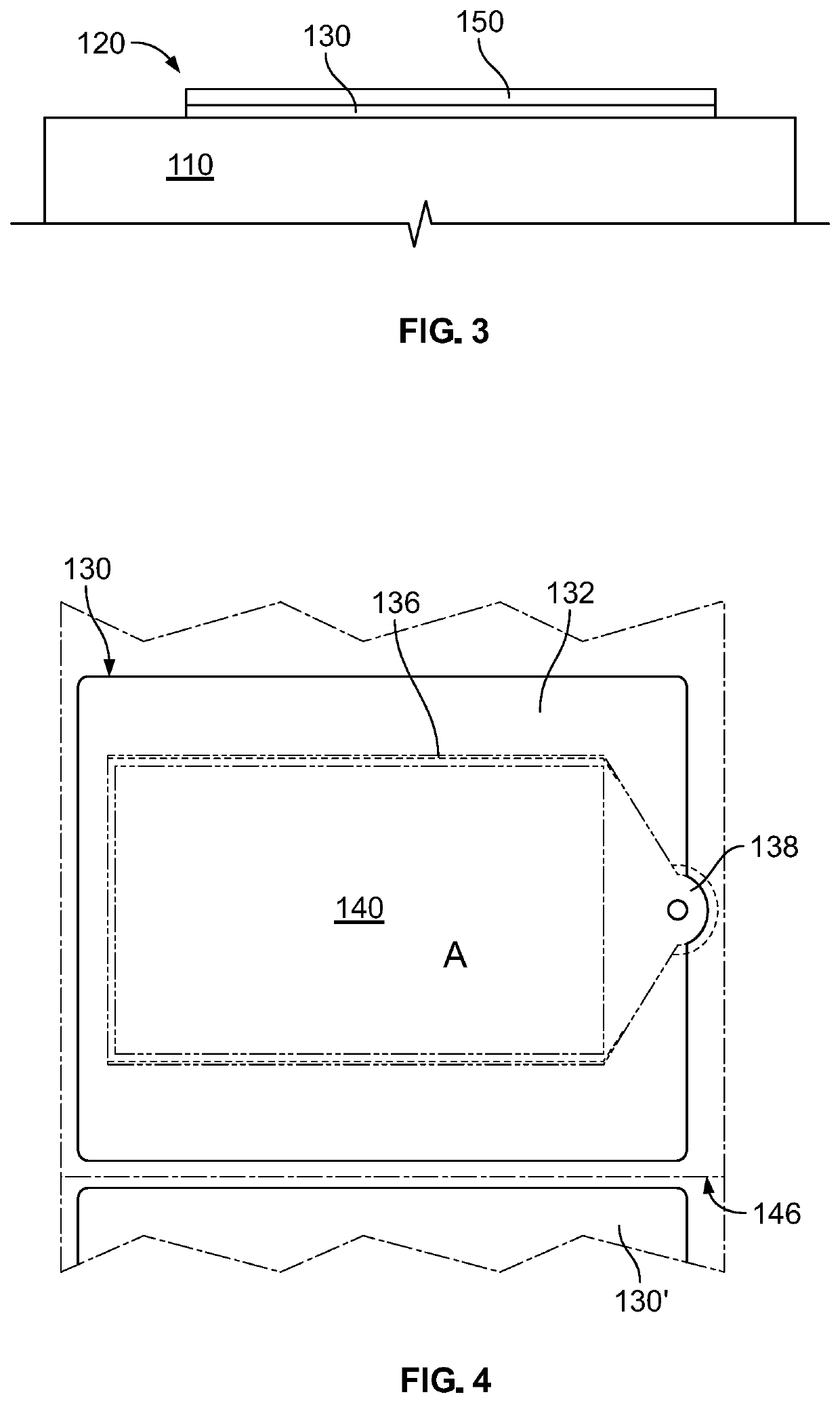

Multilayer Label

A label for a package that includes a base layer and a top layer attached to the base layer is disclosed. The base layer includes a central surface adapted to receive written markings. The top layer covers at least part of the base layer and includes perforations such that a detachable part of the top layer has a perimeter at least partially defined by the perforations. The detachable part includes a front surface and a back surface that faces the base layer while the perforations remain intact. The front and back surfaces of the top layer are adapted to receive written markings. And, the top layer is opaque such that while the perforations remain intact, any written markings on the back surface of the detachable part or on the central surface of the base layer are not visible from a vantage point outside of the label.

Owner:STRYKER EUROPEAN OPERATIONS LIMITED
