General caching method

A caching technology based on caches and cache segments, applied in the field of data processing. It addresses problems such as high system resource consumption, divergence between cached data and the real (source) data, and high cache-synchronization cost. It achieves high read performance, clear management, and high flexibility.

Examples

Example 1

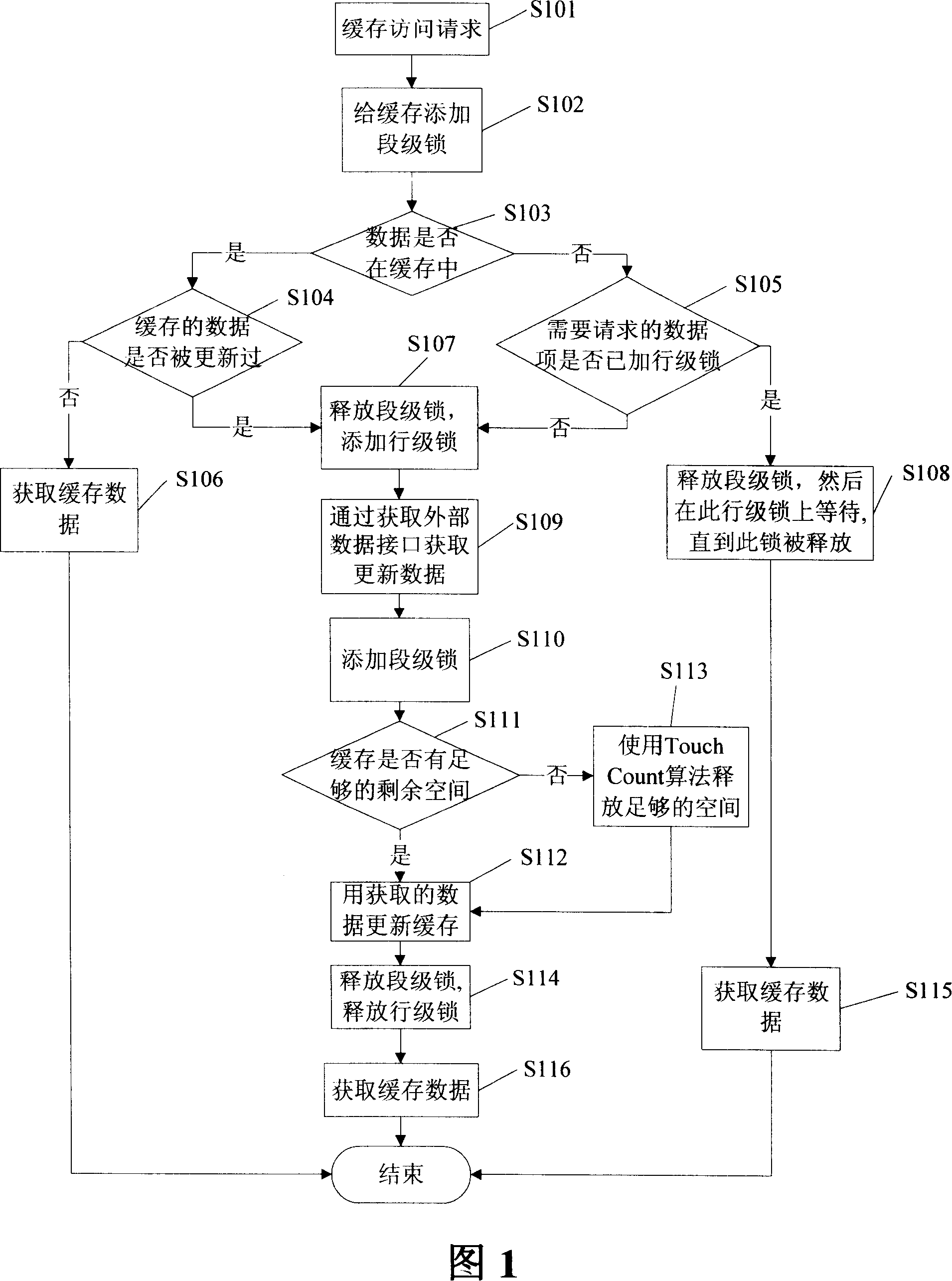

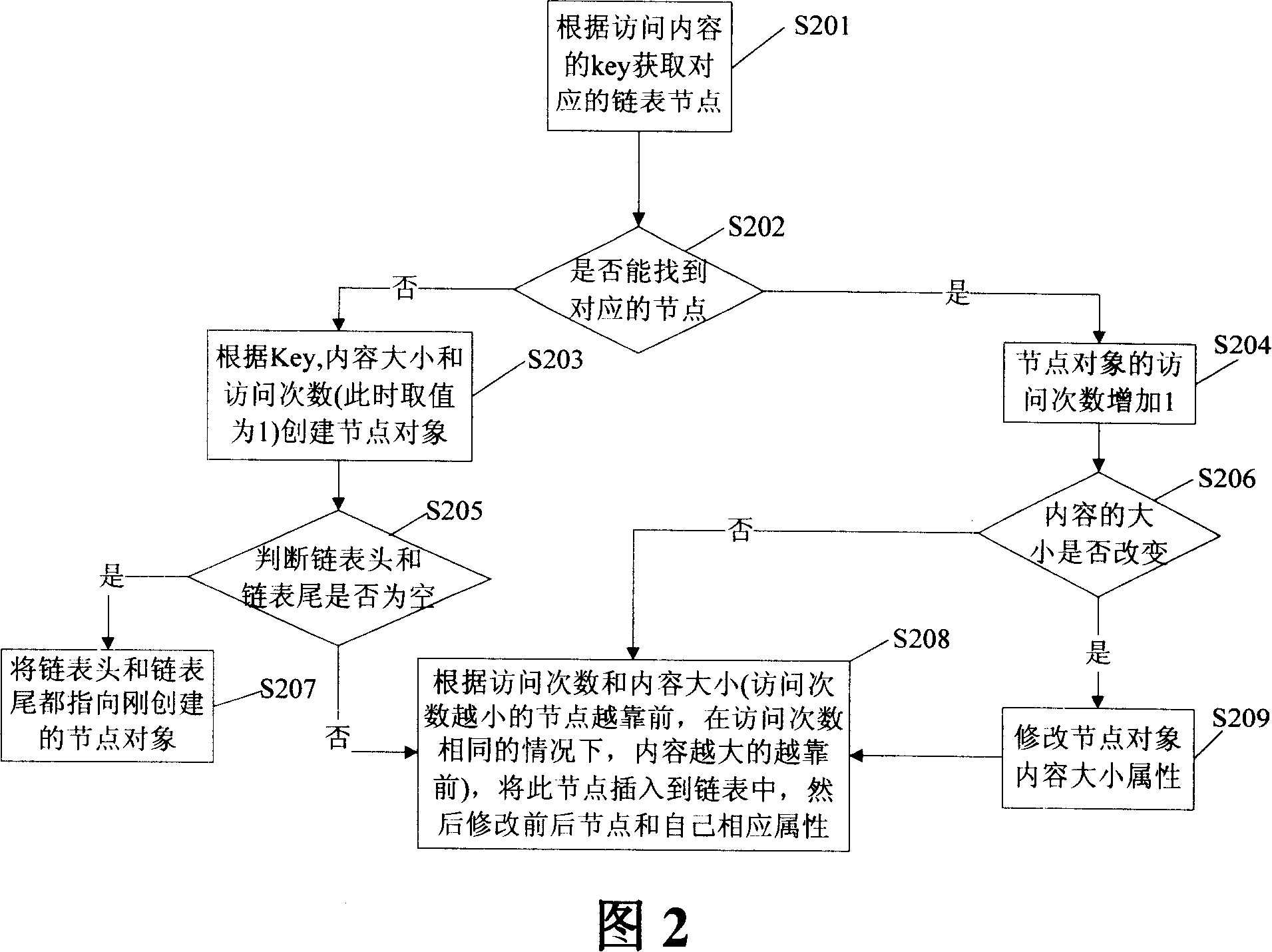

[0120] In this example, the requested data is not in the specified cache segment, and that cache segment has enough free space to store it.

[0121] When the system receives a new user request, it determines that the requested data is not in the specified cache segment. It then allocates a row-level lock for the requested data's key and obtains the data from an external data source. Next, it acquires the segment-level lock, adds the data to the corresponding cache segment, and releases the locks it acquired. Finally, it returns the data to the user.
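The miss path of Example 1 can be sketched as follows. This is a minimal illustration, not the patented implementation. The class `CacheSegment`, the byte-based `capacity`, the per-key lock table, and the `load` callback (standing in for the external data source) are all hypothetical. The sketch re-checks the segment after taking the row-level lock, so that concurrent requests for the same key trigger only one external fetch.

```python
import threading
from typing import Callable, Dict


class CacheSegment:
    """Hypothetical cache segment with a fixed byte capacity (illustrative only)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.used = 0
        self.data: Dict[str, bytes] = {}
        self.segment_lock = threading.Lock()      # segment-level lock
        self._row_locks: Dict[str, threading.Lock] = {}
        self._row_locks_guard = threading.Lock()  # protects the row-lock table

    def row_lock(self, key: str) -> threading.Lock:
        # One lock per requested key (the "row-level lock").
        with self._row_locks_guard:
            return self._row_locks.setdefault(key, threading.Lock())

    def get_or_load(self, key: str, load: Callable[[str], bytes]) -> bytes:
        with self.row_lock(key):
            # Re-check: another request may have loaded this key meanwhile.
            with self.segment_lock:
                if key in self.data:
                    return self.data[key]
            value = load(key)  # fetch from the (hypothetical) external source
            with self.segment_lock:
                # Example 1 assumes there is room; if not, return uncached
                # (eviction, as in Example 3, is not modelled here).
                if self.used + len(value) <= self.capacity:
                    self.data[key] = value
                    self.used += len(value)
            return value  # both locks are released on leaving the with-blocks
```

The row-level lock serializes only requests for the same key. The segment-level lock is held just long enough to inspect or modify the segment, so slow external fetches for different keys can proceed in parallel.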

Example 2

[0123] When the requested data is already in the cache segment, the cache system retrieves it and returns it to the user.
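The hit path of Example 2 reduces to a lookup. This is a minimal sketch with a hypothetical `CacheSegment` class. It assumes that a hit only needs a brief read under the segment-level lock and does not contact the external data source. The source text does not say which locks, if any, a hit takes.

```python
import threading
from typing import Dict, Optional


class CacheSegment:
    """Hypothetical cache segment; only the read (hit) path is shown."""

    def __init__(self) -> None:
        self.data: Dict[str, bytes] = {}
        self.segment_lock = threading.Lock()  # segment-level lock

    def lookup(self, key: str) -> Optional[bytes]:
        # Hit: return the cached value directly.
        # Miss: return None so the caller can fall back to the load path.
        with self.segment_lock:
            return self.data.get(key)
```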

Example 3

[0125] When the requested data is not in the cache segment and the segment does not have enough free space, the cache system allocates a row-level lock for the requested data's key. It then obtains the data from the external data source through the external-data acquisition interface. Next, it frees enough space using the touch count algorithm and adds the data to the corresponding cache segment. Finally, it releases the locks it acquired and returns the data to the user.
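Example 3 adds eviction to the miss path. The source does not specify the touch count algorithm in detail, so the sketch below uses a simplified version as an assumption. Each entry counts its accesses, and when space is needed the entries with the lowest counts are evicted first, with ties going to the earliest-inserted entry. The class `TouchCountSegment`, its byte-based `capacity`, and the `load` callback are hypothetical names.

```python
import threading
from typing import Callable, Dict


class TouchCountSegment:
    """Hypothetical segment evicting by a simplified touch-count policy."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.used = 0
        self.data: Dict[str, bytes] = {}
        self.touches: Dict[str, int] = {}         # access count per key
        self.segment_lock = threading.Lock()      # segment-level lock
        self._row_locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()            # protects the row-lock table

    def _row_lock(self, key: str) -> threading.Lock:
        with self._guard:
            return self._row_locks.setdefault(key, threading.Lock())

    def _free(self, needed: int) -> None:
        # Caller holds segment_lock. Evict the least-touched entries
        # until `needed` more bytes fit in the segment.
        while self.data and self.used + needed > self.capacity:
            victim = min(self.touches, key=self.touches.__getitem__)
            self.used -= len(self.data.pop(victim))
            del self.touches[victim]

    def get_or_load(self, key: str, load: Callable[[str], bytes]) -> bytes:
        with self._row_lock(key):                 # row-level lock for the key
            with self.segment_lock:
                if key in self.data:              # hit: record the touch
                    self.touches[key] += 1
                    return self.data[key]
            value = load(key)                     # external data source
            if len(value) > self.capacity:
                return value                      # can never fit; do not cache
            with self.segment_lock:
                self._free(len(value))            # make room by touch count
                self.data[key] = value
                self.touches[key] = 1
                self.used += len(value)
            return value
```

For example, with a 10-byte segment holding two 4-byte entries `a` (touched twice) and `b` (touched once), loading a third 4-byte entry `c` evicts `b` and keeps `a`. Production touch-count schemes often add refinements such as time-gated increments or periodic count decay. None of those refinements are modelled here.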
