Visualized pronunciation teaching method and apparatus

A visualized pronunciation teaching method and apparatus that address the inability of existing approaches to dynamically display how the positions of the pronunciation organs change over the whole pronunciation process, thereby making pronunciation teaching more scientific.

Embodiment Construction

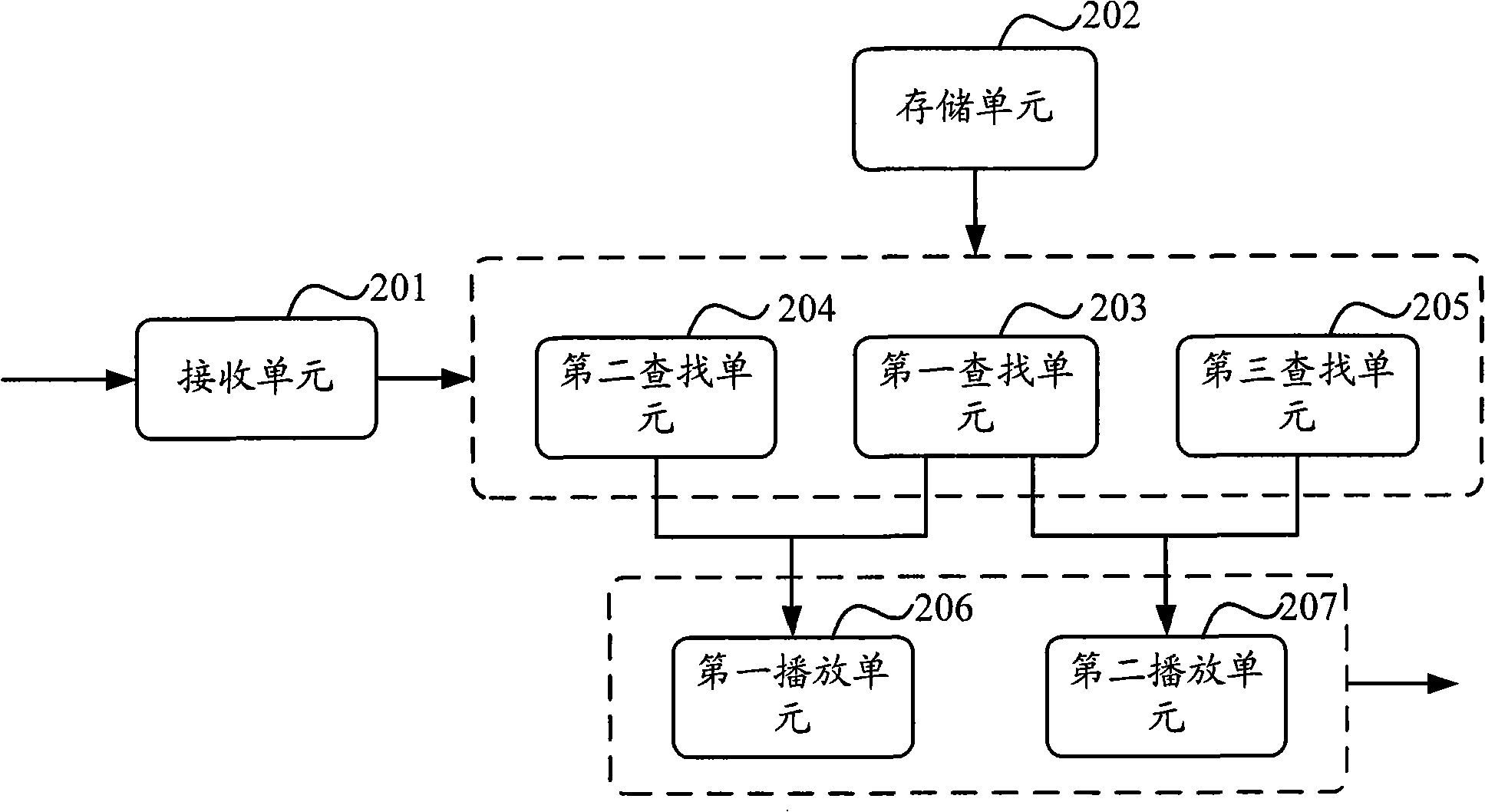

[0013] In the embodiment of the present invention, the standard pronunciation audio file and the correct pronunciation animation material file corresponding to each piece of pronunciation basic unit information are played synchronously. Pronunciation learners can thus not only hear the correct pronunciation of each pronunciation basic unit, but also see directly how each pronunciation organ moves and how the strength of the airflow changes while that unit is pronounced correctly. This improves the scientific rigor, intuitiveness, and appeal of pronunciation teaching.
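The synchronous playback described in [0013] can be illustrated with a minimal Python sketch. It is not the patented implementation. The record fields, file paths, and the approach of deriving the animation frame from the audio playback position are all assumptions made for illustration. The sketch only shows one plausible way to keep an animation in step with its audio by using the audio clock as the single time source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitMaterial:
    """Hypothetical record pairing one pronunciation basic unit with its files."""
    audio_path: str       # standard pronunciation audio file (assumed path)
    animation_path: str   # correct pronunciation animation material file (assumed path)
    duration_s: float     # audio length in seconds (assumed to be known)
    frame_count: int      # number of animation frames (assumed to be known)


def frame_at(material: UnitMaterial, elapsed_s: float) -> int:
    """Return the animation frame index to show at a given audio position."""
    if material.duration_s <= 0 or material.frame_count <= 0:
        raise ValueError("duration_s and frame_count must be positive")
    # Clamp so positions before the start or after the end stay in range.
    progress = min(max(elapsed_s / material.duration_s, 0.0), 1.0)
    return min(int(progress * material.frame_count), material.frame_count - 1)


# Hypothetical library keyed by pronunciation basic unit.
LIBRARY = {
    "a": UnitMaterial("audio/a.wav", "animation/a.anim", duration_s=1.0, frame_count=25),
}
```

A player built on this idea would query the audio position on each screen refresh and draw `frame_at(LIBRARY[unit], position)`, so the organ and airflow animation cannot drift away from the sound.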

[0014] Embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings.

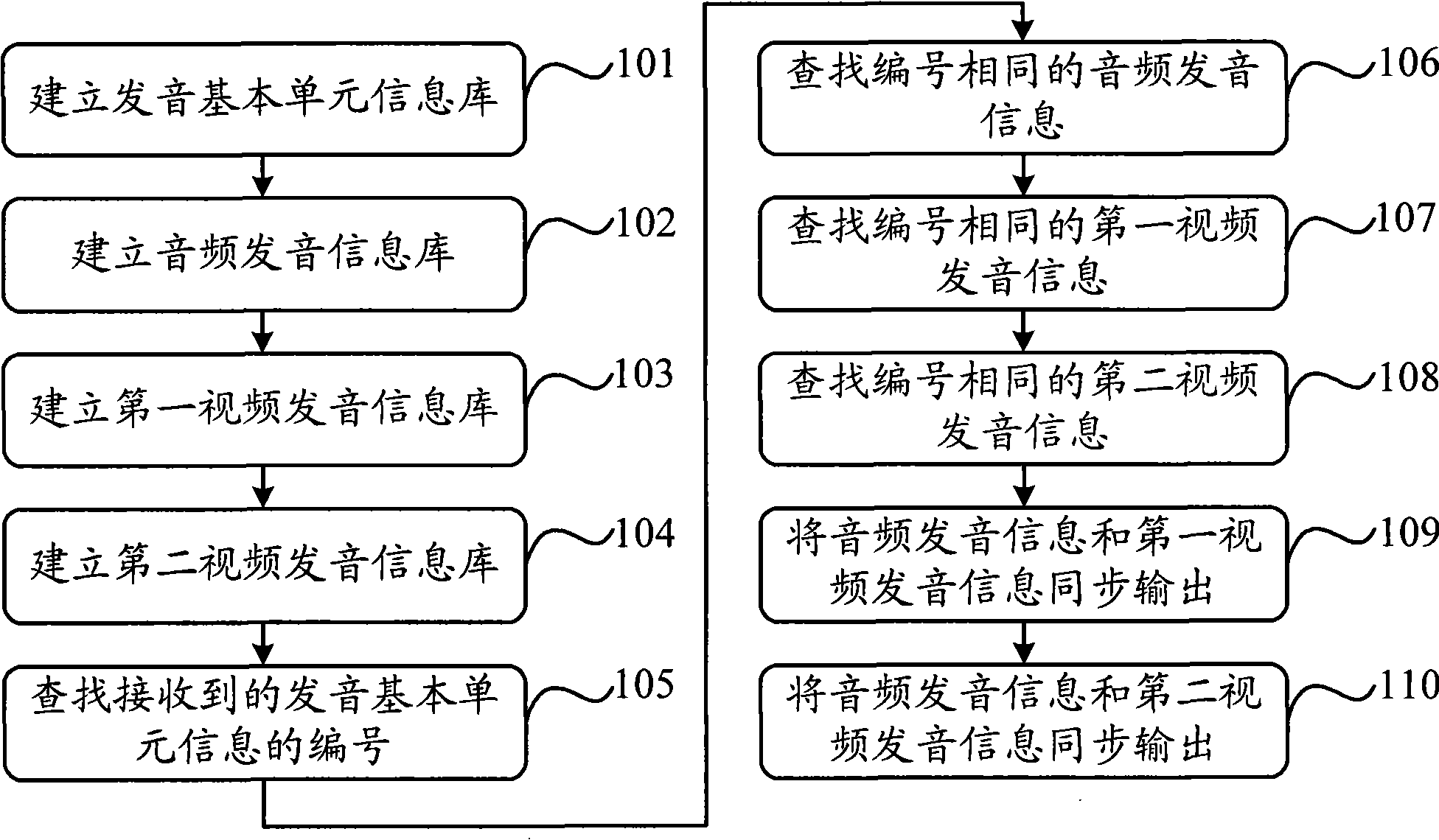

[0015] Figure 1 is a flow chart of the visualized pronunciation teaching method in the embodiment of the present invention. The method specifically includes the following steps:

[0016] Step 101, establishing an information ...
