Hand-held device and electronic device capable of switching user interface

A technology concerning handheld devices and electronic devices, applied in the fields of electrical digital data processing, data input/output processing, and instruments. It addresses problems such as touch operation being hindered or restricted and touch operation easily scratching the user's fingers, and it achieves the effects of increased convenience and improved efficiency.

Embodiment Construction

[0047] On current handheld devices, users can quickly open only certain specific functions by pressing hotkeys, and the number of hotkeys on a handheld device is limited. A user interface that gathers multiple commonly used functions would therefore increase the convenience of operating the handheld device. Based on this observation, the present invention provides a method for operating a user interface and a handheld device that uses the method. To make the content of the present invention clearer, the following embodiments are given as examples by which the present invention can actually be implemented.

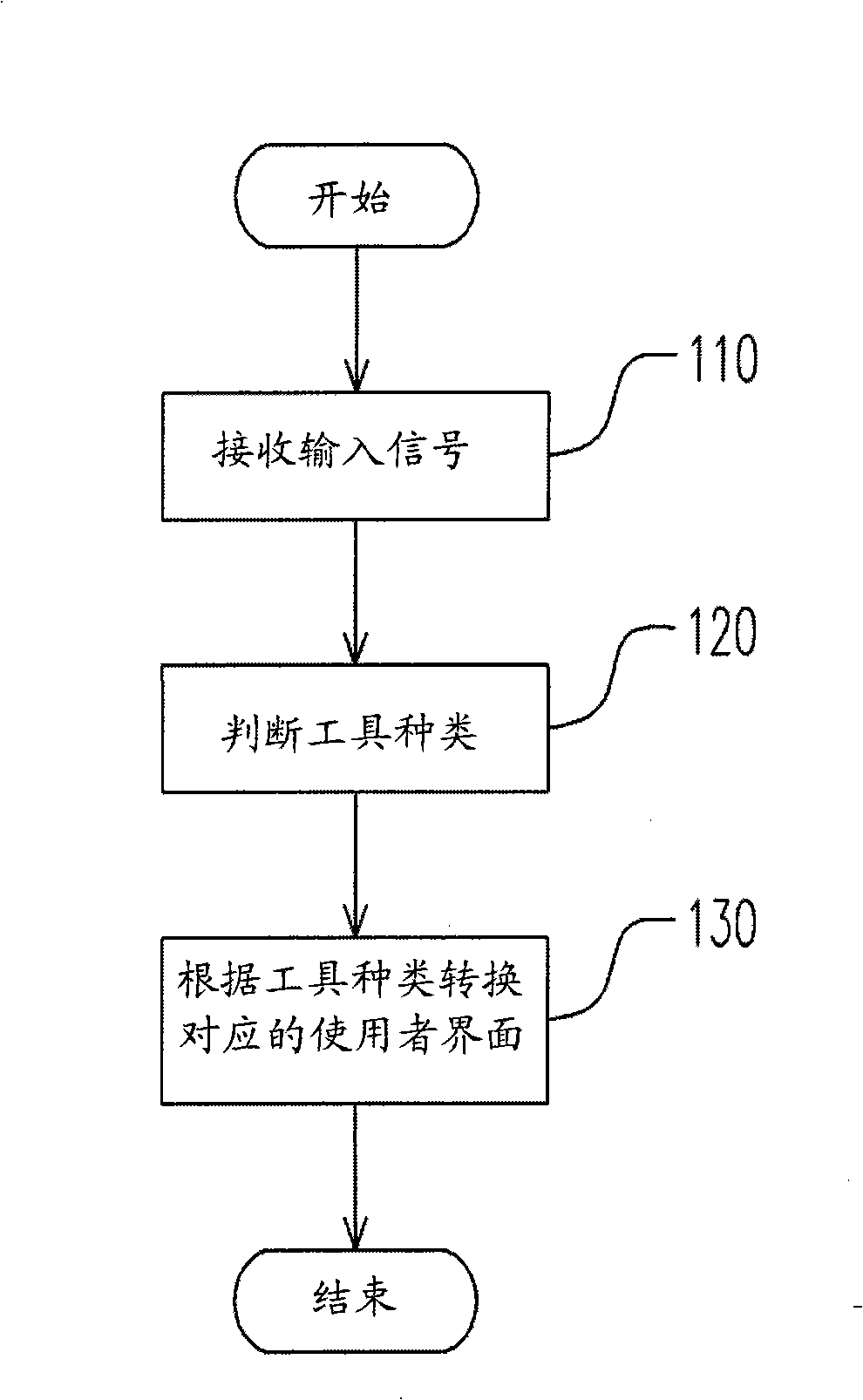

[0048] FIG. 1 is a flow chart of a method for operating a user interface according to an embodiment of the present invention. Referring to FIG. 1, this embodiment illustrates the detailed steps by which the handheld device automatically switches to the corresponding user interface according to the input tool used when the user is operating a handhe...
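The idea of switching the user interface according to the input tool can be illustrated with a minimal sketch. The excerpt does not state how the input tool is identified, so this sketch *assumes* a stylus and a finger are told apart by the reported contact area. The names `TouchEvent`, `identify_tool`, `UiSwitcher`, the threshold `STYLUS_MAX_AREA_MM2`, the interface names `stylus_ui`/`finger_ui`, and the default starting interface are all hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from enum import Enum


class InputTool(Enum):
    STYLUS = "stylus"
    FINGER = "finger"


@dataclass(frozen=True)
class TouchEvent:
    # Hypothetical touch report: contact area in square millimetres.
    contact_area_mm2: float


# Hypothetical threshold; a real device would calibrate this per sensor.
STYLUS_MAX_AREA_MM2 = 10.0

# Hypothetical mapping from input tool to the user interface to display.
UI_FOR_TOOL = {
    InputTool.STYLUS: "stylus_ui",  # e.g. small, dense controls
    InputTool.FINGER: "finger_ui",  # e.g. large, finger-friendly icons
}


def identify_tool(event: TouchEvent) -> InputTool:
    """Classify the input tool from the contact area (assumed criterion)."""
    if event.contact_area_mm2 <= STYLUS_MAX_AREA_MM2:
        return InputTool.STYLUS
    return InputTool.FINGER


class UiSwitcher:
    """Tracks the active UI and switches it when a different tool is detected."""

    def __init__(self) -> None:
        # Assumed default: start in the stylus interface.
        self.active_ui = UI_FOR_TOOL[InputTool.STYLUS]

    def on_touch(self, event: TouchEvent) -> str:
        wanted = UI_FOR_TOOL[identify_tool(event)]
        if wanted != self.active_ui:
            # Switch only when the detected tool calls for a different UI.
            self.active_ui = wanted
        return self.active_ui
```

For example, `UiSwitcher().on_touch(TouchEvent(45.0))` returns `"finger_ui"`, while a small contact such as `TouchEvent(3.0)` keeps or restores `"stylus_ui"`. Contact area is used here only because it is a simple, commonly available touch attribute; the patent may rely on a different detection criterion.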
