Method and apparatus for scheduling precoding system based on code book

The scheduling method and precoding technology apply to baseband system components, synchronization/start-stop systems, orthogonal multiplexing systems, and similar fields, and address the problem that the PF scheduling algorithm cannot be implemented.

Examples

Example 1

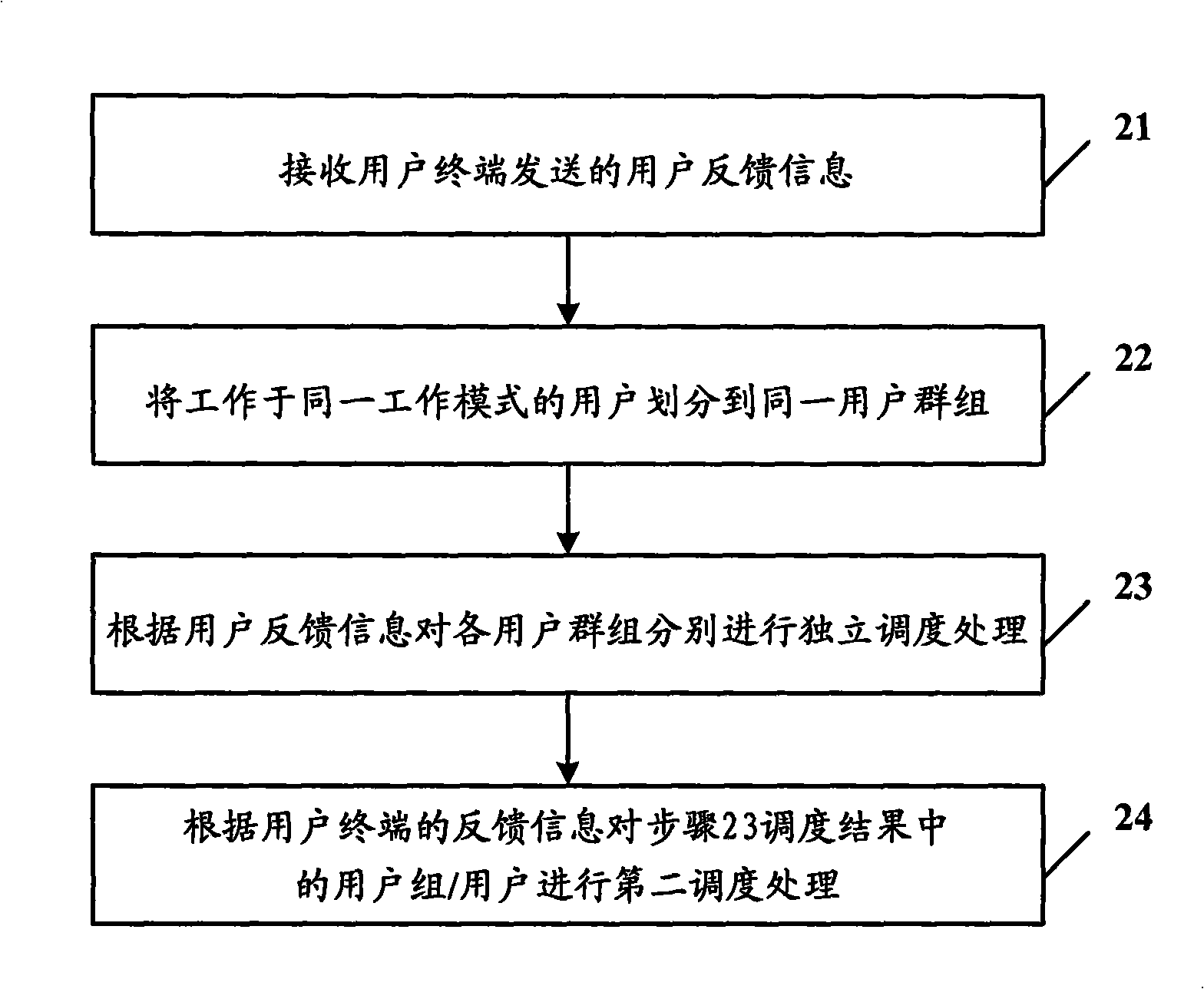

[0130] In the first embodiment of the present invention, all users work in SDMA mode (that is, the cell contains only users 1 to 12), and the PF scheduling algorithm is used in each of steps 412, 413, and 414.

[0131] The method of the first embodiment of the present invention specifically includes the following steps:

[0132] Step A1: users with the same PMI and PVI are placed in the same group. The resulting groups are shown in the feedback information table for users working in SDMA mode: users 1, 2, and 3 form one group, corresponding to the first vector of the first matrix; users 4, 5, and 6 form one group, corresponding to the second vector of the first matrix; users 7, 8, and 9 form one group, corresponding to the first vector of the second matrix; users 10, 11, and 12 form one group, corresponding to the second vector of the second matrix.
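The grouping step can be sketched in a few lines of Python. This is only an illustration, not the patented implementation: the `feedback` dictionary and the function name `group_by_pmi_pvi` are assumptions, and the index values 1 and 2 simply stand for the "first" and "second" matrix or vector named in the text.

```python
from collections import defaultdict

# Feedback reconstructed from the grouping described in the text:
# user -> (PMI, PVI). The layout of this dictionary is an assumption.
feedback = {
    1: (1, 1), 2: (1, 1), 3: (1, 1),
    4: (1, 2), 5: (1, 2), 6: (1, 2),
    7: (2, 1), 8: (2, 1), 9: (2, 1),
    10: (2, 2), 11: (2, 2), 12: (2, 2),
}

def group_by_pmi_pvi(feedback):
    """Group users that reported the same (PMI, PVI) pair."""
    groups = defaultdict(list)
    for user, key in sorted(feedback.items()):
        groups[key].append(user)
    return dict(groups)

groups = group_by_pmi_pvi(feedback)
for (pmi, pvi), users in groups.items():
    print(f"matrix {pmi}, vector {pvi}: users {users}")
```

Keying the groups on the (PMI, PVI) pair means that users in one group share a precoding vector, so at most a subset of each group needs to be compared in the per-group scheduling step.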

[0133] Step A2: the PF algorithm is used to perform multi-user scheduling within each group, assuming that 2 users...
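A per-group PF selection might look like the sketch below. It uses the standard proportional fair metric, the ratio of a user's instantaneous achievable rate to its average past throughput; whether the patent uses exactly this form is not confirmed by the text shown here. The function name `pf_select`, the parameter `k`, and all rate values are hypothetical.

```python
def pf_select(group, inst_rate, avg_rate, k):
    """Return the k users in `group` with the largest PF metric.

    The PF metric used here is inst_rate[u] / avg_rate[u] (assumed form).
    """
    ranked = sorted(group, key=lambda u: inst_rate[u] / avg_rate[u], reverse=True)
    return ranked[:k]

# Hypothetical rates for the group of users 1, 2, and 3 (not from the source).
inst_rate = {1: 2.0, 2: 1.0, 3: 3.0}
avg_rate = {1: 4.0, 2: 1.0, 3: 2.0}

# k = 2 is an illustrative choice for this sketch.
selected = pf_select([1, 2, 3], inst_rate, avg_rate, k=2)
print(selected)
```

With these numbers the metrics are 0.5, 1.0, and 1.5 for users 1, 2, and 3, so users 3 and 2 are kept. User 1 has a higher instantaneous rate than user 2 but has already been served more on average, which is the fairness effect PF is meant to produce.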

Example 2

[0143] In the second embodiment of the present invention, all users work in SDMA mode (that is, only users 1 to 12), and the PF, Max C/I, and PF scheduling algorithms are used in steps 412, 413, and 414, respectively.
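For contrast, a Max C/I selection can be sketched as follows. Unlike PF, which weights the instantaneous rate by each user's past throughput, Max C/I ranks candidates purely by their carrier-to-interference ratio. The function name `max_ci_select`, the parameter `k`, and the C/I values in dB are hypothetical and chosen only to make the example concrete.

```python
def max_ci_select(candidates, ci_db, k):
    """Return the k candidates with the highest carrier-to-interference ratio."""
    ranked = sorted(candidates, key=lambda u: ci_db[u], reverse=True)
    return ranked[:k]

# Hypothetical C/I values in dB for users 4, 5, and 6 (not from the source).
ci_db = {4: 12.0, 5: 7.5, 6: 15.0}

best = max_ci_select([4, 5, 6], ci_db, k=1)
print(best)
```

Because no throughput history enters the ranking, this rule favors total throughput over fairness, which is presumably why an embodiment may mix it with PF across different steps.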

[0144] The method of the second embodiment of the present invention specifically includes the following steps:

[0145] Step B1: users with the same PMI and PVI are placed in the same group. The resulting groups are shown in the feedback information table for users working in SDMA mode: users 1, 2, and 3 form one group, corresponding to the first vector of the first matrix; users 4, 5, and 6 form one group, corresponding to the second vector of the first matrix; users 7, 8, and 9 form one group, corresponding to the first vector of the second matrix; users 10, 11, and 12 form one group, corresponding to the second vector of the second matrix.

[0146] Step B2: the PF algorithm is used to perform multi-user scheduling within each group, assuming that 2 users...

Example 3

[0151] In the third embodiment of the present invention, all users work in SDMA mode (that is, only users 1 to 12), and the PF, PF, and Max C/I scheduling algorithms are used in steps 412, 413, and 414, respectively.
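As stated in the text, the three embodiments assign different algorithms to steps 412, 413, and 414. The mapping below records only those assignments; the dictionary layout and the helper `steps_using` are assumptions made for illustration, and nothing here models what each step actually does.

```python
# Algorithm assigned to each step in each embodiment, as stated in the text.
EMBODIMENT_SCHEDULERS = {
    1: {412: "PF", 413: "PF", 414: "PF"},
    2: {412: "PF", 413: "MaxC/I", 414: "PF"},
    3: {412: "PF", 413: "PF", 414: "MaxC/I"},
}

def steps_using(algorithm, embodiment):
    """Return the step numbers that use `algorithm` in the given embodiment."""
    steps = EMBODIMENT_SCHEDULERS[embodiment]
    return [step for step, name in steps.items() if name == algorithm]

for embodiment in EMBODIMENT_SCHEDULERS:
    print(embodiment, steps_using("MaxC/I", embodiment))
```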

[0152] The method of the third embodiment of the present invention specifically includes the following steps:

[0153] Step C1: users with the same PMI and PVI are placed in the same group. The resulting groups are shown in the feedback information table for users working in SDMA mode: users 1, 2, and 3 form one group, corresponding to the first vector of the first matrix; users 4, 5, and 6 form one group, corresponding to the second vector of the first matrix; users 7, 8, and 9 form one group, corresponding to the first vector of the second matrix; users 10, 11, and 12 form one group, corresponding to the second vector of the second matrix.

[0154] Step C2: the PF algorithm is used to perform multi-user scheduling within each group, assuming that ...
