Joint estimation method for motion and disparity in multi-view video coding

A joint motion and disparity (parallax) estimation technique for multi-view video coding. It addresses the problems that existing methods are wasteful and do not make full use of multi-view video, reduces the complexity of motion estimation and disparity estimation, and provides reliable initial prediction values while ensuring accuracy.

Embodiment Construction

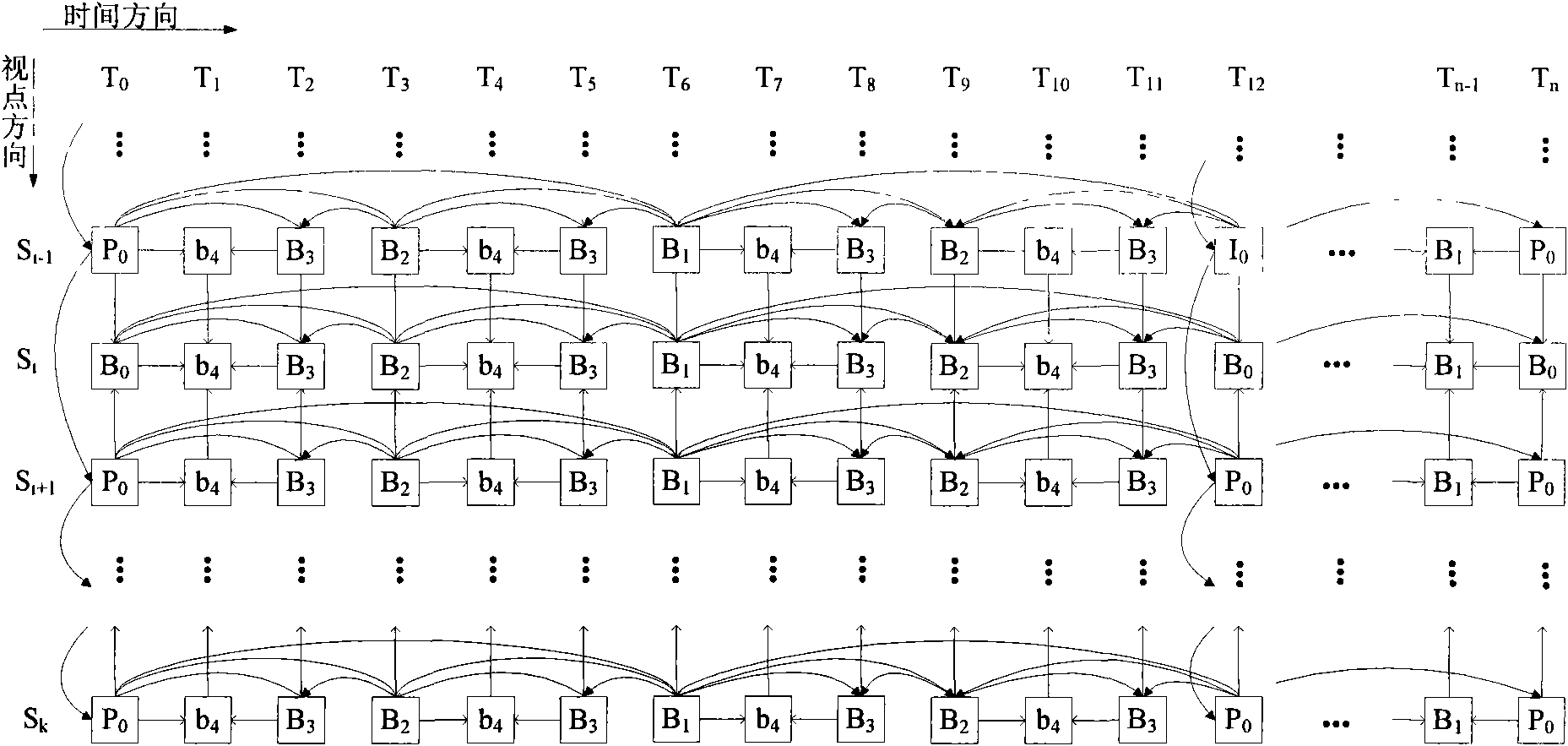

[0062] Multi-view video refers to the $k+1$ video sequences $\{S_0, S_1, S_2, \dots, S_k\}$, each of which contains $n+1$ frames from time $T_0$ to time $T_n$. Figure 1 shows the coding structure of multi-view video: the vertical direction is the viewpoint direction and the horizontal direction is the time direction. The first frame of each video is an anchor frame; for example, the $B_0$ frame at $S_i/T_0$ is an anchor frame. The other frames are coded in units of groups of pictures (GOPs). Each GOP consists of one anchor frame and multiple non-anchor frames. Let $N_{GOP}$ denote the number of frames in a GOP; $N_{GOP}$ is an integer power of 2, or 12 or 15. Within a GOP, the frame at the end of the GOP is usually the anchor frame; for example, with $N_{GOP} = 12$ in the figure, the $B_0$ frame at $S_1/T_{12}$ is an anchor frame. When encoding, the anchor frame is first encoded independently, and then each non-anchor frame is encoded according ...
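The frame layout described above can be sketched in a few lines of Python. This is only an illustration, not the patent's own procedure: the function names `is_anchor`, `anchor_times`, and `gop_frames` are hypothetical, and the sketch assumes that anchor frames fall at every time index that is a multiple of N_GOP (T_0, T_12, ... when N_GOP = 12), which is consistent with the examples in the text but not stated there as a general rule.

```python
def is_anchor(t: int, n_gop: int) -> bool:
    """Return True if time index t holds an anchor frame.

    Assumption: anchors sit at T_0 and at the last frame of every GOP,
    i.e. at every multiple of n_gop.
    """
    return t % n_gop == 0


def anchor_times(n: int, n_gop: int) -> list[int]:
    """List the anchor time indices in a sequence running from T_0 to T_n."""
    return [t for t in range(n + 1) if is_anchor(t, n_gop)]


def gop_frames(g: int, n_gop: int) -> list[int]:
    """Time indices of the g-th GOP (g >= 1).

    Each GOP holds n_gop frames and ends with its anchor frame,
    so GOP 1 with n_gop = 12 covers T_1 .. T_12.
    """
    end = g * n_gop
    return list(range(end - n_gop + 1, end + 1))


if __name__ == "__main__":
    n_gop = 12
    print(anchor_times(24, n_gop))   # anchor positions for T_0 .. T_24
    print(gop_frames(1, n_gop))      # frames belonging to the first GOP
```

Under these assumptions the same time indices apply to every view S_0 ... S_k, since the text describes the anchor position in terms of time only.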
