Shared cache management method and system

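A shared cache management method and system, applied in the information field, that addresses problems such as increased hardware cost and achieves a simpler hardware implementation and simple logic configuration.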

Embodiment Construction

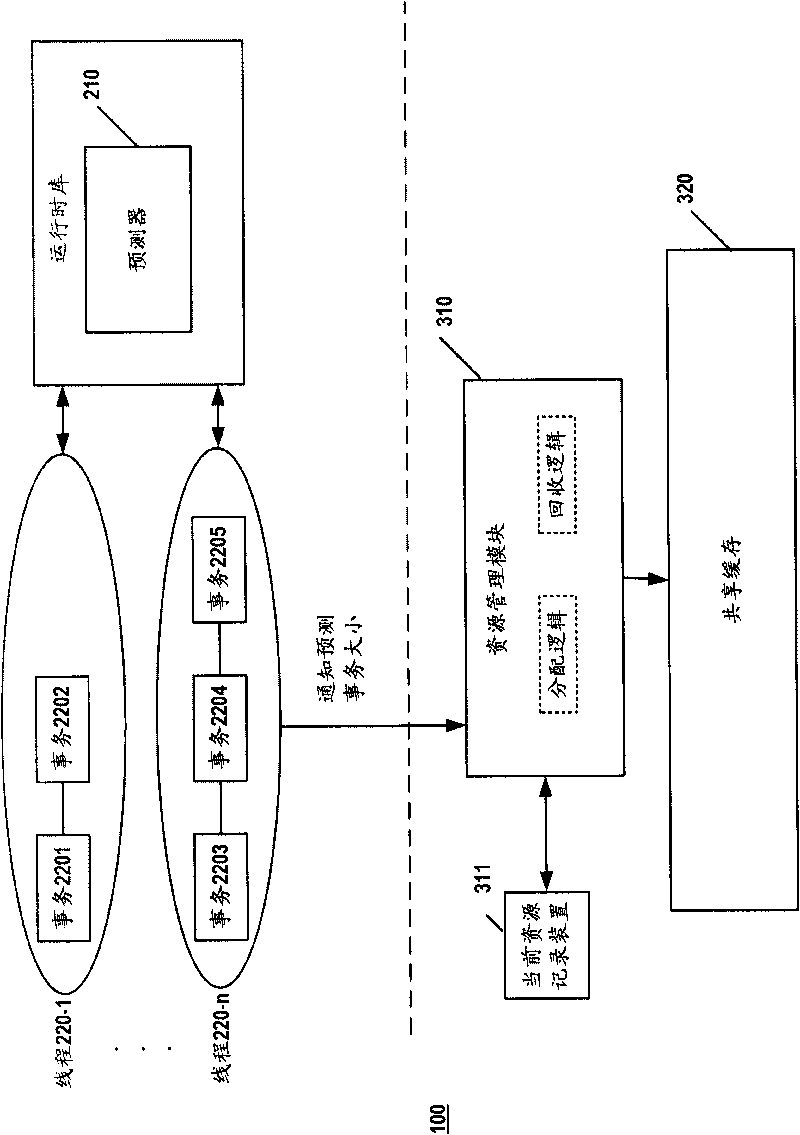

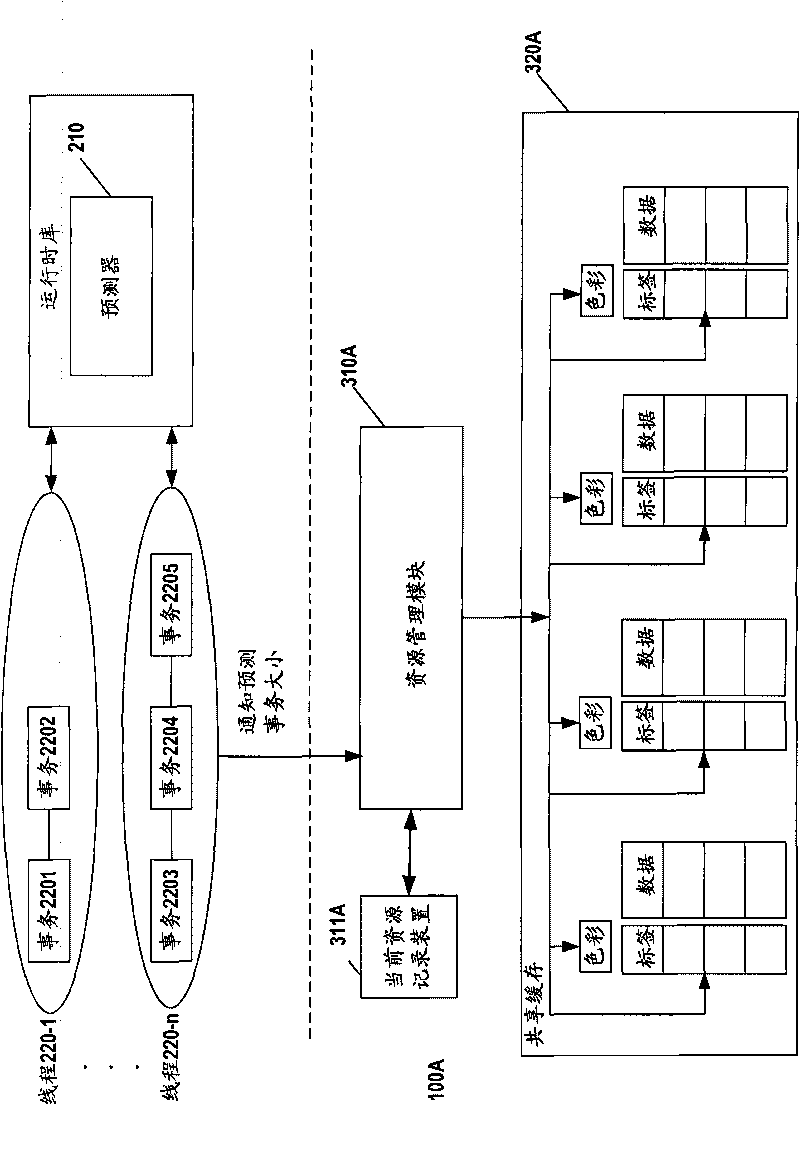

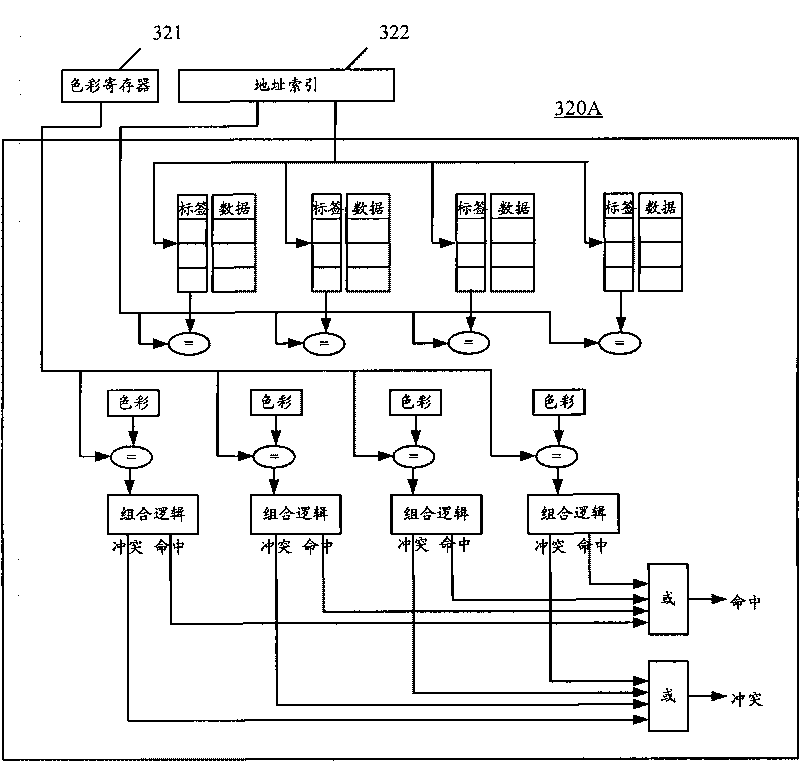

[0025] The present invention is applicable to the technical field of transactional memory. The following description takes a transactional memory operating environment as an example: the application program running on the processor comprises several transactions, each transaction uses a shared buffer to store intermediate state data, and the identification number (ID) of a transaction is indicated by a color mark. Those skilled in the art will understand that the present invention is not limited thereto; because a transaction is part of an application program, it can be abstracted into the more general concept of a program.
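The operating environment described in [0025] can be pictured with a minimal sketch. It shows only the idea of a shared buffer whose entries carry a color mark naming the owning transaction; it is not the claimed management method. The names `BufferEntry`, `SharedBuffer`, `store`, `entries_of`, and `release`, the first-free placement policy, and the use of `None` for a free entry are all assumptions made for illustration and do not come from the patent text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BufferEntry:
    # Color mark = ID of the owning transaction; None marks a free entry
    # (the None convention is an assumption of this sketch).
    color: Optional[int] = None
    data: Optional[bytes] = None


@dataclass
class SharedBuffer:
    """Hypothetical shared buffer whose entries are tagged with a color mark."""
    size: int
    entries: List[BufferEntry] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Start with every entry free.
        self.entries = [BufferEntry() for _ in range(self.size)]

    def store(self, color: int, data: bytes) -> int:
        """Put intermediate-state data in the first free entry; return its index."""
        for index, entry in enumerate(self.entries):
            if entry.color is None:
                entry.color = color
                entry.data = data
                return index
        raise MemoryError("shared buffer is full")

    def entries_of(self, color: int) -> List[Optional[bytes]]:
        """Return the data of every entry owned by the given transaction."""
        return [e.data for e in self.entries if e.color == color]

    def release(self, color: int) -> int:
        """Free every entry owned by the transaction; return how many were freed."""
        freed = 0
        for entry in self.entries:
            if entry.color == color:
                entry.color = None
                entry.data = None
                freed += 1
        return freed
```

In this sketch two transactions with color marks 1 and 2 can interleave their `store` calls in the same buffer, and `release(1)` returns only the entries tagged with color 1 to the free pool.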

[0026] The present inventors have observed that in shared memory schemes for hardware transactional memory systems, a major challenge lies in on-demand resource management, which is critical to system performance. For example, multiple transactions with different data sizes compete with each other to apply fo...
