Fast coarse-to-fine localization method for video events

A video event localization technology, applied in the research field of video event localization methods, which addresses problems including statistics, the inability to make quantitative and accurate comparisons, and computational cost.

Examples

Embodiment 1

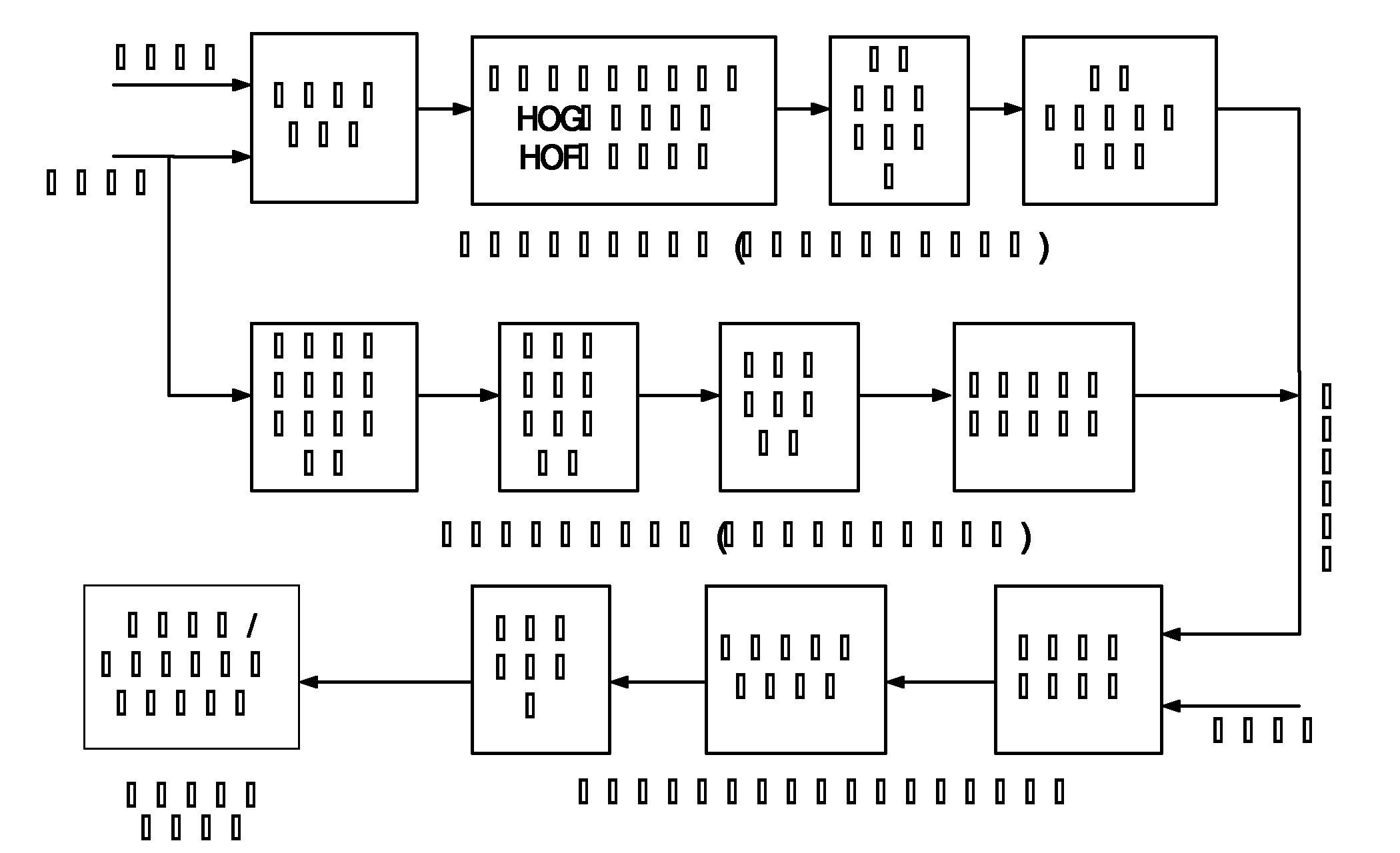

[0085] As shown in Figure 1, the coarse-to-fine rapid localization method for video events specifically includes the following steps:

[0086] (1) Coarse search for space-time volumes of interest: a group of video clips most likely to contain the query event is obtained by temporally segmenting the real video, and the region of interest in each frame is obtained by spatially segmenting the real video. The regions of interest of the frames in each clip are normalized and stacked in temporal order to form a set of space-time volumes of interest. Temporal segmentation of the real video includes detecting space-time interest points, extracting HOG and HOF features for each space-time split volume, matching the features of the split volumes using the chi-square distance, and determining the start and end points of each video clip with a classification algorithm; spatial segmentation of the real video includes using the historical frame and current frame inf...
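
Step (1) is described only at a high level. The following Python sketch illustrates three of its operations under assumptions that are not in the source: HOG/HOF descriptors are taken as already computed equal-length histograms (interest point detection and feature extraction are not shown); the chi-square distance uses the common symmetric form 0.5·Σ(a−b)²/(a+b), which is one of several variants; the classifier itself is omitted and its per-segment decisions are assumed to arrive as booleans; and ROIs are hypothetical (top, left, height, width) boxes normalized by nearest-neighbour resizing. All function names are hypothetical, and the truncated spatial segmentation step is not reproduced.

```python
from typing import List, Sequence, Tuple

Frame = List[List[float]]  # grayscale frame as rows of pixel values
Box = Tuple[int, int, int, int]  # hypothetical ROI format: (top, left, height, width)


def chi_square_distance(h1: Sequence[float], h2: Sequence[float], eps: float = 1e-10) -> float:
    """Symmetric chi-square distance 0.5 * sum((a - b)^2 / (a + b)) between histograms."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    # eps keeps empty bins (a = b = 0) from dividing by zero; they contribute 0.
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


def best_match(query: Sequence[float], candidates: Sequence[Sequence[float]]) -> int:
    """Index of the candidate descriptor closest to the query (e.g. concatenated HOG+HOF)."""
    return min(range(len(candidates)), key=lambda i: chi_square_distance(query, candidates[i]))


def labels_to_clips(labels: Sequence[bool]) -> List[Tuple[int, int]]:
    """Turn per-segment classifier decisions into inclusive (start, end) index ranges."""
    clips: List[Tuple[int, int]] = []
    start = None
    for i, positive in enumerate(labels):
        if positive and start is None:
            start = i  # a candidate clip begins
        elif not positive and start is not None:
            clips.append((start, i - 1))  # the clip ended on the previous segment
            start = None
    if start is not None:
        clips.append((start, len(labels) - 1))  # clip runs to the last segment
    return clips


def crop(frame: Frame, top: int, left: int, height: int, width: int) -> Frame:
    """Cut a rectangular region of interest out of one frame."""
    return [row[left:left + width] for row in frame[top:top + height]]


def resize_nearest(roi: Frame, out_h: int, out_w: int) -> Frame:
    """Normalize an ROI to a fixed size with nearest-neighbour sampling."""
    in_h, in_w = len(roi), len(roi[0])
    return [
        [roi[(r * in_h) // out_h][(c * in_w) // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def build_volume(frames: Sequence[Frame], rois: Sequence[Box], out_h: int, out_w: int) -> List[Frame]:
    """Stack the normalized per-frame ROIs in temporal order; index as volume[t][y][x]."""
    return [resize_nearest(crop(f, *box), out_h, out_w) for f, box in zip(frames, rois)]
```

For example, `labels_to_clips([False, True, True, False, True])` yields the clips `[(1, 2), (4, 4)]`. Resizing every ROI to the same height and width is what allows ROIs of different sizes to be stacked into a single volume of shape (frames, height, width).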
