Annotated image scene clustering method based on visual and annotation-word correlation information

A technology for labeling words and images, applied in the field of image processing, can solve problems such as limitations, and achieve the effect of solving sparsity


Embodiment Construction

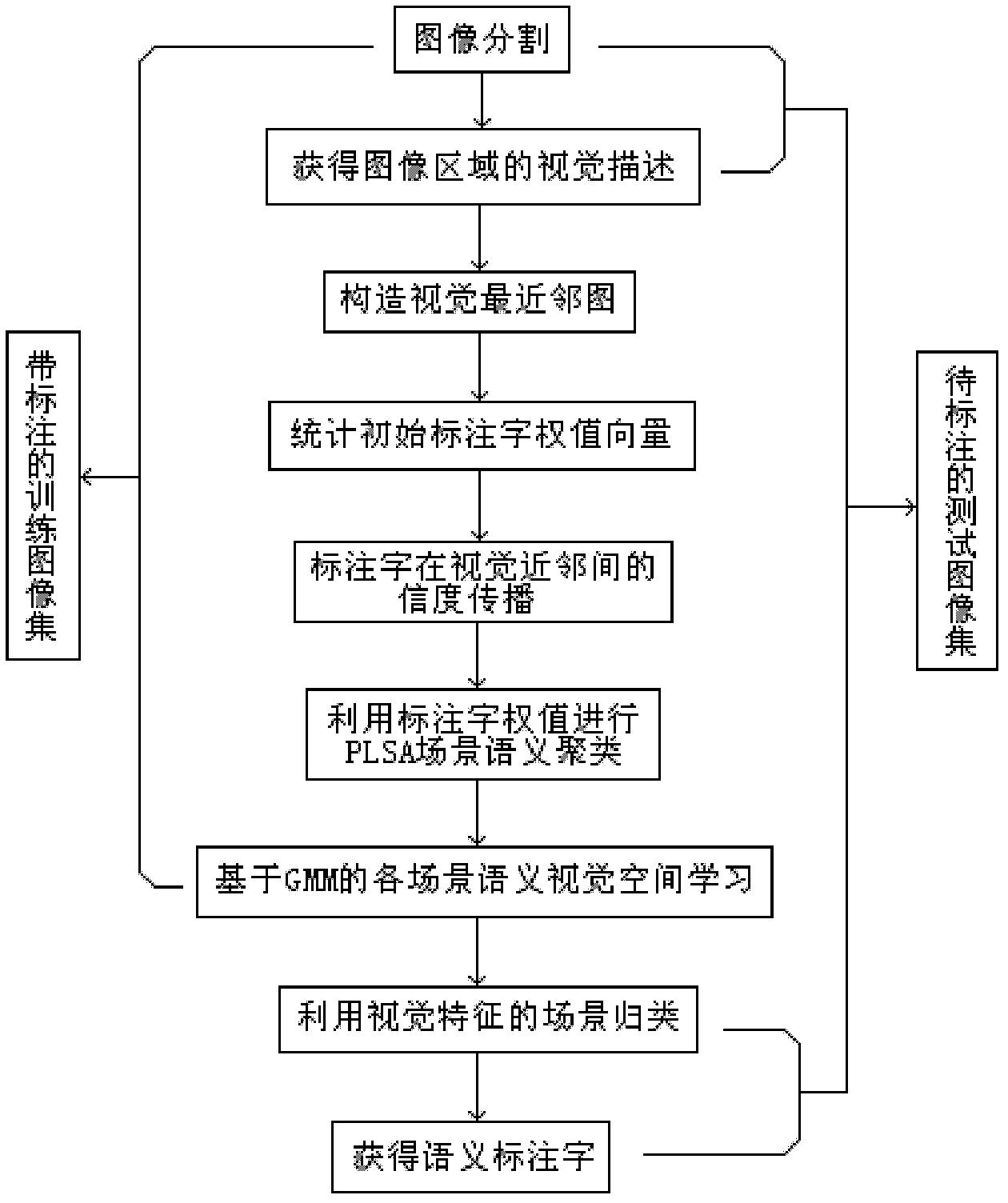

[0020] The present invention is described in more detail below with reference to the accompanying drawing:

[0021] Step 1: Use the NCut (Normalized Cut) image segmentation algorithm to segment the training images (annotated images used for learning) and the test images separately, obtaining a visual description of each image region.
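
To make the criterion behind Step 1 concrete, the following is a minimal sketch of the normalized cut value, Ncut(A, B) = cut(A, B)/assoc(A, V) + cut(A, B)/assoc(B, V), evaluated on a tiny affinity matrix. It is not the segmentation pipeline of the invention: the function names `ncut_value` and `best_bipartition`, the toy matrix, and the brute-force search are illustrative assumptions. Practical NCut implementations work on pixel affinities and use a spectral relaxation instead of enumeration.

```python
from itertools import combinations


def ncut_value(W, A):
    """Normalized cut value of the bipartition (A, V minus A).

    W is a symmetric affinity matrix given as a list of lists.
    Assumes every node has positive total affinity, so neither
    association term is zero.
    """
    n = len(W)
    A = set(A)
    B = set(range(n)) - A
    # Total affinity crossing the partition boundary.
    cut = sum(W[i][j] for i in A for j in B)
    # Total affinity from each side to all nodes in the graph.
    assoc_a = sum(W[i][j] for i in A for j in range(n))
    assoc_b = sum(W[i][j] for i in B for j in range(n))
    return cut / assoc_a + cut / assoc_b


def best_bipartition(W):
    """Brute-force search for the bipartition with the smallest Ncut value.

    Only feasible for toy graphs; hypothetical helper for illustration.
    """
    n = len(W)
    best = None
    for size in range(1, n // 2 + 1):
        for A in combinations(range(n), size):
            score = ncut_value(W, A)
            if best is None or score < best[0]:
                best = (score, set(A))
    return best


# Toy affinity matrix: nodes 0-1 and nodes 2-3 form two tightly linked groups.
W = [
    [0, 10, 1, 0],
    [10, 0, 0, 1],
    [1, 0, 0, 10],
    [0, 1, 10, 0],
]
score, part = best_bipartition(W)
```

In this toy case the search separates the two strongly connected pairs, because cutting the weak links costs little relative to each side's total association.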

[0022] Step 2: Construct the visual nearest-neighbor graph of all images used for learning, $\{J_1, \ldots, J_l\}$ in the training set. Each vertex in the vertex set V corresponds to one image, and the edge set E represents the visual distances between images. The visual distance between images is measured with the Earth Mover's Distance (EMD), a similarity measure that integrates matching across multiple regions. The weight on the edge connecting two vertices is the EMD visual distance between the corresponding images.
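
The graph construction in Step 2 can be sketched as follows. This is a simplified illustration under stated assumptions, not the method of the invention: each image is summarized here as a one-dimensional histogram of equal total mass, for which the EMD reduces to the sum of absolute differences of the cumulative sums. The multi-region EMD described above would instead solve a transportation problem over region signatures. The names `emd_1d` and `knn_graph` and the neighbor count `k` are hypothetical.

```python
from itertools import accumulate


def emd_1d(p, q):
    """EMD between two 1-D histograms with equal total mass and unit bin spacing.

    In this special case the EMD equals the L1 distance between the
    cumulative sums of the two histograms.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    diffs = (a - b for a, b in zip(p, q))
    return sum(abs(c) for c in accumulate(diffs))


def knn_graph(histograms, k):
    """Build an undirected nearest-neighbor graph as {vertex: {neighbor: weight}}.

    Each vertex is an image index; each edge weight is the EMD between
    the two images' histograms.
    """
    n = len(histograms)
    graph = {i: {} for i in range(n)}
    for i in range(n):
        dists = sorted(
            (emd_1d(histograms[i], histograms[j]), j)
            for j in range(n)
            if j != i
        )
        for d, j in dists[:k]:
            graph[i][j] = d
            graph[j][i] = d  # store both directions so the graph stays undirected
    return graph


# Three toy "images", each with all mass in a different bin.
histograms = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
graph = knn_graph(histograms, k=1)
```

Because edges are stored in both directions, a vertex can end up with more than `k` neighbors when other vertices select it as their nearest neighbor.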

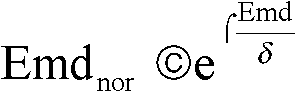

[0023] Step 3: In the training image set, each image has an initial normalized weight vector over its annotation words. The normalization method of the weight vecto...
