Caching system and method of data caching

A caching system and data caching method, applied in memory systems, electrical digital data processing, and memory addressing/allocation/relocation. It addresses the problems of low transmission rate, differing error correction times, and channels that cannot run at the same speed, with the effect of improving the transmission rate.


Examples

Embodiment 1

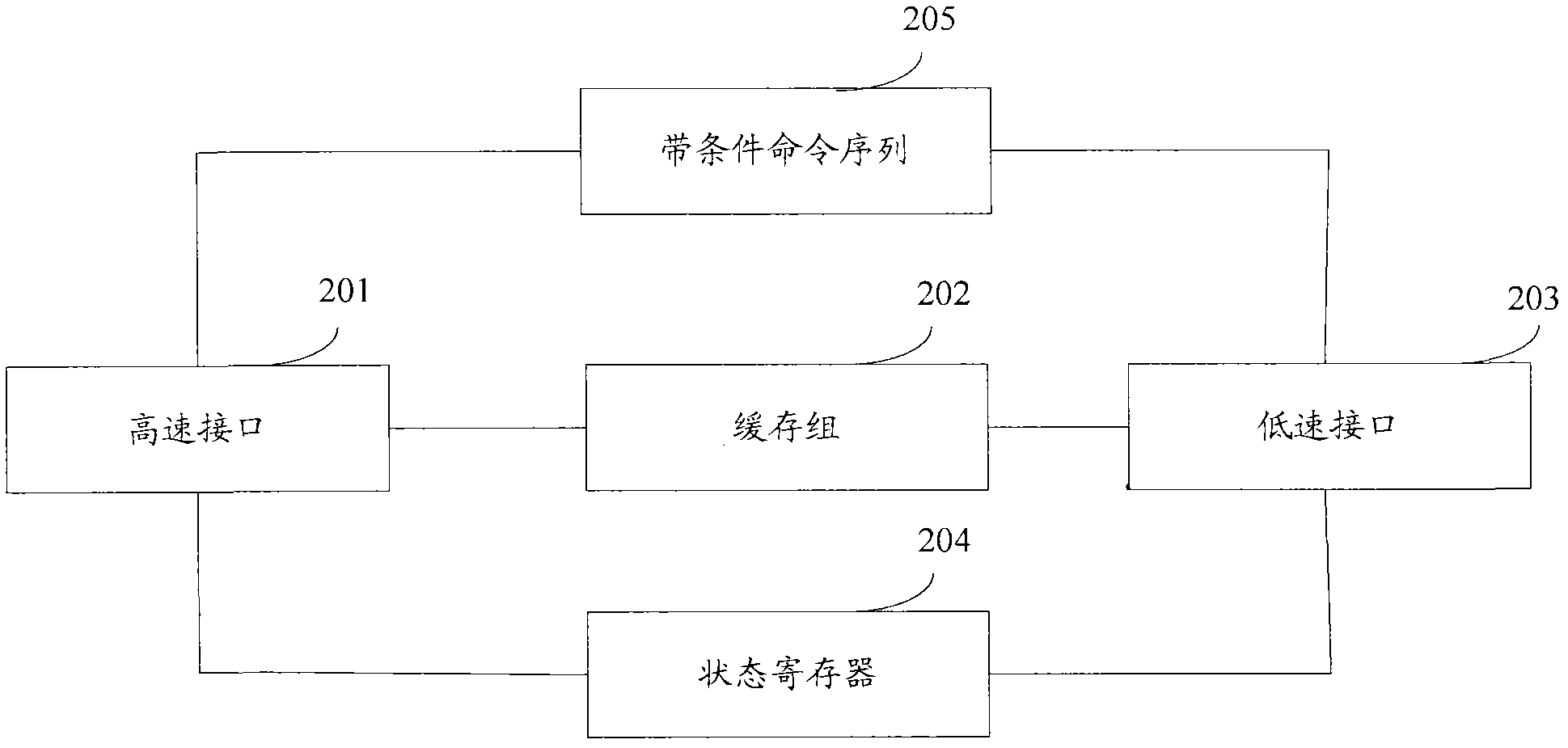

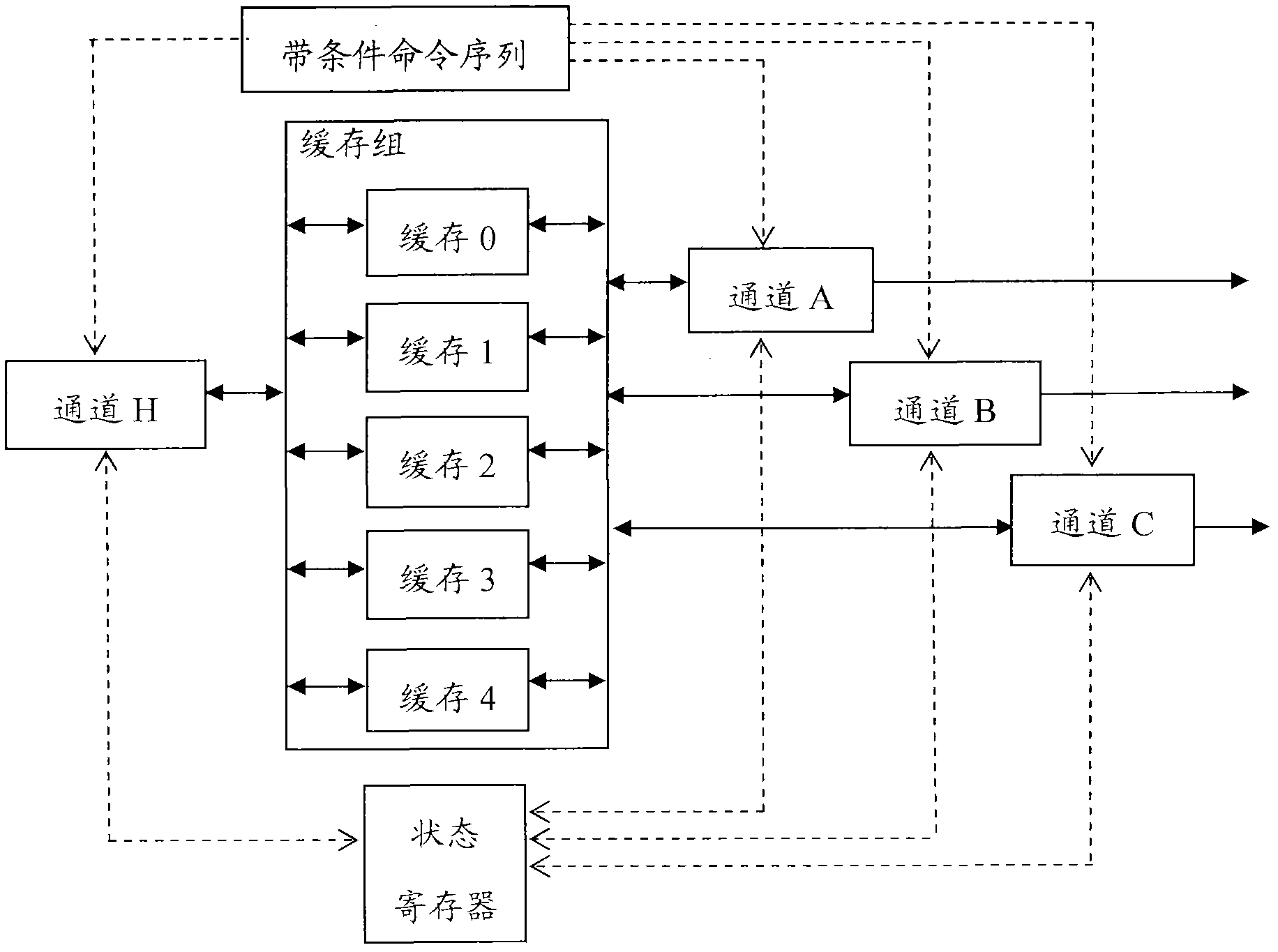

[0026] See Figure 2, which is a schematic structural diagram of an embodiment of the caching system of the present application. As shown in Figure 2, the system comprises a high-speed interface 201, a cache group 202, a low-speed interface 203, a status register 204, and a conditional command sequence 205. The high-speed interface 201 has one channel, the low-speed interface 203 has at least two channels, and the number of caches in cache group 202 is at least one more than the number of channels of the low-speed interface.
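The sizing constraints in paragraph [0026] can be written down as a small data structure. This is a minimal sketch, not the patented implementation: the names `CacheSystemLayout` and `CacheState` are hypothetical, and modelling the status register as a list with one state per cache, all empty at start, is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class CacheState(Enum):
    EMPTY = "empty"
    FULL = "full"


@dataclass
class CacheSystemLayout:
    """Hypothetical description of the structure in paragraph [0026]."""
    low_speed_channels: int       # low-speed interface: at least two channels
    cache_count: int              # must be at least low_speed_channels + 1
    high_speed_channels: int = 1  # high-speed interface: one channel
    # Assumed model of the status register: one state entry per cache.
    status_register: list = field(init=False, default_factory=list)

    def __post_init__(self):
        if self.high_speed_channels != 1:
            raise ValueError("the high-speed interface has exactly one channel")
        if self.low_speed_channels < 2:
            raise ValueError("the low-speed interface needs at least two channels")
        if self.cache_count < self.low_speed_channels + 1:
            raise ValueError("need at least one more cache than low-speed channels")
        # Assumption: every cache starts out empty.
        self.status_register = [CacheState.EMPTY] * self.cache_count
```

For instance, `CacheSystemLayout(low_speed_channels=3, cache_count=4)` is accepted, while three caches for three low-speed channels would be rejected.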

[0027] The conditional command sequence 205 is used to control, through control commands, an idle high-speed interface 201 and low-speed interface 203 to perform data caching according to caching conditions. The caching conditions include a data writing condition for writing data into a cache and a data reading condition for reading data from a cache. The data writing condition is that a cache whose state is empty exists, and the data reading condition is that a cache whose state is full exists;

[0028] The high-speed interface 201 and the low-speed interface 203 are used to re...

Embodiment 2

[0036] Based on the schematic structure of the caching system shown in Figure 1, this embodiment of the present application provides a method for implementing data caching in that system. The method includes: when there is an idle input channel and a cache whose state is empty, writing the data to be transmitted into the empty cache through the idle input channel; and when there is an idle output channel and a cache whose state is full, reading data from the full cache through the idle output channel.
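The two rules of paragraph [0036] can be exercised with a small simulation. This is a sketch under stated assumptions rather than the method as claimed: the function `transfer` and the parameter `writes_per_step` are hypothetical, every write or read is assumed to finish within one loop iteration (so channels are always idle at the start of an iteration), and the faster input channel is modelled simply by letting it write several items per iteration.

```python
from collections import deque

EMPTY, FULL = "empty", "full"


def transfer(data_items, n_caches=4, outputs=("A", "B", "C"), writes_per_step=3):
    """Move items from one input channel to several output channels."""
    if not outputs or n_caches < 1 or writes_per_step < 1:
        raise ValueError("need at least one output, one cache, one write per step")
    states = [EMPTY] * n_caches   # assumed status register: one state per cache
    contents = [None] * n_caches  # data currently held by each cache
    pending = deque(data_items)   # data waiting at the input channel
    delivered = []                # (output channel, item) pairs, in read order

    while pending or FULL in states:
        # Rule 1: idle input channel and an empty cache -> write into it.
        for _ in range(writes_per_step):
            if pending and EMPTY in states:
                i = states.index(EMPTY)
                contents[i] = pending.popleft()
                states[i] = FULL
        # Rule 2: idle output channel and a full cache -> read from it.
        for channel in outputs:
            if FULL in states:
                i = states.index(FULL)
                delivered.append((channel, contents[i]))
                contents[i] = None
                states[i] = EMPTY
    return delivered
```

Calling `transfer(["d0", "d1", "d2", "d3", "d4"])` spreads the five items over channels A, B, and C. Neither rule names a specific cache, so any empty cache can accept a write and any full cache can serve a read; picking the lowest-numbered match, as here, is just one possible choice.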

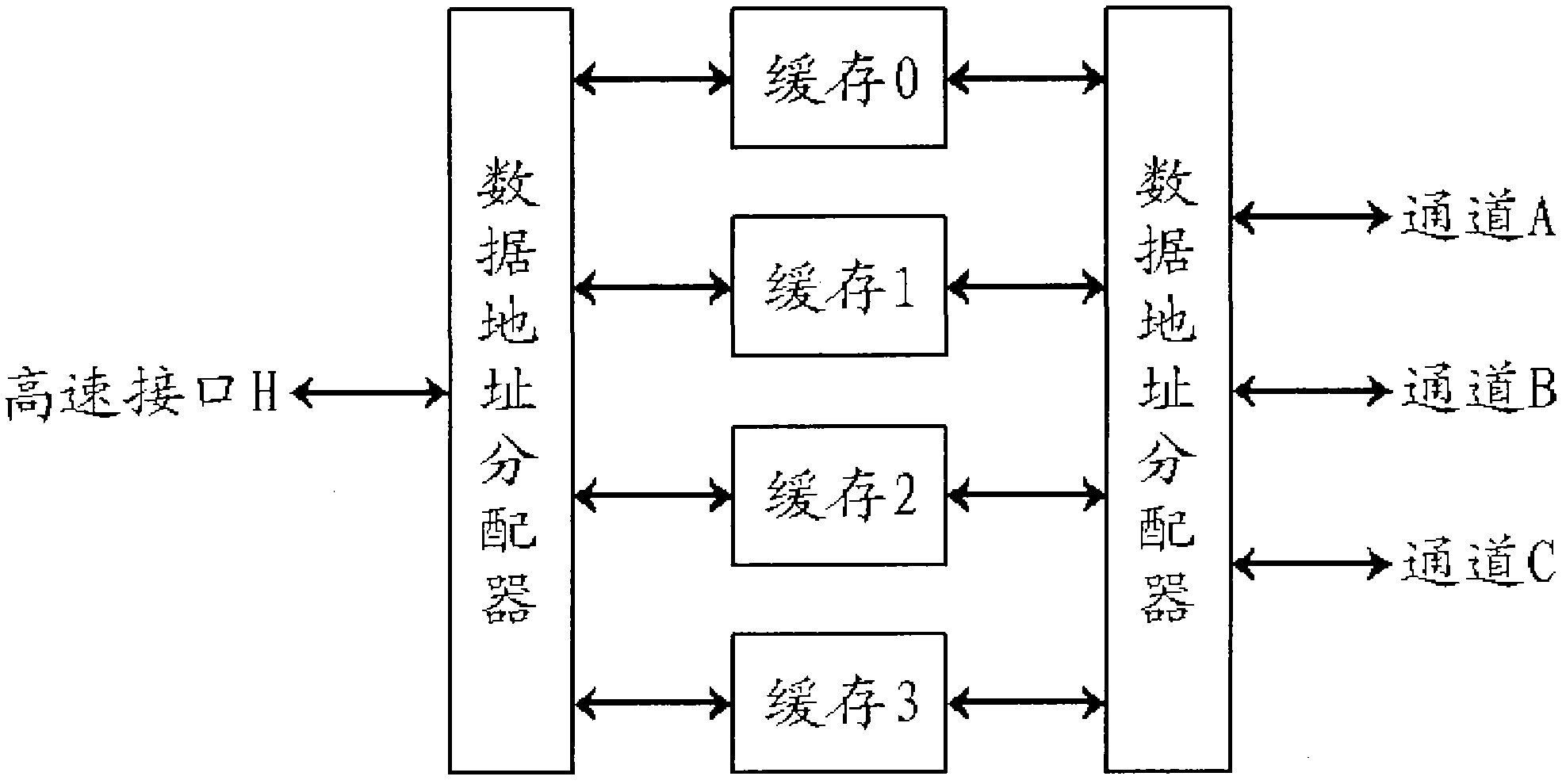

[0037] For example, channel H is a high-speed interface, channels A, B, and C are low-speed interfaces, and there are four caches 0, 1, 2, and 3 in the cache system. When data is transferred from the high-speed interface to the low-speed interface, the input channel is channel H, and the output channels are channels A, B, and C. When a piece of data is written to cache 0 through channel H, channel H is idle at this time, and if only cache 2...

Embodiment 3

[0049] The following describes the data caching process with the channel as the executing entity. The channel in this embodiment may be an input channel or an output channel. See Figure 4, which is a flowchart of an embodiment of the data caching method of the present application, including the following steps:

[0050] Step 401: the channel is started by the CPU;

[0051] Step 402: when the channel is in an idle state, it reads the caching condition from the conditional command sequence under the control of the control command;

[0052] An input channel reads the data writing condition, and an output channel reads the data reading condition.

[0053] Step 403: the channel reads the status of each cache from the status register under the control of the control command;

[0054] Step 404: determine from the status of each cache whether the current caching condition is satisfied; if yes, go to step 405; if not, return to step 403;

[0055...
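Steps 402 to 404 amount to a polling loop run by each idle channel. The following is a minimal sketch assuming a channel that the CPU has already started (step 401). The names `CONDITION_SEQUENCE` and `wait_for_cache` are hypothetical, the status register is modelled as a callable returning a list of cache states, and the `max_polls` bound is an addition for this sketch, since the flowchart simply returns to step 403. Step 405 and later steps are not shown in this excerpt, so the sketch stops once the condition is satisfied.

```python
EMPTY, FULL = "empty", "full"

# Hypothetical stand-in for the conditional command sequence: maps a channel
# role to the cache state that channel must find before it may proceed.
CONDITION_SEQUENCE = {"input": EMPTY, "output": FULL}


def wait_for_cache(role, read_status_register, max_polls=1000):
    """Run steps 402-404 for one idle channel.

    Returns the index of a cache that satisfies the channel's caching
    condition, or None if max_polls is exhausted first.
    """
    wanted = CONDITION_SEQUENCE[role]      # step 402: read the caching condition
    for _ in range(max_polls):
        states = read_status_register()    # step 403: read the status of each cache
        if wanted in states:               # step 404: is the condition satisfied?
            return states.index(wanted)    # yes: the channel may go on to step 405
    return None                            # bound reached without a matching cache
```

An input channel would call `wait_for_cache("input", ...)` and an output channel `wait_for_cache("output", ...)`, each passing whatever function reads the status register in the surrounding system.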
